Full-Body Motion Capture-Based Virtual Reality Multi-Remote Collaboration System

Abstract

1. Introduction

2. Literature Review

2.1. Immersive Collaboration Platform

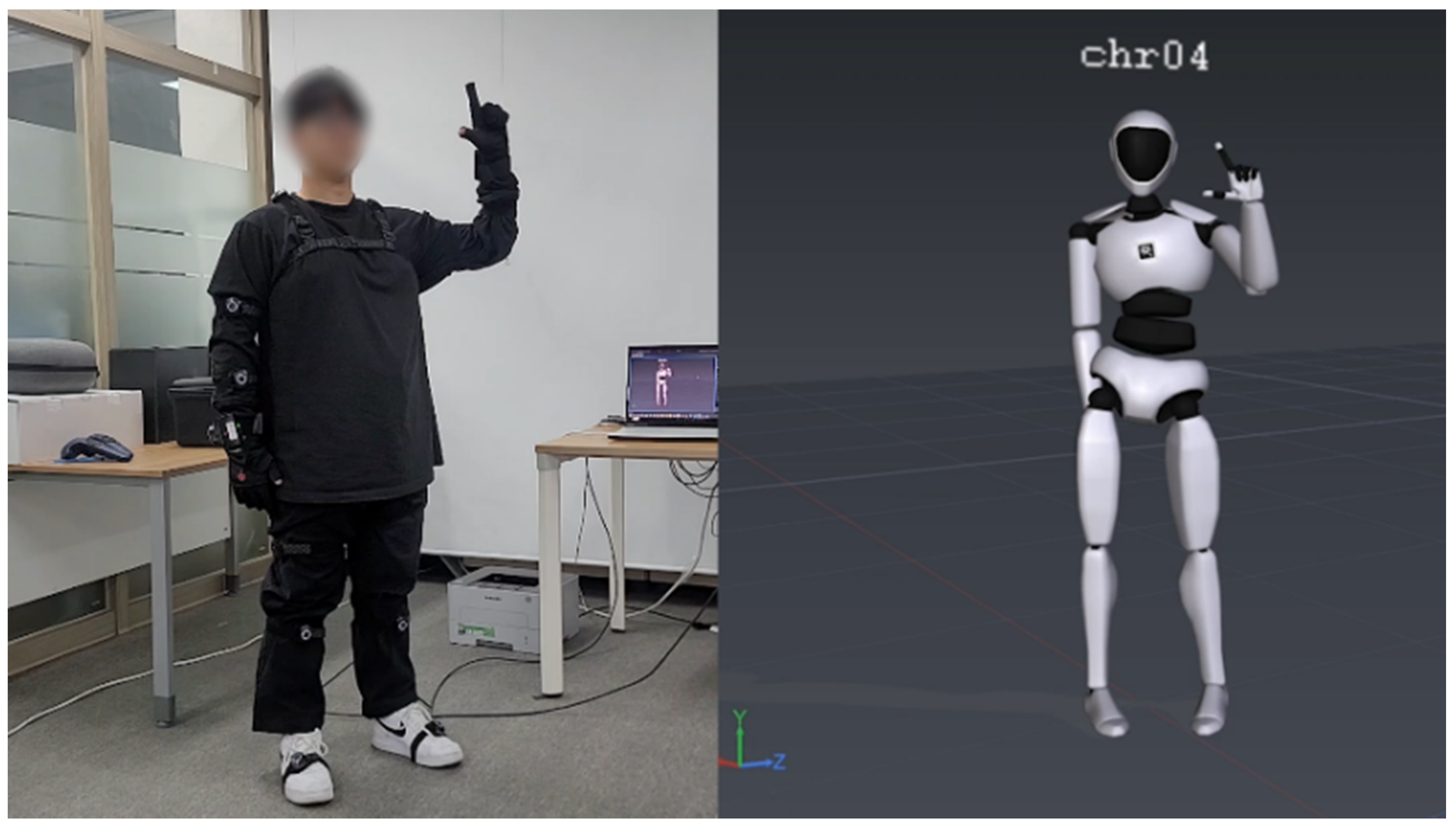

2.2. Motion Capture

3. Proposed System

3.1. Proposed System Development Environment

- OS: Windows 10

- CPU: Intel® Core™ i9-10980HK CPU @ 2.40 GHz 3.10 GHz

- RAM: 32.0 GB

- GPU: NVIDIA GeForce RTX 2080 Super

- Framework: Unity 2019.4.18f, Visual Studio 2019

- Language: C#

- Hardware: Perception Neuron Studio, HTC Vive Pro Eye, Oculus Rift S

- Software: Axis Studio Software, Unity

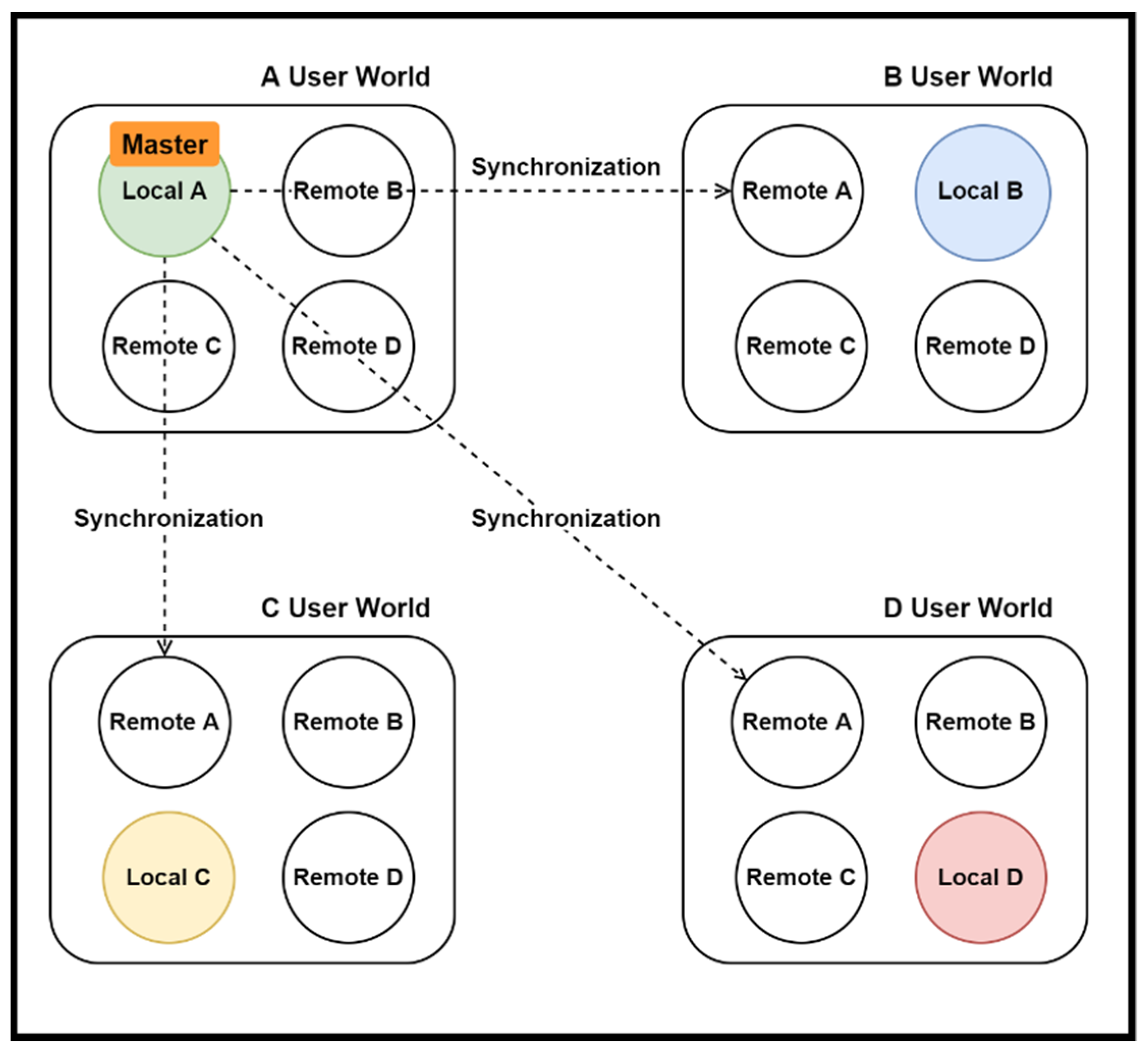

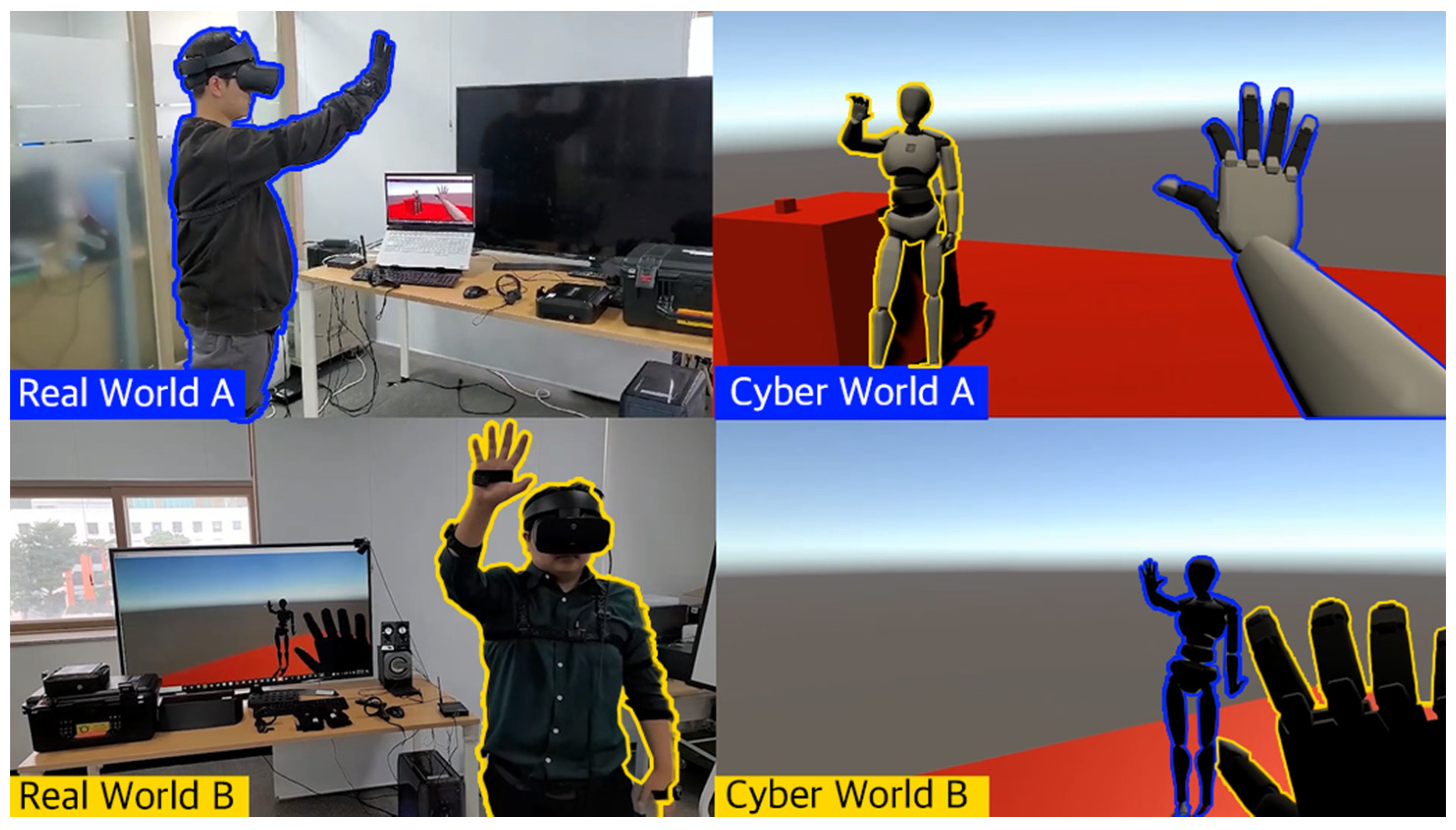

3.2. System Development

4. Experiments and Results

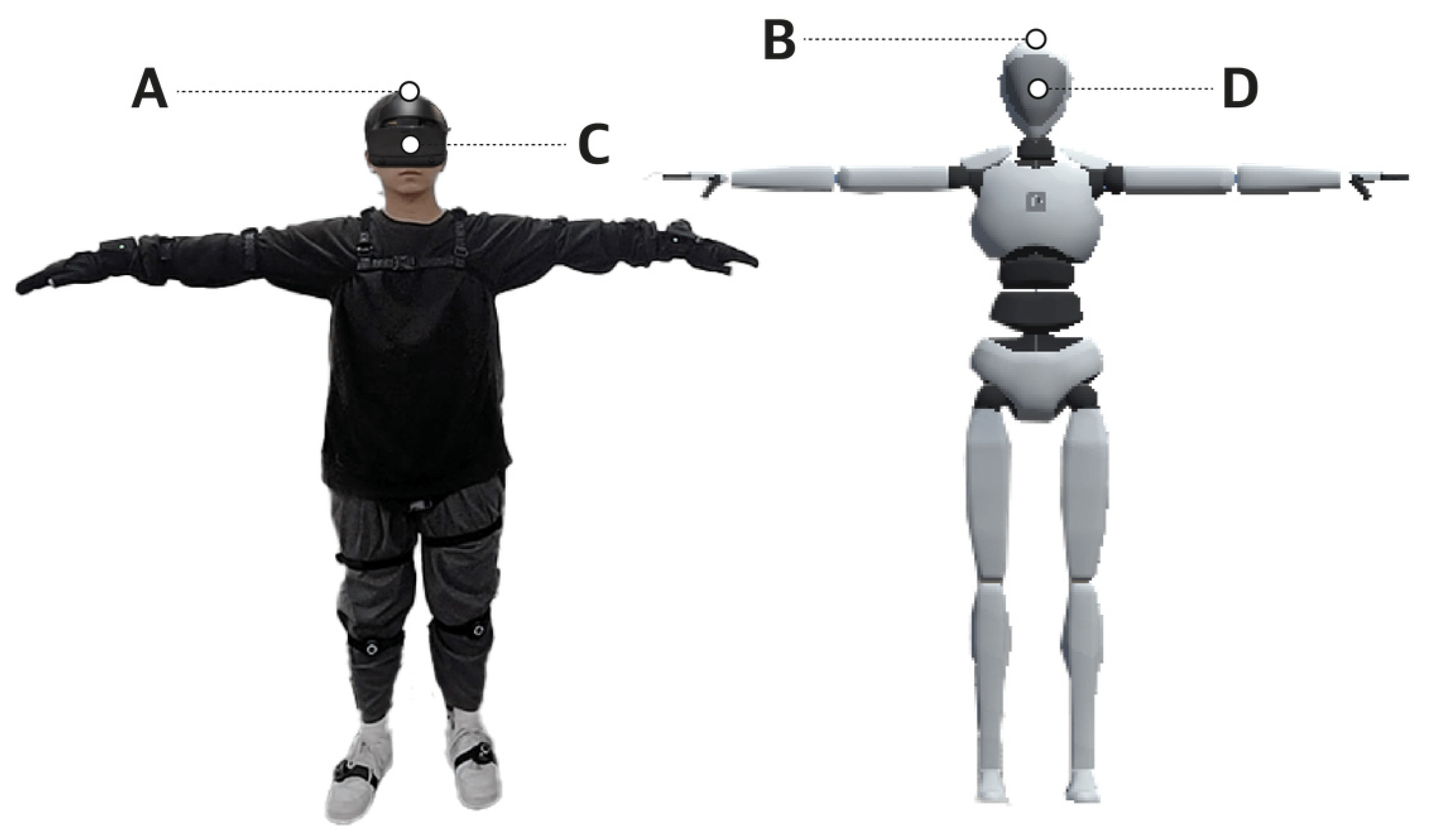

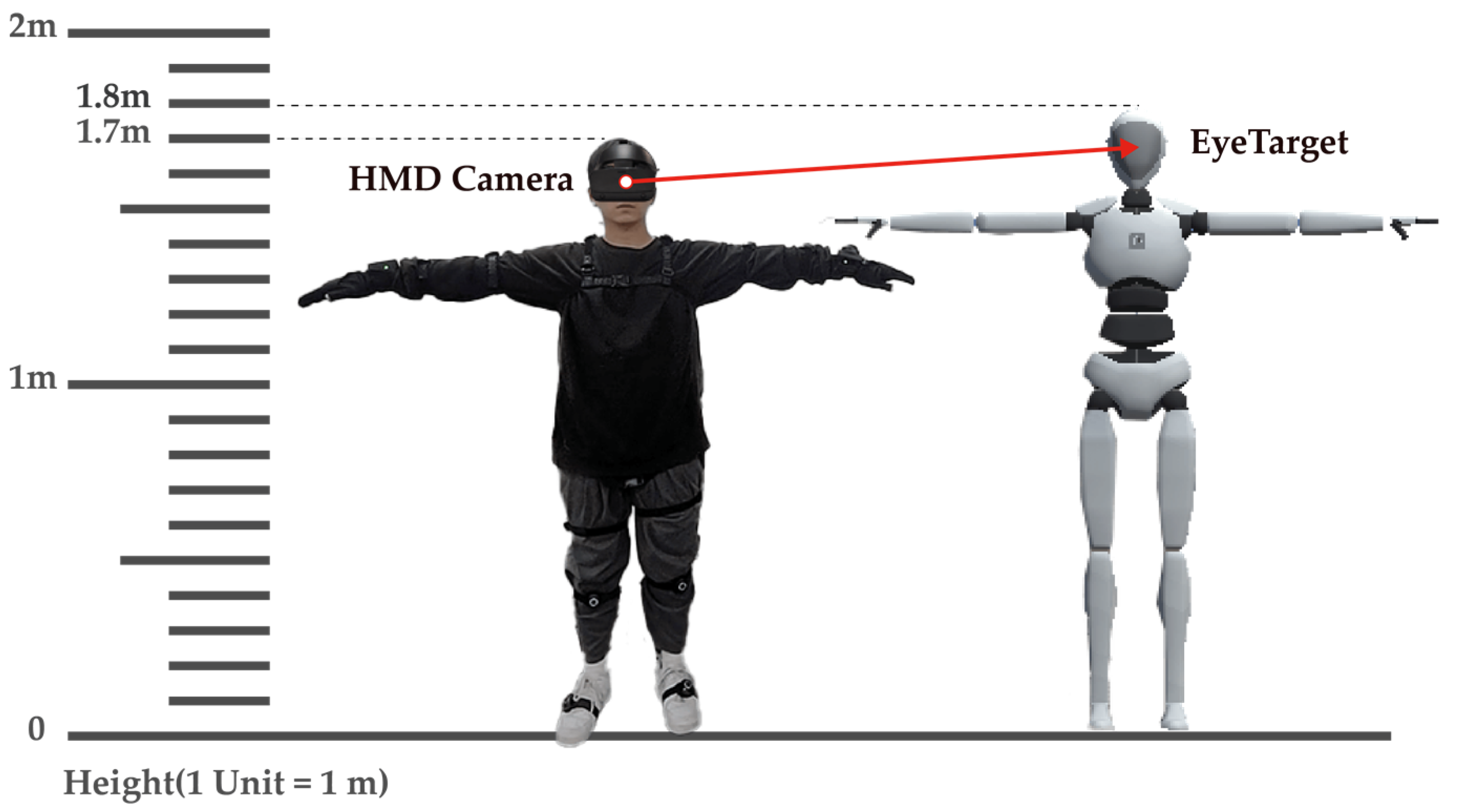

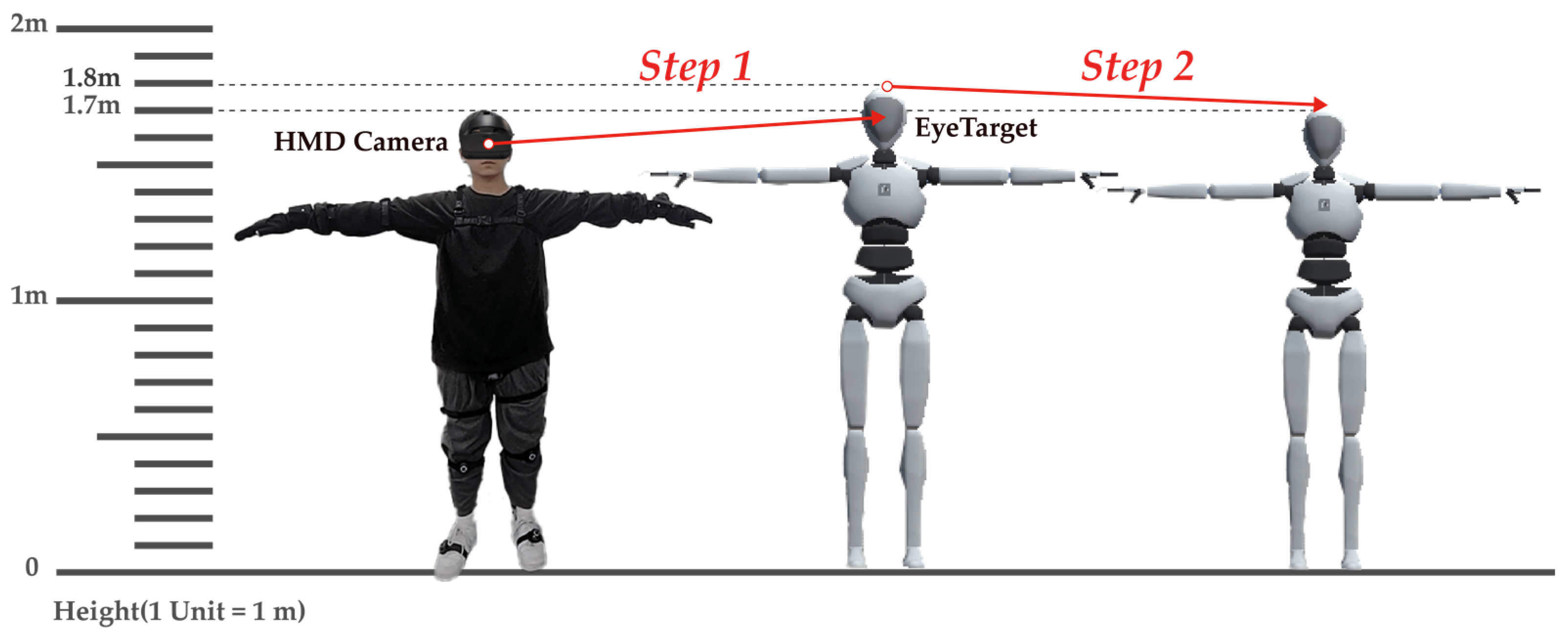

4.1. Character Scale Synchronization

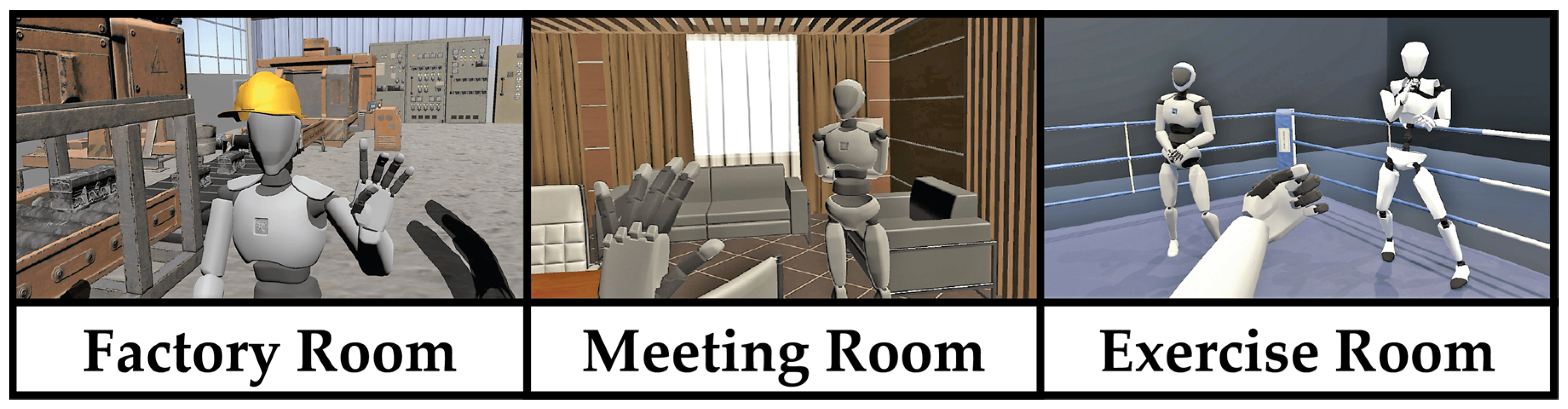

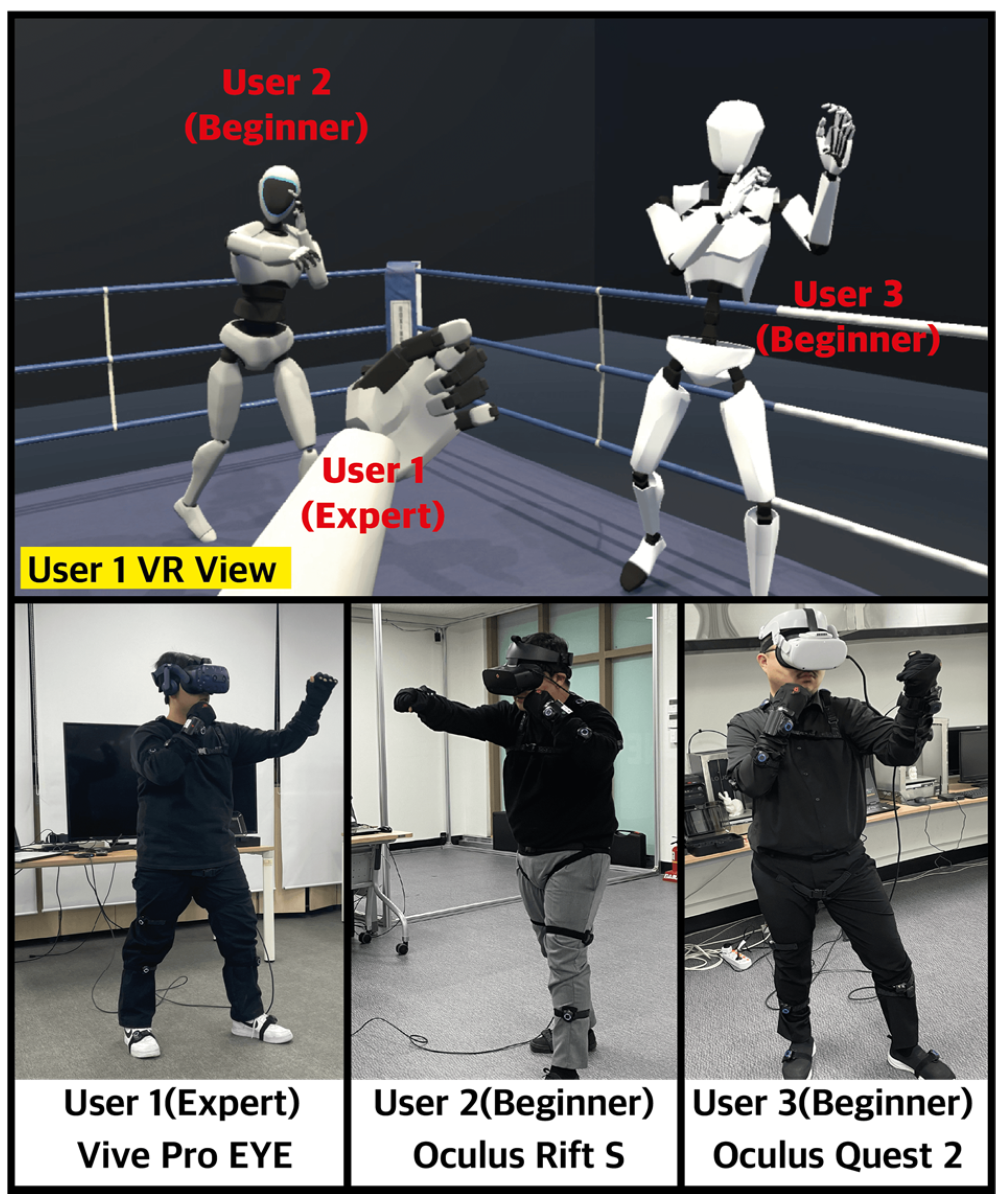

4.2. Multi-Remote Collaboration System

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Byrnes, K.G.; Kiely, P.A.; Dunne, C.P.; McDermott, K.W.; Coffey, J.C. Communication, collaboration and contagion: “Virtualisation” of anatomy during COVID-19. Clin. Anat. 2021, 34, 82–89.

- Lee, D.; Jeong, I.; Lee, J.; Kim, J.; Cho, J. VR content for Non contact job training and experience. In Proceedings of the 2021 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Korea, 19–21 October 2021; IEEE: New York, NY, USA, 2021; pp. 486–489.

- Rahmat, A. An Analysis of Applying Zoom Cloud Meeting towards EFL Learning in Pandemic Era COVID-19. Br. J. Bhs. Dan Sastra Ingg. 2021, 10, 114–134.

- Syaharuddin, S.; Husain, H.; Herianto, H.; Jusmiana, A. The effectiveness of advance organiser learning model assisted by Zoom Meeting application. Cypriot J. Educ. Sci. 2021, 16, 952–966.

- Mahmood, T.; Fulmer, W.; Mungoli, N.; Huang, J.; Lu, A. Improving information sharing and collaborative analysis for remote geospatial visualization using mixed reality. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Beijing, China, 14–18 October 2019; IEEE: New York, NY, USA, 2019; pp. 236–247.

- Transparency Market Research. Available online: https://www.transparencymarketresearch.com/immersive-technologies-market.html (accessed on 10 April 2022).

- Sutherland, I.E. A head-mounted three dimensional display. In Proceedings of the AFIPS Fall Joint Computer Conference, Part I, San Francisco, CA, USA, 9–11 December 1968; pp. 757–764.

- Han, S.; Kim, J. A study on immersion of hand interaction for mobile platform virtual reality contents. Symmetry 2017, 9, 22.

- Gallagher, S. Philosophical conceptions of the self: Implications for cognitive science. Trends Cogn. Sci. 2000, 4, 14–21.

- Botvinick, M.; Cohen, J. Rubber hands ‘feel’ touch that eyes see. Nature 1998, 391, 756.

- Petkova, V.I.; Khoshnevis, M.; Ehrsson, H.H. The perspective matters! Multisensory integration in ego-centric reference frames determines full-body ownership. Front. Psychol. 2011, 2, 35.

- Peck, T.C.; Seinfeld, S.; Aglioti, S.M.; Slater, M. Putting yourself in the skin of a black avatar reduces implicit racial bias. Conscious. Cogn. 2013, 22, 779–787.

- Egeberg, M.C.S.; Lind, S.L.R.; Serubugo, S.; Skantarova, D.; Kraus, M. Extending the human body in virtual reality: Effect of sensory feedback on agency and ownership of virtual wings. In Proceedings of the 2016 Virtual Reality International Conference, New York, NY, USA, 23–25 March 2016; pp. 1–4.

- Truong, P.; Hölttä-Otto, K.; Becerril, P.; Turtiainen, R.; Siltanen, S. Multi-User Virtual Reality for Remote Collaboration in Construction Projects: A Case Study with High-Rise Elevator Machine Room Planning. Electronics 2021, 10, 2806.

- Engage. Available online: https://engagevr.io/ (accessed on 15 April 2022).

- Sync. Available online: https://sync.vive.com/ (accessed on 15 April 2022).

- Mootup. Available online: https://mootup.com/ (accessed on 15 April 2022).

- Jeong, D.C.; Xu, J.J.; Miller, L.C. Inverse kinematics and temporal convolutional networks for sequential pose analysis in vr. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Virtual Reality, Virtual/Online, 14–18 December 2020; IEEE: New York, NY, USA, 2020; pp. 274–281.

- Tea, S.; Panuwatwanich, K.; Ruthankoon, R.; Kaewmoracharoen, M. Multiuser immersive virtual reality application for real-time remote collaboration to enhance design review process in the social distancing era. J. Eng. Des. Technol. 2021, 20, 281–298.

- Young, J.; Langlotz, T.; Cook, M.; Mills, S.; Regenbrecht, H. Immersive telepresence and remote collaboration using mobile and wearable devices. IEEE Trans. Vis. Comput. Graph. 2019, 25, 1908–1918.

- Diaz-Kommonen, L.; Vishwanath, G. Accessible Immersive Platforms for Virtual Exhibitions involving Cultural Heritage. In Proceedings of the Electronic Visualisation and the Arts, Florence, Italy, 14 June 2021; Leonardo Libri Srl: Firenze, Italy, 2021; pp. 125–130.

- Saffo, D.; Di Bartolomeo, S.; Yildirim, C.; Dunne, C. Remote and collaborative virtual reality experiments via social vr platforms. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–15.

- Softspace. Available online: https://soft.space/ (accessed on 15 April 2022).

- Dream. Available online: https://dreamos.com/ (accessed on 15 April 2022).

- Sketchbox Design. Available online: https://design.sketchbox3d.com/ (accessed on 15 April 2022).

- Worldviz. Available online: https://www.worldviz.com/virtual-reality-collaboration-software (accessed on 15 April 2022).

- Frame. Available online: https://framevr.io/ (accessed on 15 April 2022).

- Rumii. Available online: https://www.dogheadsimulations.com/rumii (accessed on 15 April 2022).

- Altspace VR. Available online: https://altvr.com/ (accessed on 15 April 2022).

- Glue. Available online: https://glue.work/ (accessed on 15 April 2022).

- Immersed. Available online: https://immersed.com/ (accessed on 15 April 2022).

- meetingRoom. Available online: https://meetingroom.io/ (accessed on 15 April 2022).

- Foretell Reality. Available online: https://foretellreality.com/ (accessed on 15 April 2022).

- Wonda. Available online: https://www.wondavr.com/ (accessed on 15 April 2022).

- Bigscreen. Available online: https://www.bigscreenvr.com/ (accessed on 15 April 2022).

- vSpatial. Available online: https://www.vspatial.com/ (accessed on 15 April 2022).

- hubs moz://a. Available online: https://hubs.mozilla.com/ (accessed on 15 April 2022).

- Rec Room. Available online: https://recroom.com/ (accessed on 15 April 2022).

- Microsoft Mesh. Available online: https://www.microsoft.com/en-us/mesh (accessed on 15 April 2022).

- MeetinVR. Available online: https://www.meetinvr.com/ (accessed on 15 April 2022).

- MetaQuest. Available online: https://www.oculus.com/workrooms/?locale=ko_KR (accessed on 15 April 2022).

- Arthur. Available online: https://www.arthur.digital/ (accessed on 15 April 2022).

- The Wild. Available online: https://thewild.com/ (accessed on 15 April 2022).

- Spatial. Available online: https://spatial.io/ (accessed on 15 April 2022).

- VRChat. Available online: https://hello.vrchat.com/ (accessed on 15 April 2022).

- Neos. Available online: https://neos.com/ (accessed on 15 April 2022).

- V-Armed. Available online: https://www.v-armed.com/features/ (accessed on 15 April 2022).

- Mendes, D.; Medeiros, D.; Sousa, M.; Ferreira, R.; Raposo, A.; Ferreira, A.; Jorge, J. Mid-air modeling with boolean operations in VR. In Proceedings of the IEEE Symposium 3D User Interfaces, Los Angeles, CA, USA, 18–19 March 2017; pp. 154–157.

- Schwind, V.; Knierim, P.; Tasci, C.; Franczak, P.; Haas, N.; Henze, N. “These are not my hands!” Effect of Gender on the Perception of Avatar Hands in Virtual Reality. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems, Denver, CO, USA, 6–11 May 2017; pp. 1577–1582.

- Sers, R.; Forrester, S.; Moss, E.; Ward, S.; Ma, J.; Zecca, M. Validity of the Perception Neuron inertial motion capture system for upper body motion analysis. Measurement 2020, 149, 107024.

- Wang, T.Y.; Sato, Y.; Otsuki, M.; Kuzuoka, H.; Suzuki, Y. Effect of Full Body Avatar in Augmented Reality Remote Collaboration. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; IEEE: New York, NY, USA, 2019; pp. 1221–1222.

- Gong, S.J. Motion capture and video motion analysis. Sports Sci. 2019, 146, 40–45.

- Agarwala, A.; Hertzmann, A.; Salesin, D.H.; Seitz, S.M. Keyframe-based tracking for rotoscoping and animation. ACM Trans. Graph. 2004, 23, 584–591.

- Perception Neuron. Available online: https://www.neuronmocap.com/ (accessed on 22 April 2022).

- EA. Available online: https://www.ea.com/ko-kr/games/fifa/fifa-22/hypermotion/ (accessed on 22 April 2022).

- Wei, W.; Kurita, K.; Kuang, J.; Gao, A. Real-Time Limb Motion Tracking with a Single IMU Sensor for Physical Therapy Exercises. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Mexico, 1–5 November 2021; pp. 7152–7157.

- Wei, W.; Carter, M.; Sujit, D. Towards on-demand virtual physical therapist: Machine learning-based patient action understanding, assessment and task recommendation. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1824–1835.

- Herrow, M.F.M.; Azraai, N.Z. Digital Preservation of Intangible Cultural heritage of Joget Dance Movement Using Motion Capture Technology. Int. J. Herit. Art Multimed. 2021, 4, 1–13.

- Conti, M.; Gathani, J.; Tricomi, P.P. Virtual Influencers in Online Social Media. IEEE Commun. Mag. 2022, 1–13.

- Tee, K.S.; Low, E.; Saim, H.; Zakaria, W.N.W.; Khialdin, S.B.M.; Isa, H.; Awad, M.I.; Soon, C.F. A study on the ergonomic assessment in the workplace. AIP Conf. Proc. 2017, 1883, 020034.

- Cho, Y.; Park, K.S. Design and development of the multiple Kinect sensor-based exercise pose estimation system. J. Inf. Commun. Converg. Eng. 2017, 21, 558–567.

- Naeemabadi, M.; Dinesen, B.; Andersen, O.K.; Hansen, J. Influence of a marker-based motion capture system on the performance of Microsoft Kinect v2 skeleton algorithm. IEEE Sens. J. 2018, 19, 171–179.

- Podkosova, I.; Vasylevska, K.; Schoenauer, C.; Vonach, E.; Fikar, P.; Bronederk, E.; Kaufmann, H. Immersivedeck: A large-scale wireless VR system for multiple users. In Proceedings of the IEEE 9th Workshop on Software Engineering and Architectures for Realtime Interactive Systems, Greenville, SC, USA, 13–23 March 2016; pp. 1–7.

- Malleson, C.; Kosek, M.; Klaudiny, M.; Huerta, I.; Bazin, J.C.; Sorkine-Hornung, A.; Mine, M.; Mitchell, K. Rapid one-shot acquisition of dynamic VR avatars. In Proceedings of the IEEE Virtual Reality, Los Angeles, CA, USA, 18–22 March 2017; pp. 131–140.

- Kishore, S.; Navarro, X.; Dominguez, E.; de la Pena, N.; Slater, M. Beaming into the news: A system for and case study of tele-immersive journalism. IEEE Comput. Graph. Appl. 2018, 38, 89–101.

- Unity. Available online: https://unity.com/kr (accessed on 24 April 2022).

- Kučera, E.; Haffner, O.; Leskovský, R. Multimedia Application for Object-oriented Programming Education Developed by Unity Engine. In Proceedings of the 2020 Cybernetics & Informatics (K & I), Velke Karlovice, Czech Republic, 29 January–1 February 2020; IEEE: New York, NY, USA, 2020; pp. 1–8.

- Kim, H.S.; Hong, N.; Kim, M.; Yoon, S.G.; Yu, H.W.; Kong, H.J.; Kim, S.J.; Chai, Y.J.; Choi, H.J.; Choi, J.Y.; et al. Application of a perception neuron® system in simulation-based surgical training. J. Clin. Med. 2019, 8, 124.

- Gleicher, M. Retargetting motion to new characters. In Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques, Orlando, FL, USA, 19–24 July 1998; ACM: New York, NY, USA, 1998; pp. 33–42.

Immersive Collaboration Platforms

| Number | System | Cross-Platform: VR | Cross-Platform: AR | Cross-Platform: PC | Cross-Platform: Mobile | Avatar Type: Head | Avatar Type: Arm | Avatar Type: Hand | Avatar Type: Torso | Avatar Type: Full-Body (IK) | Avatar Type: Full-Body (Motion Capture) | Custom Avatars | Maximum Users per Room |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | Softspace [23] | O | O | O | 12 | ||||||||

| 2 | Dream [24] | O | O | O | 6 | ||||||||

| 3 | Sketchbox [25] | O | O | O | O | 16 | |||||||

| 4 | VIZIBLE [26] | O | O | O | O | 6 | |||||||

| 5 | FrameVR [27] | O | O | O | O | O | O | 100 | |||||

| 6 | Rumii [28] | O | O | O | O | O | O | O | 40 | ||||

| 7 | AltspaceVR [29] | O | O | O | O | O | O | 50 | |||||

| 8 | Glue [30] | O | O | O | O | O | O | O | 10 | ||||

| 9 | Immersed [31] | O | O | O | O | O | O | 12 | |||||

| 10 | meetingRoom [32] | O | O | O | O | O | O | O | 12 | ||||

| 11 | Foretell Reality [33] | O | O | O | O | O | O | 50 | |||||

| 12 | WondaVR [34] | O | O | O | O | O | O | O | 50 | ||||

| 13 | BigScreen [35] | O | O | O | O | O | 12 | ||||||

| 14 | vSpatial [36] | O | O | O | O | O | 16 | ||||||

| 15 | MozillaHubs [37] | O | O | O | O | O | O | O | 25 | ||||

| 16 | RecRoom [38] | O | O | O | O | O | O | O | 40 | ||||

| 17 | Mesh [39] | O | O | O | O | O | O | O | O | 8 | |||

| 18 | MeetinVR [40] | O | O | O | O | O | O | O | 32 | ||||

| 19 | Horizon Workrooms [41] | O | O | O | O | O | O | 16 | |||||

| 20 | Arthur [42] | O | O | O | O | O | O | O | 70 | ||||

| 21 | The Wild [43] | O | O | O | O | O | O | O | O | 8 | |||

| 22 | Spatial [44] | O | O | O | O | O | O | O | O | O | 50 | ||

| 23 | VR Chat [45] | O | O | O | O | 40 | |||||||

| 24 | Neos VR [46] | O | O | O | O | 20 | |||||||

| 25 | ENGAGE [15] | O | O | O | O | O | 70 | ||||||

| 26 | Vive sync [16] | O | O | O | O | O | 30 | ||||||

| 27 | MOOTUP [17] | O | O | O | O | O | O | 50 | |||||

| 28 | V-Armed [47] | O | O | 10 | |||||||||

| Category | Optical Marker-Based Method | Markerless Measurement Method | Inertial Measurement Unit |
|---|---|---|---|
| Companies | OptiTrack, Motion Analysis, VICON, ART | Microsoft (Kinect) | Xsens, Noitom, Nansense, Rokoko |
| Capture method | Optical camera data | Kinect camera data | Inertial sensor (IMU) data |
| Cost | Very high | Low | High |
| Operating environment | Indoor studio | Indoor | Indoor and outdoor |

| Subject | Real-World Height (cm) | Virtual Character Average Height (cm) | Difference (cm) |
|---|---|---|---|
| Subject 1 | 163 | 162.85 | 0.15 |
| Subject 2 | 163 | 162.12 | 0.88 |
| Subject 3 | 164.3 | 164.07 | 0.23 |
| Subject 4 | 164 | 163.11 | 0.89 |
| Subject 5 | 167.5 | 167.36 | 0.14 |
| Subject 6 | 173 | 172.87 | 0.13 |
| Subject 7 | 173.5 | 173.09 | 0.41 |
| Subject 8 | 180 | 179.11 | 0.89 |
| Mean | | | 0.465 |
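The mean reported in the character-scale table is the arithmetic mean of the eight per-subject height differences. The short Python sketch below recomputes it from the tabulated heights as a consistency check. Treating each difference as an absolute value and reading the heights as centimetres are assumptions of this sketch, and the helper names `height_differences` and `mean` are hypothetical, not part of the described system.

```python
# Heights copied from the character-scale table (assumed to be in cm).
real_heights = [163, 163, 164.3, 164, 167.5, 173, 173.5, 180]
virtual_heights = [162.85, 162.12, 164.07, 163.11, 167.36, 172.87, 173.09, 179.11]


def height_differences(real, virtual):
    """Hypothetical helper: per-subject absolute difference, rounded to 2 decimals."""
    return [round(abs(r - v), 2) for r, v in zip(real, virtual)]


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


diffs = height_differences(real_heights, virtual_heights)
print(diffs)                  # [0.15, 0.88, 0.23, 0.89, 0.14, 0.13, 0.41, 0.89]
print(round(mean(diffs), 3))  # 0.465
```

Rounding each difference to two decimals mirrors the precision of the table, so the recomputed values match the Difference column entry for entry and their mean reproduces the reported 0.465.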

| | PC 1 | PC 2 | PC 3 |
|---|---|---|---|
| CPU | Intel® Core™ i9-10980HK CPU @ 2.40 GHz 3.10 GHz | Intel® Core™ i7-10870H CPU @ 2.20 GHz 2.21 GHz | Intel® Core™ i7-10870H CPU @ 2.20 GHz 2.21 GHz |
| RAM | 32.0 GB | 16.0 GB | 16.0 GB |
| GPU | NVIDIA GeForce RTX 2080 Super | NVIDIA GeForce RTX 3070 | NVIDIA GeForce RTX 3070 |
| VR Device | HTC Vive Pro Eye | Oculus Rift S | Oculus Quest 2 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ha, E.; Byeon, G.; Yu, S. Full-Body Motion Capture-Based Virtual Reality Multi-Remote Collaboration System. Appl. Sci. 2022, 12, 5862. https://doi.org/10.3390/app12125862

Ha E, Byeon G, Yu S. Full-Body Motion Capture-Based Virtual Reality Multi-Remote Collaboration System. Applied Sciences. 2022; 12(12):5862. https://doi.org/10.3390/app12125862

Chicago/Turabian Style: Ha, Eunchong, Gongkyu Byeon, and Sunjin Yu. 2022. "Full-Body Motion Capture-Based Virtual Reality Multi-Remote Collaboration System" Applied Sciences 12, no. 12: 5862. https://doi.org/10.3390/app12125862