Consistent Regularized Non-Negative Tucker Decomposition for Three-Dimensional Tensor Data Representation

Abstract

1. Introduction

2. Preliminaries

3. Model and Algorithm

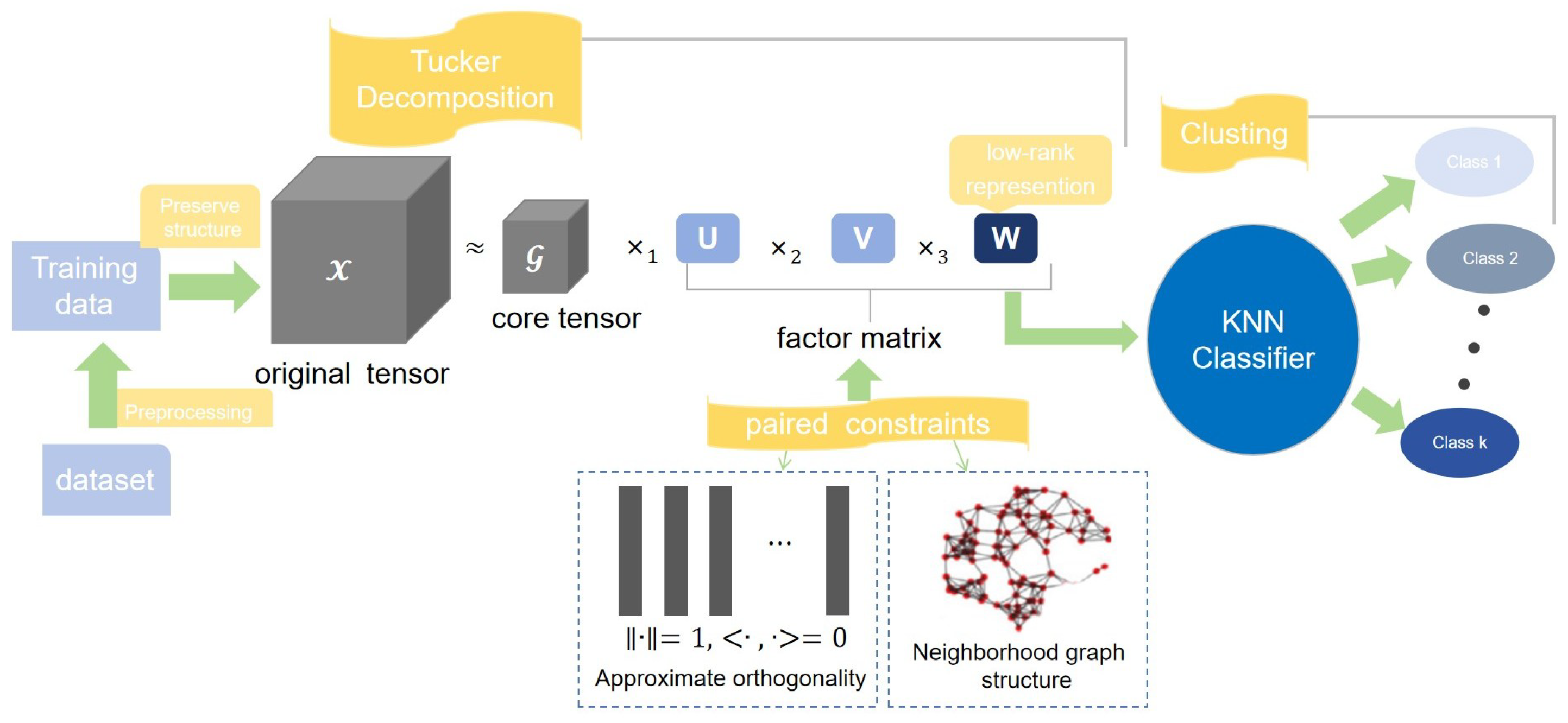

3.1. Motivations and Model

- Step one: The unit-norm constraints on the columns of are not mandatory, and removing them directly is also feasible. We choose to handle this item rather than ignore it, for a more comprehensive treatment. It can be implemented through to attain the unit-norm constraint, where r is the number of columns in matrix .

- Step two: The orthogonality constraint is where denotes the i-th column of the matrix . This constraint, which enforces independence between columns, is of utmost importance. Considering the non-negativity constraints, we set for any r and j. From the previous text, we know that holds, where , is an all-one vector, and is an identity matrix.
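The two steps above can be illustrated with a minimal NumPy sketch. It assumes that the soft orthogonality term takes the trace form tr(A(11ᵀ − I)Aᵀ), built from the all-one vector and the identity matrix mentioned in Step two, which equals the sum of inner products between distinct columns of A. The names `A`, `normalize_columns`, `soft_orthogonality_penalty`, and `off_diagonal_inner_products` are illustrative and are not taken from the paper.

```python
import numpy as np


def normalize_columns(A, eps=1e-12):
    """Scale every column of A to unit Euclidean norm (Step one)."""
    norms = np.linalg.norm(A, axis=0, keepdims=True)
    return A / np.maximum(norms, eps)


def soft_orthogonality_penalty(A):
    """Return tr(A (11^T - I) A^T), an assumed form of the Step-two term."""
    r = A.shape[1]
    Q = np.ones((r, r)) - np.eye(r)  # all-one matrix minus identity
    return float(np.trace(A @ Q @ A.T))


def off_diagonal_inner_products(A):
    """Sum of a_i^T a_j over all ordered pairs i != j, computed directly."""
    G = A.T @ A  # Gram matrix of the columns
    return float(G.sum() - np.trace(G))


rng = np.random.default_rng(0)
A = normalize_columns(rng.random((6, 3)))  # non-negative, unit-norm columns
B = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.0, 0.0]])                 # columns with disjoint supports
```

For non-negative columns the penalty is zero exactly when the columns have disjoint supports, which is why a term of this kind can act as a relaxed orthogonality constraint.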

3.2. Computational Algorithm

Algorithm 1: The MPCNTD method

3.3. Discussion on Convergence

3.4. Computational Complexity

4. Experiments

4.1. Datasets Description

4.2. Evaluation Measures

4.3. Parameter Sensitivity

- Simulation of incomplete data. As an unsupervised algorithm, MPCNTD is directly affected by the proportion of data sampled. In this part, we examine the performance of the model under incomplete data conditions, which is crucial for practical applications. Extracting more samples does not necessarily improve the algorithm's behavior, so repeated sampling to simulate incomplete data helps reveal its overall performance. The sampling ratio for each dataset is 10%, 20%, 30%, 40%, 50%, and 60%, and each result is reported as the average of 10 replicates. Figure 2 plots the clustering performance of the algorithm against the percentage of sampled data. ACC, NMI, and PUR are represented by solid blue, red, and green lines, respectively. The corresponding dashed lines display the average level for the datasets.

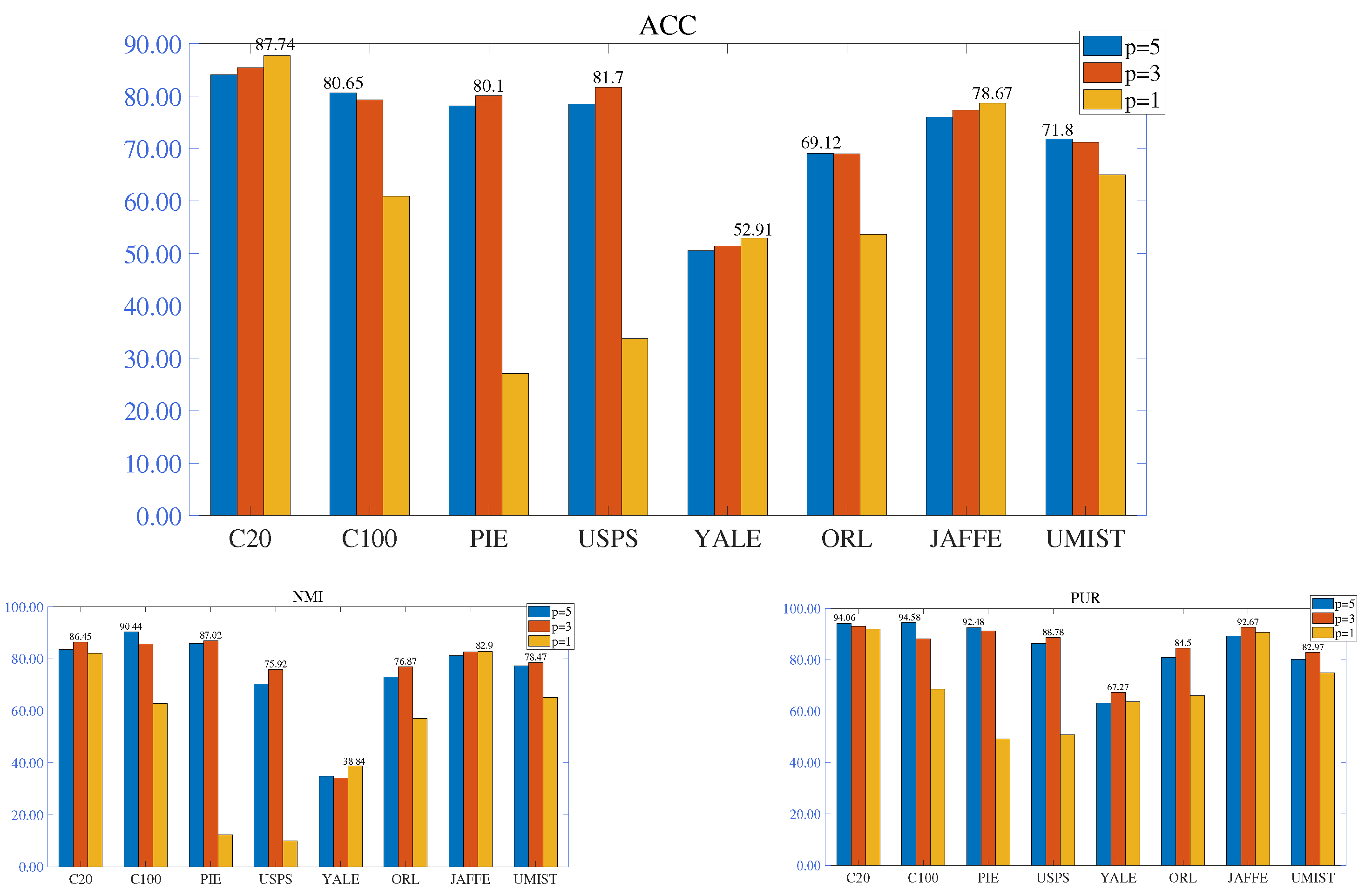
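The sampling protocol described above (six sampling ratios, each averaged over 10 replicates) can be sketched as follows. This is a minimal illustration that assumes uniform random sampling without replacement; the paper does not state the sampling scheme, and `sample_subset`, `average_over_replicates`, and the placeholder score function are hypothetical names introduced here.

```python
import numpy as np


def sample_subset(n_samples, ratio, rng):
    """Draw a random subset of sample indices without replacement."""
    size = max(1, int(round(ratio * n_samples)))
    return rng.choice(n_samples, size=size, replace=False)


def average_over_replicates(score_fn, n_samples, ratios, n_replicates=10, seed=0):
    """Average score_fn(indices) over repeated random subsets for each ratio."""
    rng = np.random.default_rng(seed)
    results = {}
    for ratio in ratios:
        scores = [score_fn(sample_subset(n_samples, ratio, rng))
                  for _ in range(n_replicates)]
        results[ratio] = float(np.mean(scores))
    return results


ratios = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
# Placeholder score: the fraction of 1440 samples kept. In a real experiment
# score_fn would run the clustering pipeline on the subset and return ACC,
# NMI, or PUR.
demo = average_over_replicates(lambda idx: len(idx) / 1440, 1440, ratios)
```

Fixing the seed makes the replicates reproducible, which matters when curves for several measures are compared on the same subsets.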

- Graph construction details. We discuss the parameter p used in graph construction. For the graph in MPCNTD, three settings of the number of KNN nearest neighbours p are compared. The average clustering ACC, NMI, and PUR results are reported in Figure 3, with the three settings shown as blue, red, and orange columns, respectively. The optimal result is indicated at the top of its column. We found that smaller values are not necessarily better. Because of the strong correlation between data points during graph construction, redundant graph information is generated. In practical problems, p should be chosen to suit the data.

- Hyper-parameter protocol for the regularization parameters. Our model has multiple adjustable regularization parameters that balance the fundamental decomposition term against the consistent regularization terms. To reduce confusion in parameter selection, we tie them together so that only two parameters remain to be tuned, and we explore the clustering ability under this setting in depth. A grid search is used to find near-optimal parameters, and the values of the two parameters are selected without prior information. We select the settings that achieve the best clustering performance and provide robustness plots. Among the three evaluation measures, ACC is the decisive factor, supported by NMI and PUR. Specific experimental results are presented in Figure 4.
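The graph construction and parameter selection discussed in the list above can be sketched as follows. The snippet makes several assumptions that the text does not confirm: binary edge weights, a symmetrized KNN graph, the unnormalized Laplacian L = D − W, and a lexicographic rule (ACC first, then NMI, then PUR) as one reading of "ACC is the decisive factor". The names `knn_graph`, `graph_laplacian`, and `select_best` are hypothetical, and the scores in `toy_results` are made-up placeholders, not results from the paper.

```python
import numpy as np


def knn_graph(X, p):
    """Symmetric 0/1 adjacency matrix linking each row of X to its p nearest rows."""
    n = X.shape[0]
    sq = np.sum(X ** 2, axis=1)
    dist = sq[:, None] + sq[None, :] - 2.0 * X @ X.T  # squared Euclidean distances
    np.fill_diagonal(dist, np.inf)                      # exclude self-matches
    neighbours = np.argsort(dist, axis=1)[:, :p]
    W = np.zeros((n, n))
    W[np.repeat(np.arange(n), p), neighbours.ravel()] = 1.0
    return np.maximum(W, W.T)                           # symmetrize


def graph_laplacian(W):
    """Unnormalized Laplacian L = D - W, with D the diagonal degree matrix."""
    return np.diag(W.sum(axis=1)) - W


def select_best(results):
    """Pick the parameter pair with the highest ACC, breaking ties by NMI, then PUR.

    results maps a parameter pair to a tuple (acc, nmi, pur).
    """
    return max(results, key=lambda params: results[params])


# Two well-separated groups of three points each.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
              [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]])
W = knn_graph(X, p=2)
L = graph_laplacian(W)

# Placeholder grid: keys are hypothetical parameter pairs, values are toy scores.
toy_results = {(0.1, 0.1): (0.60, 0.70, 0.65),
               (1.0, 0.1): (0.62, 0.69, 0.66),
               (1.0, 1.0): (0.62, 0.71, 0.64)}
best = select_best(toy_results)
```

With p=2 the toy graph links points only within each group, so the Laplacian is block diagonal. A larger p would start to add cross-group edges, which is one way redundant graph information can arise.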

4.4. Experiments for Effectiveness and Analysis

- (1) On the eight image datasets, MPCNTD attains competitive clustering performance in most situations, which demonstrates that MPCNTD can reveal additional discriminative information in tensor data. For example, the average clustering ACC of MPCNTD on COIL20, COIL100, PIE, USPS, YALE, ORL, JAFFE, and UMIST exceeds that of the second-best method by 3.11, 2.99, 1.01, 5.48, 6.18, 3.71, 2.40, and 2.02 percentage points, respectively.

- (2) The matrix dimension reduction approaches NMF, GNMF, and GDNMF outperform the k-means method in most cases. This is because matrix dimension reduction retains more of the structure of the original data while discovering more accurate data representations. Moreover, the numerical results of the NTD-based models are better than those of the NMF-based methods, which illustrates that tensor-based methods have advantages over matrix-based methods in data representation and dimension reduction.

- (3) The NTD method performs weakly in the clustering problems because the number of iterations is uniformly set to 100 and a unified initial state is used. When the iteration limit is relaxed and appropriate initial values are selected, this method is still feasible. The same explanation applies to ONMF underperforming NMF. This indirectly illustrates that consistent regularization not only accelerates the iteration process but also makes the data representation more accurate.

- (4) MPCNTD obtains competitive clustering performance in all experiments. This is because MPCNTD captures multiple pieces of information from various directions, and its consistent regularization combines graph information with a softly orthogonal structure. Graph learning maintains the consistency of the geometric information of matrices in different spaces, and the balance within the multi-graph regularization maximizes the exploration of the geometric information of tensor data. The normalization and orthogonality rules in approximate orthogonality work together to keep the data as independent as possible, resulting in better clustering performance.
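The ACC, NMI, and PUR measures discussed in this section can be computed along the following lines. This is a sketch under common definitions, which are assumed rather than taken from the paper: ACC uses the best one-to-one matching between clusters and classes (via SciPy's `linear_sum_assignment`), PUR credits each cluster with its majority class, and NMI normalizes mutual information by the arithmetic mean of the two entropies. The function names are illustrative.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def contingency(y_true, y_pred):
    """Count matrix C[i, j] = number of samples with true class i and cluster j."""
    _, t = np.unique(y_true, return_inverse=True)
    _, c = np.unique(y_pred, return_inverse=True)
    C = np.zeros((t.max() + 1, c.max() + 1), dtype=np.int64)
    np.add.at(C, (t, c), 1)
    return C


def clustering_accuracy(y_true, y_pred):
    """ACC: fraction of samples matched under the best cluster-to-class assignment."""
    C = contingency(y_true, y_pred)
    rows, cols = linear_sum_assignment(C, maximize=True)
    return C[rows, cols].sum() / C.sum()


def purity(y_true, y_pred):
    """PUR: each cluster is credited with its most frequent true class."""
    C = contingency(y_true, y_pred)
    return C.max(axis=0).sum() / C.sum()


def nmi(y_true, y_pred):
    """NMI: mutual information over the mean of the two entropies (assumed form)."""
    P = contingency(y_true, y_pred).astype(float)
    P /= P.sum()
    pt, pc = P.sum(axis=1), P.sum(axis=0)
    nz = P > 0
    mi = np.sum(P[nz] * np.log(P[nz] / np.outer(pt, pc)[nz]))
    ht = -np.sum(pt[pt > 0] * np.log(pt[pt > 0]))
    hc = -np.sum(pc[pc > 0] * np.log(pc[pc > 0]))
    return mi / ((ht + hc) / 2) if ht + hc > 0 else 1.0


y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2]
y_perm = [2, 2, 2, 0, 0, 0, 1, 1, 1]  # same partition, relabelled clusters
y_off = [1, 1, 1, 2, 2, 0, 0, 0, 0]   # one sample placed in the wrong cluster
```

All three measures are invariant to how the clusters are labelled, so `y_perm` scores the same as a perfect clustering.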

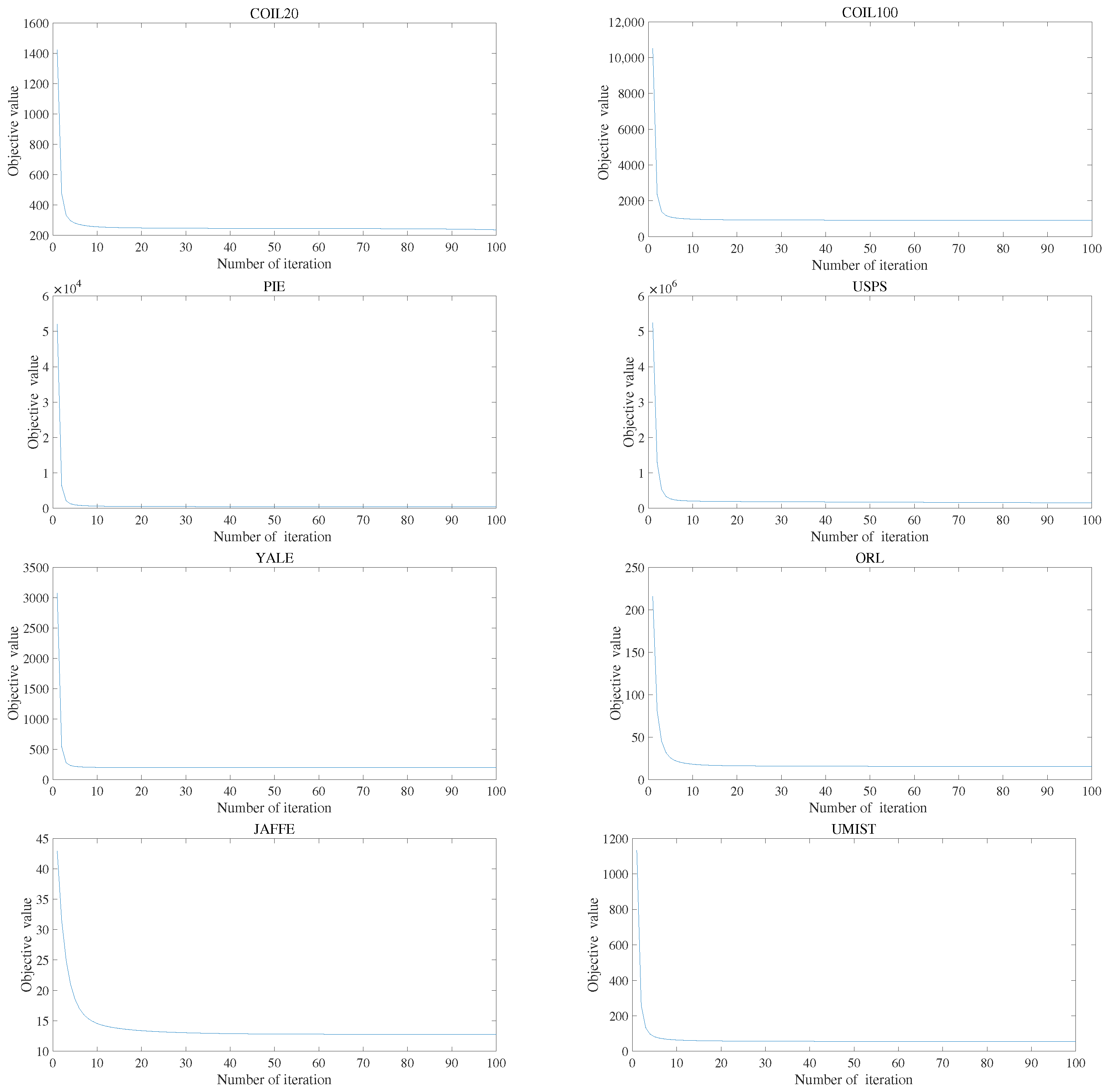

4.5. Convergence Study and Running Times

5. Conclusions and Expectation

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| No. | Datasets | Samples | Dimensions | Classes | Type | Tensor Size |
|---|---|---|---|---|---|---|
| 1 | COIL20 | 1440 | 1024 | 20 | Object | |
| 2 | COIL100 | 7200 | 1024 | 100 | Object | |
| 3 | PIE | 2856 | 1024 | 68 | Face | |
| 4 | USPS | 9298 | 256 | 10 | Handwritten | |
| 5 | YALE | 165 | 1024 | 15 | Face | |
| 6 | ORL | 400 | 1024 | 40 | Face | |
| 7 | JAFFE | 213 | 4096 | 10 | Face | |
| 8 | UMIST | 575 | 1024 | 20 | Face | |

| Datasets | K-Means | NMF | ONMF | GNMF | GDNMF | NTD | GNTD | MPCNTD |
|---|---|---|---|---|---|---|---|---|
| ACC (%) | | | | | | | | |
| COIL20 | 53.47 ± 4.05 | 55.37 ± 3.90 | 49.18 ± 1.91 | 69.11 ± 6.82 | 70.05 ± 4.75 | 44.24 ± 2.19 | 69.17 ± 6.60 | 73.16 ± 4.76 |
| COIL100 | 43.91 ± 2.08 | 45.19 ± 1.60 | 37.12 ± 0.93 | 55.69 ± 2.66 | 55.75 ± 2.81 | 21.71 ± 0.31 | 55.98 ± 1.88 | 58.97 ± 1.85 |
| PIE | 23.84 ± 1.18 | 41.11 ± 2.68 | 21.69 ± 0.48 | 68.17 ± 3.58 | 68.43 ± 4.09 | 53.73 ± 1.42 | 70.31 ± 2.35 | 71.32 ± 2.84 |
| USPS | 64.46 ± 3.38 | 38.55 ± 1.80 | 36.26 ± 3.53 | 68.77 ± 10.74 | 69.22 ± 10.35 | 27.88 ± 1.40 | 72.34 ± 7.21 | 77.82 ± 12.98 |
| YALE | 35.58 ± 2.89 | 37.21 ± 1.62 | 35.88 ± 1.80 | 38.48 ± 2.08 | 38.48 ± 2.08 | 36.55 ± 2.08 | 41.03 ± 2.32 | 47.21 ± 2.53 |
| ORL | 49.87 ± 2.71 | 48.62 ± 2.70 | 37.00 ± 0.89 | 51.08 ± 2.08 | 52.17 ± 1.86 | 19.62 ± 0.84 | 51.05 ± 1.53 | 55.88 ± 2.02 |
| JAFFE | 48.92 ± 4.31 | 45.68 ± 2.83 | 37.79 ± 2.74 | 50.23 ± 4.64 | 58.08 ± 4.65 | 22.58 ± 1.20 | 59.15 ± 3.60 | 61.55 ± 4.37 |
| UMIST | 40.28 ± 2.18 | 38.12 ± 3.00 | 34.37 ± 1.48 | 60.00 ± 5.54 | 60.45 ± 4.82 | 27.57 ± 1.17 | 60.14 ± 4.93 | 62.47 ± 4.35 |
| NMI (%) | | | | | | | | |
| COIL20 | 69.61 ± 1.56 | 69.65 ± 2.33 | 61.87 ± 2.01 | 84.82 ± 3.50 | 84.92 ± 2.22 | 55.13 ± 0.91 | 85.58 ± 2.81 | 86.95 ± 1.68 |
| COIL100 | 72.33 ± 0.81 | 72.41 ± 0.34 | 62.48 ± 0.51 | 79.09 ± 1.47 | 79.31 ± 1.33 | 47.04 ± 0.11 | 80.30 ± 0.73 | 80.31 ± 0.65 |
| PIE | 53.57 ± 0.69 | 70.30 ± 1.49 | 46.13 ± 0.39 | 85.91 ± 1.33 | 85.88 ± 1.34 | 79.45 ± 0.52 | 85.39 ± 1.10 | 86.60 ± 0.87 |
| USPS | 60.35 ± 1.48 | 27.09 ± 1.72 | 23.64 ± 3.86 | 78.69 ± 4.33 | 78.61 ± 4.35 | 16.31 ± 0.69 | 78.82 ± 3.07 | 80.83 ± 4.93 |
| YALE | 40.67 ± 3.95 | 42.06 ± 2.24 | 39.64 ± 1.30 | 44.15 ± 1.79 | 44.13 ± 1.72 | 43.82 ± 2.07 | 47.26 ± 1.61 | 53.51 ± 1.50 |
| ORL | 69.37 ± 1.40 | 69.11 ± 2.04 | 58.36 ± 0.78 | 70.26 ± 0.92 | 70.17 ± 1.11 | 41.66 ± 0.92 | 70.25 ± 0.68 | 72.98 ± 0.83 |
| JAFFE | 58.52 ± 2.28 | 56.47 ± 2.46 | 48.44 ± 1.38 | 76.53 ± 2.53 | 76.70 ± 2.29 | 38.94 ± 0.93 | 76.62 ± 2.02 | 77.22 ± 1.81 |
| UMIST | 52.41 ± 3.76 | 45.40 ± 2.09 | 35.60 ± 2.02 | 57.18 ± 3.47 | 62.30 ± 2.37 | 13.25 ± 1.41 | 61.08 ± 1.93 | 64.79 ± 2.39 |
| PUR (%) | | | | | | | | |
| COIL20 | 66.42 ± 2.14 | 65.56 ± 2.40 | 60.18 ± 2.36 | 85.09 ± 3.70 | 85.66 ± 2.83 | 50.85 ± 1.06 | 88.24 ± 2.69 | 87.10 ± 1.61 |
| COIL100 | 57.87 ± 1.14 | 56.84 ± 1.12 | 45.74 ± 0.50 | 72.70 ± 1.29 | 72.48 ± 1.65 | 25.12 ± 0.38 | 70.72 ± 1.35 | 70.02 ± 1.38 |
| PIE | 29.61 ± 0.89 | 49.52 ± 1.39 | 29.56 ± 0.69 | 84.70 ± 1.29 | 84.85 ± 1.25 | 62.70 ± 1.19 | 83.18 ± 1.36 | 84.68 ± 1.26 |
| USPS | 70.79 ± 1.71 | 53.18 ± 2.39 | 56.12 ± 5.24 | 84.94 ± 4.16 | 85.30 ± 3.88 | 31.23 ± 1.11 | 84.84 ± 2.68 | 88.52 ± 4.17 |
| YALE | 48.42 ± 3.66 | 44.61 ± 2.44 | 44.67 ± 1.83 | 45.94 ± 0.94 | 46.00 ± 0.92 | 42.79 ± 2.25 | 48.48 ± 2.41 | 53.33 ± 1.59 |
| ORL | 59.25 ± 2.69 | 56.63 ± 2.80 | 44.65 ± 1.12 | 58.30 ± 0.79 | 59.25 ± 0.62 | 24.38 ± 1.60 | 58.20 ± 0.91 | 62.60 ± 0.66 |
| JAFFE | 60.00 ± 4.43 | 52.35 ± 4.04 | 41.31 ± 2.25 | 64.55 ± 3.19 | 68.03 ± 1.87 | 27.42 ± 2.72 | 68.69 ± 1.98 | 70.56 ± 2.53 |
| UMIST | 45.51 ± 2.29 | 43.29 ± 2.69 | 40.12 ± 1.98 | 69.13 ± 3.20 | 69.23 ± 3.07 | 32.49 ± 1.37 | 69.86 ± 3.19 | 72.35 ± 2.01 |

| Datasets | K-Means | NMF | ONMF | GNMF | GDNMF | NTD | GNTD | MPCNTD |
|---|---|---|---|---|---|---|---|---|
| COIL20 | 0.9575 | 4.8707 | 17.8615 | 7.7992 | 6.7093 | 8.5009 | 7.6108 | 11.6861 |
| COIL100 | 12.7224 | 55.5005 | 389.9492 | 59.3759 | 60.6811 | 106.4293 | 44.9294 | 87.1066 |
| PIE | 4.3523 | 18.2649 | 77.1014 | 19.7781 | 19.7723 | 84.8791 | 34.1724 | 36.5172 |
| USPS | 3.4253 | 2.5031 | 179.4944 | 10.4931 | 11.8063 | 9.3728 | 34.7790 | 44.8650 |
| YALE | 0.0628 | 1.6680 | 0.5067 | 1.2088 | 0.6632 | 1.5704 | 1.3235 | 1.4317 |
| ORL | 0.2675 | 1.5499 | 3.2105 | 2.0715 | 2.3844 | 2.1336 | 3.4087 | 2.3716 |
| JAFFE | 0.2869 | 2.5672 | 4.2324 | 3.0784 | 2.6312 | 3.6669 | 3.3649 | 3.8293 |
| UMIST | 0.3352 | 1.5462 | 5.4409 | 1.9811 | 2.6882 | 3.3493 | 3.5304 | 3.6853 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, X.; Lu, L. Consistent Regularized Non-Negative Tucker Decomposition for Three-Dimensional Tensor Data Representation. Symmetry 2025, 17, 1969. https://doi.org/10.3390/sym17111969
