Anthropometric Landmarks Extraction and Dimensions Measurement Based on ResNet

Abstract

1. Introduction

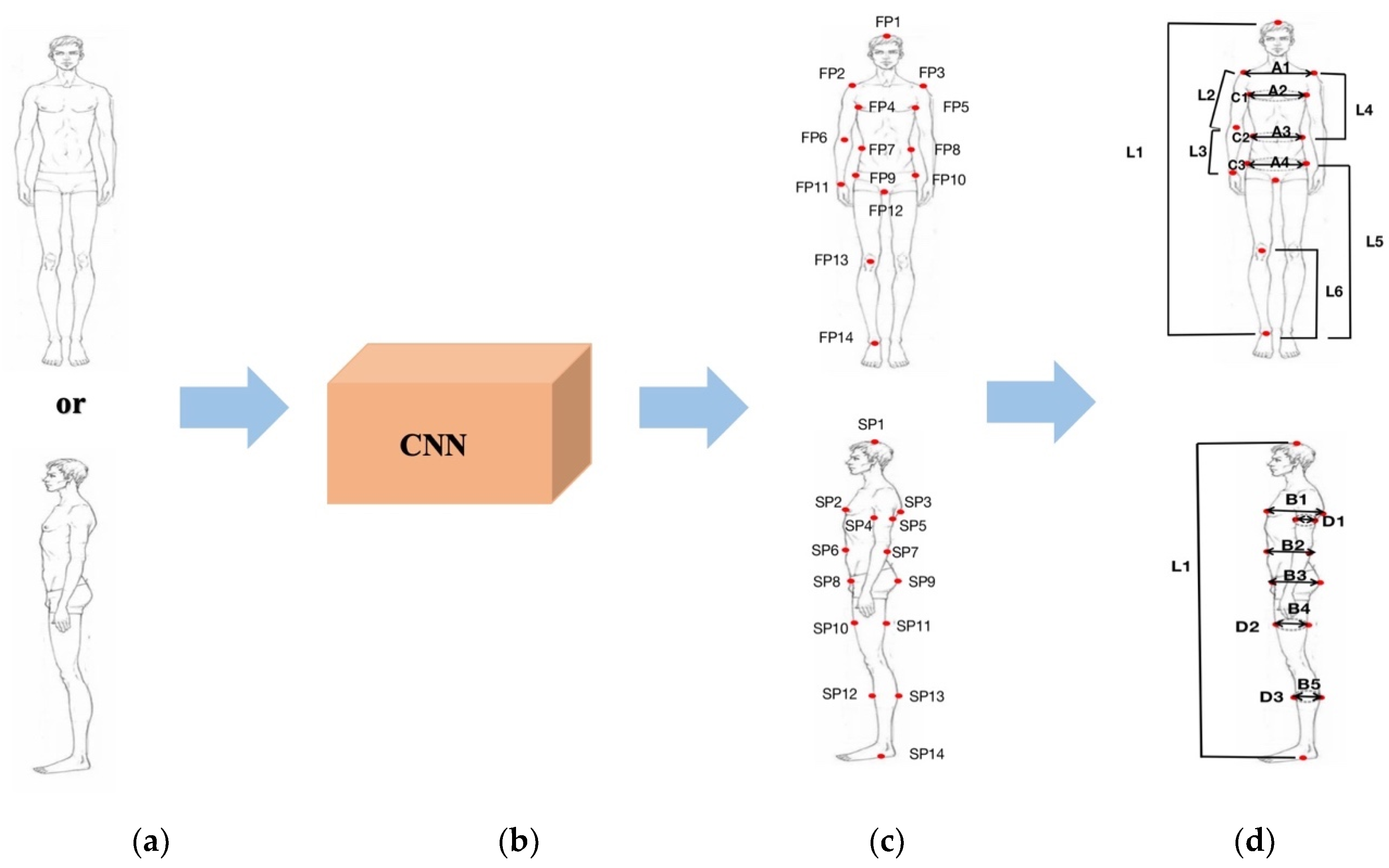

- (1)

- A new way to extract landmarks from 2D images is proposed, that is, extracting landmarks by a deep convolutional neural network.

- (2)

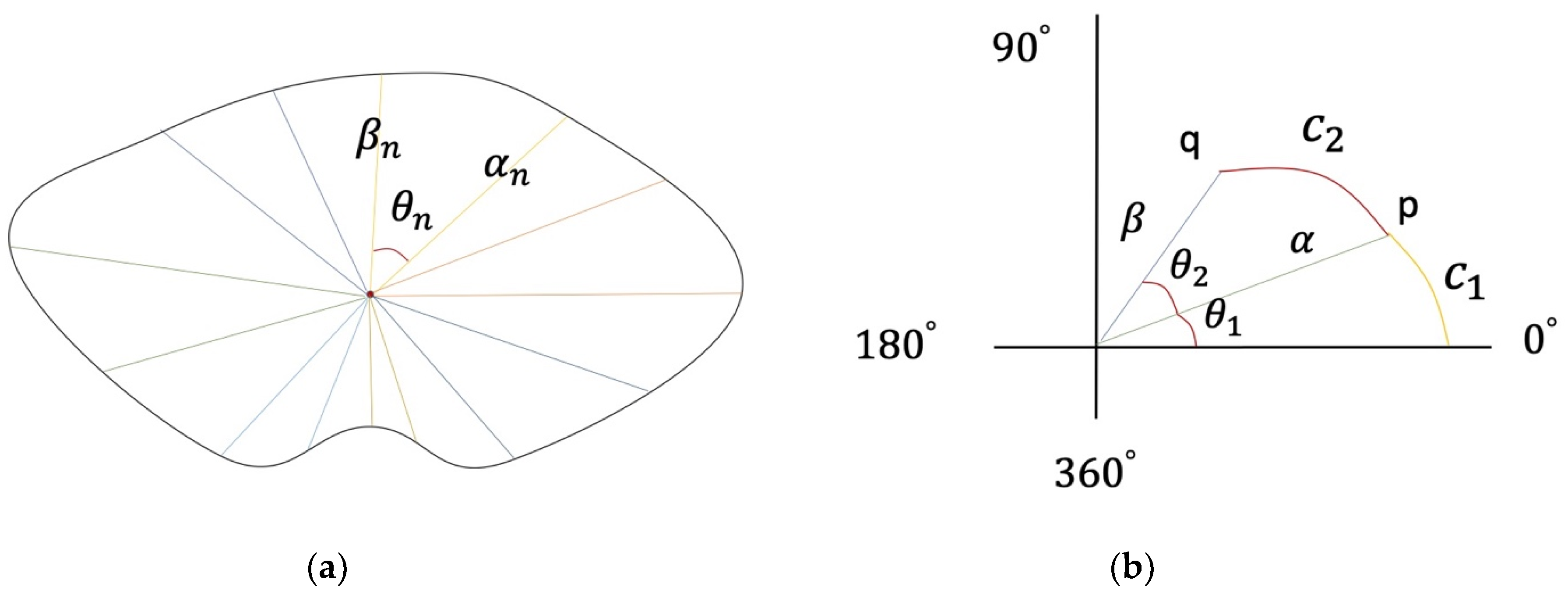

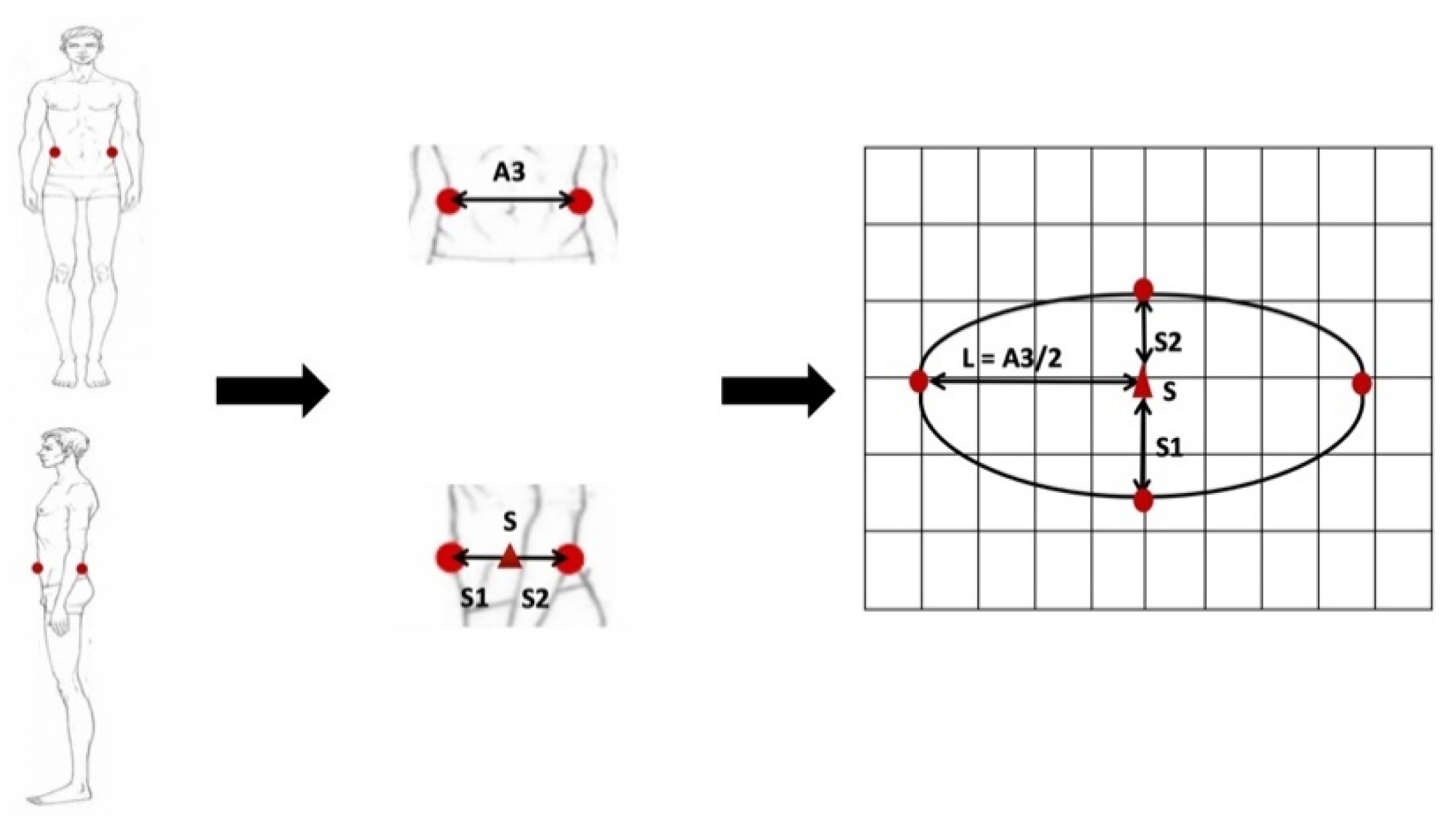

- A multi-ellipse model is proposed, and the position of the axis division point of the ellipse model is determined by the thickness–width ratio of the body parts, which reduces the error of anthropometric dimensions.

- (3)

- The method is evaluated on real samples and compared with other methods, which shows the accuracy of the multi-ellipse model when the number of images is 2.
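To make the idea behind the second contribution concrete, the sketch below estimates a girth from a breadth (front image) and a depth (side image) using a single ellipse and Ramanujan's perimeter approximation. This is a simplification chosen for illustration, not the multi-ellipse model itself: the function names, the single-ellipse assumption, and the sample values are all hypothetical.

```python
import math


def thickness_width_ratio(breadth_mm: float, depth_mm: float) -> float:
    """Ratio of a body part's depth (side view) to its breadth (front view)."""
    return depth_mm / breadth_mm


def single_ellipse_girth(breadth_mm: float, depth_mm: float) -> float:
    """Approximate a girth as the perimeter of ONE ellipse (illustrative only).

    The ellipse axes are the breadth and depth of the body part. The
    perimeter uses Ramanujan's first approximation, which is exact for a
    circle and very accurate for moderately flattened ellipses.
    """
    a = breadth_mm / 2.0  # semi-axis along the width
    b = depth_mm / 2.0    # semi-axis along the thickness
    return math.pi * (3 * (a + b) - math.sqrt((3 * a + b) * (a + 3 * b)))


# Hypothetical waist: 300 mm wide, 200 mm deep.
ratio = thickness_width_ratio(300.0, 200.0)
girth = single_ellipse_girth(300.0, 200.0)
```

A real cross-section is not a single ellipse, which is presumably why the paper splits it into several ellipses and uses the thickness–width ratio to place the division point; the sketch only shows how breadth and depth from two images can yield a girth at all.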

2. Related Work

3. Proposed Method

3.1. Landmarks Extraction Based on ResNet

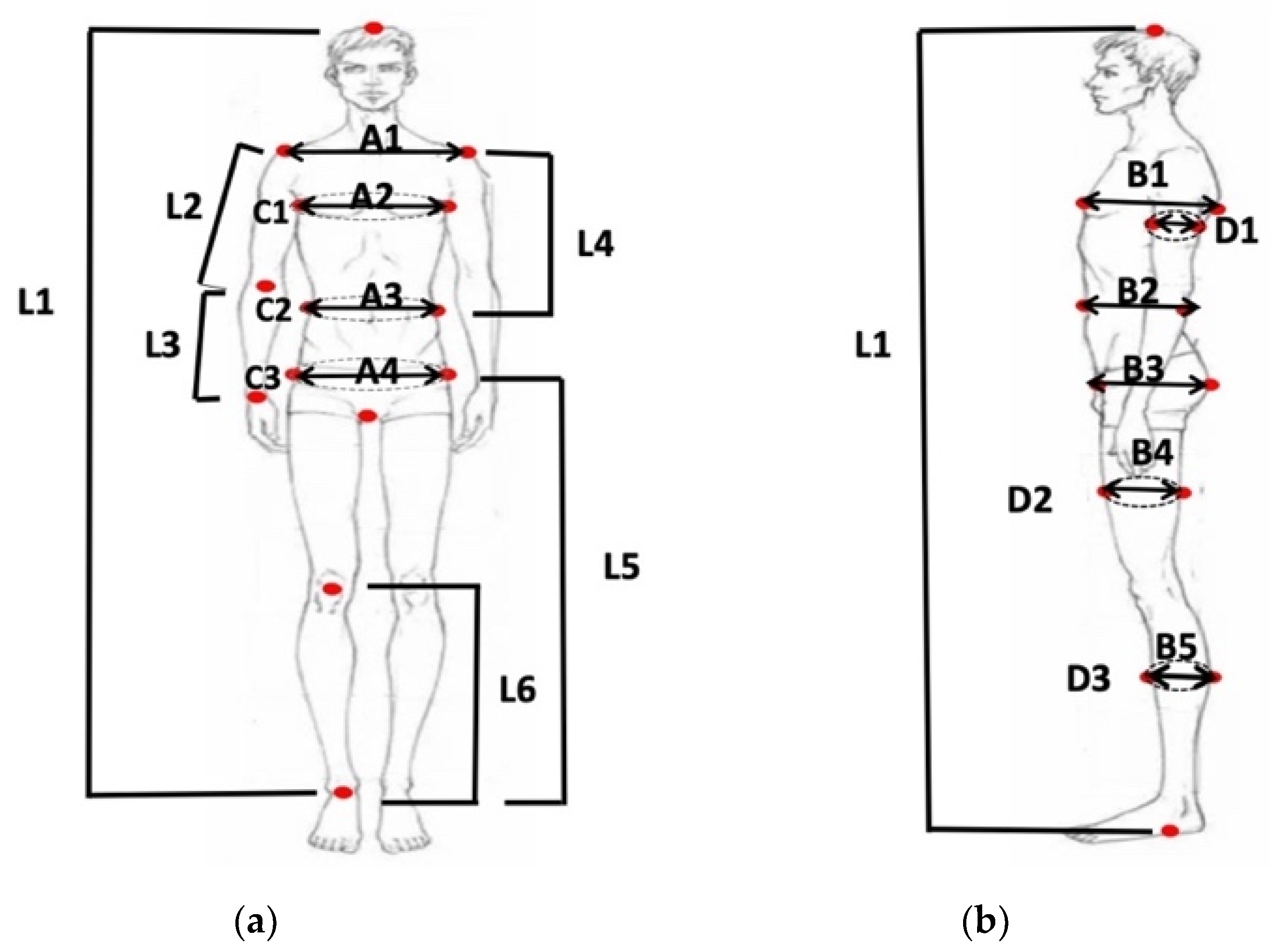

3.2. Anthropometric Dimension Calculation Based on Multi-Ellipse Model

4. Experiment and Result

4.1. Datasets

4.2. Training Details

4.3. Result

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest


| Landmark | Definition |
|---|---|
| FP1, SP1 Vertex | The highest point of the head. |
| FP2 Right Acromion | The most lateral point of the lateral edge of the spine (acromial process) of the right scapula, projected vertically to the surface of the skin. |
| FP3 Left Acromion | The most lateral point of the lateral edge of the spine (acromial process) of the left scapula, projected vertically to the surface of the skin. |
| FP4 Right bust width point | The front fold point of the armpit on right arm scye line determined using a scale placed under arm. |
| FP5 Left bust width point | The front fold point of the armpit on left arm scye line determined using a scale placed under arm. |
| FP6 Anterior elbow point | The point on the anterior view of elbow. |
| FP7 Right lateral waist point | The right intersection of the lateral midlines of human body and the waistline. |
| FP8 Left lateral waist point | The left intersection of the lateral midlines of human body and the waistline. |
| FP9 Right lateral buttock | The right intersection of the coronal plane of human body and the hip line. |
| FP10 Left lateral buttock | The left intersection of the coronal plane of human body and the hip line. |
| FP11 Right wrist point | The most prominent point of the bulge of the head of ulna in right arm. |
| FP12 Perineum point or crotch | The intersection of the sagittal plane and the line connecting the lowest point of the left ischial tuberosity and right ischial tuberosity. |
| SP2 Thelion | The most anterior point of the bust. |
| SP3 Posterior bust depth point | The back fold point of the armpit on arm scye line determined using a scale placed under arm. |
| SP4 Anterior arm depth point | The middle point on the anterior edge of arm. |
| SP5 Posterior arm depth point | The middle point on the posterior edge of arm. |
| SP6 Anterior waist point | The anterior intersection of the sagittal plane of human body and the waistline. |
| SP7 Posterior waist point | The posterior intersection of the sagittal plane of human body and the waistline. |
| SP8 Anterior peak of buttock | The most prominent point on the anterior edge of the ilium. |
| SP9 Peak of buttock | The most prominent point in the buttock. |
| SP10 Anterior thigh depth point | The middle point on the anterior edge of thigh. |
| SP11 Posterior thigh depth point | The middle point on the posterior edge of thigh. |
| SP12 Anterior crus depth point | The middle point on the anterior edge of crus. |
| SP13 Posterior crus depth point | The middle point on the posterior edge of crus. |
| SP14 Foot point | The inferior margin of prominence of the medial malleolus. |

| Dimension | Definition | Dimension | Definition |
|---|---|---|---|
| L1 | Height | B2 | Waist Depth |
| L2 | Upper arm Length | B3 | Hip Depth |
| L3 | Lower arm Length | B4 | Thigh Depth |
| L4 | Torso Height | B5 | Crus Depth |
| L5 | Hip Height | C1 | Chest Girth |
| L6 | Knee Height | C2 | Waist Girth |
| A1 | Shoulder Breadth | C3 | Hip Girth |
| A2 | Chest Breadth | D1 | Upper arm Girth |
| A3 | Waist Breadth | D2 | Mid-Thigh Girth |
| A4 | Hip Breadth | D3 | Calf Girth |
| B1 | Bust Depth | | |

| Number | Gender | Age | Weight (kg) | BMI |
|---|---|---|---|---|
| 41 | female | 20–50 | 45–75 | 17–28 |
| 46 | male | 20–50 | 45–85 | 17–28 |

| Training Iterations | Learning Rate |
|---|---|
| 10,000 | 0.05 |
| 430,000 | 0.02 |
| 730,000 | 0.002 |
| 1,030,000 | 0.001 |
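The schedule table above can be read as a piecewise-constant learning rate. The sketch below encodes it under one assumption that the table does not state explicitly: each count is taken to be the last training iteration at which the paired rate applies. The names `SCHEDULE` and `learning_rate` are hypothetical.

```python
from bisect import bisect_left

# (last iteration at which the rate applies, learning rate), copied from the
# table. Treating each count as an inclusive upper bound is an assumption.
SCHEDULE = [
    (10_000, 0.05),
    (430_000, 0.02),
    (730_000, 0.002),
    (1_030_000, 0.001),
]


def learning_rate(step: int) -> float:
    """Return the learning rate for a 1-based training iteration."""
    bounds = [bound for bound, _ in SCHEDULE]
    if step < 1 or step > bounds[-1]:
        raise ValueError(f"step {step} is outside the schedule")
    # bisect_left finds the first stage whose upper bound is >= step.
    return SCHEDULE[bisect_left(bounds, step)][1]
```

Under this reading, iteration 10,000 still uses 0.05 and iteration 10,001 switches to 0.02.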

| Image Type | Evaluation Method | Body Part | Landmark | Accuracy |
|---|---|---|---|---|
| Front image | PCK-0.5 | Shoulder * | FP2, FP3 | 97.80% |
| Front image | PCK-0.5 | Chest * | FP4, FP5 | 98.00% |
| Front image | PCK-0.5 | Waist * | FP7, FP8 | 97.40% |
| Front image | PCK-0.5 | Hip * | FP9, FP10 | 97.60% |
| Front image | PCK-0.5 | Head | FP1 | 98.20% |
| Front image | PCK-0.5 | Elbow | FP6 | 94.60% |
| Front image | PCK-0.5 | Wrist | FP11 | 93.20% |
| Front image | PCK-0.5 | Crotch | FP12 | 98.00% |
| Front image | PCK-0.5 | Knee | FP13 | 96.80% |
| Front image | PCK-0.5 | Ankle | FP14 | 97.20% |
| Side image | PCK-0.5 | Arm * | SP4, SP5 | 93.20% |
| Side image | PCK-0.5 | Chest * | SP2, SP3 | 97.40% |
| Side image | PCK-0.5 | Waist * | SP6, SP7 | 97.40% |
| Side image | PCK-0.5 | Hip * | SP8, SP9 | 97.20% |
| Side image | PCK-0.5 | Thigh * | SP10, SP11 | 93.20% |
| Side image | PCK-0.5 | Calf * | SP12, SP13 | 93.60% |
| Side image | PCK-0.5 | Head | SP1 | 98.60% |
| Side image | PCK-0.5 | Ankle | SP14 | 97.20% |
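For readers unfamiliar with the metric, PCK (percentage of correct keypoints) counts a predicted landmark as correct when it lies within a fraction of a reference length from the annotated position. The sketch below shows that computation for one landmark with a threshold factor of 0.5. Which reference length the table uses (head size, torso size, or something else) is not stated here, so `ref_lengths` is an assumption, as are the function name and the sample coordinates.

```python
import math


def pck(pred, truth, ref_lengths, alpha=0.5):
    """Fraction of images in which one landmark is localized correctly.

    pred, truth  -- per-image (x, y) positions of the same landmark, in pixels
    ref_lengths  -- per-image normalizing length in pixels (assumed input)
    alpha        -- threshold factor; 0.5 corresponds to PCK-0.5
    """
    if not (len(pred) == len(truth) == len(ref_lengths)) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(
        math.dist(p, t) <= alpha * r
        for p, t, r in zip(pred, truth, ref_lengths)
    )
    return hits / len(pred)


# Hypothetical data: three images, reference length 20 px in each.
score = pck(
    pred=[(10, 10), (50, 50), (0, 0)],
    truth=[(13, 14), (50, 90), (0, 0)],
    ref_lengths=[20, 20, 20],
)
```

In this made-up example the errors are 5, 40, and 0 pixels against a 10-pixel threshold, so two of the three predictions count as correct.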

| Dimension | Intra-Observer Reliability (Trainer 1) | Intra-Observer Reliability (Trainer 2) | Intra-Observer Reliability (Trainer 3) | Inter-Observer Reliability (R) |
|---|---|---|---|---|
| L1 | 0.9 | 0.93 | 0.93 | 0.86 |
| L2 | 0.87 | 0.89 | 0.88 | 0.83 |
| L3 | 0.95 | 0.97 | 0.94 | 0.86 |
| L4 | 0.85 | 0.93 | 0.91 | 0.82 |
| L5 | 0.94 | 0.92 | 0.89 | 0.8 |
| L6 | 0.97 | 0.98 | 0.95 | 0.92 |
| A1 | 0.82 | 0.85 | 0.83 | 0.78 |
| A2 | 0.88 | 0.92 | 0.93 | 0.82 |
| A3 | 0.92 | 0.94 | 0.92 | 0.85 |
| A4 | 0.83 | 0.85 | 0.81 | 0.84 |
| B1 | 0.94 | 0.93 | 0.98 | 0.95 |
| B2 | 0.87 | 0.9 | 0.91 | 0.91 |
| B3 | 0.87 | 0.85 | 0.82 | 0.8 |
| B4 | 0.86 | 0.89 | 0.88 | 0.85 |
| B5 | 0.97 | 0.95 | 0.97 | 0.89 |
| C1 | 0.9 | 0.93 | 0.91 | 0.84 |
| C2 | 0.86 | 0.84 | 0.87 | 0.83 |
| C3 | 0.97 | 0.96 | 0.96 | 0.92 |
| D1 | 0.93 | 0.95 | 0.89 | 0.87 |
| D2 | 0.86 | 0.89 | 0.88 | 0.85 |
| D3 | 0.93 | 0.95 | 0.94 | 0.91 |

| Type | Code | Body Part | MAD (mm) | MAE (mm) |
|---|---|---|---|---|
| height | L1 | height | 3.5 | 6 |
| height | L2 | arm | 4.3 | 6 |
| height | L3 | forearm | 2.7 | 6 |
| height | L4 | back | 3.9 | 5 |
| height | L5 | pants | 5.8 | 7 |
| height | L6 | knee | 2 | 3 |
| width | A1 | shoulder | 5.1 | 8 |
| width | A2 | chest | 3.4 | 8 |
| width | A3 | waist | 3.7 | 7 |
| width | A4 | hip | 4.2 | 7 |
| depth | B1 | chest | 2.2 | 4 |
| depth | B2 | waist | 2.5 | 4 |
| depth | B3 | hip | 4.3 | 8 |
| depth | B4 | thigh | 2.6 | 5 |
| depth | B5 | calf | 2.2 | 5 |
| girth | D1 | arm | 7.3 | 9 |
| girth | D2 | thigh | 7.8 | 9 |
| girth | D3 | calf | 7.5 | 9 |
| girth | C1 | chest | 6.4 | 15 |
| girth | C2 | waist | 6.9 | 11 |
| girth | C3 | hip | 6.7 | 12 |
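The table above does not expand its acronyms. The sketch below assumes the reading that is common in the anthropometric literature, where MAD is the mean absolute difference between the estimated and the manually measured values and MAE is a maximum allowable error that the MAD should stay below. Both the interpretation and the sample measurements are assumptions, and the function names are hypothetical.

```python
def mean_absolute_difference(estimated_mm, manual_mm):
    """Mean of |estimated - manual| over all subjects, in millimetres."""
    if len(estimated_mm) != len(manual_mm) or not estimated_mm:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(e - m) for e, m in zip(estimated_mm, manual_mm))
    return total / len(estimated_mm)


def within_allowable_error(mad_mm, allowable_mm):
    """True when the mean absolute difference does not exceed the threshold."""
    return mad_mm <= allowable_mm


# Hypothetical heights (mm) for three subjects: image-based vs. manual.
mad = mean_absolute_difference(
    estimated_mm=[1702.0, 1655.0, 1810.0],
    manual_mm=[1700.0, 1660.0, 1806.0],
)
ok = within_allowable_error(mad, allowable_mm=6)
```

Under this reading, every row of the table passes the check, since each MAD value is smaller than the MAE value beside it.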

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Liu, B.; Dong, Y.; Pang, S.; Tao, X. Anthropometric Landmarks Extraction and Dimensions Measurement Based on ResNet. Symmetry 2020, 12, 1997. https://doi.org/10.3390/sym12121997
