Abstract

Linear programming and polyhedral representation conversion methods have been widely applied to game theory to compute equilibria. Here, we introduce new applications of these methods to two game-theoretic scenarios in which players aim to secure sufficiently large payoffs rather than maximum payoffs. The first scenario concerns truncation selection, a variant of the replicator equation in evolutionary game theory where players with fitnesses above a threshold survive and reproduce while the remainder are culled. We use linear programming to find the sets of equilibria of this dynamical system and show how they change as the threshold varies. The second scenario considers opponents who are not fully rational but display partial malice: they require a minimum guaranteed payoff before acting to minimize their opponent’s payoff. For such cases, we show how generalized maximin procedures can be computed with linear programming to yield improved defensive strategies against such players beyond the classical maximin approach. For both scenarios, we provide detailed computational procedures and illustrate the results with numerical examples.

1. Introduction

Game theory plays an important role in mathematically modeling the behavior and decision-making of players whether, or not they are human (). This feature allows the theory to have applications in fields such as social sciences, economics, biology, and computer science. In particular, many studies have focused on two-player games, which are an essential foundation of many models of interactions between individuals. As the size of players’ strategy sets increases, however, analyzing (say, finding the Nash equilibria) the multi-variable polynomial payoff expressions becomes computationally complex (; ). In such situations, support enumeration is a standard approach to finding Nash equilibria (; ; ). Linear programming can be used as an auxiliary tool for support enumeration and has been well applied to game theory (; ; ), often to compute minimax/maximin strategies, Nash equilibria, correlated equilibria, and related solution concepts. In this study, we convert problems into linear programs (or, more fundamentally, polyhedral conversion problems) and apply them to two additional scenarios: truncation selection and opponents that show a degree of irrationality or malice. We focus on these two scenarios because they both require consideration of worst-case scenarios, and they can be naturally expressed in linear algebraic language and converted to linear programs.

Before addressing irrational or malicious opponents, we begin with a discussion of rationality. In classical game theory, particularly for finite normal-form games, stable outcomes of games are predicted by Nash equilibria () (and its variants), which rely on at least two assumptions: that payoff matrices accurately and quantitatively reflect what matters to each player, and that all players aim to maximize their payoffs. In practice, however, players cannot always assume that their opponents are rational and must instead make decisions based on payoffs for all possible scenarios. For example, players may encounter opponents that are satisficers (), irrational (), unfamiliar with the game (), prone to making errors (), or malicious (). Another typical reason for the assumption of rationality to fail is that, in practice, payoff matrices usually only reflect the material payoffs of players, failing to capture players’ complicated underlying emotions. In the case of maliciousness, players may attempt to decrease their opponent’s payoff on purpose rather than maximize their own payoff. Such behavior can be explained by social norms that promote costly punishment (), a component of relational utility in a player’s payoffs (), or the game being a sub-game of a larger game where opponents’ payoffs negatively affect one another. Players may also encounter games with incomplete information, such as Bayesian games (), where analyzing an opponent’s behavior with certainty is impossible. In controlling industrial scenarios, we may only seek satisfactory outcomes rather than optimal ones, especially if the optimization is difficult. In such situations, players may wish to guarantee themselves a minimum payoff ()—i.e., control for worst-case scenarios ().

Risk-averse or security strategies to handle such scenarios have been well studied (; , ; ). A classic example is the maximin strategy, where a player assumes the worst-case scenario will happen and chooses a strategy that maximizes their payoff under that assumption. However, players may seek a balance between securing a lower-bound payoff and exploiting an opponent’s partial rationality. A safe equilibrium formalizes this trade-off by each player assigning probabilities over maximin and Nash equilibrium strategies (). When a player is willing to accept a worst-case payoff below the maximin value, they can respond more flexibly to educated beliefs about opponents’ behaviors. This flexibility may lead to higher payoffs, particularly when combined with methods in behavioral game theory (; ). In this paper, we design a model to capture the behavior of partially malicious players: players who aim to increase their payoffs when they are low but become malicious once they exceed a certain payoff threshold. While a fully malicious player reduces the problem to a classic zero-sum game, our framework generalizes this case to opponents exhibiting partial malice. We demonstrate how a rational player can enhance their payoff from the maximin payoff if the opponent follows such a decision rule, and how such players would compute their ideal strategies. We analyze strategies for both rational players facing partially malicious opponents and malicious players seeking to minimize their opponents’ payoffs under this decision rule.

The population-level dynamics of avoiding worst-case outcomes can be modeled by truncation selection, an evolutionary algorithm in which only players with fitnesses (i.e., average payoffs) above a specified threshold survive and reproduce (; ; , ; ; ; ; ; , ). Thresholds may be fixed or dynamic and can depend on variables such as time, which is relevant for reproduction timing (). Truncation selection emphasizes adequacy: as long as a player’s fitness exceeds the threshold, they propagate at an equal rate. This approach has applications in computer science and mathematical biology, including genetic algorithms () and as an alternative to the replicator equation (). Truncation selection can exhibit markedly different dynamics than the replicator equation (), where population change depends on deviations from the average fitness (). Truncation selection can be classified into two different methods: independent truncation selection, where the threshold is a fixed value; and dependent truncation selection, where a fixed proportion of the population survives, making the threshold contingent upon the population’s fitness distribution (, ). In this paper, we emphasize the role of linear programming and the concept of safe strategies in analyzing independent truncation, since the threshold condition can be naturally expressed by linear program constraints. In reformulating independent truncation selection as linear programs and polyhedral conversion problems, we offer a computationally efficient and analytically transparent framework for studying it.

2. Results

2.1. Games with Malicious Players

We begin with a scenario where the column player is rational and the row player is partially malicious, given a specified risk aversion threshold . The definitions of the matrices can be found in Section 4. Knowing that her opponent is malicious, it would be intuitive for the column player to adopt a maximin strategy as a defensive measure. However, due to the minimum payoff requirement, the row player’s strategy space may be restricted. So, even if the column player exposes herself to risk by playing a strategy not in the set of maximin strategies, the row player might be unable to fully exploit the worst case. Since the column player can threaten the loss of his minimum requirement. This creates the potential for the column player to secure a payoff at least as high as the maximin value through a computation method that leverages the row player’s restricted strategy space.

To begin the analysis, consider the row player’s strategy space as a convex hull of probability vectors. The vertices of this space can be obtained by solving a polyhedral representation conversion problem (see Appendix B for a review):

This polyhedral conversion problem yields the vertices of the restricted strategy space for the malicious player. Let these resulting vertices (strategies) be . By the fundamental idea of linear programming, one of these strategies yields the worst payoff the row player can make the column player accept. Therefore, the column player’s payoff is with denoting her strategy.

Selecting the column player’s optimal strategy in this scenario is equivalent to solving a generalized maximin problem. To see this, define . Then, the following maximin problem is equivalent to maximizing :

Each entry of is the column player’s payoff against a row player’s vertex strategy. By maximizing the minimum value, we obtain a defensive strategy robust against the row player’s restricted strategy set. One can see that this linear program as a generalization of maximin problems, where our opponent’s mixed strategies are limited in the convex hull of columns of the matrix. When the row player is unrestricted in his strategy, reduces to the identity matrix (since all pure strategies are available), and this problem recovers the maximin strategy. Depending on how severely the row player’s strategy space is restricted by his minimum requirement, this optimization may or may not yield a different strategy for the column player, but her payoff will always be at least as high as the classical maximin value.

We end the analysis by giving a numerical example for the game:

where the row player is partially malicious with a risk aversion threshold of (and thus his minimum requirement is approximately ), and the column player is rational. A maximin strategy for the column player is and the corresponding worst-case payoff is . However, since the row player’s strategy set is restricted, the generalized maximin procedure yields an enhanced column strategy and the worst payoff the opponent can enforce is .

Now consider how the row player computes his strategy. When he plays strategy , the possible payoffs available to the column player are . Since the column player is rational, she will choose her best response to these, so his goal is to minimize her best possible payoff while satisfying his own minimum payoff constraint, which can be represented by the following optimization problem:

This is a generalized minimax problem, which extends the standard formulation with additional constraints from the minimum payoff requirement. Solving this program yields a strategy that simultaneously satisfies this constraint and minimizes the column player’s best response payoff. This upper bound is theoretically the same value as the rational player’s enhanced payoff derived earlier, agreeing with the famous minimax theorem (). For the row player in the example game above, a generalized minimax strategy is approximately , and the corresponding best possible payoff for the column player under this strategy is again , which is in agreement with the minimax theorem.

2.2. Independent Truncation Selection

We begin by analyzing results of an agent-based numerical simulation of a Hawk-Dove game, using a similar but simplified setup as (); (); (). Assuming a large number of competitions between players, we use expected payoffs instead of stochastic ones, which are the fitnesses; implications of this modeling choice are discussed in more depth in Appendix C. Payoffs for the two types of players, hawks and doves, are determined by the row payoff matrix taken from ():

with the first strategy representing hawks and the second doves. Figure 1 shows the equilibrium frequency of hawks as a function of . Consider the shape of the distribution of data samples. The upper bounds of the data have two distinct linear boundaries separated by a turning point. To explain this observation, consider the payoff matrix shifted by the payoff threshold: . If a population state satisfies , then both hawks and doves will have payoffs above . This is connected to computing the column maximin value of , which is 15. Thus, for all , there exists no state such that hawks and doves both have fitnesses above . Conversely, for , the fitnesses of both hawks and doves will be greater than for every state .

Figure 1.

Equilibrium frequency of hawks under truncation selection vs. the payoff threshold . Simulations were performed on populations with initial frequencies of hawks uniformly randomly chosen from , and over a set of thresholds from to 15 in increments of 1. Each vertical slice depicts the final frequencies of hawks after 16 rounds of truncation selection for 128 different initial conditions given the x-value threshold. The upper bounds of the outcomes have two distinct linear boundaries with a turning point at .

Although extending the horizontal axis of Figure 1 beyond is unnecessary for this model, it can be necessary when there is significant variance in payoffs, as in the models of (); (); (). Because outliers are possible, and thus the boundary is different. For , culling and extinction are rare, but not impossible for stochastic payoffs. For , primarily populations with states equal to a column maximin strategy (which is exactly in this case) can avoid extinction. Increasing shrinks this range linearly into a single point. The turning point is when , which is at the threshold . We care about the determinant because it would be possible for non-trivial states to exist, making the fitnesses of all players meet the threshold. For , we can explain the linear boundary by computing the range of safe spaces where we expect no extinctions. This is relatively easy for games, because the set of safe spaces is a subset of the probability hyperplane with dimension 2 (a line segment). The comparison to the original result in () is included in Appendix C where we show that the difference between our result and theirs is due to low population sizes and stochastic payoffs with non-negligible variances. As the population size increases, their results converge to ours.

We can obtain the convex hull of vertices by finding the two extreme points of the line segment using the following linear program (note that for higher dimensions, this must be converted to a polyhedral representation conversion problem):

The linear objective function is just a vector in the standard basis of , meaning that we are minimizing an entry in . Doing this for both entries allows us to obtain the convex hull. When , the extreme states tell us that hawks become extinct before (since the first row of is zero). When , doves go extinct before hawks for a similar reason (the second row of is zero). The two slopes in Figure 1 are given by the following two relations:

where the unspecified fitnesses (denoted by and for hawks and doves, respectively) are non-negative. Solving these equations yields the slopes of the two upper boundaries: and .

By the above arguments, we conclude that no culling occurs for below the minimum possible payoff, and only rare players survive for above the column maximin value. This analysis provides a direct way to compute a naive safe space, assuming full support (about which we will elaborate below).

For higher-dimensional games, the computation of safe spaces is more complex. It is possible that a player type goes extinct, irreversibly reducing the game to a lower-dimensional sub-game for which there may be a different safe space. We adopt the game-theoretic notion of a strategy being in the support to express the surviving strategies in such a scenario. Then, given a specified threshold payoff, we compute safe spaces for all possible supports and take the union to find the set of all safe strategies.

To see this procedure, consider a game with the following payoff matrix:

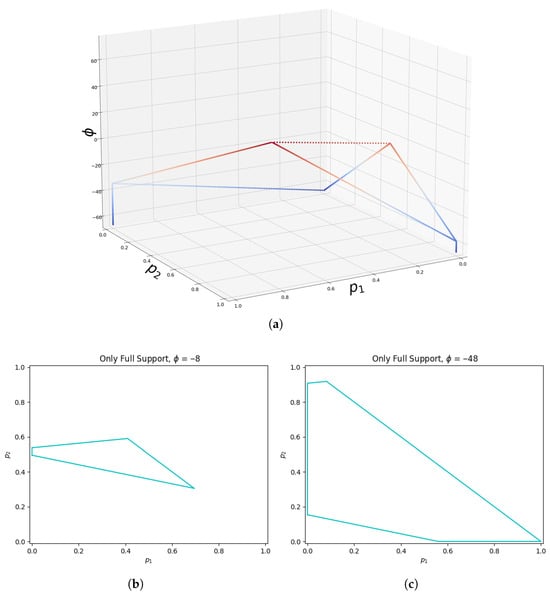

This game has the pure Nash equilibria , , and , and the mixed Nash equilibria and where and . And it has the maximin value of approximately . The numerical results for the safe spaces of this game are generated by the following procedure and plotted in Figure 2 and Figure 3, where the first plot is the safe space only considering the full support, and the second plot is the safe space where all supports are considered. Since the vertices of the safe spaces are three-dimensional probability vectors, there are only two degrees of freedom. Thus, we only need to choose two components to record and the remaining axis to record the thresholds. In our plot, the x-axis is the first coordinate of the vertices, the y-axis is the second, and the z-axis is the axis of thresholds. For different threshold values, we can compute the vertices of the safe spaces. This can be done by solving the conversion problem stated earlier and then recording the values for each vertex and plotting the three axes.

Figure 2.

Visualization of the full support safe space for a game (panel (a)). Each horizontal slice of the shape is the polyhedron corresponding to the safe space given by the threshold , where a colder color represents a lower threshold. Panels (b) and (c) are examples of horizontal slices at thresholds and , respectively. Vertices of the polyhedra vary linearly and converge to maximin strategies (typically unique, but not unique in general) at the maximin value.

Figure 3.

Visualization of the overall safe space for a game (panel (a)). This shape is a union of convex subsets (though the union is not convex in general) for all sub-games obtained by using all possible supports. Panels (b) and (c) are examples of horizontal slices at and , respectively. The supports are differentiated by color. One can see that the safe space is convex for one support, but the unions of safe spaces for all supports are not convex in general.

Given a payoff matrix , our results are generated in the following way. First, we compute the column maximin value of the row payoff matrix. Since the vector of fitnesses for each player type are convex combinations of the columns of the row payoff matrix where the coefficients are the frequencies of each player type. We are looking for the maximum possible value of the minimum entry in the fitness vector under all possible convex column combinations. This is exactly the column maximin value. This maximin value is the maximum threshold given that a full-support safe space exists. Note that the maximin value may vary if the support is changed due to the irreversibility in truncation selection. Following this, we generate a set of thresholds to observe, ranging from the minimum of the payoff matrix to its maximum. When a threshold is less than or equal to the minimum, no culling happens, and when greater than the maximin value, no non-empty safe space exists for full support with respect to the maximin value.

3. Discussion

We have applied the concept of safe spaces, linear programming, and polyhedral representation conversion to two subjects of game theory: partially malicious players and independent truncation selection. Our aim was to develop methods that allow players to manage worst-case scenarios numerically.

Regarding partially malicious players, we constructed a simple model for players who are partially malicious. Such players only become malicious after securing a specified minimum payoff. If we require defensive plays against such players, we may use a generalized maximin procedure. In the case where both players have set their risk thresholds sufficiently low, both will have non-empty safe strategy sets, and thus the game could be formulated as a bilinear game (). Future studies could develop and refine our decision rule, and the impetus for maliciousness could be directly modeled, such as by including relational utility. Additionally, truncation selection and the generalized maximin methods presented here may be used to address problems in control theory (; ).

With respect to truncation selection, we showed that the equilibria are convex subsets of the probability space for each support. As the selection threshold varies linearly, the vertices of safe spaces also change linearly. When the threshold is exactly equal to the maximin value, the safe space converges to the set of maximin strategies. When the threshold is above the maximin value, extinction happens, and the game is irreversibly reduced. In general, the states do not converge to one of the Nash equilibria of the underlying game. These findings hold under the assumption of large populations, though prior work has shown how small populations can exhibit unexpected behaviors (; ; ). Using the polyhedral conversion approach, we can compute the set of safe spaces analytically or less computationally intensively (with respect to the population size) than with stochastic simulations. Additionally, these methods are simpler than analyzing differential algebraic equations, which have been used to model truncation selection (). However, our methods can still be computationally expensive for strategy spaces of high dimension. The computational complexity of polyhedral conversion algorithms depends on several factors (; ): size of intermediate polytopes (sensitive to the ordering of half-spaces), dimension, number of half-spaces, and vertices. And, there exists a class of polytopes for which the double description method is exponential (). Nonetheless, we recommend the methods stated above for practical problems of relatively low dimension, while conducting simulations may be more practical for games of high dimension.

More broadly, our paper provides two additional scenarios in game theory requiring dense computations that benefit from linear programming and polyhedral conversion. This reinforces the value of expressing game-theoretic concepts in linear algebraic language and converting problems into feasible problems that these algorithms can solve.

4. Materials and Methods

4.1. Risk Aversion and Partially Malicious Players

We begin by defining some basic game-theoretic concepts in linear algebraic terms, since this is a useful formulation for linear and polyhedron conversion problems (which are detailed in Appendix A and Appendix B). A finite two-player normal-form game can be represented by a payoff table where the rows and columns represent the players’ pure strategies, and each entry contains a tuple of payoffs to the row and column players, respectively. For example:

We separate this table into two matrices: the row payoff matrix , containing the first entries of each tuple; and the column payoff matrix , containing the second entries. A mixed strategy for the row (column) player is a probability vector whose dimension equals the number of rows (columns) of the payoff table. If the row player adopts strategy , her possible payoffs against a pure strategy playing column player is the portfolio . Similarly, if the column player adopts strategy , her portfolio is .

Players generally aim to maximize their payoffs, typically assuming that their opponents are rational. When this assumption fails (e.g., when opponents behave maliciously), a player’s degree of risk aversion becomes a key factor in strategy selection. Risk aversion can be measured in a variety of ways (; ). Here, we measure the risk aversion of a player’s strategy when facing an opponent whose behavior cannot be trusted to be fully rational in the following way. Consider a column player who is unsure of the rationality of their opponent (or indeed if they are malicious). The worst payoff to this player over all possible outcomes is . Let denote their maximin value—their highest guaranteed payoff against any opponent. When , we define the column player’s risk aversion for employing strategy as follows:

where is the portfolio for strategy . This equation maps to a real value, rescaling the payoff of the portfolio between the worst-case payoff () and the maximin guarantee () with intermediate values corresponding to partial risk aversion. We characterize a player by specifying a threshold value of her risk aversion , where she will restrict herself to strategies with risk aversion that meet or exceed it. Note that this measure is meaningful only when , since no improvement over the worst outcome is possible otherwise.

For our model of partially malicious behavior, the objective of a partially malicious player is twofold. They prioritize obtaining a payoff that satisfies their basic needs, a minimum value they require. Once that payoff is secured, they act maliciously by minimizing their opponent’s payoff. We proceed by assuming that the column player is partially malicious. Such a player’s decision-making can be determined by the following function that takes both players’ payoffs along with a specified minimum payoff threshold and maps them to a value:

is the minimum payoff the column player is satisfied with, and and are the payoffs of the row and column player, respectively. The function is strictly decreasing in , ensuring malicious behavior. For simplicity, we set . We emphasize that, although this resembles a typical utility function, it does not satisfy the continuity axiom of expected utility maximization (). The negative infinity simply reflects the player’s priority of achieving the minimum required payoff before optimizing their malicious objective. In practice, a sufficiently low real value, smaller than the minimum value of g in the game, can be used to maintain consistency with the framework of expected utility.

4.2. Truncation Selection

Independent truncation selection models the evolution of the state of a population with a mixture of players who play pure strategies of a symmetric game (). The population state is a vector whose entries are the proportions of the population of each strategy. The evolutionary process begins by specifying a selection pressure, a fixed threshold payoff for survival. Players compete against one another, earning payoffs from each encounter with an opponent. This process can be implemented by simulating round-robin tournaments with stochastic payoffs (; ), which generates a distribution of the payoffs averaged over the competitions. Payoffs may also be represented by distributions and studied with continuous dynamical systems (). Regardless of how payoffs are particularly earned, at the end of the competition, players with averaged payoffs below the threshold are culled and do not reproduce. The remaining players each reproduce at the same rate so as to replace the culled players. In this way, players need to only have adequate payoffs that meet the threshold and receive no further benefit for exceeding it.

Our model of truncation selection assumes a large number of interactions between players. Thus, the payoff variance is negligible, and players effectively earn their fitnesses (i.e., their payoffs averaged over all interactions), the entries of the row payoff matrix (see Appendix C for further details). In our agent-based simulations, we initialize a population of N players of randomly determined pure strategies. In each round of the game, players earn the average payoff from each interaction and those with payoffs below the threshold are culled. The remaining population reproduces to maintain the population size, rounding to the nearest whole number. This evolution is iterated until equilibria or extinction. Note that one round may be all that is required to reach an equilibrium.

Approaching this problem analytically, note that if the culling threshold payoff is less than or equal to the column maximin value of , then there exists a set of states where all player types meet or exceed the threshold. Thus, the population remains unchanged, and thus we treat such states as equilibria. We call the set of such equilibria the safe space. The set of equilibria for a given support typically forms a convex subset of the strategy simplex, and the overall safe space is the union of safe spaces for each support (note that the union of equilibria across supports is not convex in general). Assuming a full-support state (for other supports, one simply crosses out the corresponding rows and columns that are not in support), we can compute these equilibria via the following polyhedral conversion problem:

The first two constraints restrict the solution space inside the probability simplex, while the last enforces the equilibrium condition (i.e., that the payoffs of all player-types meet or exceed ).

When is less than or equal to the column maximin value of , the vertices in V-representation form a convex subset of the probability simplex. When the is exactly the column maximin value of , the V-representation is exactly the set of maximin strategies (typically single points, but can be a convex set in general). When is above the column maximin value of , the polyhedron is the empty set. Thus, all states will have at least one player type go extinct, reducing the game to a sub-game. States outside of a non-empty safe space may evolve toward this space, toward a reduced game, or toward complete extinction of all player types.

Author Contributions

Conceptualization, Z.Z. and B.M.; methodology, Z.Z. and B.M.; software, Z.Z.; validation, Z.Z.; formal analysis, Z.Z. and B.M.; investigation, Z.Z. and B.M.; writing—original draft preparation, Z.Z. and B.M.; writing—review and editing, Z.Z. and B.M.; visualization, Z.Z.; supervision, B.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Code is available at https://github.com/bmorsky/LP_malice_and_truncation (accessed on 30 August 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Linear Programming Methods for Computing Maximin Values

The maximin value for a player is the maximum possible value she can be guaranteed to obtain, and the strategies guaranteeing this value are called maximin strategies. In this section, we quickly review a linear programming method to compute, without loss of generality, the column player’s maximin strategy of a game.

One of the column player’s maximin strategies is a convex combination of the columns of the payoff matrix where the portfolio vector has its minimum entries maximized over the convex hull of the columns of . The value of the minimum entries, , is called the maximin value of this game for the column player. One can see that adding a scalar to all entries of will not change the column player’s maximin strategies: the maximin value of is just shifted by this scalar. One can also see that any with a positive column will have a positive maximin value, because the value is at least the minimum entry of the pure strategy corresponding to this column. The method for finding a maximin strategy for the column player can be stated as the following optimization problem:

Note that we do not know when we construct the linear program. To proceed, we can convert this optimization problem into a linear programming problem by expressing the objective function as a linear function of the variable vector.

An easy way to do this conversion is by first adding a scalar k to every entry of such that , one can arbitrarily choose a column to make it positive. Then, rather than using a probability vector , we use a non-negative vector . Dividing both sides of the inequality constraints by , setting , and ignoring the constraint for the moment, we obtain the following optimization problem:

Because , we can express the objective function as a linear function of , namely . Now, the optimization problem can be seen as a linear programming problem:

The solution for this problem yields a particular maximin strategy . The column maximin value of is the reciprocal of , shifted back by the scalar k we have previously added: .

Linear programming can be seen as iterating over the vertices of the polytope specified by the constraints (if feasible). The one that maximizes the linear objective function is a particular solution, based on the observation that solutions of such problems will always have intersection with at least one vertex of the polytope.

Appendix B. Polyhedral Representation Conversion

Since it is useful to use tools for solving polyhedral representation conversion problems when studying truncation selection, here we will briefly review this topic but also direct the reader to () for further details. Again, without loss of generality, let’s assume we are the column player. Then the portfolio vectors for each pure strategies are the columns of . The set of possible column portfolios for this game would be the convex hull of the columns, since mixed strategies are convex combinations over the columns. This set can be seen as a convex polyhedron in , where n is the number of rows of . A convex polyhedron has two equivalent expressions, namely V-representations and H-representations.

Under V-representations, the collection of the vertices of the convex hull is used to define the unique convex polyhedron. The set of possible portfolio vectors are naturally given in V-representations. To be specific, say , , …, are the columns of . These vectors correspond to the portfolio vectors of the player’s pure strategies. Then, the V-representation of the convex polyhedron equivalent to the set of possible portfolio vectors is , where stands for convex hulls.

Under H-representation, the polyhedron is described by a set of linear half-spaces, the intersection of which is exactly the convex polyhedron. A set of linear inequalities and equalities are typically used and arranged such that they are in form and . Here and are the matrices consisting of the coefficients of the inequalities and equalities, respectively.

In this paper we avoid repeatedly emphasizing the convex property by assuming a polyhedron is convex unless specified otherwise. The problem of converting a polyhedron from one representation to another is called a polyhedron conversion problem. Given feasibility, there are computational algorithms (; ) that solve this type of problem (though they can be computationally expensive for high dimensions).

Appendix C. Observing the Vanishing Variance for the Hawk-Dove Game

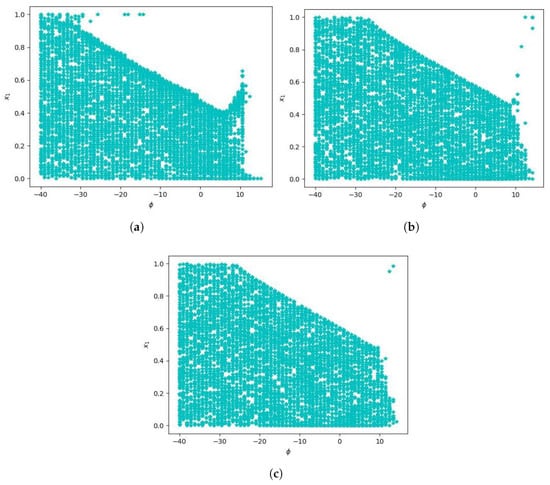

Here we elaborate on the dynamics of the Hawk-Dove game under independent truncation selection explored in (). To begin, we reproduce their original agent-based simulation result in Figure A1 to contrast with Figure 1 from the main text. The update rule and all parameters are taken from (). Before explaining these differences, we specify the truncation algorithm.

Specify a population size N and a survival threshold . Then, randomly determine the initial frequency of hawks noting that the frequency of doves is . Then, for each hawk and dove, there is a round robin tournament (which has high computational complexity in population size). We can replace the total mixing by expressing the fitness for interactions between hawks and doves as stochastic variables where i is the strategy earning the payoff and j is their opponent. and are the stochastic variables of the average payoffs of hawks and doves, respectively, which are a mixture of the other distributions. To obtain the expressions of means and variances of these distributions, we use the fact that for an individual hawk, it has interacted with approximately hawks and doves for fairly large populations. Then the cumulative payoff is averaged by population size, so we have the following:

By the calculation rules for linear combinations of normal random variables, we are able to obtain and . Similarly for doves, we have and . An immediate observation is that the standard deviations are inversely dependent to the root of population size. As the variances go to zero, and we obtain the scenario in the main text. From our exploration of these simulations, any population size above roughly will be able to converge closely to the result in the main text. However the round robin tournament simulation becomes computationally expensive as population size increases.

Figure A1.

Plotted here are the results of typical runs of the model in () with a population sizes for panels (a), (b), and (c), respectively.

Another difference between Figure 1 and Figure A1 is that the upper limit of hawks is higher in the former figure than the latter. Note that the mean payoffs for both hawks and doves decrease as increases. However, the standard deviation for hawks increases as increases, and the standard deviation for doves decreases as increases. Thus, for a high initial hawk frequency, hawks have a higher chance of being below a low threshold than doves. However, for large , the probability of doves obtaining adequate payoffs becomes low. Since the standard deviation of hawks is much larger, hawks have a greater chance to obtain adequate payoffs even when the threshold is above the maximin value. Although a large standard deviation means a higher probability for extremely low payoffs, when analyzing whether a population goes extinct, we only care about the probability for survival. Thus, for a threshold that is significantly larger than the mean payoffs, the strategy with a larger payoff variance will have a higher chance of survival.

References

- Avis, D., & Fukuda, K. (1991, June 10–12). A pivoting algorithm for convex hulls and vertex enumeration of arrangements and polyhedra. Seventh Annual Symposium on Computational Geometry (pp. 98–104), North Conway, NH, USA. [Google Scholar]

- Avis, D., Fukuda, K., & Picozzi, S. (2002). On canonical representations of convex polyhedra. In Mathematical software (pp. 350–360). World Scientific. [Google Scholar]

- Binmore, K. (1992). Fun and games. In A text on game theory. D. C. Heath & Co. [Google Scholar]

- Bremner, D., Sikiric, M. D., & Schürmann, A. (2009). Polyhedral representation conversion up to symmetries. In CRM proceedings (Vol. 48, pp. 45–72). Springer. [Google Scholar]

- Bruyere, V., Filiot, E., Randour, M., & Raskin, J.-F. (2014, April 5–6). Expectations or guarantees? I want it all! A crossroad between games and MDPs. 2nd International Workshop on Strategic Reasoning (Vol. 146, pp. 1–8), Grenoble, France. [Google Scholar]

- Burton-Chellew, M. N., El Mouden, C., & West, S. A. (2016). Conditional cooperation and confusion in public-goods experiments. Proceedings of the National Academy of Sciences of the United States of America, 113(5), 1291–1296. [Google Scholar] [CrossRef] [PubMed]

- Cressman, R., & Tao, Y. (2014). The replicator equation and other game dynamics. Proceedings of the National Academy of Sciences of the United States of America, 111(Suppl. 3), 10810–10817. [Google Scholar] [CrossRef]

- Dave, A., & Malikopoulos, A. A. (2024). Approximate information states for worst case control and learning in uncertain systems. IEEE Transactions on Automatic Control, 70(1), 127–142. [Google Scholar] [CrossRef]

- Dickhaut, J., & Kaplan, T. (1993). A program for finding Nash equilibria. In Economic and financial modeling with mathematica® (pp. 148–166). Springer. [Google Scholar] [CrossRef]

- Etessami, K., & Yannakakis, M. (2010). On the complexity of Nash equilibria and other fixed points. SIAM Journal on Computing, 39(6), 2531–2597. [Google Scholar] [CrossRef]

- Ficici, S. G. (2006, July 8–12). A game-theoretic investigation of selection methods in two-population coevolution. 8th Annual Conference on Genetic and Evolutionary Computation (pp. 321–328), Seattle, WA, USA. [Google Scholar] [CrossRef]

- Ficici, S. G., Melnik, O., & Pollack, J. B. (2005). A game-theoretic and dynamical-systems analysis of selection methods in coevolution. IEEE Transactions on Evolutionary Computation, 9(6), 580–602. [Google Scholar] [CrossRef]

- Ficici, S. G., & Pollack, J. B. (2000, July 10–12). Effects of finite populations on evolutionary stable strategies. 2nd Annual Conference on Genetic and Evolutionary Computation (pp. 927–934), Las Vegas, NV, USA. [Google Scholar]

- Ficici, S. G., & Pollack, J. B. (2007). Evolutionary dynamics of finite populations in games with polymorphic fitness equilibria. Journal of Theoretical Biology, 247(3), 426–441. [Google Scholar] [CrossRef]

- Fogel, D. B., Fogel, G. B., & Andrews, P. C. (1997). On the instability of evolutionary stable strategies. BioSystems, 44(2), 135–152. [Google Scholar] [CrossRef]

- Fogel, G. B., Andrews, P. C., & Fogel, D. B. (1998). On the instability of evolutionary stable strategies in small populations. Ecological Modelling, 109(3), 283–294. [Google Scholar] [CrossRef]

- Fogel, G. B., & Fogel, D. B. (2011). Simulating natural selection as a culling mechanism on finite populations with the hawk–dove game. BioSystems, 104(1), 57–62. [Google Scholar] [CrossRef] [PubMed]

- Fudenberg, D., & Liang, A. (2019). Predicting and understanding initial play. American Economic Review, 109(12), 4112–4141. [Google Scholar] [CrossRef]

- Fukuda, K., & Prodon, A. (1995). Double description method revisited. In Franco-Japanese and Franco-Chinese conference on combinatorics and computer science (pp. 91–111). Springer. [Google Scholar]

- Ganzfried, S. (2023, December 13–15). Safe equilibrium. 2023 62nd IEEE Conference on Decision and Control (CDC) (pp. 5230–5236), Singapore. [Google Scholar]

- Garg, J., Jiang, A. X., & Mehta, R. (2011). Bilinear games: Polynomial time algorithms for rank based subclasses. In International workshop on internet and network economics (pp. 399–407). Springer. [Google Scholar]

- Harsanyi, J. C. (1968). Games with incomplete information played by ‘bayesian’ players. Management Science. [Google Scholar]

- Henrich, J., McElreath, R., Barr, A., Ensminger, J., Barrett, C., Bolyanatz, A., Cardenas, J. C., Gurven, M., Gwako, E., & Henrich, N. (2006). Costly punishment across human societies. Science, 312(5781), 1767–1770. [Google Scholar] [CrossRef] [PubMed]

- Holler, M. J. (1990). The unprofitability of mixed-strategy equilibria in two-person games: A second folk-theorem. Economics Letters, 32(4), 319–323. [Google Scholar] [CrossRef]

- Holler, M. J. (1992). Nash equilibrium reconsidered and an option for maximin. Quality and Quantity, 26(3), 323–335. [Google Scholar] [CrossRef]

- Holler, M. J., & Høst, V. (2019). Maximin vs. Nash equilibrium: Theoretical results and empirical evidence. In Optimal decisions in markets and planned economies (pp. 245–255). Routledge. [Google Scholar]

- Htun, Y. (2005). Irrationality in game theory. World Scientific. [Google Scholar]

- Jebari, K., & Madiafi, M. (2013). Selection methods for genetic algorithms. International Journal of Emerging Sciences, 3(4), 333–344. [Google Scholar]

- John von Neumann, O. M. (1944). Theory of games and economic behavior. Princeton University Press. [Google Scholar]

- Li, J., Yang, M., Lewis, F. L., & Zheng, M. (2024). Compensator-based self-learning: Optimal operational control for two-time-scale systems with input constraints. IEEE Transactions on Industrial Informatics, 20(7), 9465–9475. [Google Scholar] [CrossRef]

- Liu, Y., & Zhu, Q. (2023). Event-triggered adaptive neural network control for stochastic nonlinear systems with state constraints and time-varying delays. IEEE Transactions on Neural Networks and Learning Systems, 34(4), 1932–1944. [Google Scholar] [CrossRef]

- Lucchetti, R. (2006). Linear programming and game theory. In Convexity and well-posed problems (pp. 117–137). Springer. [Google Scholar]

- Meyer, D., & Meyer, J. (2006). Measuring risk aversion. Foundations and Trends in Microeconomics, 2, 107–203. [Google Scholar] [CrossRef]

- Morsky, B., & Bauch, C. T. (2016). Truncation selection and payoff distributions applied to the replicator equation. Journal of Theoretical Biology, 404, 383–390. [Google Scholar] [CrossRef]

- Morsky, B., & Bauch, C. T. (2019). The impact of truncation selection and diffusion on cooperation in spatial games. Journal of Theoretical Biology, 466, 64–83. [Google Scholar] [CrossRef]

- Morsky, B., Smolla, M., & Akçay, E. (2020). Evolution of contribution timing in public goods games. Proceedings of the Royal Society B, 287(1927), 20200735. [Google Scholar] [CrossRef]

- Moscibroda, T., Schmid, S., & Wattenhofer, R. (2009). The price of malice: A game-theoretic framework for malicious behavior. Internet Mathematics, 6(2), 125–156. [Google Scholar] [CrossRef]

- Myerson, R. B. (1991). Game theory: Analysis of conflict. Harvard University Press. [Google Scholar]

- Nash, J. F. (1950). Equilibrium points in n-person games. Proceedings of the National Academy of Science of the United States of America, 36(1), 48–49. [Google Scholar] [CrossRef] [PubMed]

- Papadimitriou, C. H. (2007). The complexity of finding Nash equilibria. Algorithmic Game Theory, 2, 30. [Google Scholar]

- Parrilo, P. A. (2006, December 13–15). Polynomial games and sum of squares optimization. 45th IEEE Conference on Decision and Control (pp. 2855–2860), San Diego, CA, USA. [Google Scholar] [CrossRef]

- Porter, R., Nudelman, E., & Shoham, Y. (2008). Simple search methods for finding a Nash equilibrium. Games and Economic Behavior, 63(2), 642–662. [Google Scholar] [CrossRef]

- Pratt, J. W. (1964). Risk aversion in the small and in the large. Econometrica, 32(1/2), 122–136. [Google Scholar] [CrossRef]

- Selten, R., & Bielefeld, R. S. (1988). Examination of the perfectness concept for equilibrium points in extensive games. In Models of strategic rationality (Vol. 2). Theory and Decision Library C. Springer. [Google Scholar]

- Simon, H. A. (1955). A behavioral model of rational choice. The Quarterly Journal of Economics, 69, 99–118. [Google Scholar] [CrossRef]

- Simons, S. (1995). Minimax theorems and their proofs. In Minimax and applications (pp. 1–23). Springer. [Google Scholar]

- Su, R., & Morsky, B. (2024). Relational utility and social norms in games. Mathematical Social Sciences, 127, 54–61. [Google Scholar] [CrossRef]

- Thie, P. R., & Keough, G. E. (2011). An introduction to linear programming and game theory. John Wiley & Sons. [Google Scholar]

- Von Stengel, B. (2021). Game theory basics. Cambridge University Press. [Google Scholar]

- Wright, J., & Leyton-Brown, K. (2014, June 8–12). Level-0 meta-models for predicting human behavior in games. EC 2014—Proceedings of the 15th ACM Conference on Economics and Computation, Palo Alto, CA, USA. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).