Comparative Assessment of Machine Learning Methods for Urban Vegetation Mapping Using Multitemporal Sentinel-1 Imagery

Abstract

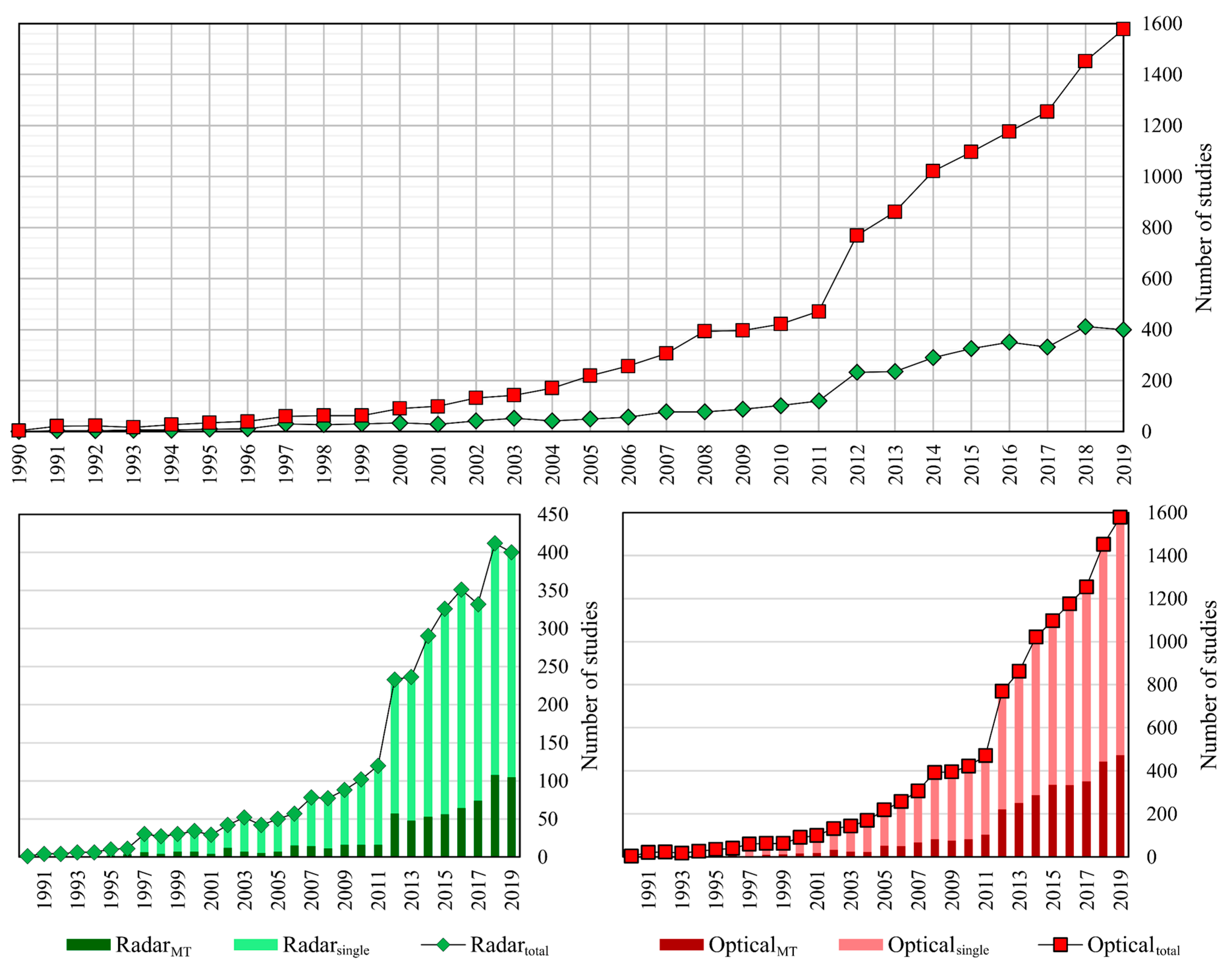

1. Introduction

2. Study Areas and Dataset

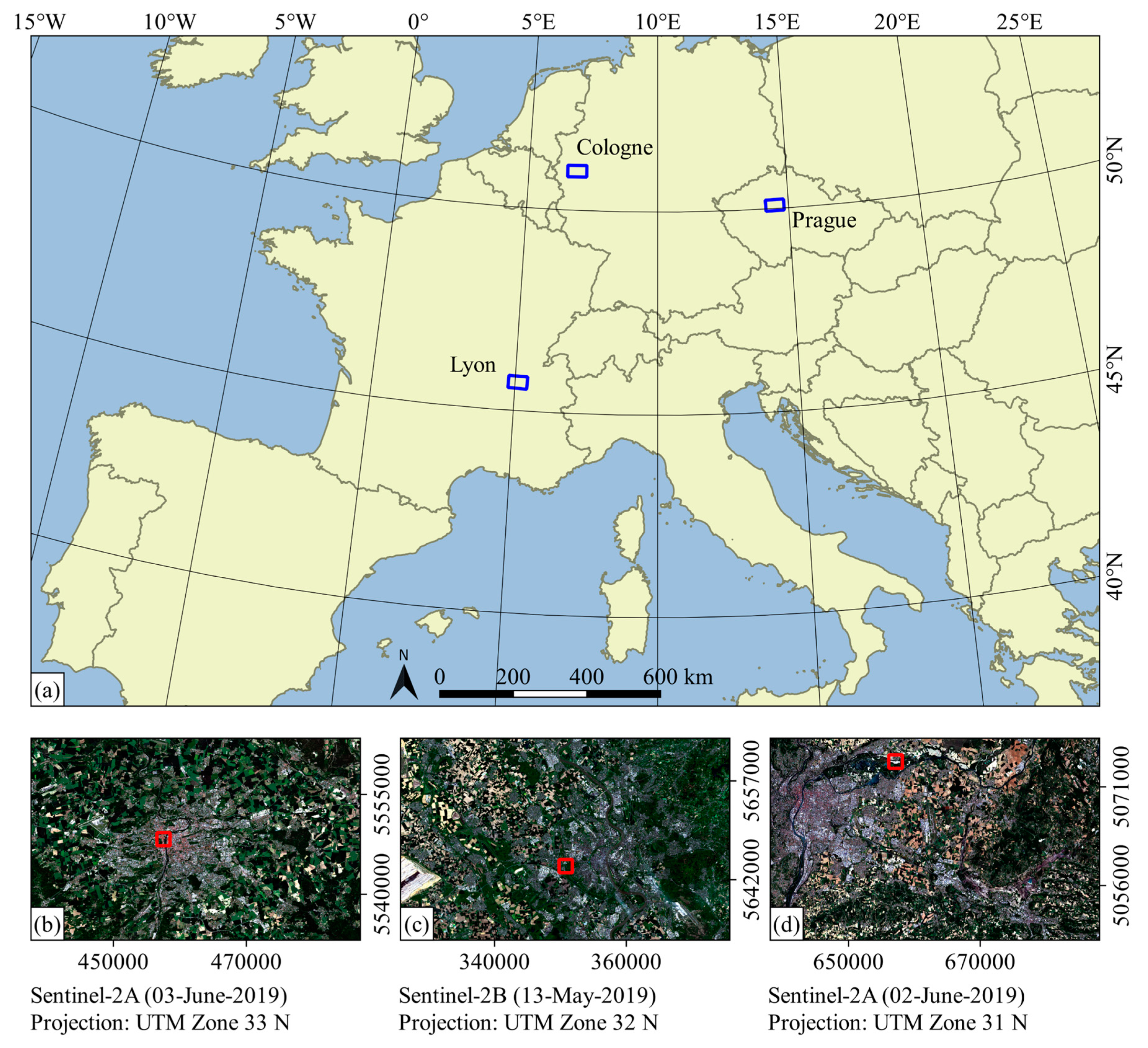

2.1. Study Areas

2.2. Data

3. Methods

3.1. Pre-Processing

3.2. Speckle Filtering

3.3. Classification and Accuracy Assessment

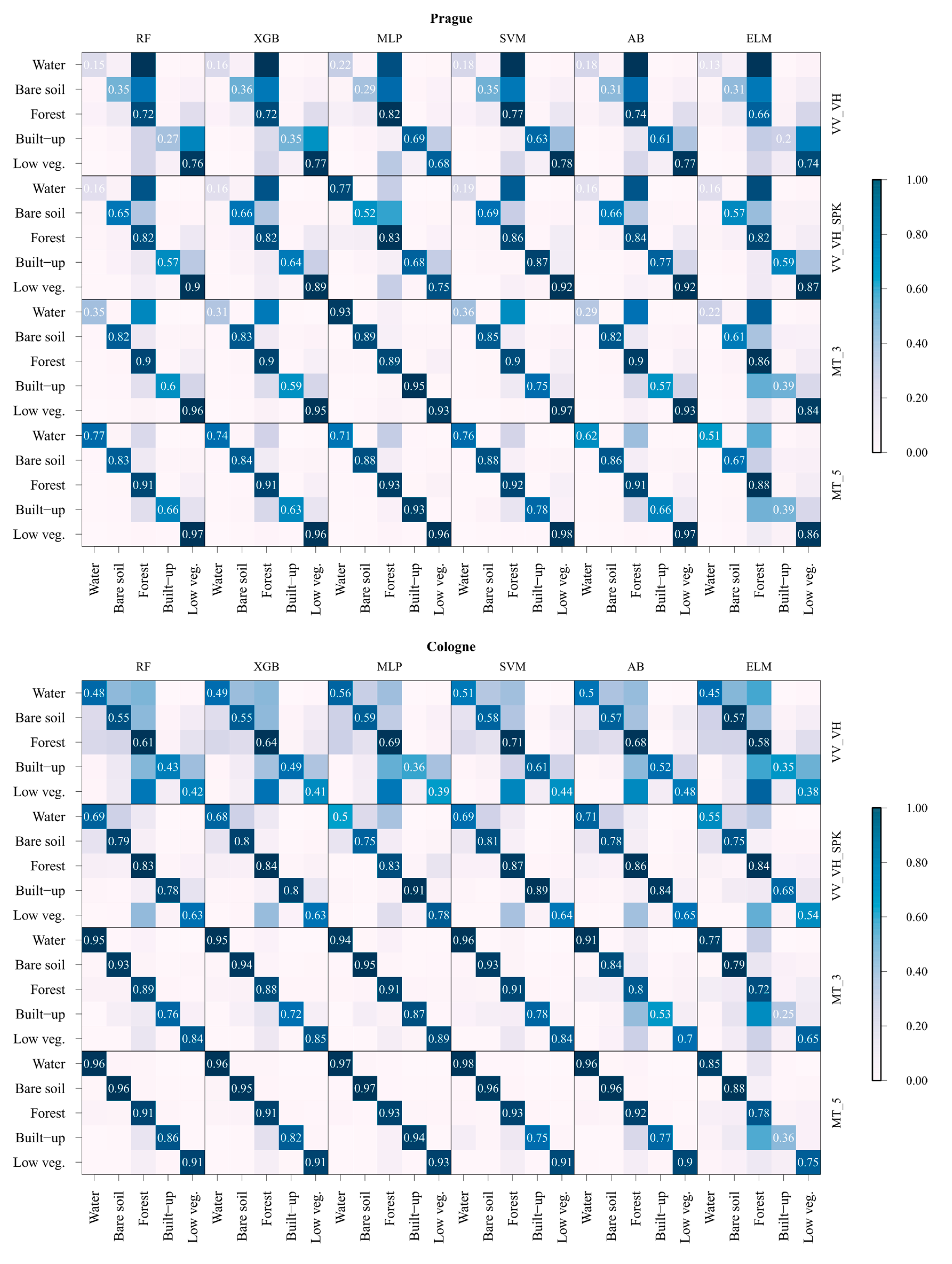

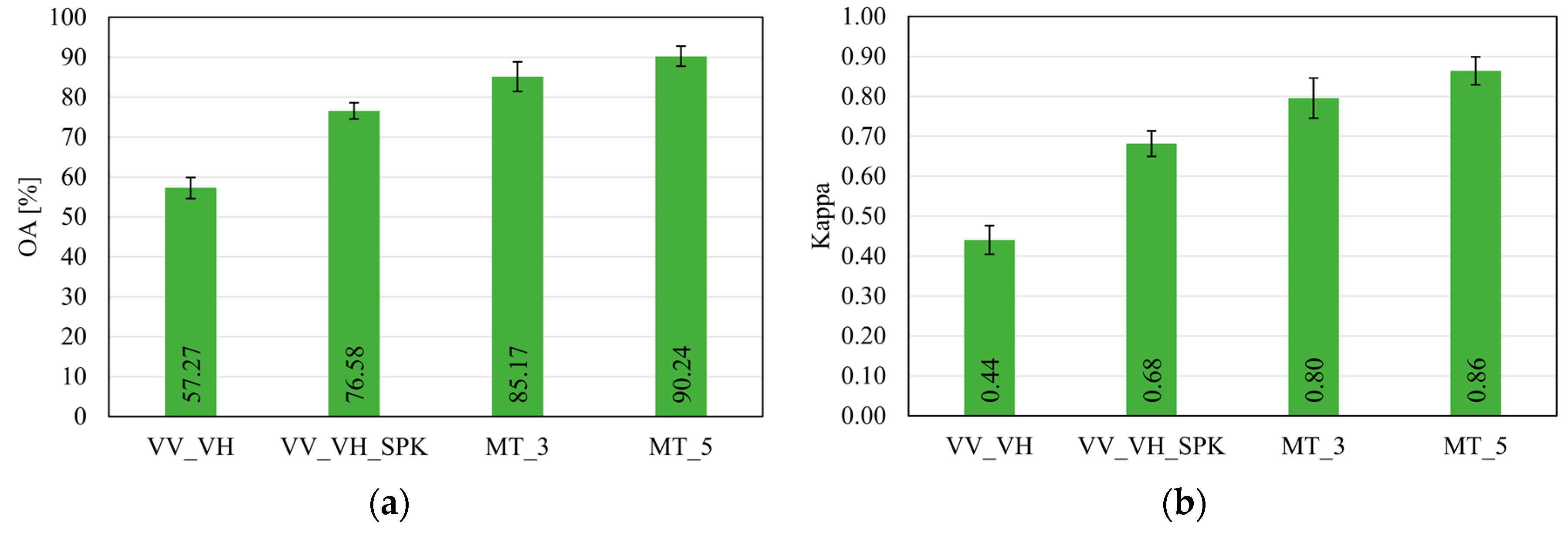

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Study Area | Prague | Cologne | Lyon |
|---|---|---|---|
| Country | Czech Republic | Germany | France |
| Lat/Long | 50°5′ N, 14°25′ E | 50°56′ N, 6°57′ E | 45°45′ N, 4°50′ E |
| Extent (pixels) | 4958 × 3038 | 5213 × 3151 | 5145 × 3344 |
| Climate | Humid continental | Temperate oceanic | Temperate oceanic |
| Average annual temperature (°C) (2019) * | max. 14.9, mean 12.6, min. 7.5 | max. 16.5, mean 13.9, min. 9.3 | max. 18.2, mean 14.8, min. 9.6 |
| Precipitation (mm) (2019) * | 984.0 | 979.1 | 1524.6 |
| Soils ** | Haplic Chernozems | Orthic Luvisols | Vertic Luvisols |


| Study Area | Date | Satellite | Acquisition Orbit |
|---|---|---|---|
| Prague | 05 May 2019 | S1B | DESC |
| Prague | 31 May 2019 | S1A | DESC |
| Prague | 06 June 2019 | S1B | DESC |
| Prague | 12 June 2019 | S1A | DESC |
| Prague | 18 June 2019 | S1B | DESC |
| Cologne | 01 May 2019 | S1A | ASC |
| Cologne | 07 May 2019 | S1B | ASC |
| Cologne | 13 May 2019 | S1A | ASC |
| Cologne | 19 May 2019 | S1B | ASC |
| Cologne | 25 May 2019 | S1A | ASC |
| Lyon | 17 May 2019 | S1B | ASC |
| Lyon | 23 May 2019 | S1A | ASC |
| Lyon | 04 June 2019 | S1A | ASC |
| Lyon | 10 June 2019 | S1B | ASC |
| Lyon | 16 June 2019 | S1A | ASC |

| Class | Prague Train | Prague Valid | Cologne Train | Cologne Valid | Lyon Train | Lyon Valid |
|---|---|---|---|---|---|---|
| Water | 105 | 45 | 105 | 45 | 105 | 45 |
| Bare land | 140 | 60 | 140 | 60 | 140 | 60 |
| Forest | 140 | 60 | 154 | 66 | 140 | 60 |
| Built-up | 140 | 60 | 140 | 60 | 140 | 60 |
| Low vegetation | 140 | 60 | 154 | 66 | 140 | 60 |
| Total | 665 | 285 | 693 | 297 | 665 | 285 |

| Class. Scenario | Method | OA (%) | K | Water F1 | Water FoM | Bare Land F1 | Bare Land FoM | Forest F1 | Forest FoM | Built-Up F1 | Built-Up FoM | Low Veg. F1 | Low Veg. FoM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| VV_VH | RF | 55.37 | 0.42 | 0.52 | 0.42 | 0.57 | 0.41 | 0.49 | 0.36 | 0.46 | 0.35 | 0.58 | 0.41 |
| VV_VH | XGB | 57.26 | 0.44 | 0.53 | 0.43 | 0.59 | 0.43 | 0.49 | 0.38 | 0.50 | 0.37 | 0.61 | 0.44 |
| VV_VH | MLP | 57.05 | 0.44 | 0.53 | 0.41 | 0.59 | 0.44 | 0.40 | 0.37 | 0.53 | 0.39 | 0.65 | 0.48 |
| VV_VH | SVM | 61.63 | 0.49 | 0.55 | 0.47 | 0.61 | 0.47 | 0.52 | 0.42 | 0.58 | 0.43 | 0.68 | 0.51 |
| VV_VH | AB | 60.20 | 0.48 | 0.55 | 0.45 | 0.60 | 0.46 | 0.51 | 0.40 | 0.56 | 0.41 | 0.67 | 0.50 |
| VV_VH | ELM | 52.11 | 0.38 | 0.50 | 0.39 | 0.56 | 0.39 | 0.44 | 0.33 | 0.34 | 0.29 | 0.57 | 0.39 |
| VV_VH_SPK | RF | 75.78 | 0.67 | 0.61 | 0.58 | 0.77 | 0.63 | 0.75 | 0.61 | 0.72 | 0.58 | 0.77 | 0.64 |
| VV_VH_SPK | XGB | 76.07 | 0.68 | 0.61 | 0.57 | 0.77 | 0.63 | 0.75 | 0.61 | 0.74 | 0.60 | 0.78 | 0.64 |
| VV_VH_SPK | MLP | 76.48 | 0.67 | 0.59 | 0.57 | 0.74 | 0.61 | 0.76 | 0.62 | 0.78 | 0.64 | 0.79 | 0.65 |
| VV_VH_SPK | SVM | 80.24 | 0.73 | 0.65 | 0.61 | 0.81 | 0.68 | 0.79 | 0.66 | 0.80 | 0.68 | 0.83 | 0.71 |
| VV_VH_SPK | AB | 78.10 | 0.70 | 0.63 | 0.61 | 0.80 | 0.67 | 0.77 | 0.63 | 0.78 | 0.64 | 0.81 | 0.68 |
| VV_VH_SPK | ELM | 72.79 | 0.63 | 0.59 | 0.54 | 0.76 | 0.61 | 0.71 | 0.57 | 0.59 | 0.48 | 0.75 | 0.61 |
| MT_3 | RF | 88.62 | 0.84 | 0.80 | 0.77 | 0.92 | 0.85 | 0.88 | 0.79 | 0.76 | 0.66 | 0.89 | 0.80 |
| MT_3 | XGB | 87.96 | 0.83 | 0.79 | 0.77 | 0.92 | 0.85 | 0.87 | 0.78 | 0.75 | 0.64 | 0.88 | 0.79 |
| MT_3 | MLP | 92.27 | 0.89 | 0.90 | 0.85 | 0.93 | 0.88 | 0.92 | 0.85 | 0.81 | 0.72 | 0.91 | 0.84 |
| MT_3 | SVM | 90.14 | 0.86 | 0.80 | 0.78 | 0.93 | 0.87 | 0.90 | 0.82 | 0.80 | 0.70 | 0.90 | 0.83 |
| MT_3 | AB | 81.58 | 0.75 | 0.76 | 0.72 | 0.88 | 0.77 | 0.79 | 0.67 | 0.66 | 0.55 | 0.80 | 0.69 |
| MT_3 | ELM | 70.42 | 0.60 | 0.69 | 0.63 | 0.79 | 0.64 | 0.66 | 0.52 | 0.40 | 0.37 | 0.70 | 0.56 |
| MT_5 | RF | 92.26 | 0.89 | 0.91 | 0.86 | 0.93 | 0.88 | 0.92 | 0.85 | 0.81 | 0.71 | 0.93 | 0.86 |
| MT_5 | XGB | 91.73 | 0.89 | 0.91 | 0.85 | 0.93 | 0.87 | 0.92 | 0.85 | 0.80 | 0.70 | 0.92 | 0.85 |
| MT_5 | MLP | 93.95 | 0.92 | 0.92 | 0.87 | 0.95 | 0.91 | 0.93 | 0.88 | 0.86 | 0.77 | 0.93 | 0.88 |
| MT_5 | SVM | 92.92 | 0.90 | 0.89 | 0.82 | 0.94 | 0.88 | 0.92 | 0.85 | 0.80 | 0.70 | 0.92 | 0.85 |
| MT_5 | AB | 91.55 | 0.88 | 0.89 | 0.84 | 0.93 | 0.88 | 0.91 | 0.84 | 0.79 | 0.69 | 0.92 | 0.85 |
| MT_5 | ELM | 79.02 | 0.71 | 0.80 | 0.71 | 0.84 | 0.72 | 0.77 | 0.64 | 0.47 | 0.44 | 0.79 | 0.65 |

| Class. Scenario | Method | Water UA (%) | Water PA (%) | Bare Land UA (%) | Bare Land PA (%) | Forest UA (%) | Forest PA (%) | Built-Up UA (%) | Built-Up PA (%) | Low Veg. UA (%) | Low Veg. PA (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| VV_VH | RF | 47.43 | 66.49 | 50.70 | 66.44 | 63.44 | 39.91 | 37.63 | 59.80 | 58.22 | 59.93 |
| VV_VH | XGB | 48.26 | 67.60 | 51.06 | 70.22 | 65.01 | 40.05 | 44.10 | 57.88 | 59.77 | 64.29 |
| VV_VH | MLP | 48.95 | 60.94 | 47.93 | 79.57 | 72.80 | 28.02 | 53.95 | 57.32 | 58.77 | 75.55 |
| VV_VH | SVM | 49.54 | 70.88 | 52.49 | 76.75 | 71.12 | 42.00 | 61.73 | 55.13 | 64.39 | 74.53 |
| VV_VH | AB | 48.38 | 70.54 | 51.01 | 75.84 | 69.10 | 40.98 | 57.58 | 54.74 | 64.20 | 72.02 |
| VV_VH | ELM | 46.03 | 63.93 | 49.05 | 67.33 | 58.50 | 36.04 | 31.53 | 38.12 | 55.58 | 60.88 |
| VV_VH_SPK | RF | 59.71 | 73.50 | 73.94 | 80.72 | 81.56 | 69.34 | 69.09 | 76.68 | 75.86 | 79.86 |
| VV_VH_SPK | XGB | 59.23 | 72.99 | 74.27 | 80.91 | 81.91 | 69.64 | 71.95 | 76.48 | 75.65 | 80.85 |
| VV_VH_SPK | MLP | 75.02 | 58.21 | 67.27 | 81.62 | 82.17 | 70.13 | 81.75 | 75.17 | 79.63 | 79.06 |
| VV_VH_SPK | SVM | 60.61 | 76.86 | 76.51 | 86.08 | 84.81 | 74.23 | 87.97 | 73.96 | 80.62 | 86.40 |
| VV_VH_SPK | AB | 60.73 | 76.50 | 74.11 | 86.22 | 84.16 | 70.36 | 79.72 | 76.26 | 79.20 | 83.37 |
| VV_VH_SPK | ELM | 53.37 | 73.66 | 71.46 | 81.70 | 80.06 | 65.06 | 66.76 | 53.23 | 71.83 | 80.44 |
| MT_3 | RF | 76.02 | 91.36 | 90.48 | 93.09 | 89.95 | 87.12 | 72.32 | 81.62 | 89.56 | 88.05 |
| MT_3 | XGB | 74.90 | 91.60 | 91.21 | 92.63 | 89.68 | 85.49 | 69.64 | 81.53 | 88.90 | 87.82 |
| MT_3 | MLP | 95.04 | 86.20 | 93.91 | 92.85 | 90.96 | 94.07 | 90.91 | 73.80 | 90.94 | 91.04 |
| MT_3 | SVM | 76.99 | 91.49 | 93.21 | 94.39 | 90.23 | 88.78 | 79.91 | 80.47 | 90.27 | 90.97 |
| MT_3 | AB | 71.43 | 89.65 | 86.14 | 89.52 | 83.23 | 75.52 | 59.03 | 74.99 | 80.30 | 80.77 |
| MT_3 | ELM | 62.64 | 88.13 | 76.34 | 83.17 | 74.32 | 59.36 | 35.81 | 45.86 | 68.03 | 71.92 |
| MT_5 | RF | 90.34 | 93.07 | 92.20 | 94.35 | 91.50 | 92.75 | 79.63 | 82.94 | 93.24 | 92.07 |
| MT_5 | XGB | 89.89 | 92.66 | 92.10 | 93.85 | 91.34 | 91.91 | 76.81 | 83.71 | 92.35 | 91.30 |
| MT_5 | MLP | 89.26 | 95.34 | 94.54 | 96.16 | 91.83 | 94.91 | 93.16 | 79.31 | 93.72 | 93.26 |
| MT_5 | SVM | 90.13 | 89.66 | 92.56 | 94.65 | 91.81 | 92.39 | 77.09 | 83.56 | 90.93 | 93.08 |
| MT_5 | AB | 86.03 | 93.42 | 92.38 | 94.44 | 91.25 | 91.17 | 75.68 | 83.91 | 92.71 | 91.02 |
| MT_5 | ELM | 77.07 | 87.33 | 81.53 | 87.38 | 79.31 | 75.49 | 48.84 | 48.06 | 76.36 | 80.97 |

| Study Area | Class. Scenario | RF | XGB | MLP | SVM | AB | ELM |
|---|---|---|---|---|---|---|---|
| Prague | VV_VH | 46.74 | 20.71 | 29.90 | X | 39.49 | 116.67 |
| Prague | VV_VH_SPK | 66.21 | 56.46 | 14.52 | X | 0.10 | 71.15 |
| Prague | MT_3 | 78.63 | 97.86 | X | 39.95 | 113.24 | 354.83 |
| Prague | MT_5 | 23.43 | 29.17 | X | 3.92 | 31.17 | 226.17 |
| Cologne | VV_VH | 12.98 | 13.85 | 15.15 | X | 0.48 | 31.17 |
| Cologne | VV_VH_SPK | 9.03 | 6.25 | 24.85 | X | 0.00 | 76.59 |
| Cologne | MT_3 | 17.60 | 18.71 | X | 7.36 | 21.19 | 207.76 |
| Cologne | MT_5 | 9.22 | 12.20 | X | 6.37 | 11.84 | 208.71 |
| Lyon | VV_VH | 31.73 | 16.62 | 25.28 | X | 1.78 | 44.21 |
| Lyon | VV_VH_SPK | 19.38 | 22.26 | 5.89 | X | 6.47 | 27.76 |
| Lyon | MT_3 | 4.29 | 7.93 | X | 0.27 | 10.54 | 121.95 |
| Lyon | MT_5 | 0.06 | 1.93 | X | 1.84 | 3.67 | 128.72 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Gašparović, M.; Dobrinić, D. Comparative Assessment of Machine Learning Methods for Urban Vegetation Mapping Using Multitemporal Sentinel-1 Imagery. Remote Sens. 2020, 12, 1952. https://doi.org/10.3390/rs12121952