A Coarse-to-Fine Deep Learning Based Land Use Change Detection Method for High-Resolution Remote Sensing Images

Abstract

1. Introduction

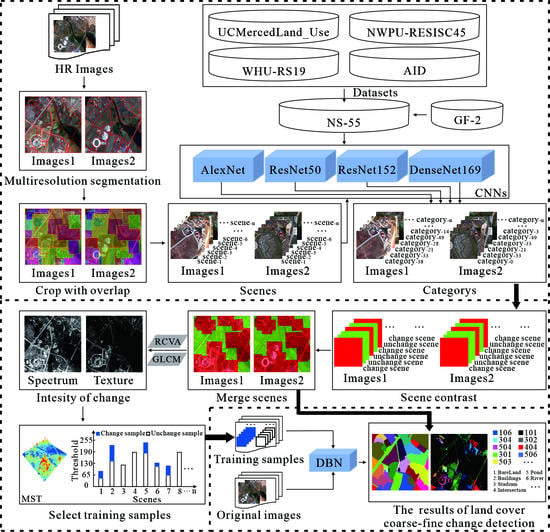

2. Methodology

2.1. NS-55 Dataset

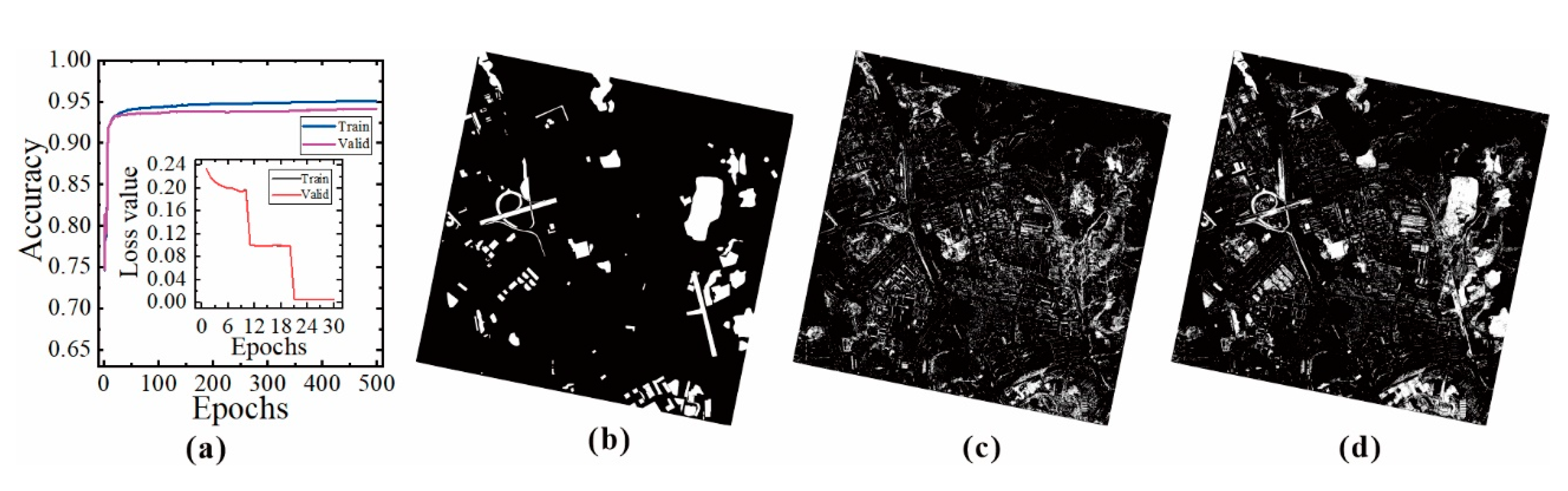

2.2. Coarse Deep-Learning Change Detection

2.3. Use Multi-Scale Threshold (MST) to Separate the Changed Samples from the Unchanged Samples

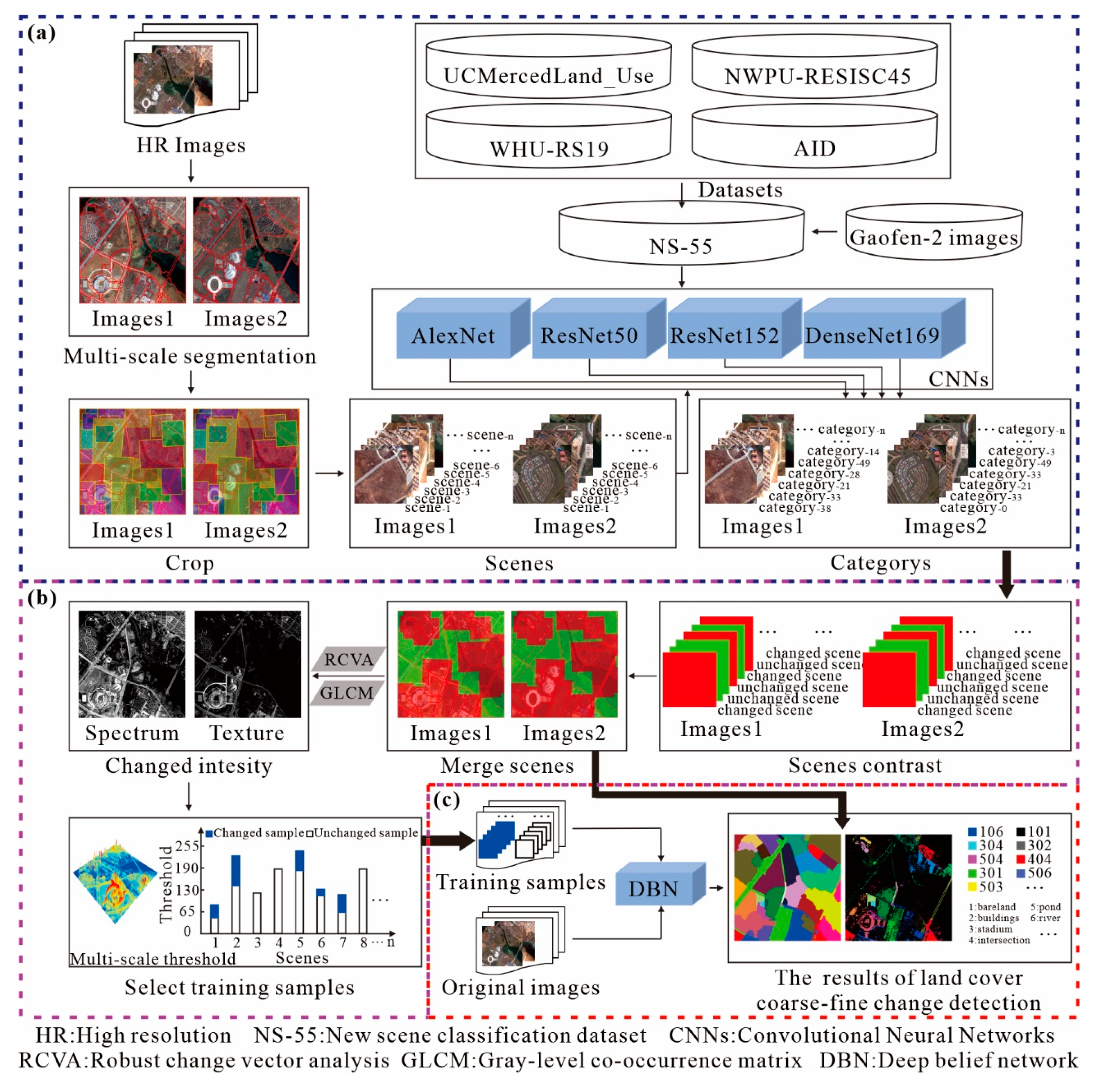

2.4. Fine Deep-Learning Change Detection

3. Experiments and Results

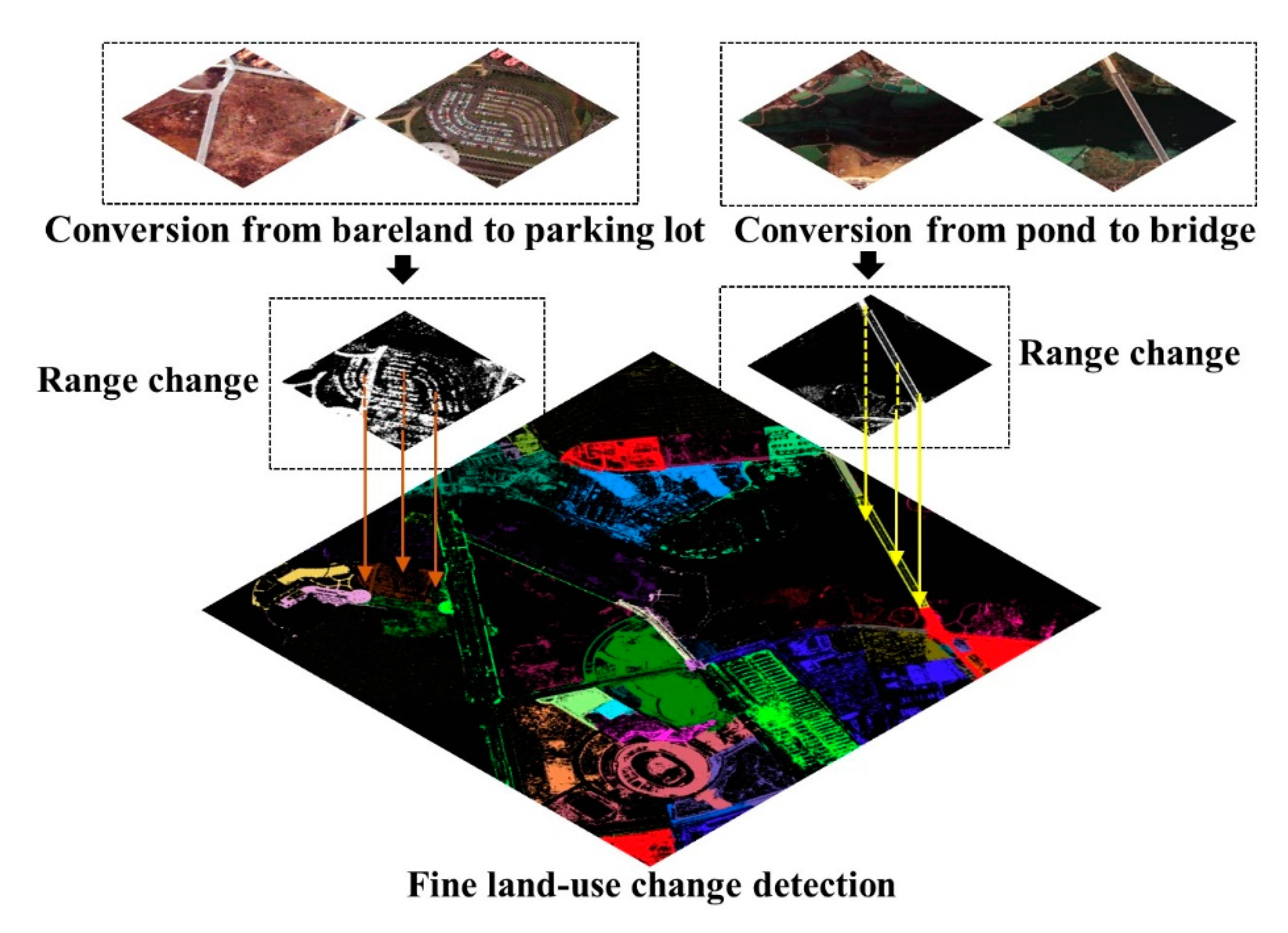

3.1. Experiment I

3.1.1. Study Area and Data

3.1.2. Data Processing and Accuracy Assessment

3.2. Experiment II

3.2.1. Study Area and Data

3.2.2. Data Processing and Accuracy Assessment

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Area | Method | A/% | R/% | FA/% | MA/% |
|---|---|---|---|---|---|
| Site1 | Single threshold | 68.39 | 41.78 | 59.92 | 58.22 |
| Site1 | MST | 86.64 | 78.10 | 27.15 | 21.91 |
| Site2 | Single threshold | 72.24 | 45.92 | 41.33 | 54.08 |
| Site2 | MST | 84.98 | 81.49 | 25.75 | 18.51 |

| Method | A/% | R/% | FA/% | MA/% |
|---|---|---|---|---|
| Single threshold | 86.57 | 29.58 | 80.21 | 70.42 |
| MST | 91.57 | 77.10 | 55.60 | 22.93 |
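The tables report A, R, FA and MA without defining them in the text shown here. The sketch below computes these four scores from a predicted and a reference binary change map, assuming the definitions listed in its docstring. These definitions are an assumption, but they are consistent with the tables: R + MA = 100 (up to rounding) in every row, and under the assumed FA definition the single-threshold and MST rows for each site imply the same proportion of changed reference pixels. The function name `change_detection_metrics` is hypothetical.

```python
import numpy as np


def _pct(num, den):
    """Return 100 * num / den as a float, or NaN when the denominator is zero."""
    return 100.0 * float(num) / float(den) if den else float("nan")


def change_detection_metrics(pred, ref):
    """Compute A, R, FA and MA (in percent) for binary change maps.

    pred: predicted change map (True/1 = changed), any shape.
    ref:  reference (ground-truth) change map with the same shape.

    ASSUMED definitions (not stated in the text):
      A  = (TP + TN) / N   overall accuracy
      R  = TP / (TP + FN)  share of reference changes that were detected
      FA = FP / (TP + FP)  share of detected changes that are false alarms
      MA = FN / (TP + FN)  share of reference changes that were missed
    """
    pred = np.asarray(pred, dtype=bool)
    ref = np.asarray(ref, dtype=bool)
    if pred.shape != ref.shape:
        raise ValueError("pred and ref must have the same shape")

    tp = np.count_nonzero(pred & ref)    # changed, detected as changed
    tn = np.count_nonzero(~pred & ~ref)  # unchanged, detected as unchanged
    fp = np.count_nonzero(pred & ~ref)   # unchanged, flagged as changed
    fn = np.count_nonzero(~pred & ref)   # changed, missed

    return {
        "A": _pct(tp + tn, pred.size),
        "R": _pct(tp, tp + fn),
        "FA": _pct(fp, tp + fp),
        "MA": _pct(fn, tp + fn),
    }


# Toy example: 10 pixels, 4 of them changed in the reference.
ref = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(change_detection_metrics(pred, ref))
# {'A': 80.0, 'R': 75.0, 'FA': 25.0, 'MA': 25.0}
```

In the toy example there are 3 true positives, 1 false positive, 1 false negative and 5 true negatives. Because R and MA share the denominator TP + FN, they always sum to 100 under these definitions, which is the relation visible in every row of both tables.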

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, M.; Zhang, H.; Sun, W.; Li, S.; Wang, F.; Yang, G. A Coarse-to-Fine Deep Learning Based Land Use Change Detection Method for High-Resolution Remote Sensing Images. Remote Sens. 2020, 12, 1933. https://doi.org/10.3390/rs12121933
