An Analysis Method for Interpretability of CNN Text Classification Model

Abstract

1. Introduction

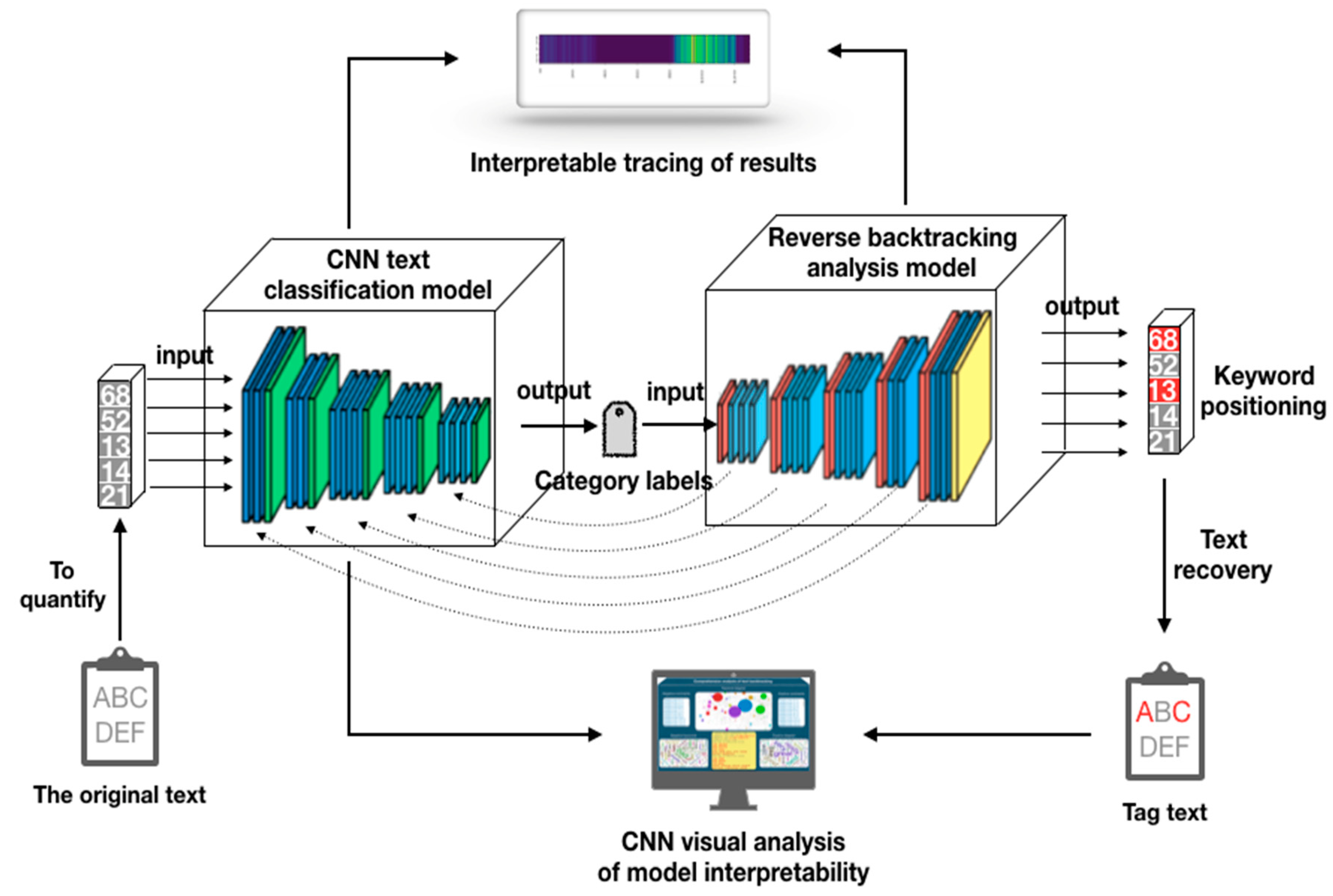

- An analysis method for the interpretability of the CNN text classification model. The proposed method backtracks from the model's prediction results to analyze, from multiple angles, the classification decisions made in multi-class and multi-label text classification tasks.

- Visualization of the model analysis results. The proposed method uses data visualization technology to display the interpretability analysis results of the model along multiple dimensions.
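The backtracking idea in the first contribution can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes a toy model with one convolution layer, ReLU, max-over-time pooling and a linear output layer, and every name, embedding and weight below (`conv_max_pool`, `backtrack`, `EMBEDDINGS`, `FILTERS`, `CLASS_WEIGHTS`) is hypothetical. Starting from the predicted class, each pooled feature is traced back to the word window that produced it, and that feature's contribution to the class score is shared equally among the words in the window.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Filter = List[Vector]  # one weight row per position in the window


def conv_max_pool(embedded: List[Vector], filt: Filter, width: int) -> Tuple[float, int]:
    """Slide one filter over the sequence with ReLU and max-over-time pooling.

    Returns the pooled activation and the start index of the winning window
    (-1 if no window produced a positive activation).
    """
    best_val, best_pos = 0.0, -1  # ReLU floor: non-positive activations are ignored
    for start in range(len(embedded) - width + 1):
        window = embedded[start:start + width]
        act = sum(w * x
                  for row_w, row_x in zip(filt, window)
                  for w, x in zip(row_w, row_x))
        if act > best_val:
            best_val, best_pos = act, start
    return best_val, best_pos


def backtrack(tokens: List[str],
              embeddings: Dict[str, Vector],
              filters: List[Filter],
              class_weights: List[Vector],
              width: int = 2) -> Tuple[int, List[float]]:
    """Predict a class, then trace its score back to per-token weights."""
    embedded = [embeddings[t] for t in tokens]
    pooled = [conv_max_pool(embedded, f, width) for f in filters]

    # Forward pass: linear layer over the pooled features.
    scores = [sum(w * val for w, (val, _) in zip(row, pooled))
              for row in class_weights]
    predicted = max(range(len(scores)), key=scores.__getitem__)

    # Backward trace: split each feature's contribution over its winning window.
    token_weights = [0.0] * len(tokens)
    for w, (val, pos) in zip(class_weights[predicted], pooled):
        if pos < 0:
            continue  # this filter never fired, so it contributed nothing
        share = w * val / width
        for i in range(pos, pos + width):
            token_weights[i] += share
    return predicted, token_weights


# Hypothetical toy parameters: dimension 0 ~ "positive", dimension 1 ~ "negative".
EMBEDDINGS = {
    "the": [0.0, 0.0], "food": [0.1, 0.1], "was": [0.0, 0.0],
    "great": [1.0, 0.0], "awful": [0.0, 1.0],
}
FILTERS = [
    [[1.0, 0.0], [1.0, 0.0]],  # responds to positive words in a 2-word window
    [[0.0, 1.0], [0.0, 1.0]],  # responds to negative words in a 2-word window
]
CLASS_WEIGHTS = [
    [-1.0, 1.0],  # class 0: negative
    [1.0, -1.0],  # class 1: positive
]

if __name__ == "__main__":
    sentence = ["the", "food", "was", "great"]
    label, weights = backtrack(sentence, EMBEDDINGS, FILTERS, CLASS_WEIGHTS)
    print("predicted class:", label)
    for token, weight in zip(sentence, weights):
        print(f"{token:>6}  {weight:+.3f}")
```

The per-token weights returned by `backtrack` are the kind of quantity a weight visualization could display, for example by shading each word according to its weight. Splitting a feature's contribution equally across its window is one simple choice among several.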

2. Interpretability Analysis Method

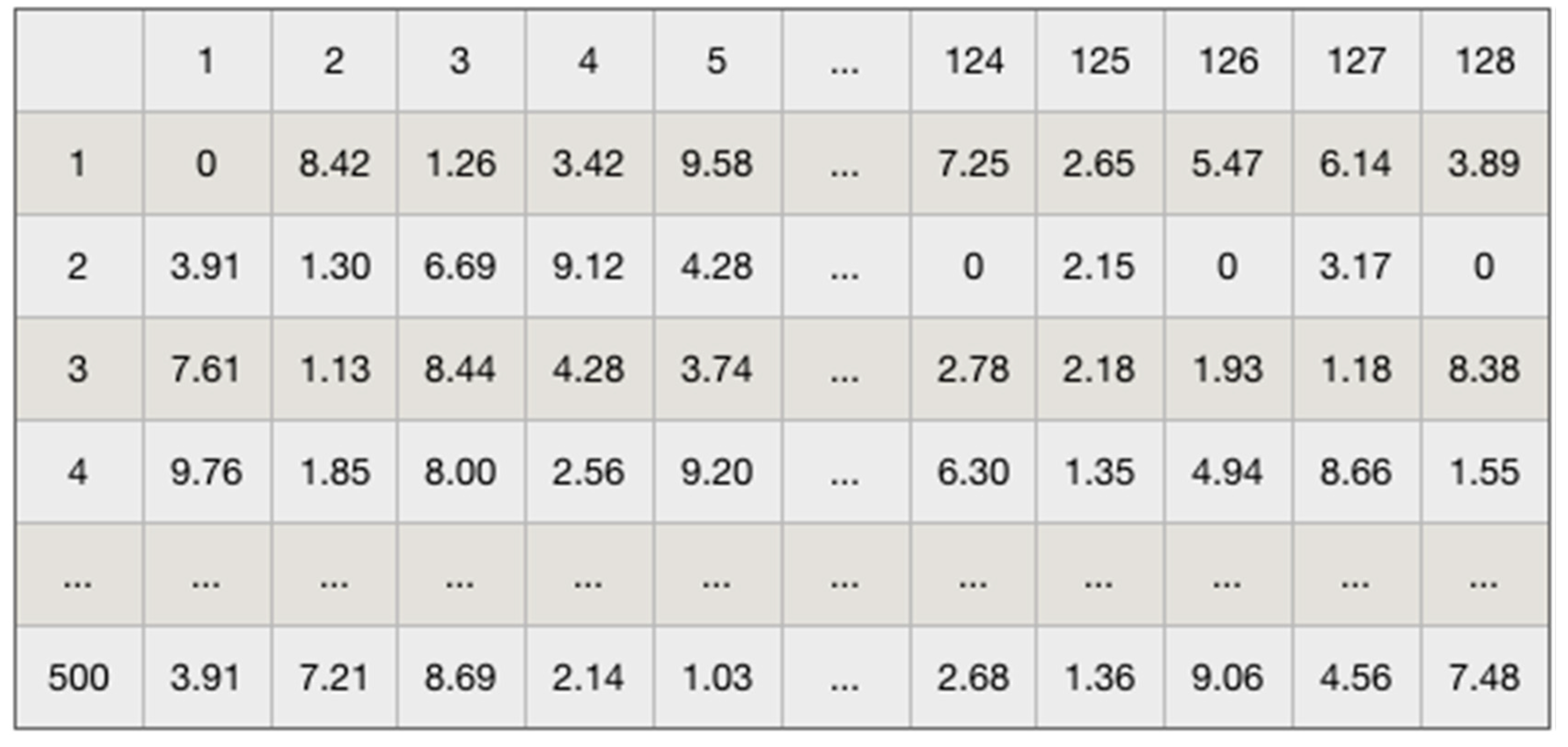

2.1. Text Data Preprocessing

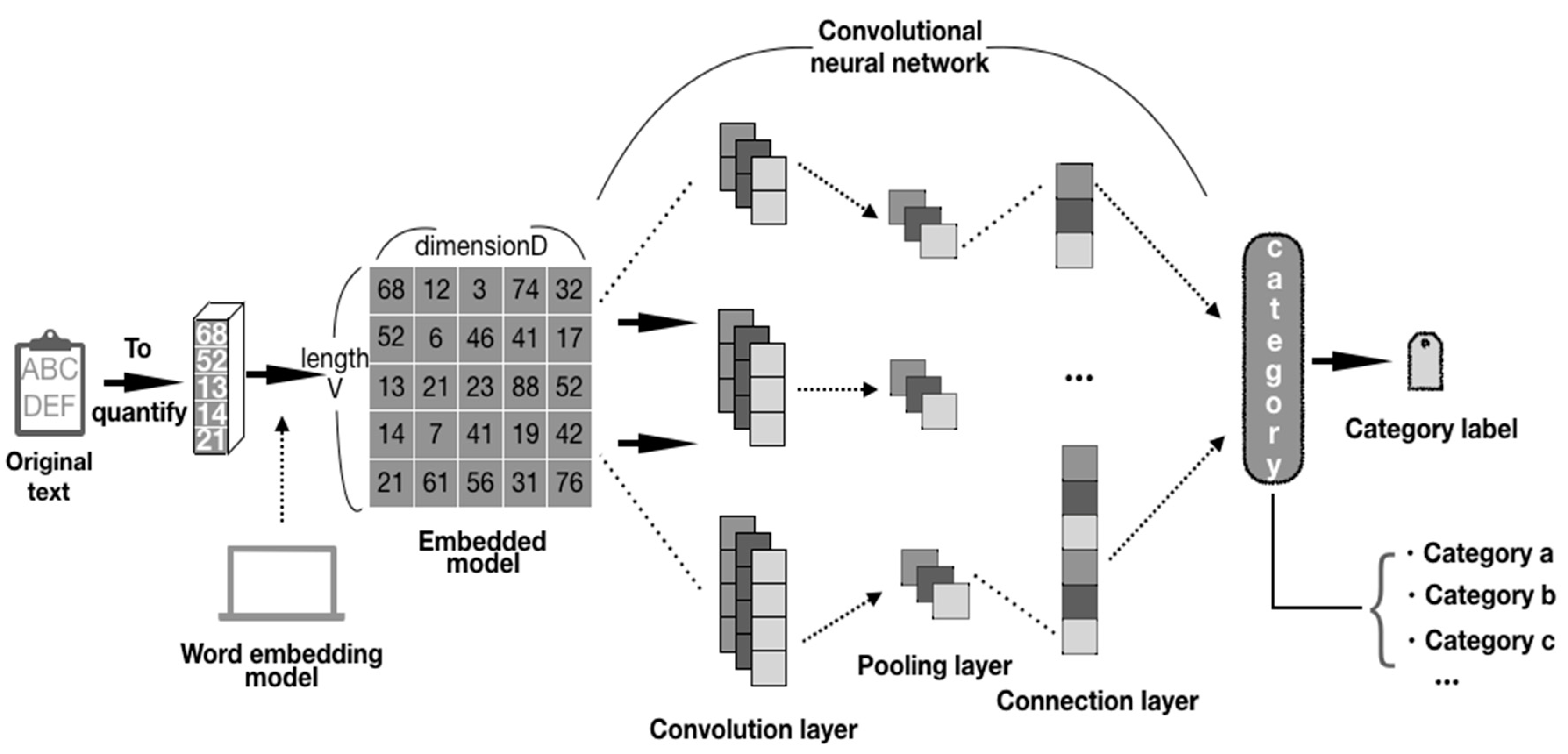

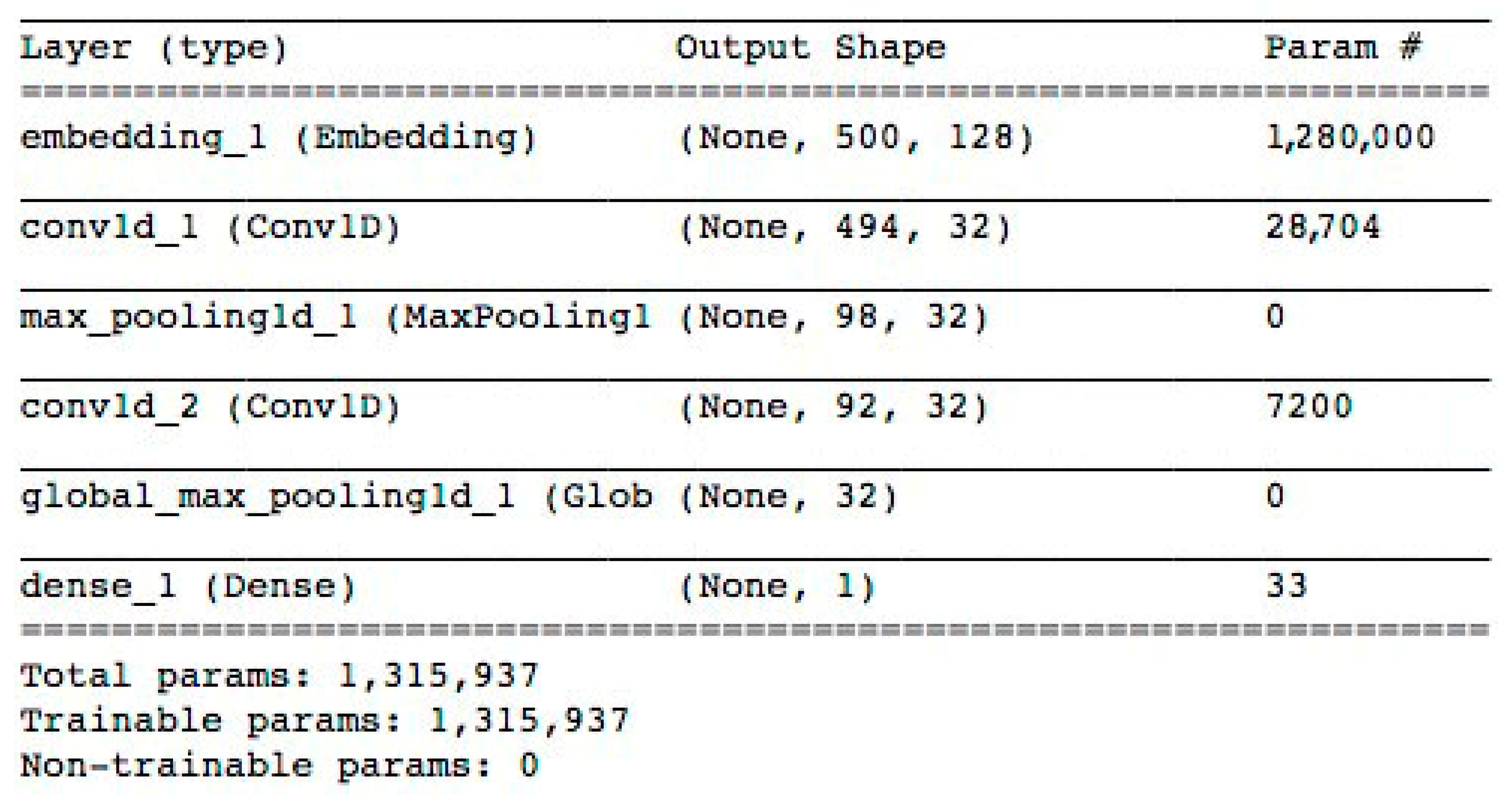

2.2. CNN Text Classification Model

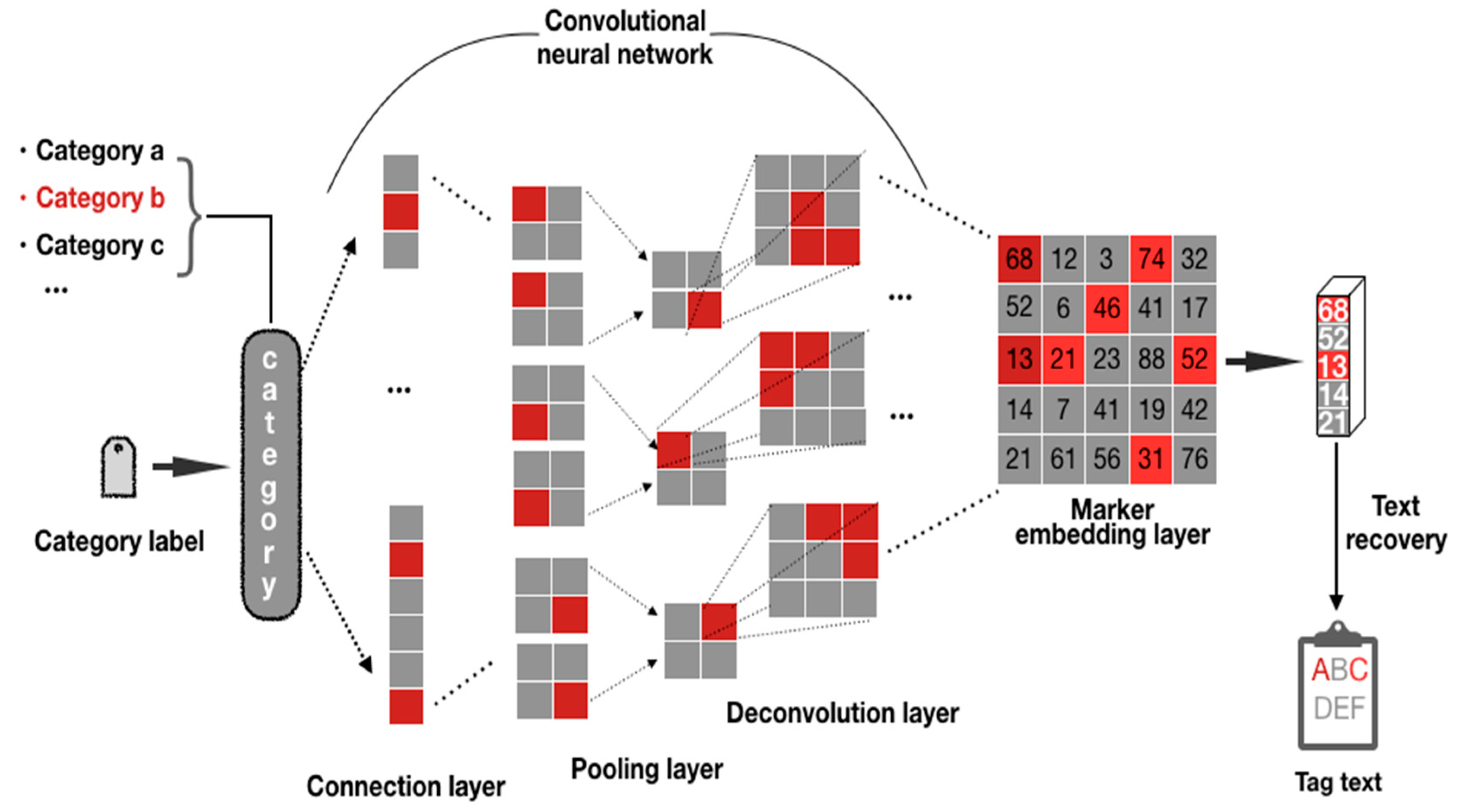

2.3. Backtracking Analysis Model

3. Interpretability Analysis of the Model

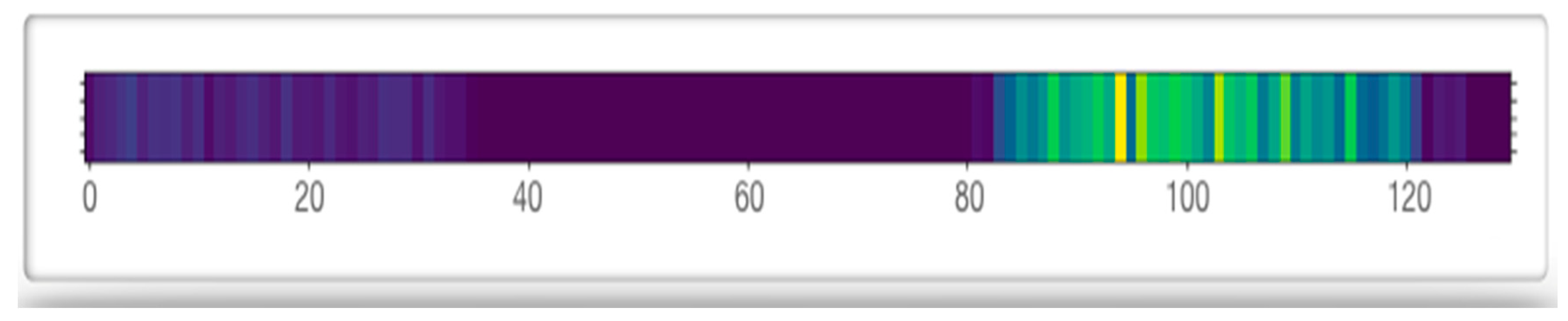

3.1. Visualization Diagram of Comment Weight

3.2. Comments on Comprehensive Analysis Diagram

4. Experimental Design and Result Analysis

4.1. Experiment Environment

4.2. Selection and Processing of Data Set

4.3. Experiment Design

4.4. Visual Analysis of Experimental Results

5. Discussion

Author Contributions

Funding

Conflicts of Interest



© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ce, P.; Tie, B. An Analysis Method for Interpretability of CNN Text Classification Model. Future Internet 2020, 12, 228. https://doi.org/10.3390/fi12120228
