3. System Model and Problem Formulation

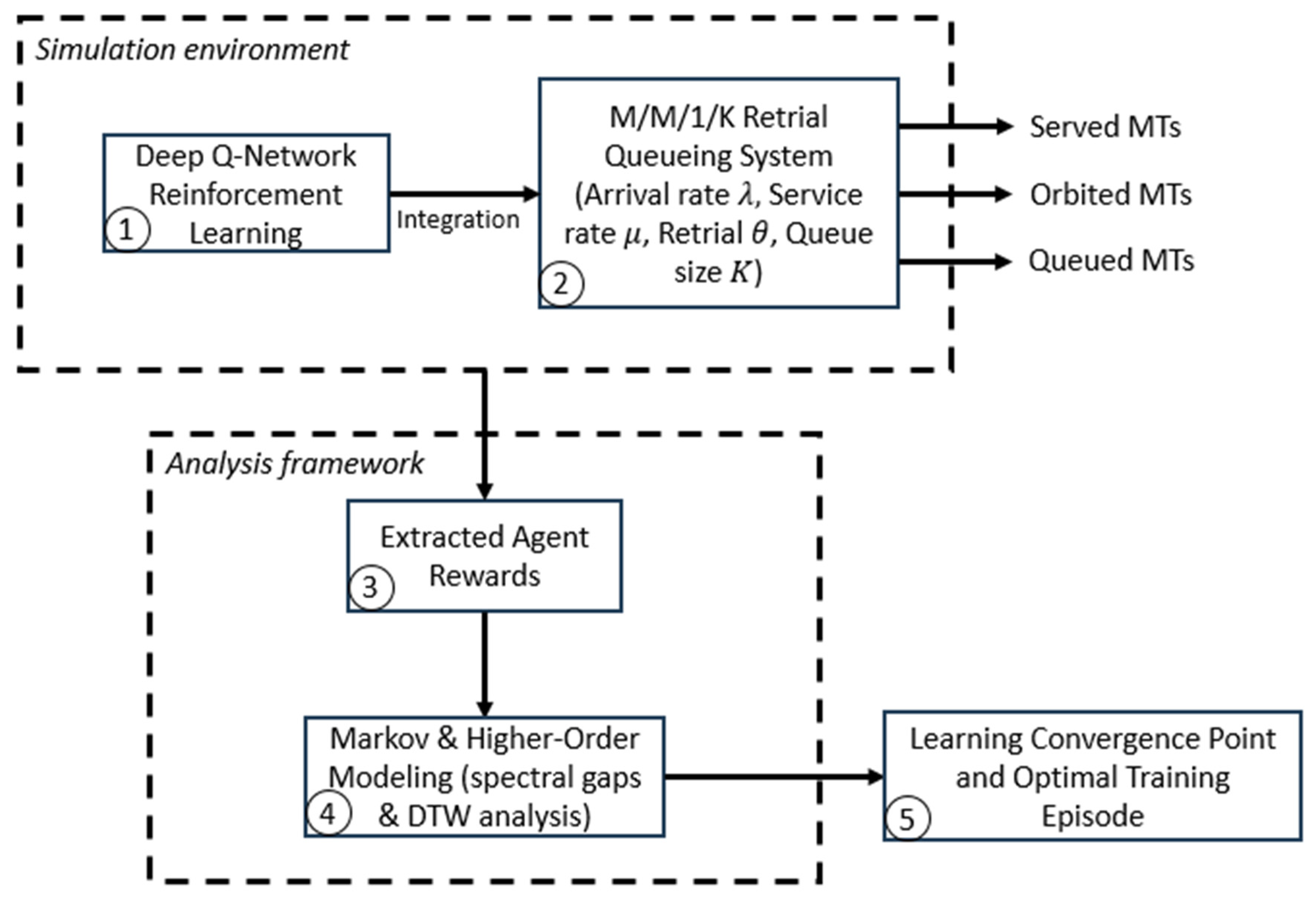

In this section, we present the modeling framework for the proposed RL-RQS model. We first describe the retrial queueing system used to capture realistic 6G network behavior. We then introduce the reinforcement learning and higher-order Markov models used to optimize decision-making under dynamic traffic conditions.

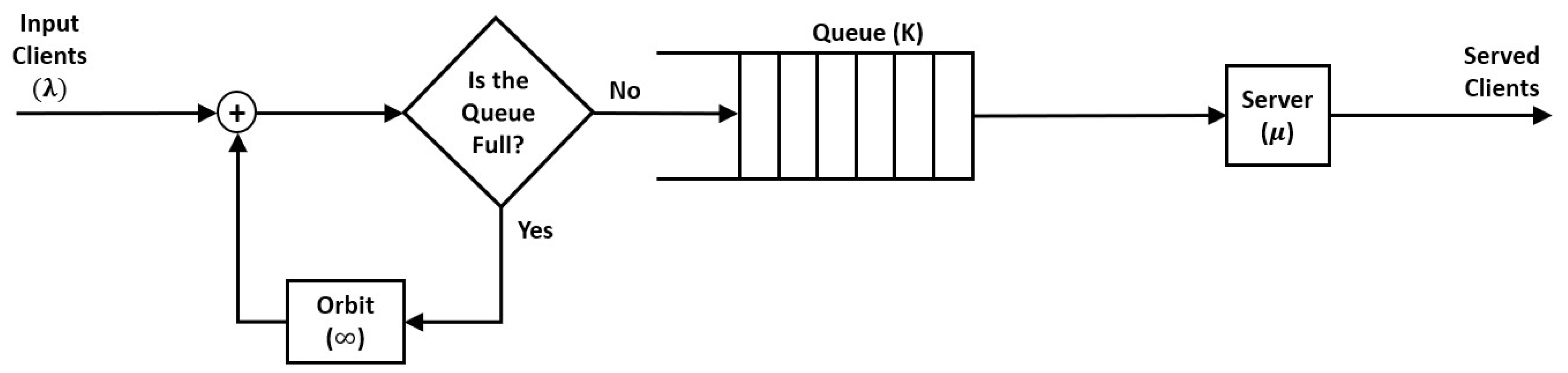

3.1. Retrial Queueing System Model

Among the existing queueing models, we chose a retrial queueing system. In such a system, customers who find the server busy upon arrival do not leave permanently; instead, they join a finite queue or, if it is full, retry after a delay. Retrial queues are particularly relevant for wireless networks and telecommunication systems, where packets or connection requests may need multiple attempts for reasons such as channel unavailability, server congestion, or interference. Such systems capture realistic network behavior where blocking or immediate service denial is common.

Queueing systems are represented using Kendall’s notation: A/B/C/K/N/D. Each character refers to a specific property of the system:

A: Arrival process (e.g., M = Markovian/Poisson arrivals)

B: Service time distribution (e.g., M = exponential)

C: Number of servers

K: Queue length capacity (maximum number of customers that wait in the queue)

N: Population size (default: infinite, $\infty$)

D: Queue discipline (e.g., FCFS: First Come First Served)

For retrial queues, the classical Kendall’s notation is extended to include the orbit, capturing the behavior of customers who retry after an initial failure to join the queue.

In order to model the 6G core network scenario, we find that the M/M/1/K retrial queue system is particularly suitable for the following reasons:

M/M/1 refers to a single server with Poisson arrivals (rate $\lambda$) and exponentially distributed service times (rate $\mu$). This is common in network traffic analysis [25,26,27,28].

K defines the finite capacity of the queue. This aligns with the practical limits of 6G network buffers or slices in certain scenarios.

The retrial mechanism captures the repeated attempts of packets that fail to access the server, reflecting dynamic QoS requirements and congestion in 6G scenarios.

The resulting system provides a tractable framework for studying multiple KPIs in scenarios with limited resources and highly variable traffic.

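The server serves the clients with an exponential service time of mean $1/\mu$; therefore, the probability of a service completion in a small interval $\Delta t$ is:

$$P(\text{service completion in } \Delta t) = 1 - e^{-\mu \Delta t}$$

The exponential distribution allows the system to be modeled as a Continuous-Time Markov Chain (CTMC). The state transitions depend on the rates $\lambda$, $\mu$, and the retrial rate $\theta$. The probability of a new customer arriving in a small interval $\Delta t$ is approximately:

$$P(\text{arrival in } \Delta t) \approx \lambda \Delta t$$

The clients that could not join the queue join an orbit and retry after an exponential delay with mean $1/\theta$. The probability of retrying within $\Delta t$ is:

$$P(\text{retrial in } \Delta t) = 1 - e^{-\theta \Delta t}$$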

We define the balance equation for state probabilities as follows:

For a full system (

), retrials are included:

where the orbit probability is:

The system is considered stable as long as the traffic intensity satisfies:

$$\rho = \frac{\lambda}{\mu} < 1$$

Under this condition, arrivals do not exceed the service capacity on average, so the system remains stable.
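As a quick numerical check of the quantities discussed in this subsection, the following sketch evaluates the per-interval event probabilities and the traffic intensity. It assumes the standard exponential-clock expressions ($1 - e^{-\mu \Delta t}$ for a service completion, $\lambda \Delta t$ for an arrival, $1 - e^{-\theta \Delta t}$ for a retrial) and $\rho = \lambda/\mu$. The function names and the sample rates are illustrative and are not taken from the paper.

```python
import math

def event_probabilities(lam, mu, theta, dt):
    """Probability of each event type within a short interval dt.

    Assumes Poisson arrivals and exponential service and retrial times.
    """
    return {
        "service": 1.0 - math.exp(-mu * dt),     # exact for an exponential clock
        "arrival": lam * dt,                     # first-order approximation
        "retrial": 1.0 - math.exp(-theta * dt),  # exact for an exponential clock
    }

def traffic_intensity(lam, mu):
    """Offered load rho = lam / mu; rho < 1 is the stability condition."""
    return lam / mu

# Illustrative rates (hypothetical values, per unit time).
probs = event_probabilities(lam=2.0, mu=5.0, theta=1.0, dt=0.01)
rho = traffic_intensity(2.0, 5.0)
print(probs)
print("rho =", rho, "stable:", rho < 1.0)
```

For small $\Delta t$ the exact and first-order expressions nearly coincide, which is why the arrival probability is usually written as $\lambda \Delta t$.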

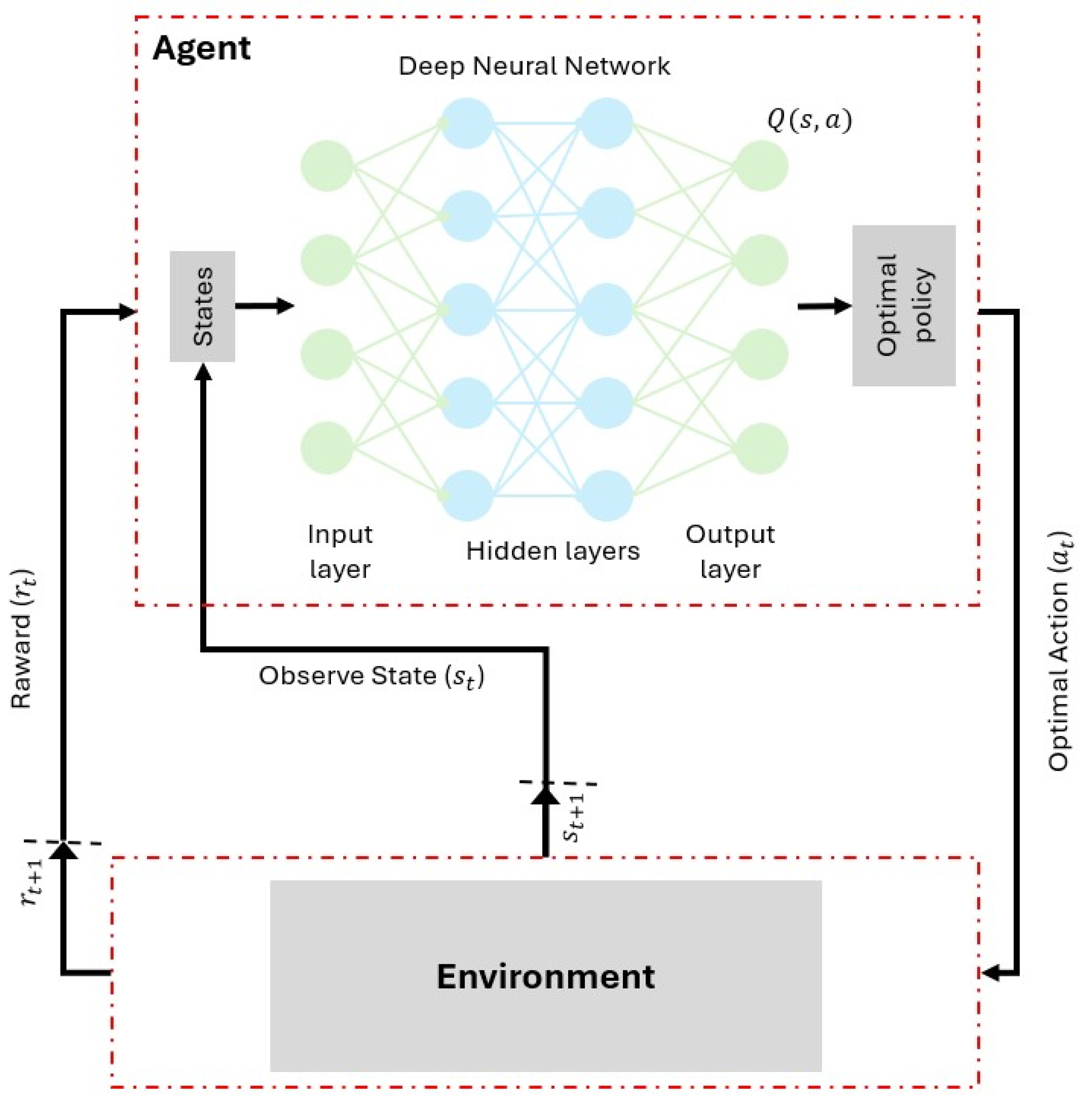

3.2. Deep Q-Network Reinforcement Learning Framework

Reinforcement learning (RL) is a branch of machine learning widely used to enhance system performance. It is a decision-making paradigm in which an agent interacts with the environment by observing states, executing actions, and receiving rewards as feedback. By doing so, the agent aims to learn the optimal policy that maximizes the expected cumulative reward over the learning time. Q-learning is a method in which the agent estimates the state-action value function $Q(s, a)$, which predicts the long-term utility of performing action $a$ in state $s$. Traditional Q-learning stores the Q-values in a table, which becomes computationally infeasible in large or continuous state spaces, particularly for next-generation wireless network scenarios.

Deep Q-Networks (DQN) were proposed to address this limitation. A DQN employs a deep neural network to approximate the Q-function, which helps the agent generalize across high-dimensional inputs and make decisions in complex environments.

The DQN framework is characterized by several components, as explained in Table 1, and has the structure shown in Figure 2. The primary objective of the training is to minimize the temporal-difference (TD) error, which is defined by the loss function as follows:

$$L = \mathbb{E}\left[\left(r + \gamma \max_{a'} Q_{\text{target}}(s', a') - Q(s, a)\right)^{2}\right]$$

where the symbols represent the following:

$s$ and $s'$ denote the current and the next states.

$a$ is the action taken in state $s$, and $a'$ represents possible next actions.

$r$ is the immediate reward.

$\gamma$ is the discount factor.

$Q$ is the approximated action-value function.

$Q_{\text{target}}$ is the target network used to stabilize learning.

This formulation ensures that the DQN updates its parameters by reducing the squared temporal-difference error between the predicted and target action values.
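To make the TD objective concrete, the sketch below computes the mean squared TD error for a small batch of transitions with NumPy. It assumes the usual DQN target $r + \gamma \max_{a'} Q_{\text{target}}(s', a')$. The `done` flag, which disables bootstrapping on terminal transitions, is a common convention added here as an assumption and is not described in the text; the function name is illustrative.

```python
import numpy as np

def td_loss(q_online, q_target_next, actions, rewards, gamma, done):
    """Mean squared TD error over a batch.

    q_online:       (B, A) array, Q(s, .) from the online network
    q_target_next:  (B, A) array, Q_target(s', .) from the target network
    actions:        (B,) integer actions taken in s
    rewards:        (B,) immediate rewards
    done:           (B,) 1.0 where s' is terminal, else 0.0 (assumed convention)
    """
    batch = np.arange(len(actions))
    q_sa = q_online[batch, actions]                       # predicted Q(s, a)
    bootstrap = gamma * (1.0 - done) * q_target_next.max(axis=1)
    target = rewards + bootstrap                          # TD target
    return float(np.mean((target - q_sa) ** 2))

# Two hypothetical transitions with two actions each.
loss = td_loss(
    q_online=np.array([[1.0, 2.0], [0.5, 0.0]]),
    q_target_next=np.array([[0.0, 1.0], [2.0, 3.0]]),
    actions=np.array([1, 0]),
    rewards=np.array([1.0, 0.0]),
    gamma=0.5,
    done=np.array([0.0, 1.0]),
)
print(loss)  # 0.25
```

In a full DQN the gradient of this loss is taken with respect to the online network only, while the target network is held fixed and refreshed periodically.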

Over time, and by refining the Q-function, the DQN framework enables the agent to approach near-optimal policies, even in complex and highly dynamic environments. Therefore, the DQN framework has become a promising tool for decision making, resource allocation, and scheduling optimization for many complex systems such as 6G communication systems, where we find that the network conditions exhibit stochastic and time-varying behavior.

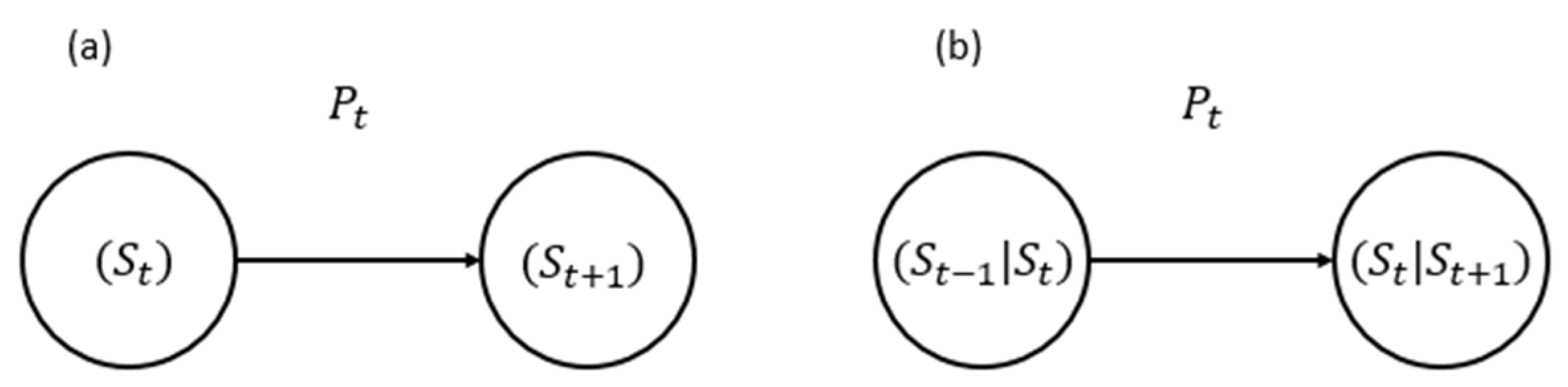

3.3. Markov and Higher-Order Modeling

Markov chains are used to characterize the stochastic behavior of dynamic systems, particularly in next-generation wireless networks, where the system states evolve over time. First- and higher-order Markov chains have been widely applied in network modeling and queueing systems to predict state transitions and system dynamics under uncertainty. The first-order model assumes memoryless transitions, while second-order models account for dependencies on the two most recent states, providing more accurate predictions in environments with temporal correlations. As explained in previous sections, prior studies rarely integrate these models with deep reinforcement learning for adaptive resource allocation in 6G mobile networks, which is the focus of this work.

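Following the first-order Markov model, the future state of a process depends only on its present state (see Figure 3a). This property yields memoryless transitions between network states. A first-order Markov chain is a stochastic process $\{X_t\}_{t \ge 0}$ with the Markov property:

$$\Pr(X_{t+1} = j \mid X_t = i, X_{t-1}, \dots, X_0) = \Pr(X_{t+1} = j \mid X_t = i)$$

The state space is $\mathcal{S} = \{1, \dots, n\}$. For all $i, j \in \mathcal{S}$, the transitions are governed by the transition tensor $P$:

$$P_{ij} = \Pr(X_{t+1} = j \mid X_t = i), \qquad \sum_{j \in \mathcal{S}} P_{ij} = 1$$

The stationary distribution $\pi$ satisfies the following condition:

$$\pi P = \pi, \qquad \sum_{i \in \mathcal{S}} \pi_i = 1$$

The mixing rate is given by the spectral gap:

$$\Delta_1 = 1 - |\lambda_2|$$

where $\lambda_2$ is the second-largest eigenvalue (in modulus) of $P$. The hitting time is inversely related to the spectral gap:

$$t_{\text{hit}} \propto \frac{1}{\Delta_1}$$

A large spectral gap means a small hitting time, indicating rapid state transitions and good mixing.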
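The stationary distribution and the spectral gap can be checked numerically on a small example. The sketch below assumes a row-stochastic transition matrix and the common definition of the gap as one minus the modulus of the second-largest eigenvalue. The two-state matrix is a hypothetical example, not one of the paper's chains.

```python
import numpy as np

def stationary_distribution(P):
    """Left eigenvector of P associated with eigenvalue 1, normalized to sum to 1."""
    vals, vecs = np.linalg.eig(P.T)
    k = np.argmin(np.abs(vals - 1.0))
    pi = np.real(vecs[:, k])
    return pi / pi.sum()

def spectral_gap(P):
    """1 - |lambda_2|, with eigenvalues ordered by decreasing modulus."""
    moduli = np.sort(np.abs(np.linalg.eigvals(P)))[::-1]
    return 1.0 - moduli[1]

# Hypothetical two-state chain.
P = np.array([[0.9, 0.1],
              [0.5, 0.5]])
pi = stationary_distribution(P)
gap = spectral_gap(P)
print(pi)   # approximately [0.833, 0.167]
print(gap)  # approximately 0.6
```

For this matrix the eigenvalues are 1 and 0.4, so the gap is 0.6 and the chain mixes quickly.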

Real-world 6G environments often exhibit dependencies that extend beyond the immediately preceding state, which motivates the use of higher-order Markov models. By taking earlier events into account, these models capture more complex temporal correlations, providing a more comprehensive representation of system dynamics and performance fluctuations. The second-order chain relaxes the memoryless property to two past steps, as shown in Figure 3b and presented as follows:

$$\Pr(X_{t+1} = k \mid X_t = j, X_{t-1} = i, \dots, X_0) = \Pr(X_{t+1} = k \mid X_t = j, X_{t-1} = i)$$

The second-order transition tensor is defined as:

$$P^{(2)}_{ijk} = \Pr(X_{t+1} = k \mid X_t = j, X_{t-1} = i)$$

Such models enable accurate prediction and decision-making under uncertainty, supporting adaptive resource allocation and reliability estimation, particularly for high-speed communication networks. The integration of the first- and second-order Markov models with DQN-RL NN further enhances the system’s decision-making to learn and adapt to dynamic environment patterns.
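A second-order transition tensor can be estimated from an observed state sequence by counting triples. The sketch below is a minimal illustration under two assumptions that are not stated in the text: unseen two-step histories are assigned a uniform distribution, and the tensor is lifted to a first-order chain over state pairs so that ordinary eigenvalue analysis applies. Function names are hypothetical.

```python
import numpy as np

def second_order_tensor(seq, n_states):
    """Estimate T[i, j, k] = P(X_{t+1}=k | X_{t-1}=i, X_t=j) from a state sequence."""
    counts = np.zeros((n_states, n_states, n_states))
    for i, j, k in zip(seq[:-2], seq[1:-1], seq[2:]):
        counts[i, j, k] += 1
    totals = counts.sum(axis=2, keepdims=True)
    # Histories (i, j) that never occur get a uniform row (assumption).
    return np.where(totals > 0, counts / np.maximum(totals, 1), 1.0 / n_states)

def lift_to_pair_chain(T):
    """First-order chain on pairs: (i, j) -> (j, k) with probability T[i, j, k]."""
    n = T.shape[0]
    M = np.zeros((n * n, n * n))
    for i in range(n):
        for j in range(n):
            for k in range(n):
                M[i * n + j, j * n + k] = T[i, j, k]
    return M

seq = [0, 1, 0, 1, 0, 1, 1]          # hypothetical observed states
T = second_order_tensor(seq, n_states=2)
M = lift_to_pair_chain(T)
print(T[0, 1])                        # distribution after the history (0, 1)
print(M.sum(axis=1))                  # every row of the lifted matrix sums to 1
```

The lifted matrix `M` is row-stochastic, so its eigenvalues can be used in the same way as those of a first-order transition matrix.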

The first spectral gap represents the global inter-cluster spectral gap, and the second spectral gap gives the local intra-cluster spectral gap. A common interpretation of the pair of spectral gaps is given in Table 2.

The first and second spectral gaps provide valuable insight into the system’s temporal dynamics and stability. In the context of 6G networks, these analyses further help identify metastable communication states and dynamic bottlenecks, which are expected to be among the challenges of integrating many technologies. We believe that combining first- and second-order Markov models with NN-based reinforcement learning helps the system capture temporal dependencies, resulting in enhanced convergence, diminished uncertainty, and more intelligent adaptation to environmental fluctuations.

4. Methodology

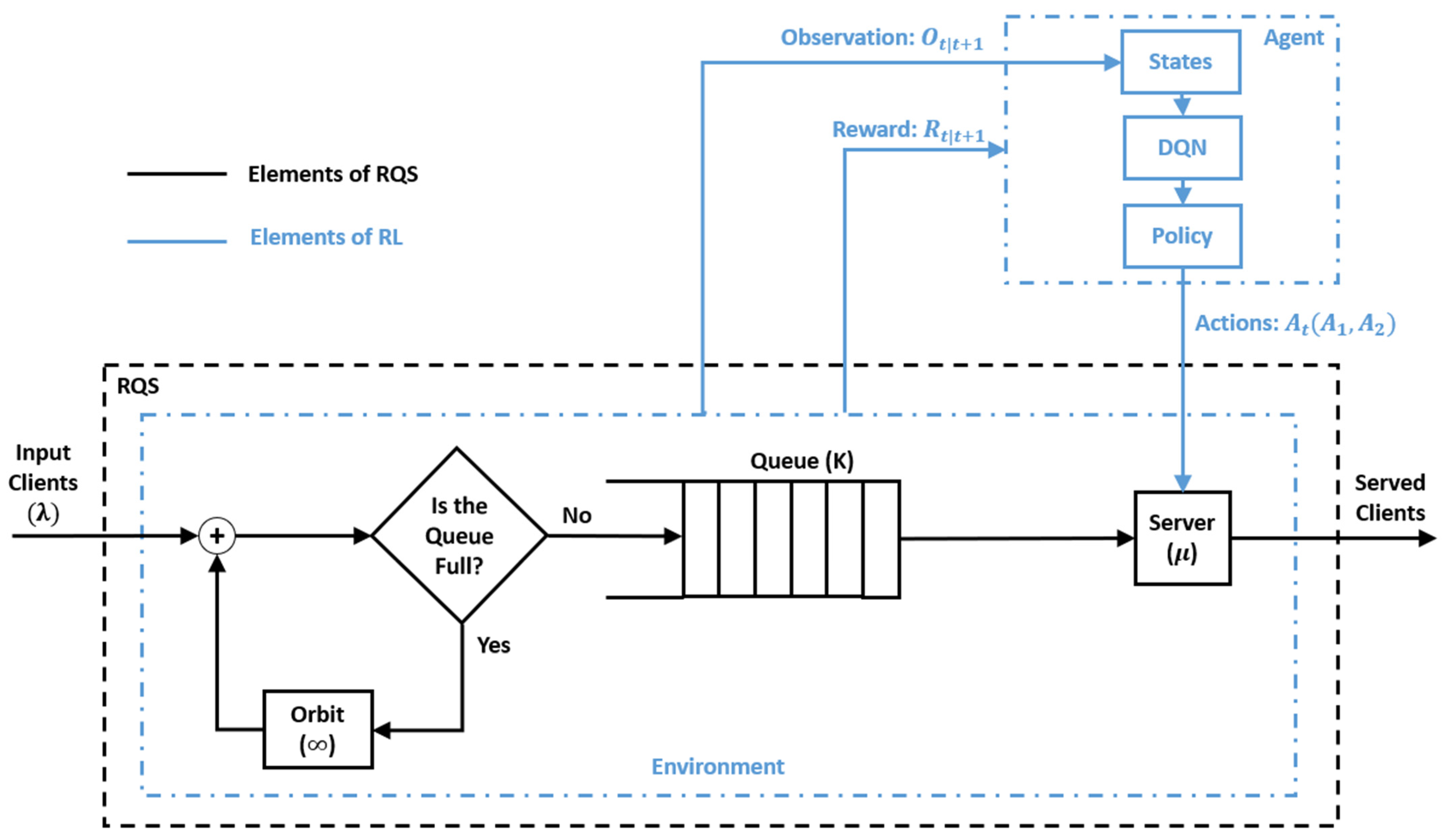

The proposed method aims to model and optimize access in upcoming wireless communication systems by integrating DQN-RL into the RQS framework. This hybrid approach is expected to capture both the stochastic dynamics of user access requests and the adaptive decision-making required to maintain system stability under various traffic conditions. The access process between the Mobile Terminals (MTs) and the Access Point (AP) is modeled using an M/M/1/K retrial queue (see Figure 4), following Kendall’s notation. The arrival rate is denoted by $\lambda$, the service rate by $\mu$, and the queue size by $K$.

The retrial mechanism mirrors the practical behavior of 6G terminals, which attempt re-access after initial connection failures caused by temporary congestion, beam misalignment, or fading.

To match the native intelligence envisioned for 6G, we integrate an RL mechanism into the RQS framework. This gives the queueing system adaptive intelligence, enabling it to learn optimal control strategies from experience rather than relying on static probabilistic assumptions. RL is chosen because 6G access is highly dynamic and stochastic, which makes static policies insufficient, while DQN allows the agent to learn optimal actions from interaction, balancing queue stability and throughput in real time.

In the proposed model, the agent acts as an upper-layer controller that continuously observes the system’s state, represented by the current queue size and AP status (busy or idle) (see Figure 5 and Algorithm 1), and selects optimal actions to maintain service efficiency. The agent chooses one of two actions:

Action 1: No intervention, the system operates normally.

Action 2: Immediate interaction to force the AP to serve one queued MT.

In

Figure 5,

represents the observation at time

associated with the transition to time

.

represents the reward obtained at time

as a result of the transition from

to

.

The agent’s goal is to minimize queue congestion and maximize service throughput, which is formulated by the reward:

$$r_t = \alpha\,\Delta S_t - \beta\,\Delta Q_t$$

where $\Delta S_t$ and $\Delta Q_t$ are the changes in the number of served and queued MTs between consecutive epochs, and $\alpha$ and $\beta$ are weighting coefficients ($\alpha + \beta = 1$) balancing throughput maximization and queue stability. A higher $\alpha$ encourages the agent to prioritize service completion, while a higher $\beta$ imposes a stronger penalty on queue growth, ensuring system stability under heavy traffic conditions.
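The trade-off between the two weights can be illustrated with a short function. This is a sketch under an assumed form of the reward: a linear combination that rewards newly served MTs and penalizes queue growth, with two weights that sum to one. The names `alpha` and `beta` and the sample values are illustrative assumptions.

```python
def reward(delta_served, delta_queued, alpha):
    """Weighted reward: credit for service gains, penalty for queue growth.

    alpha is the throughput weight; its complement beta = 1 - alpha
    weights the queue penalty (assumed linear form).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    beta = 1.0 - alpha
    return alpha * delta_served - beta * delta_queued

# Same transition, two weightings: 3 more MTs served, queue grew by 1.
print(reward(3, 1, alpha=0.7))  # throughput-oriented weighting
print(reward(3, 1, alpha=0.3))  # stability-oriented weighting
```

With the throughput-oriented weighting the same transition earns a larger reward, because the queue growth is penalized less.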

| Algorithm 1. Pseudo-code of the integrated RL-RQS framework for dynamic queue management. |
| --- |
| Input: λ: arrival rate, μ: service rate, θ: retry rate, time_sim: total simulation time, K: maximum queue length |
| Output: Optimal policy for queue management (based on DQN) |
| Define Environment (#1): contains the RQS simulation-based parameters, states, and rewards |
| State Representation (2D vector) (#2): number of MTs in the queue; AP status (busy/free) |
| Reward Function (#3): weighted combination of the changes in served and queued MTs |
| Observation (#4): current queue length; AP status (busy/free) |
| Actions (#5): if Action 1, the agent does nothing; if Action 2, the agent forces the AP to serve one MT |
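The components listed in Algorithm 1 can be sketched as a small environment class. This is a toy discrete-time approximation written for illustration, not the authors' simulator: the time step `dt`, the per-step event probabilities, the limit of one retrial per step, and the linear reward with weight `alpha` are all assumptions, and the class and method names are hypothetical.

```python
import math
import random

class RetrialQueueEnv:
    """Toy discrete-time M/M/1/K retrial-queue environment (illustrative only)."""

    def __init__(self, lam, mu, theta, K, alpha, dt=0.01, seed=0):
        self.lam, self.mu, self.theta, self.K = lam, mu, theta, K
        self.alpha, self.dt = alpha, dt
        self.rng = random.Random(seed)
        self.reset()

    def reset(self):
        self.queue, self.orbit, self.busy = 0, 0, False
        return self._observe()

    def _observe(self):
        # Observation: current queue length and AP status (1 = busy).
        return (self.queue, int(self.busy))

    def _admit(self):
        """Place one MT at the AP or in the queue; return False if both are full."""
        if not self.busy:
            self.busy = True
            return True
        if self.queue < self.K:
            self.queue += 1
            return True
        return False

    def step(self, action):
        queue_before, served = self.queue, 0
        # Action index 1 corresponds to "Action 2": force one queued MT to be served.
        if action == 1 and self.queue > 0:
            self.queue -= 1
            served += 1
        # Regular service completion of the MT currently at the AP.
        if self.busy and self.rng.random() < 1.0 - math.exp(-self.mu * self.dt):
            served += 1
            if self.queue > 0:
                self.queue -= 1       # next queued MT enters service
            else:
                self.busy = False
        # New arrival: an MT that cannot be admitted joins the orbit.
        if self.rng.random() < min(1.0, self.lam * self.dt):
            if not self._admit():
                self.orbit += 1
        # Retrial from the orbit (at most one per step in this sketch).
        p_retry = 1.0 - math.exp(-self.theta * self.orbit * self.dt)
        if self.orbit > 0 and self.rng.random() < p_retry:
            if self._admit():
                self.orbit -= 1
        # Assumed reward: alpha on service, 1 - alpha on queue growth.
        reward = self.alpha * served - (1.0 - self.alpha) * (self.queue - queue_before)
        return self._observe(), reward

env = RetrialQueueEnv(lam=50.0, mu=20.0, theta=5.0, K=5, alpha=0.5)
obs = env.reset()
for _ in range(1000):
    obs, r = env.step(0)              # Action 1: no intervention
print(obs, env.orbit)
```

A DQN agent would replace the fixed `env.step(0)` call with an action chosen from its Q-values for the current observation.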

The chosen reward drives the agent to continuously improve system efficiency without compromising fairness or stability. Over successive training iterations, the agent updates its policy by evaluating the long-term impact of actions rather than short-term gains, ultimately converging toward an optimal service strategy that minimizes latency, reduces queue congestion, and enhances overall throughput. The design of this reward function thus serves as the cornerstone for achieving intelligent, self-adaptive behavior in the proposed 6G queueing model.

Table 3 summarizes the simulation parameters used to evaluate the RL-RQS system. The configuration is intended to reflect realistic 6G traffic dynamics while allowing controlled experimentation with different load and congestion levels. We varied the following parameters: (i) the arrival rate ($\lambda$), across multiple scenarios, to emulate different traffic intensities, (ii) the queue size ($K$), to study its impact on system stability and waiting time, and (iii) the scaling factor and its complementary weight, which govern the trade-off between maximizing service throughput and controlling queue buildup.

We fixed the following parameters: (i) the service rate ($\mu$), which represents the average processing capability of the access point, (ii) the retry rate ($\theta$), which models the frequency of retransmission attempts from orbiting requests, (iii) the simulation time, to ensure consistent convergence and performance comparison across all parameter settings, and (iv) the number of training episodes, to consolidate the learned knowledge.

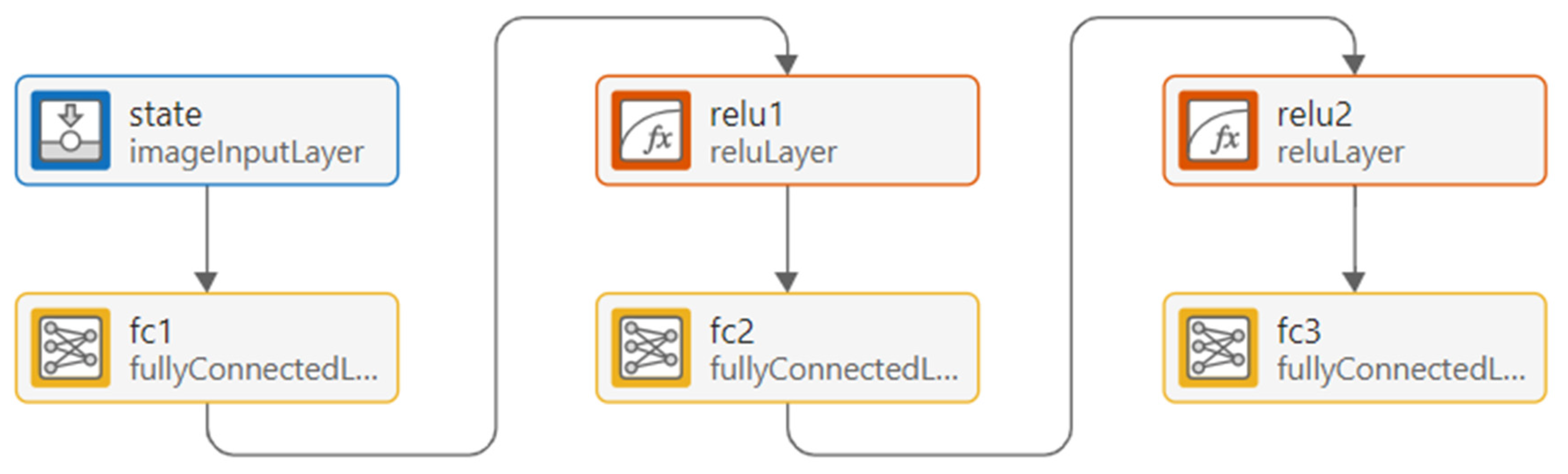

The architecture of the DQN agent is shown in Figure 6. It consists of an input layer that receives a two-dimensional feature vector representing the environment state (queue length and AP status), followed by two fully connected hidden layers of equal width with ReLU activations, and an output layer estimating the Q-value of each possible action. This design enables the system to dynamically adapt to real-time network fluctuations, achieving improved load balancing and reduced waiting time compared to a static queueing approach.
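The forward pass of such a network is compact enough to write out with NumPy. The sketch below assumes a two-dimensional state, two hidden layers with ReLU, and two output Q-values, as described in the text. The hidden width of 64 and the weight initialization are placeholder assumptions, not values from the paper.

```python
import numpy as np

def init_q_network(state_dim=2, hidden=64, n_actions=2, seed=0):
    """Random weights for a state_dim -> hidden -> hidden -> n_actions MLP."""
    rng = np.random.default_rng(seed)
    sizes = [state_dim, hidden, hidden, n_actions]
    return [
        (rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]

def q_values(params, state):
    """Forward pass: ReLU on the two hidden layers, linear output layer."""
    x = np.asarray(state, dtype=float)
    for W, b in params[:-1]:
        x = np.maximum(x @ W + b, 0.0)
    W, b = params[-1]
    return x @ W + b

def greedy_action(params, state):
    """Index of the action with the largest estimated Q-value."""
    return int(np.argmax(q_values(params, state)))

params = init_q_network()
state = (3, 1)                       # 3 MTs queued, AP busy
print(q_values(params, state))       # one Q-value per action
print(greedy_action(params, state))  # 0 = no intervention, 1 = force service
```

The weights here are untrained, so the printed action is arbitrary; training would adjust them by minimizing the TD loss.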

In the next section, we present the simulation outputs and interpret the results. Further investigations are made using first- and second-order Markov chain models. The behavior of both spectral gaps described in Section 3.3 gives deeper insight into the agent’s reward function. Dynamic Time Warping (DTW) is applied to measure the similarity of Markov steady states with different numbers of dimensions.

5. Results and Discussion

In this section, we investigate how varying the reward weighting influences the learning behavior and the system performance. We present the results of the first- and second-order Markov models and discuss the findings, using the DTW metric to validate the results.

5.1. Impact of Reward Weighting on RL-Enhanced RQS Performance

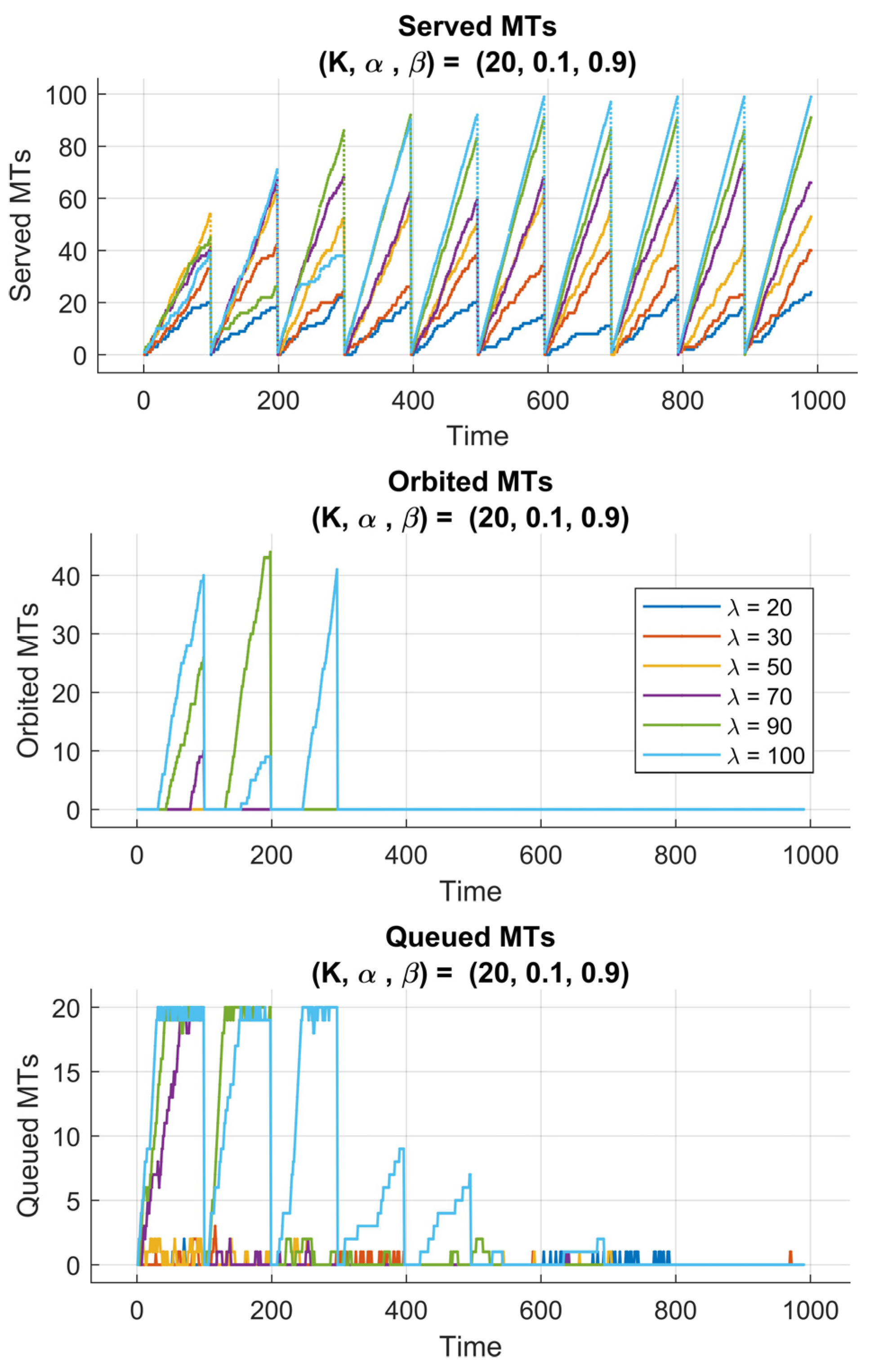

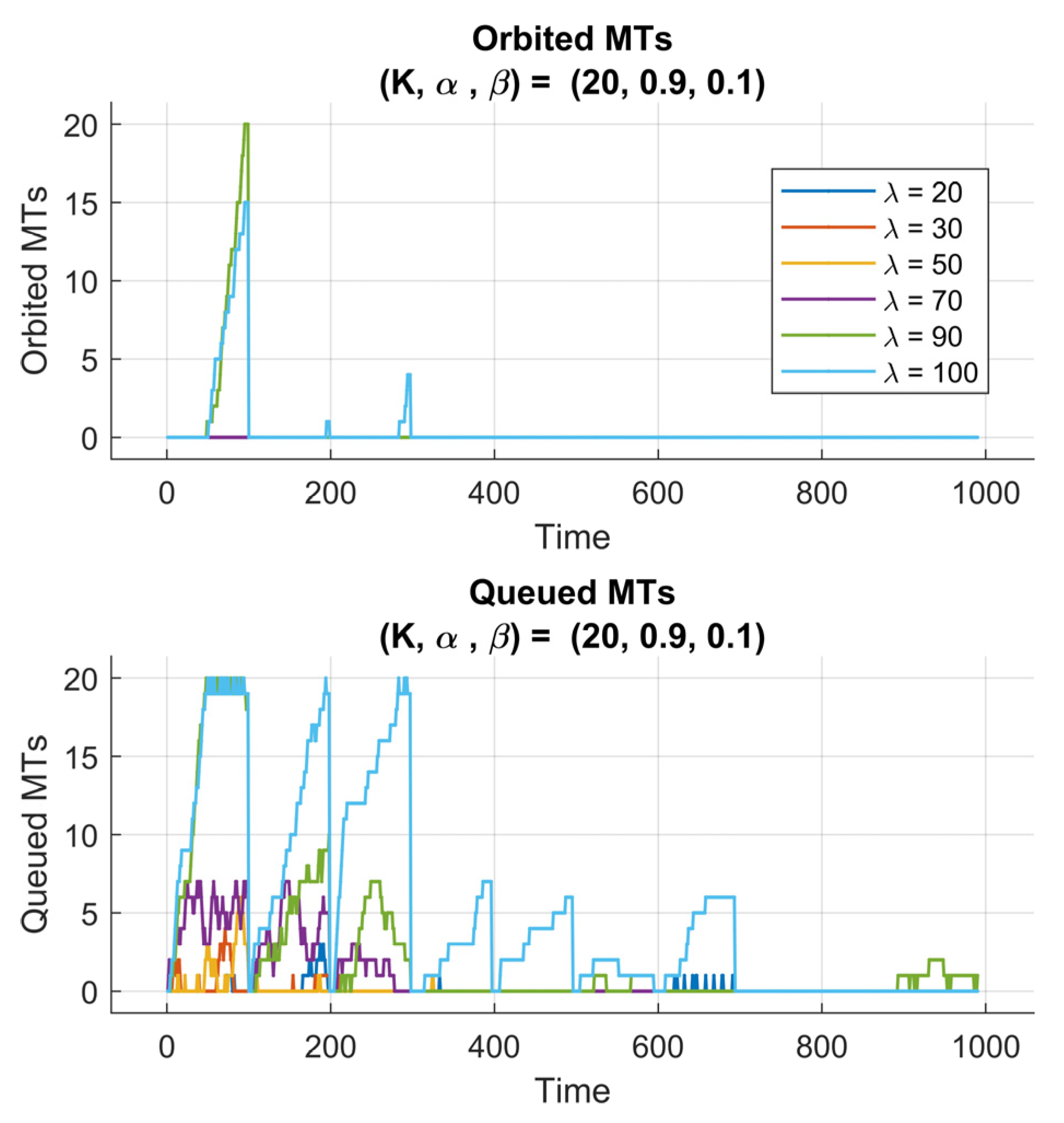

Figure 7 and

Figure 8 present the performance evaluation of the proposed RL-enhanced RQS model under two different reward configurations, characterized by

and

, respectively. Each experiment spans 10 learning episodes, with 100 time steps per episode, and is conducted across multiple arrival rate scenarios

. The subplots illustrate the temporal evolution of served, queued, and orbited MTs, reflecting how the reinforcement learning agent adapts to varying traffic intensities and queue dynamics.

In

Figure 7, we plot the served MTs, orbited MTs, and queued MTs for the case (

). We observe that by setting a high value of the weight

, the reward function places more emphasis on queue stability than on maximizing throughput. This initially leads the agent to adopt a conservative policy that prioritizes queue regulation and minimizes congestion. In the first training episodes, the number of served MTs increases gradually, indicating that the agent is still exploring the environment and refining its understanding of the system’s state transitions. Later, temporary declines in served MTs appear; these correspond to exploration phases in which the agent tests alternative actions to discover potentially better policies. Over the 10 episodes, the RQS exhibits consistent improvement in serving efficiency, indicating that the agent successfully learned a balanced strategy that stabilizes both the queue and the orbit sizes.

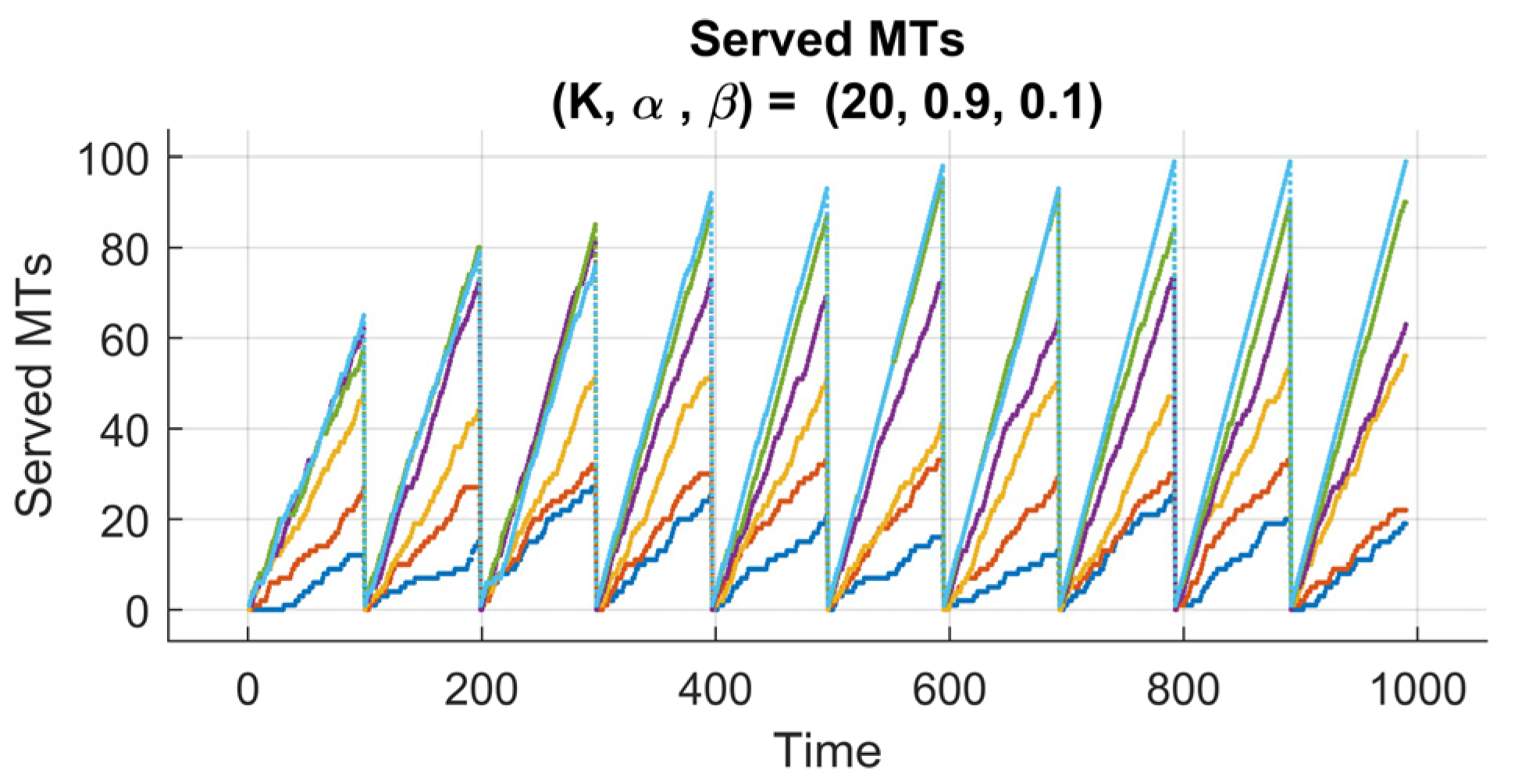

Conversely, Figure 8 presents the impact of a reward function that prioritizes throughput maximization. The larger scaling factor strongly pushes the agent to serve as many MTs as possible, and this behavior is reinforced from episode to episode. As a result, the system experiences a faster rise in the number of served MTs, especially during the first episodes. However, this aggressive learned serving strategy sometimes leads to temporary spikes in the queued MTs, because the agent focuses more on short-term service gains than on maintaining long-term queue balance.

Despite that, the number of queued MTs eventually decreases as the policy converges, indicating that the agent’s adaptation reflects an interplay between immediate and cumulative rewards.

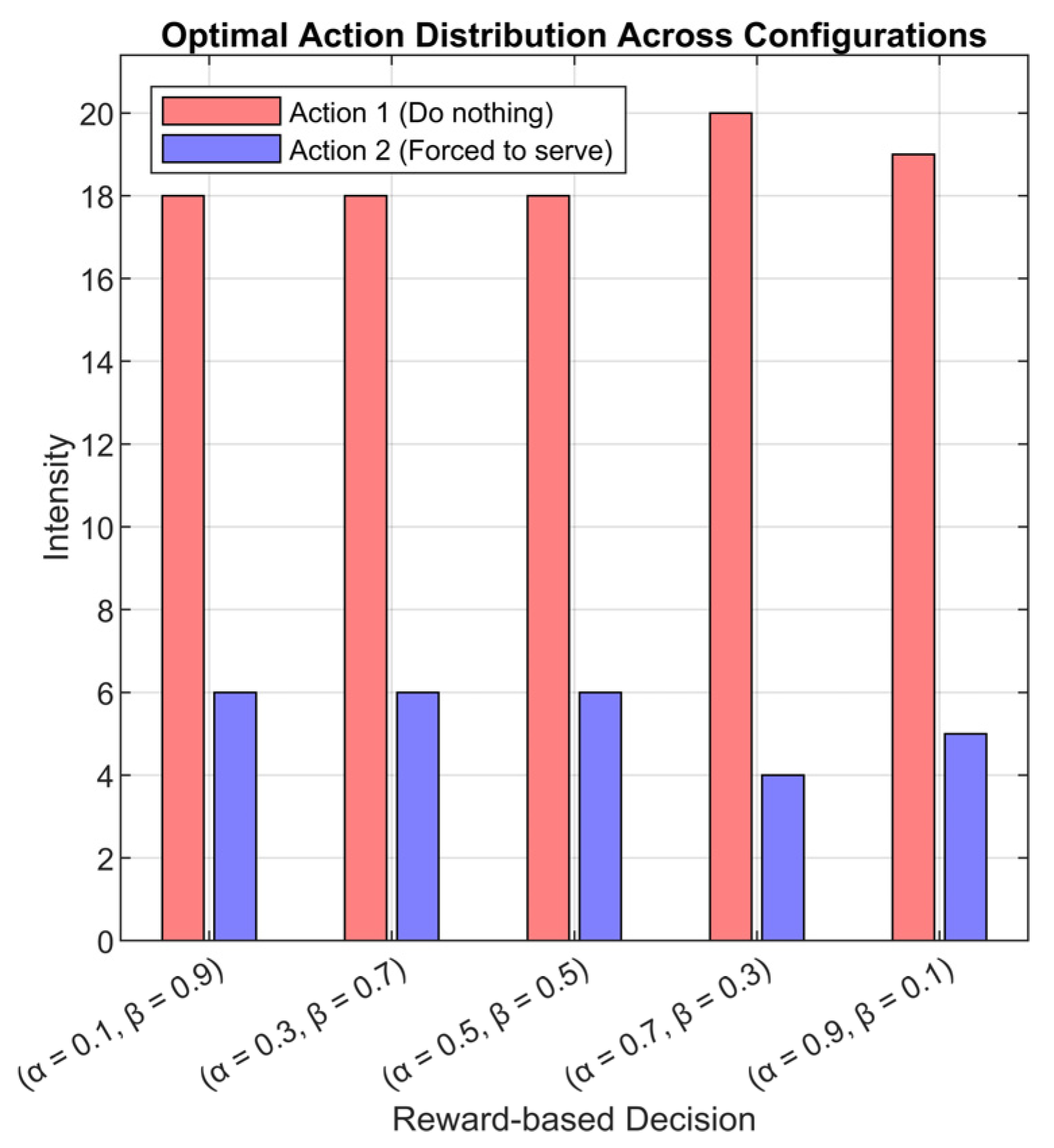

Figure 9 presents the distribution of the most frequently selected actions by the RL-enhanced RQS agent across all simulated scenarios. For each network output, the action with the maximum Q-value was identified, and the predominant action across simulations was aggregated and visualized as a histogram. In the figure, red bars represent Action 1 (“No intervention, the system operates normally”) and blue bars represent Action 2 (“Immediate interaction to force the AP to serve one queued MT”). Action 1 is the most frequently chosen action, indicating that the agent has learned to maintain system stability and avoid unnecessary interventions. Action 2 occurs primarily under high arrival rate conditions (λ = 100), where the server alone cannot prevent queue accumulation and increased MT orbiting without the agent’s help. Note that the red and blue bars always sum to exactly 24 for each scenario, which is the number of simulated cases per scenario (the combinations of reward-weight settings and queue sizes K). This helps in the comparison between the reward weights.
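The aggregation behind such a histogram can be sketched as follows. The code assumes that each simulated case yields a list of Q-value vectors, takes the greedy action for each vector, keeps the most frequent action per case, and counts cases per action. The data layout and function names are hypothetical.

```python
from collections import Counter

def argmax_action(q_values):
    """Index of the largest Q-value (ties resolved toward the lower index)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])

def predominant_action(q_value_rows):
    """Most frequent greedy action over the Q-value rows of one simulated case."""
    picks = [argmax_action(q) for q in q_value_rows]
    return Counter(picks).most_common(1)[0][0]

def action_histogram(cases):
    """Number of cases in which each action is predominant."""
    return Counter(predominant_action(rows) for rows in cases)

# Three hypothetical cases, each with a few [Q(action 1), Q(action 2)] rows.
cases = [
    [[1.0, 0.2], [0.9, 0.1]],
    [[0.1, 0.5], [0.3, 0.2], [0.0, 1.0]],
    [[2.0, 1.0]],
]
hist = action_histogram(cases)
print(hist[0], hist[1])  # 2 1
```

By construction the counts sum to the number of cases, which matches the fixed bar total per scenario described above.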

These observations confirm that the RL agent effectively internalizes system dynamics, selectively intervening only when necessary while allowing natural service progression under moderate or low traffic loads. This demonstrates a learned balance between service efficiency and queue stability, with the agent adapting its policy according to the state of the system. Moreover, the reward weighting factors, the queue size ($K$), and the arrival rate ($\lambda$) shape the agent’s decision-making, influencing both the frequency of the interventions and the system’s throughput and queue behavior.

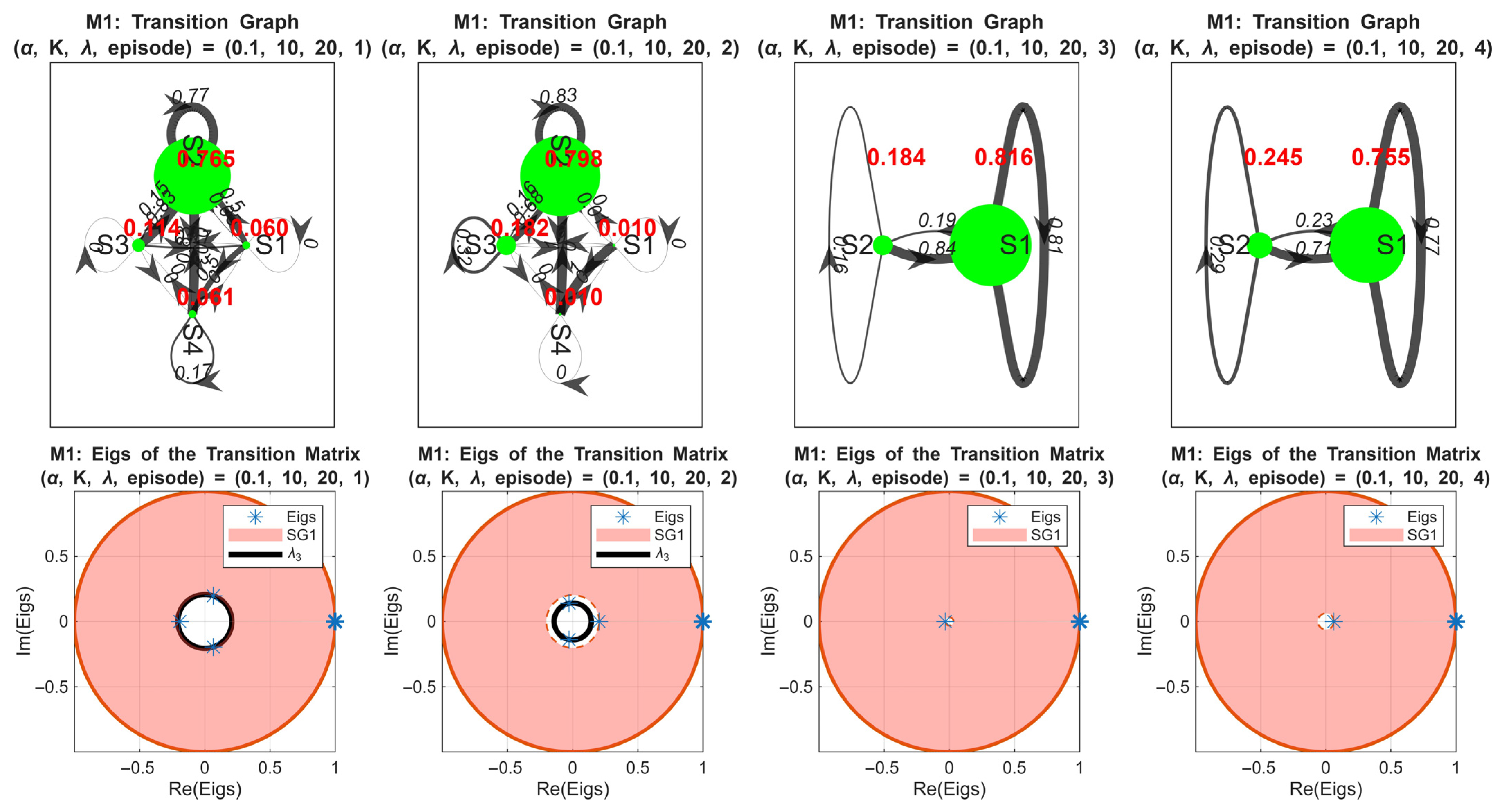

5.2. Mixing Time Computation

We analyzed the reward sequences per episode using Markov chain models to capture the convergence of the RL-RQS agent. We first computed the first- and second-order Markov chains for all 120 simulated cases and for each episode, and then extracted the spectral gaps from the transition matrices. As previously explained, the spectral gaps provide a quantitative measure of the system’s mixing rate, which indicates how quickly the Markov chain reaches its steady state. For each Markov chain, the first and second spectral gaps were computed as given in Equations (16) and (17), where $\lambda_2$ and $\lambda_3$ are the second- and third-largest eigenvalues of the transition matrix, respectively.

The state space represents the reward sequence. The first-order transition tensor captures the probability of moving from state $i$ to state $j$ in one step. For the second-order Markov chain, the transition tensor conditions each transition on the previous two states, enabling the model to capture temporal correlations in the mobile network dynamics. The first and second spectral gaps are calculated from the eigenvalues of these transition matrices and quantify the global and local mixing rates, respectively. They therefore serve as indicators of convergence speed and system stability.
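The per-episode computation can be sketched by treating each distinct reward value as a state. The definitions used below are assumptions, since the paper's Equations (16) and (17) are not reproduced here: the first gap is taken as $1 - |\lambda_2|$ and the second as $|\lambda_2| - |\lambda_3|$, and the second gap is reported as `None` when the chain has fewer than three states. Function names are hypothetical.

```python
import numpy as np

def reward_transition_matrix(rewards):
    """First-order transition matrix over the distinct reward values of an episode."""
    states = sorted(set(rewards))
    index = {r: i for i, r in enumerate(states)}
    n = len(states)
    counts = np.zeros((n, n))
    for a, b in zip(rewards[:-1], rewards[1:]):
        counts[index[a], index[b]] += 1
    totals = counts.sum(axis=1, keepdims=True)
    # States with no observed outgoing transition get a uniform row (assumption).
    P = np.where(totals > 0, counts / np.maximum(totals, 1), 1.0 / n)
    return P, states

def first_two_spectral_gaps(P):
    """(1 - |l2|, |l2| - |l3|) with eigenvalues sorted by decreasing modulus."""
    moduli = np.sort(np.abs(np.linalg.eigvals(P)))[::-1]
    gap1 = 1.0 - moduli[1] if len(moduli) > 1 else None
    gap2 = moduli[1] - moduli[2] if len(moduli) > 2 else None
    return gap1, gap2

episode_rewards = [0, 1, 0, 1, 1, 0]        # hypothetical reward sequence
P, states = reward_transition_matrix(episode_rewards)
print(states)
print(first_two_spectral_gaps(P))           # second gap is None for two states
```

The `None` result for a two-state chain mirrors the observation that the second spectral gap is absent once the reward sequence collapses to two states.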

Figure 10 presents the first-order Markov chain (

) analysis for the simulation case

. The upper four figures show the transition matrices of the rewards for the first four episodes. We observe that the system exhibits four distinct states in episodes 1 and 2, then it is reduced to two states in episodes 3 and 4. This observation reflects the agent’s policy convergence and stabilization of the reward distribution. The lower four figures depict the corresponding eigenvalues and spectral gaps. The red disk represents the

interval (from 1 to

) and the black circle represents

. Notably,

increases rapidly in the early episodes as

, indicating fast convergence toward steady states. In episode 4, a slight reversal occurs, suggesting minor instability or over-exploration by the RL agent. The second spectral gap (

) decreases during the first two episodes and is absent in episodes 3 and 4 due to the reduction to two states, which is consistent with the mathematical expectation for smaller state spaces.

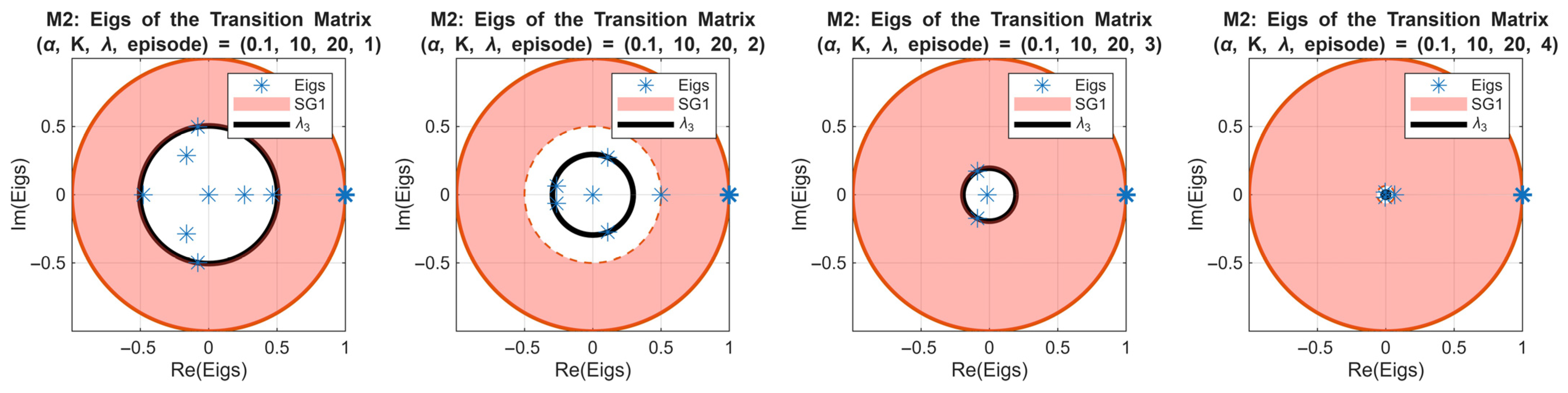

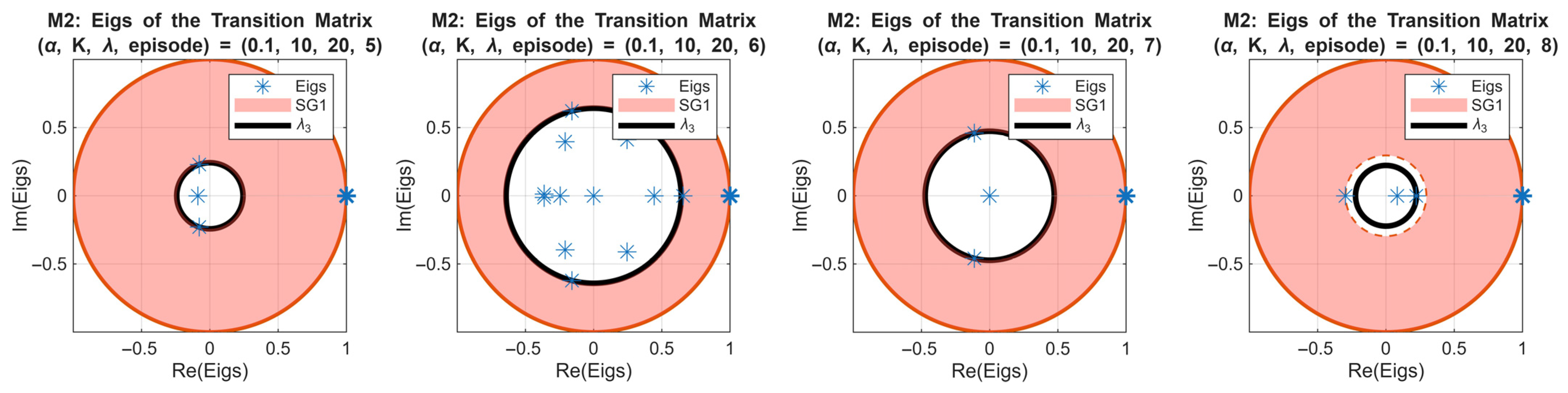

Figure 11 extends this analysis to the second-order Markov chain for the same simulation case. All eight subplots show eigenvalues of the transition matrices for episodes 1–8, with

(red disk) and

(black circle) highlighted. Compared with the first-order chain, the evolution of

is slower, reflecting the longer memory inherent in the second-order model. The spectral gap decreases after the 4th episode, indicating over-learning in the policy.

This slower convergence highlights the ability of the second-order Markov model to capture temporal dependencies beyond a single episode, providing a more nuanced view of policy dynamics over time.

Monitoring the spectral gaps provides a principled metric for determining the number of episodes necessary for RL training. The findings show that a rapid increase and stabilization of the spectral gap indicates that the agent’s policy is efficient and has reached steady-state behavior, while slower or fluctuating spectral gaps suggest continued learning or instability. To this end, the episode at which the spectral gap converges can be used to identify the minimal number of training episodes required for the agent to achieve a stable and reliable policy. These results address the requirements of 6G smart technologies by providing a quantitative, system-specific criterion for RL training duration, avoiding arbitrary choices and ensuring both efficiency and convergence in learning. 6G smart technologies aim to support ultra-reliable, low-latency network management; this requires control algorithms that converge rapidly, maintain stable operation under dynamic conditions, and efficiently utilize system resources. The spectral gap analysis of the first- and second-order Markov chains shows that our RL-RQS agent converges quickly to steady-state policies (as indicated by the rapid stabilization of the spectral gap) and keeps the reward distribution stable despite variations in network load. This quantitative assessment indicates that the agent achieves reliable decision-making within a small number of episodes, meeting the 6G requirement for efficient network control without long training or performance fluctuations.

The spectral gap analysis across first- and second-order Markov chains allows us to quantify the number of episodes necessary for the RL agent to achieve a stable policy. Also, the first-order Markov chain offers a rapid estimation of convergence, which can be useful for early-stage evaluation cases. The second-order Markov chain captures longer-term dependencies, enabling the detection of subtle instabilities or over-learning. Combining both orders identifies a practical range of episodes that balances learning efficiency with system stability, guiding the optimal training duration for the RL-enhanced RQS agent. This analysis further clarifies how critical parameters such as reward weighting coefficients directly influence convergence speed, policy stability, and system performance.

5.3. Validation and Metrics

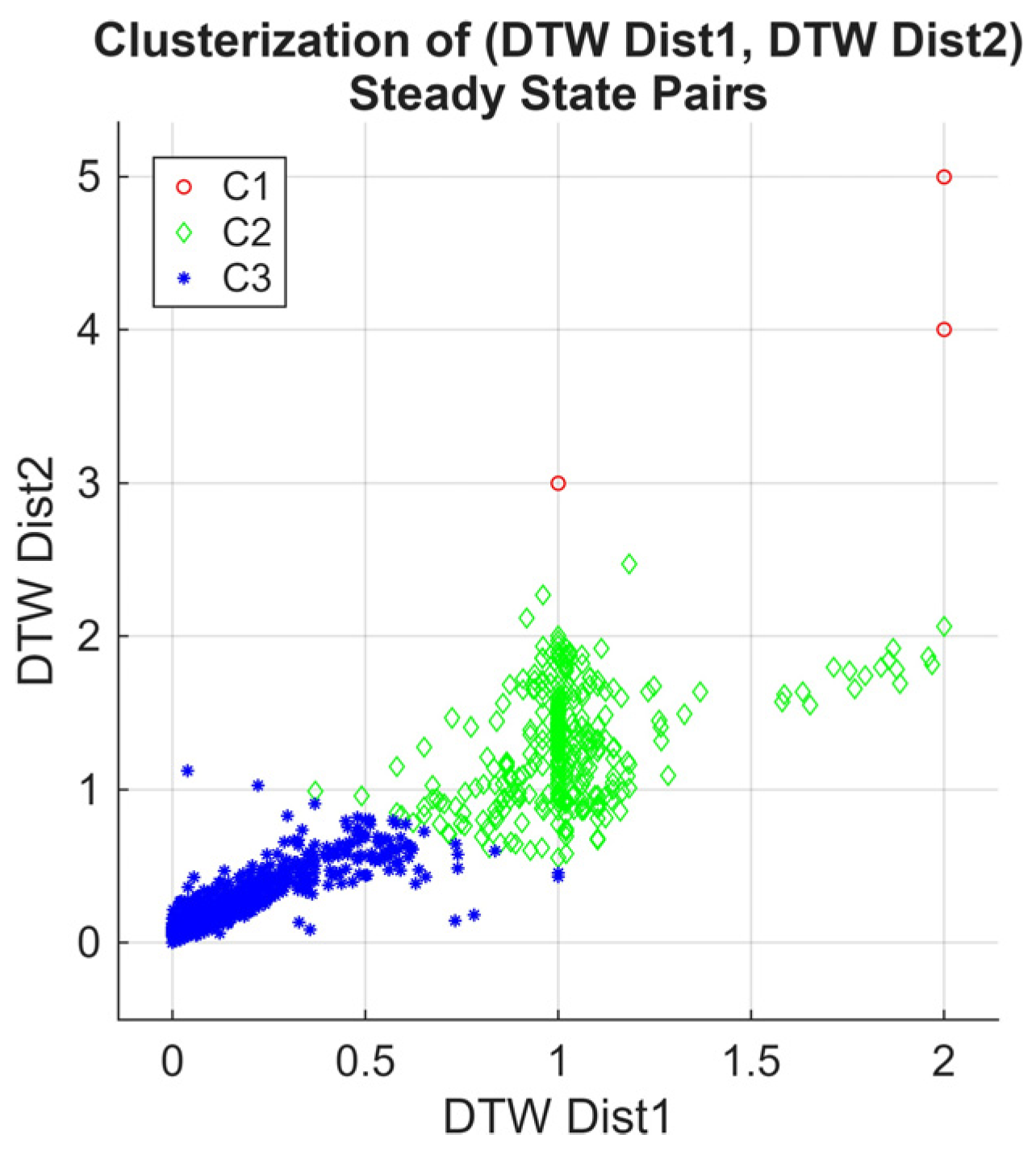

To validate the convergence behavior of the RL-enhanced RQS agent and quantify the similarity between the steady-state distributions of different Markov chain orders, we computed the Dynamic Time Warping (DTW) distances of the reward sequences’ steady states for both first- and second-order Markov chains across all episodes and 120 simulated cases.

The choice of DTW is motivated by its ability to compare sequences that vary in length or exhibit temporal misalignment, which is common in reward sequences across episodes. Unlike standard probabilistic distance measures, DTW captures both the rate and pattern of convergence, providing a more robust metric for evaluating the stabilization of the agent’s policy over time.
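For reference, the classic dynamic-programming form of DTW fits in a few lines. The sketch below uses the absolute difference as the local cost and applies no windowing or normalization; these choices are assumptions, since the exact DTW variant used in the study is not specified in the text.

```python
def dtw_distance(a, b):
    """Dynamic Time Warping distance between two numeric sequences.

    Uses |a_i - b_j| as the local cost and allows match, insertion,
    and deletion steps; the sequences may have different lengths.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]

print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))  # 0.0: same shape, different length
print(dtw_distance([1, 2, 3], [2, 3, 4]))     # 2.0
```

The first example shows why DTW suits vectors of different lengths: repeating an element does not add to the distance.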

In Figure 12, we present a scatter plot of the DTW distances of the second-order Markov chain versus the first-order Markov chain. Using k-means clustering, three primary clusters emerge. The first cluster lies near zero, indicating cases where the steady states of both Markov chain orders are highly similar, reflecting consistent and rapid convergence of the RL agent’s policy.

The second cluster lies near one, representing the simulation cases where the steady states of the two orders differ significantly, likely due to slower convergence or to longer-term dependencies captured only by the second-order chain. The third cluster contains sparse outliers that reflect anomalous behavior caused by irregular policy transitions.

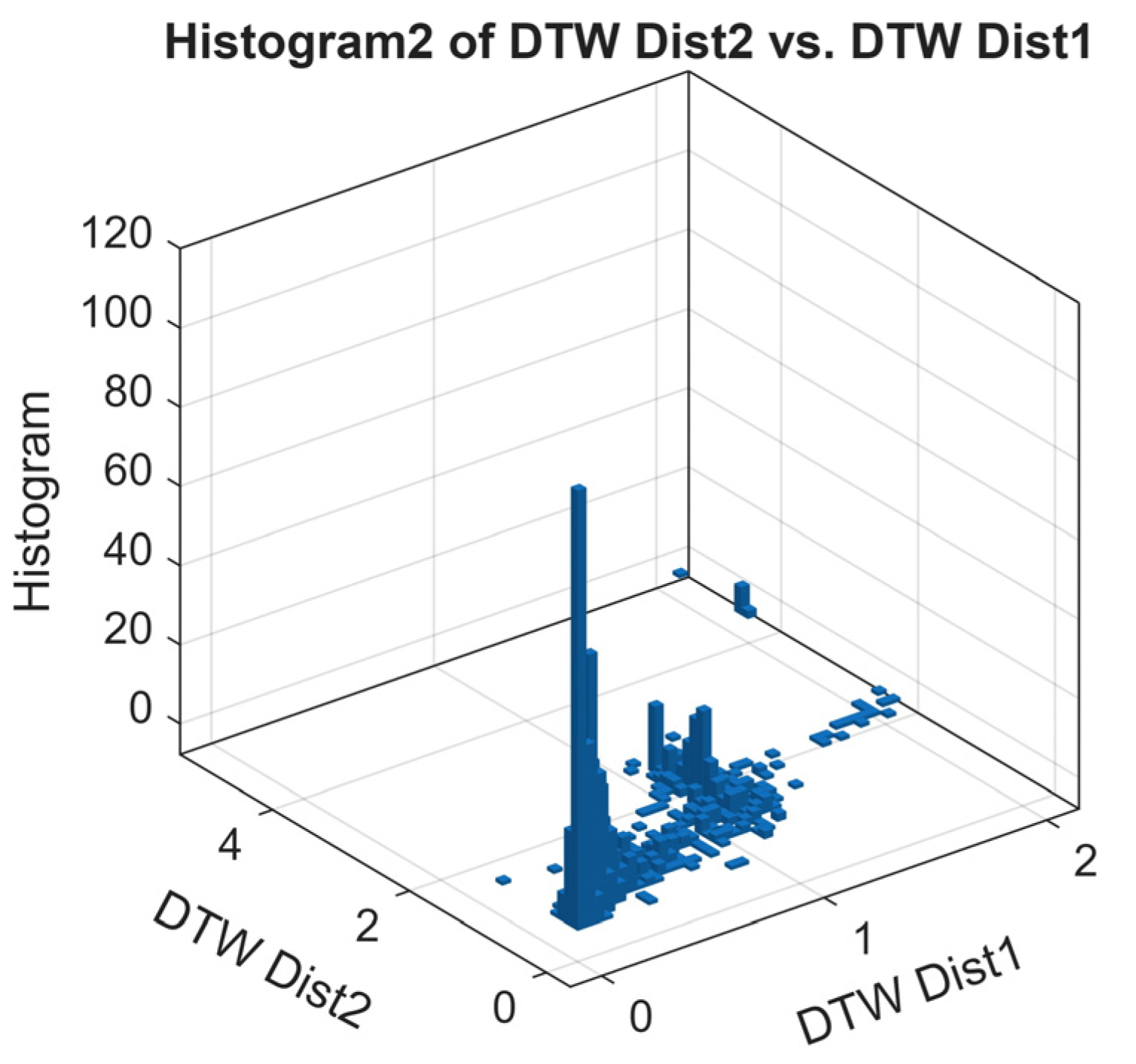

Figure 13 shows the histogram of the scatter plot, highlighting the density of DTW distances. The peaks near 0 and 1 confirm the presence of the two dominant convergence patterns: high similarity between the two Markov chain orders for most cases, and significant differences for a smaller set of episodes. The outliers contribute to the low-density tails of the histogram. Markov chain-based analysis validates the convergence assessment. Cases with DTW near zero indicate episodes where the first-order chain is sufficient to capture policy stabilization, whereas DTW near one highlights the episodes where longer memory effects, captured by the second-order chain, are important. This dual-order Markov model DTW comparison serves as a metric for quantifying the DQN-RL convergence and identifying the number of episodes required for further training.

6. Conclusions and Future Work

This paper presented a framework that integrates a retrial queueing system in a 6G environment with a deep Q-network reinforcement learning model and higher-order Markov analysis to enhance network performance and move toward so-called native intelligence. Analyzing the first- and second-order Markov models provided further analytical insight into system dynamics and reward behavior. The first and second spectral gaps were found to serve as metrics that quantify the convergence speed and identify the number of episodes required for the RL agent to reach policy stability. The dynamic time warping distance was used to compare the steady states of the two Markov chain orders, revealing the model’s temporal stability. Quantitative results indicate that, as the number of training episodes increased, the served MTs approached 100%, while both queued and orbited MTs decreased to 0%. Across all 120 simulation cases, the most frequently selected action was Action 1 (do nothing), chosen approximately 75% of the time. This demonstrates the agent’s ability to maintain efficient queue operation without continuously interrupting the server, which suggests lower energy consumption. These observations reflect the behavior of the system under the simulated cases used in this study. The first- and second-order Markov chain analyses of the agent’s reward sequences confirm rapid convergence, showing that in some scenarios five episodes, rather than ten, were sufficient to achieve stable policy performance. Potential improvements include refining the reward function, incorporating additional state features such as channel quality and mobility patterns, and exploring advanced RL algorithms or multi-agent coordination for denser network scenarios. Limitations of the current study include the reliance on simulation-based evaluation under controlled traffic conditions and the simplified single-agent RL setup.

Future work will explore multi-agent coordination, hierarchical learning, and real-world deployment under different conditions to enhance scalability and resilience in ultra-dense network environments.