Development of a Low-Cost Infrared Imaging System for Real-Time Analysis and Machine Learning-Based Monitoring of GMAW

Abstract

1. Introduction

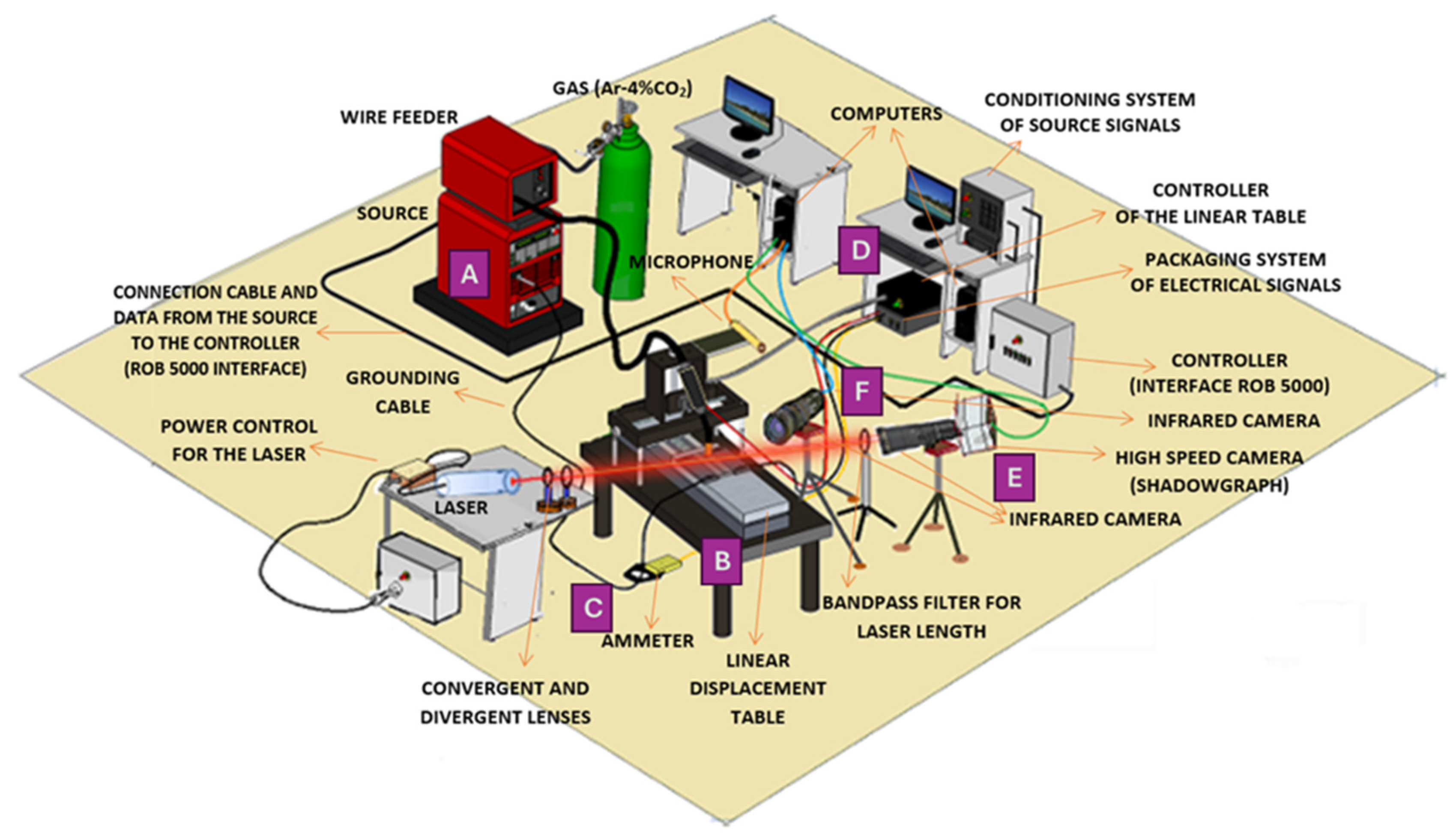

2. Materials and Methods

- (A) Power source (Model TransPlus Synergic 5000, Fronius, Inc., Pettenbach, Austria): configured for constant-voltage output with variable wire feed speed control, as required by the gas metal arc welding (GMAW) process.

- (B) Linear displacement table: a custom-built mechanical system that provided controlled linear motion of the workpiece and the welding torch, allowing precise adjustment of travel speed and vertical positioning during welding.

- (C) Ammeter (Model i1010 AC/DC, Fluke Corporation Inc., Everett, WA, USA) and voltmeter (Model DVL 500, LEM Inc., Geneva, Switzerland): used to monitor the main welding parameters in real time, namely the welding current near the arc and the potential difference between the welding torch and the base material.

- (D) Data acquisition system (Model PCI Eagle 703s, Eagle Technology, Inc., Cape Town, South Africa): a high-speed data acquisition board that collected the electrical signals (current and voltage) generated during welding. The acquired data were processed and analyzed in LabVIEW and MATLAB R2016b.

- (E)

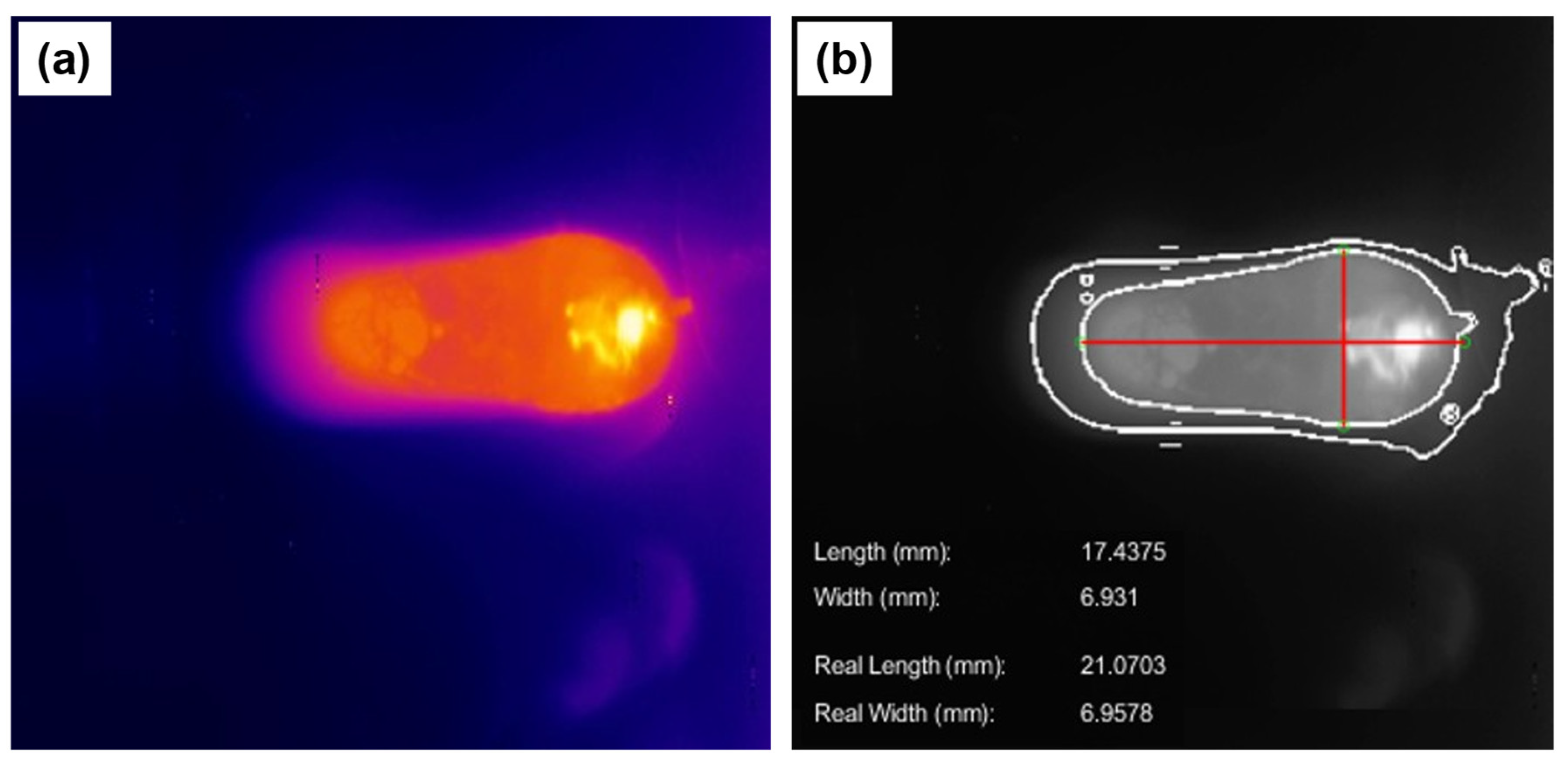

- CCD camera: a commercial CCD camera, sensitive to a broad spectral range (350–1150 nm), was employed and operated explicitly in the near-infrared range (1000–1150 nm) to capture real-time images of the evolving weld bead.

- (F)

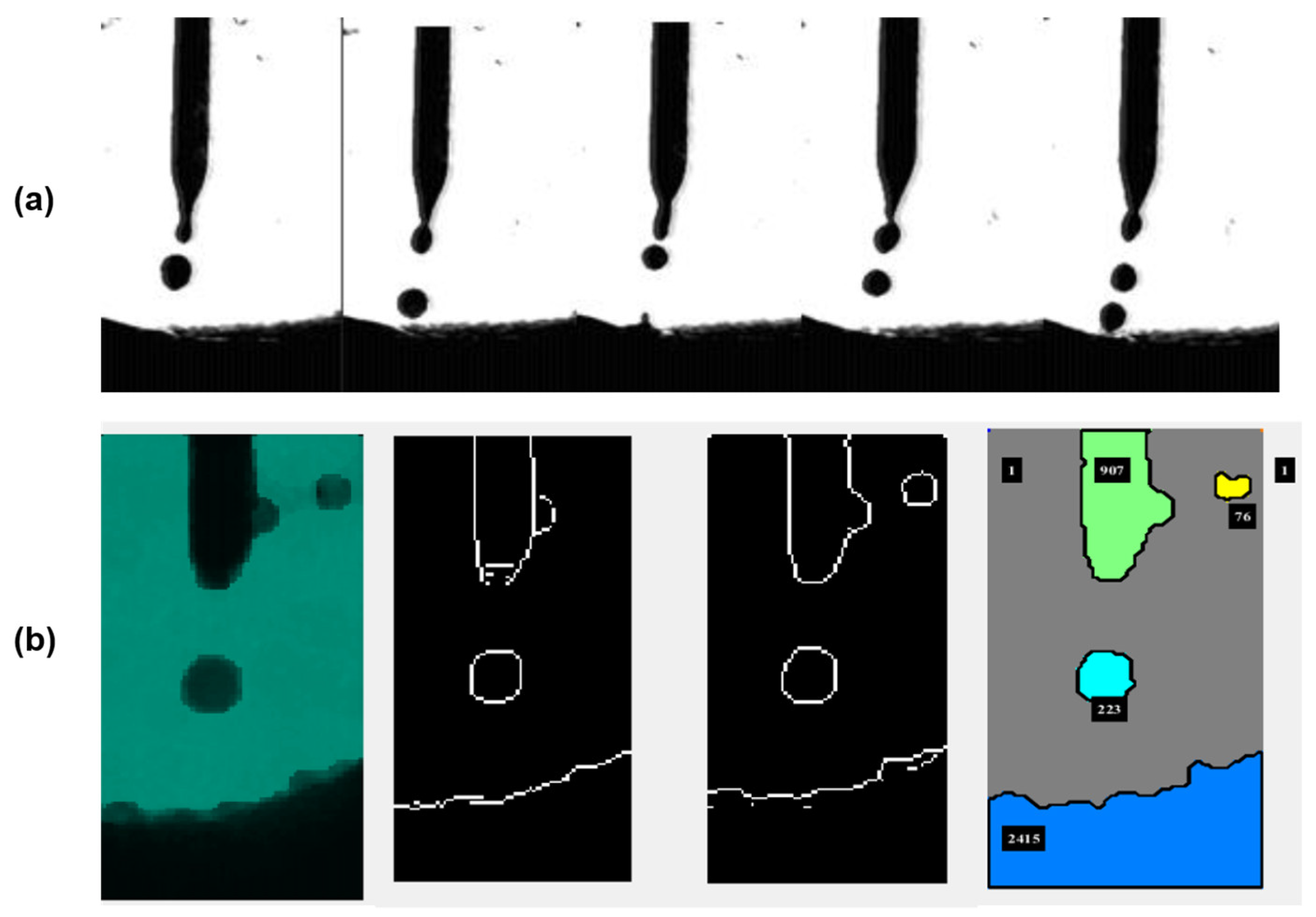

- High-speed infrared camera: This specialized camera captured rapid sequences of thermal images during welding, enabling detailed analysis of phenomena such as molten droplet formation, detachment from the electrode, and the thermal profile of the weld pool and surrounding material. A high-speed camera (Model 1M150-SA, Teledyne DALSA Inc., Waterloo, ON, Canada) with monochrome CMOS technology was used, offering 256 gray levels and a resolution of 96 × 128 pixels, with an acquisition rate of 1000 fps and a CMOS sensor exposure time of 50 µs. The light source was a He-Ne laser (633 nm, 15 mW) (Model He-Ne 633 nm and 15mW, Excelitas Technologies Inc., Waltham, MA, USA). To maintain a constant beam radius, a Galilean beam expander was constructed using a diverging lens (focal length = 40 mm) and a second converging lens.

- (G)

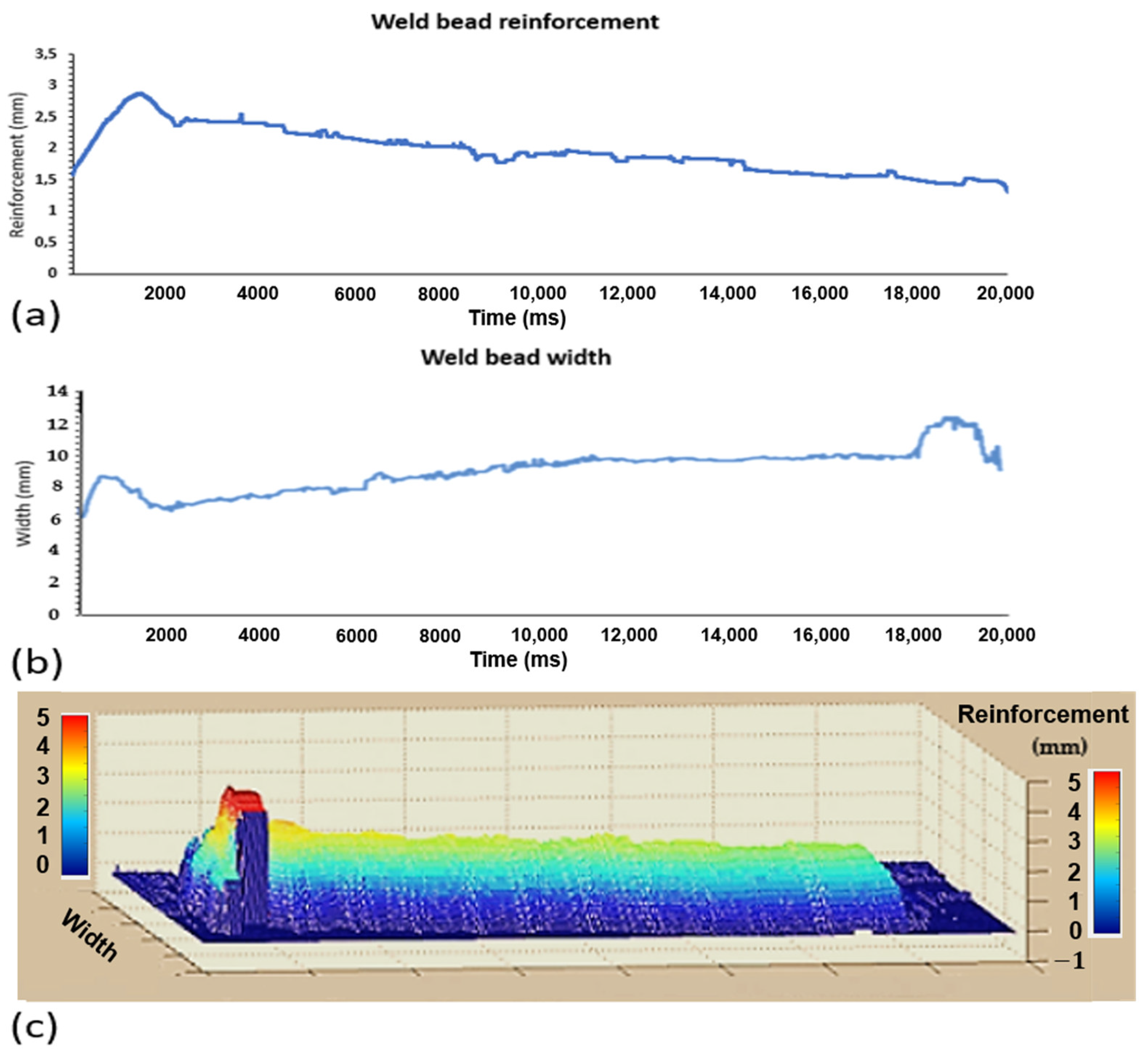

- Laser profilometer and optical filters: A laser profilometer (Model LLT 2950-100/BL, Micro-Epsilon Messtechnik GmbH & Co. KG Inc., Ortenburg, Germany) was used to perform precise geometric measurements of the solidified weld beads in conjunction with specific optical filters. The filters enhanced the clarity of the projected laser line and isolated its specific wavelength, enabling accurate measurement through point cloud data analysis. Each component is labeled in Figure 1 to facilitate correlation between the experimental setup and the visual representation.

- Filler metals: AISI ER316L solid wire (1.2 mm diameter) and E410NiMoT1-4 tubular wire (1.2 mm diameter) from ESAB Inc., North Bethesda, MD, USA. The chemical composition is shown in Table 1.

- Substrate: AISI 1020 steel plates (6.35 mm × 250 mm × 50 mm; Aperam South America Inc., Timóteo, Brazil).

- An infrared camera was used to measure the weld bead length, reinforcement, and width.

- A laser light source and a high-speed camera were used for droplet analysis by the shadowgraph technique.

- Optical filters and lenses were employed to isolate relevant spectral bands and enhance image contrast.

- A laser profilometer was used to capture weld geometry through point cloud reconstruction.
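The profilometer measurements reduce, for each scan line, to a height profile across the bead. A minimal sketch of extracting bead width and reinforcement from one such profile follows. It assumes the profile is a list of (x, z) points in mm sorted by x, that the first and last few points lie on the flat substrate, and a 0.05 mm height threshold; the function name, input format, and threshold are hypothetical and are not the authors' point-cloud procedure.

```python
from statistics import median


def bead_geometry(profile, edge_points=5, threshold_mm=0.05):
    """Estimate bead width and reinforcement (mm) from one transverse laser profile.

    profile: list of (x_mm, z_mm) points sorted by x (hypothetical input format).
    edge_points: points at each end of the scan assumed to lie on the flat substrate.
    threshold_mm: minimum height above the substrate counted as weld metal (assumed).
    """
    if len(profile) < 2 * edge_points + 1:
        raise ValueError("profile is too short for the chosen edge_points")
    heights = [z for _, z in profile]
    # Substrate baseline: median height of the points at both ends of the scan line.
    baseline = median(heights[:edge_points] + heights[-edge_points:])
    # Points rising more than the threshold above the baseline are taken as bead.
    bead = [(x, z) for x, z in profile if z - baseline > threshold_mm]
    if not bead:
        return 0.0, 0.0
    width = bead[-1][0] - bead[0][0]                     # outermost bead points
    reinforcement = max(z for _, z in bead) - baseline   # crown height above substrate
    return width, reinforcement


# Synthetic parabolic bead, 6 mm wide and 2 mm high, sampled every 0.5 mm.
xs = [-10 + 0.5 * i for i in range(41)]
profile = [(x, 2.0 * (1 - (x / 3) ** 2) if abs(x) < 3 else 0.0) for x in xs]
print(bead_geometry(profile))  # (5.0, 2.0)
```

Because the width is taken between the outermost sampled points above the threshold, it is quantized by the sampling step: the synthetic 6 mm bead sampled every 0.5 mm reports 5.0 mm. Denser sampling, or interpolating the threshold crossings, reduces this error.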

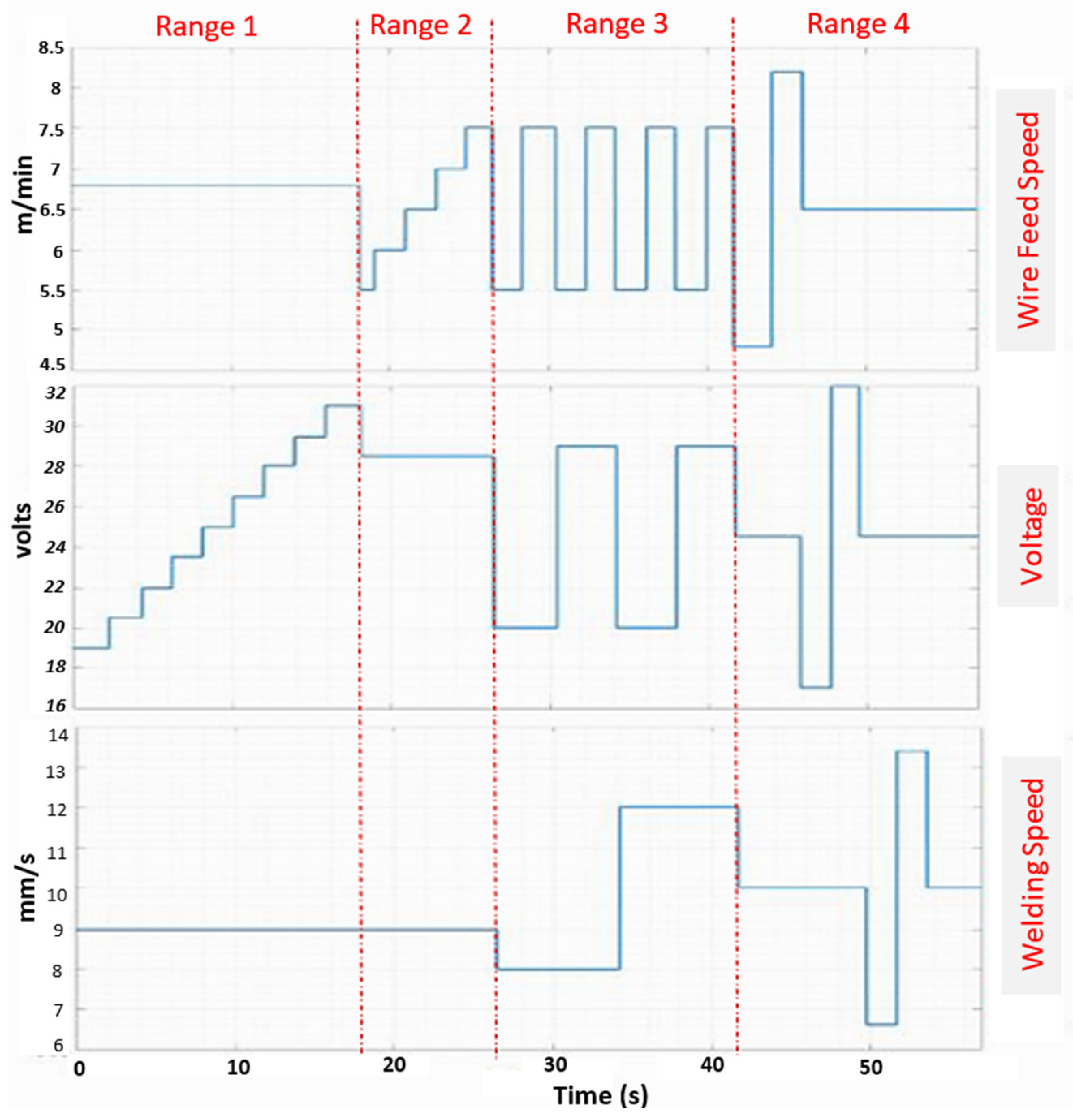

- Parametric sweep: voltage and wire feed speed varied systematically while the other parameters were held at fixed values.

- Full factorial variation: voltage, wire feed speed, and welding speed varied simultaneously.
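The difference between the two test strategies becomes concrete when the runs are enumerated. A full factorial design contains every combination of the chosen factor levels. The sketch below builds one for voltage, wire feed speed, and welding speed. The factor names and all level values are hypothetical: the voltage levels are illustrative only, and the speed levels merely span the ranges quoted for the bead-width relation in Section 3.2.5.

```python
from itertools import product


def full_factorial(levels):
    """Return every combination of factor levels as a list of dicts (one dict per run)."""
    names = list(levels)
    return [dict(zip(names, combo)) for combo in product(*(levels[n] for n in names))]


# Hypothetical three-level design (values are illustrative, not the authors' test matrix).
design = full_factorial({
    "voltage_V": [18, 21, 24],
    "wire_feed_m_min": [3.0, 7.5, 12.0],
    "welding_speed_m_min": [0.2, 0.55, 0.9],
})
print(len(design))  # 27
print(design[0])    # {'voltage_V': 18, 'wire_feed_m_min': 3.0, 'welding_speed_m_min': 0.2}
```

A parametric sweep is the special case in which the fixed parameters are given a single level, so the same function covers both strategies.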

3. Results and Discussion

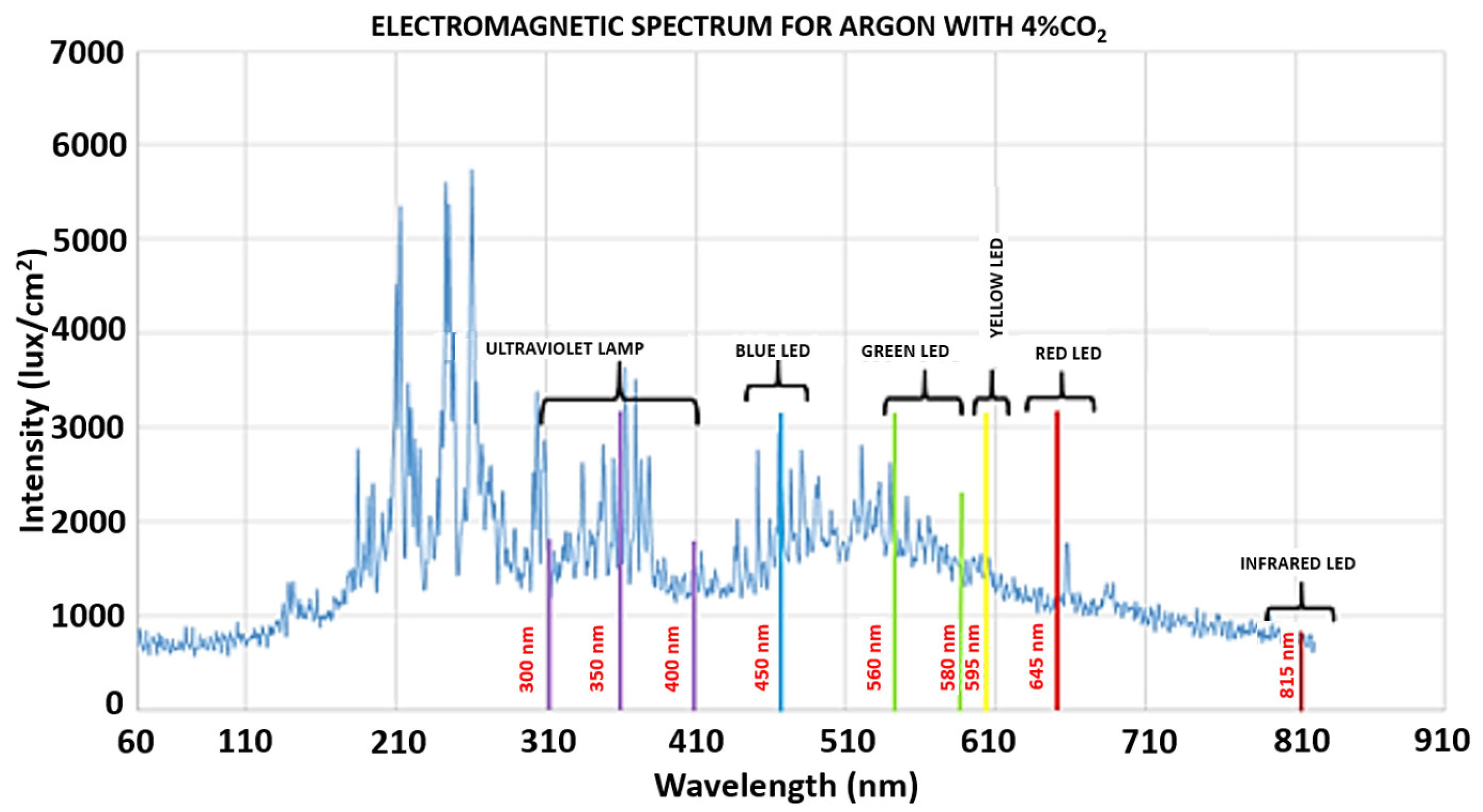

3.1. Optical Analysis Through the Electromagnetic Spectrum

3.2. Development of Vision Systems

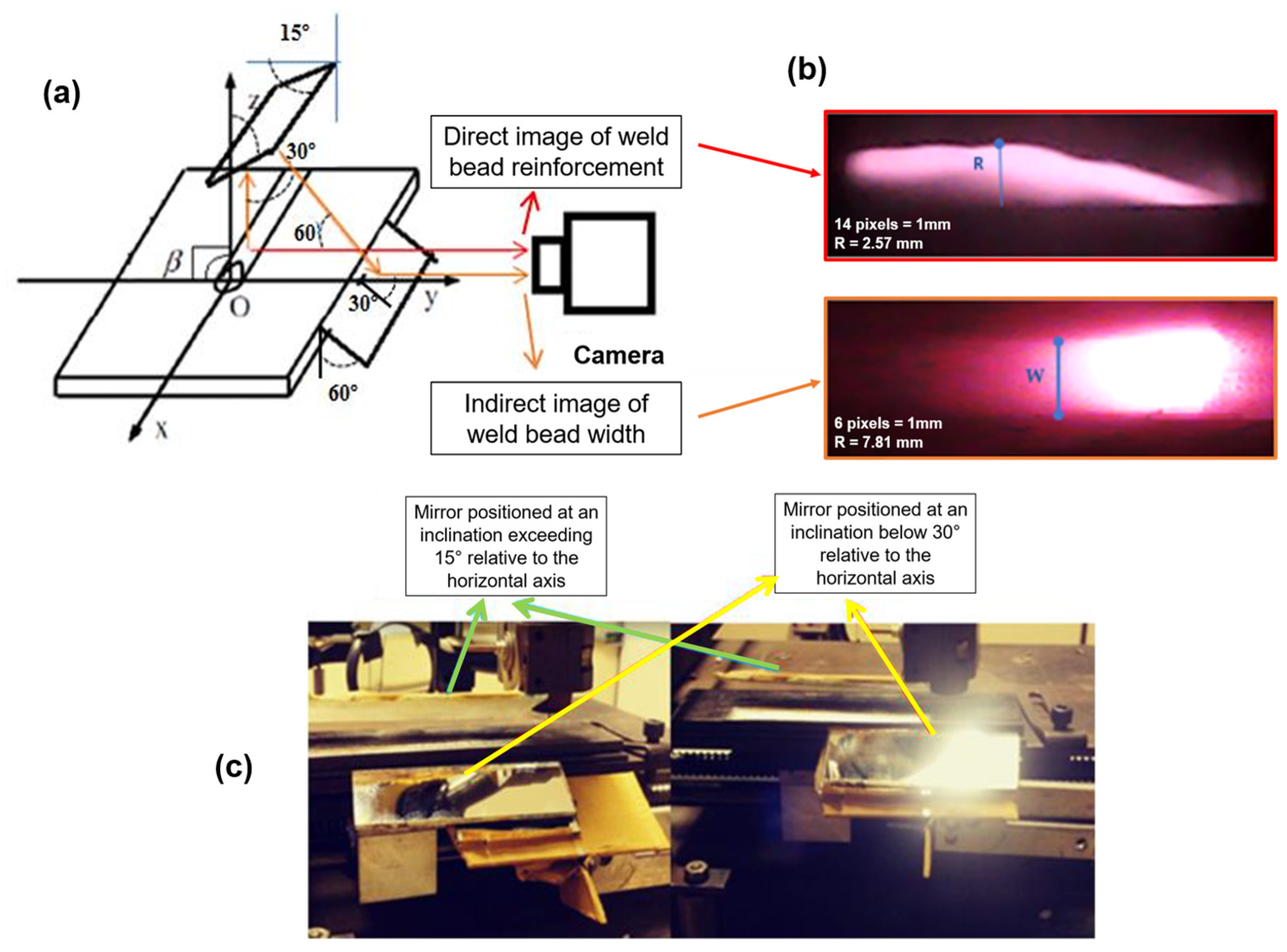

3.2.1. Camera and Optical Assembly

3.2.2. Image Processing Algorithm

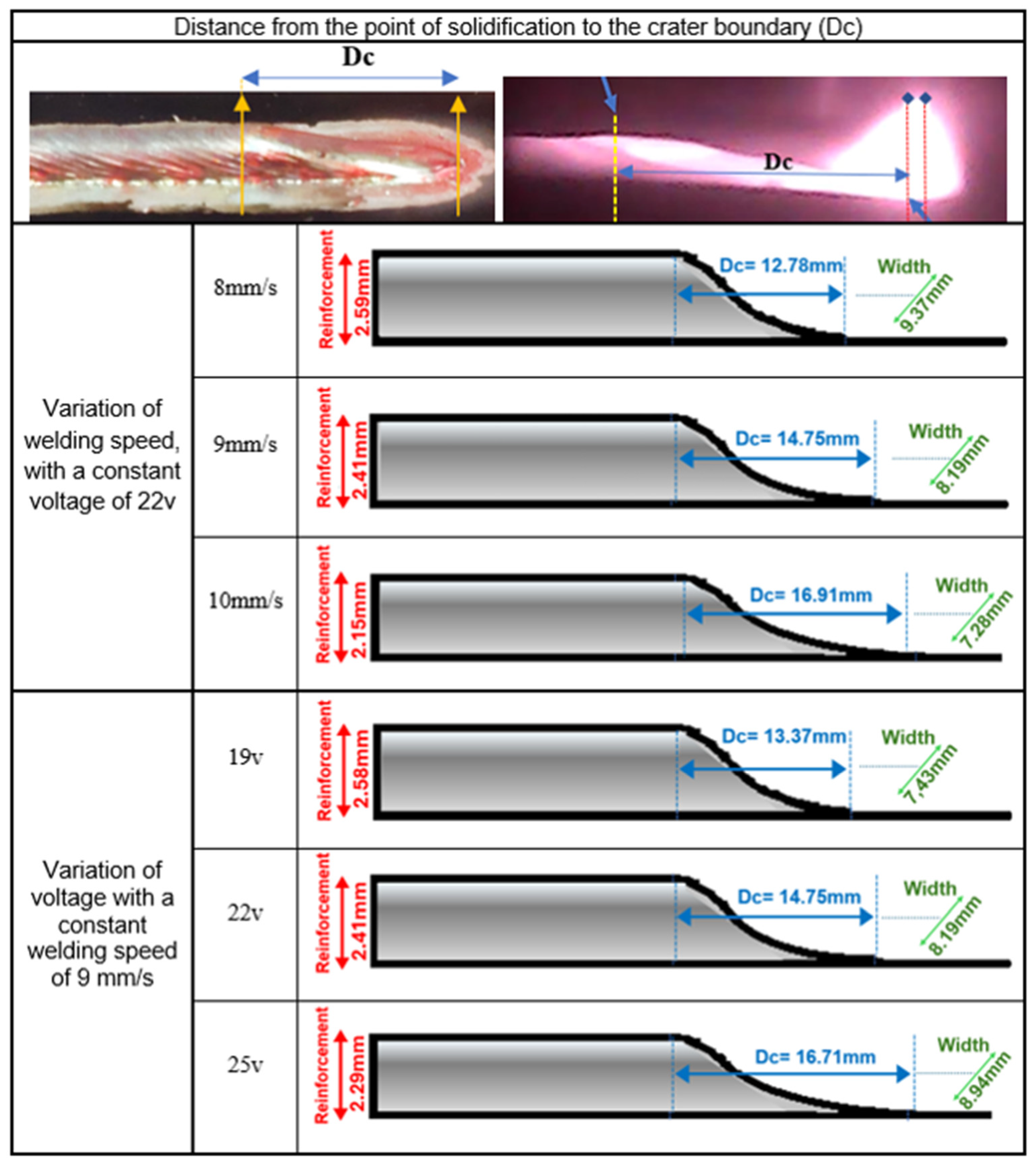

3.2.3. Dynamic Positioning of the Reinforcement Through the Measurement of the Crater Length (Dc)

- Dc is the crater length (mm), i.e., the distance from the wire to the measurement point.

- Ws is the welding speed (mm/s).

- V is the arc voltage (V).

- C1, C2, and C3 are empirical regression coefficients determined experimentally.
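The definitions above do not state the functional form that links Dc to Ws and V. Purely as an assumption for illustration, the sketch below takes a linear form Dc = C1 + C2·Ws + C3·V and shows how such empirical coefficients can be estimated by ordinary least squares, solving the 3 × 3 normal equations with Gaussian elimination. The function name and the synthetic coefficients are hypothetical.

```python
def fit_linear_dc(ws, v, dc):
    """Least-squares fit of Dc = C1 + C2*Ws + C3*V (assumed linear form).

    ws, v, dc: equal-length sequences of welding speed (mm/s), arc voltage (V)
    and measured crater length (mm). Returns (C1, C2, C3).
    """
    n = len(dc)
    if not (len(ws) == len(v) == n) or n < 3:
        raise ValueError("need at least 3 matched samples")
    rows = [(1.0, w, u) for w, u in zip(ws, v)]  # design matrix X, one row per sample
    # Normal equations (X^T X) c = X^T y form a 3x3 linear system.
    a = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    b = [sum(r[i] * y for r, y in zip(rows, dc)) for i in range(3)]
    # Forward elimination with partial pivoting.
    for col in range(3):
        piv = max(range(col, 3), key=lambda k: abs(a[k][col]))
        if abs(a[piv][col]) < 1e-12:
            raise ValueError("Ws and V are collinear; vary them independently")
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for k in range(col, 3):
                a[r][k] -= f * a[col][k]
            b[r] -= f * b[col]
    # Back substitution on the upper-triangular system.
    c = [0.0, 0.0, 0.0]
    for i in (2, 1, 0):
        c[i] = (b[i] - sum(a[i][k] * c[k] for k in range(i + 1, 3))) / a[i][i]
    return tuple(c)


# Synthetic check: data generated from C1 = 2.0, C2 = 0.3, C3 = 0.1 (hypothetical values).
ws = [4, 6, 8, 10, 12, 4, 8, 12]
v = [18, 20, 22, 24, 26, 26, 18, 20]
dc = [2.0 + 0.3 * w + 0.1 * u for w, u in zip(ws, v)]
print(fit_linear_dc(ws, v, dc))  # recovers C1, C2, C3 up to rounding error
```

Ws and V must vary independently across the experiments; if one is a linear function of the other, the coefficients cannot be separated, so the sketch rejects a vanishing pivot.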

| Variable | Abbreviation | Symbol |
|---|---|---|
| Gas Flow | (GF) |  |
| Travel Speed | (TS) |  |
| Voltage | (V) | V |
| Wire Feed Speed | (WFS) |  |
| Time Per Section | (t) |  |

3.2.4. Analysis Under Critical Conditions of Low Power or Start of Welding

3.2.5. Bead Width Estimation Based on Molten Pool Geometry and Camera Angle Correction

- 0.2 ≤ Ws ≤ 0.9 m/min (about 3.3–15 mm/s);

- 3 ≤ Wfs ≤ 12 m/min (50–200 mm/s);

- Bw is the predicted weld bead width (mm);

- Pw is the weld pool width (mm);

- Ws is the welding speed (mm/s);

- Wfs is the wire feed speed (mm/s).
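The validity ranges above are stated in m/min, whereas the variables in the relation are defined in mm/s. An operating point therefore has to be converted before it is checked against the window (1 m/min = 1000/60 ≈ 16.67 mm/s). A small helper for that check follows; the function and constant names are hypothetical.

```python
MM_S_PER_M_MIN = 1000.0 / 60.0  # 1 m/min = 16.67 mm/s


def m_min_to_mm_s(value_m_min):
    """Convert a speed from m/min to mm/s."""
    return value_m_min * MM_S_PER_M_MIN


# Validity window of the bead-width relation, as quoted above (m/min).
WS_RANGE_M_MIN = (0.2, 0.9)
WFS_RANGE_M_MIN = (3.0, 12.0)


def in_validity_window(ws_mm_s, wfs_mm_s):
    """True if an operating point given in mm/s lies inside the quoted m/min ranges."""
    ws_lo, ws_hi = (m_min_to_mm_s(s) for s in WS_RANGE_M_MIN)
    wfs_lo, wfs_hi = (m_min_to_mm_s(s) for s in WFS_RANGE_M_MIN)
    return ws_lo <= ws_mm_s <= ws_hi and wfs_lo <= wfs_mm_s <= wfs_hi


# Operating point from the NiMo-wire data table: Ws = 9 mm/s, WFS = 6.80 m/min.
print(in_validity_window(9.0, m_min_to_mm_s(6.80)))  # True
```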

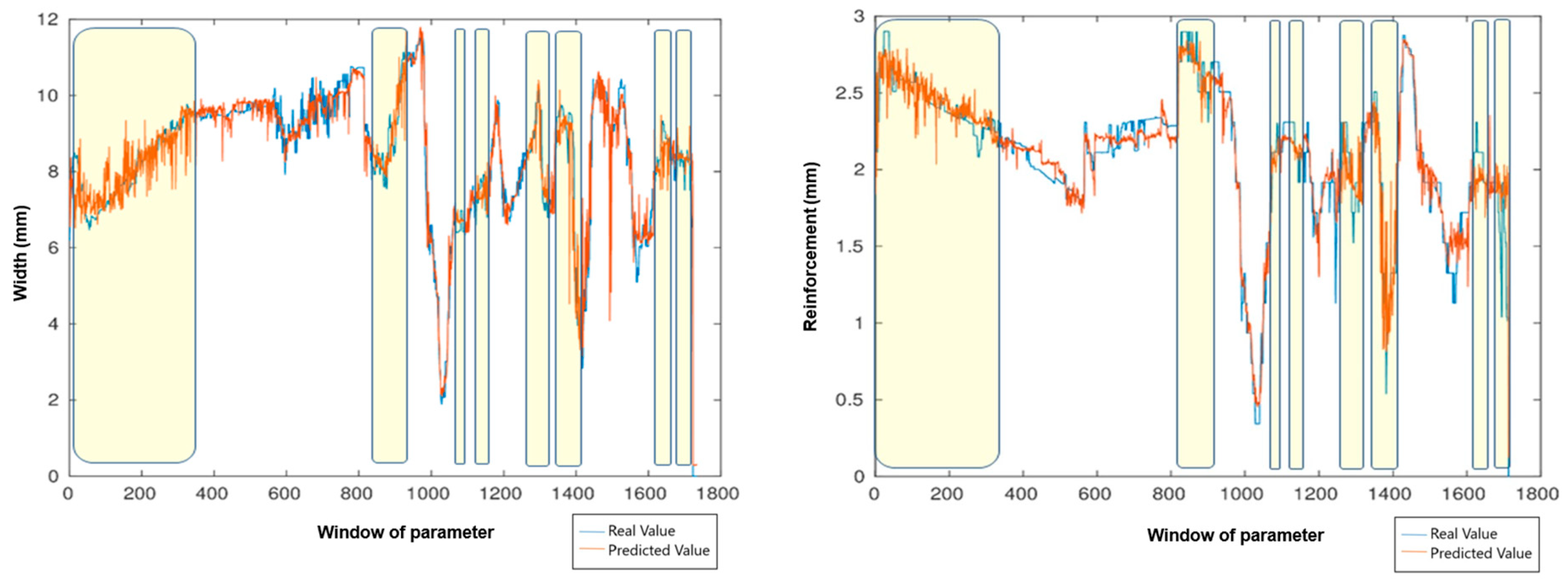

3.3. Vision System Data Processing

- Range 1: gradual increase in electric arc voltage;

- Range 2: gradual increase in wire feed speed;

- Range 3: alternating variations in all parameters;

- Range 4: full-range random variation in the three variables.

3.4. Camera Calibration

- Direct linear transformation (DLT) is known for its simplicity and ease of implementation. However, it does not account for lens distortions, significantly limiting its precision in applications requiring geometric fidelity.

- The MATLAB R2016b Camera Calibration Toolbox is widely used and offers a robust solution that includes lens distortion correction. Its ease of use and accuracy make it suitable for most engineering and scientific applications that demand accurate camera calibration.

- Tsai’s method [29] is a more sophisticated model that, in addition to the intrinsic and extrinsic camera parameters, accounts for radial lens distortion. Although it is more complex to implement, it offers high accuracy and was adopted in this study. The choice was based on previous work by the authors with a CMOS Lumenera LW230 camera (1616 × 1216 pixels, 4.4 μm pixel pitch), which demonstrated the method’s suitability for precise dimensional measurements in computer vision.
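Tsai's model corrects radial distortion with a first-order term, Xu = Xd·(1 + k·r²), where r² = Xd² + Yd² and (Xd, Yd) are distorted sensor coordinates. The sketch below applies this correction to a pixel using the parameter names of the calibration table in this article (f, Cx, Cy, sx, sy, k), then back-projects it to a pinhole viewing ray. It reads sx and sy as pixels per mm on the sensor, as the table header states; 227.27 pixels/mm matches 1/(4.4 µm). The table does not give the unit of k, so this sketch assumes 1/mm². Extrinsic parameters are not reported, so the sketch stops at the ray direction. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TsaiIntrinsics:
    f_mm: float  # effective focal length (mm)
    cx: float    # image centre, column (pixels)
    cy: float    # image centre, row (pixels)
    sx: float    # horizontal scale factor (pixels per mm on the sensor)
    sy: float    # vertical scale factor (pixels per mm on the sensor)
    k1: float    # first-order radial distortion coefficient (unit assumed 1/mm^2)


def undistort_pixel(p, u, v):
    """Pixel (u, v) -> undistorted sensor coordinates (mm): Xu = Xd * (1 + k1 * r^2)."""
    xd = (u - p.cx) / p.sx
    yd = (v - p.cy) / p.sy
    factor = 1.0 + p.k1 * (xd * xd + yd * yd)
    return xd * factor, yd * factor


def viewing_ray(p, u, v):
    """Pinhole back-projection: direction (Xu/f, Yu/f, 1) of the ray through the pixel."""
    xu, yu = undistort_pixel(p, u, v)
    return xu / p.f_mm, yu / p.f_mm, 1.0


# Parameters from the calibration table of this article.
params = TsaiIntrinsics(f_mm=9.43773, cx=738, cy=585, sx=227.27, sy=227.27, k1=9.4604e-9)
print(viewing_ray(params, 738, 585))  # (0.0, 0.0, 1.0)
```

If k is indeed in 1/mm², the correction is very small over this sensor (k·r² stays below about 2 × 10⁻⁷). A different unit convention would change that, so the unit should be confirmed before relying on the numbers.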

3.5. Integration with Machine Learning Application

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Algorithm A1. Image processing algorithm.

```matlab
% Step 1: read each frame, change to gray tones and apply a median filter
NumF = input('Write the number of frames you will analyze. NumF: ');
for a = 1:NumF                                   % this loop analyzes several frames
    filename = ['Img', num2str(a), '.jpg'];
    route = ['C:\Program Files\MATLAB\R2016a\bin\img\', filename];
    Img1 = imread(route);
    if size(Img1, 3) == 3
        Img1 = rgb2gray(Img1);                   % change to gray tones
    end
    Gray = medfilt2(Img1, [13 9]);               % 13 x 9 median window (i-6:i+6, j-4:j+4)

    % Step 2: binarization, upper edge (external heat radiation)
    Edge1 = edge(Gray <= 100, 'sobel');
    Edge2 = edge(Gray <= 100, 'log');
    Edge3 = edge(Gray <= 100, 'canny');
    EdgeF = Edge1 | Edge2 | Edge3;               % union of the three edge maps

    % Step 3: same procedure with a threshold of 200
    Edge12 = edge(Gray <= 200, 'sobel');
    Edge22 = edge(Gray <= 200, 'log');
    Edge32 = edge(Gray <= 200, 'canny');
    EdgeF2 = Edge12 | Edge22 | Edge32;

    % Step 4: reference position for the reinforcement measurement.
    % midpoint_external and midpoint_internal are the midpoints taken from
    % EdgeF and EdgeF2; their extraction is not detailed in the source.
    Xm = mean([midpoint_external, midpoint_internal]);
    XR = round(Xm * 0.95);

    % Step 5: reinforcement in pixels and in mm. Start_Reinforcement and
    % End_Reinforcement come from the edge maps (extraction not detailed).
    Reinforcement = End_Reinforcement - Start_Reinforcement;
    Reinforcement_mm = Reinforcement * 0.0605;   % pixel-to-mm conversion factor
end
```

References

- Mattera, G.; Nele, L.; Paolella, D. Monitoring and Control the Wire Arc Additive Manufacturing Process Using Artificial Intelligence Techniques: A Review. J. Intell. Manuf. 2024, 35, 467–497.

- Cai, Y.; Xiong, J.; Chen, H.; Zhang, G. A Review of In-Situ Monitoring and Process Control System in Metal-Based Laser Additive Manufacturing. J. Manuf. Syst. 2023, 70, 309–326.

- Fang, Q.; Xiong, G.; Zhou, M.C.; Tamir, T.S.; Yan, C.B.; Wu, H.; Shen, Z.; Wang, F.Y. Process Monitoring, Diagnosis and Control of Additive Manufacturing. IEEE Trans. Autom. Sci. Eng. 2024, 21, 1041–1067.

- Zhang, R.; Nishimoto, D.; Ma, N.; Narasaki, K.; Wang, Q.; Suga, T.; Tsuda, S.; Tabuchi, T.; Shimada, S. Asymmetric Molten Zone and Hybrid Heat Source Modeling in Laser Welding Carbon Steel and Cast Iron with Nickel Alloy Wires. J. Manuf. Process. 2025, 142, 177–190.

- Hou, W.; Zhang, D.; Wei, Y.; Guo, J.; Zhang, X. Review on Computer Aided Weld Defect Detection from Radiography Images. Appl. Sci. 2020, 10, 1878.

- Czimmermann, T.; Ciuti, G.; Milazzo, M.; Chiurazzi, M.; Roccella, S.; Oddo, C.M.; Dario, P. Visual-Based Defect Detection and Classification Approaches for Industrial Applications—A SURVEY. Sensors 2020, 20, 1459.

- Fu, Y.; Chen, S.; Chen, Z. Monitoring Welding Torch Position and Posture Using Reversed Electrode Images—Part I: Establishment of the REI-TPA Model. Weld. J. 2024, 103, 215–223.

- Inês Silva, M.; Malitckii, E.; Santos, T.G.; Vilaça, P. Review of Conventional and Advanced Non-Destructive Testing Techniques for Detection and Characterization of Small-Scale Defects. Prog. Mater. Sci. 2023, 138, 101155.

- Xu, Y.; Wang, Z. Visual Sensing Technologies in Robotic Welding: Recent Research Developments and Future Interests. Sens. Actuators A Phys. 2021, 320, 112551.

- Baraya, M.; El-Asfoury, M.S.; Fadel, O.O.; Abass, A. Experimental Analyses and Predictive Modelling of Ultrasonic Welding Parameters for Enhancing Smart Textile Fabrication. Sensors 2024, 24, 1488.

- Dhara, S.; Das, A. Impact of Ultrasonic Welding on Multi-Layered Al–Cu Joint for Electric Vehicle Battery Applications: A Layer-Wise Microstructural Analysis. Mater. Sci. Eng. A 2020, 791, 139795.

- Mishra, D.; Gupta, A.; Raj, P.; Kumar, A.; Anwer, S.; Pal, S.K.; Chakravarty, D.; Pal, S.; Chakravarty, T.; Pal, A.; et al. Real Time Monitoring and Control of Friction Stir Welding Process Using Multiple Sensors. CIRP J. Manuf. Sci. Technol. 2020, 30, 1–11.

- Sarkar, S.S.; Das, A.; Paul, S.; Ghosh, A.; Mali, K.; Sarkar, R.; Kumar, A. Infrared Imaging Based Machine Vision System to Determine Transient Shape of Isotherms in Submerged Arc Welding. Infrared Phys. Technol. 2020, 109, 103410.

- Naksuk, N.; Nakngoenthong, J.; Printrakoon, W.; Yuttawiriya, R. Real-Time Temperature Measurement Using Infrared Thermography Camera and Effects on Tensile Strength and Microhardness of Hot Wire Plasma Arc Welding. Metals 2020, 10, 1046.

- Hamzeh, R.; Thomas, L.; Polzer, J.; Xu, X.W.; Heinzel, H. A Sensor Based Monitoring System for Real-Time Quality Control: Semi-Automatic Arc Welding Case Study. Procedia Manuf. 2020, 51, 201–206.

- Wang, Y.; Lee, W.; Jang, S.; Truong, V.D.; Jeong, Y.; Won, C.; Lee, J.; Yoon, J. Prediction of Internal Welding Penetration Based on IR Thermal Image Supported by Machine Vision and ANN-Model during Automatic Robot Welding Process. J. Adv. Join. Process. 2024, 9, 100199.

- Khan, M.A.; Madsen, N.H.; Goodling, J.S.; Chin, B.A. Infrared Thermography as a Control for the Welding Process. Opt. Eng. 1986, 25, 256799.

- Li, C.; Fang, C.; Zhang, X. Automated Line Scan Profilometer Based on the Surface Recognition Method. Opt. Lasers Eng. 2024, 182, 108464.

- Wang, Z. Monitoring of GMAW Weld Pool from the Reflected Laser Lines for Real-Time Control. IEEE Trans. Ind. Inform. 2014, 10, 2073–2083.

- Chokkalingham, S.; Chandrasekhar, N.; Vasudevan, M. Predicting the Depth of Penetration and Weld Bead Width from the Infra Red Thermal Image of the Weld Pool Using Artificial Neural Network Modeling. J. Intell. Manuf. 2012, 23, 1995–2001.

- Santoro, L.; Sesana, R.; Molica Nardo, R.; Curá, F. Infrared In-Line Monitoring of Flaws in Steel Welded Joints: A Preliminary Approach with SMAW and GMAW Processes. Int. J. Adv. Manuf. Technol. 2023, 128, 2655–2670.

- Jorge, V.L.; Bendaoud, I.; Soulié, F.; Bordreuil, C. Rear Weld Pool Thermal Monitoring in GTAW Process Using a Developed Two-Colour Pyrometer. Metals 2024, 14, 937.

- Cantor, M.C.; Goetz, H.M.; Beattie, K.; Renaud, D.L. Evaluation of an Infrared Thermography Camera for Measuring Body Temperature in Dairy Calves. JDS Commun. 2022, 3, 357–361.

- Ba, K.; Wang, J. Advances in Solution-Processed Quantum Dots Based Hybrid Structures for Infrared Photodetector. Mater. Today 2022, 58, 119–134.

- Wang, J.J.; Lin, T.; Chen, S.B. Obtaining Weld Pool Vision Information during Aluminium Alloy TIG Welding. Int. J. Adv. Manuf. Technol. 2005, 26, 219–227.

- Pimenta Mota, C.; Vinícius Ribeiro Machado, M.; Bailoni Fernandes, D.; Oliveira Vilarinho, L. Estudo Da Emissão de Raios Infravermelho Próximo Em Processos de Soldagem a Arco (Study of near-Infrared Emission on Processes of Arc Welding). Soldag. Insp. 2011, 16, 44–52.

- Zhang, L.; Peng, Z. Infrared Small Target Detection Based on Partial Sum of the Tensor Nuclear Norm. Remote Sens. 2019, 11, 382.

- Tsai, R.Y. A Versatile Camera Calibration Technique for High-Accuracy 3D Machine Vision Metrology Using Off-the-Shelf TV Cameras and Lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.

- Horn, B.K.P. Tsai’s Camera Calibration Method Revisited; Technical Report; Massachusetts Institute of Technology: Cambridge, MA, USA, 2000; Available online: https://people.csail.mit.edu/bkph/articles/Tsai_Revisited.pdf (accessed on 27 June 2025).

- Park, M.H.; Hur, J.J.; Lee, W.J. Prediction of Oil-Fired Boiler Emissions with Ensemble Methods Considering Variable Combustion Air Conditions. J. Clean. Prod. 2022, 375, 134094.

- Xiao, G.; Tong, H.; Shu, Y.; Ni, A. Spatial-Temporal Load Prediction of Electric Bus Charging Station Based on S2TAT. Int. J. Electr. Power Energy Syst. 2025, 164, 110446.

Table 1. Chemical composition of the filler metals (wt.%).

| Wire | C | Si | Mn | Cr | Ni | Mo | N |
|---|---|---|---|---|---|---|---|
| AISI 316L | 0.015 | 0.45 | 1.6 | 18.5 | 12.0 | 2.6 | 0.04 |
| E410NiMoT1-4 | 0.05 | 0.50 | 0.30 | 13.0 | 4.0 | 0.55 | <0.01 |

| Wavelength (nm) | Color |
|---|---|
| 380–450 | Violet |
| 450–490 | Blue |
| 490–520 | Cyan |
| 520–570 | Green |
| 570–590 | Yellow |
| 590–620 | Orange |
| 620–740 | Red |
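The wavelength table can serve directly as a lookup structure. The sketch below assigns a wavelength to one of the listed visible bands and checks it against the 1000–1150 nm window in which the CCD camera was operated. Treating each band as half-open (closing only the last band at 740 nm), labeling wavelengths below 380 nm as ultraviolet, and the function names are assumptions of this sketch.

```python
# Visible bands (nm) transcribed from the table above; each band is treated as [lo, hi).
VISIBLE_BANDS = [
    (380, 450, "Violet"),
    (450, 490, "Blue"),
    (490, 520, "Cyan"),
    (520, 570, "Green"),
    (570, 590, "Yellow"),
    (590, 620, "Orange"),
    (620, 740, "Red"),
]
CCD_NIR_WINDOW_NM = (1000, 1150)  # operating range of the CCD camera (Section 2)


def classify_wavelength(nm):
    """Name the spectral region of a wavelength given in nanometres."""
    if nm < VISIBLE_BANDS[0][0]:
        return "Ultraviolet"
    for lo, hi, name in VISIBLE_BANDS:
        if lo <= nm < hi:
            return name
    if nm == VISIBLE_BANDS[-1][1]:
        return VISIBLE_BANDS[-1][2]  # close the last band at 740 nm
    return "Infrared"


def seen_by_ccd_nir(nm):
    """True if the wavelength falls inside the CCD's near-infrared operating window."""
    lo, hi = CCD_NIR_WINDOW_NM
    return lo <= nm <= hi


print(classify_wavelength(633), seen_by_ccd_nir(633))    # Red False
print(classify_wavelength(1064), seen_by_ccd_nir(1064))  # Infrared True
```

For example, the He-Ne laser used for shadowgraphy (633 nm) falls in the red band and outside the CCD's near-infrared window.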

AWS NiMo Wire, Ar + 4% CO₂ Shielding Gas

| Data | Time [ms] | Wire Feed Speed [m/min] | Welding Speed [mm/s] | Open-Loop Voltage [V] | Current [A] | Arc Voltage [V] |
|---|---|---|---|---|---|---|
| 1 | 10 | 6.80 | 9 | 19 | 264.23 | 22.44 |
| 2 | 20 | 6.80 | 9 | 19 | 337.85 | 5.40 |
| 3 | 30 | 6.80 | 9 | 19 | 96.65 | 17.37 |
| 4 | 40 | 6.80 | 9 | 19 | 621.00 | 10.66 |
| 5 | 50 | 6.80 | 9 | 19 | 135.64 | 22.26 |
| 6 | 60 | 6.80 | 9 | 19 | 74.41 | 17.60 |
| 7 | 70 | 6.80 | 9 | 19 | 76.62 | 16.45 |
| 8 | 80 | 6.80 | 9 | 19 | 270.31 | 24.67 |
| 9 | 90 | 6.80 | 9 | 19 | 329.99 | 5.80 |
| 10 | 100 | 6.80 | 9 | 19 | 104.85 | 18.08 |

| Data | Arc Length [mm] | Stick-Out [mm] | Weld Bead Width [mm] | Weld Bead Reinforcement [mm] | Penetration [mm] | Transfer Mode |
|---|---|---|---|---|---|---|
| 1 | 1.38 | 13.62 | 6.01 | 1.72 | 0.69 | CC-(1) |
| 2 | 1.38 | 13.62 | 6.01 | 1.72 | 1.14 | CC-(1) |
| 3 | 1.385 | 13.62 | 6.01 | 1.72 | 1.09 | CC-(1) |
| 4 | 1.50 | 13.50 | 6.19 | 1.92 | 1.05 | CC-(1) |
| 5 | 1.50 | 13.50 | 6.19 | 1.92 | 0.94 | CC-(1) |
| 6 | 1.50 | 13.50 | 6.19 | 1.92 | 0.96 | CC-(1) |
| 7 | 1.50 | 13.50 | 6.36 | 1.92 | 0.96 | CC-(1) |
| 8 | 1.38 | 13.62 | 6.36 | 1.92 | 0.96 | CC-(1) |
| 9 | 1.38 | 13.62 | 6.36 | 1.92 | 0.96 | CC-(1) |
| 10 | 1.38 | 13.62 | 6.77 | 2.11 | 0.96 | CC-(1) |
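The table lists current and arc voltage sampled every 10 ms at fixed settings. A typical first processing step for such signals is to summarize them by their mean and RMS values and by the mean instantaneous power. The sketch below does this in Python. It assumes the two signals are sampled synchronously. It is not the authors' LabVIEW/MATLAB processing, and the function and key names are hypothetical.

```python
from math import sqrt
from statistics import fmean


def arc_signal_summary(current_a, voltage_v):
    """Mean and RMS of synchronously sampled arc current/voltage, plus mean power."""
    if not current_a or len(current_a) != len(voltage_v):
        raise ValueError("current and voltage must be non-empty and of equal length")
    return {
        "mean_current_A": fmean(current_a),
        "mean_voltage_V": fmean(voltage_v),
        "rms_current_A": sqrt(fmean(i * i for i in current_a)),
        "rms_voltage_V": sqrt(fmean(u * u for u in voltage_v)),
        # Average of the instantaneous product U*I; with fluctuating signals this
        # differs from mean_voltage_V * mean_current_A.
        "mean_power_W": fmean(u * i for u, i in zip(voltage_v, current_a)),
    }


# The ten samples (10 ms apart) from the NiMo-wire table above.
current = [264.23, 337.85, 96.65, 621.00, 135.64, 74.41, 76.62, 270.31, 329.99, 104.85]
voltage = [22.44, 5.40, 17.37, 10.66, 22.26, 17.60, 16.45, 24.67, 5.80, 18.08]
summary = arc_signal_summary(current, voltage)
print(round(summary["mean_current_A"], 3), round(summary["mean_voltage_V"], 3))
```

For these strongly fluctuating samples, the mean of U·I is several hundred watts lower than the product of the mean voltage and mean current. The average power is therefore computed from the instantaneous product.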

| Focal Length f (mm) | Image Center (Cx, Cy) (pixels) | Scale Factors (sx, sy) (pixels/mm) | Radial Distortion Coefficient k |
|---|---|---|---|
| 9.43773 | (738, 585) | (227.27, 227.27) | 9.4604 × 10⁻⁹ |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Muñoz Chávez, J.J.; Lira, M.N.d.S.; Pizo, G.A.I.; Payão Filho, J.d.C.; Alfaro, S.C.A.; Motta, J.M.S.T.d. Development of a Low-Cost Infrared Imaging System for Real-Time Analysis and Machine Learning-Based Monitoring of GMAW. Sensors 2025, 25, 6858. https://doi.org/10.3390/s25226858
