Applications of Pose Estimation in Human Health and Performance across the Lifespan

Abstract

1. Introduction

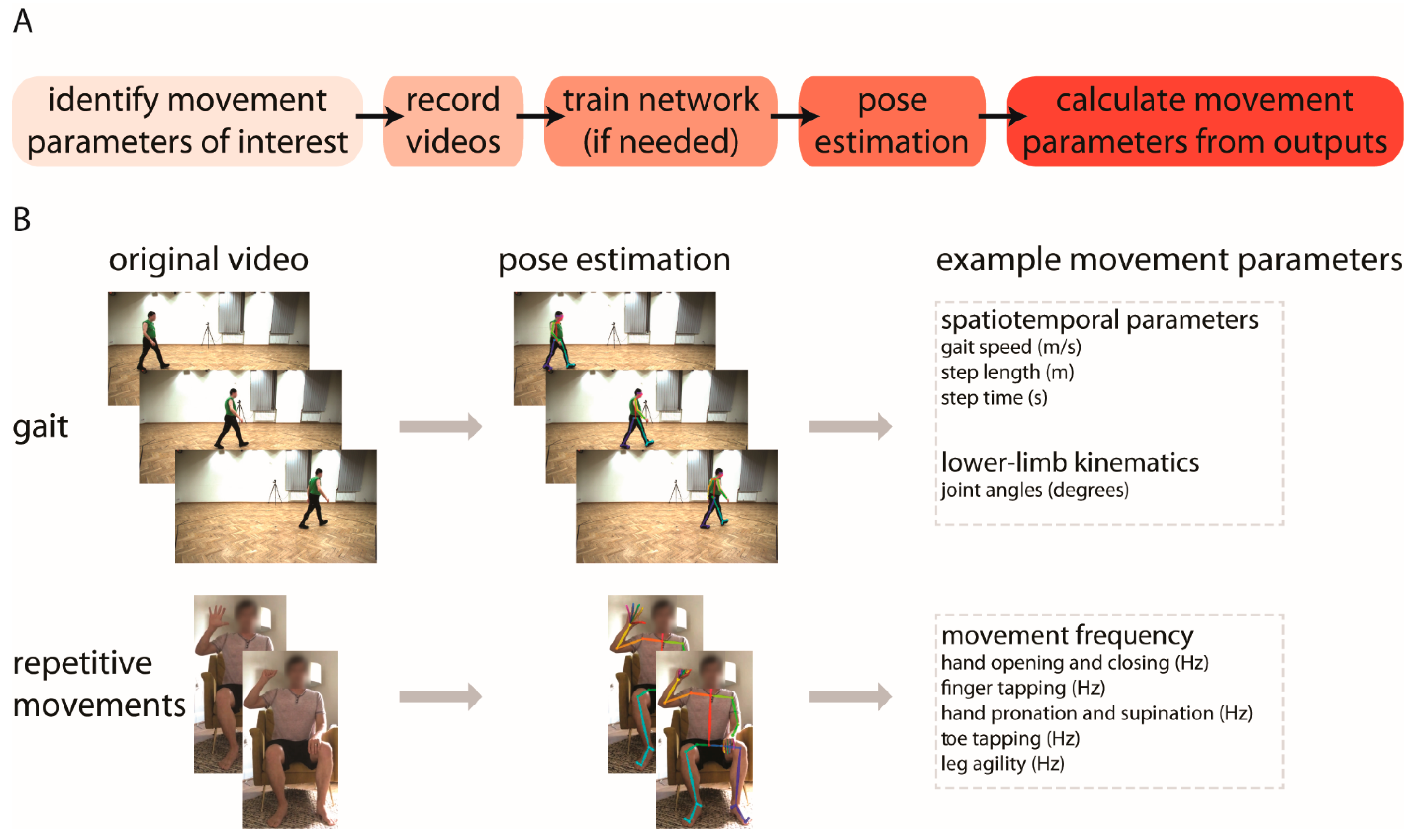

2. What Is Pose Estimation?

3. What Tools Are Available?

4. How Can These Tools Be Used to Improve Human Health and Performance?

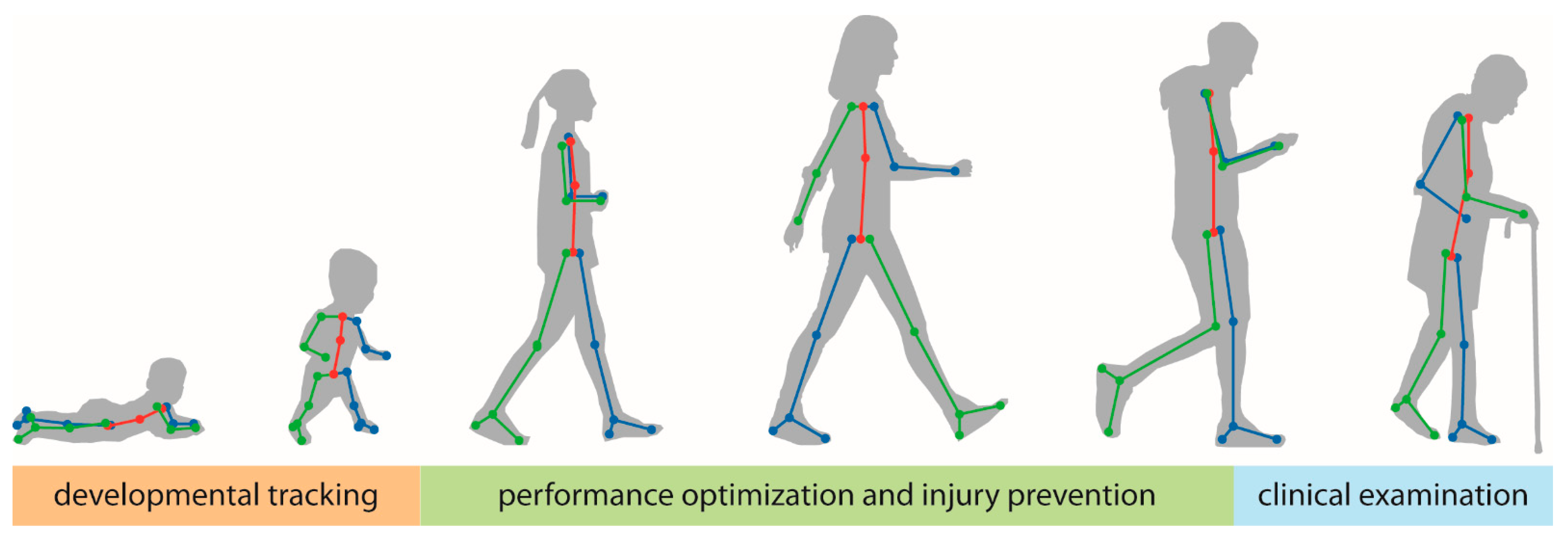

4.1. Tracking General Motor Development

4.2. Clinical Use in Pediatric Populations

4.3. Human Performance Optimization, Injury Prevention, and Safety

4.4. Clinical Motor Assessment in Adult Neurologic Conditions

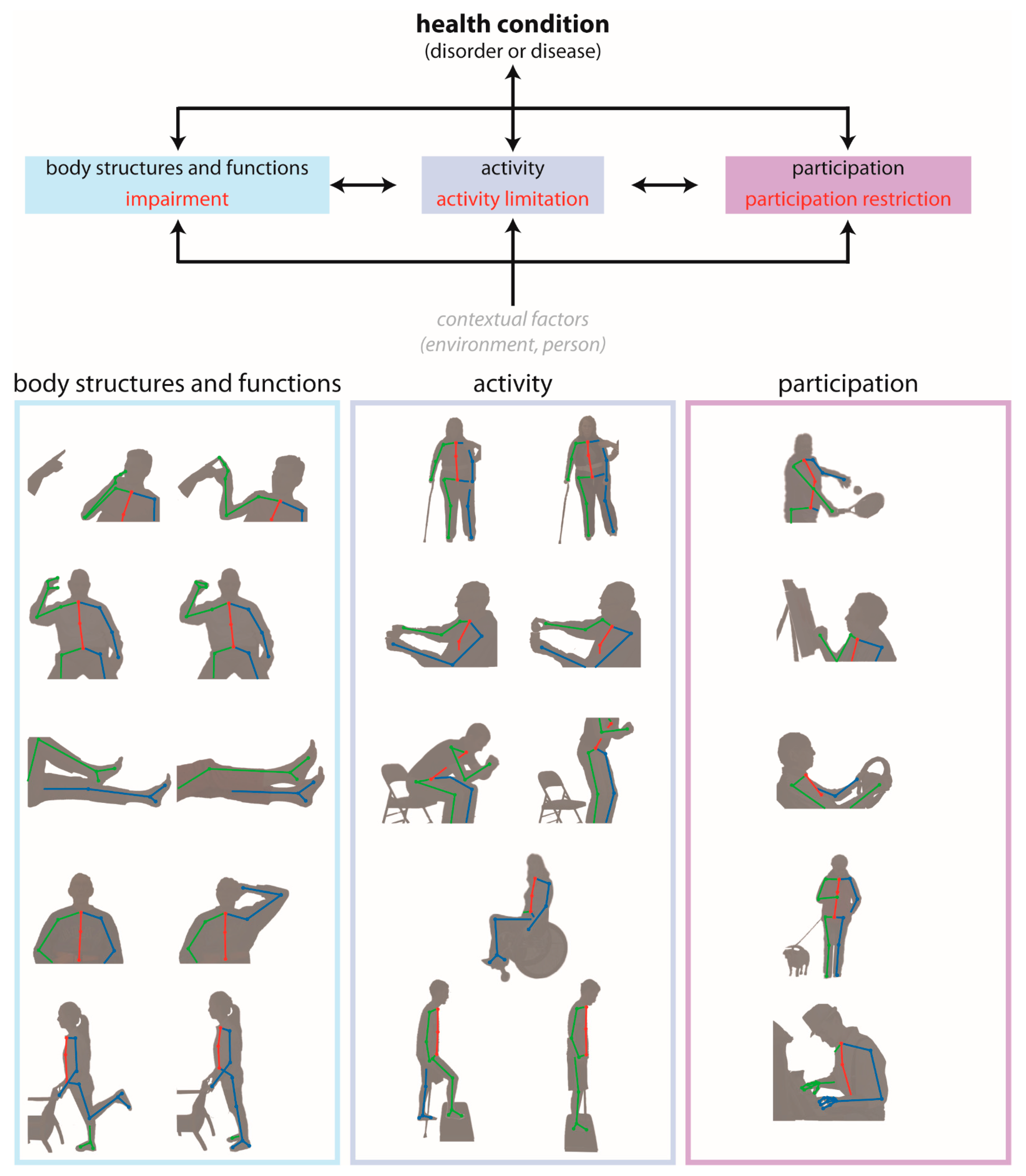

- Body structures and functions are anatomical parts of the body and physiological functions of the body systems, respectively. The term impairment refers to problems in body structure or function.

- Activity is the execution of a task or action by an individual. The term activity limitation describes difficulties with completion of an activity.

- Participation is involvement in a life situation. Participation restrictions are problems that an individual encounters during participation in real-world situations.

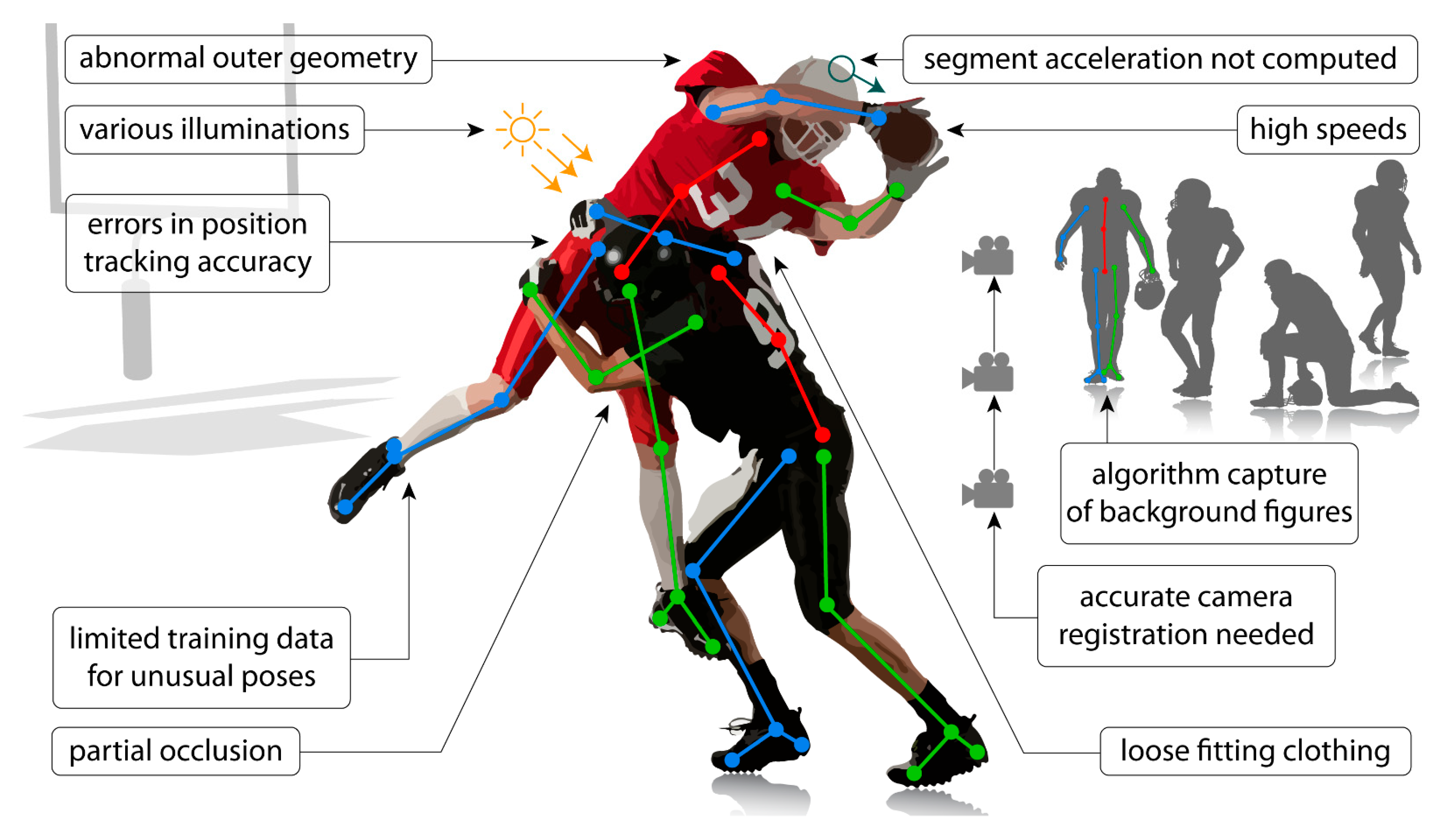

5. What Are the Limitations of Pose Estimation?

5.1. Application Limitations

- Occlusions: these occur when one or more of the anatomical locations to be tracked are not visible. The occlusion may be caused by other body segments, by other people in the frame, or by inanimate objects (e.g., assistive devices such as canes, walkers, crutches, orthoses, and robotics; clinical objects such as beds, hospital gowns, and medical devices; sporting equipment such as helmets, balls, bats, and sticks).

- Limited training data: networks trained on sets of images that lack diversity (e.g., in clothing, poses, illumination, viewpoints, or the unusual postures associated with clinical conditions) may not perform well when the videos to be analyzed differ substantially from those in the training set. Techniques that rely on a training dataset may therefore require a new dataset when the movements or images of a patient population differ substantially from those already included (e.g., abnormal hand postures after stroke). This is particularly important given that most training datasets are biased toward healthy movement patterns.

- Capture errors: pose estimation algorithms may identify and track unwanted human or human-like figures in the field of view (e.g., people in the background, images on posters or artwork).

- Positional errors: tracking may be difficult when conditions introduce uncertainty into the positions of anatomical locations within the image (e.g., when the person is wearing a dress, hospital gown, athletic uniform, or padding). This may also occur when attempting to track a movement from a suboptimal viewpoint (e.g., measuring knee flexion from a frontal view).

- Limitations of recording devices: devices with low sampling rates (e.g., common video recording devices often sample at approximately 30 Hz) may be unable to accurately capture the kinematics of movements that occur at high speeds or high frequencies. The aperture and shutter speed of recording devices can also affect image quality and introduce blurring, which can degrade the quality of the tracking achieved through pose estimation.
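The recording-device limitation in the list above can be made concrete with some simple arithmetic. The following is a minimal sketch, not a validated tool: the function names, the 6 m/s landmark speed, and the 1/60 s exposure time are hypothetical values chosen for illustration, and only the approximately 30 Hz frame rate comes from the text. It applies the Nyquist criterion (a movement component can only be recovered if the sampling rate exceeds twice its frequency) and estimates how far a landmark travels between frames and during a single exposure.

```python
def nyquist_limit_hz(frame_rate_hz: float) -> float:
    """Highest movement frequency (Hz) that can be sampled without aliasing."""
    if frame_rate_hz <= 0:
        raise ValueError("frame_rate_hz must be positive")
    return frame_rate_hz / 2.0


def displacement_per_frame_m(speed_m_per_s: float, frame_rate_hz: float) -> float:
    """Distance (m) a landmark moving at constant speed covers between two frames."""
    if frame_rate_hz <= 0:
        raise ValueError("frame_rate_hz must be positive")
    return speed_m_per_s / frame_rate_hz


def blur_length_m(speed_m_per_s: float, exposure_s: float) -> float:
    """Approximate length (m) of the motion-blur streak during one exposure."""
    if exposure_s < 0:
        raise ValueError("exposure_s must be non-negative")
    return speed_m_per_s * exposure_s


if __name__ == "__main__":
    frame_rate = 30.0      # Hz, typical of common video devices (from the text)
    speed = 6.0            # m/s, hypothetical speed of a fast-moving landmark
    exposure = 1.0 / 60.0  # s, hypothetical shutter (exposure) time

    print("Nyquist limit (Hz):", nyquist_limit_hz(frame_rate))
    print("Travel between frames (m):", displacement_per_frame_m(speed, frame_rate))
    print("Blur streak length (m):", blur_length_m(speed, exposure))
```

Under these assumed values, a 30 Hz recording cannot represent movement components above 15 Hz, and the landmark moves roughly 0.2 m between frames, which illustrates why fast movements may call for higher frame rates and shorter exposure times.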

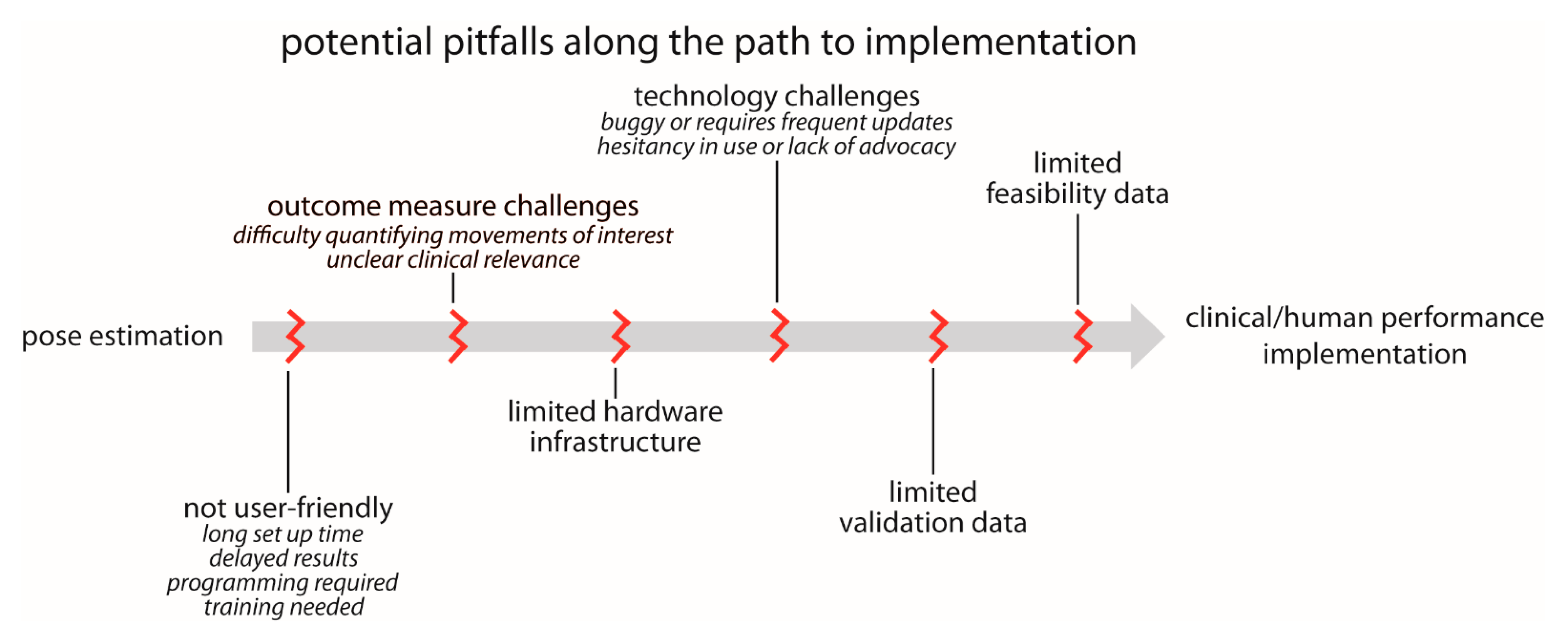

5.2. Barriers to Implementation

- User-friendliness: we currently lack plug-and-play options for pose estimation. While we certainly understand and acknowledge the many reasons for this, pose estimation is unlikely to be used widely, particularly in clinical settings, until user-friendliness improves. We outline several relevant components of user-friendliness below:

- Setup time: in our experience, many users want point-and-click capability. They want to be able to carry a recording device in their pocket, use it to record a quick video of their patient or research participant when needed, and ultimately obtain meaningful information about movement kinematics. Alternatively, they want a reserved space where a recording device could be permanently mounted and easily started and stopped (e.g., a tablet mounted to a wall). Any configuration that requires multi-camera calibration or prolonged setup time is unlikely to be adopted for widespread clinical use.

- Delayed results: many users want results in near real-time. There is a need for fast, automated approaches that immediately process the pose estimation outputs, calculate relevant movement parameters, and return interpretable data.

- Programming and training requirements: some existing pose estimation options are very easy to download, install, and use for users with basic technical expertise. However, even these can remain prohibitively daunting for clinicians and researchers without technical backgrounds. Technologies that require any amount of programming or significant training are unlikely to reach widespread use in clinical settings.

- Outcome measure challenges: in some cases, users want to use movement data to improve clinical or performance-related decision-making, but it is not immediately clear which parameters of the movement will lead to improved outcomes (e.g., a user may express interest in measuring “walking” but not be sure which specific gait parameters are most relevant to their research study or clinical intervention). In these cases, there is a desire to collect kinematic data, but how these data should be used is not well defined. Similarly, clinical assessments need a clear link to relevant clinical and translational outcomes, and the users should have input as to which output metrics are important.

- Limited hardware infrastructure: as described above, some applications of pose estimation for human movement tracking require significant computational power. Some clinical and research settings are unlikely to have access to the hardware (e.g., GPUs) needed to execute their desired applications in a timely manner.

- Technology challenges: many technologies that promise potential for clinical or human performance impact are made available before they are fully developed. This can lead to buggy software and frequent updating, which harms trust and credibility among users. This can, in turn, exacerbate the hesitancy to adopt new technologies that exists in some clinical and research communities, especially toward artificial intelligence technologies (such as pose estimation) that are purported to supplement or even replace expert human assessment.

- Lack of validation and feasibility data: there is a need for large-scale studies to validate pose estimation outputs against ground truth measures in a wide range of different populations. This may be accomplished in a variety of ways, including (but not limited to) comparisons with three-dimensional motion capture, wearable devices with proven accuracy, expert clinical ratings and/or assessments, or even possibly other pose estimation algorithms. The error (relative to the ground truth measurement) that is deemed acceptable is likely to depend on the use case and the metrics being used. In our experience, users who study very specific movements of joints or other anatomical landmarks (e.g., biomechanics or motor control researchers) are likely to seek greater accuracy than, for example, a clinician who may wish to incorporate a video-based assessment of walking speed as part of a larger clinical examination. It may be desirable to begin to develop field-specific accuracy standards for some applications.
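The validation item above describes comparing pose estimation outputs against a ground truth measurement and judging the error against a use-case-specific tolerance. The sketch below shows one way such a comparison might be computed. It is an illustration under stated assumptions: the function names, the example knee angles, and the two tolerance values are hypothetical, and mean absolute error and root-mean-square error are common choices rather than metrics prescribed by the text.

```python
import math
from typing import Dict, Sequence


def agreement_metrics(estimated: Sequence[float],
                      reference: Sequence[float]) -> Dict[str, float]:
    """Compare paired measurements from pose estimation and a ground truth system.

    Returns the bias (mean signed error), the mean absolute error (MAE),
    and the root-mean-square error (RMSE), in the units of the inputs.
    """
    if len(estimated) != len(reference) or len(estimated) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [e - r for e, r in zip(estimated, reference)]
    n = len(errors)
    return {
        "bias": sum(errors) / n,
        "mae": sum(abs(x) for x in errors) / n,
        "rmse": math.sqrt(sum(x * x for x in errors) / n),
    }


def within_tolerance(metrics: Dict[str, float], max_mae: float) -> bool:
    """Check the MAE against an acceptable error chosen for the use case."""
    return metrics["mae"] <= max_mae


if __name__ == "__main__":
    # Hypothetical peak knee flexion angles (degrees) for four trials.
    video_angles = [10.0, 22.0, 35.0, 41.0]   # from pose estimation
    mocap_angles = [12.0, 20.0, 33.0, 45.0]   # from 3D motion capture

    metrics = agreement_metrics(video_angles, mocap_angles)
    print(metrics)
    # Hypothetical tolerances: looser for a clinical screen, tighter for biomechanics.
    print("Acceptable for clinical screen:", within_tolerance(metrics, max_mae=5.0))
    print("Acceptable for biomechanics study:", within_tolerance(metrics, max_mae=2.0))
```

Keeping the tolerance as an explicit parameter reflects the point made above: the same error may be acceptable for a clinician estimating walking speed and unacceptable for a researcher studying specific joint movements.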

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Mündermann, L.; Corazza, S.; Andriacchi, T.P. The Evolution of Methods for the Capture of Human Movement Leading to Markerless Motion Capture for Biomechanical Applications. J. NeuroEng. Rehabil. 2006, 3, 6. [Google Scholar] [CrossRef] [Green Version]

- Baker, R. The History of Gait Analysis before the Advent of Modern Computers. Gait Posture 2007, 26, 23–28. [Google Scholar] [CrossRef] [PubMed]

- Roether, C.L.; Omlor, L.; Christensen, A.; Giese, M.A. Critical Features for the Perception of Emotion from Gait. J. Vis. 2009, 9, 1–32. [Google Scholar] [CrossRef] [PubMed]

- Michalak, J.; Troje, N.F.; Fischer, J.; Vollmar, P.; Heidenreich, T.; Schulte, D. Embodiment of Sadness and Depression-Gait Patterns Associated with Dysphoric Mood. Psychosom. Med. 2009, 71, 580–587. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Kendon, A. Movement Coordination in Social Interaction: Some Examples Described. Acta Psychol. 1970, 32, 101–125. [Google Scholar] [CrossRef]

- Martinez, G.H.; Raaj, Y.; Idrees, H.; Xiang, D.; Joo, H.; Simon, T.; Sheikh, Y. Single-Network Whole-Body Pose Estimation. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 6982–6991. [Google Scholar] [CrossRef] [Green Version]

- Insafutdinov, E.; Andriluka, M.; Pishchulin, L.; Tang, S.; Levinkov, E.; Andres, B.; Schiele, B. ArtTrack: Articulated Multi-Person Tracking in the Wild. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6457–6465. [Google Scholar] [CrossRef] [Green Version]

- Insafutdinov, E.; Pishchulin, L.; Andres, B.; Andriluka, M.; Schiele, B. Deepercut: A Deeper, Stronger, and Faster Multi-Person Pose Estimation Model. In Computer Vision—ECCV 2016; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer International Publishing: Cham, Switzerland, 2016; pp. 34–50. [Google Scholar] [CrossRef] [Green Version]

- Pishchulin, L.; Insafutdinov, E.; Tang, S.; Andres, B.; Andriluka, M.; Gehler, P.; Schiele, B. DeepCut: Joint Subset Partition and Labeling for Multi Person Pose Estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4929–4937. [Google Scholar] [CrossRef] [Green Version]

- Toshev, A.; Szegedy, C. DeepPose: Human Pose Estimation via Deep Neural Networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1653–1660. [Google Scholar] [CrossRef] [Green Version]

- Nath, T.; Mathis, A.; Chen, A.C.; Patel, A.; Bethge, M.; Mathis, M.W. Using DeepLabCut for 3D Markerless Pose Estimation across Species and Behaviors. Nat. Protoc. 2019, 14, 2152–2176. [Google Scholar] [CrossRef] [PubMed]

- Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless Pose Estimation of User-Defined Body Parts with Deep Learning. Nat. Neurosci. 2018, 21, 1281–1289. [Google Scholar] [CrossRef] [PubMed]

- Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1302–1310. [Google Scholar] [CrossRef] [Green Version]

- Cornman, H.L.; Stenum, J.; Roemmich, R.T. Video-Based Quantification of Human Movement Frequency Using Pose Estimation. bioRxiv 2021. [Google Scholar] [CrossRef]

- Stenum, J.; Rossi, C.; Roemmich, R.T. Two-Dimensional Video-Based Analysis of Human Gait Using Pose Estimation. PLoS Comput. Biol. 2021, 17, e1008935. [Google Scholar] [CrossRef]

- Kwolek, B.; Michalczuk, A.; Krzeszowski, T.; Switonski, A.; Josinski, H.; Wojciechowski, K. Calibrated and Synchronized Multi-View Video and Motion Capture Dataset for Evaluation of Gait Recognition. Multimed. Tools Appl. 2019, 78, 32437–32465. [Google Scholar] [CrossRef] [Green Version]

- Wang, L.; Tan, T.; Ning, H.; Hu, W. Silhouette Analysis-Based Gait Recognition for Human Identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1505–1518. [Google Scholar] [CrossRef] [Green Version]

- Holte, M.B.; Cuong, T.; Trivedi, M.M.; Moeslund, T.B. Human Pose Estimation and Activity Recognition from Multi-View Videos: Comparative Explorations of Recent Developments. IEEE J. Sel. Top. Signal Process. 2012, 6, 538–552. [Google Scholar] [CrossRef]

- Isaacs, J.; Foo, S. Hand Pose Estimation for American Sign Language Recognition. In Proceedings of the Thirty-Sixth Southeastern Symposium on System Theory, Atlanta, GA, USA, 16 March 2004; pp. 132–136. [Google Scholar] [CrossRef]

- Cronin, N.J. Using Deep Neural Networks for Kinematic Analysis: Challenges and Opportunities. J. Biomech. 2021, 123, 110460. [Google Scholar] [CrossRef] [PubMed]

- Seethapathi, N.; Wang, S.; Saluja, R.; Blohm, G.; Kording, K.P. Movement Science Needs Different Pose Tracking Algorithms. arXiv 2019, arXiv:1907.10226. [Google Scholar]

- Arac, A. Machine Learning for 3D Kinematic Analysis of Movements in Neurorehabilitation. Curr. Neurol. Neurosci. Rep. 2020, 20, 29. [Google Scholar] [CrossRef]

- Andriluka, M.; Pishchulin, L.; Gehler, P.; Schiele, B. 2D Human Pose Estimation: New Benchmark and State of the Art Analysis. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3683–3693. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer International Publishing: Cham, Switzerland, 2014; pp. 740–755. [Google Scholar] [CrossRef] [Green Version]

- Sato, K.; Nagashima, Y.; Mano, T.; Iwata, A.; Toda, T. Quantifying Normal and Parkinsonian Gait Features from Home Movies: Practical Application of a Deep Learning–Based 2D Pose Estimator. PLoS ONE 2019, 14, e0223549. [Google Scholar] [CrossRef] [Green Version]

- Chambers, C.; Kong, G.; Wei, K.; Kording, K. Pose Estimates from Online Videos Show That Side-by-Side Walkers Synchronize Movement under Naturalistic Conditions. PLoS ONE 2019, 14, e0217861. [Google Scholar] [CrossRef]

- Cronin, N.J.; Rantalainen, T.; Ahtiainen, J.P.; Hynynen, E.; Waller, B. Markerless 2D Kinematic Analysis of Underwater Running: A Deep Learning Approach. J. Biomech. 2019, 87, 75–82. [Google Scholar] [CrossRef]

- Ota, M.; Tateuchi, H.; Hashiguchi, T.; Kato, T.; Ogino, Y.; Yamagata, M.; Ichihashi, N. Verification of Reliability and Validity of Motion Analysis Systems during Bilateral Squat Using Human Pose Tracking Algorithm. Gait Posture 2020, 80, 62–67. [Google Scholar] [CrossRef]

- Nakano, N.; Sakura, T.; Ueda, K.; Omura, L.; Kimura, A.; Iino, Y.; Fukashiro, S.; Yoshioka, S. Evaluation of 3D Markerless Motion Capture Accuracy Using OpenPose With Multiple Video Cameras. Front. Sports Act. Living 2020, 2, 50. [Google Scholar] [CrossRef]

- Zago, M.; Luzzago, M.; Marangoni, T.; De Cecco, M.; Tarabini, M.; Galli, M. 3D Tracking of Human Motion Using Visual Skeletonization and Stereoscopic Vision. Front. Bioeng. Biotechnol. 2020, 8, 181. [Google Scholar] [CrossRef] [PubMed]

- D’Antonio, E.; Taborri, J.; Palermo, E.; Rossi, S.; Patanè, F. A Markerless System for Gait Analysis Based on OpenPose Library. In Proceedings of the 2020 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Dubrovnik, Croatia, 25–28 May 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Boswell, M.A.; Uhlrich, S.D.; Kidziński, Ł.; Thomas, K.; Kolesar, J.A.; Gold, G.E.; Beaupre, G.S.; Delp, S.L. A Neural Network to Predict the Knee Adduction Moment in Patients with Osteoarthritis Using Anatomical Landmarks Obtainable from 2D Video Analysis. Osteoarthr. Cartil. 2021, 29, 346–356. [Google Scholar] [CrossRef] [PubMed]

- Lu, M.; Poston, K.; Pfefferbaum, A.; Sullivan, E.V.; Fei-Fei, L.; Pohl, K.M.; Niebles, J.C.; Adeli, E. Vision-Based Estimation of MDS-UPDRS Gait Scores for Assessing Parkinson’s Disease Motor Severity. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2020; Springer International Publishing: Cham, Switzerland, 2020; Volume 12263, pp. 637–647. [Google Scholar] [CrossRef]

- Kidziński, Ł.; Yang, B.; Hicks, J.L.; Rajagopal, A.; Delp, S.L.; Schwartz, M.H. Deep Neural Networks Enable Quantitative Movement Analysis Using Single-Camera Videos. Nat. Commun. 2020, 11, 4054. [Google Scholar] [CrossRef] [PubMed]

- Ota, M.; Tateuchi, H.; Hashiguchi, T.; Ichihashi, N. Verification of Validity of Gait Analysis Systems during Treadmill Walking and Running Using Human Pose Tracking Algorithm. Gait Posture 2021, 85, 290–297. [Google Scholar] [CrossRef]

- Ossmy, O.; Adolph, K.E. Real-Time Assembly of Coordination Patterns in Human Infants. Curr. Biol. 2020, 30, 4553–4562. [Google Scholar] [CrossRef] [PubMed]

- Chambers, C.; Seethapathi, N.; Saluja, R.; Loeb, H.; Pierce, S.R.; Bogen, D.K.; Prosser, L.; Johnson, M.J.; Kording, K.P. Computer Vision to Automatically Assess Infant Neuromotor Risk. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2431–2442. [Google Scholar] [CrossRef]

- Fang, H.; Xie, S.; Lu, C. RMPE: Regional Multi-Person Pose Estimation. arXiv 2018, arXiv:1612.001737. [Google Scholar]

- Cao, Z.; Hidalgo Martinez, G.; Simon, T.; Wei, S.-E.; Sheikh, Y.A. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186. [Google Scholar] [CrossRef] [Green Version]

- Viswakumar, A.; Rajagopalan, V.; Ray, T.; Parimi, C. Human Gait Analysis Using OpenPose. In Proceedings of the IEEE International Conference Image Information Processing, Shimla, India, 15–17 November 2019; pp. 310–314. [Google Scholar] [CrossRef]

- Ye, Q.; Yuan, S.; Kim, T.-K. Spatial Attention Deep Net with Partial PSO for Hierarchical Hybrid Hand Pose Estimation. arXiv 2016, arXiv:1604.03334. [Google Scholar]

- Ivekovic, S.; Trucco, E. Human Body Pose Estimation with PSO. In Proceedings of the 2006 IEEE International Conference on Evolutionary Computation, Vancouver, BC, Canada, 16–21 July 2006; pp. 1256–1263. [Google Scholar] [CrossRef]

- Lee, K.-Z.; Liu, T.-W.; Ho, S.-Y. Model-Based Pose Estimation of Human Motion Using Orthogonal Simulated Annealing. In Intelligent Data Engineering and Automated Learning; Springer: Berlin/Heidelberg, Germany, 2003. [Google Scholar] [CrossRef]

- Halvorsen, K.; Söderström, T.; Stokes, V.; Lanshammar, H. Using an Extended Kalman Filter for Rigid Body Pose Estimation. J. Biomech. Eng. 2004, 127, 475–483. [Google Scholar] [CrossRef] [PubMed]

- Janabi-Sharifi, F.; Marey, M. A Kalman-Filter-Based Method for Pose Estimation in Visual Servoing. IEEE Trans. Robot. 2010, 26, 939–947. [Google Scholar] [CrossRef]

- Fanning, P.A.J.; Sparaci, L.; Dissanayake, C.; Hocking, D.R.; Vivanti, G. Functional Play in Young Children with Autism and Williams Syndrome: A Cross-Syndrome Comparison. Child Neuropsychol. J. Norm. Abnorm. Dev. Child. Adolesc. 2021, 27, 125–149. [Google Scholar] [CrossRef]

- Kretch, K.S.; Franchak, J.M.; Adolph, K.E. Crawling and Walking Infants See the World Differently. Child Dev. 2014, 85, 1503–1518. [Google Scholar] [CrossRef] [PubMed]

- LeBarton, E.S.; Iverson, J.M. Fine Motor Skill Predicts Expressive Language in Infant Siblings of Children with Autism. Dev. Sci. 2013, 16, 815–827. [Google Scholar] [CrossRef] [Green Version]

- Masek, L.R.; Paterson, S.J.; Golinkoff, R.M.; Bakeman, R.; Adamson, L.B.; Owen, M.T.; Pace, A.; Hirsh-Pasek, K. Beyond Talk: Contributions of Quantity and Quality of Communication to Language Success across Socioeconomic Strata. Infancy Off. J. Int. Soc. Infant Stud. 2021, 26, 123–147. [Google Scholar] [CrossRef]

- Le, H.; Hoch, J.E.; Ossmy, O.; Adolph, K.E.; Fern, X.; Fern, A. Modeling Infant Free Play Using Hidden Markov Models. In Proceedings of the 2021 IEEE International Conference on Development and Learning (ICDL), Beijing, China, 23–26 August 2021; pp. 1–6. [Google Scholar] [CrossRef]

- Doroniewicz, I.; Ledwoń, D.J.; Affanasowicz, A.; Kieszczyńska, K.; Latos, D.; Matyja, M.; Mitas, A.W.; Myśliwiec, A. Writhing Movement Detection in Newborns on the Second and Third Day of Life Using Pose-Based Feature Machine Learning Classification. Sensors 2020, 20, 5986. [Google Scholar] [CrossRef] [PubMed]

- Iverson, J.M.; Shic, F.; Wall, C.A.; Chawarska, K.; Curtin, S.; Estes, A.; Gardner, J.M.; Hutman, T.; Landa, R.J.; Levin, A.R. Early Motor Abilities in Infants at Heightened versus Low Risk for ASD: A Baby Siblings Research Consortium (BSRC) Study. J. Abnorm. Psychol. 2019, 128, 69. [Google Scholar] [CrossRef]

- Iverson, J.M. Developing Language in a Developing Body: The Relationship between Motor Development and Language Development. J. Child Lang. 2010, 37, 229–261. [Google Scholar] [CrossRef]

- Alcock, K.J.; Krawczyk, K. Individual Differences in Language Development: Relationship with Motor Skill at 21 Months. Dev. Sci. 2010, 13, 677–691. [Google Scholar] [CrossRef]

- Adolph, K.E.; Hoch, J.E. Motor Development: Embodied, Embedded, Enculturated, and Enabling. Annu. Rev. Psychol. 2019, 70, 141–164. [Google Scholar] [CrossRef] [PubMed]

- Rosenbaum, P.; Paneth, N.; Leviton, A.; Goldstein, M.; Bax, M.; Damiano, D.; Dan, B.; Jacobsson, B. A Report: The Definition and Classification of Cerebral Palsy April 2006. Dev. Med. Child Neurol. Suppl. 2007, 109, 8–14. [Google Scholar] [PubMed]

- Novak, I.; Morgan, C.; Adde, L.; Blackman, J.; Boyd, R.N.; Brunstrom-Hernandez, J.; Cioni, G.; Damiano, D.; Darrah, J.; Eliasson, A.-C. Early, Accurate Diagnosis and Early Intervention in Cerebral Palsy: Advances in Diagnosis and Treatment. JAMA Pediatr. 2017, 171, 897–907. [Google Scholar] [CrossRef] [PubMed]

- Geethanath, S.; Vaughan, J.T.J. Accessible Magnetic Resonance Imaging: A Review. J. Magn. Reson. Imaging JMRI 2019, 49, e65–e77. [Google Scholar] [CrossRef] [PubMed]

- Adde, L.; Helbostad, J.L.; Jensenius, A.R.; Taraldsen, G.; Grunewaldt, K.H.; Støen, R. Early Prediction of Cerebral Palsy by Computer-based Video Analysis of General Movements: A Feasibility Study. Dev. Med. Child Neurol. 2010, 52, 773–778. [Google Scholar] [CrossRef]

- Rahmati, H.; Aamo, O.M.; Stavdahl, Ø.; Dragon, R.; Adde, L. Video-Based Early Cerebral Palsy Prediction Using Motion Segmentation. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2014, 3779–3783. [Google Scholar] [CrossRef]

- Ihlen, E.A.; Støen, R.; Boswell, L.; de Regnier, R.-A.; Fjørtoft, T.; Gaebler-Spira, D.; Labori, C.; Loennecken, M.C.; Msall, M.E.; Möinichen, U.I. Machine Learning of Infant Spontaneous Movements for the Early Prediction of Cerebral Palsy: A Multi-Site Cohort Study. J. Clin. Med. 2020, 9, 5. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Chawarska, K.; Klin, A.; Paul, R.; Volkmar, F. Autism Spectrum Disorder in the Second Year: Stability and Change in Syndrome Expression. J. Child Psychol. Psychiatry 2007, 48, 128–138. [Google Scholar] [CrossRef] [PubMed]

- Landa, R.; Garrett-Mayer, E. Development in Infants with Autism Spectrum Disorders: A Prospective Study. J. Child Psychol. Psychiatry 2006, 47, 629–638. [Google Scholar] [CrossRef]

- Maenner, M.J.; Shaw, K.A.; Baio, J. Prevalence of Autism Spectrum Disorder among Children Aged 8 Years—Autism and Developmental Disabilities Monitoring Network, 11 Sites, United States, 2016. MMWR Surveill. Summ. 2020, 69, 1–12. [Google Scholar] [CrossRef]

- Gordon-Lipkin, E.; Foster, J.; Peacock, G. Whittling down the Wait Time: Exploring Models to Minimize the Delay from Initial Concern to Diagnosis and Treatment of Autism Spectrum Disorder. Pediatr. Clin. 2016, 63, 851–859. [Google Scholar] [CrossRef] [Green Version]

- Ning, M.; Daniels, J.; Schwartz, J.; Dunlap, K.; Washington, P.; Kalantarian, H.; Du, M.; Wall, D.P. Identification and Quantification of Gaps in Access to Autism Resources in the United States: An Infodemiological Study. J. Med. Internet Res. 2019, 21, e13094. [Google Scholar] [CrossRef] [PubMed]

- Brian, J.A.; Smith, I.M.; Zwaigenbaum, L.; Bryson, S.E. Cross-site Randomized Control Trial of the Social ABCs Caregiver-mediated Intervention for Toddlers with Autism Spectrum Disorder. Autism Res. 2017, 10, 1700–1711. [Google Scholar] [CrossRef]

- Dawson, G.; Rogers, S.; Munson, J.; Smith, M.; Winter, J.; Greenson, J.; Donaldson, A.; Varley, J. Randomized, Controlled Trial of an Intervention for Toddlers with Autism: The Early Start Denver Model. Pediatrics 2010, 125, e17–e23. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Landa, R.J.; Holman, K.C.; O’Neill, A.H.; Stuart, E.A. Intervention Targeting Development of Socially Synchronous Engagement in Toddlers with Autism Spectrum Disorder: A Randomized Controlled Trial. J. Child Psychol. Psychiatry 2011, 52, 13–21. [Google Scholar] [CrossRef] [Green Version]

- Crippa, A.; Salvatore, C.; Perego, P.; Forti, S.; Nobile, M.; Molteni, M.; Castiglioni, I. Use of Machine Learning to Identify Children with Autism and Their Motor Abnormalities. J. Autism Dev. Disord. 2015, 45, 2146–2156. [Google Scholar] [CrossRef]

- Karatsidis, A.; Richards, R.E.; Konrath, J.M.; van den Noort, J.C.; Schepers, H.M.; Bellusci, G.; Harlaar, J.; Veltink, P.H. Validation of Wearable Visual Feedback for Retraining Foot Progression Angle Using Inertial Sensors and an Augmented Reality Headset. J. NeuroEng. Rehabil. 2018, 15, 78. [Google Scholar] [CrossRef] [PubMed]

- Guo, Y.; Deligianni, F.; Gu, X.; Yang, G.-Z. 3-D Canonical Pose Estimation and Abnormal Gait Recognition with a Single RGB-D Camera. IEEE Robot. Autom. Lett. 2019, 4, 3617–3624. [Google Scholar] [CrossRef] [Green Version]

- Kondragunta, J.; Hirtz, G. Gait Parameter Estimation of Elderly People Using 3D Human Pose Estimation in Early Detection of Dementia. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2020, 5798–5801. [Google Scholar] [CrossRef]

- Chaaraoui, A.A.; Padilla-López, J.R.; Flórez-Revuelta, F. Abnormal Gait Detection with RGB-D Devices Using Joint Motion History Features. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; pp. 1–6. [Google Scholar] [CrossRef]

- Li, G.; Liu, T.; Yi, J. Wearable Sensor System for Detecting Gait Parameters of Abnormal Gaits: A Feasibility Study. IEEE Sens. J. 2018, 18, 4234–4241. [Google Scholar] [CrossRef]

- Chen, Y.; Du, R.; Luo, K.; Xiao, Y. Fall Detection System Based on Real-Time Pose Estimation and SVM. In Proceedings of the 2021 IEEE 2nd International Conference on Big Data, Artificial Intelligence and Internet of Things Engineering (ICBAIE), Nanchang, China, 26–28 March 2021; pp. 990–993. [Google Scholar] [CrossRef]

- Bian, Z.; Hou, J.; Chau, L.; Magnenat-Thalmann, N. Fall Detection Based on Body Part Tracking Using a Depth Camera. IEEE J. Biomed. Health Inform. 2015, 19, 430–439. [Google Scholar] [CrossRef]

- Huang, Z.; Liu, Y.; Fang, Y.; Horn, B.K.P. Video-Based Fall Detection for Seniors with Human Pose Estimation. In Proceedings of the 2018 4th International Conference on Universal Village (UV), Boston, MA, USA, 21–24 October 2018; pp. 1–4. [Google Scholar] [CrossRef]

- Mehrizi, R.; Peng, X.; Tang, Z.; Xu, X.; Metaxas, D.; Li, K. Toward Marker-Free 3D Pose Estimation in Lifting: A Deep Multi-View Solution. arXiv 2018, arXiv:1802.01741. [Google Scholar]

- Han, S.; Lee, S. A Vision-Based Motion Capture and Recognition Framework for Behavior-Based Safety Management. Autom. Constr. 2013, 35, 131–141. [Google Scholar] [CrossRef]

- Han, S.; Achar, M.; Lee, S.; Peña-Mora, F. Empirical Assessment of a RGB-D Sensor on Motion Capture and Action Recognition for Construction Worker Monitoring. Vis. Eng. 2013, 1, 6. [Google Scholar] [CrossRef] [Green Version]

- Blanchard, N.; Skinner, K.; Kemp, A.; Scheirer, W.; Flynn, P. “Keep Me In, Coach!”: A Computer Vision Perspective on Assessing ACL Injury Risk in Female Athletes. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1366–1374. [Google Scholar] [CrossRef]

- Vukicevic, A.M.; Macuzic, I.; Mijailovic, N.; Peulic, A.; Radovic, M. Assessment of the Handcart Pushing and Pulling Safety by Using Deep Learning 3D Pose Estimation and IoT Force Sensors. Expert Syst. Appl. 2021, 183, 115371. [Google Scholar] [CrossRef]

- Mehrizi, R.; Peng, X.; Metaxas, D.N.; Xu, X.; Zhang, S.; Li, K. Predicting 3-D Lower Back Joint Load in Lifting: A Deep Pose Estimation Approach. IEEE Trans. Hum. Mach. Syst. 2019, 49, 85–94. [Google Scholar] [CrossRef]

- Krosshaug, T.; Nakamae, A.; Boden, B.P.; Engebretsen, L.; Smith, G.; Slauterbeck, J.R.; Hewett, T.E.; Bahr, R. Mechanisms of Anterior Cruciate Ligament Injury in Basketball: Video Analysis of 39 Cases. Am. J. Sports Med. 2007, 35, 359–367. [Google Scholar] [CrossRef]

- Olsen, O.-E.; Myklebust, G.; Engebretsen, L.; Bahr, R. Injury Mechanisms for Anterior Cruciate Ligament Injuries in Team Handball: A Systematic Video Analysis. Am. J. Sports Med. 2004, 32, 1002–1012. [Google Scholar] [CrossRef] [PubMed]

- Kim, W.; Sung, J.; Saakes, D.; Huang, C.; Xiong, S. Ergonomic Postural Assessment Using a New Open-Source Human Pose Estimation Technology (OpenPose). Int. J. Ind. Ergon. 2021, 84, 103164. [Google Scholar] [CrossRef]

- Li, Y.; Wang, C.; Cao, Y.; Liu, B.; Tan, J.; Luo, Y. Human Pose Estimation Based In-Home Lower Body Rehabilitation System. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8. [Google Scholar] [CrossRef]

- Cordella, F.; Di Corato, F.; Zollo, L.; Siciliano, B. A Robust Hand Pose Estimation Algorithm for Hand Rehabilitation. In New Trends in Image Analysis and Processing—ICIAP 2013; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8158, pp. 1–10. [Google Scholar] [CrossRef] [Green Version]

- Zhu, Y.; Lu, W.; Gan, W.; Hou, W. A Contactless Method to Measure Real-Time Finger Motion Using Depth-Based Pose Estimation. Comput. Biol. Med. 2021, 131, 104282. [Google Scholar] [CrossRef]

- Milosevic, B.; Leardini, A.; Farella, E. Kinect and Wearable Inertial Sensors for Motor Rehabilitation Programs at Home: State of the Art and an Experimental Comparison. Biomed. Eng. Online 2020, 19, 25. [Google Scholar] [CrossRef] [Green Version]

- Tao, Y.; Hu, H.; Zhou, H. Integration of Vision and Inertial Sensors for 3D Arm Motion Tracking in Home-Based Rehabilitation. Int. J. Robot. Res. 2007, 26, 607–624. [Google Scholar] [CrossRef]

- Ranasinghe, I.; Dantu, R.; Albert, M.V.; Watts, S.; Ocana, R. Cyber-Physiotherapy: Rehabilitation to Training. In Proceedings of the 2021 IFIP/IEEE International Symposium on Integrated Network Management (IM), Bordeaux, France, 17–21 May 2021; pp. 1054–1057. [Google Scholar]

- Tao, T.; Yang, X.; Xu, J.; Wang, W.; Zhang, S.; Li, M.; Xu, G. Trajectory Planning of Upper Limb Rehabilitation Robot Based on Human Pose Estimation. In Proceedings of the 2020 17th International Conference on Ubiquitous Robots (UR), Kyoto, Japan, 22–26 June 2020; pp. 333–338. [Google Scholar] [CrossRef]

- Palermo, M.; Moccia, S.; Migliorelli, L.; Frontoni, E.; Santos, C.P. Real-Time Human Pose Estimation on a Smart Walker Using Convolutional Neural Networks. Expert Syst. Appl. 2021, 184, 115498. [Google Scholar] [CrossRef]

- Airò Farulla, G.; Pianu, D.; Cempini, M.; Cortese, M.; Russo, L.O.; Indaco, M.; Nerino, R.; Chimienti, A.; Oddo, C.M.; Vitiello, N. Vision-Based Pose Estimation for Robot-Mediated Hand Telerehabilitation. Sensors 2016, 16, 208. [Google Scholar] [CrossRef] [Green Version]

- Sarsfield, J.; Brown, D.; Sherkat, N.; Langensiepen, C.; Lewis, J.; Taheri, M.; McCollin, C.; Barnett, C.; Selwood, L.; Standen, P.; et al. Clinical Assessment of Depth Sensor Based Pose Estimation Algorithms for Technology Supervised Rehabilitation Applications. Int. J. Med. Inf. 2019, 121, 30–38. [Google Scholar] [CrossRef] [PubMed]

- Xu, W.; Chatterjee, A.; Zollhoefer, M.; Rhodin, H.; Mehta, D.; Seidel, H.-P.; Theobalt, C. MonoPerfCap: Human Performance Capture from Monocular Video. arXiv 2018, arXiv:1708.02136.

- Habermann, M.; Xu, W.; Zollhoefer, M.; Pons-Moll, G.; Theobalt, C. LiveCap: Real-Time Human Performance Capture from Monocular Video. arXiv 2019, arXiv:1810.02648.

- Wang, J.; Qiu, K.; Peng, H.; Fu, J.; Zhu, J. AI Coach: Deep Human Pose Estimation and Analysis for Personalized Athletic Training Assistance. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 374–382.

- Einfalt, M.; Dampeyrou, C.; Zecha, D.; Lienhart, R. Frame-Level Event Detection in Athletics Videos with Pose-Based Convolutional Sequence Networks. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 42–50.

- Einfalt, M.; Zecha, D.; Lienhart, R. Activity-Conditioned Continuous Human Pose Estimation for Performance Analysis of Athletes Using the Example of Swimming. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 446–455.

- Güler, R.A.; Neverova, N.; Kokkinos, I. DensePose: Dense Human Pose Estimation in the Wild. arXiv 2018, arXiv:1802.00434.

- Patacchiola, M.; Cangelosi, A. Head Pose Estimation in the Wild Using Convolutional Neural Networks and Adaptive Gradient Methods. Pattern Recognit. 2017, 71, 132–143.

- Fong, C.-M.; Blackburn, J.T.; Norcross, M.F.; McGrath, M.; Padua, D.A. Ankle-Dorsiflexion Range of Motion and Landing Biomechanics. J. Athl. Train. 2011, 46, 5–10.

- Caccese, J.B.; Buckley, T.A.; Tierney, R.T.; Rose, W.C.; Glutting, J.J.; Kaminski, T.W. Sex and Age Differences in Head Acceleration during Purposeful Soccer Heading. Res. Sports Med. 2018, 26, 64–74.

- Cerveri, P.; Pedotti, A.; Ferrigno, G. Kinematical Models to Reduce the Effect of Skin Artifacts on Marker-Based Human Motion Estimation. J. Biomech. 2005, 38, 2228–2236.

- Joo, H.; Neverova, N.; Vedaldi, A. Exemplar Fine-Tuning for 3D Human Model Fitting Towards In-the-Wild 3D Human Pose Estimation. arXiv 2020, arXiv:2004.03686.

- Habibie, I.; Xu, W.; Mehta, D.; Pons-Moll, G.; Theobalt, C. In the Wild Human Pose Estimation Using Explicit 2D Features and Intermediate 3D Representations. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10897–10906.

- Mehta, D.; Rhodin, H.; Casas, D.; Fua, P.; Sotnychenko, O.; Xu, W.; Theobalt, C. Monocular 3D Human Pose Estimation in The Wild Using Improved CNN Supervision. arXiv 2017, arXiv:1611.09813.

- Mehta, D.; Sridhar, S.; Sotnychenko, O.; Rhodin, H.; Shafiei, M.; Seidel, H.-P.; Xu, W.; Casas, D.; Theobalt, C. VNect: Real-Time 3D Human Pose Estimation with a Single RGB Camera. ACM Trans. Graph. 2017, 36, 1–14.

- Gilbert, A.; Trumble, M.; Malleson, C.; Hilton, A.; Collomosse, J. Fusing Visual and Inertial Sensors with Semantics for 3D Human Pose Estimation. Int. J. Comput. Vis. 2019, 127, 381–397.

- Malleson, C.; Gilbert, A.; Trumble, M.; Collomosse, J.; Hilton, A.; Volino, M. Real-Time Full-Body Motion Capture from Video and IMUs. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 449–457.

- WHO. International Classification of Functioning, Disability and Health: ICF 2001, Title of Beta 2, Full Version: International Classification of Functioning and Disability: ICIDH-2 (WHO Document no. WHO/HSC/ACE/99.2); WHO: Geneva, Switzerland, 2001.

- Fugl-Meyer, A.R.; Jääskö, L.; Leyman, I.; Olsson, S.; Steglind, S. The Post-Stroke Hemiplegic Patient. 1. a Method for Evaluation of Physical Performance. Scand. J. Rehabil. Med. 1975, 7, 13–31.

- Yozbatiran, N.; Der-Yeghiaian, L.; Cramer, S.C. A Standardized Approach to Performing the Action Research Arm Test. Neurorehabil. Neural Repair 2008, 22, 78–90.

- Duncan, P.W.; Wallace, D.; Lai, S.M.; Johnson, D.; Embretson, S.; Laster, L.J. The Stroke Impact Scale Version 2.0. Stroke 1999, 30, 2131–2140.

- Li, M.H.; Mestre, T.A.; Fox, S.H.; Taati, B. Automated Assessment of Levodopa-Induced Dyskinesia: Evaluating the Responsiveness of Video-Based Features. Parkinsonism Relat. Disord. 2018, 53, 42–45.

- Li, M.H.; Mestre, T.A.; Fox, S.H.; Taati, B. Automated Vision-Based Analysis of Levodopa-Induced Dyskinesia with Deep Learning. In Proceedings of the 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Korea, 11–15 July 2017.

- Li, M.H.; Mestre, T.A.; Fox, S.H.; Taati, B. Vision-Based Assessment of Parkinsonism and Levodopa-Induced Dyskinesia with Pose Estimation. J. Neuroeng. Rehabil. 2018, 15, 97.

- Liu, Y.; Chen, J.; Hu, C.; Ma, Y.; Ge, D.; Miao, S.; Xue, Y.; Li, L. Vision-Based Method for Automatic Quantification of Parkinsonian Bradykinesia. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 1952–1961.

- Aung, N.; Bovonsunthonchai, S.; Hiengkaew, V.; Tretriluxana, J.; Rojasavastera, R.; Pheung-Phrarattanatrai, A. Concurrent Validity and Intratester Reliability of the Video-Based System for Measuring Gait Poststroke. Physiother. Res. Int. J. Res. Clin. Phys. Ther. 2020, 25, e1803.

- Shin, J.H.; Yu, R.; Ong, J.N.; Lee, C.Y.; Jeon, S.H.; Park, H.; Kim, H.-J.; Lee, J.; Jeon, B. Quantitative Gait Analysis Using a Pose-Estimation Algorithm with a Single 2D-Video of Parkinson’s Disease Patients. J. Park. Dis. 2021, 11, 1271–1283.

- Ng, K.-D.; Mehdizadeh, S.; Iaboni, A.; Mansfield, A.; Flint, A.; Taati, B. Measuring Gait Variables Using Computer Vision to Assess Mobility and Fall Risk in Older Adults With Dementia. IEEE J. Transl. Eng. Health Med. 2020, 8, 2100609.

- Li, T.; Chen, J.; Hu, C.; Ma, Y.; Wu, Z.; Wan, W.; Huang, Y.; Jia, F.; Gong, C.; Wan, S.; et al. Automatic Timed Up-and-Go Sub-Task Segmentation for Parkinson’s Disease Patients Using Video-Based Activity Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 2189–2199.

| Domain | Behavior/Movement Pattern Tracked | References |
|---|---|---|
| Motor and non-motor development | Infant cruising (early locomotion) | [36] |
| | Infant play/general movement | [37] |
| | Infant writhing | [51] |
| Human performance optimization, injury prevention, and safety | Healthy repetitive movements | [14] |
| | Healthy gait | [15,26,29,30,31,35,40] |
| | Sign language | [19] |
| | Healthy running | [27,35] |
| | Bilateral squat | [28] |
| | Healthy gait/jumping/throwing | [29] |
| | Lifting | [79,84] |
| | Various unsafe working behaviors | [80,81] |
| | ACL injury risk | [82,85,86] |
| | Handcart pushing and pulling | [83] |
| | Ergonomic postural assessment | [87] |
| | Remotely-delivered rehabilitation | [88,91,92,93] |
| | Healthy finger movements | [90] |
| | Rehabilitation robotics | [94,95,96,97] |
| | Athletic training | [100,101] |
| | Swimming | [102] |
| Clinical motor assessment | Gait in Parkinson’s disease | [25,33,123] |
| | Knee kinetics in osteoarthritis | [32] |
| | Gait in cerebral palsy | [34] |
| | Simulated abnormal gait | [72,74] |
| | Gait in older adults | [73] |
| | Fall detection | [76,77,78] |
| | Dyskinesias in Parkinson’s disease | [118,119,120] |
| | Gait in older adults with dementia | [124] |
| | Timed up-and-go in Parkinson’s disease | [125] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Stenum, J.; Cherry-Allen, K.M.; Pyles, C.O.; Reetzke, R.D.; Vignos, M.F.; Roemmich, R.T. Applications of Pose Estimation in Human Health and Performance across the Lifespan. Sensors 2021, 21, 7315. https://doi.org/10.3390/s21217315
