1. Introduction

The U.S. Energy Information Administration reports that buildings consumed 32% of primary energy in the United States in 2019 and that global energy consumption in buildings will grow by 1.3% per year on average from 2018 to 2050 [1]. Building operation data play an important role in building design, retrofit, commissioning, maintenance, operations, monitoring, analysis, modeling, and control. With the wide adoption of building automation systems (BAS), smart sensors, and the Internet of Things in buildings, massive measurements from sensors and meters are continuously collected, providing a great amount of data on equipment and building operations and great opportunities for data-driven tools to improve building energy efficiency [2].

Data quality is essential for data-driven tools, and missing data is one of the most common and important data quality issues. During building operations, sensors commonly fail to record data for several reasons, including malfunctioning equipment or sensors, a power outage at the sensor’s node, random occurrences of local interference, and the higher bit error rate of wireless radio transmissions compared with wired communications [3]. Cabrera and Zareipour [4] summarized that missing data can be grouped by the reason they are missing: (a) missing not at random, or systematic missing, when the probability of an observation being missing depends on information that is not observed; (b) missing at random, when the probability of an observation being missing depends on other observed values; and (c) missing completely at random, when the probability of an observation being missing is not related to any other value.
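To make the three categories concrete, the following minimal Python sketch simulates each mechanism as a mask over a temperature series. The function name `make_masks`, the 28 °C and 70% thresholds, and the loss probabilities are illustrative assumptions and are not taken from [4].

```python
import random


def make_masks(temps, humidity, seed=0):
    """Return three boolean masks over the temperature readings.

    True means the temperature reading is treated as missing.
    All thresholds and probabilities are hypothetical.
    """
    rng = random.Random(seed)
    n = len(temps)

    # Missing completely at random: every reading has the same 10% chance
    # of being lost, regardless of any value.
    mcar = [rng.random() < 0.10 for _ in range(n)]

    # Missing at random: the chance of losing a temperature reading depends
    # on another variable that is observed (here, humidity).
    mar = [rng.random() < (0.30 if h > 70.0 else 0.02) for h in humidity]

    # Missing not at random: the chance of losing a reading depends on the
    # unobserved temperature value itself.
    mnar = [rng.random() < (0.30 if t > 28.0 else 0.02) for t in temps]

    return mcar, mar, mnar
```

In the third case the missing readings are biased toward high temperatures, so the observed values alone no longer represent the full series.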

In statistics, imputation is the process of replacing missing data with substituted values [5]. The theory of imputation is well studied. As early as 2001, Pigott [6] reviewed methods for missing data imputation and summarized that ad hoc methods, such as complete case analysis, available case analysis (pairwise deletion), and single-value imputation, are widely studied. Ad hoc methods are easy to implement, but they require assumptions about the data that rarely hold in practice. By contrast, model-based methods, such as maximum likelihood using the expectation-maximization algorithm and multiple imputation, hold more promise for dealing with the difficulties caused by missing data. Although model-based methods require specialized computer programs and assumptions about the nature of the missing data, they are appropriate for a wider range of situations than the more commonly used ad hoc methods. Harel and Zhou [7] reviewed the key theoretical ideas that form the basis of multiple imputation and its implementation, provided a limited list of available software detailing the main purpose of each package, and illustrated by example the practical implementation of multiple imputation for categorical missing data. Ibrahim et al. [8] reviewed four common approaches for inference in generalized linear models with missing covariate data: maximum likelihood, multiple imputation, fully Bayesian, and weighted estimating equations. They studied how the four methodologies are related, the properties, advantages, and disadvantages of each, and their computational implementation. They examined data that are missing at random and data with nonignorable missingness, and they used a real dataset and a detailed simulation study to compare the four methods.

Many existing studies apply data imputation to missing data in buildings. Deleting the missing data is the most direct way to deal with them and, on some occasions, it is counted as a data imputation method. Ekwevugbe et al. [9] excluded from further analysis all data instances that were corrupted or missing due to instrumentation limitations. Xiao and Fan [10] noted that missing values can be filled in using a global constant, a moving average, or inference-based models; in their paper, missing values were handled using a simple moving average with a window size of five samples. Rahman et al. [11] proposed an imputation scheme that uses a recurrent neural network model to provide missing values in time-series energy consumption data; the scheme was shown to obtain higher accuracy than a multilayer perceptron model. Peppanen et al. [12] presented a novel and computationally efficient data processing method, called the optimally weighted average data imputation method, for imputing bad and missing load power measurements to create full power consumption data sets. The imputed data periods have a continuous profile with respect to the adjacent available measurements, which is a highly desirable feature for time-series power flow analyses. Ma et al. [13] proposed a hybrid Long Short-Term Memory model with Bi-directional Imputation and Transfer Learning (LSTM-BIT), which integrates the powerful modeling ability of deep learning networks with the flexible transferability of transfer learning. The method was tested in a case study on the electricity consumption data of a campus lab building.

In summary, each of the above studies introduced, developed, or applied a specific imputation method for specific sensor types and missing data scenarios. However, a single building system normally contains various types of sensors, including thermometers, humidity sensors, flow meters, energy meters, and differential pressure sensors. There are also multiple missing data mechanisms (malfunction, power outage, local interference, wireless radio transmission errors) and multiple types of randomness (missing not at random, missing at random, missing completely at random) across these sensors. A single imputation method may not be able to capture the various missing data scenarios from various sensors, yet few researchers have studied how well a single technique works across various sensors with missing data. A few papers address the selection and use of multiple data imputation methods; they are introduced in the following paragraph.

Inman et al. [14] explored the use of missing data imputation and clustering on building electricity consumption data. The objective was to compare two data imputation methods: Amelia multiple imputation and cubic spline imputation. The results suggest that using multiple imputation to fill in missing data before clustering analysis yields more informative clusters. Schachinger et al. [15] focused on improving and correcting the monitored data set. They tried to identify the reasons for data losses and then looked for recovery options. Periods of missing data whose lengths do not exceed predefined thresholds are interpolated. The interpolation thresholds are set depending on the frequency of sensor monitoring and the dynamic behavior. Continuous data are interpolated using linear and polynomial interpolation. Habib et al. [16] filled the missing values with regression and linear interpolation for short and long periods. They also noted that (a) it is necessary to detect the gaps in the acquired data, as they are an important indicator of data reliability; (b) these gaps are always present and lower the amount of meaningful calculations; (c) it is crucial to reduce the gaps, as data with fewer gaps are considered good-quality data; and (d) different methods are available for handling missing values, e.g., regression, depending on the nature of the data and other parameters, such as computation, precision, robustness, and accuracy. Xia et al. [17] noted that, to get the data in workable order for calculation, analysis, and benchmarking, missing or invalid (mainly negative) data should be replaced with data from time periods or days that were similar to the invalid points, taking weather conditions into account as well. For example, a few missing data points would be replaced by the preceding or following few valid points, or their average. Several hours of missing data would be replaced by data from the same time periods on the previous or following day, considering weekdays and weekends. The same goes for missing or invalid data over an even longer period. Garnier et al. [18] used data before and/or after the sensor failure to rebuild the missing information. Two approaches were presented: an interpolation technique for estimating missing solar radiation data and an extrapolation technique based on artificial intelligence for estimating indoor temperature.
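The threshold-based rule described for Schachinger et al. [15], in which only sufficiently short gaps are interpolated, can be illustrated with a minimal Python sketch. It is an assumption-laden illustration and not the implementation from [15]: the function name `interpolate_short_gaps` and the parameter `max_gap` are hypothetical, missing readings are represented as `None`, and only linear interpolation is shown.

```python
def interpolate_short_gaps(values, max_gap):
    """Linearly fill runs of None that are at most max_gap samples long.

    Longer runs, and runs that touch either end of the series, are left
    as None so that a different recovery method can handle them.
    """
    out = list(values)
    n = len(out)
    i = 0
    while i < n:
        if out[i] is not None:
            i += 1
            continue
        start = i                      # first index of the gap
        while i < n and out[i] is None:
            i += 1
        end = i                        # first index after the gap
        gap_len = end - start
        if start == 0 or end == n or gap_len > max_gap:
            continue                   # no neighbor on one side, or too long
        left, right = out[start - 1], out[end]
        step = (right - left) / (gap_len + 1)
        for k in range(gap_len):
            out[start + k] = left + step * (k + 1)
    return out
```

In practice, `max_gap` would be chosen per sensor from its sampling frequency and how quickly the measured quantity changes, as the thresholds in [15] are.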

The studies reviewed above select among only a few (two or three) imputation methods according to the length of the missing data gaps, the sensor types, and the nature of the missing data. Too much domain knowledge is required to make the selection for each sensor, which makes the approach less automated. The ensemble method, a pool-based method that can better address this variation, is rarely applied to building sensors. In particular, few studies apply the ensemble method to customize the imputation for different sensors with missing data.

One more key issue for data imputation techniques is the difficulty of validation. Because the true values of the missing data are lost forever, it is hard to create testing or validation scenarios that quantify the performance of data imputation methods.

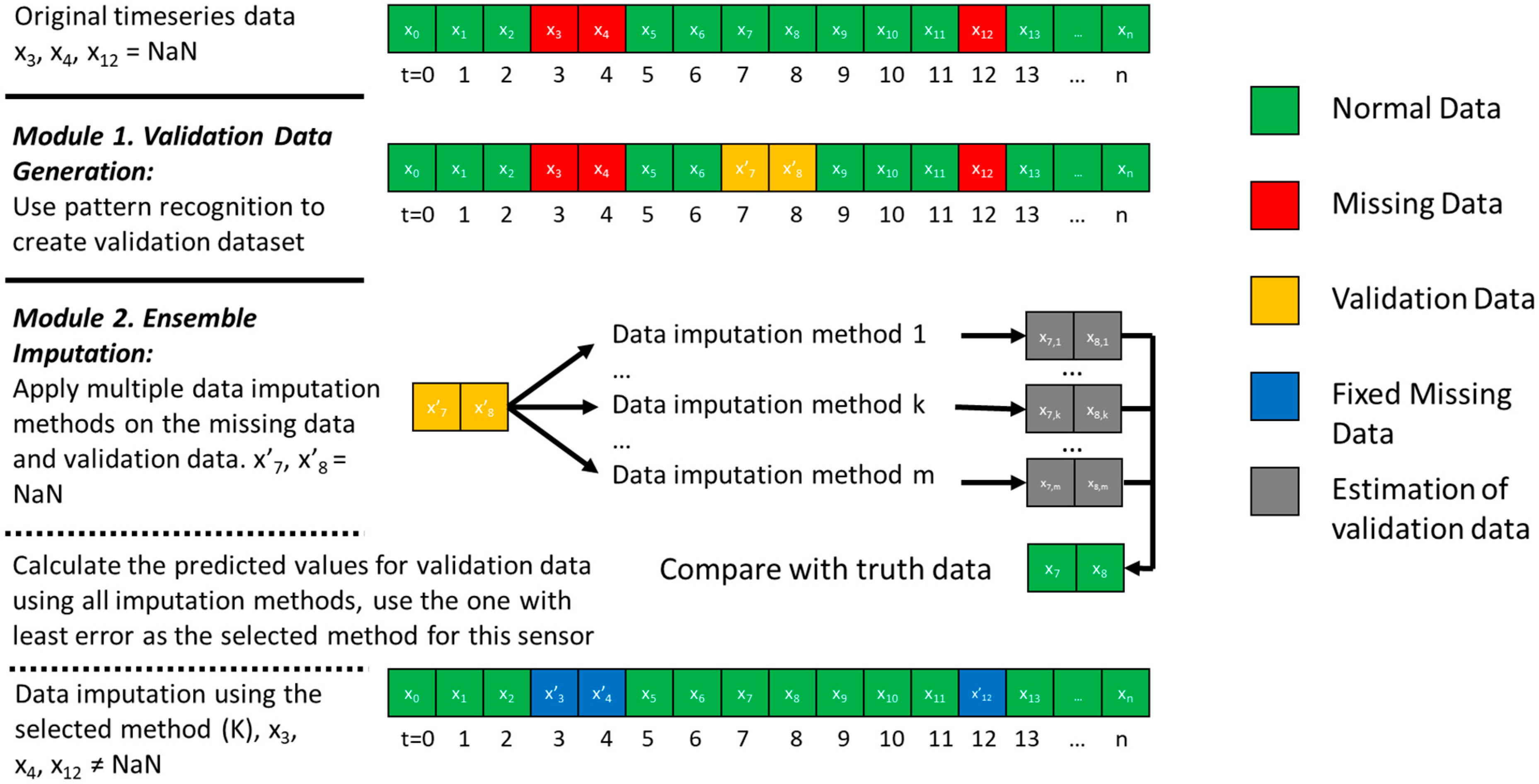

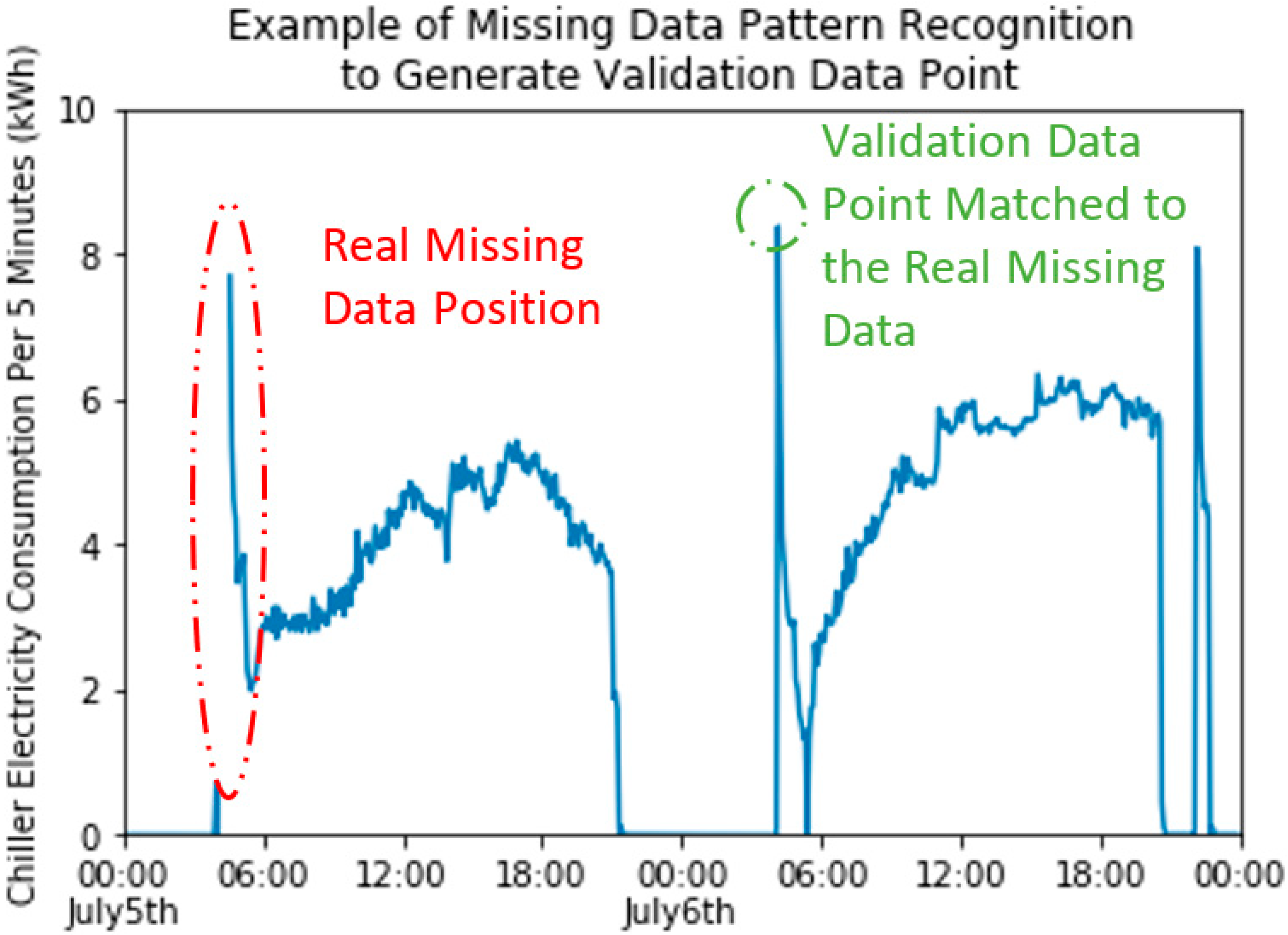

In this paper, a framework is proposed to deal with the above two problems. First, a validation module is developed based on pattern recognition. This module identifies good data points that have characteristics similar to those of the missing data. The selected good data mimic the missing data but have known true values, which creates testing scenarios to validate the effectiveness of each single data imputation method. Second, with the validation data determined, a pool of data imputation methods is tested on the validation dataset to find an optimal imputation method for each sensor, which is essentially an ensemble method. The selected imputation method is expected to differ from sensor to sensor, which optimizes imputation accuracy across different sensor types and across the different mechanisms and randomness of missing data for each sensor.
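The pool-based selection step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the framework's implementation: the three imputers in the pool, the RMSE score, and the names `select_imputer` and `validation_idx` are hypothetical, and the pattern-recognition step that chooses the validation points is taken as given (the indices are passed in).

```python
import math


def mean_fill(series):
    """Replace every None with the mean of the observed values."""
    observed = [v for v in series if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in series]


def forward_fill(series):
    """Replace every None with the most recent observed value."""
    last = next(v for v in series if v is not None)  # seeds leading gaps
    out = []
    for v in series:
        if v is not None:
            last = v
        out.append(last)
    return out


def linear_fill(series):
    """Replace every None by linear interpolation between observed neighbors."""
    known = [i for i, v in enumerate(series) if v is not None]
    out = list(series)
    for i, v in enumerate(series):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            out[i] = series[right]      # leading gap: copy nearest value
        elif right is None:
            out[i] = series[left]       # trailing gap: copy nearest value
        else:
            w = (i - left) / (right - left)
            out[i] = series[left] + w * (series[right] - series[left])
    return out


def select_imputer(series, validation_idx, pool):
    """Hide known-good points, score each imputer by RMSE, return the best."""
    masked = list(series)
    for i in validation_idx:
        masked[i] = None                # pretend the good points are missing
    scores = {}
    for name, method in pool.items():
        filled = method(masked)
        sq_err = [(filled[i] - series[i]) ** 2 for i in validation_idx]
        scores[name] = math.sqrt(sum(sq_err) / len(sq_err))
    best = min(scores, key=scores.get)
    return best, scores


pool = {"mean": mean_fill, "forward": forward_fill, "linear": linear_fill}
ramp = [float(i) for i in range(10)]    # synthetic sensor with a steady trend
best, scores = select_imputer(ramp, [3, 4, 7], pool)
```

For this synthetic ramp the selection returns `"linear"`; running the same call on each sensor's own series is what lets the chosen method differ from sensor to sensor.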

The paper is organized as follows. The data imputation framework is developed in Section 2. The framework is applied to a real-building case study in Section 3 to demonstrate its effectiveness. Results and discussion are presented in Section 4. Conclusions are drawn in Section 5.

5. Conclusions

This paper developed a framework of data imputation for sensors in building energy systems. The first module of the framework is based on pattern recognition and identifies good data points that have characteristics similar to those of the missing data. The selected good data mimic the missing data but have known true values, which creates testing scenarios to validate the effectiveness of a data imputation method. In the second module, a pool of data imputation methods is tested on the validation dataset to find an optimal imputation method for each sensor; this is termed an ensemble method. The selected imputation method is expected to vary from sensor to sensor, reflecting the specific mechanism and randomness of missing data for each sensor.

The effectiveness of the framework is demonstrated in a real-building case study. The case study shows that (1) the ensemble method outperforms the best single imputation method by 18.2% and (2) no single imputation method achieves good performance across all types of sensors and missing data characteristics. These results show the importance of the ensemble method, which automatically customizes the selection of the imputation method to different sensor types and missing data characteristics.

The framework can automate the data cleaning process because it requires very little domain knowledge. Users do not need any knowledge of the characteristics of the sensor or its missing data. Instead, the pool-based ensemble imputation method automatically evaluates each single imputation method and finds the most suitable one for each sensor. This automation is especially important with the development of BAS and the Internet of Things, where a high degree of automation is required for building analysis, modeling, and control.

In terms of limitations and future work, first, the framework only considers offline missing data; in the future, it can be extended to an online process. Second, the pool of imputation methods does not exhaust all existing imputation methods. More sophisticated methods, such as Amelia, self-organizing maps, and k-nearest neighbors, can be added to the pool to further improve the performance of the whole framework. Third, the reading interval in this paper is 5 min for all sensors; the impact of sensor reading frequency on the accuracy of the framework can be further studied. Finally, the validation data generation module will not find appropriate (or sufficiently similar) validation data when the missing data represent a very rare case (in terms of weather and time of occurrence) or when no such pattern appears in the dataset. The impact of this situation on imputation accuracy should be evaluated in the future. In general, future work should focus on extending the framework into a more generic, plug-and-play tool for BAS.