1. Introduction

Low-frequency vibration is widespread in living environments and production activities. A vibrational environment has a non-negligible influence on precision instruments, bridges, buildings and human bodies [1,2,3]. Even short-term, small-amplitude low-frequency vibration may cause or accelerate damage. Therefore, monitoring and analyzing vibration in everyday environments is an active field of research.

Vibration monitoring can be realized in either a contact sensing mode or a non-contact sensing mode. In contrast to contact sensing, the non-contact visual perception mode [4,5,6] relies on a camera to record images as a time sequence. Vision-based vibration sensing offers simple environmental requirements, non-invasiveness, easy operation, strong applicability and fast acquisition. With recent developments in image processing, computer vision and deep learning, the diversity and robustness of vision-based approaches have greatly improved.

For vibration monitoring by visual perception, the core of the system is how to quickly analyze the video and extract the vibration information. Traditionally, video analysis relies on spatiotemporal interest points described by intuitive low-level features such as SIFT [7]. Moreover, several algorithms have been proposed to extract single or multiple vibration points from the frame images. Jiantao Liu et al. [8] proposed an image sequence analysis method that reads a video as an image sequence and saves it as separate pixel-brightness vibration signals. Our previous research [9] studied the color fringe projected onto a vibrating plane and chose the center point of a series of images to record the surface height changes, from which the vibration frequency was obtained. U.P. Poudel et al. [10] used digital video imaging to detect damage in structures, relying on sub-pixel edge identification to obtain the time series of a vibrating object. Based on the time-domain characteristics of vibration, the feature engineering in these methods transforms two-dimensional information into one-dimensional information to measure or monitor the vibration. The extracted one-dimensional signal is a biased representation of the original image sequence rather than a complete representation of the vibration as a whole. In fact, vibration is correlated not only in time but also in space, and a one-dimensional signal cannot fully capture its spatial characteristics: it can only represent a relationship in which every independent variable corresponds to exactly one dependent variable. An image, as a first-hand carrier of morphological features, records how the vibration changes in both space and time. The resulting structure is complex and interrelated, and an independent variable may correspond to more than one dependent variable. We therefore aim to feed the two-dimensional information of a vibration video directly into the network so as to represent the whole vibration process.

The main purpose of feature engineering is to reduce modeling complexity by reducing the input dimension. However, the complete spatial characteristics of a video stream are difficult to capture through manual feature engineering, and advances in modeling methods have made it possible to take high-dimensional data directly as the model input. Owing to their strong self-learning ability and independence from manual intervention and expert experience, deep learning methods such as convolutional neural networks (CNNs) have performed well in many fields [11,12,13]. Several vibration studies that incorporate deep learning at some stage have been proposed recently. Ruoyu Yang et al. [14] selected a CNN-LSTM deep learning approach as the backbone of a computer vision-based vibration measurement technique. Jiantao Liu et al. [15] proposed image-based machine learning via LSTM-RNNs combined with a multi-target learning technique to measure vibration frequency. Huipeng Chen et al. [16] combined two-direction bearing vibration signals with a deep convolutional neural network for fault diagnosis. Compared with traditional methods, deep learning can automatically extract features from large amounts of complex data through its serial structure of feature extractor and classifier. These improvements make it possible to take the vibration video (the original high-dimensional signal) as the input and directly estimate the underlying relationship between the input and the vibration state, which may free researchers from constrained manual feature engineering. In principle, a model learned without manual feature engineering can represent this relationship more faithfully than an empirical model built on the signal-feature-model pipeline.

Based on RGB cameras, there have been some attempts to detect small objects with the help of geometric cues and CNNs. However, when environmental conditions such as ambient light are unstable, the collected vibration information may be affected, and relying on the appearance information in RGB images alone is not sufficient. Multi-sided vibration information is needed to describe the vibration at each moment, so at least one additional mode to assist the RGB mode is required. The depth mode contains additional location, contour and spatial information that can serve as a critical indicator of objects. To achieve more comprehensive and accurate results, fusing the modes is essential [17,18,19]. Data-level fusion, a low-level fusion, is mainly used to integrate signals such as various types of video sources; it places high demands on data homogeneity and has poor real-time performance. Feature-level fusion combines information after feature extraction; it achieves considerable data compression, but information may be lost during fusion. Decision-level fusion processes the data from each sensor separately and then combines the resulting decisions; it is highly flexible, has low requirements for data homogeneity and strong fault tolerance, but its learning ability may be limited. Considering the characteristics of each mode and the convenience of practical operation, it is necessary to adopt a method that gives results intelligently and effectively.

In this work, we propose a method that uses depth mode and RGB mode information acquired from a Microsoft Kinect v2 and combines it with a 3D convolutional network and convolutional LSTM (3DCNN-ConvLSTM), further developing vision-based monitoring of vibration frequency.

The main contributions of this paper are summarized as follows:

We propose, for the first time in the field of vibration monitoring, to use 3D convolution to extract spatial and temporal features from the vibration video stream. Unlike traditional vibration feature extraction methods, the feature extractor operates in both the spatial and temporal dimensions and captures vibration features automatically from the raw data, without relying on signal processing of the video stream.

We design the network according to the characteristics of vibration signals to realize frequency classification and monitoring. In the low-frequency vibration range, the 3DCNN-ConvLSTM architecture can effectively learn the spatiotemporal characteristics of multiple frequencies with both global and local features: the 3DCNN extracts short-term spatiotemporal features, and the ConvLSTM learns long-term spatiotemporal features.

The proposed method is non-invasive and places no special restrictions on the monitoring environment. To reduce interference factors such as ambient light and make vibration monitoring as comprehensive as possible, we use the depth mode as an auxiliary to the color mode and improve monitoring performance through multi-modal fusion. The experimental results show that the method outperforms the single-modal structure.

The remainder of this paper is organized as follows. In Section 2, the materials and methods of the system are described. In Section 3, experiments on different objects are evaluated to verify the effectiveness and superiority of the proposed method. Finally, the results, discussion and conclusions are given in Section 4.

2. Materials and Methods

In this paper, a multi-modal (depth and RGB) visual vibration monitoring system based on Kinect v2 and 3DCNN-ConvLSTM is proposed. The procedure of the method for vibration recognition is shown in Figure 1. It has three main parts: vibration signal acquisition, the 3DCNN-ConvLSTM network and multi-modal fusion. The depth mode of Kinect v2 complements the RGB mode in collecting the input visual information. The collected samples are fed to the network directly, without tedious feature extraction. In the final stage, the outputs of the two modes are fused according to a fusion formula.

2.1. Vibration Signal Acquisition

RGB-D cameras have been a rising technology in recent years [20,21]. Kinect v2, a common RGB-D camera, can simultaneously record vibration videos of objects in both RGB mode and depth mode. The depth mode of Kinect is based on the time-of-flight (TOF) principle: the sensor continuously transmits light pulses (generally invisible light) to the observed object and calculates the distance between the sensor and the object from the flight time. Compared with a color image, a depth image contains depth information indicating the actual distance from the sensor to the object. Because of the high energy of the light pulses, interference from background light is reduced to a certain extent. In principle, depth images are advantageous for recording vibration because they are texture-less and have sharp edges. During acquisition, no strict adjustment or camera calibration is needed; the vibrating object only needs to be within the field of view. Owing to these characteristics, depth information can complement the RGB mode in monitoring the whole vibration process.
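As a rough illustration of the TOF principle described above, the sketch below converts a round-trip flight time into distance with the idealized pulsed model d = c·Δt/2 (the light travels to the object and back). It is a minimal sketch under that idealization, not the Kinect v2 pipeline: real sensors, possibly including Kinect v2, may estimate the flight time indirectly (for example from the phase shift of modulated light), and the function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact value by SI definition


def tof_distance_m(round_trip_s: float) -> float:
    """Idealized pulsed TOF: the light path is out and back, so halve it."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Inverse of tof_distance_m: round-trip time for an object at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    # Hypothetical object 1.5 m from the sensor
    t = round_trip_s(1.5)
    print(f"round trip: {t * 1e9:.3f} ns")  # roughly 10 ns
    # A 1 mm vibration displacement changes the round trip by only a few picoseconds
    dt = round_trip_s(1.501) - t
    print(f"change for 1 mm: {dt * 1e12:.3f} ps")
```

The picosecond-scale change for a millimetre displacement hints at why depth resolution, rather than frame rate alone, bounds how small a vibration amplitude the depth mode can resolve.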

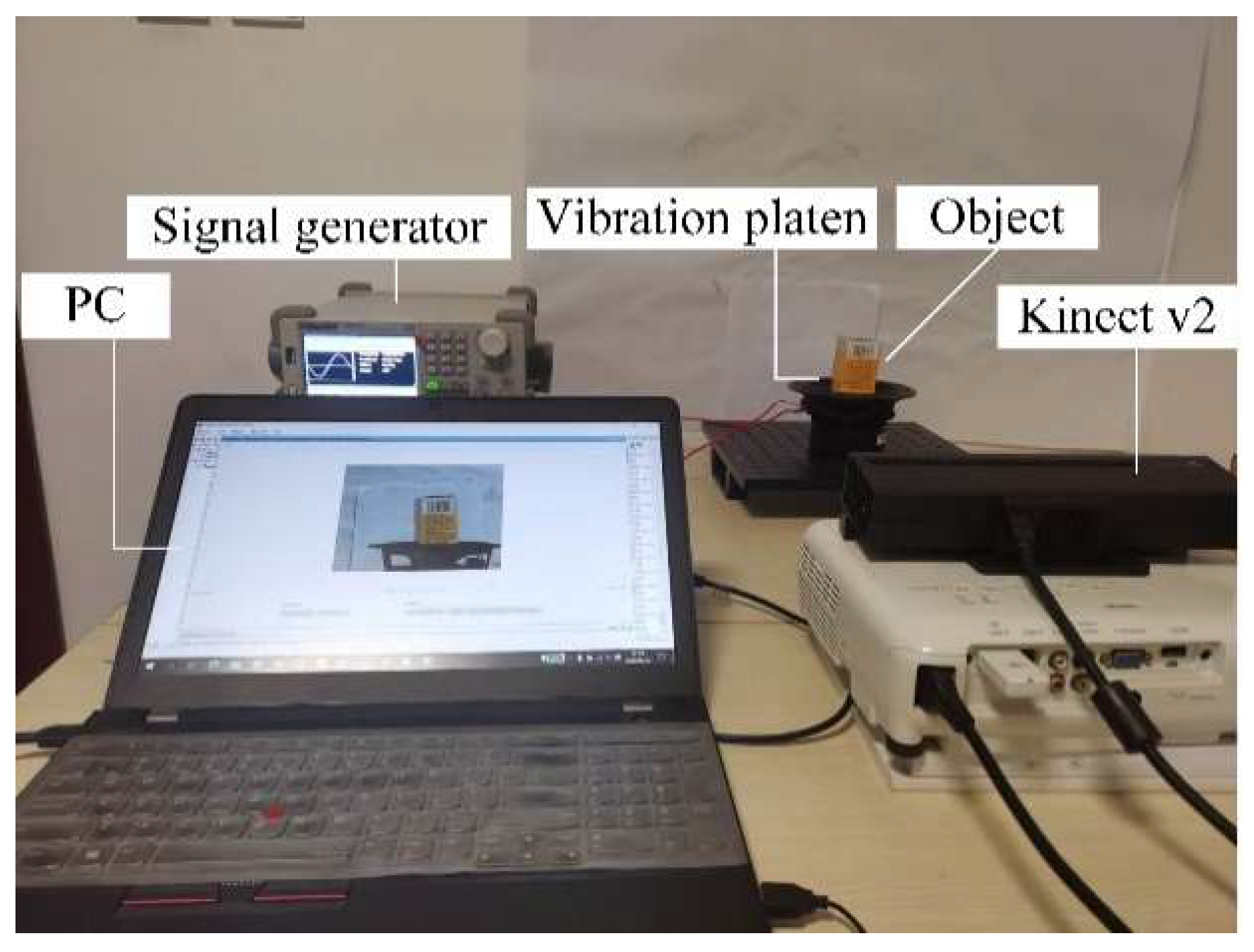

Vibration signal acquisition is shown in Figure 1. A signal generator provides sinusoidal signals with frequencies from 0 Hz to 10 Hz and a peak-to-peak voltage of 20 V to drive the vibration platen. The object to be monitored is placed on the platen, and a Kinect v2 captures vibration frames in RGB and depth modes, both at a frame rate of 30 fps. The result is a series of RGB and depth frame images that record the object's vibration process in a low-frequency vibration environment.
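Because both modes are sampled at 30 fps, a quick sanity check is whether the 0 Hz to 10 Hz excitation range stays below the Nyquist limit of 15 Hz, and how many frames cover one vibration period. The sketch below assumes, hypothetically, that the 11 output classes mentioned in Section 2.3 correspond to integer frequencies from 0 Hz to 10 Hz; the text does not state this mapping explicitly.

```python
FRAME_RATE_HZ = 30.0  # frame rate of both Kinect v2 modes (from the text)

# Assumption (hypothetical): the 11 classes are integer frequencies 0..10 Hz
CLASS_FREQUENCIES_HZ = list(range(11))


def nyquist_limit_hz(frame_rate_hz: float = FRAME_RATE_HZ) -> float:
    """Highest frequency that can be sampled without aliasing."""
    return frame_rate_hz / 2.0


def frames_per_period(freq_hz: float, frame_rate_hz: float = FRAME_RATE_HZ) -> float:
    """Frames covering one vibration period (infinite for 0 Hz, i.e. no vibration)."""
    if freq_hz <= 0:
        return float("inf")
    return frame_rate_hz / freq_hz


def is_alias_free(freq_hz: float, frame_rate_hz: float = FRAME_RATE_HZ) -> bool:
    """True if the frequency lies strictly below the Nyquist limit."""
    return freq_hz < nyquist_limit_hz(frame_rate_hz)


if __name__ == "__main__":
    for f in CLASS_FREQUENCIES_HZ:
        print(f"{f:2d} Hz: {frames_per_period(f):.1f} frames/period, alias-free={is_alias_free(f)}")
```

At 10 Hz only three frames fall within each period, which suggests that a clip spanning many frames is helpful for distinguishing the higher-frequency classes.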

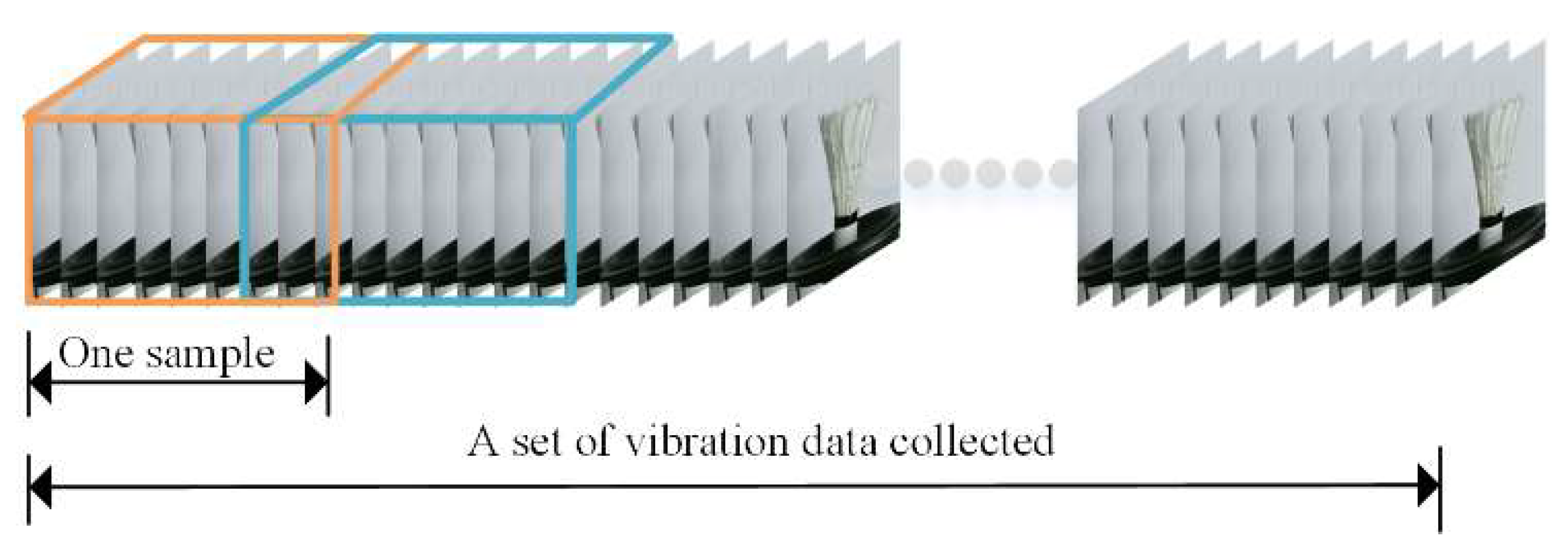

2.2. Networks for Vibration Signal

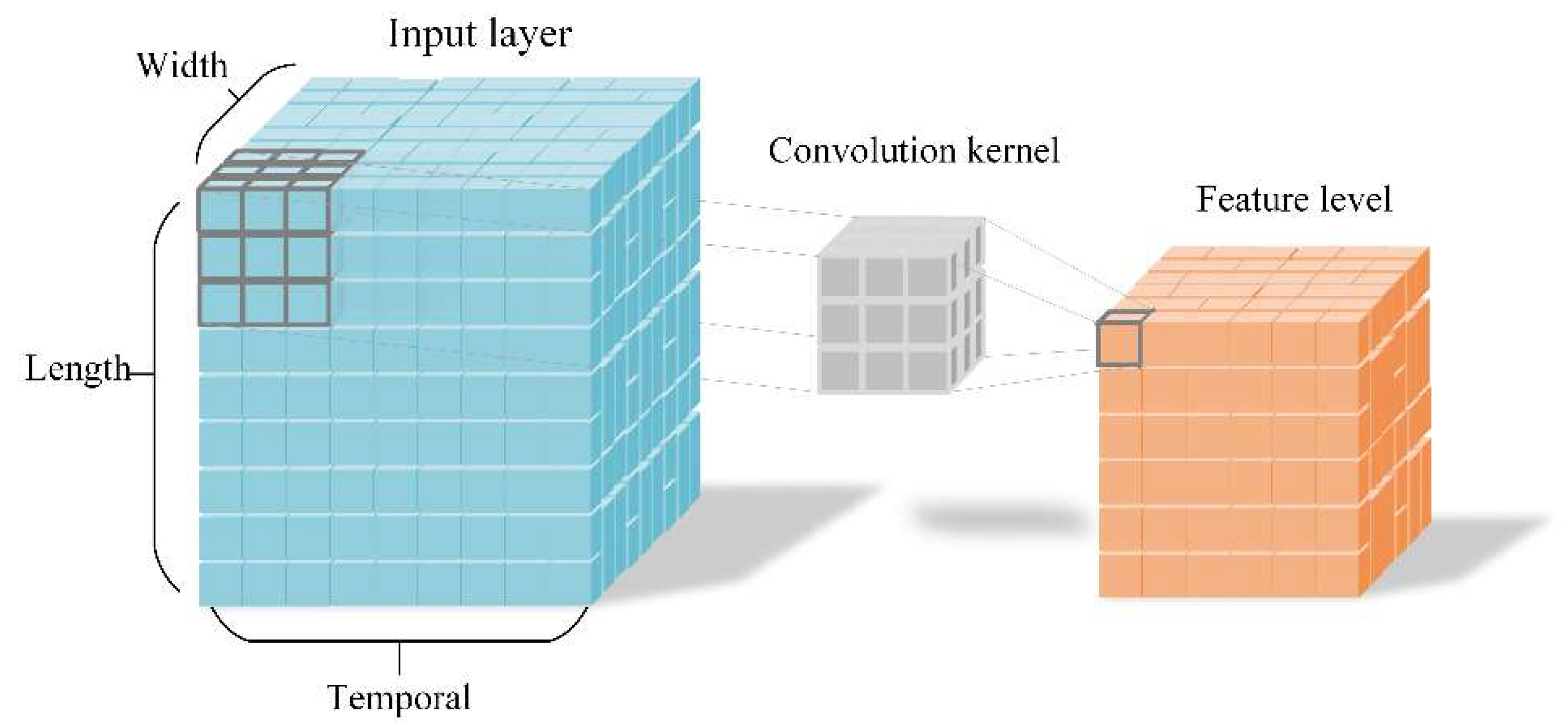

To learn vibration features in space and time, it is desirable to capture the vibration information encoded in contiguous frames. In Figure 2, the video frames are represented by blue squares with three dimensions: height, width and time. The three-dimensional convolution kernel slides over the video stream in the height, width and temporal directions. Because the kernel covers a receptive field spanning several contiguous frames of the input layer, temporal features are extracted in a single operation and the state changes across multiple frames are captured. In contrast, when 2D convolution is applied, the extracted features are not related along the temporal direction: after the summation, all temporal information is collapsed. Unlike two-dimensional convolution, three-dimensional convolution [22,23] and its pooling are performed spatiotemporally.
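The difference between 2D and 3D convolution over a frame stack shows up directly in the output shapes. In the minimal NumPy sketch below (single channel, stride 1, no padding, cross-correlation as in common deep learning libraries; the clip size is arbitrary and the function names are hypothetical), a 2D kernel that treats the frames as input channels sums over time and returns a single map, whereas a 3D kernel of temporal size 3 slides along time and keeps a temporal axis.

```python
import numpy as np


def conv2d_frames_as_channels(clip: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """2D convolution treating the T frames as input channels.
    clip: (T, H, W); kernel: (T, kH, kW). Summing over T collapses time."""
    t, h, w = clip.shape
    kt, kh, kw = kernel.shape
    if kt != t:
        raise ValueError("a 2D kernel must span every input channel (frame)")
    out = np.zeros((h - kh + 1, w - kw + 1))
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            out[y, x] = np.sum(clip[:, y:y + kh, x:x + kw] * kernel)
    return out


def conv3d_single_channel(clip: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """3D convolution: the kernel (kT, kH, kW) also slides along time."""
    t, h, w = clip.shape
    kt, kh, kw = kernel.shape
    out = np.zeros((t - kt + 1, h - kh + 1, w - kw + 1))
    for z in range(out.shape[0]):
        for y in range(out.shape[1]):
            for x in range(out.shape[2]):
                out[z, y, x] = np.sum(clip[z:z + kt, y:y + kh, x:x + kw] * kernel)
    return out


rng = np.random.default_rng(0)
clip = rng.random((8, 16, 16))  # 8 frames of 16 x 16 pixels (arbitrary)
out2d = conv2d_frames_as_channels(clip, rng.random((8, 3, 3)))
out3d = conv3d_single_channel(clip, rng.random((3, 3, 3)))
print(out2d.shape)  # (14, 14): no temporal axis left
print(out3d.shape)  # (6, 14, 14): 8 - 3 + 1 temporal positions
```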

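Formally, the value of a unit at position $(x, y, z)$ in the $j$th feature map of the $i$th layer, denoted as $v_{ij}^{xyz}$, is given by

$$
v_{ij}^{xyz} = f\left( b_{ij} + \sum_{m} \sum_{p=0}^{P_i-1} \sum_{q=0}^{Q_i-1} \sum_{r=0}^{R_i-1} w_{ijm}^{pqr} \, v_{(i-1)m}^{(x+p)(y+q)(z+r)} \right) \tag{1}
$$

where $P_i$ is the length of the 3D kernel, $Q_i$ is the width of the 3D kernel and $R_i$ is the size of the 3D kernel along the temporal dimension. $w_{ijm}^{pqr}$ is the weight at position $(p, q, r)$ of the 3D kernel connected to the $m$th feature map (the receptive field) in the previous layer. $b_{ij}$ represents the bias and $f(\cdot)$ represents the activation function.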
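The 3D convolution unit formula above (a bias plus a sum over input maps $m$ and kernel positions $(p, q, r)$, passed through the activation) can be transcribed almost term by term. The NumPy sketch below is a naive, loop-based reference for one layer with valid padding and stride 1. The array layout (M, X, Y, Z) with Z as the temporal axis and the choice of ReLU for $f$ (the activation named in Section 2.3) are our assumptions; practical implementations use optimized library kernels instead.

```python
import numpy as np


def relu(a: np.ndarray) -> np.ndarray:
    return np.maximum(a, 0.0)


def conv3d_layer(v_prev, w, b, f=relu):
    """Naive 3D convolution layer (valid padding, stride 1).

    v_prev: (M, X, Y, Z)    M feature maps of layer i-1; Z is the temporal axis
    w:      (J, M, P, Q, R) one kernel w_{ijm} per output map j and input map m
    b:      (J,)            biases b_{ij}
    returns (J, X-P+1, Y-Q+1, Z-R+1), the unit values v_{ij}^{xyz}
    """
    m, xs, ys, zs = v_prev.shape
    j, m_w, p, q, r = w.shape
    if m_w != m:
        raise ValueError("kernel depth must equal the number of input maps")
    out = np.empty((j, xs - p + 1, ys - q + 1, zs - r + 1))
    for x in range(out.shape[1]):
        for y in range(out.shape[2]):
            for z in range(out.shape[3]):
                patch = v_prev[:, x:x + p, y:y + q, z:z + r]  # (M, P, Q, R)
                # Sum over m, p, q, r for all output maps j at once
                out[:, x, y, z] = np.tensordot(w, patch, axes=([1, 2, 3, 4], [0, 1, 2, 3]))
    out += b[:, None, None, None]  # add b_{ij} to every unit of map j
    return f(out)


# Four frames and a kernel of temporal size R = 3 give 4 - 3 + 1 = 2 temporal outputs.
v_prev = np.ones((1, 5, 5, 4))
w = np.ones((2, 1, 3, 3, 3))
b = np.array([0.0, -30.0])
v = conv3d_layer(v_prev, w, b)
print(v.shape)  # (2, 3, 3, 2)
```

With an all-ones input and kernel, each pre-activation is 27 plus the bias, so the first map is 27 everywhere and the second (bias of -30) is zeroed by ReLU. If the clip in Figure 3 has four frames (our assumption), the two temporal outputs correspond to the two sequential convolution operations described there.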

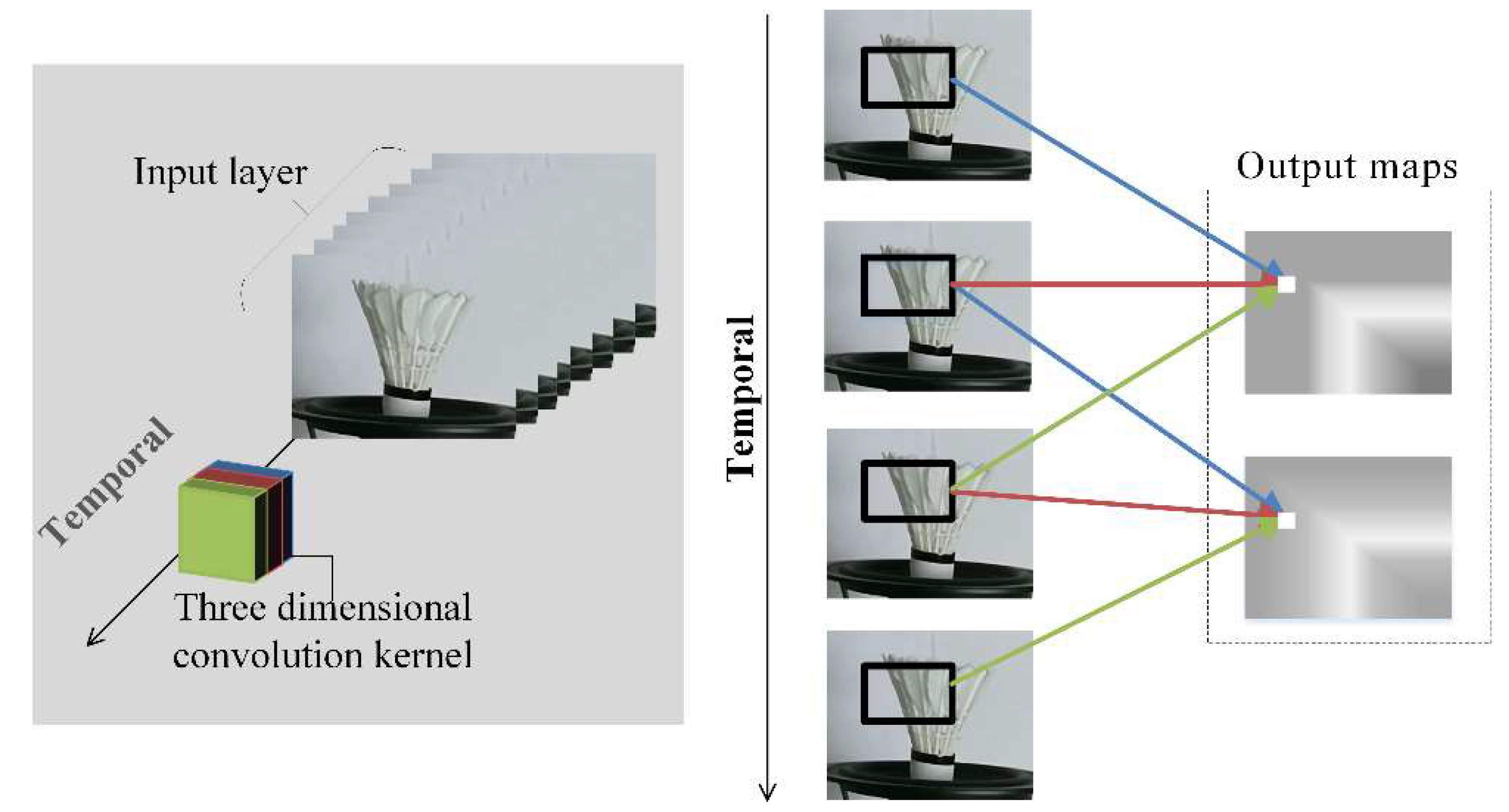

In Figure 3, a 3D convolution kernel with a temporal length of 3 (shown in red, green and blue) is applied twice in sequence along the temporal direction to obtain the output maps. The kernel weights are shared across the whole video stream, so one convolution kernel can extract only one type of feature. The features indicated by the differently colored arrows are combined in the same output map, which therefore contains temporal information, and all output maps together form a 3D tensor. Therefore, the 3D convolutional network is well suited to learning vibration features from frame images.

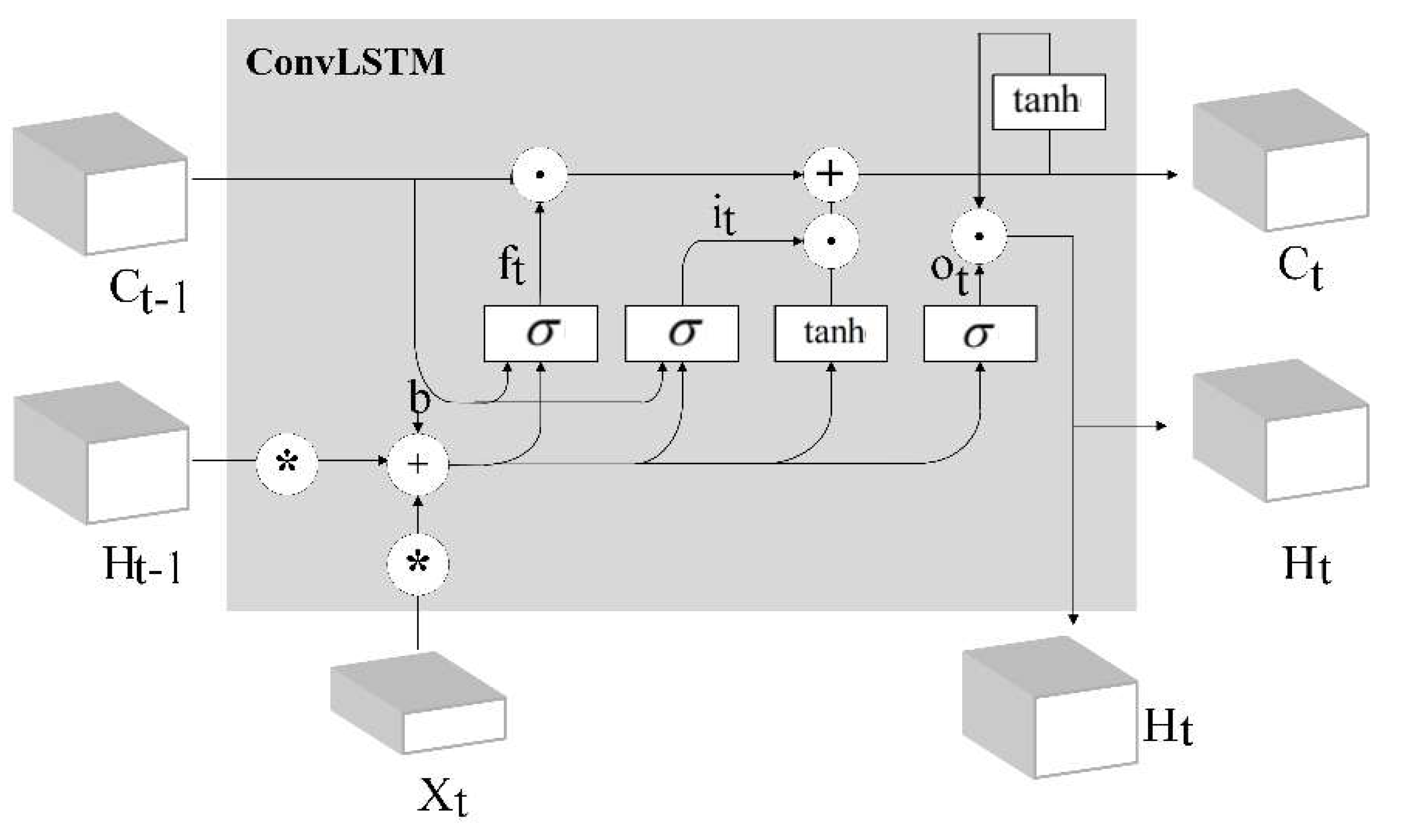

A 3DCNN operates over a comparatively short-term spatiotemporal range, so applying 3DCNN architectures alone to time-series problems is sub-optimal. Long short-term memory (LSTM) networks are well suited to sequence learning because they pass signal information across time steps [14]. As shown in Figure 4, when the input $X_t$ is fed as 3D tensors from the 3DCNN, ConvLSTM [24] can be used. ConvLSTM replaces the fully connected (matrix multiplication) operations in the LSTM memory cell with convolution operators, so that the inputs, states and gates keep their spatial structure. ConvLSTM has been applied to time-series classification, for example anomaly detection in video sequences.

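The ConvLSTM can be formulated as

$$
i_t = \sigma\left(W_{xi} * X_t + W_{hi} * H_{t-1} + W_{ci} \circ C_{t-1} + b_i\right) \tag{2}
$$

$$
f_t = \sigma\left(W_{xf} * X_t + W_{hf} * H_{t-1} + W_{cf} \circ C_{t-1} + b_f\right) \tag{3}
$$

$$
C_t = f_t \circ C_{t-1} + i_t \circ \tanh\left(W_{xc} * X_t + W_{hc} * H_{t-1} + b_c\right) \tag{4}
$$

$$
o_t = \sigma\left(W_{xo} * X_t + W_{ho} * H_{t-1} + W_{co} \circ C_t + b_o\right) \tag{5}
$$

$$
H_t = o_t \circ \tanh\left(C_t\right) \tag{6}
$$

where $X_1, \ldots, X_t$ are the inputs, $C_1, \ldots, C_t$ are the cell states, $H_1, \ldots, H_t$ are the hidden states and $i_t$, $f_t$, $o_t$ are the input, forget and output gates. $\sigma$ is the sigmoid function, $W$ denotes the weights of the corresponding gates and $b$ the biases. The symbol '$*$' denotes the convolution operator and '$\circ$' represents the multiplication of corresponding matrix elements, also known as the Hadamard product.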
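A single ConvLSTM time step, with input, forget and output gates and peephole terms on the cell state as in the original ConvLSTM formulation, can be sketched in NumPy as below. It is a naive reference under our own assumptions, not the authors' implementation: zero-padded "same" convolutions with an odd kernel size, peephole weights stored as full-size arrays applied by Hadamard product, and small random initial weights. The helper names are hypothetical.

```python
import numpy as np


def conv2d_same(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Zero-padded 'same' 2D convolution (cross-correlation), stride 1.
    x: (C_in, H, W); w: (C_out, C_in, k, k) with odd k. Returns (C_out, H, W)."""
    k = w.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    _, h, wd = x.shape
    out = np.zeros((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            out[:, i, j] = np.tensordot(w, xp[:, i:i + k, j:j + k], axes=([1, 2, 3], [0, 1, 2]))
    return out


def sigmoid(a: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-a))


def init_params(c_in, c_hid, height, width, k=3, seed=0):
    """Hypothetical small random initialisation of all ConvLSTM weights."""
    rng = np.random.default_rng(seed)
    p = {}
    for g in "ifco":  # input gate, forget gate, cell candidate, output gate
        p["Wx" + g] = rng.normal(0.0, 0.1, (c_hid, c_in, k, k))
        p["Wh" + g] = rng.normal(0.0, 0.1, (c_hid, c_hid, k, k))
        p["b" + g] = np.zeros(c_hid)
    for g in "ifo":  # peephole weights, combined with C by Hadamard product
        p["Wc" + g] = rng.normal(0.0, 0.1, (c_hid, height, width))
    return p


def convlstm_step(x_t, h_prev, c_prev, p):
    """One ConvLSTM step; returns (H_t, C_t)."""
    def conv_terms(g):
        # W_xg * X_t + W_hg * H_{t-1} + b_g
        return (conv2d_same(x_t, p["Wx" + g]) + conv2d_same(h_prev, p["Wh" + g])
                + p["b" + g][:, None, None])
    i_t = sigmoid(conv_terms("i") + p["Wci"] * c_prev)   # input gate
    f_t = sigmoid(conv_terms("f") + p["Wcf"] * c_prev)   # forget gate
    c_t = f_t * c_prev + i_t * np.tanh(conv_terms("c"))  # cell state update
    o_t = sigmoid(conv_terms("o") + p["Wco"] * c_t)      # output gate (peeks at C_t)
    h_t = o_t * np.tanh(c_t)                             # hidden state
    return h_t, c_t


# Run a short hypothetical sequence of 5 feature maps (2 channels, 6 x 6).
T, C_IN, C_HID, H, W = 5, 2, 4, 6, 6
params = init_params(C_IN, C_HID, H, W)
seq = np.random.default_rng(1).normal(size=(T, C_IN, H, W))
h = np.zeros((C_HID, H, W))
c = np.zeros((C_HID, H, W))
for x_t in seq:
    h, c = convlstm_step(x_t, h, c, params)
print(h.shape)  # (4, 6, 6)
```

Because every gate and state is a feature map rather than a vector, the hidden state keeps the spatial layout of the input. Stacking two such cells with 256 and 384 hidden channels, as described in Section 2.3, would only change `C_HID` and the input channel count of the second cell.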

The 3DCNN-ConvLSTM architecture couples 3D convolutional layers with ConvLSTM layers to extract spatiotemporal features from the vibration data.

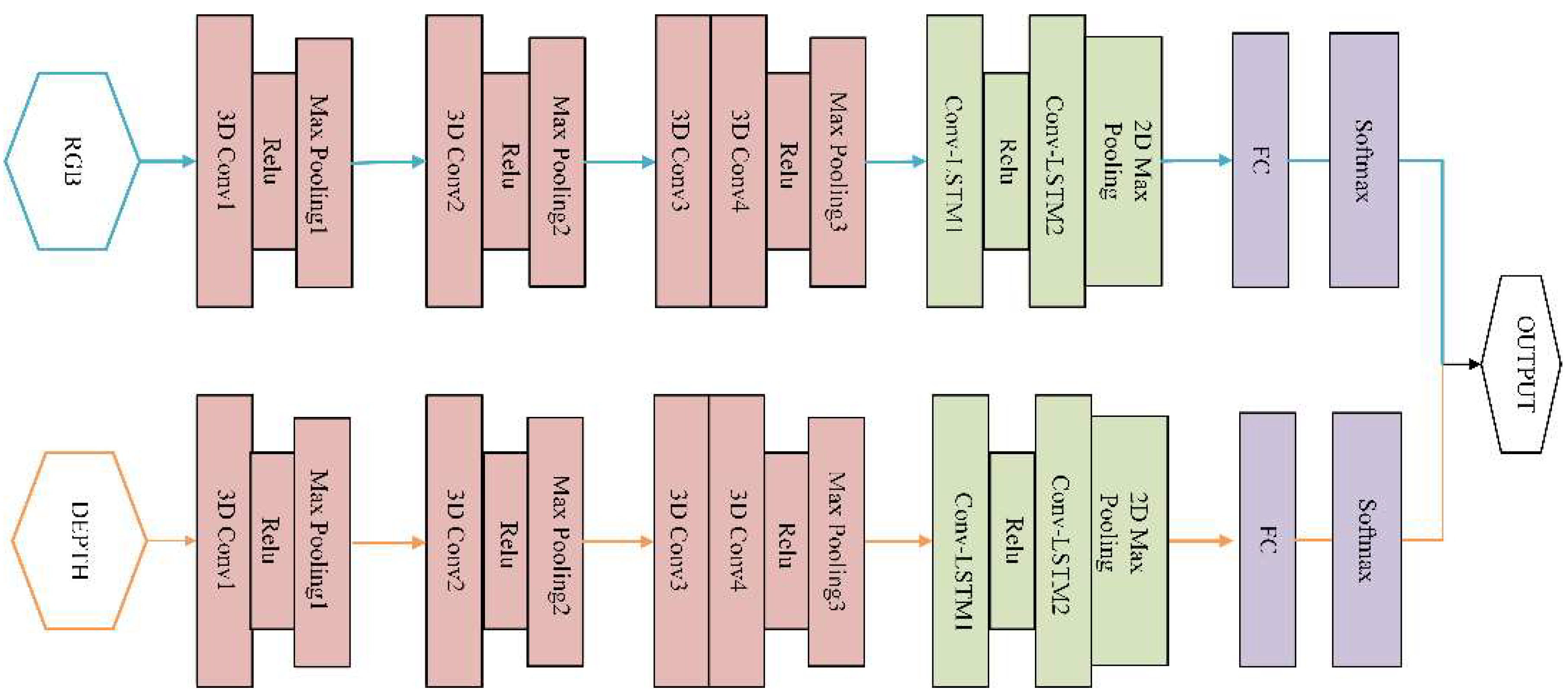

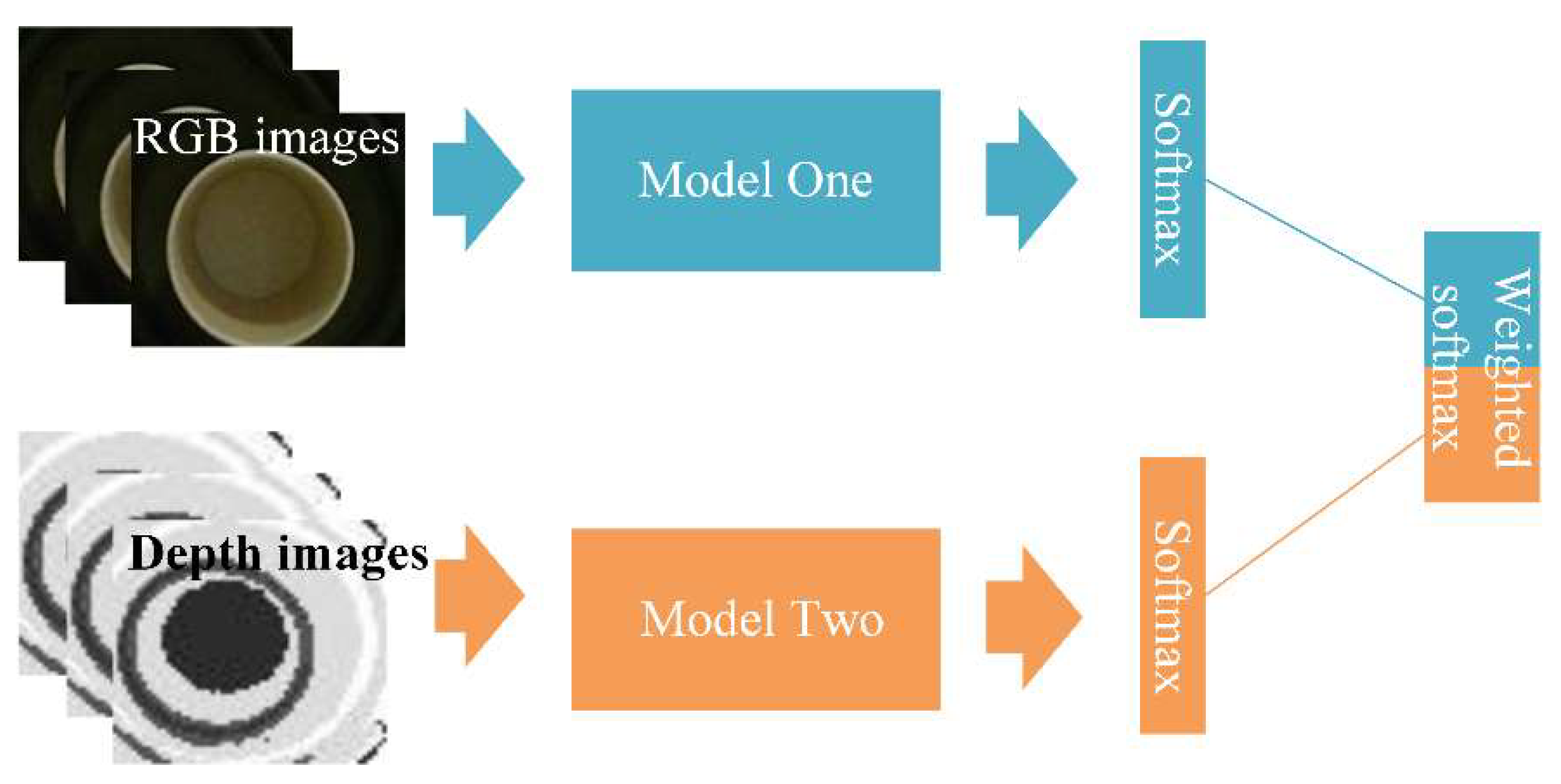

2.3. Model of Multi-Modal Networks

The model should be designed to learn the spatial and temporal features of vibration simultaneously. As shown in Figure 5, small 3 × 3 × 3 convolution kernels are used in all layers, a configuration reported to be among the best-performing architectures for 3DCNNs. Learning ability generally increases with the number of layers and the kernel size; if the structure is too simple, its learning ability is too poor to effectively integrate small-amplitude vibration information.

Table 1 shows the details of the 3DCNN-ConvLSTM used in the model. The four Conv3D layers have 30, 60, 80 and 80 filters, respectively, chosen according to the complexity of the data. ReLU is a nonlinear activation function that improves the distinguishability of the learned features.
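As a rough check of model size, a Conv3D layer with a $k_t \times k_h \times k_w$ kernel has $(k_t k_h k_w C_{\mathrm{in}} + 1)\,C_{\mathrm{out}}$ parameters when a bias is used. The pure-Python sketch below applies this to the four 3 × 3 × 3 layers with 30, 60, 80 and 80 filters. The input channel counts (3 for RGB frames, 1 for depth frames), the stride-1 "valid" padding and the 16 × 112 × 112 example clip are our assumptions, and any pooling or other layers listed in Table 1 are not modelled.

```python
FILTERS = [30, 60, 80, 80]  # filter counts of the four Conv3D layers (from the text)
KERNEL = (3, 3, 3)          # 3 x 3 x 3 kernels in all layers (from the text)


def conv3d_params(c_in, c_out, kernel=KERNEL, bias=True):
    """Weights plus optional bias for one Conv3D layer."""
    kt, kh, kw = kernel
    return (kt * kh * kw * c_in + (1 if bias else 0)) * c_out


def stack_params(c_in, filters=FILTERS):
    """Per-layer parameter counts for a chain of Conv3D layers."""
    counts = []
    for c_out in filters:
        counts.append(conv3d_params(c_in, c_out))
        c_in = c_out  # the next layer sees this layer's output maps
    return counts


def valid_output_shape(shape, n_layers=len(FILTERS), kernel=KERNEL):
    """(T, H, W) after n stride-1 'valid' convolutions with no pooling (assumption)."""
    return tuple(s - n_layers * (k - 1) for s, k in zip(shape, kernel))


if __name__ == "__main__":
    rgb = stack_params(3)    # assumption: 3-channel RGB frames
    depth = stack_params(1)  # assumption: 1-channel depth frames
    print(rgb, sum(rgb))
    print(depth, sum(depth))
    print(valid_output_shape((16, 112, 112)))  # hypothetical clip size
```

Only the first layer depends on the input mode, so the RGB and depth branches differ in size by a small amount; most parameters sit in the deeper 80-filter layers.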

The ConvLSTM obtains the temporal information between frames based on the spatial information extracted by the 3DCNN. A two-layer ConvLSTM is deployed in the proposed algorithm, with 3 × 3 convolutional kernels, stride 1 × 1, and 256 and 384 filters in the two layers, respectively. The output of the upper ConvLSTM layer is down-sampled by 2D max pooling and then flattened into a 1D tensor, which is regarded as the long-term spatiotemporal feature for each vibration frequency. This 1D tensor is then connected to 11 nodes by a fully connected (FC) layer, and the softmax layer gives the probability of each class.

The schematic diagram of multi-modal fusion is shown in Figure 6. The softmax layer is used to calculate the probability distribution over the classes. The softmax function can be expressed as

$$
S_i = \frac{e^{x_i}}{\sum_{j=1}^{N} e^{x_j}} \tag{7}
$$

where $x$ is the output vector of the fully connected layer, whose dimension $N$ equals the number of classification categories, $x_i$ is the $i$th element of $x$, and $S_i$ is the softmax value of $x_i$, representing the probability that the input belongs to class $i$. The output vectors of the two softmax layers are the class probabilities predicted by the two single-mode networks, and the final prediction vector is the mean of the two. The two scores are combined according to fusion Formula (8):

$$
P = \frac{P_{\mathrm{RGB}} + P_{\mathrm{D}}}{2} \tag{8}
$$

where $P$ is the final prediction vector, and $P_{\mathrm{RGB}}$ and $P_{\mathrm{D}}$ are the prediction vectors of the RGB and depth modes, respectively.
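The softmax and the averaging in fusion Formula (8) amount to a simple late (decision-level) fusion. The pure-Python sketch below computes the softmax of each mode's FC output and averages the two probability vectors. Subtracting the maximum logit before exponentiating is a standard numerical-stability step that leaves the result unchanged; the logits in the example are made up for illustration and the function names are hypothetical.

```python
import math


def softmax(x):
    """Softmax; shifting by max(x) avoids overflow without changing the output."""
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(logits_rgb, logits_depth):
    """Average the RGB and depth class-probability vectors; return (P, argmax)."""
    p_rgb, p_d = softmax(logits_rgb), softmax(logits_depth)
    if len(p_rgb) != len(p_d):
        raise ValueError("both modes must predict the same classes")
    p = [(a + b) / 2.0 for a, b in zip(p_rgb, p_d)]
    predicted = max(range(len(p)), key=p.__getitem__)
    return p, predicted


# Made-up FC outputs for 11 classes: RGB slightly favours class 4, depth clearly favours class 3.
logits_rgb = [0.0] * 11
logits_rgb[3], logits_rgb[4] = 2.0, 2.1
logits_depth = [0.0] * 11
logits_depth[3] = 3.0
p, predicted = fuse(logits_rgb, logits_depth)
print(predicted)  # 3: the confident depth prediction outweighs the marginal RGB one
```

Averaging probabilities rather than picking one mode lets a confident mode override an uncertain one, which is one way the depth mode can compensate when the RGB input is degraded, for example by unstable ambient light.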

4. Discussion and Conclusions

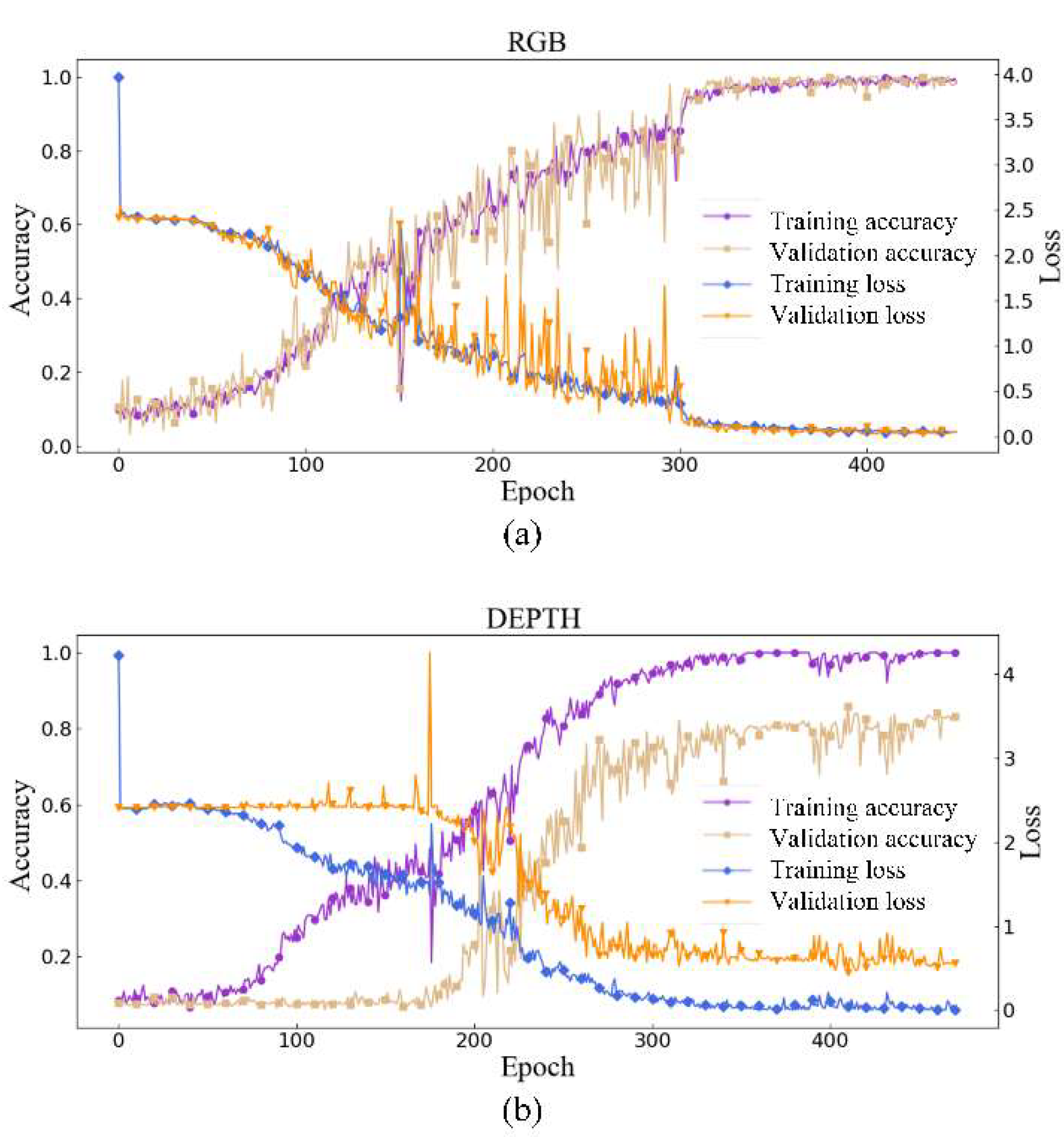

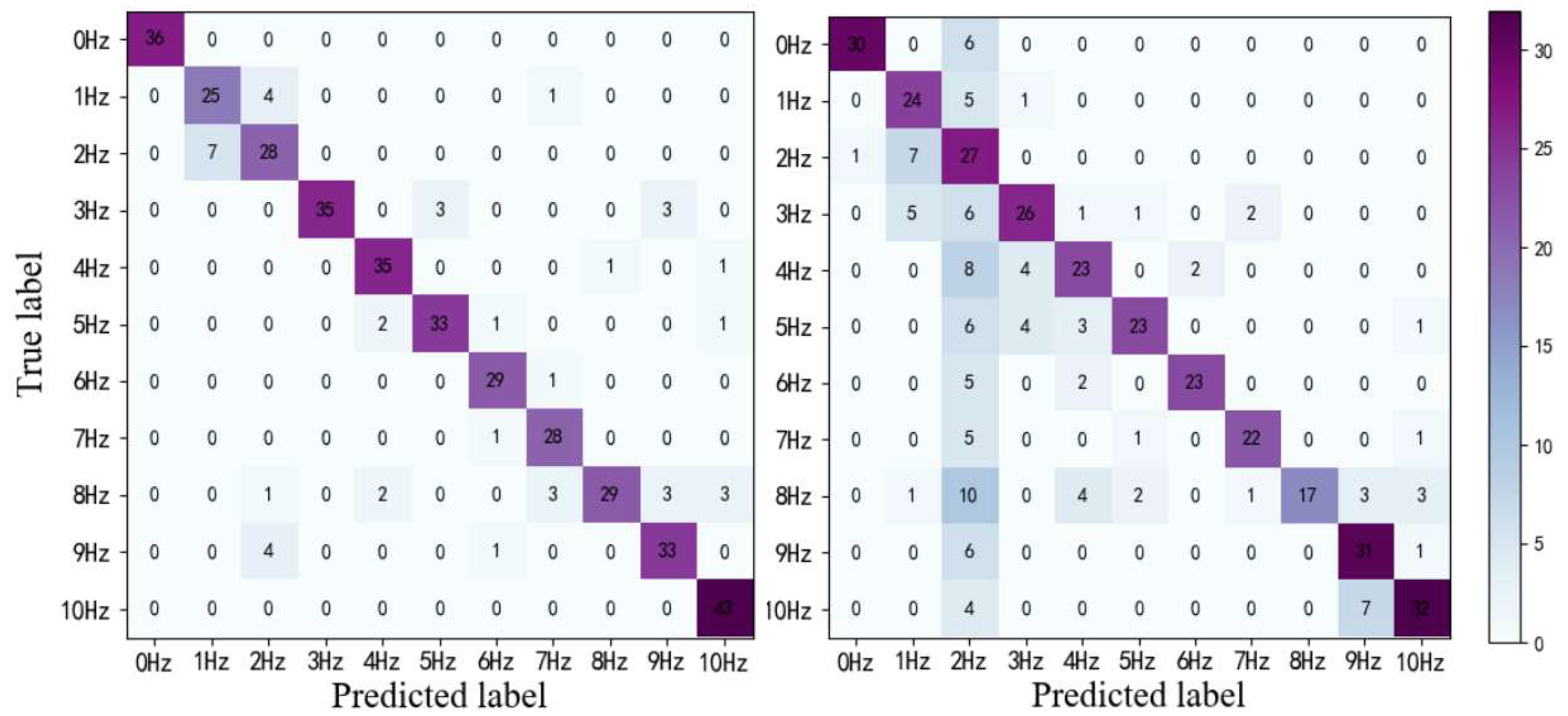

This work addresses the challenging task of monitoring low-frequency vibration with non-contact video-based methods. To this end, we have proposed a low-frequency vibration visual monitoring system based on a multi-modal 3DCNN-ConvLSTM. In experiments with different objects vibrating at different frequencies in the experimental environment described above, the proposed method achieved a vibration monitoring accuracy of 93%.

Compared with traditional image-based methods, our method needs no extra image processing or signal transformation and feeds the collected images directly into the network for model training and testing. This could benefit real-time vibration detection. At the same time, the whole hardware setup can be built and deployed quickly and conveniently. Because frame images record vibration in space, we use an RGB-D camera to add depth-mode information about the spatial characteristics of the vibration, which further improves monitoring accuracy.

Visual information can be obtained quickly and easily, especially as the performance of hardware devices improves. This suggests that visual vibration monitoring can be widely applied to precision instruments, human health, bridge monitoring and other areas. Moreover, the method could be transplanted to portable equipment to monitor low-frequency vibration environments.

We acknowledge the remaining drawbacks, and our future research may focus on two aspects: (1) enriching the vibration data with a variety of complex scenarios to gradually improve the robustness of the network model; and (2) reducing the complexity of the network while maintaining detection accuracy, so that the model can run faster or on devices with ordinary performance.