Analysis of the Matchability of Reference Imagery for Aircraft Based on Regional Scene Perception

Abstract

1. Introduction

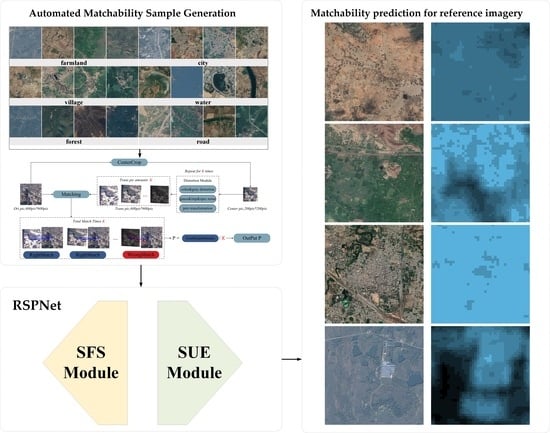

- An automated matchability sample generation method was constructed for image matchability analysis. To address the lack of samples with atypical structural features across multiple task scenarios, this method uses simulation technology to generate samples automatically while taking the matching process into account.

- An image matchability analysis network based on regional scene perception, called RSPNet, was proposed. The network incorporates two scene-matching criteria, feature saliency and feature uniqueness, and realizes end-to-end matchability evaluation of reference imagery by perceiving scene-level features.

- We conducted experiments on the WHU-SCENE-MATCHING dataset and compared our method with classical algorithms and deep learning methods. The results show that our method outperforms the other algorithms; for example, it achieved an MAE of 0.6581, compared with 1.0234 for the strongest deep learning baseline (DeepLabV3+).
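One way to picture simulation-based labelling of matchability is sketched below. This is a minimal illustration under stated assumptions, not the authors' generator: it crops a "real-time" patch from the reference image, perturbs it with a random gain and Gaussian noise (a hypothetical degradation model), relocates it by zero-mean normalized cross-correlation (NCC), and counts a trial as successful when the position error is below 3 pixels, the correctness criterion used in the experiments. The function names `ncc_match` and `simulate_matchability` and all default parameters are hypothetical.

```python
import numpy as np


def ncc_match(search: np.ndarray, template: np.ndarray) -> tuple[int, int]:
    """Return the top-left (row, col) in `search` that maximizes zero-mean
    normalized cross-correlation (NCC) with `template`."""
    t = template - template.mean()
    t_norm = np.sqrt((t * t).sum())
    # All template-sized windows of the search image: shape (H', W', th, tw).
    win = np.lib.stride_tricks.sliding_window_view(search, template.shape)
    w = win - win.mean(axis=(-2, -1), keepdims=True)
    num = (w * t).sum(axis=(-2, -1))
    den = np.sqrt((w * w).sum(axis=(-2, -1))) * t_norm
    # Textureless windows (zero variance) get -inf so they are never preferred.
    score = np.where(den > 0, num / np.where(den > 0, den, 1.0), -np.inf)
    r, c = np.unravel_index(np.argmax(score), score.shape)
    return int(r), int(c)


def simulate_matchability(reference, center, patch=32, noise_sigma=10.0,
                          trials=20, max_err=3.0, rng=None) -> float:
    """Hypothetical label generator: fraction of simulated matches whose
    position error is below `max_err` pixels."""
    rng = np.random.default_rng(rng)
    ref = np.asarray(reference, dtype=float)
    r0, c0 = center[0] - patch // 2, center[1] - patch // 2
    true_patch = ref[r0:r0 + patch, c0:c0 + patch]
    hits = 0
    for _ in range(trials):
        # Simulated real-time image: random gain plus additive Gaussian noise.
        sensed = (true_patch * rng.uniform(0.8, 1.2)
                  + rng.normal(0.0, noise_sigma, true_patch.shape))
        r, c = ncc_match(ref, sensed)
        hits += int(np.hypot(r - r0, c - c0) < max_err)
    return hits / trials


# Usage on three synthetic 64x64 reference images.
rng = np.random.default_rng(0)
textured = rng.uniform(0, 255, size=(64, 64))                 # salient and unique
stripes = np.tile((np.arange(64) % 8 < 4) * 255.0, (64, 1))  # salient but repetitive
flat = np.full((64, 64), 128.0)                               # no salient structure
for name, img in [("textured", textured), ("stripes", stripes), ("flat", flat)]:
    print(name, simulate_matchability(img, (32, 32), trials=5, rng=1))
```

The three inputs loosely mirror the two criteria named for RSPNet: the flat patch lacks salient structure, and the striped patch is salient but not unique, so matching locks onto an identical stripe period elsewhere. Both should therefore score near zero, whereas the random texture should score near one.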

2. Related Works

2.1. Classical Methods

2.2. Matchability Analysis Based on Deep Learning

3. Network Architecture for Image Matchability Analysis Based on Regional Scene Perception

3.1. An Automated Matchability Sample Generation Method

3.2. RSPNet

3.2.1. Saliency Analysis Module

3.2.2. Uniqueness Analysis Module

3.2.3. Loss Function

4. Experiment and Analysis

4.1. Datasets

4.2. Experimental Design

- Comparative experiment with classical methods: the classical indicators described in [41], namely image variance, edge density, and phase correlation length, were applied to analyze image matchability. The threshold parameters for each indicator were set based on the related literature and extensive experimentation, and a match was considered correct when its matching error was less than 3 pixels.

- Comparative experiment with deep learning methods: the network architectures in [4,42,43], namely ResNet, DeepLabV3+, and AlexNet, were adopted for comparison. To adapt them to the matchability regression task in this paper, we modified the model structures following [23]: the number of fully connected layers was set to one, and the mean absolute error (MAE) was used as the loss function.
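The classical pipeline in the first comparison can be sketched as follows, assuming one scalar indicator per candidate area and a single tuned threshold. Image variance and a Sobel-based edge density are shown; phase correlation length is omitted because its exact definition in [41] is not reproduced here. The gradient threshold `grad_thresh=50.0` and all helper names are hypothetical; `is_correct_match` encodes the 3-pixel correctness criterion.

```python
import numpy as np


def image_variance(img: np.ndarray) -> float:
    """Gray-level variance of a reference patch; higher suggests more texture."""
    return float(np.var(np.asarray(img, dtype=float)))


def edge_density(img: np.ndarray, grad_thresh: float = 50.0) -> float:
    """Fraction of pixels whose Sobel gradient magnitude exceeds `grad_thresh`."""
    f = np.pad(np.asarray(img, dtype=float), 1, mode="edge")
    # 3x3 Sobel responses via array slicing (avoids a SciPy dependency).
    gx = (f[:-2, 2:] + 2 * f[1:-1, 2:] + f[2:, 2:]) - (f[:-2, :-2] + 2 * f[1:-1, :-2] + f[2:, :-2])
    gy = (f[2:, :-2] + 2 * f[2:, 1:-1] + f[2:, 2:]) - (f[:-2, :-2] + 2 * f[:-2, 1:-1] + f[:-2, 2:])
    return float((np.hypot(gx, gy) > grad_thresh).mean())


def predict_matchable(indicator: float, threshold: float) -> bool:
    """Classical decision rule: an area is deemed matchable if its indicator
    reaches a tuned threshold."""
    return indicator >= threshold


def is_correct_match(found, truth, max_err: float = 3.0) -> bool:
    """A match counts as correct when its position error is below 3 pixels."""
    return bool(np.hypot(found[0] - truth[0], found[1] - truth[1]) < max_err)


# Usage: a vertical step edge versus a flat patch.
step = np.zeros((16, 16))
step[:, 8:] = 255.0
flat = np.full((16, 16), 128.0)
print(image_variance(step), edge_density(step))  # 16256.25 0.125
print(image_variance(flat), edge_density(flat))  # 0.0 0.0
```

Each indicator reduces an area to one number, which is why such methods struggle with the repetitive-but-textured scenes that a uniqueness criterion is meant to catch: a striped patch can have high variance and high edge density while still being ambiguous to match.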

4.3. Parameter Settings

4.4. Evaluation Indicators

4.5. Experimental Results

4.5.1. Comparative Experiments with Conventional Methods

4.5.2. Comparative Experiments with Deep Learning Methods

5. Discussion

5.1. Ablation Experiment

5.2. Impact Analysis of Regional Scenarios on Matchability

5.3. Limitations

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Wang, J.Z. Research on Key Technologies of Scene Matching Areas Selection of Cruise Missile. Master’s Thesis, National University of Defense Technology, Changsha, China, 2015.

2. Lu, Y.; Xue, Z.; Xia, G.-S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

3. Leng, X.F. Research on the Key Technology for Scene Matching Aided Navigation System Based on Image Features. Ph.D. Thesis, Nanjing University of Aeronautics and Astronautics, Nanjing, China, 2007.

4. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

5. Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned Aircraft Systems in Remote Sensing and Scientific Research: Classification and Considerations of Use. Remote Sens. 2012, 4, 1671–1692.

6. Shen, L.; Bu, Y. Research on Matching-Area Suitability for Scene Matching Aided Navigation. Acta Aeronaut. Astronaut. Sin. 2010, 31, 553–563.

7. Jia, D.; Zhu, N.D.; Yang, N.H.; Wu, S.; Li, Y.X.; Zhao, M.Y. Image matching methods. J. Image Graph. 2019, 24, 677–699.

8. Zhao, C.H.; Zhou, Z.H. Review of scene matching visual navigation for unmanned aerial vehicles. Sci. Sin. Inf. 2019, 49, 507–519.

9. Johnson, M. Analytical development and test results of acquisition probability for terrain correlation devices used in navigation systems. In Proceedings of the 10th Aerospace Sciences Meeting, San Diego, CA, USA, 17–19 January 1972; p. 122.

10. Zhang, X.; He, Z.; Liang, Y.; Zeng, P. Selection method for scene matching area based on information entropy. In Proceedings of the 2012 Fifth International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 28–29 October 2012; Volume 1, pp. 364–368.

11. Cao, F.; Yang, X.G.; Miao, D.; Zhang, Y.P. Study on reference image selection roles for scene matching guidance. Appl. Res. Comput. 2005, 5, 137–139.

12. Yang, X.; Cao, F.; Huang, X. Reference image preparation approach for scene matching simulation. J. Syst. Simul. 2010, 22, 850–852.

13. Pang, S.N.; Kim, H.C.; Kim, D.; Bang, S.Y. Prediction of the suitability for image-matching based on self-similarity of vision contents. Image Vis. Comput. 2004, 22, 355–365.

14. Wei, D. Research on SAR Image Matching. Master’s Thesis, Huazhong University of Science and Technology, Wuhan, China, 2011.

15. Ju, X.N.; Guo, W.P.; Sun, J.Y.; Gao, J. Matching probability metric for remote sensing image based on interest points. Opt. Precis. Eng. 2014, 22, 1071–1077.

16. Yang, C.H.; Cheng, Y.Y. Support Vector Machine for Scene Matching Area Selection. J. Tongji Univ. Nat. Sci. 2009, 37, 690–695.

17. Zhang, Y.Y.; Su, J. SAR Scene Matching Area Selection Based on Multi-Attribute Comprehensive Analysis. J. Proj. Rocket. Missiles Guid. 2016, 36, 104–108.

18. Xu, X.; Tang, J. Selection for matching area in terrain aided navigation based on entropy-weighted grey correlation decision-making. J. Chin. Inert. Technol. 2015, 23, 201–206.

19. Cai, T.; Chen, X. Selection criterion based on analytic hierarchy process for matching region in gravity aided INS. J. Chin. Inert. Technol. 2013, 21, 93–96.

20. Luo, H.; Chang, Z.; Yu, X.; Ding, Q. Automatic suitable-matching area selection method based on multi-feature fusion. Infrared Laser Eng. 2011, 40, 2037–2041.

21. Wang, J.Z. Research on Matching Area Selection of Remote Sensing Image Based on Convolutional Neural Networks. Master’s Thesis, Huazhong University of Science and Technology, Wuhan, China, 2016.

22. Sun, K. Scene Navigability Analysis Based on Deep Learning Model. Master’s Thesis, National University of Defense Technology, Changsha, China, 2019.

23. Yang, J. Suitable Matching Area Selection Method Based on Deep Learning. Master’s Thesis, Huazhong University of Science and Technology, Wuhan, China, 2019.

24. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.

25. Kortylewski, A.; Liu, Q.; Wang, A.; Sun, Y.; Yuille, A. Compositional convolutional neural networks: A robust and interpretable model for object recognition under occlusion. Int. J. Comput. Vis. 2021, 129, 736–760.

26. Cao, J.; Leng, H.; Lischinski, D.; Cohen-Or, D.; Tu, C.; Li, Y. ShapeConv: Shape-aware convolutional layer for indoor RGB-D semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 7068–7077.

27. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

28. Zhao, H.; Jia, J.; Koltun, V. Exploring self-attention for image recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10073–10082.

29. Sun, B.; Liu, G.; Yuan, Y. F3-Net: Multiview Scene Matching for Drone-Based Geo-Localization. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–11.

30. Arjovsky, M.; Chintala, S.; Bottou, L. Wasserstein generative adversarial networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 214–223.

31. Tareen, S.A.K.; Saleem, Z. A comparative analysis of SIFT, SURF, KAZE, AKAZE, ORB, and BRISK. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies, Sukkur, Pakistan, 3–4 March 2018; pp. 1–10.

32. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

33. Sedaghat, A.; Ebadi, H. Distinctive Order Based Self-Similarity Descriptor for Multi-Sensor Remote Sensing Image Matching. ISPRS J. Photogramm. Remote Sens. 2015, 108, 62–71.

34. Yang, X.G.; Zuo, S. Integral Experiment and Simulation System for Image Matching. J. Syst. Simul. 2010, 22, 1360–1364.

35. Ling, Z.G.; Qu, S.J.; He, Z.J. Suitability analysis on scene matching aided navigation based on CR-DSmT. Chin. Sci. Pap. Online 2015, 8, 1553–1566.

36. Jiang, B.C.; Chen, Y.Y. A Rule of Selecting Scene Matching Area. J. Tongji Univ. Nat. Sci. 2007, 35, 4.

37. Wang, W.; Lai, Q.; Fu, H.; Shen, J.; Ling, H.; Yang, R. Salient Object Detection in the Deep Learning Era: An In-Depth Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3239–3259.

38. Borji, A.; Cheng, M.-M.; Hou, Q.; Jiang, H.; Li, J. Salient object detection: A survey. Comput. Vis. Media 2019, 5, 117–150.

39. Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

40. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2011–2023.

41. Liu, Z.H.; Wang, H. Research on Selection for Scene Matching Area. Comput. Technol. Dev. 2013, 23, 128–133.

42. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

43. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

| Methods | NMA | NUA | PMA | PUA |
|---|---|---|---|---|
| Image Variance | 551 | 3288 | 0.8182 | 0.4579 |
| Edge Density | 455 | 3384 | 0.8095 | 0.4693 |
| Phase Correlation Length | 401 | 3438 | 0.7743 | 0.4764 |
| RSPNet (ours) | 607 | 3232 | 0.9156 | 0.4334 |

| Methods | MAE | MSE | RMSE | R² |
|---|---|---|---|---|
| ResNet | 1.075 | 1.8294 | 1.3526 | 0.8117 |
| DeepLabV3+ | 1.0234 | 1.6545 | 1.2863 | 0.8291 |
| AlexNet | 1.0787 | 2.0960 | 1.4478 | 0.7835 |
| RSPNet (ours) | 0.6581 | 0.8138 | 0.9023 | 0.9154 |
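The regression comparison reports MAE (also the training loss of the baselines), MSE, RMSE, and a fifth column that is read here as the coefficient of determination, R² = 1 − MSE/Var(y). The sketch below computes these metrics and checks that reading from the reported numbers alone: if the fifth column is R², then MSE/(1 − R²) recovers the variance of the test labels, which must be the same for every method evaluated on the same test set. The function name and the toy labels are hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred) -> dict:
    """MAE, MSE, RMSE and R^2 between true and predicted matchability scores."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    return {
        "MAE": float(np.mean(np.abs(err))),
        "MSE": mse,
        "RMSE": mse ** 0.5,
        "R2": 1.0 - mse / float(np.var(y_true)),
    }


print(regression_metrics([1, 2, 3, 4], [1, 2, 3, 5]))

# (MSE, fifth-column value) pairs copied from the comparison table.
rows = {"ResNet": (1.8294, 0.8117), "DeepLabV3+": (1.6545, 0.8291),
        "AlexNet": (2.0960, 0.7835), "RSPNet": (0.8138, 0.9154)}
for name, (mse, r2) in rows.items():
    # If the column is R^2, this is Var(y_true) of the shared test set.
    print(f"{name:10s} implied Var(y) = {mse / (1 - r2):.3f}")
```

All four rows give an implied label variance between roughly 9.6 and 9.8, which supports the R² reading; the RMSE column likewise matches √MSE (for example, √1.8294 ≈ 1.3526).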

| Methods | Accuracy | F1 Score | ROC AUC | PR AUC |
|---|---|---|---|---|
| ResNet | 0.6253 | 0.4108 | 0.6108 | 0.7254 |
| DeepLabV3+ | 0.9249 | 0.9220 | 0.9250 | 0.9392 |
| AlexNet | 0.8557 | 0.8402 | 0.8531 | 0.8929 |
| RSPNet (ours) | 0.9591 | 0.9567 | 0.9585 | 0.9700 |
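Classification metrics of this kind can be computed from per-area labels and scores as sketched below. This is an illustration only: how the paper converts matchability scores into binary matchable/unmatchable labels is not described in this section, so the threshold of 0.5 and the example labels and scores are hypothetical. ROC AUC uses the rank (Mann-Whitney) formulation and PR AUC is computed as average precision. scikit-learn's `average_precision_score` groups tied scores into a single threshold, so the two can differ when scores tie.

```python
import numpy as np


def classification_metrics(y_true, scores, threshold: float = 0.5) -> dict:
    """Accuracy and F1 at a fixed threshold, plus threshold-free ROC AUC and
    PR AUC (computed as average precision)."""
    y = np.asarray(y_true).astype(bool)
    s = np.asarray(scores, dtype=float)
    pred = s >= threshold
    tp = int(np.sum(pred & y))
    fp = int(np.sum(pred & ~y))
    fn = int(np.sum(~pred & y))
    f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    # ROC AUC = P(score of a random positive > score of a random negative), ties count 1/2.
    diff = s[y][:, None] - s[~y][None, :]
    roc_auc = float(np.mean((diff > 0) + 0.5 * (diff == 0)))
    # Average precision: mean precision at the rank of each positive
    # (tied scores are ranked in input order by the stable sort).
    hits = y[np.argsort(-s, kind="stable")]
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    pr_auc = float(precision_at_k[hits].mean())
    return {"Accuracy": float(np.mean(pred == y)), "F1": f1,
            "ROC AUC": roc_auc, "PR AUC": pr_auc}


print(classification_metrics([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

Accuracy and F1 depend on the chosen threshold, whereas ROC AUC and PR AUC summarize ranking quality over all thresholds, which is why a model can have a low F1 but a moderate PR AUC, as in the ResNet row.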

| Methods | MAE | MSE | RMSE | R² |
|---|---|---|---|---|
| Backbone | 0.7296 | 0.9747 | 0.9872 | 0.8994 |
| Backbone + SFS | 0.7070 | 0.9362 | 0.9671 | 0.9033 |
| Backbone + SUE | 0.6943 | 0.9208 | 0.9596 | 0.9048 |
| RSPNet | 0.6581 | 0.8138 | 0.9023 | 0.9154 |
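The ablation rows can be read as relative MAE reductions over the backbone; the snippet below only performs that arithmetic on the reported values. SFS and SUE are not expanded in this section and presumably denote the saliency and uniqueness components described in Sections 3.2.1 and 3.2.2.

```python
# MAE values copied from the ablation table.
mae = {"Backbone": 0.7296, "Backbone + SFS": 0.7070,
       "Backbone + SUE": 0.6943, "RSPNet": 0.6581}
base = mae["Backbone"]
for name, value in mae.items():
    reduction = 100 * (base - value) / base  # percent MAE reduction vs. backbone
    print(f"{name:15s} MAE {value:.4f}  reduction {reduction:.2f}%")
```

The full model's reduction (about 9.8%) is larger than the sum of the two single-component reductions (about 3.1% and 4.8%), which suggests that the two components are complementary on this test set.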

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, X.; Zhang, G.; Cui, H.; Ma, J.; Wang, W. Analysis of the Matchability of Reference Imagery for Aircraft Based on Regional Scene Perception. Remote Sens. 2023, 15, 4353. https://doi.org/10.3390/rs15174353

Li X, Zhang G, Cui H, Ma J, Wang W. Analysis of the Matchability of Reference Imagery for Aircraft Based on Regional Scene Perception. Remote Sensing. 2023; 15(17):4353. https://doi.org/10.3390/rs15174353

Chicago/Turabian Style: Li, Xin, Guo Zhang, Hao Cui, Jinhao Ma, and Wei Wang. 2023. "Analysis of the Matchability of Reference Imagery for Aircraft Based on Regional Scene Perception" Remote Sensing 15, no. 17: 4353. https://doi.org/10.3390/rs15174353