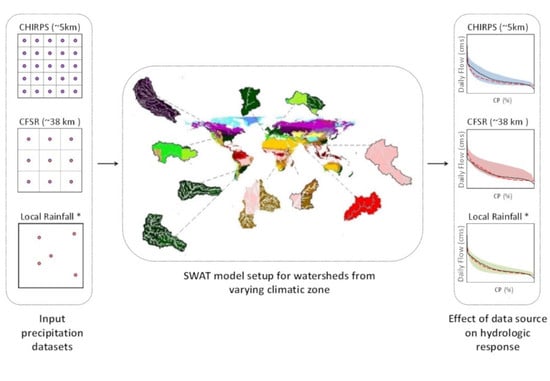

A Comparative Evaluation of the Performance of CHIRPS and CFSR Data for Different Climate Zones Using the SWAT Model

Abstract

1. Introduction

2. Study Area

3. Materials and Methods

3.1. Rainfall Datasets

3.1.1. Gauge Rainfall Data

3.1.2. Climate Forecast System Reanalysis (CFSR) Data

3.1.3. CHIRPS Data

3.1.4. Evaluation Statistics Used

3.2. Hydrological Modelling

3.2.1. SWAT Model Setup

3.2.2. Calibration Process

4. Results and Discussion

4.1. Evaluation of Gridded Rainfall Products

4.2. Streamflow Evaluation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References


| Code | Watershed | Köppen Climate | Min Elevation (m) | Max Elevation (m) | Mean Elevation (m) | Area (km²) | Annual Rainfall (mm) | Major Land Use |
|---|---|---|---|---|---|---|---|---|
| 01_US_IOW | Iowa | Dfa | 191 | 396 | 303 | 32,374 | 890 | Agriculture |
| 02_US_SAL | Salt | Csa, Csb, BSk | 965 | 2609 | 1719 | 11,152 | 520 | Forest, Rangeland |
| 03_BR_CHA | Chapecó | Cfa | 222 | 1377 | 779 | 8297 | 1953 | Forest, Agriculture |
| 04_BR_CAN | Canoas | Cfa | 648 | 1822 | 976 | 10,125 | 1647 | Forest |
| 05_SP_EO | Eo | Cfb | 16 | 1107 | 568 | 712 | 1240 | Forest |
| 06_SP_TAG | Tagus | Csb, Cfb | 723 | 1932 | 1114 | 3274 | 630 | Forest |
| 07_ET_TAN | Lake Tana | Aw, Cwb | 1777 | 4112 | 2060 | 14,950 | 1280 | Agriculture |
| 08_ET_BEL | Beles | Aw, Cwb | 942 | 2729 | 1497 | 4330 | 1550 | Forest |
| 09_IN_BAI | Baitarani | Aw | 0 | 1100 | 330 | 11,129 | 1400 | Forest, Agriculture |
| 10_IN_MAN | Manimala | Am | 20 | 1257 | 856 | 789 | 2517 | Agriculture |

| Statistical Index | Unit | Equation | Perfect Score |
|---|---|---|---|
| Correlation coefficient (CC) | - | | 1 |
| Root mean squared error (RMSE) | mm | | 0 |
| Mean error (ME) | mm | | 0 |
| Relative bias (BIAS) | % | | 0 |
| Probability of detection (POD) | - | | 1 |
| False alarm ratio (FAR) | - | | 0 |
| Critical success index (CSI) | - | | 1 |
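The indices listed above can be illustrated with a minimal Python sketch. It assumes the textbook definitions of each statistic, with errors taken as estimate minus gauge (so a positive ME or BIAS means the gridded product overestimates rainfall). The function names, the sample values, and the 1 mm wet-day threshold are hypothetical choices for illustration and are not taken from the article.

```python
import math


def continuous_stats(gauge, estimate):
    """CC, RMSE, ME and relative BIAS for paired rainfall series (mm).

    Assumes equal-length, non-empty series, non-constant values, and a
    non-zero gauge total (otherwise CC or BIAS would divide by zero).
    """
    n = len(gauge)
    if n == 0 or n != len(estimate):
        raise ValueError("series must be non-empty and of equal length")
    g_mean = sum(gauge) / n
    e_mean = sum(estimate) / n
    cov = sum((g - g_mean) * (e - e_mean) for g, e in zip(gauge, estimate))
    g_var = sum((g - g_mean) ** 2 for g in gauge)
    e_var = sum((e - e_mean) ** 2 for e in estimate)
    errors = [e - g for g, e in zip(gauge, estimate)]  # estimate minus gauge
    return {
        "CC": cov / math.sqrt(g_var * e_var),
        "RMSE": math.sqrt(sum(err ** 2 for err in errors) / n),
        "ME": sum(errors) / n,
        "BIAS": 100.0 * sum(errors) / sum(gauge),
    }


def detection_stats(gauge, estimate, threshold=1.0):
    """POD, FAR and CSI from a wet/dry contingency count.

    `threshold` (mm) is a hypothetical wet-day cutoff chosen for this sketch.
    Assumes at least one hit so that no denominator is zero.
    """
    hits = misses = false_alarms = 0
    for g, e in zip(gauge, estimate):
        gauge_wet = g >= threshold
        estimate_wet = e >= threshold
        if gauge_wet and estimate_wet:
            hits += 1
        elif gauge_wet:
            misses += 1
        elif estimate_wet:
            false_alarms += 1
    return {
        "POD": hits / (hits + misses),
        "FAR": false_alarms / (hits + false_alarms),
        "CSI": hits / (hits + misses + false_alarms),
    }


# Illustrative values only (mm/day); not data from the study.
gauge_mm = [0.0, 5.0, 12.0, 0.0, 3.0]
product_mm = [0.0, 4.0, 15.0, 2.0, 0.0]
cont = continuous_stats(gauge_mm, product_mm)
det = detection_stats(gauge_mm, product_mm)
```

Under these definitions the three detection scores are linked by 1/CSI = 1/POD + 1/(1 − FAR) − 1, which gives a quick consistency check on tabulated values.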

| Code | Data | Description | Source |
|---|---|---|---|
| 01_US_IOW, 02_US_SAL | DEM | 30 m resolution map | US Geological Survey (USGS) National Elevation Dataset |
| | Land use map | Vector database | USGS National Land Cover Database (NLCD) and Cropland Data Layers (CDL) |
| | Soil map | 1:250,000-scale | Digital General Soil Map of the United States (STATSGO) |
| | Temperature data | 5 km resolution | National Centers for Environmental Information (NCEI) of the National Oceanic and Atmospheric Administration (NOAA) |
| 03_BR_CHA, 04_BR_CAN | DEM | 30 m resolution map | Empresa de Pesquisa Agropecuária e Extensão Rural de Santa Catarina (EPAGRI) |
| | Land use map | Vector database | MapBiomas Project |
| | Soil map | 1 km resolution map | Harmonized World Soil Database (HWSD) |
| | Temperature data | 38 km resolution | Climate Forecast System Reanalysis (CFSR) |
| 05_SP_EO, 06_SP_TAG | DEM | 25 m resolution map | Spanish National Geographic Institute (IGN) |
| | Land use map | Vector database | Corine Land Cover of year 2006 (CLC2006) |
| | Soil map | 1 km resolution map | Harmonized World Soil Database (HWSD) |
| | Temperature data | SPAIN02 v5 dataset (0.1°) | Spanish Meteorological Agency (AEMET) and University of Cantabria (UC) |
| 07_ET_TAN, 08_ET_BEL | DEM | 30 m resolution map | US Geological Survey (USGS) |
| | Land use map | Vector database | Ministry of Water, Irrigation and Electricity of Ethiopia (MoWIE) |
| | Soil map | 1 km resolution map | Harmonized World Soil Database (HWSD) |
| | Temperature data | Local stations | National Meteorology Agency of Ethiopia (NMA) |
| 09_IN_BAI, 10_IN_MAN | DEM | 90 m resolution map | USGS Shuttle Radar Topography Mission (SRTM) |
| | Land use map | Vector database | USGS Global Land Cover Characterization (GLCC) |
| | Soil map | 1 km resolution map | Harmonized World Soil Database (HWSD) |
| | Temperature data | IMD gridded data (1°) | India Meteorological Department (IMD) |

| Code | Source | Monthly CC | Monthly RMSE (mm) | Monthly ME (mm) | Monthly BIAS (%) | Daily CC | Daily RMSE (mm) | Daily ME (mm) | Daily CSI | Daily FAR | Daily POD |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 01_US_IOW | CFSR | 0.78 | 33.19 | 2.59 | 3.50 | 0.54 | 4.97 | 0.09 | 0.78 | 0.13 | 0.88 |
| | CHIRPS | 0.99 | 6.46 | −0.35 | −0.47 | 0.70 | 4.09 | −0.01 | 0.54 | 0.09 | 0.57 |
| 02_US_MIN | CFSR | 0.89 | 22.93 | 6.56 | 15.15 | 0.72 | 3.18 | 0.22 | 0.59 | 0.09 | 0.63 |
| | CHIRPS | 0.97 | 12.08 | −3.89 | −8.97 | 0.61 | 3.26 | −0.13 | 0.42 | 0.13 | 0.45 |
| 03_BR_CHA | CFSR | 0.67 | 69.67 | −3.21 | −1.95 | 0.47 | 10.58 | −0.11 | 0.73 | 0.19 | 0.87 |
| | CHIRPS | 0.97 | 20.27 | 1.73 | 1.05 | 0.62 | 9.35 | 0.06 | 0.54 | 0.12 | 0.58 |
| 04_BR_CAN | CFSR | 0.74 | 55.28 | 18.63 | 13.33 | 0.47 | 9.27 | 0.61 | 0.77 | 0.20 | 0.96 |
| | CHIRPS | 0.96 | 18.57 | −1.16 | −0.83 | 0.55 | 8.31 | −0.04 | 0.52 | 0.12 | 0.57 |
| 05_SP_EO | CFSR | 0.91 | 30.86 | 6.15 | 5.95 | 0.74 | 4.62 | 0.20 | 0.83 | 0.06 | 0.88 |
| | CHIRPS | 0.84 | 40.95 | 11.31 | 10.94 | 0.42 | 9.66 | 0.37 | 0.31 | 0.02 | 0.31 |
| 06_SP_TAG | CFSR | 0.83 | 26.70 | −13.72 | −25.72 | 0.64 | 2.93 | −0.45 | 0.70 | 0.05 | 0.73 |
| | CHIRPS | 0.80 | 28.97 | −12.89 | −24.17 | 0.55 | 3.61 | −0.42 | 0.36 | 0.04 | 0.36 |
| 07_ET_TAN | CFSR | 0.95 | 57.04 | 13.04 | 11.75 | 0.69 | 5.07 | 0.43 | 0.76 | 0.19 | 0.94 |
| | CHIRPS | 0.98 | 38.60 | −16.46 | −14.83 | 0.63 | 5.58 | −0.54 | 0.54 | 0.07 | 0.56 |
| 08_ET_BEL | CFSR | 0.92 | 126.35 | 44.22 | 31.72 | 0.54 | 8.66 | 1.45 | 0.78 | 0.14 | 0.78 |
| | CHIRPS | 0.96 | 50.11 | −28.62 | −20.53 | 0.62 | 6.07 | −0.94 | 0.60 | 0.07 | 0.60 |
| 09_IN_BAI | CFSR | 0.90 | 99.72 | 34.77 | 27.43 | 0.57 | 9.42 | 1.14 | 0.77 | 0.16 | 0.91 |
| | CHIRPS | 0.95 | 42.68 | −2.45 | −1.94 | 0.53 | 8.53 | −0.08 | 0.66 | 0.16 | 0.76 |
| 10_IN_MAN | CFSR | 0.78 | 172.37 | 61.45 | 24.95 | 0.49 | 16.13 | 2.02 | 0.73 | 0.26 | 0.97 |
| | CHIRPS | 0.83 | 133.55 | 4.24 | 1.72 | 0.30 | 18.78 | 0.14 | 0.59 | 0.21 | 0.70 |

| Code | PD0 Local | PD0 CFSR | PD0 CHIRPS | PD1 Local | PD1 CFSR | PD1 CHIRPS | PD10 Local | PD10 CFSR | PD10 CHIRPS |
|---|---|---|---|---|---|---|---|---|---|
| 01_US_IOW | 7569 | 7671 | 4731 | 3239 | 3529 | 2989 | 717 | 715 | 779 |
| 02_US_MIN | 6347 | 4435 | 3305 | 2413 | 2188 | 2001 | 287 | 472 | 318 |
| 03_BR_CHA | 6737 | 7268 | 4446 | 4855 | 4496 | 3533 | 1640 | 1623 | 1741 |
| 04_BR_CAN | 7258 | 8758 | 4671 | 4665 | 5117 | 3549 | 1463 | 1481 | 1547 |
| 05_SP_EO | 8455 | 7891 | 2667 | 4334 | 4321 | 2225 | 1017 | 1083 | 1125 |
| 06_SP_TAG | 8140 | 6263 | 3099 | 2863 | 2375 | 1864 | 421 | 265 | 361 |
| 07_ET_TAN | 5104 | 5938 | 3076 | 4120 | 4128 | 2650 | 1319 | 1495 | 1162 |
| 08_ET_BEL | 4901 | 5120 | 3342 | 4365 | 4146 | 3152 | 1742 | 2232 | 1457 |
| 09_IN_BAI | 5467 | 5957 | 4911 | 4001 | 3867 | 3887 | 1210 | 1907 | 1394 |
| 10_IN_MAN | 5951 | 7808 | 5281 | 4821 | 6473 | 4625 | 2278 | 2901 | 2379 |

| Watershed | Rainfall Source | Calibration NSE | Calibration KGE | Calibration PBIAS | Validation NSE | Validation KGE | Validation PBIAS |
|---|---|---|---|---|---|---|---|
| 01_US_IOW | Local | 0.83 | 0.82 | 15.65 | 0.77 | 0.79 | 9.33 |
| | CFSR | 0.59 | 0.78 | 8.82 | 0.51 | 0.73 | −5.01 |
| | CHIRPS | 0.85 | 0.90 | 5.30 | 0.78 | 0.82 | 6.16 |
| 02_US_SAL | Local | 0.55 | 0.68 | 15.94 | −1.43 | 0.01 | −12.39 |
| | CFSR | −0.46 | 0.11 | −53.94 | −3.91 | −0.72 | −65.56 |
| | CHIRPS | 0.54 | 0.77 | −3.20 | 0.61 | 0.61 | 6.59 |
| 03_BR_CHA | Local | 0.80 | 0.88 | 3.80 | 0.63 | 0.62 | 31.9 |
| | CFSR | 0.26 | 0.64 | −1.90 | −0.40 | 0.24 | 40.3 |
| | CHIRPS | 0.83 | 0.91 | 0.40 | 0.40 | 0.20 | 3.2 |
| 04_BR_CAN | Local | 0.81 | 0.87 | −3.00 | 0.57 | 0.53 | 34.1 |
| | CFSR | 0.13 | 0.58 | −11.90 | 0.02 | 0.36 | 32.1 |
| | CHIRPS | 0.80 | 0.88 | 0.60 | −0.95 | 0.12 | 1.4 |
| 05_SP_EO | Local | 0.87 | 0.93 | 2.06 | 0.84 | 0.81 | 5.87 |
| | CFSR | 0.83 | 0.90 | 2.04 | 0.64 | 0.76 | −11.45 |
| | CHIRPS | 0.74 | 0.77 | −18.55 | 0.73 | 0.79 | −7.77 |
| 06_SP_TAG | Local | 0.66 | 0.74 | 10.54 | 0.68 | 0.75 | 17.09 |
| | CFSR | 0.30 | 0.58 | 27.55 | 0.30 | 0.62 | 6.13 |
| | CHIRPS | 0.09 | 0.52 | 24.81 | 0.62 | 0.64 | 31.39 |
| 07_ET_TAN | Local | 0.69 | 0.57 | 12.46 | 0.43 | 0.26 | 43.97 |
| | CFSR | 0.77 | 0.57 | 21.42 | 0.21 | 0.13 | 59.16 |
| | CHIRPS | 0.61 | 0.46 | 21.09 | 0.32 | 0.14 | 55.05 |
| 08_ET_BEL | Local | 0.46 | 0.55 | −37.73 | 0.54 | 0.54 | −42.65 |
| | CFSR | 0.57 | 0.36 | −50.27 | 0.75 | 0.60 | −27.99 |
| | CHIRPS | 0.47 | 0.32 | −61.90 | 0.73 | 0.73 | −23.45 |
| 09_IN_BAI | Local | 0.82 | 0.89 | −6.35 | 0.68 | 0.76 | 1.6 |
| | CFSR | −0.31 | −0.21 | −116.2 | −0.43 | −0.32 | −117.2 |
| | CHIRPS | 0.77 | 0.88 | 1.15 | 0.64 | 0.62 | 13.4 |
| 10_IN_MAN | Local | 0.58 | 0.78 | −0.04 | 0.62 | 0.79 | 0.52 |
| | CFSR | 0.70 | 0.65 | 0.05 | 0.60 | 0.67 | 0.40 |
| | CHIRPS | 0.70 | 0.79 | 0.02 | 0.71 | 0.69 | 0.27 |

| Watershed | Rainfall Source | Calibration NSE | Calibration KGE | Calibration PBIAS | Validation NSE | Validation KGE | Validation PBIAS |
|---|---|---|---|---|---|---|---|
| 01_US_IOW | Local | 0.30 | 0.64 | 15.67 | 0.35 | 0.67 | 9.23 |
| | CFSR | 0.05 | 0.55 | 9.03 | −0.27 | 0.45 | −4.88 |
| | CHIRPS | 0.44 | 0.73 | 5.38 | 0.42 | 0.72 | 6.05 |
| 02_US_SAL | Local | 0.04 | 0.53 | 16.38 | −4.02 | −0.88 | −12.41 |
| | CFSR | −2.52 | −0.35 | −53.77 | −11.90 | −2.08 | −65.58 |
| | CHIRPS | −0.86 | 0.27 | −2.57 | −0.39 | 0.38 | 6.94 |
| 03_BR_CHA | Local | −0.9 | 0.03 | 10.30 | −0.91 | −0.18 | 65.70 |
| | CFSR | −0.79 | 0.28 | −1.00 | −1.47 | −0.02 | 3.90 |
| | CHIRPS | −0.46 | 0.36 | 11.10 | −1.29 | 0.00 | 13.10 |
| 04_BR_CAN | Local | 0.36 | 0.69 | −3.10 | −1.51 | 0.02 | 11.20 |
| | CFSR | −0.77 | 0.28 | −8.20 | −1.82 | 0.02 | 6.20 |
| | CHIRPS | 0.26 | 0.65 | −1.50 | −1.39 | 0.03 | 10.60 |
| 05_SP_EO | Local | 0.61 | 0.79 | 1.29 | 0.53 | 0.65 | 5.50 |
| | CFSR | 0.54 | 0.76 | 1.34 | −0.07 | 0.50 | −12.22 |
| | CHIRPS | −0.16 | 0.46 | −19.34 | 0.16 | 0.49 | −8.38 |
| 06_SP_TAG | Local | 0.44 | 0.71 | 10.73 | 0.50 | 0.70 | 17.70 |
| | CFSR | −0.01 | 0.49 | 28.10 | −0.29 | 0.41 | 6.80 |
| | CHIRPS | −0.39 | 0.37 | 25.04 | 0.08 | 0.50 | 31.84 |
| 07_ET_TAN | Local | 0.52 | 0.52 | 12.76 | 0.28 | 0.19 | 44.15 |
| | CFSR | 0.65 | 0.53 | 21.59 | 0.15 | 0.05 | 59.23 |
| | CHIRPS | 0.47 | 0.41 | 21.37 | 0.23 | 0.08 | 55.24 |
| 08_ET_BEL | Local | −0.33 | 0.37 | −39.81 | −0.59 | 0.23 | −42.11 |
| | CFSR | 0.11 | −0.73 | −52.80 | 0.29 | −0.73 | −27.90 |
| | CHIRPS | −0.32 | −0.73 | −63.91 | 0.14 | −0.73 | −23.12 |
| 09_IN_BAI | Local | −0.13 | 0.47 | −6.25 | −0.23 | 0.33 | 10.8 |
| | CFSR | −1.40 | −0.34 | −104 | −0.73 | −0.67 | −111 |
| | CHIRPS | 0.12 | 0.54 | −14.65 | 0.10 | 0.44 | 6.7 |
| 10_IN_MAN | Local | 0.19 | 0.61 | −4.5 | 0.54 | 0.76 | 6.5 |
| | CFSR | 0.17 | 0.46 | 20.2 | 0.34 | 0.37 | 2.7 |
| | CHIRPS | 0.22 | 0.54 | 5.5 | 0.47 | 0.41 | 1.8 |
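The NSE, KGE, and PBIAS scores reported for streamflow can be illustrated with a short Python sketch. It assumes the commonly used forms: Nash-Sutcliffe efficiency, the Kling-Gupta efficiency in its Gupta et al. (2009) decomposition, and percent bias computed as observed minus simulated (so a negative PBIAS indicates overestimated flow). Function names and the sample flows are hypothetical; whether the study's software used exactly these variants is an assumption.

```python
import math


def nse(observed, simulated):
    """Nash-Sutcliffe efficiency: 1 is a perfect fit, 0 matches the observed mean."""
    obs_mean = sum(observed) / len(observed)
    residual = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    spread = sum((o - obs_mean) ** 2 for o in observed)
    return 1.0 - residual / spread


def kge(observed, simulated):
    """Kling-Gupta efficiency from correlation, variability ratio and mean ratio."""
    n = len(observed)
    obs_mean = sum(observed) / n
    sim_mean = sum(simulated) / n
    obs_ss = sum((o - obs_mean) ** 2 for o in observed)
    sim_ss = sum((s - sim_mean) ** 2 for s in simulated)
    cov = sum((o - obs_mean) * (s - sim_mean) for o, s in zip(observed, simulated))
    r = cov / math.sqrt(obs_ss * sim_ss)   # linear correlation
    alpha = math.sqrt(sim_ss / obs_ss)     # ratio of standard deviations
    beta = sim_mean / obs_mean             # ratio of means
    return 1.0 - math.sqrt((r - 1.0) ** 2 + (alpha - 1.0) ** 2 + (beta - 1.0) ** 2)


def pbias(observed, simulated):
    """Percent bias; positive means the simulation underestimates total flow."""
    return 100.0 * sum(o - s for o, s in zip(observed, simulated)) / sum(observed)


# Illustrative flows only (m3/s); not data from the study.
obs_flow = [10.0, 20.0, 30.0, 40.0]
sim_flow = [12.0, 18.0, 33.0, 37.0]
scores = {
    "NSE": nse(obs_flow, sim_flow),
    "KGE": kge(obs_flow, sim_flow),
    "PBIAS": pbias(obs_flow, sim_flow),
}
```

Because NSE is unbounded below while KGE combines three separate error components, the two scores can rank the same simulation quite differently, which is worth keeping in mind when reading rows where NSE is strongly negative but KGE is only moderately low.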

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dhanesh, Y.; Bindhu, V.M.; Senent-Aparicio, J.; Brighenti, T.M.; Ayana, E.; Smitha, P.S.; Fei, C.; Srinivasan, R. A Comparative Evaluation of the Performance of CHIRPS and CFSR Data for Different Climate Zones Using the SWAT Model. Remote Sens. 2020, 12, 3088. https://doi.org/10.3390/rs12183088
