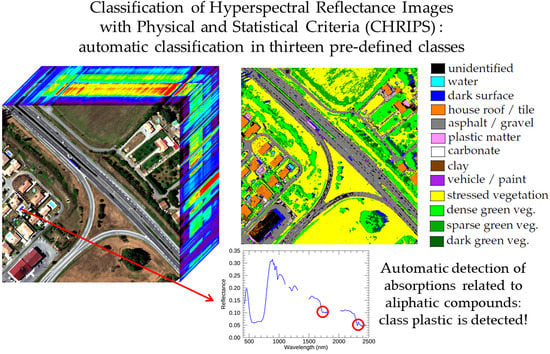

Classification of Hyperspectral Reflectance Images With Physical and Statistical Criteria

Abstract

1. Introduction

- The first objective is to perform a classification without requiring any expert knowledge from the user. For unsupervised methods, selecting the number of classes is not straightforward, even if some methods try to estimate it [42,43]. Methods based on metrics (SAM, SID) require thresholds to be tuned, a task that cannot be performed easily. For machine learning methods, training samples need to be selected for each class, but the spatial location of these training samples in the images is often unknown. The number of classes CHRIPS can detect is fixed. For each class, CHRIPS applies a set of criteria that depend on thresholds whose robust values were estimated once and for all from training samples covering as much of the spectral variability of each class as possible. Consequently, CHRIPS only requires a hyperspectral reflectance image as input.

- The second objective is that the classification method can be applied to hyperspectral images acquired at different spectral and spatial resolutions by common airborne hyperspectral sensors. Machine learning methods may be quite accurate when the trained model is applied to images acquired with the same sensor and at the same spatial resolution as the training samples. However, they may be less efficient when applied to images acquired with other sensors (transfer ability), because they are generally not trained on more than one sensor. The CHRIPS method is based on the computation of criteria that are robust to a change of sensor.

2. Overview of Classification Method CHRIPS

- dark surface
  - (1) dark green vegetation
  - (2) water
  - (3) unidentified dark surface
- material with specific absorptions
  - (4) plastic matter (aliphatic + aromatic)
  - (5) carbonate
  - (6) clay
- vegetation
  - (7) dense green vegetation
  - (8) sparse green vegetation
  - (9) stressed vegetation
- classes with dedicated indices
  - (10) house roof/tile
  - (11) asphalt
  - (12) vehicle/paint/metal surface
  - (13) non-carbonated gravel
- unidentified

3. Datasets

3.1. Presentation of Hyperspectral Datasets

- five Odin images (training),

- two HySpex images: Fauga (training) and Mauzac (test),

- one HyMap image: Garons (test),

- three AisaFENIX images: suburban Mauzac (test).

3.1.1. Odin Images

3.1.2. HySpex Images

3.1.3. HyMap Image

3.1.4. AisaFENIX Images

3.2. Selection of Training Samples

- design of optimal CHRIPS criteria from the training samples (see Section 5)

- application of CHRIPS to the set of images

- identification of misclassified pixels

- update of the set of training samples: addition of new training samples from misclassified pixels

3.3. Images and Ground Truth Used for Assessment

- Fauga (HySpex)

- Mauzac (HySpex)

- Garons (HyMap)

- suburban Mauzac (AisaFENIX): three images at 0.55 m, 2.2 m and 8.8 m spatial resolution

4. Pre-Processing: Correction of Spectral Data

4.1. Atmospheric Correction

4.2. Smoothing of Reflectance Spectra

4.3. Interpolation of Reflectance Spectra

5. Definition of CHRIPS Criteria

- dark surface (dark green vegetation, water, unidentified dark surface)

- material with specific absorptions (plastic matter, carbonate, clay),

- vegetation (dense green, sparse green, stressed),

- classes with dedicated indices (house roof, asphalt, vehicle, non-carbonated gravel).

5.1. Threshold Estimation

- Random selection of a criterion among all criteria.

- Random selection of a set of M real values in the range [0,1], indexed by m = 1…M. Typically, M = 10.

- Computation of M new candidate values for the threshold of the selected criterion, m = 1…M. These values are located between the centers of the two sets of training samples.

- Computation of classification performances. All criteria are applied to the training samples for each candidate threshold value, m = 0…M. The retained threshold is the one that minimizes the number of misclassified samples, and the threshold of the selected criterion is updated with this value.
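The steps above can be sketched in Python for a single criterion of the form "value > threshold". This is a minimal sketch under stated assumptions, not the authors' implementation. The "center" of each set is taken to be the mean criterion value, and candidates are drawn uniformly between the two means. The current threshold is kept as the m = 0 candidate. The criterion is tuned in isolation, whereas the method applies all criteria jointly. The names `count_errors` and `refine_threshold` are hypothetical.

```python
import random


def count_errors(pos_values, neg_values, threshold):
    """Misclassified samples for an assumed criterion 'value > threshold'."""
    missed = sum(v <= threshold for v in pos_values)        # class samples rejected
    false_alarms = sum(v > threshold for v in neg_values)   # other samples accepted
    return missed + false_alarms


def refine_threshold(pos_values, neg_values, current, m=10, rng=None):
    """One refinement step: draw M candidates between the two set centers.

    Assumption: t_m = c_neg + alpha_m * (c_pos - c_neg), where c_pos and c_neg
    are the mean criterion values of the two sets and alpha_m is drawn in [0, 1).
    """
    rng = rng or random.Random(0)
    c_pos = sum(pos_values) / len(pos_values)
    c_neg = sum(neg_values) / len(neg_values)
    alphas = [rng.random() for _ in range(m)]
    # Candidate m = 0 is the current threshold; min() keeps the first minimum,
    # so the current threshold survives a tie.
    candidates = [current] + [c_neg + a * (c_pos - c_neg) for a in alphas]
    return min(candidates, key=lambda t: count_errors(pos_values, neg_values, t))


pos = [0.6, 0.7, 0.8]  # toy criterion values of the class samples
neg = [0.1, 0.2, 0.3]  # toy criterion values of the other samples
t = refine_threshold(pos, neg, current=0.75)
print(t, count_errors(pos, neg, t))
```

In the full procedure, this step would be repeated many times, each time with a randomly selected criterion (first step above).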

5.2. Dark Surfaces

5.2.1. Dark Green Vegetation

- The NDVI index [36], related to chlorophyll content, is high enough (typically above 0.3).

- Reflectance after the red edge is not very low.

- Reflectance is low in the SWIR range.
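The three criteria above can be sketched as boolean tests on a reflectance spectrum, stored here as a hypothetical `{wavelength_nm: reflectance}` dictionary. NDVI is the standard normalized difference of near-infrared and red reflectances [36]. Only the NDVI threshold of 0.3 comes from the text. The red and NIR band positions (670 and 800 nm), the SWIR sample wavelengths (1650 and 2200 nm) and the two other thresholds are placeholders for illustration.

```python
def band(spectrum, wavelength_nm):
    """Reflectance of the sampled band closest to the requested wavelength."""
    nearest = min(spectrum, key=lambda w: abs(w - wavelength_nm))
    return spectrum[nearest]


def ndvi(spectrum, red_nm=670.0, nir_nm=800.0):
    """Normalized Difference Vegetation Index; band positions are assumptions."""
    red, nir = band(spectrum, red_nm), band(spectrum, nir_nm)
    return (nir - red) / (nir + red)


def is_dark_green_vegetation(spectrum, t_ndvi=0.3, t_nir=0.05, t_swir=0.10):
    """All thresholds except t_ndvi (typically 0.3) are hypothetical."""
    return (
        ndvi(spectrum) > t_ndvi                # chlorophyll content high enough
        and band(spectrum, 800.0) > t_nir      # reflectance after red edge not very low
        and band(spectrum, 1650.0) < t_swir    # low reflectance in a first SWIR window
        and band(spectrum, 2200.0) < t_swir    # low reflectance in a second SWIR window
    )


shaded_leaf = {670: 0.02, 800: 0.12, 1650: 0.06, 2200: 0.03}
sunlit_leaf = {670: 0.04, 800: 0.45, 1650: 0.25, 2200: 0.12}
print(is_dark_green_vegetation(shaded_leaf), is_dark_green_vegetation(sunlit_leaf))
# prints: True False
```

The sunlit spectrum passes the NDVI test but fails on SWIR reflectance. The dark surface criteria therefore separate shaded from sunlit vegetation.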

5.2.2. Water

- Reflectance is very low in the SWIR range.

- In the VNIR range, the maximal reflectance is located in the range [470–600 nm].

- This maximal reflectance is significantly higher than the reflectance in the range [800–850 nm].
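The three water criteria above can be sketched in the same way on a `{wavelength_nm: reflectance}` dictionary. The SWIR threshold, the factor by which the VNIR maximum must exceed the 800–850 nm level, the 400–900 nm VNIR window and the 1000 nm start of the SWIR range are hypothetical choices. The function name is also illustrative.

```python
def water_criteria(spectrum, t_swir=0.02, ratio=2.0):
    """Sketch of the three water tests; all numeric parameters are assumptions.

    The spectrum must contain at least one sample in [800, 850] nm.
    """
    vnir = {w: r for w, r in spectrum.items() if 400 <= w <= 900}
    w_max = max(vnir, key=vnir.get)  # wavelength of the maximal VNIR reflectance
    nir = [r for w, r in spectrum.items() if 800 <= w <= 850]
    swir = [r for w, r in spectrum.items() if w >= 1000]

    very_low_swir = all(r < t_swir for r in swir)
    peak_in_visible = 470 <= w_max <= 600
    peak_above_nir = vnir[w_max] > ratio * sum(nir) / len(nir)
    return very_low_swir and peak_in_visible and peak_above_nir


clear_water = {450: 0.04, 500: 0.06, 560: 0.07, 650: 0.04,
               800: 0.01, 850: 0.008, 1650: 0.002, 2200: 0.001}
print(water_criteria(clear_water))
```

Dark vegetation fails both the SWIR test and the peak-location test, because its VNIR maximum lies beyond the red edge.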

5.2.3. Unidentified Dark Surfaces (Shadowed Surfaces…)

5.3. Classes with Specific Absorptions

5.3.1. Plastic Matter


5.3.2. Carbonate

- Reflectance decreases strongly between 2250 and 2310 nm.

- Reflectance has a local minimum around 2320–2350 nm.

- The reflectance at the local minimum is significantly below the reflectances before and after it.

- The reflectance is not heavily contaminated by noise.

- The NDVI index, related to green vegetation (chlorophyll content), is low.
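The carbonate criteria above can be sketched with a continuum-removed band depth. This is the classic absorption-depth measure of Clark and Roush [60], defined as 1 − R_min / R_continuum. The sketch is only an illustration under several assumptions:

- The 2250 and 2400 nm continuum shoulders, all thresholds and the function names are hypothetical.
- Spectra are assumed to be sampled exactly at the wavelengths used.
- The noise criterion is left out.

```python
def band_depth(spectrum, left_nm, right_nm, lo_nm, hi_nm):
    """Continuum-removed depth of the deepest sample in [lo_nm, hi_nm].

    The continuum is the straight line joining the reflectances at the two
    shoulder wavelengths, which must be keys of `spectrum`.
    Returns (wavelength of the minimum, 1 - R_min / R_continuum).
    """
    w_min = min((w for w in spectrum if lo_nm <= w <= hi_nm), key=spectrum.get)
    r_left, r_right = spectrum[left_nm], spectrum[right_nm]
    frac = (w_min - left_nm) / (right_nm - left_nm)
    continuum = r_left + frac * (r_right - r_left)
    return w_min, 1.0 - spectrum[w_min] / continuum


def is_carbonate_like(spectrum, ndvi_value, t_drop=0.05, t_depth=0.05, t_ndvi=0.2):
    """Hypothetical thresholds; the noise criterion is omitted in this sketch."""
    strong_drop = spectrum[2250] - spectrum[2310] > t_drop
    w_min, depth = band_depth(spectrum, 2250, 2400, 2320, 2350)
    waves = sorted(spectrum)
    i = waves.index(w_min)
    # The minimum must be a true local minimum of the sampled spectrum.
    local_min = (0 < i < len(waves) - 1
                 and spectrum[waves[i - 1]] > spectrum[w_min]
                 and spectrum[waves[i + 1]] > spectrum[w_min])
    return strong_drop and local_min and depth > t_depth and ndvi_value < t_ndvi


carb = {2200: 0.40, 2250: 0.42, 2300: 0.36, 2310: 0.33,
        2330: 0.30, 2340: 0.31, 2350: 0.33, 2400: 0.40}
print(band_depth(carb, 2250, 2400, 2320, 2350), is_carbonate_like(carb, ndvi_value=0.05))
```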

5.3.3. Clay

- Reflectance has a local minimum close to 2200 nm.

- The difference between the reflectance at the local minimum and the neighboring reflectances is high enough.

5.4. Vegetation

- NDVI is above a given threshold, whose value is related to stressed vegetation.

- Reflectance in the blue channel is below the reflectance in the green and red channels.

- Reflectance has a local maximum close to 2210 nm (see CAI index [38]).

- Reflectance has a local maximum close to 1660 nm (see NDNI index [62]).

- Reflectance is parabolic between 1520 and 1760 nm. It is locally modelled as a second-order polynomial of wavelength. The quadratic coefficient a is estimated by least-squares minimization over wavelengths ranging between 1520 and 1760 nm. The analysis of many vegetation reflectances led to a criterion on a.

- Some false detections on house roofs (possibly due to lichen or moss) led us to add another criterion.
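The parabolic-shape test above can be sketched as an ordinary least-squares fit of a second-order polynomial over 1520–1760 nm that returns the quadratic coefficient a. This is an illustrative sketch. Three choices are our assumptions: centering the wavelengths on the window midpoint, solving the normal equations in plain Python with Cramer's rule, and the function names. The authors' acceptance rule on a is not reproduced. A reflectance with a maximum inside the window, as expected near 1660 nm for vegetation, gives a < 0.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def parabola_curvature(wavelengths, reflectances, lo=1520.0, hi=1760.0):
    """Least-squares fit r ~ a*x**2 + b*x + c on [lo, hi] nm; returns a.

    x is the wavelength minus the window center (assumption made to keep the
    normal equations well conditioned), so a is expressed per nm**2.
    """
    center = 0.5 * (lo + hi)
    pts = [(w - center, r) for w, r in zip(wavelengths, reflectances) if lo <= w <= hi]
    s = [sum(x ** k for x, _ in pts) for k in range(5)]      # s[k] = sum of x^k
    t = [sum(x ** k * r for x, r in pts) for k in range(3)]  # t[k] = sum of x^k * r
    # Normal equations for the unknowns (a, b, c).
    normal = [[s[4], s[3], s[2]],
              [s[3], s[2], s[1]],
              [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    # Cramer's rule for a: replace the first column by the right-hand side.
    num = [[rhs[i]] + normal[i][1:] for i in range(3)]
    return det3(num) / det3(normal)


waves = list(range(1500, 1781, 10))
hump = [0.3 - 1e-5 * (w - 1640) ** 2 for w in waves]  # concave: maximum at 1640 nm
print(parabola_curvature(waves, hump))
```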

5.5. Definition of New Indices for the Remaining Classes

5.5.1. Methodology

- Six spectral bands are randomly selected in a discrete set of wavelengths. Spectral reflectance varies slowly outside absorption bands, so these bands can be chosen sparsely over the spectral range. Moreover, gas absorption bands are removed. Typically, a predefined set of spectral bands (expressed in nanometers) could be used.

- A range of possible values for the four coefficients must be selected. After several tests, a discrete set A of values was found to lead to satisfactory results.

- The number N of indices used to characterize a class is also a parameter. Typically, N varies between 3 and 10. In practice, this parameter is not fixed at the beginning of the process: depending on the discriminant power of the estimated indices, more or fewer indices are computed.

- For each n between 1 and N, the maximum percentage of samples of the other classes not being discriminated after using the indices … must be specified. For three indices, for example, the values 30, 10 and 0 can be chosen. This means that:
  - after using the first index, at most 30% of these samples may remain undiscriminated;
  - after using the first two indices, at most 10% of them may remain undiscriminated;
  - after using the three indices, all of them must be discriminated.

- Spectral bands are randomly drawn in the discrete set of wavelengths, subject to two constraints.

- Coefficients are randomly drawn in the set A.

- The index is computed with Equation (4) for all samples of the class and of the other classes, using the drawn spectral bands and coefficients. This yields two sets of index values.

- The minimum and maximum index values over the samples of the class are identified.

- Samples of the other classes whose index values are not between this minimum and this maximum are discriminated.

  - If the percentage of undiscriminated samples is higher than the tolerated maximum, the index is not discriminant enough and is not retained. Another index is then computed.

  - If the percentage of undiscriminated samples is lower than the tolerated maximum, the index is satisfactory and is retained. The samples discriminated by this index are removed from the set of other classes. The next index is then searched for using the samples of the class and this reduced set.
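The index search above can be sketched as a greedy random search. This is a sketch under explicit assumptions, not the authors' code:

- Equation (4) is not reproduced here. A hypothetical two-band normalized difference with one coefficient (`normalized_difference`) stands in for it.
- The band-ordering constraints are ignored.
- Tolerances are percentages of the initial set of samples of the other classes.

```python
import random


def normalized_difference(spectrum, params):
    """Hypothetical stand-in for Equation (4): (R1 - c*R2) / (R1 + c*R2)."""
    b1, b2, c = params
    return (spectrum[b1] - c * spectrum[b2]) / (spectrum[b1] + c * spectrum[b2])


def search_indices(class_spectra, other_spectra, bands, coeffs, tolerances,
                   index_fn=normalized_difference, max_draws=1000, seed=0):
    """Greedy random search of indices characterizing one class.

    tolerances[n] is the maximum percentage of the initial other samples that may
    remain undiscriminated after using the first n + 1 indices (e.g. [30, 10, 0]).
    Returns the retained (params, lo, hi) triples and the undiscriminated samples.
    """
    if not other_spectra:
        return [], []
    rng = random.Random(seed)
    remaining, total, retained = list(other_spectra), len(other_spectra), []
    for tol in tolerances:
        for _ in range(max_draws):
            b1, b2 = rng.sample(bands, 2)              # two distinct random bands
            params = (b1, b2, rng.choice(coeffs))      # random coefficient
            class_vals = [index_fn(s, params) for s in class_spectra]
            lo, hi = min(class_vals), max(class_vals)
            # Samples whose index value falls outside [lo, hi] are discriminated.
            kept = [s for s in remaining if lo <= index_fn(s, params) <= hi]
            if 100.0 * len(kept) / total <= tol:
                retained.append((params, lo, hi))
                remaining = kept                       # drop discriminated samples
                break
        else:
            break  # no satisfactory index found within max_draws
    return retained, remaining


bands = [500, 1000, 1500]
cls = [{500: 0.2, 1000: 0.2, 1500: 0.4}, {500: 0.22, 1000: 0.2, 1500: 0.42}]
others = [{500: 0.5, 1000: 0.1, 1500: 0.1}, {500: 0.1, 1000: 0.5, 1500: 0.1}]
print(search_indices(cls, others, bands, [0.5, 1.0, 2.0], [0]))
```

With the class samples used for the [min, max] range, an index is accepted as soon as few enough other samples fall inside that range. This mirrors the two cases listed above.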

5.5.2. Application

6. Post-Processing: Spatial Regularization

7. Usage of CHRIPS Method

- dark vegetation: the NDVI criterion and the two criteria on low reflectance in the SWIR range

- water: the three criteria on low reflectance in the SWIR range and the comparison between the maximal reflectance and the reflectance in the range [800–850 nm]

- dark surface: the three criteria on low reflectance in the SWIR range

- plastic matter: the five criteria on absorption depth

- carbonate: the two criteria on absorption depth

- clay: the criterion on absorption depth

- vegetation: the three NDVI criteria

8. Experiments

8.1. Methodology

- radial basis function kernel,

- linear kernel,

- polynomial kernel (with a tuned degree),

- number of trees,

- number of features to consider when looking for the best split, expressed as a function of the number of features of the image,

- maximum depth of each tree,

- criterion to measure the quality of a split.

- precision = TP / (TP + FP),

- recall = TP / (TP + FN),

- F1 score = 2 × precision × recall / (precision + recall),
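The three scores above can be computed per class from reference and predicted labels, as sketched below. The function name and the convention of returning 0 when a denominator is zero are our assumptions.

```python
def precision_recall_f1(y_true, y_pred, cls):
    """Per-class precision, recall and F1 score from reference and predicted labels.

    A ratio whose denominator is zero is reported as 0 (assumed convention).
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == cls and p == cls for t, p in pairs)  # true positives
    fp = sum(t != cls and p == cls for t, p in pairs)  # false positives
    fn = sum(t == cls and p != cls for t, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


truth = ["water", "water", "asphalt", "water"]
pred = ["water", "asphalt", "asphalt", "water"]
print(precision_recall_f1(truth, pred, "water"))
```

For "water", two of the three reference pixels are found and no false alarm is raised. This gives precision 1, recall 2/3 and F1 = 0.8.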

8.2. Classification and Assessment

8.2.1. Fauga Image (Training)

8.2.2. Mauzac Image

8.2.3. Garons Image

8.2.4. Impact of Spatial Resolution: Suburban Mauzac Images

- Case 1: pixels are considered as pure

- Case 2: pixels are considered as mixed

- Case 3: pixels are considered as pure or mixed

9. Discussion

10. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CHRIPS | Classification of Hyperspectral Reflectance Images with Physical and Statistical criteria |
| CNN | Convolutional Neural Network |
| COCHISE | atmospheric COrrection Code for Hyperspectral Images of remote SEnsing sensors |
| NDVI | Normalized Difference Vegetation Index |
| RFC | Random Forest Classification |
| SVM | Support Vector Machine |
| SWIR | Short-Wavelength InfraRed |
| VNIR | Visible and Near-InfraRed |

References

- Chutia, D.; Bhattacharyya, D.; Sarma, K.; Kalita, R.; Sudhakar, S. Hyperspectral Remote Sensing Classifications: A Perspective Survey. Trans. GIS 2015, 20, 463–490.

- Steinhaus, H. Sur la division des corps matériels en parties. Bull. Acad. Polon. Sci. 1957, 4, 801–804.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum Likelihood from Incomplete Data via the EM Algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 1977, 39, 1–38.

- Rodriguez, A.; Laio, A. Clustering by fast search and find of density peaks. Science 2014, 344, 1492–1496.

- Vijendra, S. Efficient clustering for high dimensional data: Subspace based clustering and density based clustering. Inf. Technol. J. 2011, 10, 1092–1105.

- Zhong, Y.; Zhang, L.; Gong, W. Unsupervised remote sensing image classification using an artificial immune network. Int. J. Remote Sens. 2011, 32, 5461–5483.

- Zhong, Y.; Zhang, S.; Zhang, L. Automatic fuzzy clustering based on adaptive multi-objective differential evolution for remote sensing imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2290–2301.

- Bai, J.; Xiang, S.; Pan, C. A graph-based classification method for hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2013, 51, 803–817.

- Camps-Valls, G.; Marsheva, T.V.B.; Zhou, D. Semi-supervised graph-based hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3044–3054.

- Zhao, Y.; Yuan, Y.; Wang, Q. Fast Spectral Clustering for Unsupervised Hyperspectral Image Classification. Remote Sens. 2019, 11, 399.

- Kruse, F.A.; Lefkoff, A.B.; Boardman, J.B.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The Spectral Image Processing System (SIPS)—Interactive Visualization and Analysis of Imaging spectrometer Data. Remote Sens. Environ. 1993, 44, 145–163.

- Chang, C.I. Spectral information divergence for hyperspectral image analysis. IGARSS Proc. 1999, 1, 509–511.

- Du, H.; Chang, C.I.; Ren, H.; D’Amico, F.M.; Jensen, J.O. New Hyperspectral Discrimination Measure for Spectral Characterization. Opt. Eng. 1999, 43, 1777–1786.

- Jia, X.; Richards, J.A. Efficient maximum likelihood classification for imaging spectrometer data sets. IEEE Trans. Geosci. Remote Sens. 1994, 32, 274–281.

- Zhong, Y.; Zhang, L. An adaptive artificial immune network for supervised classification of multi-/hyperspectral remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2012, 50, 894–909.

- Ho, T.K. Random decision forests. In Proceedings of the 3rd International Conference on Document Analysis and Recognition, Montreal, QC, Canada, 14–16 August 1995; Volume 1, pp. 278–282.

- Li, J.; Bioucas-Dias, J.M.; Plaza, A. Semisupervised hyperspectral image segmentation using multinomial logistic regression with active learning. IEEE Trans. Geosci. Remote Sens. 2010, 48, 4085–4098.

- Scholkopf, B.; Smola, A.J. Learning With Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; MIT Press: Cambridge, MA, USA, 2001.

- Fauvel, M.; Tarabalka, Y.; Benediktsson, J.A.; Chanussot, J.; Tilton, J.C. Advances in spectral-spatial classification of hyperspectral images. Proc. IEEE 2013, 101, 652–675.

- He, L.; Li, J.; Liu, C.; Li, S. Recent Advances on Spectral-Spatial Hyperspectral Image Classification: An Overview and New Guidelines. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1579–1597.

- Camps-Valls, G.; Gomez-Chova, L.; Munoz-Mari, J.; Vila-Francés, J.; Calpe-Maravilla, J. Composite kernels for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2006, 3, 93–97.

- Fauvel, M.; Chanussot, J.; Benediktsson, J.A. A spatial-spectral kernel-based approach for the classification of remote-sensing images. Pattern Recognit. 2012, 45, 381–392.

- Fang, L.; Li, S.; Duan, W.; Ren, J.; Benediktsson, J.A. Classification of hyperspectral images by exploiting spectral-spatial information of superpixel via multiple kernels. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6663–6674.

- Li, S.; Song, W.; Fang, L.; Chen, Y.; Ghamisi, P.; Benediktsson, J.A. Deep Learning for Hyperspectral Image Classification: An Overview. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6690–6709.

- Audebert, N.; Saux, B.L.; Lefèvre, S. Deep Learning for Classification of Hyperspectral Data: A Comparative Review. IEEE Geosci. Remote Sens. Mag. 2019, 7, 159–173.

- Hu, W.; Huang, Y.; Wei, L.; Zhang, F.; Li, H. Deep Convolutional Neural Networks for Hyperspectral Image Classification. J. Sensors 2015, 2015, 258619.

- Chen, Y.; Jiang, H.; Li, C.; Jia, X.; Ghamisi, P. Deep feature extraction and classification of hyperspectral images based on convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6232–6251.

- Li, W.; Wu, G.; Zhang, F.; Du, Q. Hyperspectral image classification using deep pixel-pair features. IEEE Trans. Geosci. Remote Sens. 2017, 55, 844–853.

- Yang, X.; Ye, Y.; Li, X.; Lau, R.Y.K.; Zhang, X.; Huang, X. Hyperspectral image classification with deep learning models. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5408–5423.

- Haut, J.M.; Paoletti, M.E.; Plaza, J.L.; Plaza, A. Active learning with convolutional neural networks for hyperspectral image classification using a new Bayesian approach. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6440–6461.

- Liu, B.; Yu, X.; Zhang, P.; Tan, X.; Yu, A.; Xue, Z. A semi-supervised convolutional neural network for hyperspectral image classification. Remote Sens. Lett. 2017, 8, 839–848.

- Chen, Y.; Zhu, L.; Ghamisi, P.; Jia, X.; Li, G.; Tang, L. Hyperspectral images classification with Gabor filtering and convolutional neural network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2355–2359.

- Song, W.; Li, S.; Fang, L.; Lu, T. Hyperspectral image classification with deep feature fusion network. IEEE Trans. Geosci. Remote Sens. 2018, 56, 3173–3184.

- Clark, R.N.; Swayze, G.; Livo, K.E.; Kokaly, R.; Sutley, S.J.; Dalton, J.; McDougal, R.R.; Gent, C.A. Imaging spectroscopy: Earth and planetary remote sensing with the USGS Tetracorder and expert systems. J. Geophys. Res. 2003, 108, E12.

- Chabrillat, S.; Eisele, A.; Guillaso, S.; Rogaß, C.; Ben-Dor, E.; Kaufmann, H. HYSOMA: An easy-to-use software interface for soil mapping applications of hyperspectral imagery. In Proceedings of the 7th EARSeL SIG Imaging Spectroscopy Workshop, Edinburgh, UK, 11–13 April 2011.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third ERTS Symposium, NASA SP-351, Washington, DC, USA, 10–14 December 1973; pp. 309–317.

- Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Daughtry, C.S.T. Discriminating Crop Residues from Soil by Short-Wave Infrared Reflectance. Agron. J. 2001, 93, 125–131.

- Daughtry, C.S.T.; Hunt, E.R., Jr.; McMurtrey, J.M., III. Assessing Crop Residue Cover Using Shortwave Infrared Reflectance. Remote Sens. Environ. 2004, 90, 126–134.

- Sun, Z.C.; Wang, C.; Guo, H.; Shang, R. A Modified Normalized Difference Impervious Surface Index (MNDISI) for Automatic Urban Mapping from Landsat Imagery. Remote Sens. 2017, 9, 942.

- Zha, Y.; Gao, Y.; Ni, S. Use of normalized difference built-up index in automatically mapping urban areas from TM imagery. Int. J. Remote Sens. 2003, 24, 583–594.

- Ambikapathi, A.; Chan, T.; Chi, C.; Keizer, K. Hyperspectral Data Geometry-Based Estimation of Number of Endmembers Using p-Norm-Based Pure Pixel Identification Algorithm. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2753–2769.

- Heylen, R.; Parente, M.; Scheunders, P. Estimation of the Number of Endmembers in a Hyperspectral Image via the Hubness Phenomenon. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2191–2200.

- Atkins, P.; de Paula, J. Elements of Physical Chemistry; Macmillan: Oxford, UK, 2009.

- Bannari, A.; Morin, D.; Bonn, F.; Huete, A.R. A review of vegetation indices. Remote Sens. Rev. 1995, 13, 95–120.

- Köhler, C. Airborne Imaging Spectrometer HySpex. J. Large-Scale Res. Facil. 2016, 2, 1–6.

- Rebeyrol, S.; Deville, Y.; Achard, V.; Briottet, X.; May, S. A New Hyperspectral Unmixing Method Using Co-Registered Hyperspectral and Panchromatic Images. In Proceedings of the 2019 10th Workshop on Hyperspectral Imaging and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 24–26 September 2019.

- Miesch, C.; Poutier, L.; Achard, V.; Briottet, X.; Lenot, X.; Boucher, Y. Direct and Inverse Radiative Transfer Solutions for Visible and Near-Infrared Hyperspectral Imagery. IEEE Trans. Geosci. Remote Sens. 2005, 43, 1552–1562.

- Boucher, Y.; Poutier, L.; Achard, V.; Lenot, X.; Miesch, C. Validation and robustness of an atmospheric correction algorithm for hyperspectral images. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery VIII; SPIE: Bellingham, WA, USA, 2002.

- Berk, A.; Anderson, G.P.; Acharya, P.K.; Bernstein, L.S.; Muratov, L.; Lee, J.; Fox, M.; Adler-Golden, S.M.; Chetwynd, J.H., Jr.; Hoke, M.L.; et al. MODTRAN5: 2006 update. In Algorithms and Technologies for Multispectral, Hyperspectral, and Ultraspectral Imagery XII; International Society for Optics and Photonics, SPIE: Bellingham, WA, USA, 2006; Volume 6233.

- Carrère, V.; Briottet, X.; Jacquemoud, S.; Marion, R.; Bourguignon, A.; Chami, M.; Dumont, M.; Minghelli-Roman, A.; Weber, C.; Lefèvre-Fonollosa, M.J.; et al. HYPXIM: A second generation high spatial resolution hyperspectral satellite for dual applications. In Proceedings of the 2013 5th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Gainesville, FL, USA, 26–28 June 2013.

- Richter, R.; Schläpfer, D. Atmospheric/Topographic Correction for Satellite Imagery, Theoretical Background Document. 2017. Available online: https://www.dlr.de/eoc/en/Portaldata/60/Resources/dokumente/5_tech_mod/atcor3_manual_2012.pdf (accessed on 22 June 2020).

- Adler-Golden, S.M.; Berk, A.; Bernstein, L.S.; Richtsmeier, S.C.; Acharya, P.K.; Matthew, M.W.; Anderson, G.P.; Allred, C.L.; Jeong, L.S.; Chetwynd, J.H. FLAASH, a MODTRAN4 Atmospheric Correction Package for Hyperspectral Data Retrievals and Simulations. In AVIRIS Geoscience Workshop; 1998. Available online: https://aviris.jpl.nasa.gov/proceedings/workshops/98_docs/2.pdf (accessed on 8 May 2012).

- Gao, B.C.; Goetz, A.F.H. Column atmospheric water vapor and vegetation liquid water retrievals from airborne imaging spectrometer data. J. Geophys. Res. Atmos. 1990, 95, 3549–3564.

- Qu, Z.; Kindel, B.; Goetz, A. The High Accuracy Atmospheric Correction for Hyperspectral Data (HATCH) model. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1223–1231.

- Miller, C.J. Performance assessment of ACORN atmospheric correction algorithm. In Algorithms and Technologies for Multispectral, Hyperspectral and Ultraspectral Imagery VIII; SPIE: Bellingham, WA, USA, 2002.

- Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the Sixth International Conference on Computer Vision, Bombay, India, 7 January 1998; pp. 839–846.

- Winkelmann, K.H. On the Applicability of Imaging Spectrometry for the Detection and Investigation of Contaminated Sites with Particular Consideration Given to the Detection of Fuel Hydrocarbon Contaminants in Soil. Ph.D. Thesis, BTU Cottbus-Senftenberg, Senftenberg, Germany, 2007.

- Kühn, F.; Oppermann, K.; Hörig, B. Hydrocarbon Index—An algorithm for hyperspectral detection of hydrocarbons. Int. J. Remote Sens. 2004, 25, 2467–2473.

- Clark, R.N.; Roush, T.L. Reflectance spectroscopy: Quantitative analysis techniques for remote sensing applications. J. Geophys. Res. 1984, 89, 6329–6340.

- Levin, N.; Kidron, G.J.; Ben-Dor, E. Surface properties of stabilizing coastal dunes: Combining spectral and field analyses. Sedimentology 2007, 54, 771–788.

- Serrano, L.; Penuelas, J.; Ustin, S.L. Remote Sensing of Nitrogen and Lignin in Mediterranean Vegetation from AVIRIS Data: Decomposing Biochemical from Structural Signals. Remote Sens. Environ. 2002, 81, 355–364.

- Zarco-Tejada, P.J.; Berjon, A.; Lopez-Lozano, R.; Miller, J.R.; Martín, P.; Cachorro, V.; González, M.; de Frutos, A. Assessing vineyard condition with hyperspectral indices: Leaf and canopy reflectance simulation in a row-structured discontinuous canopy. Remote Sens. Environ. 2005, 99, 271–287.

- Clark, R.N.; King, T.V.V.; Klejwa, M.; Swayze, G.; Vergo, N. High spectral resolution reflectance spectroscopy of minerals. J. Geophys. Res. 1990, 95, 12653–12680.

| Sensor | N (images) | N (bands) | FWHM (VNIR) | FWHM (SWIR) | Spatial resolution |
|---|---|---|---|---|---|
| HySpex | 2 | 416 | 4 nm | 6 nm | 0.3 m |
| HyMap | 1 | 125 | 15 nm | 15 nm | 4 m |
| Odin | 5 | 426 | 4 nm | 9 nm | 0.5 m |
| AisaFENIX | 3 | 420 | 3.5 nm | 7.5 nm | 0.55/2.2/8.8 m |

| Image | Class | CHRIPS P | CHRIPS R | CHRIPS F | CNN-1D P | CNN-1D R | CNN-1D F | SVM P | SVM R | SVM F | RFC P | RFC R | RFC F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fauga (training) | unid. dark surface | 0.894 | 0.968 | 0.929 | 0.987 | 0.472 | 0.638 | 0.981 | 0.586 | 0.734 | 0.975 | 0.576 | 0.724 |
| | water | 0.879 | 0.990 | 0.931 | 0.903 | 0.880 | 0.890 | 0.900 | 0.880 | 0.890 | 0.326 | 0.878 | 0.475 |
| | plastic matter | 0.708 | 0.998 | 0.828 | 0.667 | 0.588 | 0.625 | 0.342 | 0.775 | 0.475 | 0.154 | 0.479 | 0.234 |
| | carbonate | 0.993 | 0.883 | 0.935 | 0.565 | 0.939 | 0.705 | 0.694 | 0.943 | 0.799 | 0.982 | 0.808 | 0.887 |
| | green vegetation | 0.960 | 0.898 | 0.928 | 0.921 | 0.942 | 0.931 | 0.910 | 0.953 | 0.931 | 0.939 | 0.840 | 0.887 |
| | stressed vegetation | 0.515 | 0.739 | 0.607 | 0.645 | 0.528 | 0.581 | 0.701 | 0.510 | 0.590 | 0.526 | 0.539 | 0.532 |
| | house roof/tile | 1.000 | 0.964 | 0.982 | 0.943 | 0.883 | 0.912 | 0.967 | 0.934 | 0.950 | 0.976 | 0.888 | 0.930 |
| | asphalt/gravel | 0.987 | 0.974 | 0.981 | 0.964 | 0.909 | 0.936 | 0.953 | 0.954 | 0.954 | 0.998 | 0.702 | 0.824 |
| | vehicle/paint | 0.823 | 0.381 | 0.520 | 0.170 | 0.745 | 0.277 | 0.379 | 0.630 | 0.473 | 0.072 | 0.927 | 0.134 |
| Mauzac | unid. dark surface | 0.857 | 0.980 | 0.914 | 0.887 | 0.464 | 0.609 | 0.891 | 0.556 | 0.684 | 0.860 | 0.534 | 0.659 |
| | water | 0.995 | 0.810 | 0.893 | 0.985 | 0.853 | 0.914 | 0.987 | 0.844 | 0.910 | 0.877 | 0.828 | 0.852 |
| | plastic matter | 0.991 | 0.951 | 0.971 | 0.720 | 0.338 | 0.460 | 0.387 | 0.643 | 0.483 | 0.094 | 0.167 | 0.120 |
| | carbonate | 0.994 | 0.762 | 0.863 | 0.211 | 0.424 | 0.281 | 0.294 | 0.548 | 0.382 | 0.782 | 0.200 | 0.319 |
| | green vegetation | 0.994 | 0.984 | 0.989 | 0.974 | 0.940 | 0.956 | 0.945 | 0.970 | 0.957 | 0.975 | 0.876 | 0.923 |
| | stressed vegetation | 0.391 | 0.687 | 0.498 | 0.134 | 0.274 | 0.180 | 0.136 | 0.201 | 0.162 | 0.111 | 0.304 | 0.163 |
| | house roof/tile | 0.999 | 0.971 | 0.985 | 0.968 | 0.912 | 0.939 | 0.981 | 0.943 | 0.962 | 0.981 | 0.886 | 0.931 |
| | asphalt/gravel | 0.995 | 0.928 | 0.961 | 0.899 | 0.801 | 0.847 | 0.908 | 0.892 | 0.900 | 0.997 | 0.521 | 0.684 |
| | vehicle/paint | 0.743 | 0.462 | 0.570 | 0.032 | 0.832 | 0.062 | 0.128 | 0.625 | 0.212 | 0.017 | 0.932 | 0.033 |
| Garons | unid. dark surface | 0.998 | 0.398 | 0.569 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | plastic matter | 1.000 | 0.907 | 0.951 | 0.944 | 0.827 | 0.882 | 0.683 | 0.927 | 0.787 | 0.281 | 0.497 | 0.359 |
| | carbonate | 0.953 | 0.946 | 0.950 | 0.552 | 0.961 | 0.702 | 0.067 | 0.593 | 0.121 | 0.543 | 0.167 | 0.256 |
| | clay | 0.934 | 0.888 | 0.910 | 0.876 | 0.534 | 0.664 | 0.577 | 0.684 | 0.626 | 0.980 | 0.192 | 0.322 |
| | green vegetation | 0.974 | 0.939 | 0.956 | 0.911 | 0.921 | 0.916 | 0.937 | 0.852 | 0.892 | 0.966 | 0.723 | 0.827 |
| | stressed vegetation | 0.861 | 0.771 | 0.814 | 0.756 | 0.075 | 0.136 | 0.495 | 0.272 | 0.351 | 0.539 | 0.293 | 0.380 |
| | house roof/tile | 0.931 | 0.970 | 0.951 | 0.003 | 0.228 | 0.006 | 0.008 | 0.289 | 0.016 | 0.006 | 0.538 | 0.012 |
| | asphalt/gravel | 0.790 | 0.557 | 0.654 | 0.753 | 0.498 | 0.600 | 0.577 | 0.573 | 0.575 | 0.874 | 0.381 | 0.531 |
| Sub. Mauzac | unid. dark surface | 0.990 | 0.997 | 0.993 | 1.000 | 0.317 | 0.481 | 0.999 | 0.408 | 0.579 | 0.999 | 0.396 | 0.568 |
| | water | 0.988 | 0.964 | 0.976 | 1.000 | 0.918 | 0.957 | 1.000 | 0.910 | 0.953 | 0.667 | 0.765 | 0.712 |
| | plastic matter | 0.962 | 0.983 | 0.972 | 0.518 | 0.663 | 0.582 | 0.141 | 0.864 | 0.242 | 0.093 | 0.321 | 0.144 |
| | carbonate | 0.595 | 0.983 | 0.741 | 0.036 | 0.797 | 0.068 | 0.031 | 0.735 | 0.059 | 0.083 | 0.285 | 0.129 |
| | clay | 0.068 | 1.000 | 0.127 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | green vegetation | 0.947 | 0.994 | 0.970 | 0.724 | 0.951 | 0.822 | 0.727 | 0.938 | 0.819 | 0.711 | 0.806 | 0.755 |
| | stressed vegetation | 0.995 | 0.958 | 0.976 | 0.866 | 0.218 | 0.348 | 0.974 | 0.437 | 0.604 | 0.879 | 0.255 | 0.395 |
| | house roof/tile | 0.974 | 0.955 | 0.964 | 0.045 | 0.243 | 0.076 | 0.224 | 0.703 | 0.340 | 0.184 | 0.868 | 0.303 |
| | asphalt/gravel | 1.000 | 0.922 | 0.959 | 0.974 | 0.913 | 0.942 | 0.982 | 0.917 | 0.948 | 0.999 | 0.754 | 0.859 |
| | vehicle/paint | 0.579 | 0.478 | 0.523 | 0.088 | 0.688 | 0.157 | 0.073 | 0.775 | 0.134 | 0.011 | 0.941 | 0.022 |

| Image | Class | CHRIPS F1 pure | CHRIPS F1 mixed | CHRIPS F1 all | CNN-1D F1 pure | CNN-1D F1 mixed | CNN-1D F1 all | SVM F1 pure | SVM F1 mixed | SVM F1 all | RFC F1 pure | RFC F1 mixed | RFC F1 all |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Sub. Mauzac | unid. dark surface | 0.576 | 0.039 | 0.190 | 0 | 0 | 0 | 0.320 | 0 | 0.081 | 0 | 0 | 0 |
| | water | 0.500 | 0 | 0.300 | 0.714 | 0 | 0.481 | 0.714 | 0 | 0.455 | 0 | 0 | 0 |
| | plastic matter | 0.894 | 0.618 | 0.745 | 0.842 | 0.338 | 0.482 | 0.352 | 0.087 | 0.134 | 0.092 | 0.019 | 0.040 |
| | carbonate | 0.667 | 0.286 | 0.353 | 0.011 | 0.052 | 0.033 | 0.012 | 0.099 | 0.041 | 0 | 0.074 | 0.051 |
| | clay | 1.000 | 0.933 | 0.960 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | green vegetation | 0.937 | 0.877 | 0.899 | 0.700 | 0.800 | 0.759 | 0.725 | 0.793 | 0.765 | 0.700 | 0.712 | 0.707 |
| | stressed vegetation | 0.979 | 0.737 | 0.914 | 0.363 | 0.464 | 0.394 | 0.652 | 0.554 | 0.626 | 0.447 | 0.418 | 0.439 |
| | house roof/tile | 0.992 | 0.696 | 0.925 | 0.065 | 0.374 | 0.104 | 0.384 | 0.613 | 0.415 | 0.306 | 0.516 | 0.329 |
| | asphalt/gravel | 0.661 | 0.500 | 0.586 | 0.984 | 0.834 | 0.913 | 0.981 | 0.787 | 0.892 | 0.899 | 0.697 | 0.809 |
| | vehicle/paint | 0 | 0.276 | 0.242 | 0 | 0.172 | 0.138 | 0 | 0.058 | 0.053 | 0 | 0.019 | 0.012 |
| | green vegetation | 0.533 | 0.802 | 0.770 | 0.271 | 0.687 | 0.610 | 0.254 | 0.641 | 0.558 | 0.235 | 0.636 | 0.542 |
| | stressed vegetation | 0.957 | 0.749 | 0.845 | 0.276 | 0.519 | 0.420 | 0.524 | 0.643 | 0.590 | 0.383 | 0.465 | 0.428 |
| | house roof/tile | 0.933 | 0.526 | 0.642 | 0.019 | 0.522 | 0.215 | 0.187 | 0.694 | 0.387 | 0.187 | 0.755 | 0.422 |
| | asphalt/gravel | 0.842 | 0.438 | 0.471 | 1.000 | 0.839 | 0.850 | 1.000 | 0.762 | 0.779 | 1.000 | 0.675 | 0.701 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Alakian, A.; Achard, V. Classification of Hyperspectral Reflectance Images With Physical and Statistical Criteria. Remote Sens. 2020, 12, 2335. https://doi.org/10.3390/rs12142335
