5.2. Limitations

A specific limitation of our methodology stems from the simplified GT generation, in which individual cars were selected in both images independently. As shown in Figure 9a, two moving cars may by coincidence occupy the same location in both images. Consequently, such cases are treated as static cars in both training and prediction. However, our empirical investigation (see Table 4) has shown that the influence of this failure case is minor: in a test area of km² with 598 parked cars, only 5 such cases occurred.

Another implicit assumption is that a static car is visible in both images. As illustrated in Figure 9b, this assumption can be violated by occlusions, e.g., when cars are parked close to buildings. Empirically, around 10% of the parked cars (61 out of 598, see Table 4) are lost due to this restriction in our specific set-up.

Because the satellite observes the scene from different in-track and cross-track viewing angles along its orbit, some areas in the images are occluded by high buildings, trees, and other objects on the ground. As the second most populous municipality of Spain, Barcelona is a large city with tall buildings, although none currently exceeds 150 m in height. A thorough analysis of the derived DSM shows that typical buildings in the city center are about 30 m high. Moreover, the buildings have similar rectangular atrium shapes that follow the regular orientation of the streets. This was double-checked by additionally visualizing the satellite images and OSM data. In the city center, the street network has a conventional grid pattern, with arterial roads and local streets parallel and orthogonal to each other, forming a pattern of squares (Figure 10).

The typical distance between two buildings is approximately 20 m. This covers not only the street width (10 m), but also the sidewalks and parking areas on both street sides.

Figure 11a illustrates a profile view of the visible and non-visible areas during image acquisition, assuming the particular case of a satellite track parallel to the street direction and an in-track viewing angle of 0°.

The street visibility can be analyzed using the incidence angle ($\theta$), the azimuth of the camera sensor ($\varphi$), and the street direction ($\alpha$). According to Figure 11b, the non-visible street area can be described by both the geometry of the city ($h$, $\alpha$) and the satellite viewing geometry ($\theta$, $\varphi$) with the following formula:

$$d = h \, \tan\theta \, \left|\sin(\varphi - \alpha)\right|$$

where $d$ is the non-visible distance on the ground hidden by buildings; $h$ the building height; $\alpha$ and $\varphi$ the street and camera azimuths, respectively; and $\theta$ the incidence angle.

The incidence angle ($\theta$) is defined as the angle between the ground normal and the looking direction from the satellite sensor (note that we define these angles at the ground, whereas the nadir angle at the satellite may differ by a few degrees because of Earth curvature). For the two Barcelona satellite images, the incidence angles are and . The azimuth of the camera sensor ($\varphi$) is the angle between North and the viewing direction projected on the ground, measured clockwise from 0° to 360°. The Barcelona stereo images have azimuth angles of and for the forward and backward scenes, respectively.

Streets in the central area of the city follow a regular layout with right-angle intersections (Figure 11). Therefore, there are mainly two types of streets: (a) streets running from southwest to northeast (street azimuth $\alpha$ = ) and (b) streets running from southeast to northwest (street azimuth $\alpha$ = ). The building height $h$ is 30 m.

To compute the visible part of a street, the street width ($W$) must be considered as well. The visibility ($\omega$) is then obtained by subtracting the non-visible distance ($d$) from the street width:

$$\omega = W - d$$

By applying the above equations to a street cross section, we obtain different values for the two street types in the two images. In the forward scene, the visible distances are 19.4 m for the northeast-oriented streets and 9.1 m for the northwest-oriented streets, i.e., a visibility of 97% and 46% of the 20 m building-to-building distance, respectively. For the backward image, the resulting visible distances are 8.3 m and 13.2 m (a visibility of 41% and 66% for the northeast- and northwest-oriented streets, respectively). Thus, at least the western halves of the streets are visible in both images.
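The visibility computation can be sketched in a few lines of Python. This is a minimal illustration, assuming the relation $d = h\tan\theta\,|\sin(\varphi-\alpha)|$ and $\omega = W - d$, with $\omega$ clamped at zero when the whole cross section is hidden. The function names and the example viewing geometry (20° incidence, 60° camera azimuth, street azimuths of 45° and 135°) are hypothetical and are not the actual angles of the Barcelona scenes; only $W$ = 20 m and $h$ = 30 m come from the text.

```python
import math


def non_visible_distance(h, incidence_deg, camera_az_deg, street_az_deg):
    """Street cross-section distance (m) hidden behind a building of height h.

    The occlusion behind a building reaches h * tan(incidence) along the
    camera azimuth; only its component perpendicular to the street axis
    hides street area, hence the |sin(camera_az - street_az)| factor.
    """
    theta = math.radians(incidence_deg)
    delta = math.radians(camera_az_deg - street_az_deg)
    return h * math.tan(theta) * abs(math.sin(delta))


def visible_distance(width, h, incidence_deg, camera_az_deg, street_az_deg):
    """omega = W - d, clamped at zero when the whole cross section is hidden."""
    d = non_visible_distance(h, incidence_deg, camera_az_deg, street_az_deg)
    return max(0.0, width - d)


# Values from the text: 20 m building-to-building distance, 30 m buildings.
W, H = 20.0, 30.0
# Hypothetical viewing geometry, NOT the actual WorldView-3 angles.
for street_az in (45.0, 135.0):
    omega = visible_distance(W, H, 20.0, 60.0, street_az)
    print(f"street azimuth {street_az:5.1f} deg: visible {omega:4.1f} m ({omega / W:.0%})")
```

The percentages quoted in the text correspond to the ratio $\omega / W$ with $W$ = 20 m, e.g., 19.4 m / 20 m = 97%.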

Obviously, the smaller the incidence angles, the better the street visibility. Therefore, to avoid reduced visibility and to keep occlusions to a minimum, the acquisition parameters should be taken into account when ordering satellite imagery of urban areas. Concerning occlusions caused by buildings, small in-track and cross-track angles are recommended. The acquisition geometry can be specified in the technical specifications when ordering new satellite images (at additional cost), whereas for archive images there is typically hardly any choice of viewing directions. Using two short-interval in-track image pairs, one looking eastwards and one looking westwards, would allow the entire parking space to be observed. To reduce occlusions caused by trees, images can be acquired during leaf-off periods; however, the low sun elevation angle then leads to lower image quality.
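Inverting the same visibility relation gives a rough ordering criterion: the largest incidence angle that keeps at least a fraction $f$ of the cross section visible satisfies $\tan\theta \le (1-f)\,W / (h\,|\sin(\varphi-\alpha)|)$. The sketch below illustrates this under that assumption; the function name and the worst-case default (viewing direction perpendicular to the street) are our own hypothetical choices, while $W$ = 20 m and $h$ = 30 m are taken from the text.

```python
import math


def max_incidence_deg(width, h, min_visible_fraction, rel_azimuth_deg=90.0):
    """Largest incidence angle (deg) keeping >= min_visible_fraction of the street visible.

    Solves h * tan(theta) * |sin(rel_azimuth)| <= (1 - f) * width for theta.
    rel_azimuth_deg is the angle between camera azimuth and street azimuth;
    90 deg is the worst case (looking straight across the street).
    """
    s = abs(math.sin(math.radians(rel_azimuth_deg)))
    if s < 1e-12:
        return 90.0  # looking along the street: buildings never hide it
    allowed = (1.0 - min_visible_fraction) * width
    return math.degrees(math.atan(allowed / (h * s)))


# Barcelona-like geometry from the text: W = 20 m, h = 30 m.
for f in (0.5, 0.8, 0.95):
    print(f"keep {f:.0%} visible -> incidence <= {max_incidence_deg(20.0, 30.0, f):.1f} deg")
```

The steep drop of the admissible angle as $f$ approaches 1 is consistent with the recommendation of small in-track and cross-track angles for dense urban areas.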

5.3. 3D Reconstruction

It is evident from the results that, in our scenario, the reconstructed DSM is not helpful for car detection. Normal family cars, our objects of interest, are usually less than 1.5 m high, 5 m long, and 2 m wide. Given the GSD of the WorldView-3 images (0.35 m for Barcelona), they measure at most about 14 pixels in length and 6 pixels in width. Owing to the different viewing angles of the sensor, with a B/H ratio of 0.69, the corresponding change in parallax for cars (apparent shift in position) is approximately 3 pixels in image space. The 3D reconstruction of Barcelona from the stereo image pair is performed using dense image matching. Because of the smoothness constraint and regularization in dense image matching [51,52], the heights of small individual objects may not be reconstructed. Therefore, cars in the resulting 3D point cloud do not appear elevated relative to their surroundings. A detailed description of height estimation for single objects from WV-3 DSMs is given in [53], where a minimum vehicle length of 15 pixels is reported as necessary for the vehicle to be reconstructed.
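The pixel dimensions and the parallax figure can be checked with simple arithmetic. The sketch below assumes the standard normal-case stereo relation, in which a height difference $\Delta h$ produces a differential parallax of $\Delta h \cdot B/H$ in ground units, converted to pixels via the GSD. The GSD (0.35 m), B/H ratio (0.69), car dimensions, and the 15-pixel threshold from [53] are taken from the text; the helper names are hypothetical.

```python
GSD_M = 0.35           # WorldView-3 GSD for the Barcelona scene (from the text)
BASE_TO_HEIGHT = 0.69  # B/H ratio of the stereo pair (from the text)
MIN_LENGTH_PX = 15     # minimum vehicle length for reconstruction reported in [53]


def to_pixels(length_m, gsd_m=GSD_M):
    """Convert a ground length in metres to image pixels."""
    return length_m / gsd_m


def height_parallax_px(height_m, b_h=BASE_TO_HEIGHT, gsd_m=GSD_M):
    """Differential parallax (pixels) caused by an object of the given height.

    Normal-case stereo: dp = dh * B/H in ground units, divided by the GSD.
    """
    return height_m * b_h / gsd_m


car_len_px = to_pixels(5.0)          # typical car length 5 m
car_wid_px = to_pixels(2.0)          # typical car width 2 m
car_par_px = height_parallax_px(1.5)  # typical car height 1.5 m
print(f"car footprint ~ {car_len_px:.1f} x {car_wid_px:.1f} px, "
      f"height parallax ~ {car_par_px:.1f} px, "
      f"long enough for [53]: {car_len_px >= MIN_LENGTH_PX}")
```

A parallax signal of only about 3 pixels, spread over an object shorter than the 15-pixel length reported in [53], is easily smoothed away by the regularization in dense matching.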

The computed DSM, with a spatial resolution of 0.5 m × 0.5 m, depicts the Barcelona cityscape, but with some noise, artifacts, and roughness that appear on the road network, especially due to moving objects and building shadows.

Figure 12 shows a detailed view of the computed DSM for a street intersection and the surrounding buildings. Although buildings appear in the DSM with irregular contours, their heights are reconstructed quite well. In contrast, parked cars do not show any height information in the DSM. The elevation along the two streets and in the intersection area is constant throughout.

Moreover, the DSM contains areas with missing elevation information, caused by occlusions or by substantial height differences between buildings, trees, and the surrounding ground. To summarize, cars appear to be too small to have a significant height signature in the DSM. Still, the DSM allows street level to be distinguished from building level, which could possibly be exploited by a CNN to exclude building areas from car detection. However, roof structures are very rarely confused with cars, so a CNN cannot benefit from the additional DSM information.

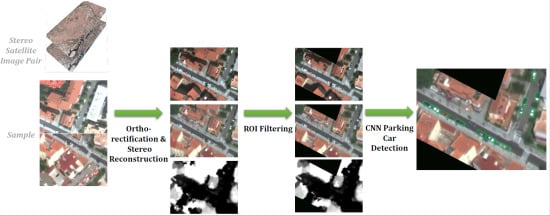

Nevertheless, although the 3D content of the DSM had an adverse effect on static car detection, the terrain model extracted from the satellite stereo pair is necessary to correctly ortho-rectify the image content at street level. A parallax-free image pair (e.g., from a hypothetical pair of satellites flying along the same orbit with identical camera viewing directions) would require an alternative DTM source for ortho-rectification and for integration with other data sources, e.g., inclusion layers.
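Why a terrain model is needed for ortho-rectification can be illustrated with the standard relief-displacement relation: a ground point ortho-rectified with a height error $\Delta z$ is shifted horizontally by $\Delta z \tan\theta$, where $\theta$ is the incidence angle. The sketch below is illustrative only; the 20° incidence angle and the height errors are hypothetical values, and only the 0.35 m GSD comes from the text.

```python
import math


def ortho_shift_m(height_error_m, incidence_deg):
    """Horizontal misplacement (m) of a ground point ortho-rectified with a wrong height."""
    return height_error_m * math.tan(math.radians(incidence_deg))


def ortho_shift_px(height_error_m, incidence_deg, gsd_m=0.35):
    """Same misplacement expressed in pixels of the given GSD."""
    return ortho_shift_m(height_error_m, incidence_deg) / gsd_m


# Hypothetical height errors and incidence angle, for illustration only.
for dz in (1.0, 5.0, 10.0):
    print(f"height error {dz:4.1f} m -> shift {ortho_shift_px(dz, 20.0):4.1f} px at 20 deg incidence")
```

Even moderate terrain errors shift street-level content by several pixels, which is comparable to the footprint of a car and would misalign the images with other data sources.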