Distribution Consistency Loss for Large-Scale Remote Sensing Image Retrieval

Abstract

1. Introduction

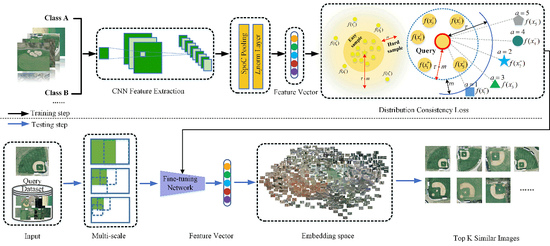

- For the remote sensing image retrieval task, we propose a novel distribution consistency loss (DCL) to learn discriminative embeddings. Unlike previous pair-based losses, it optimizes the loss according to differences in the number of samples within each class and the structure of the sample distribution between classes. It consists of two terms: a sample balance loss, which assigns dynamic weights to the selected hard samples based on the ratio of easy to hard samples in the class, and a ranking consistency loss [23], which is weighted according to the distribution of the negative samples' categories.

- A sample mining method suited to remote sensing images is proposed. Intra-class hard sample mining selects five positive samples, and each positive sample is given a dynamic weight. Hard-class mining is used in place of hard-content mining. This method selects representative samples that carry richer information while speeding up convergence.

- We built an end-to-end fine-tuning network architecture for remote sensing image retrieval based on a convolutional neural network, and selected the configuration best suited to the task. In DCL, the loss and gradients are computed on sum-pooling (SPoC) [24] features. The loss function influences the activation distribution of the feature response map, which sharpens saliency and yields more discriminative features. In addition, we compared different combinations of multi-scale image processing, whitening, and query expansion, and finally selected the most suitable multi-scale cropping method to process the input data.
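As a rough illustration of two ingredients named in the contributions above, the following sketch computes sum-pooled (SPoC-style) descriptors and then picks the five hardest positives for a query. It is a minimal sketch under stated assumptions, not the authors' implementation: the function names `spoc_descriptor` and `hardest_positives`, the toy data, and the reading of "hard positive" as "same-class sample least similar to the query" are all assumptions. The dynamic weighting of the selected samples is not reproduced because its formula is not given in this section.

```python
import numpy as np


def spoc_descriptor(feature_map: np.ndarray) -> np.ndarray:
    """Sum-pool a (C, H, W) activation map into an L2-normalized C-vector."""
    pooled = feature_map.sum(axis=(1, 2))
    norm = np.linalg.norm(pooled)
    return pooled / norm if norm > 0 else pooled


def hardest_positives(embeddings, labels, anchor_idx, n=5):
    """Return indices of the n same-class samples least similar to the anchor.

    Assumption: rows of `embeddings` are unit vectors, so the dot product
    is the cosine similarity.
    """
    sims = embeddings @ embeddings[anchor_idx]
    same_class = np.flatnonzero(labels == labels[anchor_idx])
    same_class = same_class[same_class != anchor_idx]  # exclude the anchor itself
    order = np.argsort(sims[same_class])  # ascending similarity: hardest first
    return same_class[order[:n]]


rng = np.random.default_rng(0)
# Toy batch (hypothetical): 12 images, each with an 8-channel 4x4 activation map.
maps = rng.random((12, 8, 4, 4))
labels = np.array([0] * 8 + [1] * 4)

embeddings = np.stack([spoc_descriptor(m) for m in maps])
hard = hardest_positives(embeddings, labels, anchor_idx=0, n=5)
print(hard)
```

In a real pipeline the activation maps would come from the last convolutional layer of the fine-tuned network rather than from a random generator.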

2. Related Work

2.1. Remote Sensing Image Retrieval Methods

2.2. General Pair-Based Weighting Loss

2.2.1. Contrastive Loss

2.2.2. Triplet Loss

2.2.3. N-Pair Loss

2.2.4. Binomial Deviance Loss

2.2.5. Lifted Structured Loss

2.2.6. Multi-Similarity Loss

2.3. Sample Mining

3. Methodology

3.1. Distribution Consistency Loss

3.1.1. Sample Mining

3.1.2. Distribution Consistency Loss Weighting

3.1.3. Optimization Objective

4. Experiments and Discussion

4.1. Experimental Settings

4.1.1. Datasets

4.1.2. Performance Evaluation Criteria
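The result tables report mAP and P@k. As a hedged illustration of how such metrics are commonly computed, the sketch below uses the standard definitions; the exact protocol used in the paper (for example, whether the query itself is excluded from the ranking) is not stated in this section, so the helper names and conventions here are assumptions. Values are returned as fractions, whereas the tables report percentages.

```python
def precision_at_k(relevant, k):
    """Fraction of the top-k ranked results that are relevant.

    `relevant` is a list of 0/1 flags in ranked order. The denominator is
    always k, even when fewer than k relevant items exist.
    """
    return sum(relevant[:k]) / k


def average_precision(relevant):
    """Mean of the precision values at each rank where a relevant item occurs.

    Assumes the ranked list covers the whole database, so every relevant
    item appears somewhere in it.
    """
    hits = 0
    total = 0.0
    for rank, rel in enumerate(relevant, start=1):
        if rel:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0


def mean_average_precision(rankings):
    """Average the per-query AP over all queries."""
    return sum(average_precision(r) for r in rankings) / len(rankings)


# Toy ranking for one query: relevant results at ranks 1, 3 and 4.
ranked = [1, 0, 1, 1, 0]
p5 = precision_at_k(ranked, 5)
ap = average_precision(ranked)
print(p5, ap)
```

Under this definition P@k cannot exceed the number of relevant images divided by k, which is one plausible reading of the small P@1000 values reported in the tables.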

4.2. Non-Trivial Examples Mining

4.3. Pooling Methods

4.4. Multi-Scale Representation

4.5. Comparison of Sample Mining Methods

4.6. Comparison of Effects of Different Sample Numbers

4.7. Per-Class Results

4.8. Comparison with the State of the Art

4.9. Visualization Result

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ozkan, S.; Ates, T.; Tola, E.; Soysal, M.; Esen, E. Performance Analysis of State-of-the-Art Representation Methods for Geographical Image Retrieval and Categorization. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1996–2000.

- Napoletano, P. Visual descriptors for content-based retrieval of remote-sensing images. Int. J. Remote Sens. 2018, 39, 1343–1376.

- Liu, J.; Du, J.P.; Wang, X.R. Research on the Robust Image Representation Scheme for Natural Scene Categorization. Chin. J. Electron. 2013, 22, 341–346.

- Yang, Y.; Newsam, S. Geographic Image Retrieval Using Local Invariant Features. IEEE Trans. Geosci. Remote Sens. 2012, 51, 818–832.

- Hu, F.; Xia, G.S.; Wang, Z.; Huang, X.; Zhang, L.; Sun, H. Unsupervised Feature Learning Via Spectral Clustering of Multidimensional Patches for Remotely Sensed Scene Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2015–2030.

- Zhang, L.; Zhang, L.; Tao, D.; Huang, X. On Combining Multiple Features for Hyperspectral Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2011, 50, 879–893.

- Xiao, Q.K.; Liu, M.N.; Song, G. Development Remote Sensing Image Retrieval Based on Color and Texture. In Proceedings of the 2nd International Conference on Information Engineering and Applications, Chongqing, China, 26–28 October 2012; pp. 469–476.

- Ye, F.; Xiao, H.; Zhao, X.; Dong, M.; Luo, W.; Min, W. Remote Sensing Image Retrieval Using Convolutional Neural Network Features and Weighted Distance. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1535–1539.

- Zhou, W.; Newsam, S.; Li, C.; Shao, Z. PatternNet: A benchmark dataset for performance evaluation of remote sensing image retrieval. ISPRS J. Photogramm. Remote Sens. 2018, 145, 197–209.

- Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A Unified Embedding for Face Recognition and Clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 815–823.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2999–3007.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Li, Z.; Peng, C.; Yu, G.; Zhang, X.; Sun, J. Light-Head R-CNN: In Defense of Two-Stage Object Detector. arXiv 2017, arXiv:1711.07264.

- Gordo, A.; Almazán, J.; Revaud, J.; Larlus, D. End-to-End Learning of Deep Visual Representations for Image Retrieval. Int. J. Comput. Vis. 2017, 124, 237–254.

- Roy, S.; Sangineto, E.; Demir, B.; Sebe, N. Deep metric and hash-code learning for content-based retrieval of remote sensing images. In Proceedings of the IGARSS 2018–2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 4539–4542.

- Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Toronto, ON, Canada, 20 June 2005; pp. 539–546.

- Hermans, A.; Beyer, L.; Leibe, B. In Defense of the Triplet Loss for Person Re-Identification. arXiv 2017, arXiv:1703.07737.

- Oh Song, H.; Xiang, Y.; Jegelka, S.; Savarese, S. Deep metric learning via lifted structured feature embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4004–4012.

- Sohn, K. Improved deep metric learning with multi-class n-pair loss objective. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Barcelona, Spain, 5–10 December 2016; pp. 1857–1865.

- Oh Song, H.; Jegelka, S.; Rathod, V.; Murphy, K. Deep metric learning via facility location. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5382–5390.

- Law, M.T.; Urtasun, R.; Zemel, R.S. Deep spectral clustering learning. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, Australia, 6–11 August 2017; Volume 70, pp. 1985–1994.

- Movshovitz-Attias, Y.; Toshev, A.; Leung, T.K.; Ioffe, S.; Singh, S. No fuss distance metric learning using proxies. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 360–368.

- Fan, L.; Zhao, H.; Zhao, H.; Liu, P.; Hu, H. Distribution Structure Learning Loss (DSLL) Based on Deep Metric Learning for Image Retrieval. Entropy 2019, 21, 1121.

- Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems (GIS), San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883.

- Zhou, W.; Newsam, S.; Li, C.; Shao, Z. Learning Low Dimensional Convolutional Neural Networks for High-Resolution Remote Sensing Image Retrieval. Remote Sens. 2017, 9, 489.

- Xiong, W.; Lv, Y.; Cui, Y.; Zhang, X.; Gu, X. A Discriminative Feature Learning Approach for Remote Sensing Image Retrieval. Remote Sens. 2019, 11, 281.

- Marmanis, D.; Datcu, M.; Esch, T.; Stilla, U. Deep learning earth observation classification using ImageNet pretrained networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 105–109.

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; LeCun, Y. Overfeat: Integrated Recognition, Localization and Detection Using Convolutional Networks. arXiv 2013, arXiv:1312.6229.

- Tang, X.; Jiao, L.; Emery, W.J. SAR image content retrieval based on fuzzy similarity and relevance feedback. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1824–1842.

- Demir, B.; Bruzzone, L. Hashing-Based Scalable Remote Sensing Image Search and Retrieval in Large Archives. IEEE Trans. Geosci. Remote Sens. 2016, 54, 892–904.

- Imbriaco, R.; Sebastian, C.; Bondarev, E. Aggregated Deep Local Features for Remote Sensing Image Retrieval. Remote Sens. 2019, 11, 493.

- Kulis, B.; Grauman, K. Kernelized locality-sensitive hashing. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1092–1104.

- Li, Y.; Zhang, Y.; Huang, X.; Zhu, H.; Ma, J. Large-Scale Remote Sensing Image Retrieval by Deep Hashing Neural Networks. IEEE Trans. Geosci. Remote Sens. 2017, 56, 950–965.

- Roy, S.; Sangineto, E.; Demir, B.; Sebe, N. Metric-Learning based Deep Hashing Network for Content Based Retrieval of Remote Sensing Images; Cornell University: Ithaca, NY, USA, 2019.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Jose, CA, USA, 18–20 June 2009; pp. 248–255.

- Penatti, O.A.; Nogueira, K.; Dos Santos, J.A. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 24–27 June 2015; pp. 44–51.

- Jia, Y.; Shelhamer, E.; Donahue, J.; Karayev, S.; Long, J.; Girshick, R.; Guadarrama, S.; Darrell, T. Caffe: Convolutional architecture for fast feature embedding. In Proceedings of the 22nd ACM International Conference on Multimedia, Orlando, FL, USA, 3–7 November 2014; pp. 675–678.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the Devil in the Details: Delving Deep into Convolutional Nets. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014; pp. 1–11.

- Shao, Z.; Zhou, W.; Cheng, Q.; Diao, C.; Zhang, L. An effective hyperspectral image retrieval method using integrated spectral and textural features. Sens. Rev. 2015, 35, 274–281.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Chandrasekhar, V.; Lin, J.; Morere, O.; Goh, H.; Veillard, A. A practical guide to CNNs and Fisher Vectors for image instance retrieval. Signal Process. 2016, 128, 426–439.

- Babenko, A.; Lempitsky, V. Aggregating local deep features for image retrieval. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 24–27 June 2015; pp. 1269–1277.

- Gordo, A.; Almazán, J.; Revaud, J.; Larlus, D. Deep image retrieval: Learning global representations for image search. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 241–257.

- Zhao, W.; Du, S.; Wang, Q.; Emery, W.J. Contextually guided very-high-resolution imagery classification with semantic segments. ISPRS J. Photogramm. Remote Sens. 2017, 132, 48–60.

- Chaudhuri, U.; Banerjee, B.; Bhattacharya, A. Siamese graph convolutional network for content based remote sensing image retrieval. Comput. Vis. Image Underst. 2019, 184, 22–30.

- Ye, F.; Dong, M.; Luo, W.; Chen, X.; Min, W. A New Re-Ranking Method Based on Convolutional Neural Network and Two Image-to-Class Distances for Remote Sensing Image Retrieval. IEEE Access 2019, 7, 141498–141507.

- Chaudhuri, B.; Demir, B.; Chaudhuri, S.; Bruzzone, L. Multilabel Remote Sensing Image Retrieval Using a Semi-supervised Graph-Theoretic Method. IEEE Trans. Geosci. Remote Sens. 2017, 56, 1144–1158.

- Tolias, G.; Sicre, R.; Jégou, H. Particular Object Retrieval with Integral Max-Pooling of CNN Activations. arXiv 2015, arXiv:1511.05879.

- Radenović, F.; Tolias, G.; Chum, O. Fine-tuning CNN image retrieval with no human annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1655–1668.

- Noh, H.; Araujo, A.; Sim, J.; Weyand, T.; Han, B. Large-scale image retrieval with attentive deep local features. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 3456–3465.

- Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality reduction by learning an invariant mapping. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17 June 2006; pp. 1735–1742.

- Yi, D.; Lei, Z.; Li, S.Z. Deep Metric Learning for Practical Person Re-Identification. arXiv 2014, arXiv:1407.4979.

- Wang, X.; Han, X.; Huang, W.; Dong, D.; Scott, M.R. Multi-Similarity Loss with General Pair Weighting for Deep Metric Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Denton, TX, USA, 18–20 March 2019; pp. 5022–5030.

- Liu, H.; Cheng, J.; Wang, F. Sequential subspace clustering via temporal smoothness for sequential data segmentation. IEEE Trans. Image Process. 2017, 27, 866–878.

- Harwood, B.; Kumar, B.; Carneiro, G.; Reid, I.; Drummond, T. Smart mining for deep metric learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2821–2829.

- Wu, C.Y.; Manmatha, R.; Smola, A.J.; Krahenbuhl, P. Sampling matters in deep embedding learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2840–2848.

- Xiao, Q.; Luo, H.; Zhang, C. Margin Sample Mining Loss: A Deep Learning Based Method for Person Re-Identification. arXiv 2017, arXiv:1710.00478.

- Wang, X.; Hua, Y.; Kodirov, E.; Hu, G.; Garnier, R.; Robertson, N.M. Ranked List Loss for Deep Metric Learning. arXiv 2019, arXiv:1903.03238.

- Yuan, Y.; Yang, K.; Zhang, C. Hard-aware deeply cascaded embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 814–823.

- Cui, Y.; Zhou, F.; Lin, Y.; Belongie, S. Fine-grained categorization and dataset bootstrapping using deep metric learning with humans in the loop. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1153–1162.

- Prabhu, Y.; Varma, M. Fastxml: A fast, accurate and stable tree-classifier for extreme multi-label learning. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014; pp. 263–272.

- Wang, X.; Hua, Y.; Kodirov, E.; Hu, G.; Robertson, N.M. Deep metric learning by online soft mining and class-aware attention. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 5361–5368.

- Razavian, A.S.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN Features Off-the-Shelf: An Astounding Baseline for Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 806–813.

| Network | τ | P@5 | P@10 | P@50 | P@100 |
|---|---|---|---|---|---|
| VGG16 | 0.85 | 97.89 | 97.89 | 95.68 | 94.68 |
| VGG16 | 1.05 | 98.42 | 98.16 | 97.37 | 95.84 |
| VGG16 | 1.25 | 97.37 | 97.32 | 96.05 | 94.43 |
| VGG16 | 1.45 | 97.89 | 98.42 | 96.74 | 94.18 |
| ResNet50 | 0.85 | 98.42 | 97.89 | 97.21 | 95.89 |
| ResNet50 | 1.05 | 98.95 | 98.68 | 97.26 | 96.11 |
| ResNet50 | 1.25 | 99.47 | 99.21 | 98.53 | 98.08 |
| ResNet50 | 1.45 | 99.47 | 99.21 | 97.84 | 96.87 |

| Network | m | P@5 | P@10 | P@50 | P@100 |
|---|---|---|---|---|---|
| VGG16 | 0 | 98.42 | 97.63 | 95.11 | 93.21 |
| VGG16 | 0.2 | 98.95 | 98.42 | 98.16 | 98.00 |
| VGG16 | 0.4 | 98.95 | 98.95 | 98.42 | 97.82 |
| VGG16 | 0.6 | 98.22 | 98.22 | 98.69 | 98.35 |
| VGG16 | 0.8 | 99.21 | 99.58 | 98.86 | 98.20 |
| VGG16 | 1.0 | 99.47 | 99.74 | 99.11 | 98.34 |
| ResNet50 | 0.2 | 100.00 | 99.74 | 99.21 | 98.82 |
| ResNet50 | 0.4 | 98.95 | 98.95 | 98.42 | 97.82 |
| ResNet50 | 0.6 | 100.00 | 100.00 | 99.84 | 99.71 |
| ResNet50 | 0.8 | 98.98 | 99.47 | 99.79 | 99.58 |
| ResNet50 | 1.0 | 99.47 | 99.74 | 99.42 | 99.26 |
| ResNet50 | 1.2 | 100.00 | 100.00 | 99.89 | 99.81 |

| Network | Scale (pooling over scales) | mAP | P@5 | P@10 | P@50 | P@100 |
|---|---|---|---|---|---|---|
| VGG16 |  | 96.43 | 97.89 | 98.42 | 98.16 | 97.42 |
| VGG16 |  | 98.05 | 98.95 | 99.21 | 99.32 | 98.95 |
| VGG16 |  | 94.66 | 98.95 | 97.89 | 97.21 | 96.05 |
| VGG16 |  | 94.09 | 98.42 | 97.89 | 96.84 | 95.66 |
| VGG16 |  | 91.27 | 96.84 | 97.37 | 95.58 | 93.74 |
| ResNet50 |  | 99.38 | 100.00 | 99.74 | 99.95 | 99.68 |
| ResNet50 |  | 99.43 | 100.00 | 100.00 | 99.89 | 99.66 |
| ResNet50 |  | 99.30 | 100.00 | 100.00 | 99.95 | 99.58 |
| ResNet50 |  | 98.79 | 100.00 | 100.00 | 99.84 | 99.50 |
| ResNet50 |  | 97.96 | 100.00 | 100.00 | 99.74 | 98.61 |

| Dataset | Structural Loss | mAP | P@5 | P@10 | P@50 | P@100 | P@1000 |
|---|---|---|---|---|---|---|---|
| UCMD | Triplet Loss | 92.96 | 98.04 | 96.63 | 92.62 | 46.16 | 4.69 |
| UCMD | N-pair-mc Loss | 91.81 | 94.04 | 91.46 | 90.49 | 45.08 | 4.67 |
| UCMD | Proxy NCA Loss | 95.72 | 97.98 | 96.65 | 94.23 | 47.02 | 4.71 |
| UCMD | Lifted Struct Loss | 96.08 | 98.90 | 97.82 | 95.78 | 47.46 | 4.76 |
| UCMD | DSLL | 97.34 | 98.98 | 98.42 | 96.93 | 48.67 | 4.86 |
| UCMD | Our DCL | 98.76 | 100.00 | 100.00 | 99.33 | 49.82 | 5.21 |
| PatternNet | Triplet Loss | 94.94 | 99.52 | 97.92 | 96.13 | 95.07 | 15.61 |
| PatternNet | N-pair-mc Loss | 94.11 | 97.94 | 95.15 | 94.33 | 98.17 | 15.52 |
| PatternNet | Proxy NCA Loss | 97.71 | 98.56 | 98.69 | 98.89 | 98.45 | 15.74 |
| PatternNet | Lifted Struct Loss | 98.58 | 98.05 | 98.62 | 98.75 | 98.88 | 15.79 |
| PatternNet | DSLL | 98.52 | 99.09 | 98.03 | 96.68 | 98.69 | 15.83 |
| PatternNet | Our DCL | 99.43 | 100.00 | 100.00 | 99.89 | 99.66 | 16.38 |
| NWPU-RESISC45 | Triplet Loss | 93.82 | 98.65 | 96.85 | 96.07 | 94.83 | 15.34 |
| NWPU-RESISC45 | N-pair-mc Loss | 93.06 | 97.86 | 95.12 | 94.35 | 98.15 | 15.46 |
| NWPU-RESISC45 | Proxy NCA Loss | 97.68 | 97.54 | 97.57 | 97.91 | 97.44 | 15.69 |
| NWPU-RESISC45 | Lifted Struct Loss | 97.47 | 97.03 | 97.42 | 97.63 | 97.72 | 15.76 |
| NWPU-RESISC45 | DSLL | 98.54 | 99.05 | 98.15 | 96.34 | 98.45 | 15.78 |
| NWPU-RESISC45 | Our DCL | 99.44 | 100.00 | 100.00 | 99.91 | 99.70 | 16.42 |

| Number (n) | Weights | mAP |
|---|---|---|
| 1 | 1 | 95.85 |
| 5 | 1 | 93.21 |
| 5 | 1/n | 94.93 |
| 5 | Dynamic | 97.48 |
| 10 | 1 | 90.15 |
| 10 | 1/n | 93.85 |
| 10 | Dynamic | 95.32 |

| Number | Category Restrictions | mAP |
|---|---|---|
| 5 | yes | 97.48 |
| 5 | no | 95.16 |
| 10 | yes | 94.52 |
| 10 | no | 92.14 |
| 15 | yes | 93.42 |
| 15 | no | 91.35 |

| Categories | VGG16 (Pretrained) | VGG16 (DCL) | ResNet50 (Pretrained) | ResNet50 (DCL) |
|---|---|---|---|---|
| Agriculture (1) | 0.94 | 1.00 | 0.99 | 1.00 |
| Airplane (2) | 0.66 | 1.00 | 0.99 | 1.00 |
| Baseball diamond (3) | 0.60 | 0.99 | 0.59 | 1.00 |
| Beach (4) | 0.99 | 1.00 | 0.99 | 1.00 |
| Buildings (5) | 0.33 | 0.74 | 0.37 | 0.99 |
| Chaparral (6) | 0.99 | 1.00 | 1.00 | 1.00 |
| Dense residential (7) | 0.36 | 0.94 | 0.24 | 0.97 |
| Forest (8) | 0.88 | 1.00 | 0.99 | 1.00 |
| Freeway (9) | 0.55 | 0.99 | 0.87 | 0.99 |
| Golf course (10) | 0.42 | 0.99 | 0.83 | 1.00 |
| Harbor (11) | 0.59 | 1.00 | 0.68 | 1.00 |
| Intersection (12) | 0.31 | 0.98 | 0.31 | 0.98 |
| Medium residential (13) | 0.48 | 0.93 | 0.61 | 0.99 |
| Mobile home park (14) | 0.58 | 1.00 | 0.72 | 1.00 |
| Overpass (15) | 0.37 | 0.97 | 0.51 | 0.99 |
| Parking lot (16) | 0.79 | 1.00 | 0.32 | 0.83 |
| River (17) | 0.67 | 0.98 | 0.60 | 0.99 |
| Runway (18) | 0.57 | 1.00 | 0.89 | 1.00 |
| Sparse residential (19) | 0.11 | 0.89 | 0.55 | 0.99 |
| Storage tanks (20) | 0.77 | 0.99 | 0.88 | 1.00 |
| Tennis court (21) | 0.39 | 1.00 | 0.78 | 1.00 |

| Categories | VGG16 (Pretrained) | VGG16 (DCL) | ResNet50 (Pretrained) | ResNet50 (DCL) |
|---|---|---|---|---|
| Airplane (1) | 0.95 | 1.00 | 0.92 | 1.00 |
| Baseball field (2) | 0.97 | 0.99 | 0.96 | 1.00 |
| Basketball court (3) | 0.50 | 0.97 | 0.45 | 0.98 |
| Beach (4) | 1.00 | 1.00 | 0.99 | 1.00 |
| Bridge (5) | 0.24 | 0.98 | 0.13 | 0.99 |
| Cemetery (6) | 0.93 | 1.00 | 0.93 | 1.00 |
| Chaparral (7) | 0.99 | 1.00 | 1.00 | 1.00 |
| Christmas tree farm (8) | 0.98 | 1.00 | 0.83 | 1.00 |
| Closed road (9) | 0.93 | 0.99 | 0.91 | 0.99 |
| Coastal mansion (10) | 0.99 | 0.97 | 0.98 | 0.99 |
| Crosswalk (11) | 0.96 | 1.00 | 0.93 | 1.00 |
| Dense residential (12) | 0.52 | 0.82 | 0.46 | 0.99 |
| Ferry terminal (13) | 0.58 | 0.83 | 0.40 | 0.87 |
| Football field (14) | 0.97 | 0.99 | 0.89 | 1.00 |
| Forest (15) | 0.99 | 1.00 | 1.00 | 1.00 |
| Freeway (16) | 0.99 | 1.00 | 0.99 | 1.00 |
| Golf course (17) | 0.95 | 0.99 | 0.95 | 0.99 |
| Harbor (18) | 0.89 | 0.96 | 0.92 | 0.96 |
| Intersection (19) | 0.52 | 0.98 | 0.51 | 0.99 |
| Mobile home park (20) | 0.86 | 0.99 | 0.81 | 1.00 |
| Nursing home (21) | 0.23 | 0.96 | 0.59 | 0.98 |
| Oil gas field (22) | 0.99 | 1.00 | 0.99 | 1.00 |
| Oilwell (23) | 1.00 | 1.00 | 1.00 | 1.00 |
| Overpass (24) | 0.77 | 0.99 | 0.90 | 0.99 |
| Parking lot (25) | 0.99 | 0.99 | 0.98 | 1.00 |
| Parking space (26) | 0.52 | 1.00 | 0.47 | 1.00 |
| Railway (27) | 0.83 | 0.99 | 0.78 | 1.00 |
| River (28) | 0.99 | 1.00 | 0.99 | 1.00 |
| Runway (29) | 0.29 | 0.99 | 0.36 | 0.99 |
| Runway marking (30) | 0.99 | 0.99 | 0.99 | 1.00 |
| Shipping yard (31) | 0.97 | 0.99 | 0.99 | 0.99 |
| Solar panel (32) | 0.99 | 0.99 | 0.99 | 1.00 |
| Sparse residential (33) | 0.64 | 0.91 | 0.47 | 0.99 |
| Storage tank (34) | 0.42 | 0.99 | 0.55 | 0.99 |
| Swimming pool (35) | 0.18 | 0.96 | 0.43 | 0.99 |
| Tennis court (36) | 0.59 | 0.91 | 0.31 | 0.97 |
| Transformer station (37) | 0.69 | 0.99 | 0.63 | 0.99 |
| Wastewater plant (38) | 0.91 | 0.98 | 0.90 | 0.99 |

| Categories | VGG16 (Pretrained) | VGG16 (DCL) | ResNet50 (Pretrained) | ResNet50 (DCL) |
|---|---|---|---|---|
| Airplane (1) | 0.76 | 1.00 | 0.88 | 1.00 |
| Airport (2) | 0.66 | 0.97 | 0.72 | 1.00 |
| Baseball Diamond (3) | 0.64 | 0.97 | 0.69 | 0.99 |
| Basketball Court (4) | 0.51 | 0.95 | 0.60 | 0.98 |
| Beach (5) | 0.77 | 1.00 | 0.76 | 1.00 |
| Bridge (6) | 0.85 | 1.00 | 0.72 | 1.00 |
| Chaparral (7) | 1.00 | 1.00 | 1.00 | 1.00 |
| Church (8) | 0.55 | 0.97 | 0.56 | 0.97 |
| Circular Farmland (9) | 0.93 | 1.00 | 0.96 | 1.00 |
| Cloud (10) | 0.89 | 1.00 | 0.91 | 1.00 |
| Commercial Area (11) | 0.64 | 0.99 | 0.81 | 1.00 |
| Dense residential (12) | 0.77 | 1.00 | 0.88 | 1.00 |
| Desert (13) | 0.84 | 1.00 | 0.86 | 1.00 |
| Forest (14) | 1.00 | 1.00 | 0.94 | 1.00 |
| Freeway (15) | 0.71 | 0.92 | 0.62 | 0.98 |
| Golf course (16) | 0.79 | 0.99 | 0.95 | 1.00 |
| Ground Track Field (17) | 0.80 | 0.97 | 0.62 | 0.98 |
| Harbor (18) | 0.84 | 1.00 | 0.92 | 1.00 |
| Industrial Area (19) | 0.71 | 0.98 | 0.76 | 0.99 |
| Intersection (20) | 0.70 | 0.95 | 0.63 | 0.98 |
| Island (21) | 1.00 | 1.00 | 1.00 | 1.00 |
| Lake (22) | 0.88 | 1.00 | 0.80 | 1.00 |
| Meadow (23) | 0.82 | 1.00 | 0.84 | 1.00 |
| Medium Residential (24) | 0.70 | 0.99 | 0.78 | 1.00 |
| Mobile Home Park (25) | 0.71 | 1.00 | 0.92 | 1.00 |
| Mountain (26) | 0.86 | 1.00 | 0.87 | 1.00 |
| Overpass (27) | 0.72 | 0.99 | 0.86 | 1.00 |
| Palace (28) | 0.52 | 0.94 | 0.40 | 0.98 |
| Parking Lot (29) | 0.87 | 1.00 | 1.00 | 1.00 |
| Railway (30) | 0.77 | 1.00 | 0.89 | 1.00 |
| Railway Station (31) | 0.65 | 0.95 | 0.63 | 0.98 |
| Rectangular Farmland (32) | 0.85 | 1.00 | 0.83 | 0.99 |
| River (33) | 0.69 | 0.98 | 0.69 | 0.99 |
| Roundabout (34) | 0.80 | 1.00 | 0.71 | 1.00 |
| Runway (35) | 0.77 | 1.00 | 0.78 | 1.00 |
| Sea Ice (36) | 1.00 | 1.00 | 1.00 | 1.00 |
| Ship (37) | 0.65 | 0.91 | 0.60 | 0.98 |
| Snowberg (38) | 0.95 | 1.00 | 0.96 | 1.00 |
| Sparse Residential (39) | 0.81 | 1.00 | 0.68 | 0.98 |
| Stadium (40) | 0.76 | 1.00 | 0.80 | 1.00 |
| Storage Tank (41) | 0.81 | 1.00 | 0.87 | 1.00 |
| Tennis Court (42) | 0.56 | 0.99 | 0.81 | 1.00 |
| Terrace (43) | 0.73 | 1.00 | 0.87 | 1.00 |
| Thermal Power Station (44) | 0.64 | 0.98 | 0.66 | 0.98 |
| Wetland (45) | 0.68 | 0.98 | 0.83 | 1.00 |

| Features | mAP | P@5 | P@10 | P@50 | P@100 | P@1000 |
|---|---|---|---|---|---|---|
| RAN-KNN [46] | 26.74 | - | 24.90 | - | - | - |
| GoogLeNet [46] | 53.13 | - | 80.96 | - | - | - |
| VGG-VD19 [46] | 53.19 | - | 77.60 | - | - | - |
| VGG-VD16 [46] | 53.71 | - | 78.34 | - | - | - |
| KSLSH [32] | 63.00 | - | - | - | - | - |
| GCN [46] | 64.81 | - | 87.12 | - | - | - |
| SGCN [46] | 69.89 | - | 93.63 | - | - | - |
| VGG16 [27] | 81.30 | 89.50 | 87.60 | 78.30 | - | - |
| ResNet50 [27] | 84.00 | 91.90 | 91.40 | 84.50 | - | - |
| MiLaN [34] | 90.40 | - | - | - | - | - |
| FC6 (VGG16) [47] | 91.65 | - | - | - | - | - |
| FC7 (VGG16) [47] | 92.00 | - | - | - | - | - |
| Pool5 (ResNet50) [47] | 95.62 | - | - | - | - | - |
| Ours (VGG16) | 97.34 | 97.14 | 97.62 | 98.67 | 48.95 | 3.98 |
| Ours (ResNet50) | 98.76 | 100.00 | 100.00 | 99.33 | 49.82 | 5.21 |

| Features | mAP | P@5 | P@10 | P@50 | P@100 | P@1000 |
|---|---|---|---|---|---|---|
| G-KNN [46] | 12.35 | - | 13.24 | - | - | - |
| RAN-KNN [46] | 22.56 | - | 37.70 | - | - | - |
| UFL [9] | 25.35 | 52.09 | 48.82 | 38.11 | 31.92 | 9.79 |
| Gabor Texture [9] | 27.73 | 68.55 | 62.78 | 44.61 | 35.52 | 8.99 |
| VLAD [9] | 34.10 | 58.25 | 55.70 | 47.57 | 41.11 | 11.04 |
| VGG-VD19 [46] | 57.89 | - | 91.13 | - | - | - |
| VGG-VD16 [46] | 59.86 | - | 92.04 | - | - | - |
| VGGF Fc1 [9] | 61.95 | 92.46 | 90.37 | 79.26 | 69.05 | 14.25 |
| GoogLeNet [46] | 63.11 | - | 93.31 | - | - | - |
| VGGF Fc2 [9] | 63.37 | 91.52 | 89.64 | 79.99 | 70.47 | 14.52 |
| VGGS Fc1 [9] | 63.28 | 92.74 | 90.70 | 80.03 | 70.13 | 14.36 |
| VGGS Fc2 [9] | 63.74 | 91.92 | 90.09 | 80.31 | 70.73 | 14.55 |
| ResNet50 [9] | 68.23 | 94.13 | 92.41 | 83.71 | 74.93 | 14.64 |
| LDCNN [9] | 69.17 | 66.81 | 66.11 | 67.47 | 68.80 | 14.08 |
| SGCN [46] | 71.79 | - | 97.14 | - | - | - |
| GCN [46] | 73.11 | - | 95.53 | - | - | - |
| FC6 (VGG16) [47] | 96.21 | - | - | - | - | - |
| FC7 (VGG16) [47] | 96.48 | - | - | - | - | - |
| Pool5 (ResNet50) [47] | 98.49 | - | - | - | - | - |
| Ours (VGG16) | 98.05 | 98.95 | 99.21 | 99.32 | 98.95 | 15.47 |
| Ours (ResNet50) | 99.43 | 100.00 | 100.00 | 99.89 | 99.66 | 16.38 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fan, L.; Zhao, H.; Zhao, H. Distribution Consistency Loss for Large-Scale Remote Sensing Image Retrieval. Remote Sens. 2020, 12, 175. https://doi.org/10.3390/rs12010175
