1. Introduction

Spatial modeling has become an integral component of geographic information systems (GISs) and remote sensing. Combined with classical statistical and machine learning algorithms, spatial modeling in GIS has been used to address wide-ranging questions in a broad array of disciplines, from epidemiology [1] and climate science [2] to geosciences [3] and natural resources [4,5,6]. However, in most GISs, the current workflow used to integrate statistical and machine learning algorithms and to process raster models limits the types of analyses that can be performed. This process can be generally described as a series of sequential steps: (1) build a sample data set using a GIS; (2) import that sample data set into statistical software such as SAS [7], R [8], or MATLAB [9]; (3) define a relationship (e.g., a predictive regression model) between response and explanatory variables that can be used within a GIS to create predictive surfaces; and (4) build a representative spatial model within a GIS that uses the outputs from the predictive model to create spatially explicit surfaces. The multi-software complexity of this practice often warrants building tools that streamline and automate many aspects of the process, especially the export and import steps that bridge different software. However, a number of challenges place significant limitations on producing final outputs in this manner, including learning additional software, implementing predictive model outputs, managing large data sets, and handling the long processing times and large storage requirements associated with this workflow [10,11]. These challenges have intensified over the past decade because large, fine-resolution remote sensing data sets, such as meter and sub-meter imagery and Lidar, have become widely available and less expensive to procure, but the tools to use such data efficiently and effectively have not always kept pace, especially in the desktop environment.

To address some of these limitations, advances in both GIS and statistical software have focused on integrating functionality through coding libraries that extend the capabilities of the software packages that use them. Common examples include RSGISlib [12], GDAL [13], SciPy [14], ALGLIB [15], and Accord.NET [16]. At the same time, new processing techniques have been developed to address common big data challenges by more fully leveraging improvements in computer hardware and software configurations. For example, parallel processing libraries such as OpenCL [17] and CUDA [18] are stable and actively used within the GIS community [19,20]. Similarly, frameworks such as Hadoop [21] are being used to facilitate cloud computing and offer improvements in big data processing by partitioning processes across multiple CPUs within large server farms, thereby improving user access, affordability, reliability, and data sharing [22,23]. While the integration, functionality, and capabilities of GIS and statistical software continue to expand, the underlying framework of how procedures and methods are used within spatial models in GIS tends to remain the same, which can impose artificial limitations on the type and scale of analyses that can be performed.

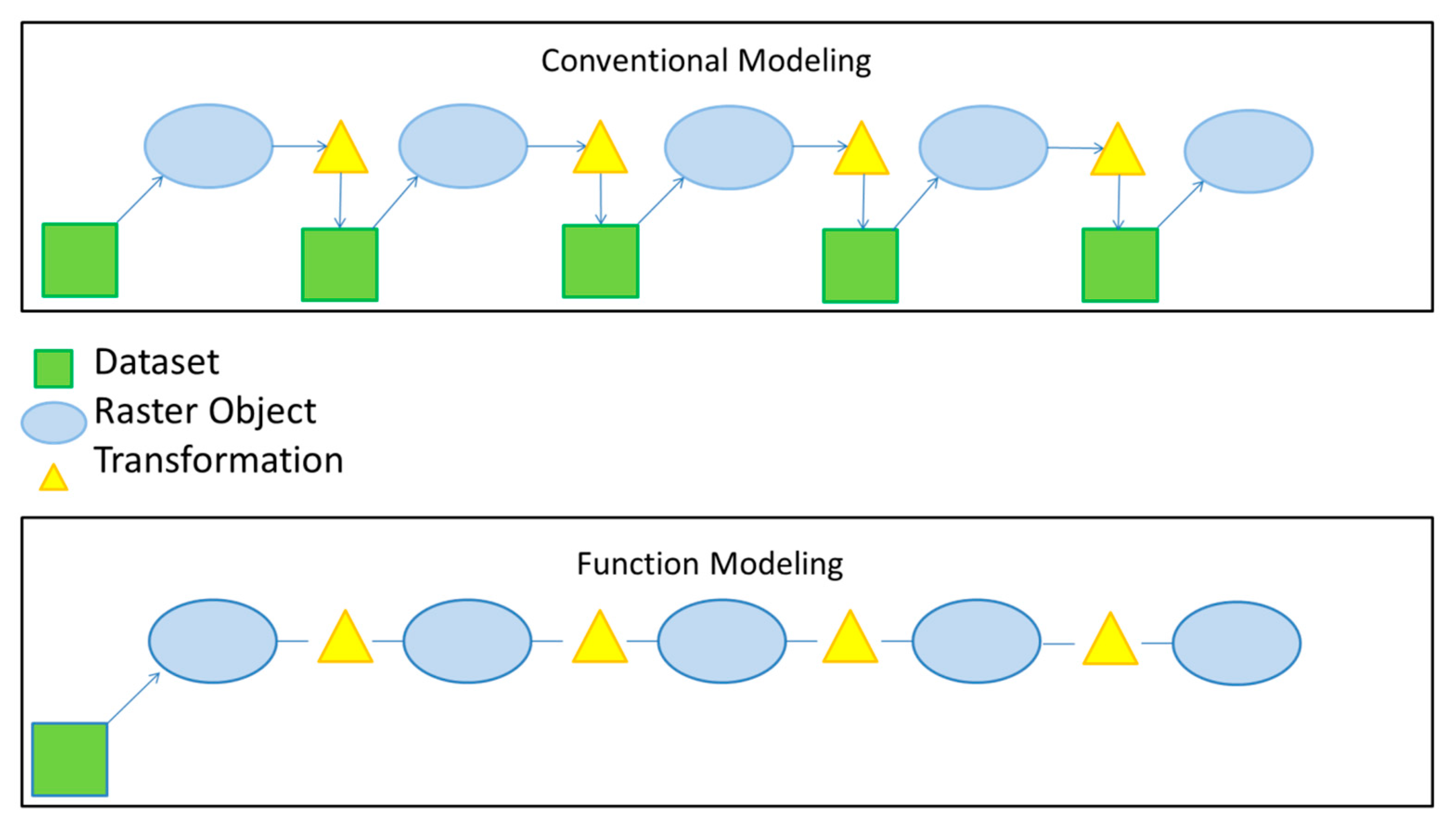

Spatial models are typically composed of multiple sequential operations. Each operation reads data from a given data set, transforms the data, and then creates a new data set (Figure 1). In programming, this is called eager evaluation (or strict semantics) and is characterized by a flow that evaluates all expressions (i.e., arguments) regardless of whether their values are needed to generate the final results [24]. Though eager evaluation is intuitive and used by many traditional programming languages, writing and reading a new data set at each step of a GIS model carries a high processing and storage cost and is not viable for large-area analyses outside of the supercomputing environment, which is not currently available to the vast majority of GIS users.
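To make the cost of eager evaluation concrete, the following Python sketch (assuming NumPy) runs a three-step model eagerly, writing every intermediate raster and reading it back for the next step. `CountingStore` and `run_eager` are hypothetical stand-ins for disk storage and a conventional model runner, not part of any GIS API.

```python
import numpy as np


class CountingStore:
    """Hypothetical stand-in for disk storage that counts full-raster reads and writes."""

    def __init__(self):
        self.reads = 0
        self.writes = 0
        self.cells_written = 0
        self._rasters = {}

    def write(self, name, array):
        self.writes += 1
        self.cells_written += array.size
        self._rasters[name] = array.copy()

    def read(self, name):
        self.reads += 1
        return self._rasters[name]


def run_eager(store, source_name, operations):
    """Eager model: each operation reads a full raster and persists a full new raster."""
    current = source_name
    for i, op in enumerate(operations):
        result = op(store.read(current))
        current = f"step_{i}"
        store.write(current, result)  # intermediate output written to "disk"
    return store.read(current)


store = CountingStore()
store.write("source", np.arange(16, dtype=np.float32).reshape(4, 4))
operations = [
    lambda a: a * 2.0,                     # arithmetic
    lambda a: a + 1.0,                     # arithmetic
    lambda a: np.where(a > 10.0, a, 0.0),  # conditional
]
output = run_eager(store, "source", operations)
```

Each step adds one full-size write and one full-size read, so disk traffic grows linearly with the number of operations (here, 4 writes and 4 reads for a 3-step model on a 16-cell raster).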

In contrast, lazy evaluation (also called lazy reading or call-by-need) can be employed to perform the same types of analyses while reducing the number of reads from and writes to disk, which reduces processing time and storage. In lazy evaluation, actions are delayed until their results are required, and results can be generated without requiring the independent evaluation of each expression [24]. From a mathematical perspective, this is similar to function composition, in which functions are sequentially combined into a composite function that is capable of generating results without evaluating each function independently. The theoretical foundations of lazy evaluation employed in functional programming languages date back to as early as 1971, as described by Jian et al. [25]. Tremblay [26] provides a thorough discussion of the different classes of functional programming languages and their expressiveness as it relates to the spectrum of strict and non-strict evaluation. He defines strict (i.e., eager) approaches as those that evaluate all of the arguments before starting to evaluate the body of the function and evaluate the body only if all arguments are defined, and lazy approaches as those that evaluate the body of the function before evaluating any of the arguments and evaluate the arguments only if they are needed while evaluating the body.
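The two ideas in this paragraph, function composition and Tremblay's strict versus lazy argument evaluation, can be sketched in a few lines of Python (assuming NumPy). This is illustrative only: the thunks below are re-evaluated each time they are called (call-by-name), whereas true call-by-need would also cache each thunk's value after its first use. All function names are hypothetical.

```python
from functools import reduce
from typing import Callable

import numpy as np

Op = Callable[[np.ndarray], np.ndarray]


def compose(*ops: Op) -> Op:
    """Fold ops into one composite function applied left to right."""
    return reduce(lambda f, g: (lambda x: g(f(x))), ops, lambda x: x)


def choose_strict(use_first: bool, a, b):
    """Strict: the caller has already evaluated both a and b before the body runs."""
    return a if use_first else b


def choose_lazy(use_first: bool, a_thunk: Callable[[], object], b_thunk: Callable[[], object]):
    """Non-strict: arguments are thunks, evaluated only if the body needs them."""
    return a_thunk() if use_first else b_thunk()


evaluated = []


def expensive(tag: str) -> str:
    evaluated.append(tag)  # record that this argument was actually computed
    return tag


# Composite model sqrt(2x + 1), evaluated as one function rather than three passes.
model = compose(lambda a: a * 2.0, lambda a: a + 1.0, np.sqrt)
result = model(np.array([4.0, 12.0]))

strict_value = choose_strict(True, expensive("a"), expensive("b"))                 # computes "a" and "b"
lazy_value = choose_lazy(True, lambda: expensive("c"), lambda: expensive("d"))     # computes only "c"
```

The unused argument `"d"` is never computed in the lazy call, which is the property that lets a lazy spatial model skip work whose results are never requested.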

In GISs, operations in conventional spatial models are evaluated and combined eagerly, generating intermediate data sets whose values are written to disk and used to produce final results. In a lazy evaluation context, evaluation is delayed, intermediate data sets are generated only if needed, and final data sets are not stored to disk but are instead processed dynamically when needed (Figure 1). For example, although an analysis may be conducted over a very large area at high resolution, final results can be generated easily in a call-by-need fashion for the smaller extent being visualized on a screen or map, without generating the entire data set for the full extent of the analysis.
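A minimal sketch of call-by-need reading for a display extent, assuming NumPy. `LazyRaster` and `synthetic_source` are hypothetical illustrations, not ArcObjects classes: the model is stored as a function and applied only to the block that is requested, so a 10-billion-cell extent is never materialized.

```python
import numpy as np


class LazyRaster:
    """Hypothetical raster whose cell values are computed on demand for a requested window."""

    def __init__(self, shape, source, model):
        self.shape = shape        # (rows, cols) of the full analysis extent
        self.source = source      # callable(row_slice, col_slice) -> block of input values
        self.model = model        # composite function applied to each requested block
        self.cells_computed = 0   # how much work has actually been done

    def read(self, row0, col0, nrows, ncols):
        rs = slice(row0, min(row0 + nrows, self.shape[0]))
        cs = slice(col0, min(col0 + ncols, self.shape[1]))
        block = self.model(self.source(rs, cs))
        self.cells_computed += block.size
        return block


def synthetic_source(rs, cs):
    """Stand-in for reading a block from disk: value = row index + column index."""
    rows = np.arange(rs.start, rs.stop)[:, None]
    cols = np.arange(cs.start, cs.stop)[None, :]
    return (rows + cols).astype(np.float32)


# A 100,000 x 100,000 extent (10 billion cells) is defined but never computed in full...
raster = LazyRaster((100_000, 100_000), synthetic_source, lambda a: a * 0.5)
# ...only the 512 x 512 "screen" window is evaluated.
view = raster.read(50_000, 50_000, 512, 512)
```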

In this paper, we introduce a new GIS spatial modeling framework called function modeling (FM) and highlight some of the advantages of processing big data spatial models using lazy evaluation techniques. Simulations and two case studies are used to evaluate the costs and benefits of alternative methods, and an open source .NET coding library built around ESRI (Redlands, CA, USA) ArcObjects is discussed. The coding library facilitates a wide range of big data spatial, statistical, and machine learning analyses, including FM.

2. Materials and Methods

2.1. .NET Libraries

To streamline the spatial modeling process, we developed a series of coding libraries that leverage concepts of lazy evaluation using function raster data sets [10,11] and integrate numeric, statistical, and machine learning algorithms with ESRI’s ArcObjects [27]. Combined, these libraries facilitate FM, which allows users to chain modeling steps and complex algorithms into one raster data set or field calculation without writing the outputs of intermediate modeling steps to disk. Our libraries were built using an object-oriented design, the .NET Framework, ESRI’s ArcObjects [27], ALGLIB [15], and Accord.NET [16]; they are accessible to computer programmers and analysts through our Subversion site [28] and are packaged in an add-in toolbar for ESRI ArcGIS [11]. The library and toolbar together are referred to here as the U.S. Forest Service Rocky Mountain Research Station Raster Utility (the RMRS Raster Utility) [28].

The methods and procedures within the RMRS Raster Utility library parallel many of the functions found in ESRI’s Spatial Analyst extension, including focal, aggregation, resampling, convolution, remap, local, arithmetic, mathematical, logical, zonal, surface, and conditional operations. However, the libraries used in this study also support multiband manipulations and perform these transformations without writing intermediate or final output raster data sets to disk. The spatial modeling framework used in the Utility stores only the manipulations applied to data sets and applies those transformations dynamically at the time data are read. In addition to common raster transformations, the Utility includes multiband procedures such as gray level co-occurrence matrix (GLCM), time series transformations, landscape metrics, entropy and angular second moment calculations for focal and zonal procedures, image normalization, and other statistical and machine learning transformations that are integrated directly into this modeling framework.
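The idea of storing only the manipulations and applying them when data are read can be illustrated with a serializable "recipe" of operations. This Python sketch assumes NumPy; the operation names, parameters, and JSON layout are hypothetical and do not reflect the RMRS Raster Utility's actual function raster format.

```python
import json

import numpy as np

# Hypothetical operation registry: each entry maps (block, params) -> new block.
# Multiband blocks have shape (bands, rows, cols).
OPS = {
    "normalized_difference": lambda a, p: (a[p["b1"]] - a[p["b2"]]) / (a[p["b1"]] + a[p["b2"]] + 1e-9),
    "scale": lambda a, p: a * p["factor"],
    "threshold": lambda a, p: np.where(a >= p["min"], a, p["fill"]),
}


class FunctionRasterRecipe:
    """Stores only the chain of transformations; no pixel values are kept."""

    def __init__(self, steps=None):
        self.steps = list(steps) if steps else []

    def add(self, op, **params):
        self.steps.append({"op": op, "params": params})
        return self  # enables chaining

    def to_json(self):
        return json.dumps(self.steps)

    @classmethod
    def from_json(cls, text):
        return cls(json.loads(text))

    def apply(self, block):
        """Apply every stored transformation to a block at read time."""
        for step in self.steps:
            block = OPS[step["op"]](block, step["params"])
        return block


recipe = (FunctionRasterRecipe()
          .add("normalized_difference", b1=0, b2=1)  # e.g., NIR and red bands
          .add("scale", factor=100.0)
          .add("threshold", min=20.0, fill=0.0))
saved = recipe.to_json()  # well under 1 KB, regardless of raster size
restored = FunctionRasterRecipe.from_json(saved)

block = np.array([[[0.6, 0.5]], [[0.2, 0.5]]])  # 2 bands, 1 row, 2 columns
values = restored.apply(block)
```

Only `saved` would need to be persisted; pixel values are produced by `apply` whenever a block is read.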

While the statistical and machine learning transformations can be used to build surfaces and calculate records within a tabular field, they do not in themselves define the relationships between response and explanatory variables the way a predictive model does. To define these relationships, we built a suite of classes that perform a wide variety of statistical tests and predictive modeling using many of the optimization algorithms and mathematical procedures found within ALGLIB and Accord.NET [15,16]. Typically, in natural resource applications that use GIS, including the case studies presented in Section 2.3, analysts use samples drawn from a population to test hypotheses and create generalized associations (e.g., an equation or procedure) between variables of interest that are expensive to collect in the field (i.e., response variables) and variables that are less costly and thought to be related to the response variables (i.e., explanatory variables; Figure 2 and Figure 3). For example, it is common to use the information contained in remotely sensed images to predict physical characteristics of vegetation that can otherwise be measured accurately, but at relatively high cost, with ground plots. While the inner workings and assumptions of the algorithms used to develop these relationships are beyond the scope of this paper, the classes developed to use these algorithms within a spatial context allow computer programmers and analysts to apply these techniques in the FM context relatively easily, without writing code for the algorithms themselves.
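The sample-then-predict pattern can be sketched as fitting a model on plot samples and wrapping the fitted coefficients as a function that is applied to raster blocks at read time. This Python sketch assumes NumPy and uses ordinary least squares as a simple stand-in for the ALGLIB and Accord.NET procedures; the sample values are hypothetical and built from an exact linear relation for illustration.

```python
import numpy as np


def fit_ols(X, y):
    """Ordinary least squares with an intercept; returns [b0, b1, ..., bk]."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def as_raster_function(coef):
    """Wrap coefficients as a function mapping a (bands, rows, cols) block to predictions."""
    def predict(block):
        return coef[0] + np.tensordot(coef[1:], block, axes=(0, 0))
    return predict


# Hypothetical plot samples: two explanatory variables (e.g., spectral values at plot
# locations) and a ground-measured response generated from a known linear relation.
X = np.array([[0.1, 0.3], [0.2, 0.1], [0.4, 0.5], [0.6, 0.2], [0.8, 0.7]])
y = 5.0 + 10.0 * X[:, 0] + 2.0 * X[:, 1]

coef = fit_ols(X, y)
predict = as_raster_function(coef)   # can be chained into a lazy model like any other step
block = np.array([[[0.5]], [[0.5]]])  # one cell, two bands
surface = predict(block)              # 5 + 10 * 0.5 + 2 * 0.5
```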

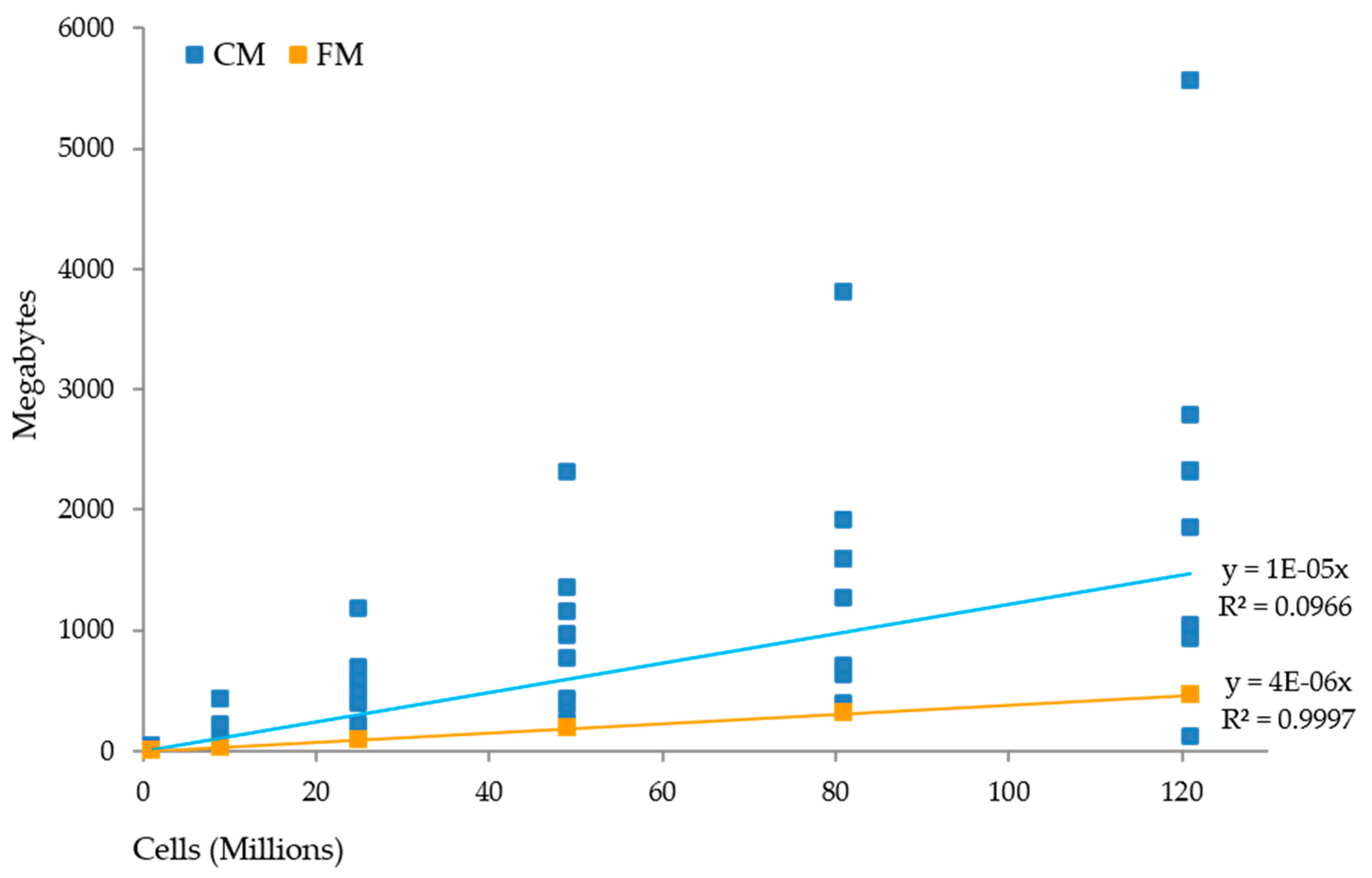

Combined with FM, the sampling, modeling, and surface creation workflow can be streamlined to produce outputs that answer questions and display relationships between response and explanatory variables in a spatially explicit manner. However, the gains in efficiency and effectiveness over conventional techniques have not been well quantified. We used these .NET libraries to test the hypothesis that FM provides significant improvement over conventional techniques and to quantify any related effects on storage and processing time.

2.2. Simulations

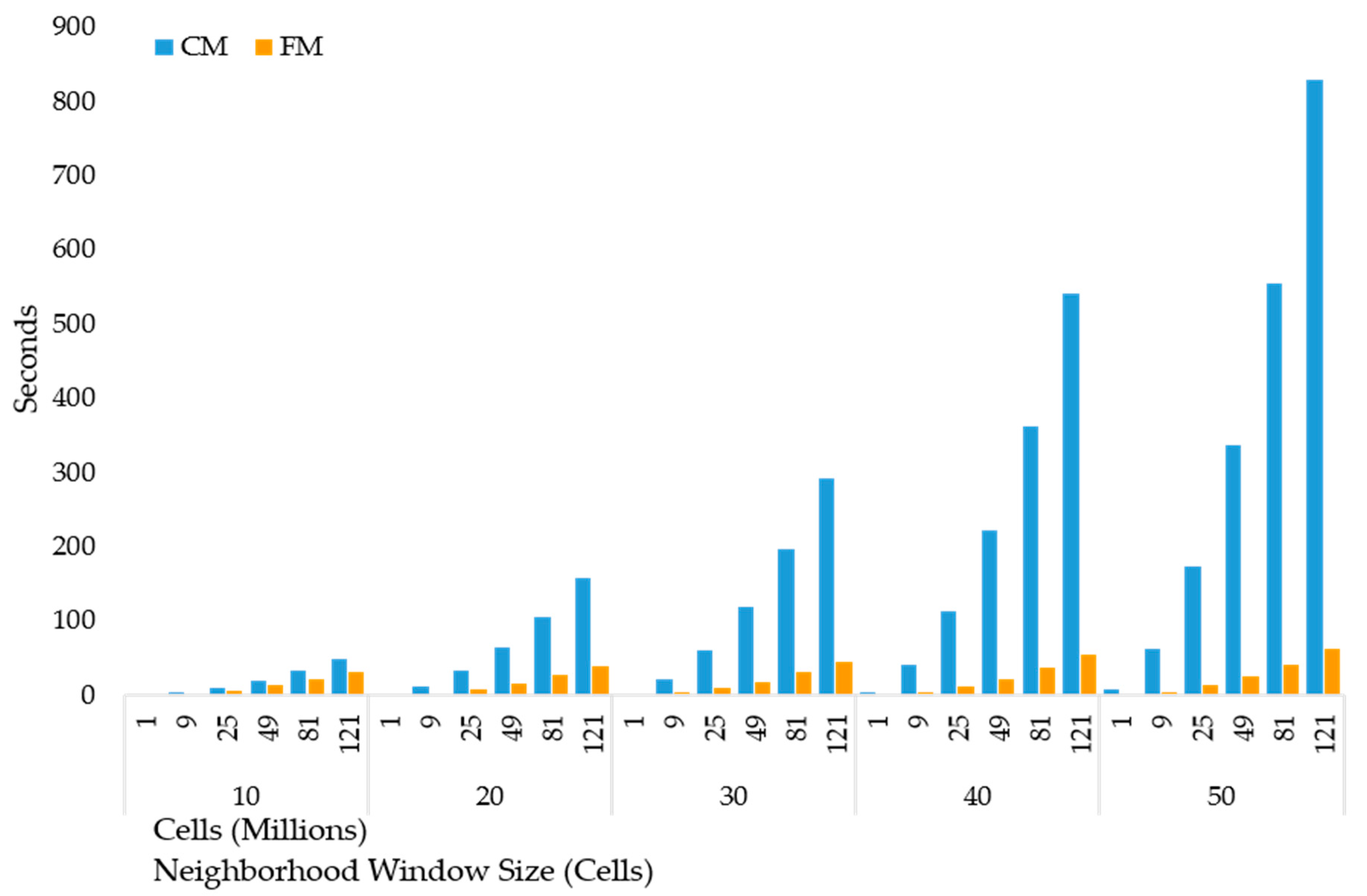

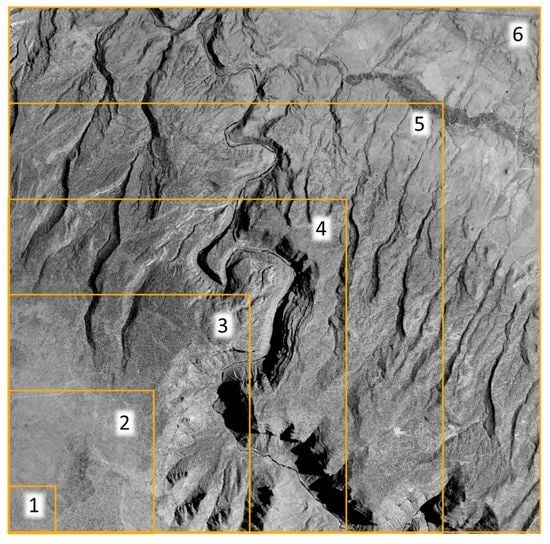

From a theoretical standpoint, FM should improve processing efficiency by reducing the processing time and storage space associated with spatial modeling, primarily because it requires fewer input and output operations. However, to justify the use of FM methods, it is necessary to quantify the extent to which FM actually reduces processing time and storage space. To evaluate FM, we designed and ran six simulations, each including 12 models, and recorded the associated processing time and storage space, varying the size of the raster data sets used across simulations. From the results of those simulations, we compared and contrasted FM with conventional modeling (CM) techniques using linear regression and analysis of covariance (ANCOVA). All CM techniques were coded directly against ESRI’s ArcObjects Spatial Analyst classes to minimize CM processing time and provide a fair comparison.
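The FM versus CM comparison by regression and ANCOVA can be expressed as one linear model with a method indicator and a cells-by-method interaction, so that FM and CM may differ in both intercept and slope. The sketch below assumes NumPy and uses synthetic timings generated from a known formula (not the study's measurements) only to show that the design recovers those differences; a full ANCOVA would add F-tests on the indicator and interaction terms.

```python
import numpy as np


def ancova_design(cells_millions, is_fm):
    """Design matrix for time ~ cells + method + cells:method (method: 0 = CM, 1 = FM)."""
    cells_millions = np.asarray(cells_millions, dtype=float)
    is_fm = np.asarray(is_fm, dtype=float)
    return np.column_stack([np.ones_like(cells_millions), cells_millions,
                            is_fm, cells_millions * is_fm])


cells_m = np.array([1.0, 9.0, 25.0, 49.0, 81.0, 121.0])  # raster sizes in millions of cells
cm_time = 2.0 + 0.3 * cells_m  # synthetic, NOT measured
fm_time = 1.0 + 0.1 * cells_m  # synthetic, NOT measured

X = ancova_design(np.concatenate([cells_m, cells_m]), np.r_[np.zeros(6), np.ones(6)])
y = np.concatenate([cm_time, fm_time])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
# coef[2] is the FM - CM intercept difference; coef[3] is the FM - CM slope difference.
```

Expressing cell counts in millions keeps the design matrix well conditioned.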

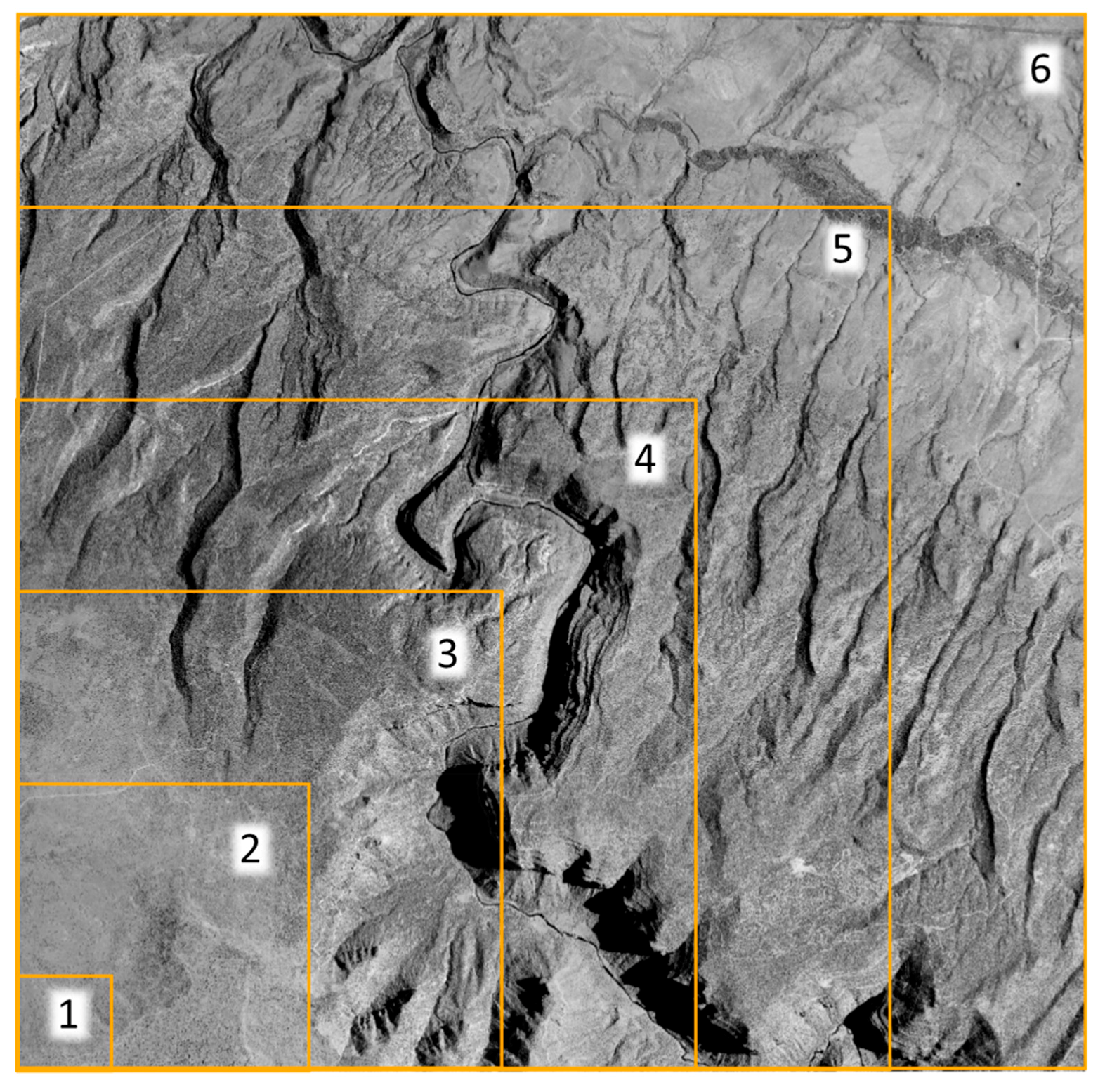

Spatial modeling scenarios within each simulation ranged from one arithmetic operation to twelve operations that included arithmetic, logical, conditional, focal, and local analyses. Counts of each type of operation included in each model are shown in Table 1. In the interest of conserving space, composite functions for these models are not defined here, but, as described in the introduction, each model could be expressed in mathematical form as a composite function. Each modeling scenario created a final raster output and was run against six raster data sets ranging in size from 1,000,000 to 121,000,000 total cells (1 m² grain size), with the number of columns and rows each increasing by 2000 at each step (from 1000 × 1000 to 11,000 × 11,000 cells; Figure 4). Cell bit depth was held constant (floating-point values) across all scenarios and simulations. Simulations were carried out on a Hewlett-Packard EliteBook 8740 with an Intel Core i7 quad-core processor and standard internal 5500 revolutions per minute (RPM) hard disk drives (HDDs).
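Assuming square rasters that start at 1000 × 1000 cells (consistent with six sizes spanning 1,000,000 to 121,000,000 cells in 2000-cell steps), the simulation sizes can be computed directly. This short Python sketch shows that the number of cells added per step grows with each step rather than staying constant.

```python
def simulation_sizes(start=1000, step=2000, n=6):
    """Side length and total cell count of each square test raster."""
    sides = [start + step * k for k in range(n)]
    return [(side, side * side) for side in sides]


sizes = simulation_sizes()
cells = [total for _, total in sizes]
# Cells added between consecutive rasters: (s + 2000)^2 - s^2 = 4000 * s + 4,000,000.
increments = [b - a for a, b in zip(cells, cells[1:])]
```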

These simulations employed stepwise increases in the total number of cells used in the analysis. This differs from maintaining a fixed area (i.e., extent) and changing granularity, gradually moving from coarse to fine resolution with larger numbers of smaller cells. For the operations used in this simulation, both approaches would produce the same result. However, some algorithms identify particular structures in an image and use characteristics such as structural patterns and local homogeneity to improve efficiency, especially when attempting to discern topological relations, potentially using map-based measurements in making such determinations. In these cases, granularity may be important, and comparing the performance of different approaches would benefit from simulations that vary both raster size and grain size.

To determine the overhead of creating and using an FM, we also conducted a simulation using focal mean analyses that varied neighborhood window size across six images. Each iteration of the simulation increased the neighborhood window size for the focal mean procedure, from a 10 by 10 to a 50 by 50 cell neighborhood (in increments of 10 cells), to evaluate the impact of processing more cells within a neighborhood while holding the image and the number of input and output procedures constant. Images used in this simulation were the same as in the previous simulation and contained between 1,000,000 and 121,000,000 total cells. In this scenario, CM and FM techniques were expected to show no difference in processing time and storage space under a paired t-test comparison, so any recorded differences in processing or storage can be attributed to the overhead associated with creating and using an FM.
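The neighborhood-size simulation can be illustrated with a focal mean whose work per output cell grows with the window area. This sketch assumes NumPy 1.20 or later (for `sliding_window_view`) and keeps only windows that lie fully inside the raster; edge handling in ESRI's focal tools differs, so this is a simplified stand-in rather than an equivalent implementation.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def focal_mean(raster, size):
    """Mean of every size x size neighborhood, keeping only windows fully inside the raster."""
    windows = sliding_window_view(raster, (size, size))  # shape (rows-size+1, cols-size+1, size, size)
    return windows.mean(axis=(-2, -1))


raster = np.arange(100 * 100, dtype=np.float64).reshape(100, 100)  # value = 100 * row + col
shapes = {}
cells_per_output = {}
for size in range(10, 51, 10):  # 10 x 10 up to 50 x 50, in steps of 10
    out = focal_mean(raster, size)
    shapes[size] = out.shape
    cells_per_output[size] = size * size  # neighborhood cells read per output cell
```

The number of reads and writes is the same for every window size; only the neighborhood computation grows, which is why this design isolates algorithmic cost from input/output cost.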

2.3. Case Studies

To more fully explore the use of FM to analyze data and create predictive surfaces in real applications, we evaluated the efficiencies associated with two case studies: (1) a recent study of the Uncompahgre National Forest (UNF) in the US state of Colorado [6,29] and (2) a study focused on the forests of the Montana State Department of Natural Resources and Conservation (DNRC) [unpublished data]. For the UNF study, we used FM, fixed radius cluster plots, and fine resolution imagery to develop a two-stage classification and estimation procedure that predicts mean values of forest characteristics across 1 million ha at a spatial resolution of 1 m². The base data used to create these predictions consisted of National Agricultural Imagery Program (NAIP) color infrared (CIR) imagery [30], which contained a total of 10 billion pixels for the extent of the study area. Within this project, we created no fewer than 365 explanatory raster surfaces, many of which represented a combination of multiple raster functions, as well as 52 predictive models, and 64 predictive surfaces. All of these outputs were generated at the extent and spatial resolution of the NAIP imagery (1 m², 10 billion pixels).
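The pixel counts in both case studies follow from the 1 m² cell size (1 ha = 10,000 m²). A short Python check of that arithmetic, with hypothetical helper names:

```python
M2_PER_HA = 10_000  # square meters per hectare


def pixels_for_area(hectares, cell_size_m=1.0):
    """Number of square cells needed to cover an area at a given cell size."""
    return hectares * M2_PER_HA / cell_size_m ** 2


def hectares_for_pixels(pixels, cell_size_m=1.0):
    """Area covered by a number of square cells at a given cell size."""
    return pixels * cell_size_m ** 2 / M2_PER_HA


unf_pixels = pixels_for_area(1_000_000)  # UNF: 1 million ha at 1 m
dnrc_ha = hectares_for_pixels(2.2e9)     # DNRC: 2.2 billion pixels at 1 m
```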

For the DNRC study, we used FM, variable radius plots, and NAIP imagery to predict basal area per acre (BAA), trees per acre (TPA), and board foot volume per acre (BFA) for more than 2.2 billion pixels (~0.22 million ha) in northwest Montana, USA, at a spatial resolution of 1 m². In this study, we created 12 explanatory variables, three predictive models, and three raster surfaces depicting mean plot estimates of BAA, TPA, and BFA. Average plot extent was used to extract spectral and textural values in the DNRC study and was determined by calculating the average limiting distance of the trees within each plot, given the basal area factor used in the field measurements (ranging between 5 and 20) and the diameter of each measured tree.
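The paper does not give its limiting-distance formula, so the sketch below assumes the standard horizontal point sampling relationship in English units (basal area factor in ft² per acre, DBH in inches): a tree is counted if it lies within DBH × √(43,560 / (576 × BAF)) feet of plot center. The function names are hypothetical.

```python
import math

SQ_FT_PER_ACRE = 43_560
FT_TO_M = 0.3048


def plot_radius_factor(baf):
    """Feet of limiting distance per inch of DBH for a basal area factor (ft^2/acre)."""
    # Tree basal area (ft^2) = pi * DBH^2 / 576, so R^2 = 43,560 * DBH^2 / (576 * BAF).
    return math.sqrt(SQ_FT_PER_ACRE / (576 * baf))


def limiting_distance_ft(dbh_in, baf):
    return dbh_in * plot_radius_factor(baf)


def average_plot_radius_ft(dbhs_in, baf):
    """Average limiting distance over the trees measured in one plot."""
    return sum(limiting_distance_ft(d, baf) for d in dbhs_in) / len(dbhs_in)


# Hypothetical plot: two trees of 10 and 20 inches DBH measured with a BAF of 10.
radius_ft = average_plot_radius_ft([10, 20], 10)
radius_m = radius_ft * FT_TO_M  # converted for use with 1 m NAIP cells
```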

While it would be ideal to directly compare CM with FM for the UNF and DNRC case studies, ESRI’s libraries do not have procedures to perform many of the analyses and raster transformations used in these studies, so CM was not incorporated directly into the analysis. As an alternative way to evaluate the time savings associated with using FM in these examples, we used our simulated results and estimated the number of individual CM functions required to create GLCM explanatory variables and model outputs. Processing time was based on the number of CM functions required to produce equivalent explanatory variables and predictive surfaces. In many instances, multiple CM functions were required to create one explanatory variable or final output. The number of functions was then multiplied by the corresponding proportional increase in processing time associated with CM when compared to FM. Storage space was calculated based on the number of intermediate steps used to create explanatory variables and final outputs. In all cases, the space associated with intermediate outputs from FM and CM was calculated without data compression and summed to produce a final storage space estimate.
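The storage estimate described above reduces to summing the uncompressed sizes of all intermediate and final rasters. A minimal Python sketch, assuming 4-byte (32-bit) floating-point cells, which the paper does not state explicitly; the raster dimensions in the example are hypothetical.

```python
def estimated_storage_bytes(outputs, bytes_per_cell=4):
    """Sum uncompressed sizes; outputs is an iterable of (rows, cols, bands) tuples."""
    return sum(rows * cols * bands * bytes_per_cell for rows, cols, bands in outputs)


# Hypothetical CM chain: four single-band 11,000 x 11,000 intermediate rasters.
cm_chain = [(11_000, 11_000, 1)] * 4
cm_bytes = estimated_storage_bytes(cm_chain)

# Hypothetical single-band surface of 10 billion cells (shape assumed square).
surface_bytes = estimated_storage_bytes([(100_000, 100_000, 1)])
```

At 10 billion cells, each stored surface is on the order of 40 GB before compression, which is why avoiding intermediate outputs matters at the case-study scale.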

4. Discussion

The underlying concept behind FM is lazy evaluation, which has been used in programming for decades and is conceptually similar to function composition in mathematics. Using this technique in GIS removes the need to store intermediate data sets to disk in the spatial modeling process and allows models to process data only for the spatial areas needed at the time they are read. In CM, as spatial models become more complex (i.e., include more modeling steps), the number of intermediate outputs increases. These intermediate outputs significantly increase the amount of processing time and storage space required to run a specified model. In contrast, FM does not store intermediate data sets, thereby significantly reducing model processing time and storage space.

In this study, we quantified this effect by comparing FM to CM methodologies using simulations that created final raster outputs from multiple, incrementally complex models. However, this does not capture the full benefit of operating in the FM environment. FM does not need to store outputs to disk in a conventional format (e.g., Grid or Imagine format), and all functions applied to source raster data sets can be stored and used dynamically to display, visualize, symbolize, retrieve, and manipulate function data sets in a small fraction of the time and space it takes to create a final raster output; this allows extremely efficient workflows and data exploration. For example, if we were to perform the same six simulations presented here but replace the requirement of storing the final output in a conventional raster format with creating a function model, the simulations would take less than 1 second and require less than 50 kilobytes of storage. The results could then be stored as a conventional raster at a later time if needed. To further illustrate this point, we conducted a simulation to quantify the time and storage required to create various size data sets from a function in FM and then read them back into a raster object using various neighborhood window sizes. Results show that the storage space and processing time needed to read FM objects are extremely small (kilobytes and milliseconds) and that the underlying algorithms behind those procedures have a much larger impact than the overhead associated with lazy evaluation. Based on these results, FM facilitated analyses in the UNF and DNRC case studies that would simply have been too lengthy (and costly) to perform using CM.

Though FM is gaining traction, the majority of GIS professionals use CM and have not been exposed to FM concepts. Furthermore, FM is not included in most “off-the-shelf” GISs, making it difficult for the typical analyst to make the transition, even if FM results in better functionality, significant efficiency gains, and improved workflow. Also, even if coding libraries are available, many GIS users do not have the programming skills necessary to execute FM outside of a graphical interface of some kind. To simplify and facilitate the use of FM and complex statistical and machine learning algorithms within GIS, we have created a user-friendly add-in toolbar called the RMRS Raster Utility (Figure 9) that provides quick access to a wide array of spatial analyses while allowing users to easily store and retrieve FM models. The toolbar was specifically developed for ESRI’s ArcGIS, one of the most widely used commercial platforms, but the underlying libraries have applicability in almost all GISs. Using the toolbar in the ESRI environment, FM models can be loaded into ArcMap as function raster data sets and used interchangeably with all ESRI raster spatial operations.

Currently, our solution contains 1016 files, over 105,000 lines of code, 609 classes, and 107 forms that provide functionality ranging from data acquisition to raster manipulation and statistical and machine learning analyses, in addition to FM. Structurally, the Utility contains two primary projects: an ESRI Desktop class library (“esriUtil”) and an ArcMap add-in (“servicesToolBar”). The esriUtil project contains most of the functionality of our solution, while the servicesToolBar project wraps that functionality into a toolbar accessible within ArcMap. In addition, two open source numeric class libraries are used within the esriUtil project. These libraries, ALGLIB [15] and Accord.NET [16], provide the numeric, statistical, and machine learning algorithms used by the statistical and machine learning procedures within our classes.

While FM is efficient compared to CM and uses almost no storage space, there may be circumstances that warrant saving FMs to disk in a conventional raster format. If intermediate data sets are useful products in themselves or serve as necessary inputs for other, independent FMs, FMs can be saved as conventional raster data sets at any point along the FM chain. For example, if one FM (FM1) is read multiple times to create a new FM (FM2), and the time it takes to read multiple instances of FM1 is greater than the combined read and write time of saving FM1 to a conventional raster format (i.e., when FM1 contains many transformations), then it would be advantageous to save FM1 to a conventional raster format and use the newly created conventional raster data set in FM2. Also, some hierarchical processes, in which the same operations occur within multiple embedded processes, may benefit from a similar strategy of storing specific steps of an FM as conventional raster data sets. Nonetheless, results from our simulations and case studies demonstrate that FM substantially reduces processing time and storage space. Given accessible tools and appropriate training, GIS professionals may find that these benefits outweigh the costs of learning new techniques to incorporate FM into their analysis and modeling of large spatial data sets.
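The FM1/FM2 trade-off can be written as a simple cost comparison. The sketch below is a hypothetical model with all costs in seconds: re-evaluating FM1 for each of k reads versus evaluating it once, writing it to a conventional raster, and reading that stored raster k times. Real costs also depend on caching, block size, and disk speed, which this model ignores.

```python
import math


def should_materialize(k_reads, fm1_eval_s, write_s, raster_read_s):
    """True if saving FM1 once and reading the stored raster k times beats re-evaluating FM1 k times."""
    lazy_cost = k_reads * fm1_eval_s
    cached_cost = fm1_eval_s + write_s + k_reads * raster_read_s  # evaluate once, write, then read k times
    return cached_cost < lazy_cost


def break_even_reads(fm1_eval_s, write_s, raster_read_s):
    """Smallest k for which materializing FM1 is strictly cheaper, or None if it never is."""
    saving_per_read = fm1_eval_s - raster_read_s
    if saving_per_read <= 0:
        return None  # reading the stored raster is no faster than re-evaluating FM1
    # k * (eval - read) > eval + write  =>  k > (eval + write) / (eval - read)
    return math.floor((fm1_eval_s + write_s) / saving_per_read) + 1


# Hypothetical costs: FM1 takes 10 s to evaluate, 4 s to write, and 1 s to read once stored.
k_min = break_even_reads(10.0, 4.0, 1.0)
```

Under these assumed costs, saving FM1 pays off once FM2 reads it at least twice; when FM1 is cheap to evaluate, materializing it may never pay off.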