A Novel Vision-Based Classification System for Explosion Phenomena

Abstract

1. Introduction

2. Problem Statement

2.1. Volcanic Eruptions

2.2. Nuclear Explosions

2.3. Research Hypothesis

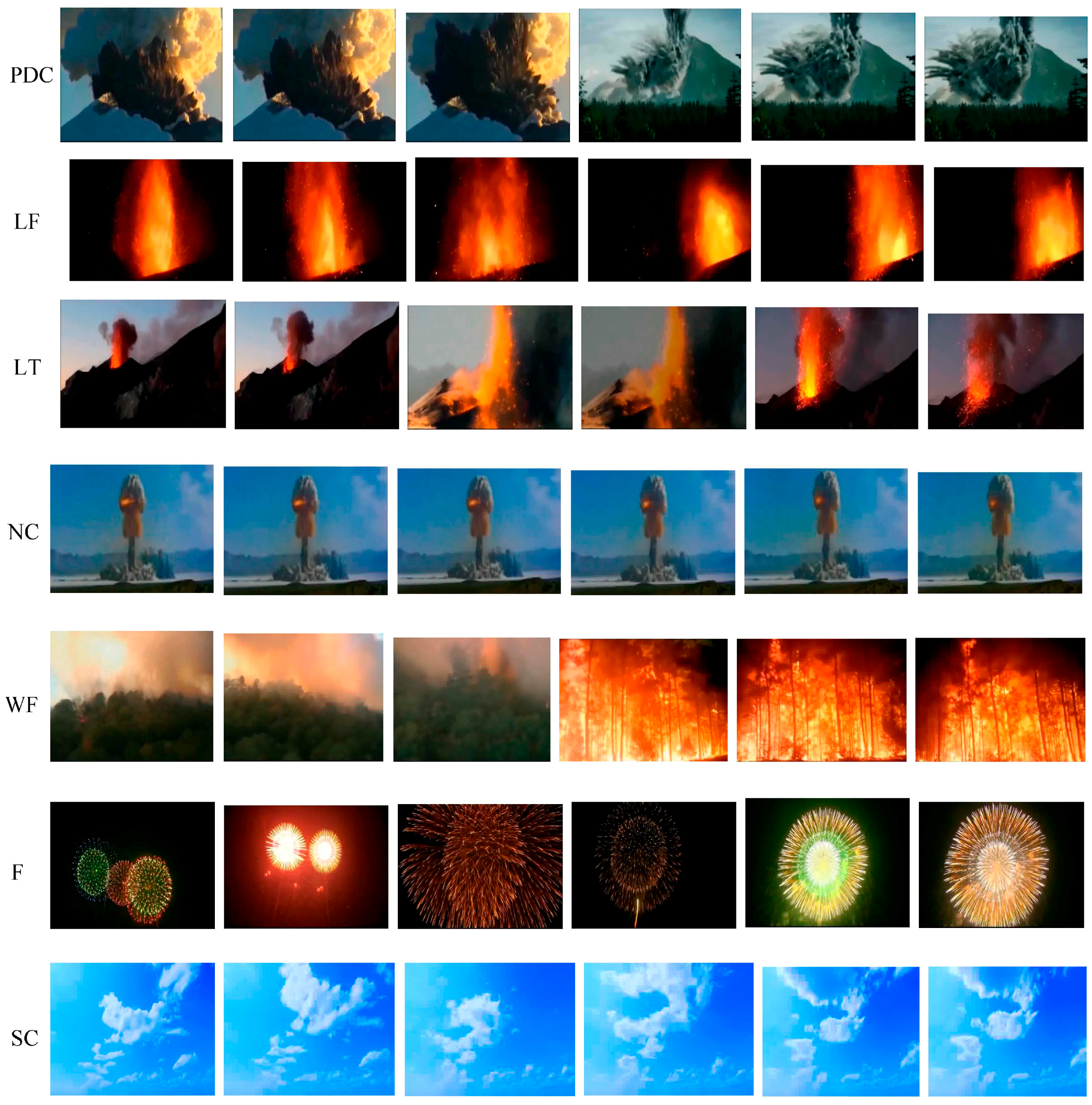

- (1)

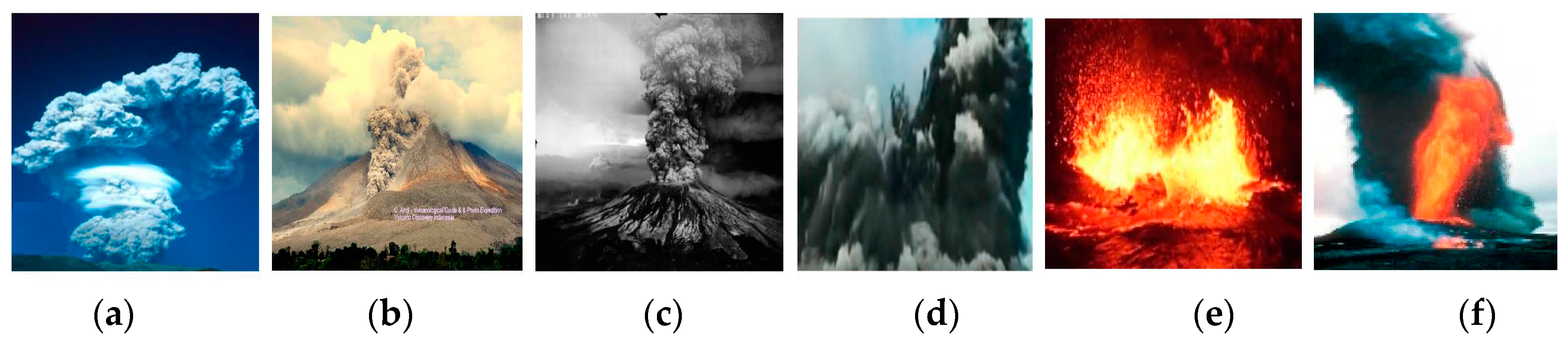

- Pyroclastic Density Currents (PDCs) patterns have color properties that can be white (e.g., Figure 1a), or brown/brownish (e.g., Figure 1b), or dark color ranging from gray to black shades (e.g., Figure 1c,d), have dense cloud shapes, and have multiple manifestation (shapes) including: vertical column, laterally spread, avalanches which are generated by lava dome and moving downslope of the volcano, and some volcanic eruptions can produce natural mushroom clouds under the force of gravity.

- (2) Lava fountain patterns have a luminous region in the image, and the color of this region depends on the temperature of the lava during the eruption. Lava may therefore glow golden yellow (1090 °C), orange (900 °C), bright cherry red (700 °C), dull red (600 °C), or lowest visible red (475 °C) [21].

- (3) Lava and tephra fallout patterns have a luminous region (lava) and a non-luminous region (tephra) in the image; the color of the lava depends on its temperature, as explained in point (2). The color of the tephra (blocks, bombs, cinder/scoria/lapilli, Pele’s tears, Pele’s hair, coarse ash, fine ash, etc.) varies from light to dark depending on the type of pyroclastic material ejected during the eruption.

- (4) Nuclear explosion patterns have five properties. First, color: the mushroom cloud of a nuclear explosion is initially red or reddish, and as the fireball cools, water condensation gives the explosion cloud its characteristic white color [9]. Second, growth: the mushroom-shaped cloud keeps rising until it reaches its maximum height. Third, shape: either a mushroom-shaped cloud (the focus of this research) or, in the case of space explosions, an artificial aurora display with an ionized region. Fourth, a luminous region: the luminous fireball can be seen as a flash of light from hundreds of miles away for about 10 s, after which it is no longer luminous, and the non-luminous growing cloud then remains visible for approximately 1–14 min. Fifth, orientation: the mushroom-shaped cloud has a single orientation.
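As a small illustration of point (2), the temperature and color pairs quoted from [21] can be encoded as a lookup table. The sketch below is a hypothetical helper, not part of the proposed system: the name `glow_color` is ours, and it assumes that each listed temperature is the lower bound of its color band and that lava below 475 °C shows no visible glow, whereas [21] lists only point values.

```python
from typing import Optional

# Glow colors and temperatures (°C) quoted in point (2) from [21].
# ASSUMPTION: each temperature is the lower bound of its color band; hottest first.
GLOW_COLORS = [
    (1090, "golden yellow"),
    (900, "orange"),
    (700, "bright cherry red"),
    (600, "dull red"),
    (475, "lowest visible red"),
]


def glow_color(temp_c: float) -> Optional[str]:
    """Return the approximate glow color of lava at temp_c degrees Celsius (hypothetical helper)."""
    for threshold, color in GLOW_COLORS:
        if temp_c >= threshold:
            return color
    return None  # ASSUMPTION: below 475 °C the lava shows no visible glow


print(glow_color(1150))  # golden yellow
print(glow_color(800))   # bright cherry red
print(glow_color(400))   # None
```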

3. Related Work

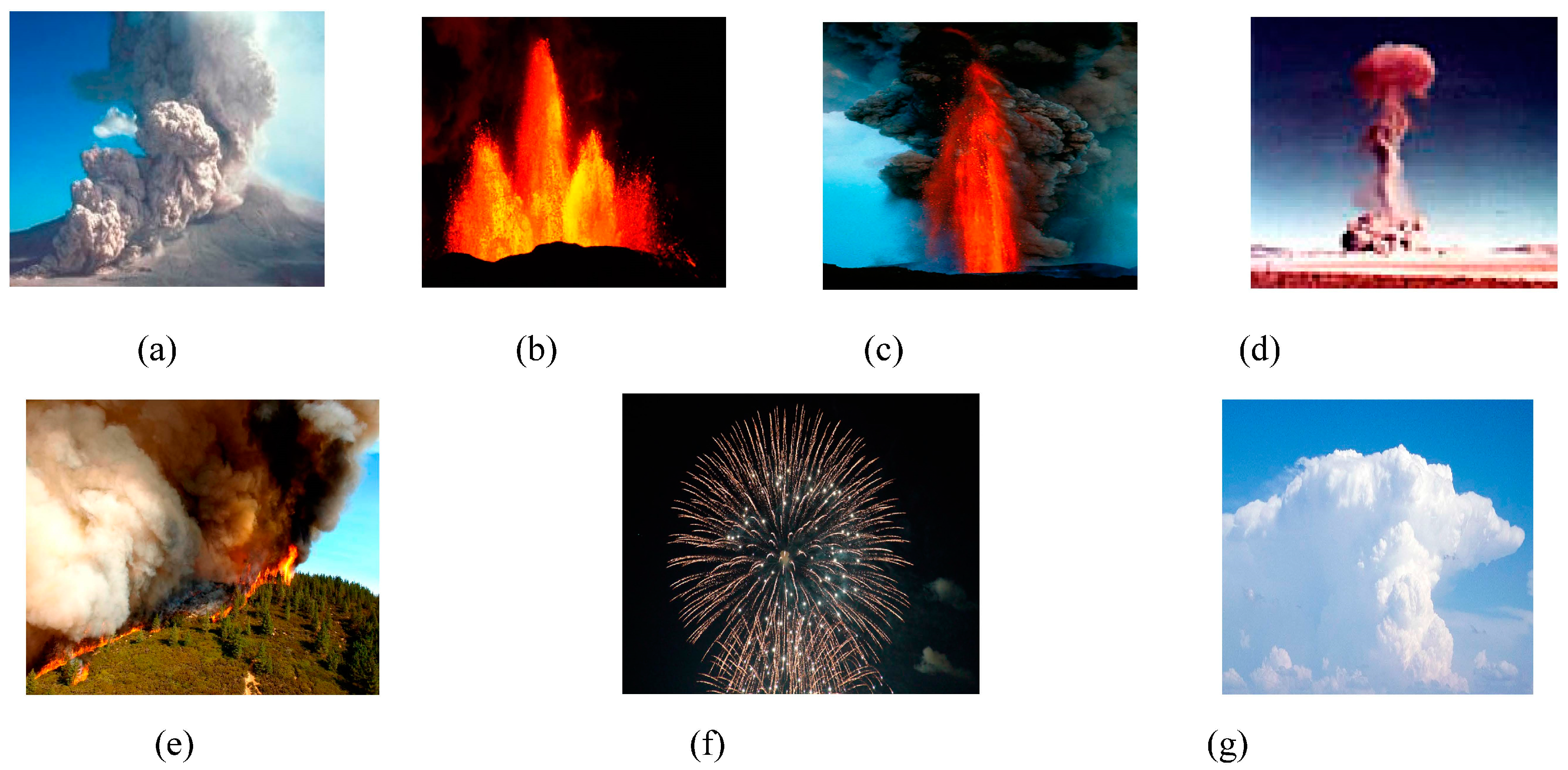

4. Dataset

5. Proposed Research Methodology

5.1. Design of the Proposed Framework

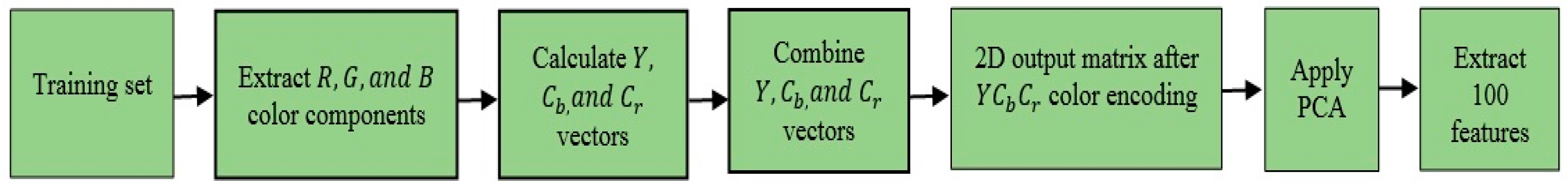

5.1.1. Preprocessing

5.1.2. Feature Extraction

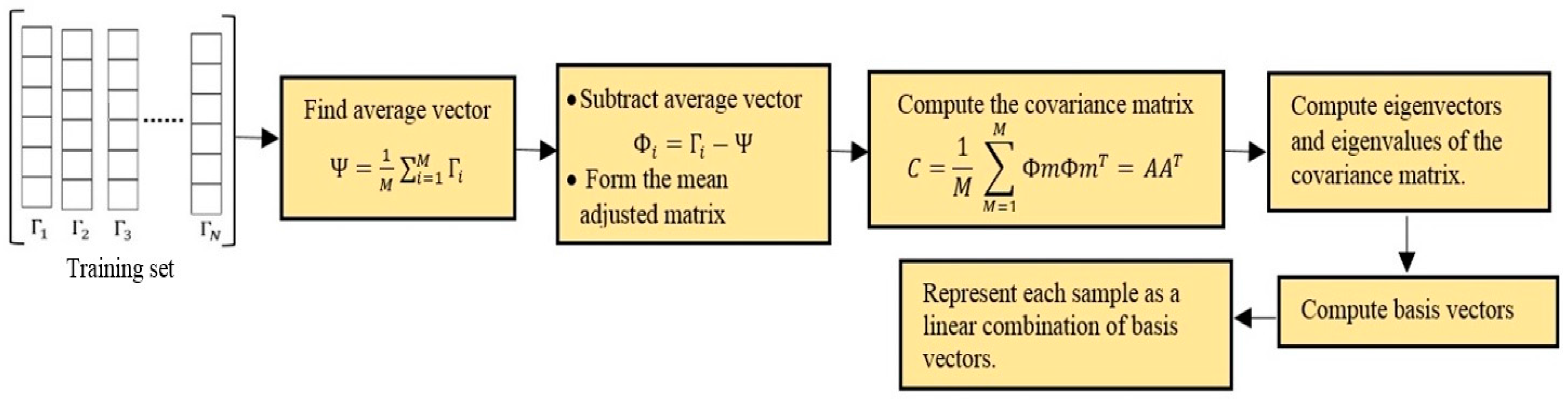

- (1) Obtain the images for the training phase and represent each image as a vector.

- (2) Find the average vector of the training image vectors.

- (3) Find the mean-adjusted vector for every image vector by subtracting the average vector from it, and then assemble all mean-adjusted samples into a mean-adjusted matrix.

- (4) Compute the covariance matrix C.

- (5) Calculate the eigenvectors and eigenvalues of the covariance matrix C. Sort the eigenvalues by magnitude, keep only the 100 largest, and discard the rest.

- (6) Compute the basis vectors. The 100 eigenvectors corresponding to the retained eigenvalues are assembled into an eigenvector matrix (EV), and EV is multiplied by the mean-adjusted matrix from step (3) to form the basis vectors.

- (7) Describe each sample as a linear combination of the basis vectors.
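The seven steps above resemble the eigenfaces procedure of Turk and Pentland [52]. The following is a minimal NumPy sketch under stated assumptions: equally sized grayscale training images stacked in one array, and a covariance matrix C formed as the small M × M matrix AᵀA/M from the mean-adjusted matrix A, which is the reading under which step (6) multiplies EV by A. The function names `pca_basis` and `pca_features` are hypothetical, and the synthetic data only demonstrates the shapes involved.

```python
import numpy as np


def pca_basis(images: np.ndarray, k: int = 100):
    """Steps (1)-(6): average vector and k orthonormal basis vectors (columns of U).

    images: array of shape (M, H, W) holding M grayscale training images (assumed).
    """
    M = images.shape[0]
    k = min(k, M - 1)                              # centering leaves at most M - 1 nonzero eigenvalues
    X = images.reshape(M, -1).astype(float).T      # (1) each image as a column vector; X is D x M
    mean = X.mean(axis=1, keepdims=True)           # (2) average vector, D x 1
    A = X - mean                                   # (3) mean-adjusted matrix, D x M
    C = A.T @ A / M                                # (4) ASSUMPTION: small M x M covariance form
    eigvals, eigvecs = np.linalg.eigh(C)           # (5) eigenpairs of the symmetric matrix C
    top = np.argsort(eigvals)[::-1][:k]            #     indices of the k largest eigenvalues
    EV = eigvecs[:, top]                           #     eigenvector matrix, M x k
    U = A @ EV                                     # (6) basis vectors, D x k
    U /= np.linalg.norm(U, axis=0, keepdims=True)  #     scale each basis vector to unit length
    return mean, U


def pca_features(image: np.ndarray, mean: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Step (7): coefficients of one image in the basis, shape (k, 1)."""
    return U.T @ (image.reshape(-1, 1).astype(float) - mean)


rng = np.random.default_rng(0)
train = rng.random((20, 64, 64))  # 20 synthetic 64 x 64 frames, stand-ins for real data
mean, U = pca_basis(train, k=10)
w = pca_features(train[0], mean, U)
print(U.shape, w.shape)           # (4096, 10) (10, 1)
```

Working with the M × M matrix instead of the D × D one (D = 4096 for 64 × 64 frames) keeps the eigen-decomposition cheap when there are fewer training images than pixels; the basis vectors come out orthogonal, so normalizing them makes step (7) a plain projection.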

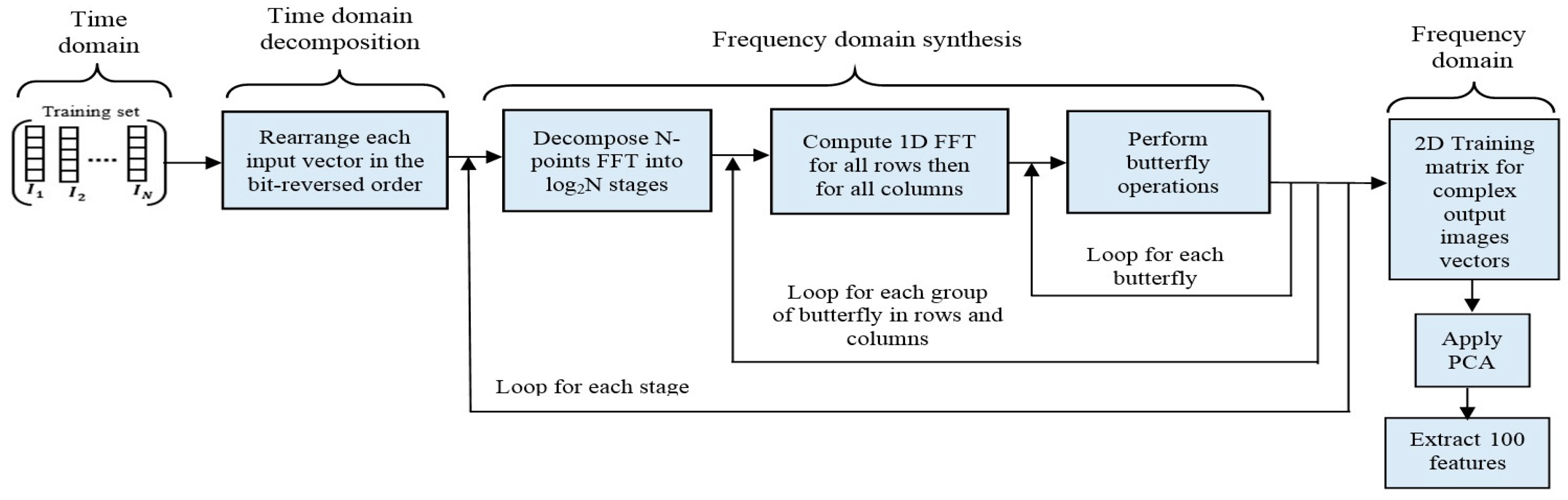

- (1) Perform a time-domain decomposition using a bit-reversal sorting algorithm to transform the input spatial image into an array in bit-reversed order; for an N-point signal, this decomposition takes $\log_2 N$ stages.

- (2) A two-dimensional FFT can be executed as two passes of one-dimensional FFTs: a 1D FFT is first performed across all rows, replacing each row with its transform, and then a 1D FFT is performed across all columns, replacing each column with its transform.

- (3) Combine the N frequency spectra in exactly the reverse order in which the time-domain decomposition was performed. The core computational module of this step in the radix-2 FFT algorithm is called the butterfly operation.
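The three steps above can be sketched in Python as an iterative radix-2 decimation-in-time FFT: bit-reversal sorting implements step (1), the stage-by-stage butterflies implement step (3), and the row-then-column pass implements step (2). The names `fft_radix2` and `fft2_radix2` are hypothetical, both image sides are assumed to be powers of two (as with 64 × 64 frames), and in practice `numpy.fft.fft2` returns the same result; the sketch only makes the individual steps visible.

```python
import cmath

import numpy as np


def fft_radix2(x):
    """Iterative radix-2 decimation-in-time FFT of a sequence whose length is a power of two."""
    a = [complex(v) for v in x]
    n = len(a)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a nonzero power of two")
    # Step (1): bit-reversal sorting puts the samples in the order reached
    # after all log2(n) decomposition stages.
    j = 0
    for i in range(1, n):
        bit = n >> 1
        while j & bit:
            j ^= bit
            bit >>= 1
        j ^= bit
        if i < j:
            a[i], a[j] = a[j], a[i]
    # Step (3): combine spectra in reverse order of the decomposition,
    # doubling the block size each stage; each pair update is one butterfly.
    size = 2
    while size <= n:
        half = size // 2
        w_step = cmath.exp(-2j * cmath.pi / size)
        for start in range(0, n, size):
            w = 1 + 0j
            for k in range(half):
                top = a[start + k]
                bottom = a[start + k + half] * w
                a[start + k] = top + bottom
                a[start + k + half] = top - bottom
                w *= w_step
        size *= 2
    return a


def fft2_radix2(image):
    """Step (2): 2D FFT as 1D FFTs across all rows, then across all columns."""
    rows = np.array([fft_radix2(row) for row in np.asarray(image)])
    return np.array([fft_radix2(col) for col in rows.T]).T


img = np.random.default_rng(0).random((64, 64))
print(np.allclose(fft2_radix2(img), np.fft.fft2(img)))  # True
```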

- (1) Spectral analysis of an image using the radix-2 FFT reveals a significant amount of information about the geometric structure of 2D spatial images because the transform uses orthogonal basis functions. Consequently, an image represented in the transform domain has a larger range of values than in the spatial domain.

- (2) An image contains high-frequency components where its gray levels (intensity values) change rapidly and low-frequency components where they change slowly over the image space. The radix-2 FFT can be applied efficiently to detect such changes.
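To make point (2) concrete, a sketch can measure how much of an image's spectral energy lies at high spatial frequencies. Here NumPy's `numpy.fft.fft2` stands in for the radix-2 implementation, and the function name `high_frequency_ratio`, the cutoff of 0.25 cycles per pixel, and the exclusion of the DC term are illustrative assumptions rather than choices taken from the proposed system.

```python
import numpy as np


def high_frequency_ratio(image: np.ndarray, cutoff: float = 0.25) -> float:
    """Share of spectral energy (DC excluded) above `cutoff` cycles per pixel (hypothetical helper)."""
    energy = np.abs(np.fft.fft2(image.astype(float))) ** 2
    energy[0, 0] = 0.0                             # ignore the DC term (mean brightness)
    fy = np.fft.fftfreq(image.shape[0])[:, None]   # vertical frequencies in [-0.5, 0.5)
    fx = np.fft.fftfreq(image.shape[1])[None, :]   # horizontal frequencies in [-0.5, 0.5)
    radius = np.sqrt(fx ** 2 + fy ** 2)            # radial spatial frequency of each bin
    total = energy.sum()
    return float(energy[radius > cutoff].sum() / total) if total > 0 else 0.0


n = 64
smooth = np.tile(np.linspace(0, 255, n), (n, 1))      # gray levels change slowly (ramp)
checker = (np.indices((n, n)).sum(axis=0) % 2) * 255  # gray levels change at every pixel
print(high_frequency_ratio(smooth) < 0.5, high_frequency_ratio(checker) > 0.5)  # True True
```

The ramp keeps almost all of its energy at low frequencies, while the one-pixel checkerboard puts all of its non-DC energy at the highest frequency in both directions.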

5.1.3. One-against-One Multi-Class Support Vector Classification

6. Experimental Results and Discussion

7. Conclusions

Author Contributions

Conflicts of Interest

References

1. AIChE Staff, Center for Chemical Process Safety (CCPS) Staff. Guidelines for Evaluating Process Plant Buildings for External Explosions and Fires. Appendix A—Explosion and Fire Phenomena and Effects; American Institute of Chemical Engineers, John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1996.

2. Self, S. The effects and consequences of very large explosive volcanic eruptions. Philos. Trans. R. Soc. Lond. A: Math. Phys. Eng. Sci. 2006, 364, 2073–2097.

3. Bogoyavlenskaya, G.E.; Braitseva, O.A.; Melekestsev, I.V.; Kiriyanov, V.Y.; Miller, C.D. Catastrophic eruptions of the directed-blast type at Mount St. Helens, Bezymianny and Shiveluch volcanoes. J. Geodyn. 1985, 3, 189–218.

4. Nairn, I.A.; Self, S. Explosive eruptions and pyroclastic avalanches from Ngauruhoe in February 1975. J. Volcanol. Geotherm. Res. 1978, 3, 39–60.

5. Pipkin, B.; Trent, D.D.; Hazlett, R.; Bierman, P. Geology and the Environment, 6th ed.; Brooks/Cole-Cengage Learning: Belmont, CA, USA, 2010.

6. Volcano Hazards Program, U.S. Department of the Interior—U.S. Geological Survey. Glossary-Effusive Eruption, Modified on 10 July 2015. Available online: https://volcanoes.usgs.gov/vsc/glossary/effusive_eruption.html (accessed on 24 September 2016).

7. U.S. Department of the Interior—USGS, Volcano Hazards Program-Glossary-Tephra, Modified on 19 September 2013. Available online: http://volcanoes.usgs.gov/vsc/glossary/tephra.html (accessed on 24 September 2016).

8. How Volcanoes Work, Tephra and Pyroclastic Rocks. Available online: http://www.geology.sdsu.edu/how_volcanoes_work/Tephra.html (accessed on 24 September 2016).

9. Craig, P.P.; Jungerman, J.A. Nuclear Arms Race: Technology and Society, 2nd ed.; University of California; McGraw-Hill: Davis, CA, USA, 1990.

10. Lindgen, N. Earthquake or explosion? IEEE Spectr. 1966, 3, 66–75.

11. National Geographic Society. Volcanic Ash. Available online: http://education.nationalgeographic.com/encyclopedia/volcanic-ash/ (accessed on 8 March 2017).

12. Volcano Hazards Program. U.S. Department of the Interior—U.S. Geological Survey. Lava Flows and Their Effects, Modified on 24 May 2010. Available online: http://volcanoes.usgs.gov/hazards/lava/ (accessed on 24 September 2016).

13. Lopes, R. The Volcano Adventure Guide; Cambridge University Press: Cambridge, UK, 2005.

14. Gyakum, J.; Stix, J. Natural Disasters, McGill University on edX, Montreal, Canada, Free Online Course Conducted on 14 January–11 April 2015. Available online: https://courses.edx.org (accessed on 11 April 2017).

15. Spellman, F.R. Geography for Nongeographers; Government Institutes; The Scarecrow Press, Inc.: Lanham, MD, USA; Toronto, ON, Canada; Plymouth, UK, 2010.

16. Branney, M.J.; Kokelaar, P. Pyroclastic Density Currents and the Sedimentation of Ignimbrites; The Geological Society of London: London, UK, 2002.

17. Volcano Hazards Program, U.S. Department of the Interior—U.S. Geological Survey. Glossary—Eruption Column, Modified on 28 July 2015. Available online: http://volcanoes.usgs.gov/vsc/glossary/eruption_column.html (accessed on 24 September 2016).

18. Pfeiffer, T. Volcano Discovery. Available online: https://www.volcano-adventures.com/tours/eruption-special/sinabung.html (accessed on 8 March 2017).

19. Volcano Hazards Program, U.S. Department of the Interior—U.S. Geological Survey. Mount St. Helens, Modified on 27 August 2015. Available online: https://volcanoes.usgs.gov/volcanoes/st_helens/st_helens_geo_hist_99.html (accessed on 28 February 2017).

20. TVNZ One News—Undersea Volcano Eruption 09 [Tonga], Uploaded on 19 March 2009. Available online: https://www.youtube.com/watch?v=B1tjIihHgco (accessed on 8 March 2017).

21. Seach, J. Volcano Live, Lava Colour | John Seach. Available online: http://www.volcanolive.com/lava2.html (accessed on 22 March 2017).

22. U.S. Department of the Interior—USGS, Hawaiian Volcano Observatory. Available online: http://hvo.wr.usgs.gov/multimedia/index.php?newSearch=true&display=custom&volcano=1&resultsPerPage=20 (accessed on 11 June 2016).

23. Tilling, R.; Heliker, C.; Swanson, D. Eruptions of Hawaiian Volcanoes—Past, Present, and Future. General Information Product 117, 2nd ed.; U.S. Department of the Interior, U.S. Geological Survey: Reston, VA, USA, 2010.

24. Trinity Atomic Web Site. Gallery of Test Photos, 1995–2003. Available online: http://www.abomb1.org/testpix/index.html (accessed on 24 September 2016).

25. Operation Crossroads 1946. Available online: http://nuclearweaponarchive.org/Usa/Tests/Crossrd.html (accessed on 20 April 2014).

26. The National Security Archive—The George Washington University. The Atomic Tests at Bikini Atoll. July 1946. Available online: http://nsarchive.gwu.edu/nukevault/ebb553–70th-anniversary-of-Crossroads-atomic-tests/#photos (accessed on 28 February 2017).

27. Wicander, R.; Monroe, J.S. Essentials of Physical Geology, 5th ed.; Brooks/Cole-Cengage Learning: Belmont, CA, USA, 2009; pp. 128–130.

28. U.S. Department of the Interior—USGS, Volcano Hazards Program. Monitoring Volcano Ground Deformation, Modified on 29 December 2009. Available online: https://volcanoes.usgs.gov/activity/methods/index.php (accessed on 24 September 2016).

29. U.S. Department of the Interior—USGS, Volcano Hazards Program. Temperatures at the Surface Reflect Temperatures Below the Ground, Modified on 7 January 2016. Available online: https://volcanoes.usgs.gov/vhp/thermal.html (accessed on 24 September 2016).

30. Moran, S.C.; Freymueller, J.T.; Lahusen, R.G.; Mcgee, K.A.; Poland, M.P.; Power, J.A.; Schmidt, D.A.; Schneider, D.J.; Stephens, G.; Werner, C.A.; et al. Instrumentation Recommendations for Volcano Monitoring at U.S. Volcanoes under the National Volcano Early Warning System; Scientific Investigation Report 2008–5114; Department of the Interior—USGS: Reston, VA, USA, 2008.

31. Langer, H.; Falsaperla, S.; Masotti, M.; Campanini, R.; Spampinato, S.; Messina, A. Synopsis of supervised and unsupervised pattern classification techniques applied to volcanic tremor data at Mt Etna, Italy. Geophys. J. Int. 2009, 178, 1132–1144.

32. Iyer, A.S.; Ham, F.M.; Garces, M.A. Neural classification of infrasonic signals associated with hazardous volcanic eruptions. In Proceedings of the IEEE International Joint Conference on Neural Networks, San Jose, CA, USA, 31 July–5 August 2011; pp. 336–341.

33. Picchiani, M.; Chini, M.; Corradini, S.; Merucci, L.; Sellitto, P.; Del Frate, F.; Stramondo, S. Volcanic ash detection and retrievals using MODIS data by means of neural networks. Atmos. Meas. Tech. 2011, 4, 2619–2631.

34. Tan, R.; Xing, G.; Chen, J.; Song, W.Z.; Huang, R. Fusion-based volcanic earthquake detection and timing in wireless sensor networks. ACM Trans. Sens. Netw. 2013, 9, 1–25.

35. Dickinson, H.; Tamarkin, P. Systems for the Detection and Identification of Nuclear Explosions in the Atmosphere and in Space. Proc. IEEE 1965, 53, 1921–1934.

36. Ammon, C.J.; Lay, T. Nuclear Test Illuminates USArray Data Quality. EOS, Transactions American Geophysical Union; Wiley Online Library, 2007; Volume 88, No. 4; pp. 37–52. Available online: http://onlinelibrary.wiley.com/doi/10.1029/2007EO040001/pdf (accessed on 15 April 2017).

37. U.S. Geological Survey. Available online: https://www.usgs.gov/ (accessed on 1 January 2017).

38. Department of Commerce, National Oceanic and Atmospheric Administration, National Weather Service. Ten Basic Cloud Types, Updated on 14 March 2013. Available online: http://www.srh.noaa.gov/srh/jetstream/clouds/cloudwise/types.html (accessed on 24 September 2016).

39. Onet.Blog. Copyright 1996–2016. Available online: http://wulkany-niszczycielska-sila.blog.onet.pl/page/2/ (accessed on 24 September 2016).

40. Pfeiffer, T. Volcano Adventures. Available online: https://www.volcano-adventures.com/travel/photos.html (accessed on 1 January 2017).

41. Hipschman, R. Exploratorium. Full-Spectrum Science with Ron Hipschman: Fireworks. Available online: http://www.exploratorium.edu/visit/calendar/fullspectrum-science-ron-hipschman-fireworks-june-22-2014 (accessed on 24 September 2016).

42. Wikimedia Commons. Available online: https://commons.wikimedia.org/wiki/Main_Page (accessed on 1 January 2017).

43. Storm (HQ) Volcano Eruption (Vivaldi Techno) Vanessa Mae Violin Full HD Music Song Vídeo Remix 2013, Published on 7 April 2013. Available online: https://www.youtube.com/watch?v=lj6ZGGBy-R8 (accessed on 1 January 2017).

44. The Nuclear Cannon (Upshot-Knothole—Grable), Published on 1 February 2013. Available online: https://www.youtube.com/watch?v=BECOQuQC0vQ (accessed on 1 January 2017).

45. Texas Wildfires 2011 (Worst in TX History), Uploaded on 7 September 2011. Available online: https://www.youtube.com/watch?v=pqr2DNaMLiQ (accessed on 1 January 2017).

46. The Worst Neighbors from Hell Presents the Best Fireworks Display July 4th, 2016, Published on 4 July 2016. Available online: https://www.youtube.com/watch?v=6qO6TFUp5C0 (accessed on 1 January 2017).

47. Deep Blue Sky—Clouds Timelapse—Free Footage—Full HD 1080p, Published on 18 April 2016. Available online: https://www.youtube.com/watch?v=3pD88QLP1AM (accessed on 1 January 2017).

48. U.S. Department of the Interior—USGS, Volcano Hazards Program. Pyroclastic Flows Move Fast and Destroy Everything in Their Path, Modified on 12 February 2016. Available online: https://volcanoes.usgs.gov/vhp/pyroclastic_flows.html (accessed on 24 September 2016).

49. Joschenbacher. File: Fissure Eruption in Holurhraun (Iceland), 13 September 2014. JPG, Uploaded on 24 September 2014. Available online: https://commons.wikimedia.org/wiki/File:Fissure_eruption_in_Holurhraun_(Iceland),_13._September_2014.JPG (accessed on 8 March 2017).

50. Pfeiffer, T. Volcano Discovery. Available online: http://www.decadevolcano.net/photos/etna0701_1.htm (accessed on 24 September 2016).

51. Newman, J. Wikimedia Commons. File: Zaca3.jpg, Uploaded on 17 October 2007. Available online: https://commons.wikimedia.org/wiki/File:Zaca3.jpg (accessed on 1 January 2017).

52. Turk, M.; Pentland, A. Eigenfaces for Recognition. J. Cogn. Neurosci. 1991, 3, 71–86.

53. Jack, K. Video Demystified: A Handbook for the Digital Engineer, 3rd ed.; LLH Technology Publishing: Eagle Rock, VA, USA, 2001.

54. Payette, B. Color Space Convertor: R’G’B’ to Y’CbCr, Xilinx, XAPP637 (v1.0). 2002. Available online: http://application-notes.digchip.com/077/77-42796.pdf (accessed on 11 April 2017).

55. Nagabhushana, S. Computer Vision and Image Processing, 1st ed.; New Age International (P) Ltd.: New Delhi, India, 2005.

56. Proakis, J.G.; Manolakis, D.G. Digital Signal Processing: Principles, Algorithms, and Applications, 3rd ed.; Prentice Hall, Inc.: Upper Saddle River, NJ, USA, 1996.

57. Smith, S.W. The Scientist and Engineer’s Guide to Digital Signal Processing, Chapter 12: The Fast Fourier Transform, Copyright 1997–2011. California Technical Publishing: San Diego, CA, USA. Available online: http://www.dspguide.com/ch12.htm (accessed on 11 April 2017).

58. Hsu, C.W.; Chang, C.C.; Lin, C.J. A Practical Guide to Support Vector Classification. 2010. Available online: http://www.csie.ntu.edu.tw/~cjlin/papers/guide/guide.pdf (accessed on 11 April 2017).

59. Burges, C.J.C. A Tutorial on Support Vector Machines for Pattern Recognition. In Data Mining and Knowledge Discovery; Kluwer Academic Publishers: Hingham, MA, USA, 1998; Volume 2, pp. 121–167.

60. Platt, J.C. Fast training of support vector machines using sequential minimal optimization. In Advances in Kernel Methods—Support Vector Learning; Scholkopf, B., Burges, C.J.C., Smola, A.J., Eds.; MIT Press: Cambridge, MA, USA, 1998.

61. Diosan, L.; Oltean, M.; Rogozan, A.; Pecuchet, J.P. Genetically Designed Multiple-Kernels for Improving the SVM Performance. In Proceedings of the 9th Annual Conference on Genetic and Evolutionary Computation (GECCO’07), London, UK, 7–11 July 2007; p. 1873.

| Category | Class | Number of Images |
|---|---|---|
| Explosion | Pyroclastic density currents (PDC) | 1522 |
| Explosion | Lava fountains (LF) | 966 |
| Explosion | Lava and tephra fallout (LT) | 346 |
| Explosion | Nuclear mushroom clouds (NC) | 394 |
| Non-explosion | Wildfires (WF) | 625 |
| Non-explosion | Fireworks (F) | 980 |
| Non-explosion | Sky clouds (SC) | 494 |
| Total | | 5327 |

| Category | Frame Rate | Resolution of Video Sequences (pixels) | Number of Retrieved Frames for Testing | Frame Size After Preprocessing (pixels) | Length of Feature Input Vector | Accuracy |
|---|---|---|---|---|---|---|
| Video 1—PDC | 29 fps | 720 × 480 | 140 | 64 × 64 | 300 | 98.57% |
| Video 2—LF | 29 fps | 720 × 480 | 140 | 64 × 64 | 300 | 90.71% |
| Video 3—LT | 29 fps | 720 × 480 | 140 | 64 × 64 | 300 | 83.57% |
| Video 4—NC | 29 fps | 720 × 480 | 140 | 64 × 64 | 300 | 100% |
| Video 5—WF | 29 fps | 720 × 480 | 140 | 64 × 64 | 300 | 85.71% |
| Video 6—F | 29 fps | 720 × 480 | 140 | 64 × 64 | 300 | 100% |
| Video 7—SC | 29 fps | 720 × 480 | 140 | 64 × 64 | 300 | 100% |

| Actual / Predicted | PDC | LF | LT | NC | WF | F | SC |
|---|---|---|---|---|---|---|---|
| PDC (140) | 138 | 0 | 2 | 0 | 0 | 0 | 0 |
| LF (140) | 0 | 127 | 0 | 0 | 0 | 13 | 0 |
| LT (140) | 10 | 8 | 117 | 0 | 5 | 0 | 0 |
| NC (140) | 0 | 0 | 0 | 140 | 0 | 0 | 0 |
| WF (140) | 5 | 9 | 0 | 0 | 120 | 6 | 0 |
| F (140) | 0 | 0 | 0 | 0 | 0 | 140 | 0 |
| SC (140) | 0 | 0 | 0 | 0 | 0 | 0 | 140 |
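The per-video accuracies in the accuracy table match per-class recall computed from this confusion matrix: the diagonal entry divided by the 140 test frames of that class. A short NumPy check (the variable names are ours) recomputes them and also gives the pooled accuracy over all 980 test frames, 922/980 ≈ 94.08%, which is arithmetic derived from the matrix rather than a figure reported in the tables.

```python
import numpy as np

classes = ["PDC", "LF", "LT", "NC", "WF", "F", "SC"]
# Rows: actual class; columns: predicted class (values copied from the confusion matrix).
confusion = np.array([
    [138, 0, 2, 0, 0, 0, 0],
    [0, 127, 0, 0, 0, 13, 0],
    [10, 8, 117, 0, 5, 0, 0],
    [0, 0, 0, 140, 0, 0, 0],
    [5, 9, 0, 0, 120, 6, 0],
    [0, 0, 0, 0, 0, 140, 0],
    [0, 0, 0, 0, 0, 0, 140],
])

per_class = np.diag(confusion) / confusion.sum(axis=1)  # correct frames / test frames per class
overall = np.trace(confusion) / confusion.sum()         # all correct frames / all test frames
for name, acc in zip(classes, per_class):
    print(f"{name}: {acc:.2%}")
print(f"overall: {overall:.2%}")  # overall: 94.08%
```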

| Phase | Time (s) |
|---|---|
| Time for extracting 300 features | 0.073 |
| Time to pass 1 frame to the classifier | 0.001 |
| Classification time | 0.046 |
| Total time | ≈0.12 |
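Assuming the three phases in the timing table run once per frame, their sum reproduces the reported total, and its reciprocal gives the implied processing rate:

$$
t_{\text{total}} = 0.073 + 0.001 + 0.046 = 0.120\ \text{s},
\qquad
\frac{1}{0.120\ \text{s}} \approx 8.3\ \text{frames/s}
$$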

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Abusaleh, S.; Mahmood, A.; Elleithy, K.; Patel, S. A Novel Vision-Based Classification System for Explosion Phenomena. J. Imaging 2017, 3, 14. https://doi.org/10.3390/jimaging3020014
