Towards Automatic Bird Detection: An Annotated and Segmented Acoustic Dataset of Seven Picidae Species

Abstract

1. Introduction

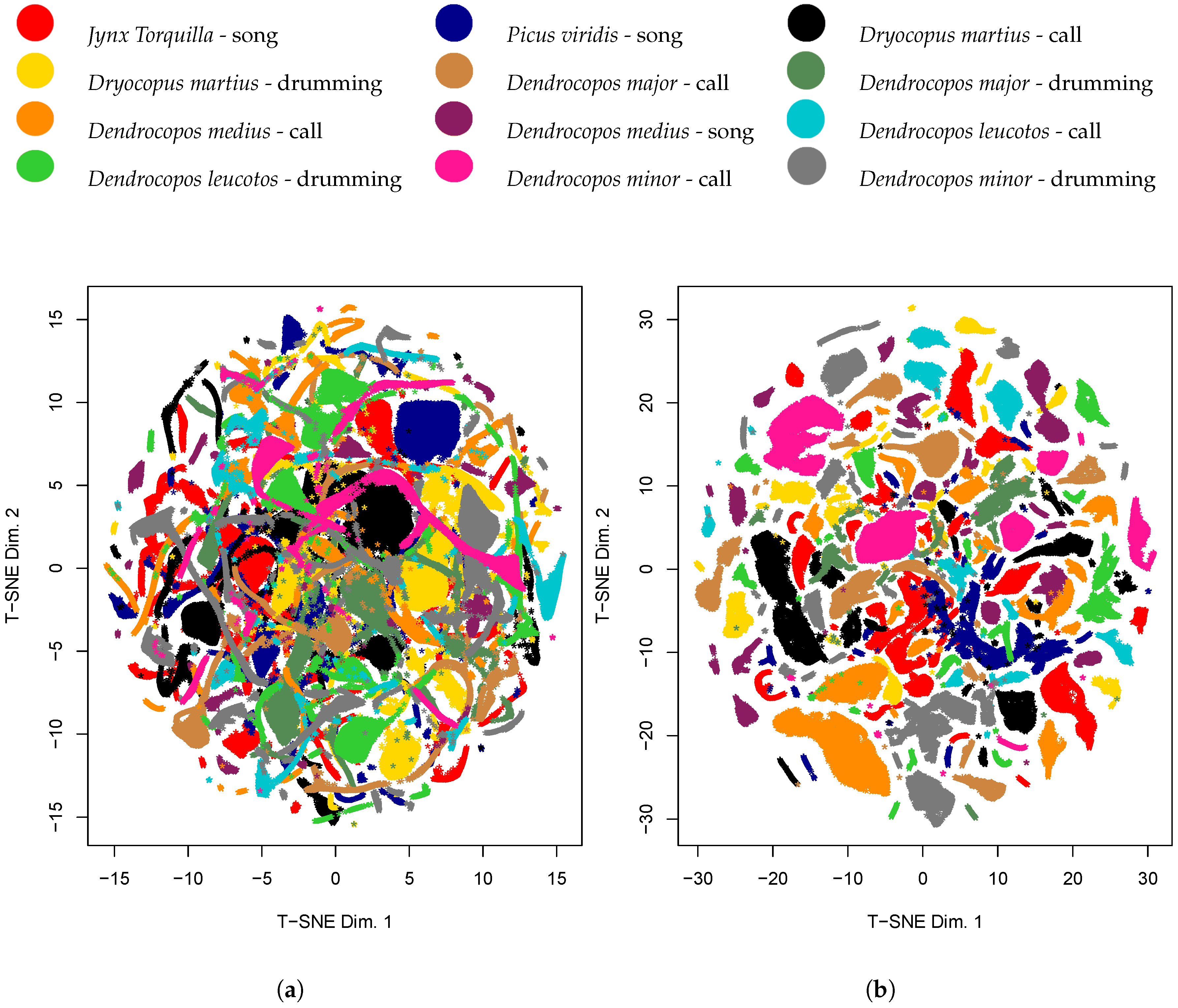

2. Dataset Description

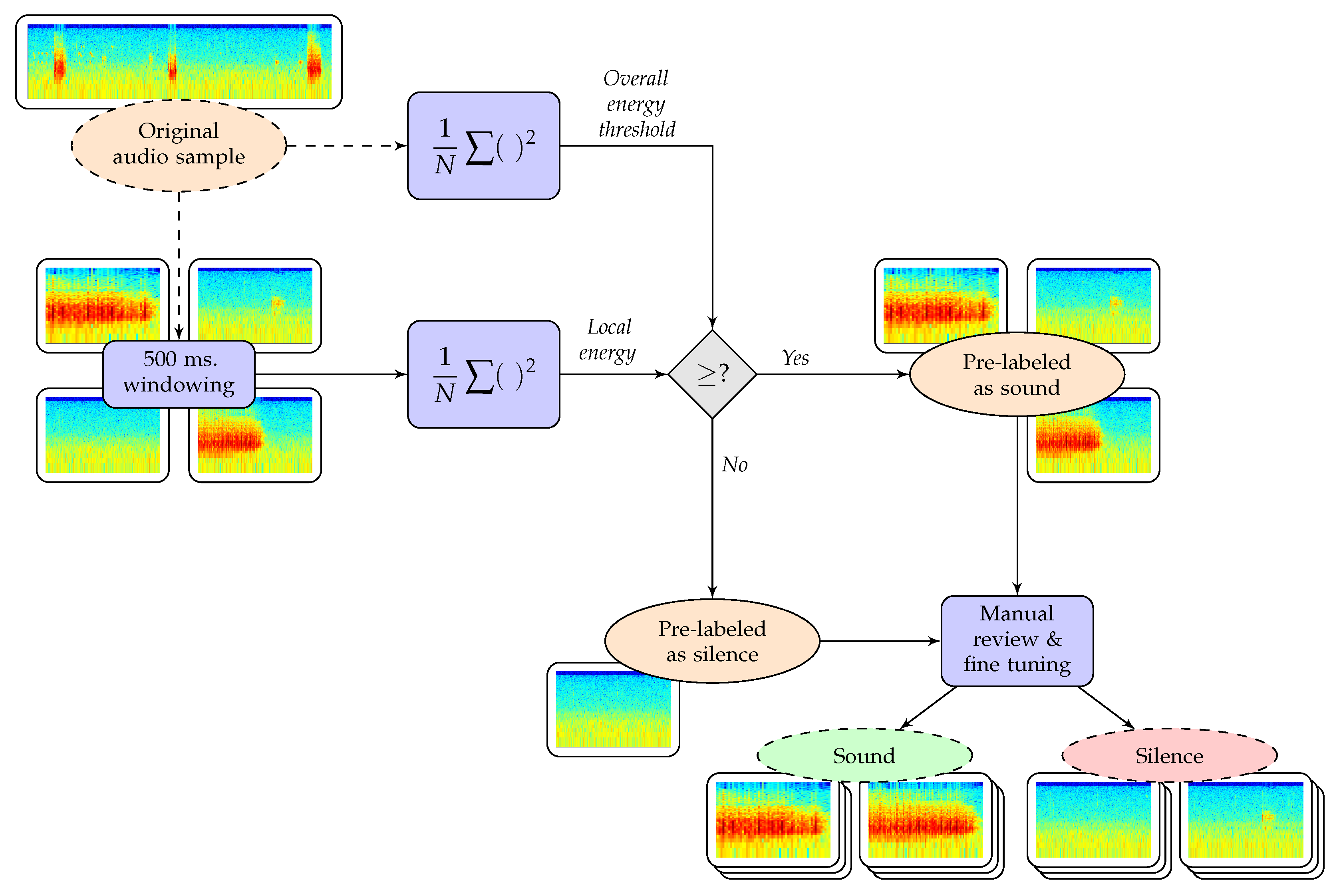

3. Segmentation and Annotation Process

- Step I.

- Original audio sample acquisition. It consists of downloading the audio samples from the Xeno-Canto repository [11,12] and performing an initial manual pre-filtering, that is, listening to each downloaded sample to ensure that it corresponds to the species with which it is labeled. Low-quality and excessively noisy samples are discarded.

- Step II.

- Energy threshold calculation. It consists of establishing an energy threshold to distinguish between audio segments containing sound and audio segments containing silence. The threshold is computed by squaring every sample value of the whole original recording and averaging the result (i.e., the average power of the recording).

- Step III.

- Sample windowing. It consists of applying a 500 ms sliding window with no overlap to the original audio sample (i.e., splitting the file into consecutive 500 ms segments). We have found that 500 ms is a convenient size because it captures enough acoustic energy of the bird sound to discern it from the background noise of the recording. A larger window increases the chance of capturing different bird songs in the same segment. Conversely, a smaller window may prevent the system from distinguishing background noise from the bird song.

- Step IV.

- Local energy calculation. It consists of calculating the average power of the current 500 ms audio segment, in the same way as the threshold is computed in Step II.

- Step V.

- Energy comparison. It consists of deciding whether the 500 ms audio segment contains sound or silence. If the result from Step IV is higher than the threshold established in Step II, the segment is pre-labeled as a sample of interest (the bird is making some sound); otherwise, it is labeled as background noise (i.e., silence).

- Step VI.

- Pre-labeling. It consists of comparing the label obtained in Step V with the label of the previous window. If the label is the same (e.g., all the 500 ms fragments so far have been labeled as silence), the system returns to Step III with the following segment. If the label is different (e.g., the previous window was labeled as silence and the current window was labeled as sound of interest), a new audio file containing all the preceding consecutive same-label windows is generated and stored before returning to Step III with the next segment of the original audio sample. In that case, the current segment becomes the first window of the next audio file. Consequently, adjacent 500 ms windows that contain successive repetitions of the same sound are joined into a single audio file at this step. This preserves the information carried by sound repetitions, which might be of great interest when identifying the bird species and its type of sound.

- Step VII.

- Fine-tuning and final annotation. It consists of a manual review (i.e., removing false positives) and adjustment (i.e., moving background noise from actual sound samples to the Silence class) of the pre-labeled samples. For instance, some audio samples might introduce ambiguous information into the dataset and thus need to be deleted (e.g., a Jynx torquilla and a Picus viridis singing at the same time but labeled as Jynx torquilla only). It is worth noting that the background noise (i.e., silence) segments might contain birdsongs from non-target bird species (i.e., birds that do not belong to any of the Picidae species studied in this paper). The rationale behind this decision is to ensure that an automatic classifier treats as silence all noises (even birdsongs) that are not generated by the bird of interest.
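Steps II to V above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes the recording has already been decoded into a mono sequence of float sample values. The function names `average_power` and `label_windows` are hypothetical. The text does not say how a final window shorter than 500 ms is handled, so the sketch simply keeps it as a shorter last window.

```python
from typing import List, Sequence, Tuple

WINDOW_S = 0.5  # Step III: 500 ms window length, in seconds


def average_power(samples: Sequence[float]) -> float:
    """Average power: the mean of the squared sample values (Steps II and IV)."""
    if len(samples) == 0:
        raise ValueError("cannot compute the power of an empty signal")
    return sum(s * s for s in samples) / len(samples)


def label_windows(
    audio: Sequence[float], sample_rate: int, window_s: float = WINDOW_S
) -> Tuple[List[List[float]], List[bool]]:
    """Split a recording into non-overlapping windows and pre-label each one.

    A label of True means "sound of interest"; False means "silence".
    """
    threshold = average_power(audio)  # Step II: one threshold for the whole recording
    win = max(1, int(round(window_s * sample_rate)))  # samples per window
    # Step III: consecutive, non-overlapping windows. A shorter final window is
    # kept as-is (an assumption; the procedure does not specify this case).
    windows = [list(audio[i:i + win]) for i in range(0, len(audio), win)]
    # Steps IV and V: compare each window's average power with the threshold
    labels = [average_power(w) > threshold for w in windows]
    return windows, labels
```

For a 44.1 kHz recording, `label_windows(samples, sample_rate=44100)` uses windows of 22,050 samples. Plain Python lists keep the sketch dependency-free. A practical tool would more likely operate on NumPy arrays.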
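The grouping in Step VI amounts to joining each maximal run of consecutive same-label windows into one clip. Below is a minimal sketch that assumes the windows and their sound/silence labels are already available as parallel lists, for instance from a window-labeling stage like Steps III to V. The name `merge_runs` is hypothetical. Unlike the described procedure, the sketch returns clips in memory rather than writing them to audio files.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def merge_runs(
    windows: Sequence[Sequence[float]], labels: Sequence[bool]
) -> List[Tuple[bool, List[float]]]:
    """Step VI: join each maximal run of same-label windows into one clip."""
    if len(windows) != len(labels):
        raise ValueError("windows and labels must have the same length")
    clips: List[Tuple[bool, List[float]]] = []
    # groupby starts a new group exactly when the label changes, which is the
    # point at which Step VI stores the accumulated clip and starts a new one
    for label, run in groupby(zip(labels, windows), key=lambda pair: pair[0]):
        samples: List[float] = []
        for _, window in run:
            samples.extend(window)
        clips.append((label, samples))
    return clips
```

Clips labeled `True` would be the candidate bird-sound samples, and clips labeled `False` the candidate Silence samples. Both would then go to the manual review of Step VII.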

4. Materials

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MFCC | Mel Frequency Cepstral Coefficients |
| t-SNE | t-Distributed Stochastic Neighbor Embedding |

References

- Witzany, G. Biocommunication of Animals; Springer: Dordrecht, The Netherlands, 2014; ISBN 978-94-007-7413-1.

- Kershenbaum, A.A.; Blumstein, D.T.; Roch, M.A.; Akcay, G.; Backus, G.; Bee, M.A.; Bohn, K.; Cao, Y.; Carter, G.; Casar, C.; et al. Acoustic sequences in non-human animals: A tutorial review and prospectus. Biol. Rev. 2014.

- Slabbekoorn, H.; Smith, T.B. Bird song, ecology and speciation. Philos. Trans. R. Soc. Lond. B: Biol. Sci. 2002, 357, 493–503.

- Howell, S.N.G.; Webb, S. A Guide to the Birds of Mexico and Northern Central America; Oxford University Press: Oxford, UK, 1995; ISBN 0-19-854012-4.

- Baker, M.C.; Cunningham, M.A. The biology of bird-song dialects. Behav. Brain Sci. 1985, 8, 85–100.

- Stowell, D.; Wood, M.; Stylianou, Y.; Glotin, H. Bird detection in audio: A survey and a challenge. In Proceedings of the IEEE 26th International Workshop on Machine Learning for Signal Processing (MLSP), Salerno, Italy, 13–16 September 2016; pp. 1–6.

- Sivarajan, S.; Schuller, B.; Coutinho, E. Bird Sound Classification. Available online: http://www.imperial.ac.uk/media/imperial-college/faculty-of-engineering/computing/public/SINDURANSIVARAJAN.pdf (accessed on 11 April 2017).

- Noda, J.J.; Travieso, C.M.; Sánchez-Rodríguez, D. Automatic Taxonomic Classification of Fish Based on Their Acoustic Signals. Appl. Sci. 2016, 6, 443.

- Mermelstein, P. Distance measures for speech recognition–psychological and instrumental. Pattern Recogn. Artif. Intell. 1976, 166, 374–388.

- Van der Maaten, L.J.P.; Hinton, G.E. Visualizing high-dimensional data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Vellinga, W.P.; Planque, R. The Xeno-canto collection and its relation to sound recognition and classification. In Proceedings of the 2015 CLEF, Toulouse, France, 8–11 September 2015.

- Xeno-Canto Foundation. Xeno-Canto: Sharing Bird Sounds from around the World. Available online: http://www.xeno-canto.org/ (accessed on 15 April 2017).

- Cornell Library of Ornithology Macaulay Library. Available online: http://macaulaylibrary.org (accessed on 11 April 2017).

- University of Utah Western Soundscape Archive. Available online: http://www.westernsoundscape.org (accessed on 11 April 2017).

- Lepage, D. Avibase—The World Bird Database. Available online: http://avibase.bsc-eoc.org/avibase.jsp (accessed on 11 April 2017).

- British Library Sound Archive. Available online: http://www.bl.uk/soundarchive (accessed on 11 April 2017).

- Tierstimmenarchiv, Animal Sound Archive (Tierstimmenarchiv) at the Museum für Naturkunde in Berlin. Available online: http://www.tierstimmenarchiv.de (accessed on 11 April 2017).

- CSIRO. Australian National Wildlife Collection Sound Archive. Available online: https://www.csiro.au/en/Research/Collections/ANWC/About-ANWC/Our-wildlife-sound-archive (accessed on 11 April 2017).

- Mikusiński, G.; Gromadzki, M.; Chylarecki, P. Woodpeckers as Indicators of Forest Bird Diversity. Conserv. Biol. 2001, 15, 208–217.

- Real Decreto 139/2011, de 4 de Febrero, Para el Desarrollo del Listado de Especies Silvestres en Régimen de Protección Especial y del Catálogo Español de Especies Amenazadas. Available online: http://www.boe.es/buscar/act.php?id=BOE-A-2011-3582 (accessed on 15 April 2017).

- Pakkala, T.; Lindén, A.; Tiainen, J.; Tomppo, E.; Kouki, J. Indicators of forest biodiversity: Which Bird Species Predict High Breeding Bird Assemblage Diversity in Boreal Forests at Multiple Spatial Scales? In Annales Zoologici Fennici; Finnish Zoological and Botanical Publishing: Helsinki, Finland, 2014; Volume 51, pp. 457–476.

- Sprengel, E.; Jaggi, M.; Kilcher, Y.; Hofmann, T. Audio Based Bird Species Identification using Deep Learning Techniques. Proceedings of CEUR Workshop, Evora, Portugal, 5–8 September 2016; pp. 547–559.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Vidaña-Vila, E.; Navarro, J.; Alsina-Pagès, R.M. Towards Automatic Bird Detection: An Annotated and Segmented Acoustic Dataset of Seven Picidae Species. Data 2017, 2, 18. https://doi.org/10.3390/data2020018
