Performance Evaluation of Real Industrial RTO Systems

Abstract

1. Introduction

2. Problem Statement

3. RTO System Description

- (a) Steady-state detection (SSD), which determines whether the plant is at steady state based on data gathered from the plant within a time interval;

- (b) Monitoring sequence (MON), which is a switching method for executing the RTO iteration based on information about the unit’s stability, the unit’s load and the RTO system’s status; the switching method triggers the start of a new optimization cycle and commonly depends on a minimal interval between successive RTO iterations, which typically ranges from 30 min to 2 h for distillation units;

- (c) Execution of the optimization layer based on the two-step approach, that is, adapting the stationary process model and then using it as a constraint for solving a nonlinear programming problem representing an economic index.
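The three stages listed above can be illustrated with a minimal sketch. It is not the implementation used in the industrial systems evaluated here. Everything in it is an assumption made for illustration: the function names, the half-window mean comparison used as a stand-in for SSD, the 60 min default interval, the linear model `y = theta * u`, and the quadratic profit function, whose optimum is available in closed form so that no nonlinear programming solver is needed.

```python
from statistics import mean


def is_steady(window, rel_tol=0.02):
    """Hypothetical SSD check: compare the means of the two halves of a data window.

    The plant is flagged as steady when the relative shift between the
    half-window means does not exceed rel_tol.
    """
    half = len(window) // 2
    first, second = window[:half], window[half:]
    scale = max(abs(mean(window)), 1e-12)  # avoid division by zero
    return abs(mean(second) - mean(first)) / scale <= rel_tol


def may_start_cycle(now_min, last_run_min, steady, rto_ready=True,
                    min_interval_min=60.0):
    """Hypothetical MON switch: start a new RTO iteration only if the unit is
    steady, the RTO system is available, and the minimal interval has elapsed."""
    return steady and rto_ready and (now_min - last_run_min) >= min_interval_min


def adapt_parameter(u_meas, y_meas):
    """Two-step approach, step 1: least-squares estimate of theta in y = theta * u."""
    num = sum(u * y for u, y in zip(u_meas, y_meas))
    den = sum(u * u for u in u_meas)
    return num / den


def optimize_setpoint(theta, price=1.0, cost=0.5, u_min=0.0, u_max=10.0):
    """Two-step approach, step 2: maximize price * theta * u - cost * u**2
    subject to u_min <= u <= u_max, using the updated model parameter."""
    u_unconstrained = price * theta / (2.0 * cost)  # stationary point of the profit
    return min(max(u_unconstrained, u_min), u_max)  # project onto the bounds


if __name__ == "__main__":
    window = [10.0, 10.1, 9.9, 10.0, 10.05, 9.95]  # toy measurements
    steady = is_steady(window)
    if may_start_cycle(now_min=90.0, last_run_min=0.0, steady=steady):
        theta = adapt_parameter([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
        print("updated theta:", theta)
        print("new setpoint:", optimize_setpoint(theta))
```

In a real application, step 2 would be a nonlinear programming problem constrained by the full stationary process model; the closed-form projection onto the bounds is used here only to keep the sketch self-contained.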

- production planning and scheduling, which transfer information to the RTO system;

- storage logistics, which has information about the composition of feed tanks;

- distributed control system (DCS) and database, which deliver measured values.

4. Industrial RTO Evaluation

4.1. Steady-State Detection

4.1.1. Tool A

4.1.2. Tool B

4.1.3. Industrial Results

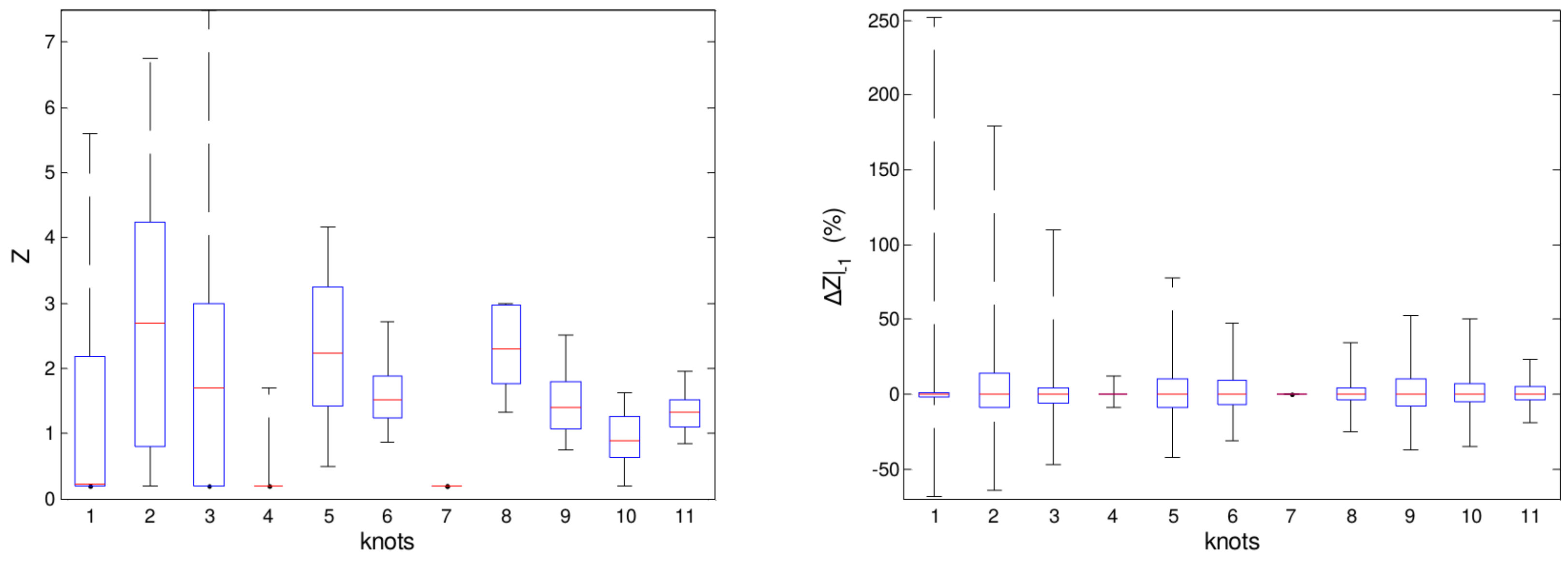

4.2. Adaptation and Optimization

- the database might contain outdated analyses, given that the quality of the oil changes with time;

- there might be changes in oil composition due to storage and distribution policies from well to final tank. Commonly, this causes the loss of volatile compounds;

- mixture rules applied to determine the properties of the load might not adequately represent its distillation profile;

- occasionally, internal streams of the refinery are blended with the load for reprocessing.

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RTO | Real-time optimization systems |
| MPC | Model predictive control |
| MON | Monitoring sequence |
| SSD | Steady-state detection |
| APC | Advanced process control |
| DCS | Distributed control system |
| SM | Statistical method |
| HM | Heuristic method |


| Tag | | |
|---|---|---|
| | 0.0 | 98.3 |
| | 3.2 | 81.1 |
| | 0.0 | 97.3 |
| | 0.0 | 90.9 |
| | 0.0 | 97.1 |
| | 4.8 | 99.5 |
| | 0.0 | 90.1 |
| | 6.8 | 84.4 |

| Position in Rank | Tag | | P50 | P90 | P10 |
|---|---|---|---|---|---|
| 1 | | yes | 21.53 | 54.83 | 1.15 |
| 2 | | yes | 15.99 | 54.33 | 0.19 |
| 3 | | yes | 2.37 | 11.39 | 0.10 |
| 4 | | yes | 1.95 | 7.49 | 0.48 |
| 5 | | yes | 1.68 | 14.21 | 0.35 |
| 6 | | yes | 1.41 | 7.01 | <0.01 |
| 7 | | yes | 1.17 | 5.94 | 0.04 |
| 8 | | yes | 1.12 | 7.39 | <0.01 |
| 9 | θ(8) | no | 0.96 | 4.08 | 0.09 |
| 10 | | yes | 0.65 | 2.17 | 0.12 |

| Knot | | | Active Constraint (%) |
|---|---|---|---|
| 1 | 0.2 | 10 | 42.5 |
| 2 | 0.2 | 10 | 13.4 |
| 3 | 0.2 | 7.5 | 31.5 |
| 4 | 0.2 | 7.5 | 81.7 |
| 5 | 0.2 | 7.5 | 0.7 |
| 6 | 0.2 | 7.5 | 0.2 |
| 7 | 0.2 | 7.5 | 92.1 |
| 8 | 0.2 | 3 | 19.4 |
| 9 | 0.2 | 3 | 1.2 |
| 10 | 0.2 | 3 | 14.6 |
| 11 | 0.2 | 3 | 0.3 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Câmara, M.M.; Quelhas, A.D.; Pinto, J.C. Performance Evaluation of Real Industrial RTO Systems. Processes 2016, 4, 44. https://doi.org/10.3390/pr4040044
