Player–Game Interaction and Cognitive Gameplay: A Taxonomic Framework for the Core Mechanic of Videogames

Abstract

1. Introduction

2. Background

2.1. Videogames

2.2. Cognitive Gameplay

2.3. Information, Content, and Representation

2.4. Cognition and Interaction

2.5. Core Mechanic: The Cognitive Nucleus

3. INFORM: Interaction Design for the Core Mechanic

3.1. Elements of Action

3.1.1. Agency

3.1.2. Flow (Action)

3.1.3. Focus

3.1.4. Granularity

3.1.5. Presence

3.1.6. Timing

3.2. Elements of Reaction

3.2.1. Activation

3.2.2. Context

3.2.3. Flow (Reaction)

3.2.4. Spread

3.2.5. State

3.2.6. Transition

4. Application of INFORM in a Scenario

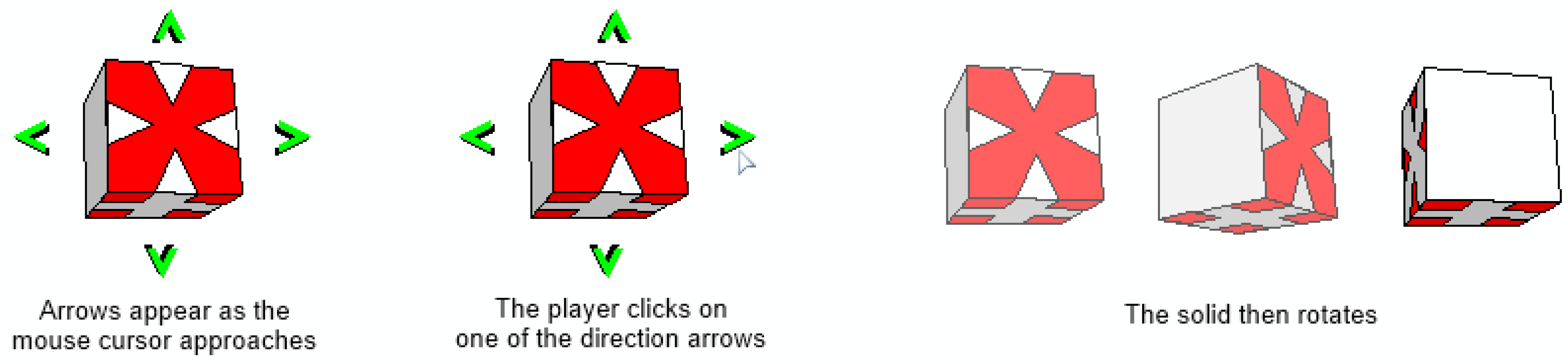

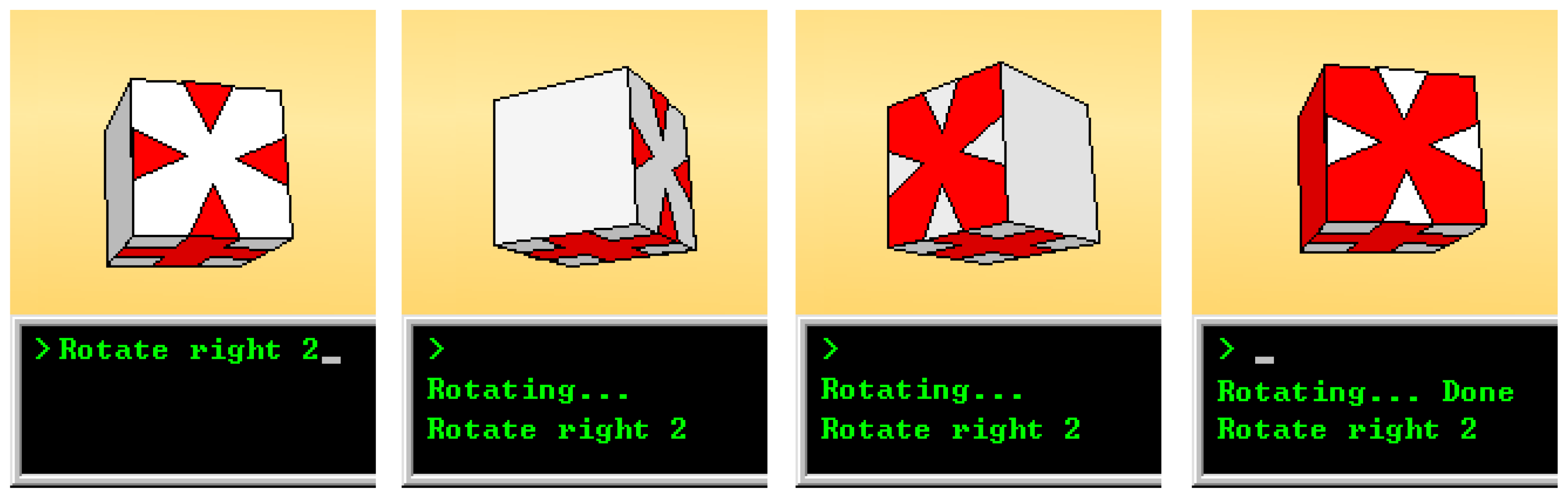

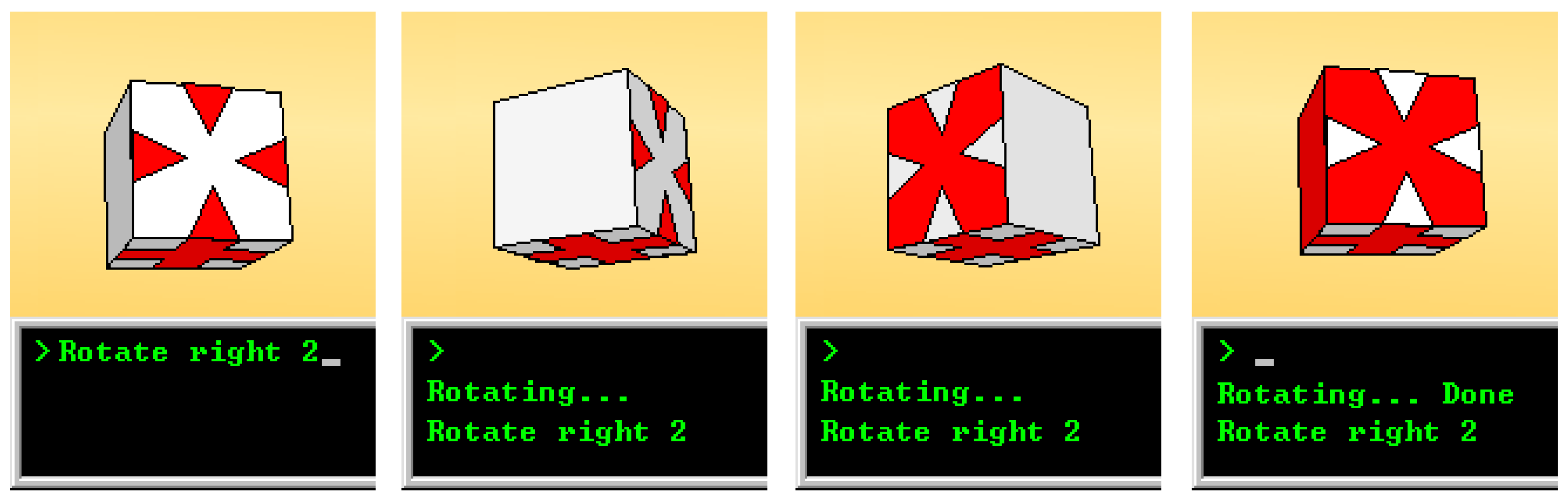

- Agency: manual or verbal. With manual agency, the player rotates the cube using the mouse—e.g., by clicking and dragging the cube. Alternatively, the cube could be rotated by clicking on arrows that appear around the cube as the mouse cursor approaches it (see Figure 6). With verbal agency, the player could type commands into a console to rotate the cube—e.g., “rotate left” to rotate the cube to the left once, and “rotate left 3” to rotate the cube to the left three times (see Figure 7).
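The verbal agency example above relies on the game understanding commands such as “rotate left” and “rotate left 3”. The following is a minimal Python sketch of how such a console might parse them. It assumes a hypothetical grammar of `rotate <direction> [count]`, and the names `COMMAND` and `parse_rotate` are illustrative rather than taken from the scenario's implementation.

```python
import re
from typing import Optional, Tuple

# Hypothetical console grammar: "rotate <direction> [count]", case-insensitive.
COMMAND = re.compile(r"^\s*rotate\s+(left|right|up|down)(?:\s+(\d+))?\s*$", re.IGNORECASE)


def parse_rotate(text: str) -> Optional[Tuple[str, int]]:
    """Return (direction, count) for a well-formed command, or None."""
    match = COMMAND.match(text)
    if match is None:
        return None
    direction = match.group(1).lower()
    count = int(match.group(2)) if match.group(2) else 1  # no count means one rotation
    if count < 1:
        return None  # "rotate left 0" is treated as malformed
    return direction, count


print(parse_rotate("rotate left"))    # ('left', 1)
print(parse_rotate("rotate left 3"))  # ('left', 3)
print(parse_rotate("spin left"))      # None
```

Rejecting unknown input with `None` lets the console report an error instead of guessing, which keeps the verbal action unambiguous for the player.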

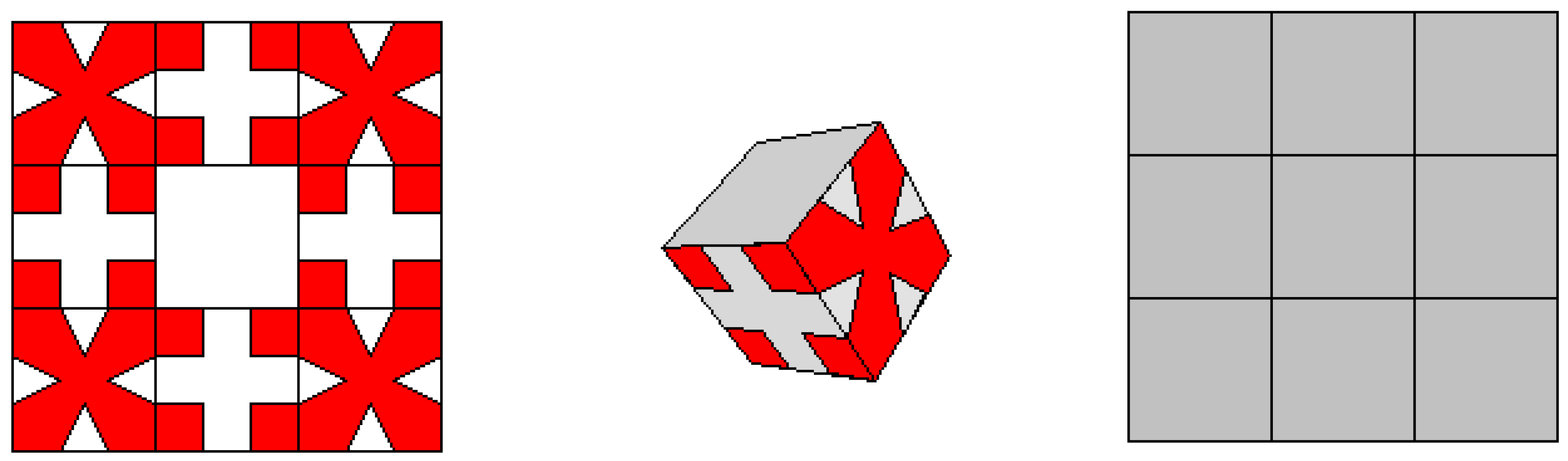

- Focus: direct or indirect. With direct focus, the cube is the focal point of action—e.g., the player clicks on and drags the cube to rotate it. As the interaction is directed toward the intended target (i.e., the cube), focus is direct. If the focus were indirect, however, another representation would be the focal point of action. A simple example is having a set of arrows in a control panel in the interface. When the player clicks on an arrow, the cube rotates in the corresponding direction. This is indirect focus, as the action is directed toward arrows in the control panel, and not the cube itself. As another example, the player could be provided with an additional, alternative representation of the cube (e.g., a formula that represents its 2D projection). The player would be required to act on the alternative representation to control the rotation of the cube.

- Flow (action): discrete or continuous. Consider the above examples in which the player clicks on an arrow button to rotate the cube. These are examples in which action flow is discrete, as the action occurs instantaneously: the player simply clicks a button. Alternatively, the player could click on and drag a slider to specify the amount of rotation—an example where action flow is continuous since the action happens over a period of time in a fluid manner.
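The contrast drawn above between clicking an arrow in a control panel (indirect focus, discrete action flow) and dragging a slider (continuous action flow) can be sketched in a few lines of Python. The `Cube` class, the 90° step size, and the mapping from slider position to angle are hypothetical choices for illustration. Each slider sample is applied to the cube at once, so that only the action side of flow varies here.

```python
from dataclasses import dataclass
from typing import Iterable, List

STEP_DEGREES = 90  # hypothetical size of one discrete rotation


@dataclass
class Cube:
    angle: float = 0.0  # rotation about the vertical axis, in degrees


def click_arrow(cube: Cube, direction: str) -> None:
    """Discrete action with indirect focus: one click on a control-panel arrow
    (not on the cube itself) applies one fixed rotation step."""
    if direction not in ("left", "right"):
        raise ValueError(f"unknown direction: {direction}")
    sign = 1 if direction == "right" else -1
    cube.angle = (cube.angle + sign * STEP_DEGREES) % 360


def drag_slider(cube: Cube, positions: Iterable[float]) -> List[float]:
    """Continuous action: a drag arrives as a stream of slider positions in
    [0, 1], and every sample updates the requested angle."""
    angles = []
    for p in positions:
        cube.angle = (p * 360) % 360
        angles.append(cube.angle)
    return angles
```

A single click produces one event and one step, whereas the drag produces a sequence of values over time; the same `Cube` can be driven either way.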

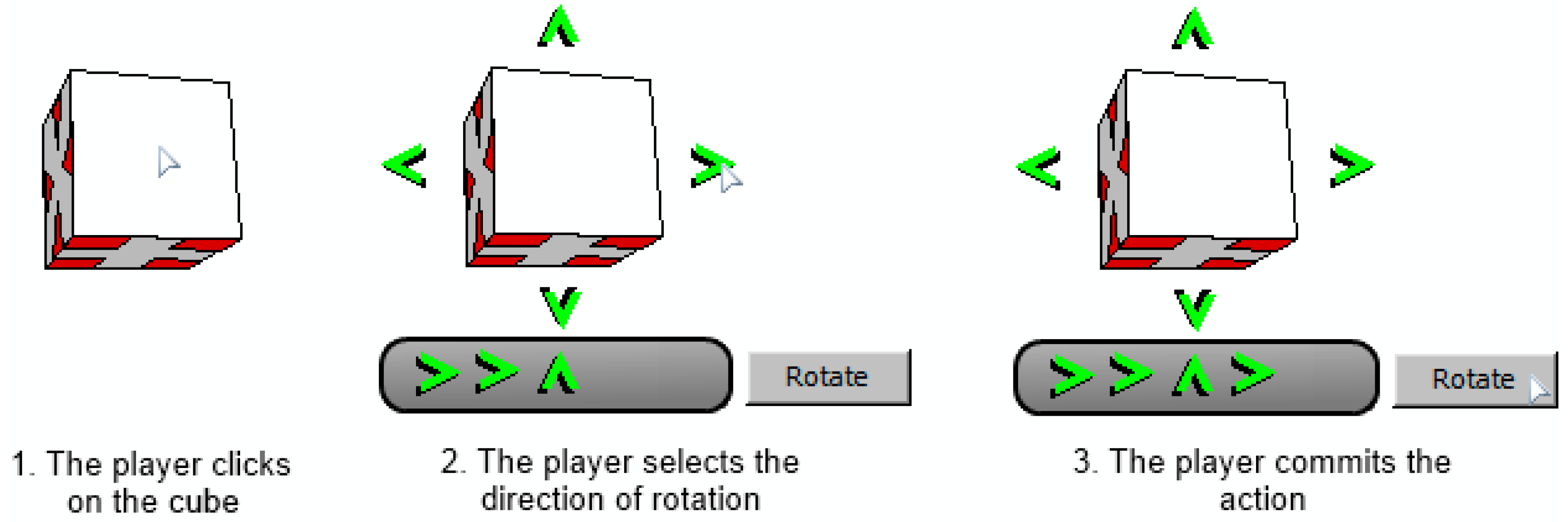

- Granularity: atomic or composite. With atomic granularity, the interaction has only one step—e.g., dragging the cube to rotate it. With composite granularity, the interaction would require more than one step to complete. For example, to rotate the cube, the player would have to first select it, then specify the desired direction of rotation, then select a button to execute the rotation. Although the composite type of granularity may seem unnecessary here, it could be quite relevant if, for example, the player could supply important additional parameters to the action (such as the angle or speed of rotation).

- Presence: implicit or explicit. With implicit presence, the possibility of rotating the cube is not advertised to the player. The player must have existing knowledge that the shape can be rotated and how to go about rotating it. For instance, if the player could rotate the cube by clicking on it and dragging the mouse, but nothing on the cube or anywhere else in the interface suggests that this action is possible, then presence is implicit. With explicit presence, the possibility of rotating the cube would be advertised to the player. One simple example is to have a label below the cube stating: “To rotate the cube, click and drag it”. Alternatively, the cube could be wiggling with a small rotation sign attached to it to suggest the possibility of this interaction.

- Timing: player-paced or game-paced. With player-paced timing, the player can take as much time as she wants to rotate the cube. However, if timing is game-paced, a time restriction is placed on the player. For example, there could be a timer that begins at 60 seconds and counts down. When it reaches 0 seconds, the player loses points. Every time the player performs the rotating action, the timer for this interaction can be reset.

- Activation: immediate, delayed, or on-demand. With immediate activation, the cube rotates as soon as the player acts; for example, the player clicks an arrow button and the cube rotates immediately. With delayed activation, the cube would not rotate until a period of time elapses or some other event occurs. For instance, the player clicks on the arrow button to rotate the cube to the left, but the cube does not rotate until a subsequent action is performed. With on-demand activation, the player could specify a sequence of multiple rotations, but the cube would not rotate until a separate button is clicked, at which point the sequence would unfold (see Figure 8).

- Context: changed or unchanged. In the examples thus far, context is implemented with the unchanged type (i.e., the context in which the interaction occurs does not change once the reaction is finished). One possible implementation of the changed type is the following: the game places a limit on the number of times the player can rotate the cube. Once the player rotates the cube beyond this limit, the game reacts by resetting the level, which changes the context in which the player is operating. This context change will likely force the player to think about recreating the whole pattern and to remember the set of steps used before the level was reset.

- Flow (reaction): discrete or continuous. The following is an example of continuous reaction flow: the player clicks on and drags a slider to rotate the cube, and the cube gradually rotates until its orientation matches that specified by the slider’s position. If the reaction flow were discrete, however, the cube’s orientation would immediately change to match the slider’s position rather than changing gradually over time. In this case, the reaction occurs instantaneously, without any fluid motion. Separating action and reaction flow can be conducive to mindful planning in certain situations (see [63]).

- Spread: self-contained or propagated. In the case of self-contained spread, only the focal representation is affected. For instance, if the player drags a cube to rotate it, no other representation in the interface is affected. However, in the example given below in which transition is distributed, the player types a command to rotate the cube and, as a result, multiple representations are created to display the orientation of the cube at different stages in the rotation. In this example, the spread is propagated to other representations in the interface.

- State: created, deleted, and/or altered. To demonstrate implementations of the different types of this element, suppose the game enforces a limit on the number of times the cube can rotate. Once this limit is reached, the player can no longer rotate the cube and must restart the puzzle. Consider the case in which the player rotates the cube by typing a ‘rotate’ command into a console, and assume that, in addition to the cube rotating, representations elsewhere in the interface that encode the number of performed rotations are also affected. One possibility is that, as the cube is rotated, the color and/or arrangement of other representations change to reflect the number of remaining rotations available to the player. In this case, the properties of the representations (i.e., their colors and positions) are altered, an example of the ‘altered’ type of state. Another possibility is that each time the cube is rotated, a representation is removed from the interface to indicate that one fewer rotation is available to the player, an example of the ‘deleted’ type of state. For instance, there could be a row of small cubes, with each rotation removing one of them. A third possibility is that a representation is added to the interface after each rotation, an example of the ‘created’ type of state. For example, there may be an empty grid in which a small copy of the cube is placed after each rotation to signify that an interaction has taken place.

- Transition: stacked or distributed. In the above example, transition is stacked: the cube rotates such that successive orientations are stacked on top of one another, and previous orientations are not displayed (see Figure 9). Alternatively, the transition could be distributed. For example, the player types a command to rotate the cube three times to the right, and several representations appear to encode the intermediate orientations. All representations remain on the screen, so that the player can see the different orientations the cube passed through while it was rotating (see Figure 10).
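The game-paced timing example described above (a 60-second countdown that costs the player points when it reaches zero and restarts whenever the cube is rotated) might be modeled as follows. This is a minimal sketch: the `RotationTimer` class, the 10-point penalty, and the choice to start a fresh countdown after a penalty are assumptions, since the scenario does not say what happens after the timer expires.

```python
from dataclasses import dataclass, field


@dataclass
class RotationTimer:
    limit: float = 60.0  # seconds per countdown, as in the scenario
    penalty: int = 10    # hypothetical number of points lost on expiry
    score: int = 100     # hypothetical starting score
    remaining: float = field(init=False)

    def __post_init__(self) -> None:
        self.remaining = self.limit

    def tick(self, dt: float) -> None:
        """Advance the game clock by dt seconds."""
        self.remaining -= dt
        while self.remaining <= 0:
            self.score -= self.penalty
            self.remaining += self.limit  # assumption: a fresh countdown begins

    def on_rotate(self) -> None:
        """Each rotation resets the countdown for this interaction."""
        self.remaining = self.limit
```

Under player-paced timing the game would simply never call `tick`, so no penalty could occur.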
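The three activation types described above differ only in when a requested rotation starts. A minimal Python sketch, assuming a hypothetical `CubeController` that tracks a single angle: delayed activation is modeled here as a fixed time delay (one of the two triggers the scenario mentions), and on-demand activation as a queue that a separate “execute” button releases in order.

```python
from enum import Enum
from typing import List, Tuple


class Activation(Enum):
    IMMEDIATE = "immediate"
    DELAYED = "delayed"
    ON_DEMAND = "on-demand"


class CubeController:
    """Hypothetical controller showing when a rotation reaction commences."""

    def __init__(self, mode: Activation, delay: float = 1.0) -> None:
        self.mode = mode
        self.delay = delay                          # seconds, used only by DELAYED
        self.angle = 0                              # degrees about the vertical axis
        self.pending: List[Tuple[float, int]] = []  # (release time, degrees)

    def act(self, degrees: int, now: float = 0.0) -> None:
        """The player requests a rotation at time `now`."""
        if self.mode is Activation.IMMEDIATE:
            self._rotate(degrees)
        elif self.mode is Activation.DELAYED:
            self.pending.append((now + self.delay, degrees))
        else:  # ON_DEMAND: build up a sequence; nothing moves yet
            self.pending.append((float("inf"), degrees))

    def tick(self, now: float) -> None:
        """DELAYED: release every queued rotation whose time has come."""
        due = [d for t, d in self.pending if t <= now]
        self.pending = [(t, d) for t, d in self.pending if t > now]
        for degrees in due:
            self._rotate(degrees)

    def execute(self) -> None:
        """ON_DEMAND: the separate button unfolds the queued sequence in order."""
        for _, degrees in self.pending:
            self._rotate(degrees)
        self.pending.clear()

    def _rotate(self, degrees: int) -> None:
        self.angle = (self.angle + degrees) % 360
```

Giving on-demand entries an infinite release time means `tick` never fires them, so only `execute` can start the sequence.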
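The difference between continuous and discrete reaction flow described above can be expressed as the sequence of angles the game displays after a single action. This sketch assumes linear interpolation over a hypothetical number of frames (`steps`); an easing curve would serve equally well, and `reaction_frames` is an illustrative name.

```python
from typing import List


def reaction_frames(start: float, target: float, continuous: bool, steps: int = 4) -> List[float]:
    """Angles shown, one per frame, while the cube reacts to the player's action.

    Discrete flow: the orientation jumps straight to the target.
    Continuous flow: the cube passes through evenly spaced intermediate angles
    (linear interpolation, an assumption made for this sketch)."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if not continuous:
        return [target]
    return [start + (target - start) * i / steps for i in range(1, steps + 1)]


print(reaction_frames(0, 90, continuous=False))  # [90]
print(reaction_frames(0, 90, continuous=True))   # [22.5, 45.0, 67.5, 90.0]
```

Because the player's slider drag and these frames are computed separately, action flow and reaction flow can be chosen independently, as the bullet above suggests.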
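The rotation-limit example given under State can be sketched as a small Python model. The `RotationBudget` class, its color thresholds, and its field names are hypothetical. For compactness it implements all three state types at once: a row of tokens that are deleted, a grid to which copies are added (created), and a meter color that changes (altered). The scenario presents these as alternatives, though “and/or” allows them to be combined.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RotationBudget:
    limit: int = 3
    used: int = 0
    tokens: List[int] = field(default_factory=list)  # 'deleted': row of small cubes
    grid: List[int] = field(default_factory=list)    # 'created': copies placed per rotation
    color: str = "green"                              # 'altered': color of a meter

    def __post_init__(self) -> None:
        self.tokens = list(range(self.limit))  # one token per available rotation

    def rotate(self, angle: int) -> bool:
        """Record one rotation; return False once the limit has been reached."""
        if self.used >= self.limit:
            return False  # the player must restart the puzzle
        self.used += 1
        self.tokens.pop()        # deleted: one fewer rotation remains
        self.grid.append(angle)  # created: a small copy of the new orientation
        remaining = self.limit - self.used
        # altered: the meter's color reflects how many rotations remain
        self.color = "green" if remaining > 1 else ("amber" if remaining == 1 else "red")
        return True
```

Every change here touches representations other than the cube itself, which also makes this a case of propagated spread.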
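The stacked and distributed transitions described above differ in which orientations remain visible after a command such as rotating the cube three times to the right. This is a minimal sketch with a hypothetical helper, `orientations_on_screen`. It assumes that a distributed transition keeps the starting orientation alongside the intermediate ones.

```python
from typing import List


def orientations_on_screen(start: int, step: int, count: int, distributed: bool) -> List[int]:
    """Orientations (in degrees) visible after `count` rotations of `step` degrees.

    Stacked: each new orientation replaces the previous one, so only the last remains.
    Distributed: every orientation, including the start, keeps its own representation."""
    views = [start] + [(start + step * i) % 360 for i in range(1, count + 1)]
    return views if distributed else views[-1:]


print(orientations_on_screen(0, 90, 3, distributed=False))  # [270]
print(orientations_on_screen(0, 90, 3, distributed=True))   # [0, 90, 180, 270]
```

The distributed case creates several new representations, which is why the Spread bullet treats it as propagated.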

5. Summary

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Barab, S.; Thomas, M.; Dodge, T.; Carteaux, R. Making learning fun: Quest Atlantis, a game without guns. Educ. Technol. Res. Dev. 2005, 53, 86–107.

2. Gros, B. Digital games in education. J. Res. Technol. Educ. 2007, 40, 23–38.

3. Ke, F.; Grabowski, B. Gameplaying for maths learning: Cooperative or not? Br. J. Educ. Technol. 2007, 38, 249–259.

4. Linehan, C.; Lawson, S.; Doughty, M.; Kirman, B.; Haferkamp, N.; Krämer, N.C.; Schembri, M.; Nigrelli, M.L. Teaching group decision making skills to emergency managers via digital games. In Media in the Ubiquitous Era: Ambient, Social and Gaming Media; Lugmayr, A., Franssila, H., Naranen, P., Sotamaa, O., Vanhala, J., Eds.; IGI Global: Hershey, PA, USA, 2012; Volume 3, pp. 111–129.

5. Liu, M.; Yuen, T.; Horton, L.; Lee, J.; Toprac, P.; Bogard, T. Designing technology-enriched cognitive tools to support young learners’ problem solving. Int. J. Cogn. Technol. 2013, 18, 14–21.

6. Sedig, K. From play to thoughtful learning: A design strategy to engage children with mathematical representations. J. Comput. Math. Sci. Teach. 2008, 27, 65–101.

7. Sedig, K.; Haworth, R. Interaction design and cognitive gameplay: Role of activation time. In Proceedings of the First ACM SIGCHI Annual Symposium on Computer-Human Interaction in Play (CHI PLAY ’14), Toronto, ON, Canada, 19–22 October 2014; ACM Press: New York, NY, USA, 2014; pp. 247–256.

8. Haworth, R.; Tagh Bostani, S.S.; Sedig, K. Visualizing decision trees in games to support children’s analytic reasoning: Any negative effects on gameplay? Int. J. Comput. Games Technol. 2010, 2010, 1–11.

9. Sedig, K.; Parsons, P. Interaction design for complex cognitive activities with visual representations: A pattern-based approach. AIS Trans. Hum. Comput. Interact. 2013, 5, 84–133.

10. Salen, K.; Zimmerman, E. Rules of Play: Game Design Fundamentals; MIT Press: Cambridge, MA, USA, 2004.

11. Bedwell, W.L.; Pavlas, D.; Heyne, K.; Lazzara, E.H.; Salas, E. Toward a taxonomy linking game attributes to learning: An empirical study. Simul. Gaming 2012, 43, 729–760.

12. Sicart, M. Defining game mechanics. Game Stud. 2008, 8, 1–14.

13. Campbell, T.; Ngo, B.; Fogarty, J. Game design principles in everyday fitness applications. In Proceedings of the ACM 2008 Conference on Computer Supported Cooperative Work (CSCW ’08), San Diego, CA, USA, 8–12 November 2008; ACM Press: New York, NY, USA, 2008; p. 249.

14. Hartson, R. Cognitive, physical, sensory, and functional affordances in interaction design. Behav. Inf. Technol. 2003, 22, 315–338.

15. Kirsh, D. Interaction, external representation and sense making. In Proceedings of the 31st Annual Conference of the Cognitive Science Society, Amsterdam, The Netherlands, 29 July–1 August 2009; pp. 1103–1108.

16. Kiili, K. Digital game-based learning: Towards an experiential gaming model. Internet High. Educ. 2005, 8, 13–24.

17. Annetta, L.A. The “I’s” have it: A framework for serious educational game design. Rev. Gen. Psychol. 2010, 14, 105–112.

18. Sedig, K.; Parsons, P.; Dittmer, M.; Haworth, R. Human-centered interactivity of visualization tools: Micro- and macro-level considerations. In Handbook of Human-Centric Visualization; Huang, W., Ed.; Springer: New York, NY, USA, 2014; pp. 717–743.

19. Sedig, K.; Klawe, M.; Westrom, M. Role of interface manipulation style and scaffolding on cognition and concept learning in learnware. ACM Trans. Comput.-Hum. Interact. 2001, 8, 34–59.

20. Costikyan, G. I have no words & I must design: Toward a critical vocabulary for games. In Computer Games and Digital Cultures Conference Proceedings; Tampere University Press: Tampere, Finland, 2002; pp. 9–33.

21. Crawford, C. The Art of Computer Game Design; Osborne/McGraw-Hill: Berkeley, CA, USA, 1984.

22. Suits, B. The Grasshopper: Games, Life and Utopia, 3rd ed.; Broadview Press: Peterborough, ON, Canada, 2014.

23. Ang, C.S. Rules, gameplay, and narratives in video games. Simul. Gaming 2006, 37, 306–325.

24. Ermi, L.; Mäyrä, F. Fundamental components of the gameplay experience: Analysing immersion. In Proceedings of the 2005 International Conference on Changing Views: Worlds in Play, Vancouver, BC, Canada, 16–20 June 2005; pp. 15–27.

25. Poels, K.; de Kort, Y.; IJsselsteijn, W. “It is always a lot of fun!”: Exploring dimensions of digital game experience using focus group methodology. In Proceedings of the 2007 Conference on Future Play, Toronto, ON, Canada, 14–17 November 2007; pp. 83–89.

26. Callele, D.; Neufeld, E.; Schneider, K. A proposal for cognitive gameplay requirements. In Proceedings of the IEEE 2010 Fifth International Workshop on Requirements Engineering Visualization, Sydney, Australia, 28 September 2010; pp. 43–52.

27. Connolly, T.M.; Boyle, E.A.; Macarthur, E.; Hainey, T.; Boyle, J.M. A systematic literature review of empirical evidence on computer games and serious games. Comput. Educ. 2012, 59, 661–686.

28. Cox, A.; Cairns, P.; Shah, P.; Carroll, M. Not doing but thinking. In Proceedings of the 2012 ACM Annual Conference on Human Factors in Computing Systems (CHI ’12), Austin, TX, USA, 5–10 May 2012; ACM Press: New York, NY, USA, 2012; p. 79.

29. Lee, K.M.; Peng, W. What Do We Know About Social and Psychological Effects of Computer Games? A Comprehensive Review of the Current Literature; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 2006.

30. Spires, H.A. 21st century skills and serious games: Preparing the N generation. In Serious Educational Games: From Theory to Practice; Annetta, L.A., Ed.; Sense Publishers: Rotterdam, The Netherlands, 2008; pp. 13–23.

31. Ritterfeld, U.; Weber, R. Video Games for Entertainment and Education; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 2006.

32. Moreno-Ger, P.; Martínez-Ortiz, I.; Sierra, J.L.; Fernández-Manjón, B. A content-centric development process model. Computer 2008, 41, 24–30.

33. Dondlinger, M.J. Educational video game design: A review of the literature. J. Appl. Educ. Technol. 2007, 4, 21–31.

34. Fisch, S.M. Making educational computer games “educational”. In Proceedings of the 2005 Conference on Interaction Design and Children (IDC ’05), Boulder, CO, USA, 8–10 June 2005; ACM Press: New York, NY, USA, 2005; pp. 56–61.

35. Cox, R.; Brna, P. Supporting the use of external representations in problem solving: The need for flexible learning environments. J. Artif. Intell. Educ. 1995, 6, 239–302.

36. Larkin, J.; Simon, H. Why a diagram is (sometimes) worth ten thousand words. Cogn. Sci. 1987, 11, 65–100.

37. Zhang, J.; Norman, D. Representations in distributed cognitive tasks. Cogn. Sci. 1994, 18, 87–122.

38. Cole, M.; Derry, J. We have met technology and it is us. In Intelligence and Technology: The Impact of Tools on the Nature and Development of Human Abilities; Sternberg, R.J., Preiss, D.D., Eds.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2005.

39. Clark, A.; Chalmers, D. The extended mind. Analysis 1998, 58, 7–19.

40. Hutchins, E. Cognition in the Wild; MIT Press: Cambridge, MA, USA, 1995.

41. Kirsh, D.; Maglio, P. On distinguishing epistemic from pragmatic action. Cogn. Sci. 1994, 18, 513–549.

42. Sedig, K.; Sumner, M. Characterizing interaction with visual mathematical representations. Int. J. Comput. Math. Learn. 2006, 11, 1–55.

43. Adams, E.; Dormans, J. Game Mechanics: Advanced Game Design; New Riders: Berkeley, CA, USA, 2012.

44. McGuire, M.; Jenkins, O.C. Creating Games: Mechanics, Content, and Technology; CRC Press: Boca Raton, FL, USA, 2008.

45. Adams, E. Fundamentals of Game Design, 3rd ed.; Pearson Education: London, UK, 2014.

46. Hunicke, R.; LeBlanc, M.; Zubek, R. MDA: A formal approach to game design and game research. In Proceedings of the AAAI Workshop on Challenges in Game AI, San Jose, CA, USA, 25–26 July 2004.

47. Lundgren, S.; Björk, S. Game mechanics: Describing computer-augmented games in terms of interaction. In Proceedings of the First International Conference on Technologies for Interactive Digital Storytelling and Entertainment (TIDSE 2003), Darmstadt, Hessen, Germany, 24–26 March 2003; Fraunhofer IRB Verlag: Stuttgart, Germany, 2003; pp. 45–56.

48. Rouse, R., III; Ogden, S. Game Design: Theory & Practice; Wordware Publishing: Plano, TX, USA, 2005.

49. Petković, M. Famous Puzzles of Great Mathematicians; American Mathematical Society: Providence, RI, USA, 2009.

50. Sniedovich, M. OR/MS games: 2. Towers of Hanoi. INFORMS Trans. Educ. 2002, 3, 45–62.

51. Svendsen, G.B. The influence of interface style on problem solving. Int. J. Man Mach. Stud. 1991, 35, 379–397.

52. Slocum, J. The Tao of Tangram: History, Problems, Solutions; Barnes & Noble: New York, NY, USA, 2007.

53. Norman, D. The Design of Everyday Things: Revised and Expanded Edition; Basic Books: New York, NY, USA, 2013.

54. Johnson, J. Designing with the Mind in Mind: Simple Guide to Understanding User Interface Design Rules; Morgan Kaufmann: Burlington, MA, USA, 2014.

55. Van Harreveld, F.; Wagenmakers, E.J.; van der Maas, H.L.J. The effects of time pressure on chess skill: An investigation into fast and slow processes underlying expert performance. Psychol. Res. 2007, 71, 591–597.

56. Eich, E. Context, memory, and integrated item/context imagery. J. Exp. Psychol. Learn. Mem. Cogn. 1985, 11, 764.

57. Radvansky, G.A.; Krawietz, S.A.; Tamplin, A.K. Walking through doorways causes forgetting: Further explorations. Q. J. Exp. Psychol. 2011, 64, 1632–1645.

58. Sahakyan, L.; Kelley, C.M. A contextual change account of the directed forgetting effect. J. Exp. Psychol. Learn. Mem. Cogn. 2002, 28, 1064–1072.

59. Ainsworth, S. DeFT: A conceptual framework for considering learning with multiple representations. Learn. Instr. 2006, 16, 183–198.

60. Kirsh, D. Thinking with external representations. AI Soc. 2010, 25, 441–454.

61. Anderson, T.R.; Schönborn, K.J.; du Plessis, L.; Gupthar, A.S.; Hull, T.L. Identifying and Developing Students’ Ability to Reason with Concepts and Representations in Biology. Series: Models and Modeling in Science Education. In Multiple Representations in Biological Education; Treagust, D.F., Tsui, C.Y., Eds.; Springer: Dordrecht, The Netherlands, 2013; Volume 7, pp. 19–38.

62. Scaife, M.; Rogers, Y. External cognition: How do graphical representations work? Int. J. Hum. Comput. Stud. 1996, 45, 185–213.

63. Liang, H.-N.; Parsons, P.; Wu, H.-C.; Sedig, K. An exploratory study of interactivity in visualization tools: “Flow” of interaction. J. Interact. Learn. Res. 2010, 21, 5–45.

64. Tufte, E.R. Visual Explanations: Images and Quantities, Evidence and Narrative, 1st ed.; Graphics Press: Cheshire, CT, USA, 1997.

65. Sedig, K.; Rowhani, S.; Liang, H.-N. Designing interfaces that support formation of cognitive maps of transitional processes: An empirical study. Interact. Comput. 2005, 17, 419–452.

| Action | Reaction |
|---|---|
| agency | activation |
| flow | context |
| focus | flow |
| granularity | spread |
| presence | state |
| timing | transition |

| Category | Element | Concern | Types |
|---|---|---|---|
| action | agency | metaphoric way through which action is expressed | verbal, manual |
| | flow | parsing of action in time | discrete, continuous |
| | focus | focal point of action | direct, indirect |
| | granularity | steps required to compose an action | atomic, composite |
| | presence | existence and advertisement of action | explicit, implicit |
| | timing | time available to player to compose and/or commit action | player-paced, game-paced |
| reaction | activation | commencement of reaction | immediate, delayed, on-demand |
| | context | context in which representations exist once reaction is complete | changed, unchanged |
| | flow | parsing of reaction in time | discrete, continuous |
| | spread | spread of effect that action causes | self-contained, propagated |
| | state | condition of representations once reaction process is complete | created, deleted, altered |
| | transition | presentation of change | stacked, distributed |
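The elements and types in the table above can also be written down as a plain data structure, for example to check that a proposed design names a type for every element. The following is a hypothetical Python encoding for illustration, not part of INFORM itself. Allowing only state to take several types at once is an assumption read from the “created, deleted, and/or altered” wording, and each choice is passed as a set of strings.

```python
from typing import Dict, List, Set, Tuple

# Elements and types transcribed from the table above.
INFORM: Dict[str, Dict[str, Tuple[str, ...]]] = {
    "action": {
        "agency": ("verbal", "manual"),
        "flow": ("discrete", "continuous"),
        "focus": ("direct", "indirect"),
        "granularity": ("atomic", "composite"),
        "presence": ("explicit", "implicit"),
        "timing": ("player-paced", "game-paced"),
    },
    "reaction": {
        "activation": ("immediate", "delayed", "on-demand"),
        "context": ("changed", "unchanged"),
        "flow": ("discrete", "continuous"),
        "spread": ("self-contained", "propagated"),
        "state": ("created", "deleted", "altered"),
        "transition": ("stacked", "distributed"),
    },
}

# Assumption: only state may combine types ("created, deleted, and/or altered").
MULTI_TYPE = {("reaction", "state")}


def validate(design: Dict[str, Dict[str, Set[str]]]) -> List[str]:
    """Return a list of problems; an empty list means every element is covered."""
    problems = []
    for category, elements in INFORM.items():
        for element, types in elements.items():
            chosen = design.get(category, {}).get(element, set())
            if not chosen:
                problems.append(f"{category}.{element}: no type chosen")
                continue
            unknown = set(chosen) - set(types)
            if unknown:
                problems.append(f"{category}.{element}: unknown type(s) {sorted(unknown)}")
            if len(chosen) > 1 and (category, element) not in MULTI_TYPE:
                problems.append(f"{category}.{element}: only one type allowed")
    return problems
```

For instance, the drag-to-rotate cube from Section 4 could be described with manual agency, direct focus, continuous action flow, and stacked transition, and `validate` would return an empty list once all twelve elements are filled in.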

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sedig, K.; Parsons, P.; Haworth, R. Player–Game Interaction and Cognitive Gameplay: A Taxonomic Framework for the Core Mechanic of Videogames. Informatics 2017, 4, 4. https://doi.org/10.3390/informatics4010004
