Effects of Teachers’ Professional Knowledge and Their Use of Three-Dimensional Physical Models in Biology Lessons on Students’ Achievement

Abstract

1. Introduction

1.1. The Nature and Purpose of Models in Science Instruction

1.2. Teachers’ Domain-Specific Professional Knowledge Including Knowledge about Models and Modeling in Science

1.3. Effects of Using Models in Instruction on Students’ Outcome

1.4. Research Questions and Hypotheses

- RQ1: In which way should models be used in biology instruction to achieve higher student achievement in biology?

- RQ2: Which dimensions of professional knowledge do teachers need to use models in a way that increases students’ knowledge in biology?

- RQ3: How can effective model use in biology instruction be described?

2. Materials and Methods

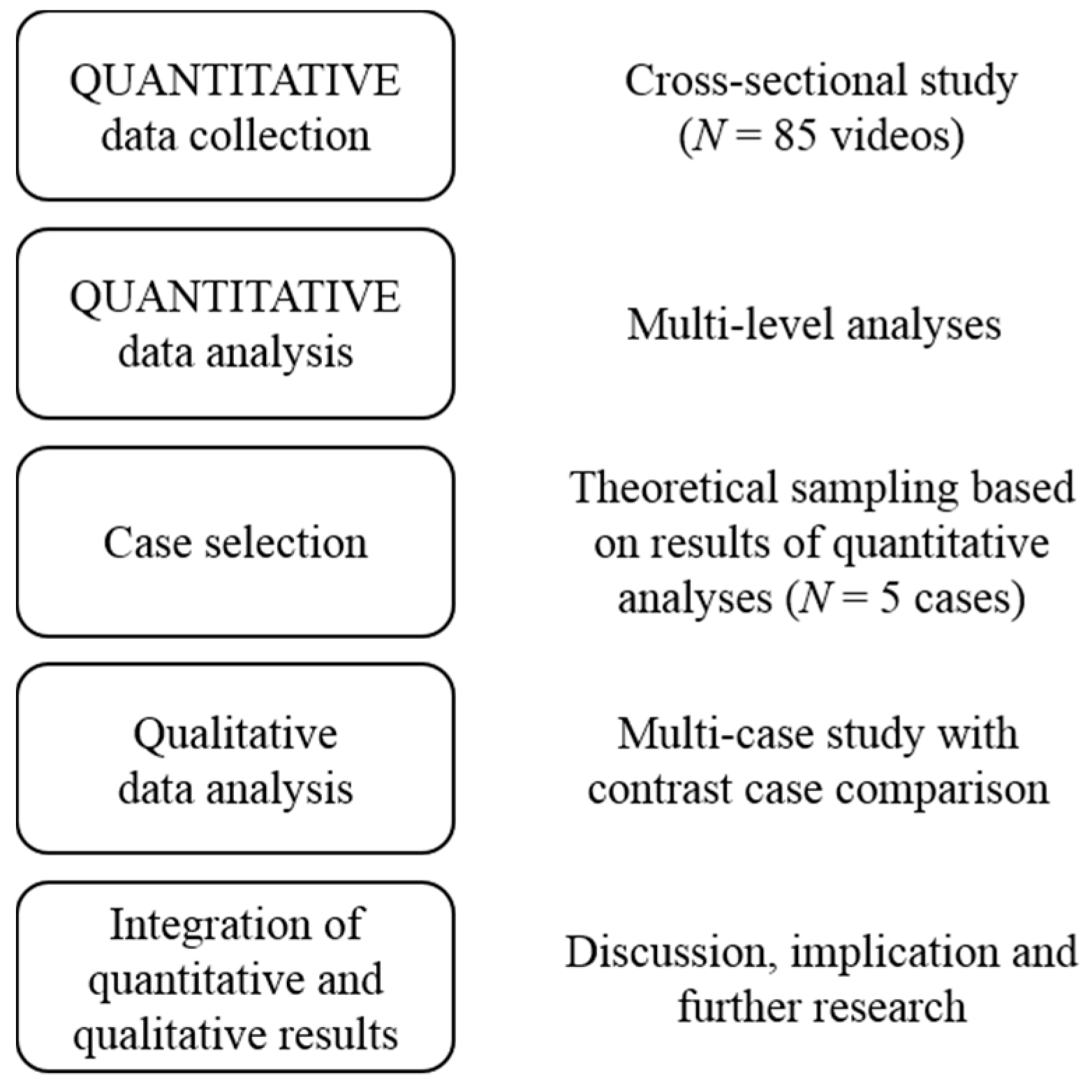

2.1. Design and Sample

2.2. Quantitative Phase

2.2.1. Domain-Specific Knowledge Dimensions PCK and CK

2.2.2. Instructional Quality Feature Elaborate Model Use (ELMO)

2.2.3. Students’ Data

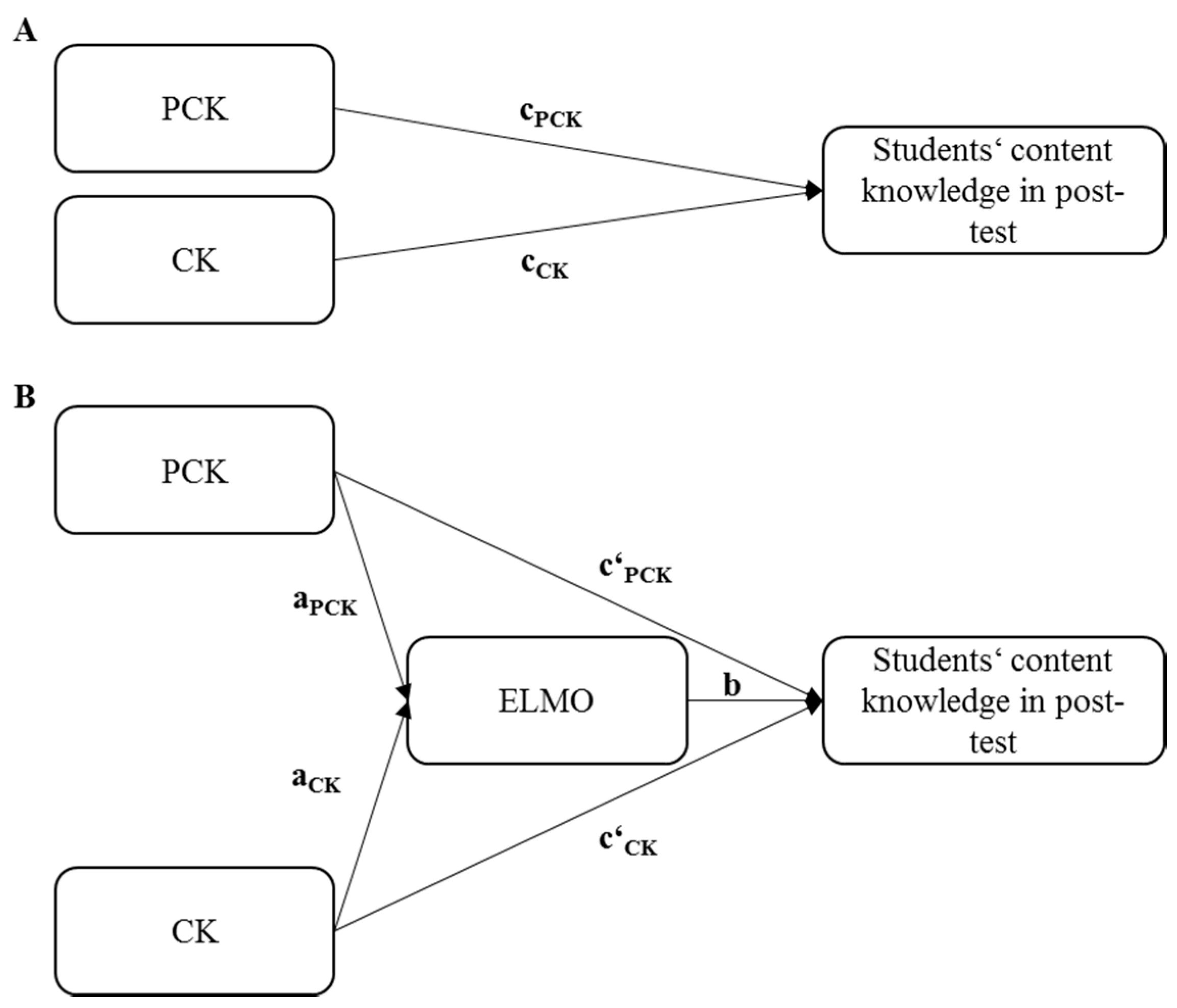

2.2.4. Quantitative Data Analysis: Multilevel Path Analyses

2.3. Qualitative Phase

2.3.1. Qualitative Research Design

2.3.2. Case Selection

2.3.3. Qualitative Data Analysis

3. Results

3.1. Quantitative Results

3.2. Qualitative Results

3.2.1. Teachers

3.2.2. Lessons

3.2.3. Aspect ‘Characteristics of the Model’

3.2.4. Aspect ‘The Way the Model Is Integrated into Instruction’

- Tom:

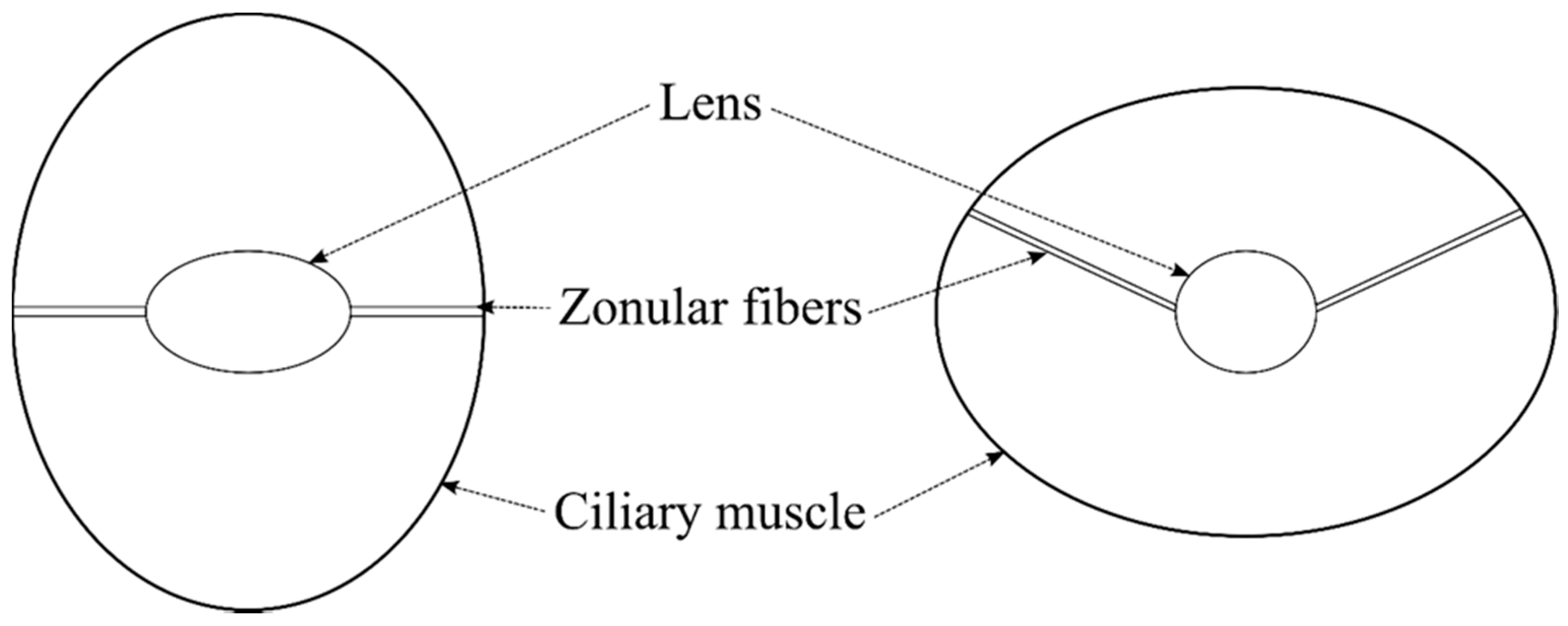

- [Tom takes a model of accommodation (see Figure 4) from the desk.] This is a model, which illustrates the situation. This wire represents the lens. And these red threads represent the zonular fibers. By pulling here, the ciliary muscle can be changed—so this structure on the outside represents the ciliary muscle. […]

- Maria: The spinal cord is hidden in the spine. Yes … I brought something with me. Please, just take a short look at the model. We will need this view later. Yes? […]

- Michael: Good [with regard to the content he previously dealt with]. This is [takes the structural model of the spinal cord] a specific cross section of the spinal cord. Where is the spinal cord located?

- Tom: Okay. The lens takes on a round shape. Now, think about when you are reading, watching television, or playing computer games. Which is more exhausting: playing computer games for one hour or taking a walk and looking at the landscape?

- Tom: [Tom takes the model of accommodation.] In this situation [see left part of Figure 4], the ciliary muscle is large and relaxed; the zonular fibers are stretched tight. What consequences would this situation have for the lens?

- Tom: […] So, which case would this situation [see left part of Figure 4] be?

- S13: Looking into the distance.

- Julian: Okay. This means, when a person has paraplegia, or a biker falls on a specific position of the spinal cord … nerves are cut, in the drastic case, … or compressed, so that the neural impulses are no longer transmitted. Yes, there are several possibilities. This can happen at this point [points at the model]. This can happen at another point [points at another position on the model], and this can also happen at this point [points at a third position on the model]. What consequences would you expect … from injuring the spinal cord at specific positions?

3.2.5. Aspect ‘The Way the Model Is Used to Foster Scientific Reasoning’

- Tom: To get a sharp picture, I change the distance between the objective and the focal plane. This is how it works in our model and in photography. But we can’t change the position of the lens in our eye, which is what we would have to do if we wanted to do it by analogy [Tom points to the optical bench]. […] So what do you think: how does it work in our eye?

- Tom: […] This wire represents the lens. And these red threads represent the zonular fibers. By pulling here, the ciliary muscle can be changed, so this structure on the outside represents the ciliary muscle […]

- Tom: […] Now, we assume the muscle becomes tense; it contracts and closes in around the lens, thereby becoming thicker (we can’t see this in the model). But what can we see?

- Robert: Right, the cornea, which is part of the sclera, is transparent to light. I can remove it here. It would not work this way with a real eye.

- Robert: […] and in here, there are two further structures in the eye. Usually you can’t take them out that easily, but in the model it works.

- Robert: Right, this structure is called the vitreous body, because it is transparent. In reality, it is more jelly-like or gelatinous. It is not as firm as in this model.

- Robert: So, if someone wears glasses, it is like a glass or synthetic lens. In this model, it is also a synthetic lens. In reality (we will see it clearly when we dissect porcine eyes), the lens of our eye is not rigid like the one in a camera, but flexible. If I press the lens, it deforms.

- Robert: In the model, we can see another structure from outside. We can’t see it in our own eyes, but here in the model it can be clearly seen.

- S15: The optic nerve.

- Robert: Right, that is actually the part that leads to the brain.

- Maria: […] Please, just take a short look at the model. We will need this view later. Yes? … The spinal cord looks like this, more like this model.

3.2.6. Further Observations

3.3. Summary of the Results

4. Discussion

4.1. Contributions of the Study

4.1.1. Contribution to the Understanding of Elaborate Model Use in Biology Instruction

- Before working with models in instruction, it is important for teachers to be clear about which content they want to teach and to define an appropriate learning goal. Based on this learning goal, they can select a suitable model (see the first aspect and its three categories in Table 1);

- A clear introduction of the model at the beginning of model use may highlight the model’s importance for learning the content. Teachers can name the model, its components, how it works, and the content that should be worked out using it. In this way, the model appears as an independent teaching tool in instruction (see the second aspect category ‘introduction of the model’ in Table 1);

- When models are used as tools for scientific inquiry, phases of model work involve more cognitively activating instruction and convey a deeper understanding of models and modeling in science. It is therefore important to formulate and refer to scientific research questions or hypotheses and to ask students about the consequences of changes made to the model. This both involves students more strongly in the modeling process and in model work and raises instructional tasks to a higher cognitive level (see the second aspect category ‘purpose of the model’ in Table 1);

- Critical reflection on the model in use is important for supporting the process of scientific inquiry within model work. Teachers can reflect on several aspects: structural and material differences between the model and the real object, locations in the real object, adjoining structures that are not shown in the model, and what can be done with the model compared with the real object (see the third aspect category ‘critical reflection’ in Table 1).

4.1.2. Contribution to the Analyses of Effects of Knowledge Dimensions on Instructional Quality Features in Biology

4.1.3. Contribution to Fostering Students’ Achievement

4.1.4. Contribution to the Research Field

4.2. Limitations

4.2.1. Selection of Our Quantitative Sample

4.2.2. Model Use in the Videotaped Lessons

4.2.3. Teachers’ Professional Competence

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Department for Education and Skills & Qualification and Curriculum Authority [DfEaS&Q]. Science. The National Curriculum for England; HMSO: London, UK, 2004.

- Conference of the Ministers of Education [KMK]. Beschlüsse der Kultusministerkonferenz. Bildungsstandards im Fach Biologie für den Mittleren Schulabschluss (Jahrgangsstufe 10) [Resolution of the Standing Conference of the Ministers of Education and Cultural Affairs of the Länder in the Federal Republic of Germany Education Standards for the subject biology (Grade 10)]; Luchterhand: München, Germany, 2005; Available online: https://www.kmk.org/fileadmin/Dateien/veroeffentlichungen_beschluesse/2004/2004_10_15-Bildungsstandards-Deutsch-Primar.pdf (accessed on 29 May 2018).

- National Research Council. A Framework for K-12 Science Education. Practices, Crosscutting Concepts, and Core Ideas; The National Academies Press: Washington, DC, USA, 2012; ISBN 978-0-309-21742-2.

- Kampa, N.; Köller, O. German national proficiency scales in Germany: Internal structure, relations to general cognitive abilities and verbal skills. Sci. Educ. 2016, 100, 903–922.

- Abd-El-Khalick, F.; BouJaoude, S.; Duschl, R.; Lederman, N.G.; Mamlok-Naaman, R.; Hofstein, A.; Niaz, M.; Treagust, D.; Tuan, H.-L. Inquiry in science education: International perspectives. Sci. Educ. 2004, 88, 397–419.

- Kremer, K.; Fischer, H.E.; Kauertz, A.; Mayer, J.; Sumfleth, E.; Walpuski, M. Assessment of standards-based learning outcomes in science education: Perspectives from the German project ESNaS. In Making it Tangible: Learning Outcomes in Science Education; Bernholt, S., Neumann, K., Nentwig, P., Eds.; Waxmann: Münster, Germany, 2012; pp. 201–218. ISBN 978-3830926448.

- Mayer, J. Erkenntnisgewinnung als wissenschaftliches Problemlösen [Inquiry as scientific problem solving]. In Theorien in der biologiedidaktischen Forschung [Theories in biology education research]; Krüger, D., Vogt, H., Eds.; Springer: Berlin, Germany, 2007; pp. 177–186. ISBN 978-3540681656.

- Lederman, N.G.; Abd-El-Khalick, F.; Bell, R.L.; Schwartz, R.S. Views of nature of science questionnaire: Toward valid and meaningful assessment of learners’ conceptions of nature of science. JRST 2002, 39, 497–521.

- Upmeier zu Belzen, A.; Krüger, D. Modellkompetenz im Biologieunterricht [Model competence in biology education]. ZfDN 2010, 16, 41–57. Available online: http://archiv.ipn.uni-kiel.de/zfdn/pdf/16_Upmeier.pdf (accessed on 28 May 2018).

- Matthews, M.R. Models in science and in science education: An introduction. Sci. Educ. 2007, 16, 647–652.

- Shen, J.; Confrey, J. From conceptual change to transformative modeling: A case study of an elementary teacher in learning astronomy. Sci. Educ. 2007, 91, 948–966.

- Gilbert, J.K.; Boulter, C.J. Developing Models in Science Education; Kluwer Academic Publishers: Dordrecht, The Netherlands; Boston, MA, USA, 2000; ISBN 978-94-010-0876-1.

- Upmeier zu Belzen, A. Unterrichten mit Modellen [Teaching with models]. In Fachdidaktik Biologie [Biology education]; Gropengießer, H., Harms, U., Kattmann, U., Eds.; Aulis Verlag: Hallbergmoos, Germany, 2013; pp. 325–334. ISBN 978-3761428689.

- Oh, P.S.; Oh, S.J. What Teachers of Science Need to Know about Models: An overview. Int. J. Sci. Educ. 2011, 33, 1109–1130.

- Grosslight, L.; Unger, C.; Jay, E. Understanding Models and their Use in Science: Conceptions of Middle and High School Students and Experts. JRST 1991, 28, 799–822.

- Justi, R.S.; Gilbert, J.K. Modelling, teachers’ views on the nature of modelling, and implications for the education of modellers. Int. J. Sci. Educ. 2002, 24, 369–387.

- Barak, M.; Hussein-Farraj, R. Integrating model-based learning and animations for enhancing students’ understanding of proteins structure and function. Res. Sci. Educ. 2013, 43, 619–636.

- Lazarowitz, R.; Naim, R. Learning the cell structures with three-dimensional models: Students’ achievement by methods, Type of School and Questions’ Cognitive Level. J. Sci. Educ. Technol. 2013, 22, 500–508.

- Roberts, J.R.; Hagedorn, E.; Dillenburg, P.; Patrick, M.; Herman, T. Physical Models enhance molecular three-dimensional literacy in an introductory biochemistry course. Biochem. Mol. Biol. Educ. 2005, 33, 105–110.

- Rotbain, Y.; Marbach-Ad, G.; Stavy, R. Effect of bead and illustrations models on high school students’ achievement in molecular genetics. JRST 2006, 43, 500–529.

- Creswell, J.W. Educational Research. Planning, Conducting, and Evaluating Quantitative and Qualitative Research; Pearson: Boston, MA, USA, 2012; ISBN 978-0-13-136739-5.

- Hodson, D. In search of a meaningful relationship: An exploration of some issues relating to integration in science and science education. Int. J. Sci. Educ. 1992, 14, 541–562.

- Harrison, A.G.; Treagust, D.F. Secondary students’ mental models of atoms and molecules: Implications for teaching chemistry. Sci. Educ. 1996, 80, 509–534.

- Tepner, O.; Borowski, A.; Dollny, S.; Fischer, H.E.; Jüttner, M.; Kirschner, S.; Leutner, D.; Neuhaus, B.J.; Sandmann, A.; Sumfleth, E.; et al. Modell zur Entwicklung von Testitems zur Erfassung des Professionswissens von Lehrkräften in den Naturwissenschaften [Item development model for assessing professional knowledge of science teachers]. ZfDN 2012, 18, 7–28.

- Van Driel, J.H.; Verloop, N. Teachers’ knowledge of models and modelling in science. Int. J. Sci. Educ. 1999, 21, 1141–1153.

- Gilbert, J.K.; Boulter, C.J.; Elmer, R. Positioning models in science education and in design and technology education. In Developing Models in Science Education; Gilbert, J.K., Boulter, C.J., Eds.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2000; pp. 3–17.

- Harrison, A.G. How do teachers and textbook writers model scientific ideas for students? Res. Sci. Educ. 2001, 31, 401–435.

- Fleige, J.; Seegers, A.; Upmeier zu Belzen, A.; Krüger, D. Förderung von Modellkompetenz im Biologieunterricht [Fostering model competence in biology education]. MNU 2012, 65, 19–28.

- Nowak, K.H.; Nehring, A.; Tiemann, R.; Upmeier zu Belzen, A. Assessing students’ abilities in processes of scientific inquiry in biology using a paper-and-pencil test. J. Biol. Educ. 2013, 47, 182–188.

- Odenbaugh, J. Idealized, inaccurate but successful: A pragmatic approach to evaluating models in theoretical ecology. Biol. Philos. 2005, 20, 231–255.

- Passmore, C.M.; Svoboda, J. Exploring opportunities for argumentation in modelling classrooms. Int. J. Sci. Educ. 2012, 34, 1535–1554.

- Schwarz, C.V.; Reiser, B.J.; Davis, E.A.; Kenyon, L.; Achér, A.; Fortus, D.; Shwartz, Y.; Hug, B.; Krajcik, J. Developing a learning progression for scientific modeling: Making scientific modeling accessible and meaningful for learners. JRST 2009, 46, 632–654.

- Treagust, D.F.; Chittleborough, G.D.; Mamiala, T.L. Students’ understanding of the role of scientific models in learning science. Int. J. Sci. Educ. 2002, 24, 357–368.

- Clark, D.C.; Mathis, P.M. Modeling mitosis & meiosis. A problem solving activity. Am. Biol. Teach. 2000, 62, 204–206.

- Schwarz, C.V.; White, B.Y. Metamodeling knowledge: Developing students’ understanding of scientific modeling. Cognit. Instr. 2005, 23, 165–205.

- Crawford, B.A.; Cullin, M.J. Supporting prospective teachers’ conceptions of modelling in science. Int. J. Sci. Educ. 2004, 26, 1379–1401.

- Smit, J.J.A.; Finegold, M. Models in physics: Perceptions held by final-year prospective physical science teachers studying at South African universities. Int. J. Sci. Educ. 1995, 17, 621–634.

- Van Driel, J.H.; Verloop, N. Experienced teachers’ knowledge of teaching and learning of models and modelling in science education. Int. J. Sci. Educ. 2002, 24, 1255–1272.

- Gogolin, S.; Krüger, D. Students’ understanding of the nature and purpose of models. JRST 2018, 1–26.

- Park, S.; Oliver, J.S. Revisiting the conceptualisation of pedagogical content knowledge (PCK): PCK as a conceptual tool to understand teachers as professionals. Res. Sci. Educ. 2008, 38, 261–284.

- Shulman, L.S. Those who understand: Knowledge growth in teaching. ER 1986, 15, 4–14.

- Van Driel, J.H.; Verloop, N.; de Vos, W. Developing science teachers’ pedagogical content knowledge. JRST 1998, 35, 673–695.

- Depaepe, F.; Verschaffel, L.; Kelchtermans, G. Pedagogical content knowledge: A systematic review of the way in which the concept has pervaded mathematics educational research. Teach. Teach. Educ. 2013, 34, 12–25.

- Shulman, L.S. Knowledge and teaching: Foundations of the new reform. Harv. Educ. Rev. 1987, 57, 1–22.

- Davis, E.; Kenyon, L.; Hug, B.; Nelson, M.; Beyer, C.; Schwarz, C.; Reiser, B. MoDeLS: Designing supports for teachers using scientific modeling. In Proceedings of the Association for Science Teacher Education, St. Louis, MO, USA, 10 January 2008.

- Schwarz, C. A learning progression of elementary teachers’ knowledge and practices for model-based scientific inquiry. In Proceedings of the American Educational Research Association, San Diego, CA, USA, 13–17 April 2009.

- Werner, S.; Förtsch, C.; Boone, W.; von Kotzebue, L.; Neuhaus, B.J. Investigating how German biology teachers use models in classroom instruction: A video study. Res. Sci. Educ. 2017.

- Henze, I.; van Driel, J.H.; Verloop, N. Science teachers’ knowledge about teaching models and modelling in the context of a new syllabus on public understanding of science. Res. Sci. Educ. 2007, 37, 99–122.

- Justi, R.; van Driel, J.H. A case study of the development of a beginning chemistry teacher’s knowledge about models and modelling. Res. Sci. Educ. 2005, 35, 197–219.

- Henze, I.; van Driel, J.H.; Verloop, N. Development of experienced science teachers’ pedagogical content knowledge of models of the solar system and the universe. Int. J. Sci. Educ. 2008, 30, 1321–1342.

- Danusso, L.; Testa, I.; Vicentini, M. Improving prospective teachers’ knowledge about scientific models and modelling: Design and evaluation of a teacher education intervention. Int. J. Sci. Educ. 2010, 32, 871–905.

- Justi, R.; van Driel, J.H. The development of science teachers’ knowledge on models and modelling: Promoting, characterizing, and understanding the process. Int. J. Sci. Educ. 2005, 27, 549–573.

- Justi, R.; van Driel, J. The use of the Interconnected Model of Teacher Professional Growth for understanding the development of science teachers’ knowledge on models and modelling. Teach. Teach. Educ. 2006, 22, 437–450.

- Soulios, I.; Psillos, D. Enhancing student teachers’ epistemological beliefs about models and conceptual understanding through a model-based inquiry process. Int. J. Sci. Educ. 2016, 38, 1212–1233.

- Windschitl, M.; Thompson, J.; Braaten, M. How Novice Science Teachers Appropriate Epistemic Discourses Around Model-Based Inquiry for Use in Classrooms. Cognit. Instr. 2008, 26, 310–378.

- Dori, Y.J.; Barak, M. Virtual and Physical Molecular Modeling: Fostering Model Perception and Spatial Understanding. Educ. Technol. Soc. 2001, 4, 61–74.

- Werner, S. Zusammenhänge zwischen dem fachspezifischen Professionswissen einer Lehrkraft, dessen Unterrichtsgestaltung und Schülervariablen am Beispiel eines elaborierten Modelleinsatzes [Relationships between a teacher’s subject-specific professional knowledge, their instructional design and student variables, using the example of elaborate model use]. Ph.D. Thesis, Ludwig-Maximilians-Universität München, München, Germany, 2 November 2016.

- Helmke, A. Unterrichtsqualität und Lehrerprofessionalität. Diagnose, Evaluation und Verbesserung des Unterrichts [Instructional Quality and Teachers’ Professionalism: Diagnosis, Evaluation and Improvement of Instruction]; Klett: Seelze-Velber, Germany, 2015; ISBN 978-3780010094.

- Schmelzing, S.; Wüsten, S.; Sandmann, A.; Neuhaus, B. Fachdidaktisches Wissen und Reflektieren im Querschnitt der Biologielehrerbildung [Pedagogical content knowledge and reflection in a cross-section of biology teacher education]. ZfDN 2010, 16, 189–207.

- Ergönenc, J.; Neumann, K.; Fischer, H.E. The impact of pedagogical content knowledge on cognitive activation and students’ learning. In Quality of Instruction in Physics: Comparing Finland, Germany and Switzerland; Fischer, H.E., Labudde, P., Neumann, K., Viiri, J., Eds.; Waxmann: Münster, Germany, 2014; pp. 145–160. ISBN 978-3-8309-3055-6.

- Kunter, M.; Klusmann, U.; Baumert, J.; Richter, D.; Voss, T.; Hachfeld, A. Professional competence of teachers: Effects on instructional quality and student development. J. Educ. Psychol. 2013, 105, 805–820.

- Förtsch, C.; Werner, S.; von Kotzebue, L.; Neuhaus, B.J. Effects of biology teachers’ professional knowledge and cognitive activation on students’ achievement. Int. J. Sci. Educ. 2016, 38, 2642–2666.

- Bayerisches Staatsministerium für Unterricht und Kultus [BSfUK]. Lehrplan für das Gymnasium in Bayern [Curriculum for Secondary School in Bavaria]; Kastner: Wolnzach, Germany, 2004; ISBN 978-3937082202.

- Hanson, W.E.; Creswell, J.W.; Clark, V.L.P.; Petska, K.S.; Creswell, J.D. Mixed methods research designs in counseling psychology. J. Counsel. Psychol. 2005, 52, 224–235.

- Jüttner, M.; Boone, W.; Park, S.; Neuhaus, B.J. Development and use of a test instrument to measure biology teachers’ content knowledge (CK) and pedagogical content knowledge (PCK). EAEA 2013, 25, 45–67.

- Jüttner, M.; Neuhaus, B.J. Das Professionswissen von Biologielehrkräften. Ein Vergleich zwischen Biologielehrkräften, Biologen und Pädagogen [Biology teachers’ professional knowledge. A comparison of biology teachers, biologists and pedagogues]. ZfDN 2013, 19, 31–49.

- Jüttner, M.; Neuhaus, B.J. Validation of a paper-and-pencil test instrument measuring biology teachers’ pedagogical content knowledge by using think-aloud interviews. JETS 2013, 1, 113–125.

- Bond, T.G.; Fox, C.M. Applying the Rasch Model. Fundamental Measurement in the Human Sciences, 2nd ed.; Lawrence Erlbaum Associates Publishers: Mahwah, NJ, USA, 2007; ISBN 978-0805854626.

- Linacre, J.M. A User’s Guide to Winsteps/Ministep: Rasch-Model Computer Programs. Available online: http://www.winsteps.com/manuals.htm (accessed on 28 May 2018).

- Boone, W.J.; Staver, J.R.; Yale, M.S. Rasch Analysis in the Human Sciences; Springer: Dordrecht, The Netherlands, 2014; ISBN 978-94-007-6857-4.

- Grünkorn, J.; Upmeier zu Belzen, A.; Krüger, D. Assessing students’ understandings of biological models and their use in science to evaluate a theoretical framework. Int. J. Sci. Educ. 2014, 36, 1–34.

- Justi, R.; Gilbert, J.K. Teachers’ views on the nature of models. Int. J. Sci. Educ. 2003, 25, 1369–1386.

- Kauertz, A.; Fischer, H.E.; Mayer, J.; Sumfleth, E.; Walpuski, M. Standardbezogene Kompetenzmodellierung in den Naturwissenschaften der Sekundarstufe I [Modeling competence according to standards for science education in secondary schools]. ZfDN 2010, 16, 135–153.

- Wadouh, J.; Liu, N.; Sandmann, A.; Neuhaus, B.J. The effect of knowledge linking levels in biology lessons upon students’ knowledge structure. Int. J. Sci. Math. Educ. 2014, 12, 25–47.

- Wüsten, S. Allgemeine und fachspezifische Merkmale der Unterrichtsqualität im Fach Biologie. Eine Video- und Interventionsstudie [General and Content-Specific Features of Instructional Quality in the Subject Biology: A Video and Intervention Study]; Logos: Berlin, Germany, 2010; ISBN 978-3-8325-2668-9.

- Baek, H.; Schwarz, C.; Chen, J.; Hokayem, H.; Zhan, L. Engaging elementary students in scientific modeling: The MoDeLS fifth-grade approach and findings. In Models and Modeling: Cognitive Tools for Scientific Enquiry; Khine, M.S., Saleh, I.M., Eds.; Springer: Dordrecht, The Netherlands, 2011; pp. 195–220. ISBN 978-94-007-0449-7.

- Rimmele, R. Videograph 4.2.1.22.X3 [Computer Software]. 2012. Available online: www.dervideograph.de (accessed on 6 August 2018).

- Krathwohl, D.R. A revision of Bloom’s taxonomy: An overview. Theory Pract. 2002, 41, 212–218.

- Chi, M.T.H.; Wylie, R. The ICAP framework: Linking cognitive engagement to active learning outcomes. Educ. Psychol. 2014, 49, 219–243.

- Tekkumru-Kisa, M.; Stein, M.K.; Schunn, C. A framework for analyzing cognitive demand and content-practices integration: Task analysis guide in science. JRST 2015, 52, 659–685.

- Stein, M.K.; Smith, M.S.; Henningsen, M.A.; Silver, E.A. Implementing Standards-Based Mathematics Instruction. A Casebook for Professional Development; Teachers College, Columbia University: New York, NY, USA, 2009; ISBN 978-0807739075.

- Wild, E.; Gerber, J.; Exeler, J.; Remy, K. Dokumentation der Skalen- und Item-Auswahl für den Kinderfragebogen zur Lernmotivation und zum emotionalen Erleben [Documentation of the Scales and Items of the Questionnaire on Motivation and Emotional Experience]; Universität Bielefeld: Bielefeld, Germany, 2001.

- Muthén, L.K.; Muthén, B.O. Mplus User’s Guide, 7th ed.; Muthén & Muthén: Los Angeles, CA, USA, 2012; Available online: https://www.statmodel.com/download/usersguide/Mplus%20user%20guide%20Ver_7_r3_web.pdf (accessed on 28 May 2018).

- Hu, L.-T.; Bentler, P.M. Fit indices in covariance structure modeling: Sensitivity to underparameterized model misspecification. Psychol. Methods 1998, 3, 424–453.

- Baron, R.M.; Kenny, D.A. The moderator-mediator variable distinction in social psychological research: Conceptual, strategic, and statistical considerations. J. Pers. Soc. Psychol. 1986, 51, 1173–1182.

- MacKinnon, D.P. Introduction to Statistical Mediation Analysis; Lawrence Erlbaum Associates: New York, NY, USA, 2008; ISBN 978-0805864298.

- Preacher, K.J.; Hayes, A.F. Asymptotic and resampling strategies for assessing and comparing indirect effects in multiple mediator models. Behav. Res. Methods 2008, 40, 879–891.

- Cohen, L.; Manion, L.; Morrison, K. Research Methods in Education; Routledge: London, UK, 2011; ISBN 978-0415583367.

- Stake, R.E. The Art of Case Study Research; SAGE Publications: Thousand Oaks, CA, USA, 1995; ISBN 978-0803957671.

- Yin, R.K. Case Study Research. Design and Methods, 5th ed.; SAGE: Los Angeles, CA, USA, 2014; ISBN 978-1452242569.

- Creswell, J.W. Research Design. Qualitative, Quantitative, and Mixed Methods Approaches; SAGE: Los Angeles, CA, USA, 2009; ISBN 978-1412965576.

- Glaser, B.G.; Strauss, A.L. Grounded Theory. Strategien qualitativer Forschung [Strategies of qualitative research], 2nd ed.; Huber: Bern, Switzerland, 2005; ISBN 3456842120.

- Charmaz, K. Constructing Grounded Theory, 2nd ed.; SAGE: London, UK; Thousand Oaks, CA, USA, 2014; ISBN 9780857029133.

- Kunter, M.; Baumert, J.; Blum, W.; Klusmann, U.; Krauss, S.; Neubrand, M. Cognitive Activation in the Mathematics Classroom and Professional Competence of Teachers. Results from the COACTIV Project; Springer: New York, NY, USA, 2013; ISBN 978-1-4614-5149-5.

- Bauer, J.; Diercks, U.; Retelsdorf, J.; Kauper, T.; Zimmermann, F.; Köller, O.; Möller, J.; Prenzel, M. Spannungsfeld Polyvalenz in der Lehrerbildung [Polyvalence of teacher training programs]. Zeitschrift für Erziehungswissenschaft 2011, 14, 629–649.

- Ball, D.L.; Thames, M.H.; Phelps, G. Content knowledge for teaching: What makes it special? J. Teach. Educ. 2008, 59, 389–407.

- Gess-Newsome, J. Pedagogical Content Knowledge. In International Guide to Student Achievement; Hattie, J., Anderman, E.M., Eds.; Routledge: New York, NY, USA, 2013; pp. 257–259. ISBN 978-0415879019.

- Lederman, N.G.; Lederman, J.S. The status of preservice science teacher education: A global perspective. J. Sci. Teach. Educ. 2015, 26, 1–6.

- Großschedl, J.; Mahler, D.; Kleickmann, T.; Harms, U. Content-related knowledge of biology teachers from secondary schools: Structure and learning opportunities. Int. J. Sci. Educ. 2014, 36, 2335–2366.

- Sczudlek, M.; Borowski, A.; Fischer, H.E.; Kirschner, S.; Lenske, G.; Leutner, D.; Sumfleth, E.; Tepner, O.; Wirth, J.; Neuhaus, B.J. Secondary science teachers’ PCK, CK and PK: Their interplay. 2018; manuscript in preparation.

- Förtsch, C.; Werner, S.; Dorfner, T.; von Kotzebue, L.; Neuhaus, B.J. Effects of cognitive activation in biology lessons on students’ situational interest and achievement. Res. Sci. Educ. 2017, 47, 559–578.

- Craik, F.I.M.; Lockhart, R.S. Levels of processing: A framework for memory research. J. Verbal Learn. Verbal Behav. 1972, 11, 671–684.

- Förtsch, C.; Werner, S.; von Kotzebue, L.; Neuhaus, B.J. Effects of high-complexity and high-cognitive level instructional tasks in biology lessons on students’ factual and conceptual knowledge. Res. Sci. Technol. Educ. 2017, 36, 1–22.

- Lipowsky, F.; Rakoczy, K.; Pauli, C.; Drollinger-Vetter, B.; Klieme, E.; Reusser, K. Quality of geometry instruction and its short-term impact on students’ understanding of the Pythagorean Theorem. Learn. Instr. 2009, 19, 527–537.

- Krell, M.; Upmeier zu Belzen, A.; Krüger, D. Students’ levels of understanding models and modelling in biology: Global or aspect-dependent? Res. Sci. Educ. 2014, 44, 109–132.

- Sins, P.H.M.; Savelsbergh, E.R.; van Joolingen, W.R.; van Hout-Wolters, B.H.A.M. The relation between students’ epistemological understanding of computer models and their cognitive processing on a modelling task. Int. J. Sci. Educ. 2009, 31, 1205–1229.

- Sins, P.H.M.; Savelsbergh, E.R.; van Joolingen, W.R.; van Hout-Wolters, B. Effects of face-to-face versus chat communication on performance in a collaborative inquiry modeling task. Comput. Educ. 2011, 56, 379–387.

- Fischer, H.E.; Labudde, P.; Neumann, K.; Viiri, J. Quality of Instruction in Physics: Comparing Finland, Germany and Switzerland; Waxmann: Münster, Germany, 2014; ISBN 978-3-8309-3055-6.

- Roth, K.J.; Garnier, H.E.; Chen, C.; Lemmens, M.; Schwille, K.; Wickler, N.I.Z. Videobased lesson analysis: Effective science PD for teacher and student learning. JRST 2011, 48, 117–148.

- Vosniadou, S. Capturing and modeling the process of conceptual change. Learn. Instr. 1994, 4, 45–69.

| Aspect of Elaborate Model Use | Category in Category System | Gradation of the Category |
|---|---|---|
| Characteristics of the model | Level of abstraction | Low, middle, or high abstraction compared with real objects |
| | Level of complexity | Showing facts, relations, or concepts |
| | Fitting to the learning goal | No fitting or fitting of level of abstraction and level of complexity to the learning goal |
| The way the model is integrated into instruction | Purpose of the model | Illustration or tool for scientific reasoning |
| | Introduction of the model | No, short, or detailed introduction of the model |
| | Students working with the model | Teacher vs. students (with the teacher) working with the model |
| The way the model is used to foster scientific reasoning | Predict scientific phenomena (scientific inquiry) | No formulation vs. formulation of scientific research questions or hypotheses |
| | Revise models (scientific inquiry) | No regard or regard to scientific research questions or hypotheses |
| | Critical reflection | No critical reflection, incidental critical reflection naming one aspect, incidental critical reflection naming more than one fact, detailed critical reflection naming one fact, or detailed critical reflection naming more than one fact |

| Dimension | Example of an Item | Sample Solution | Literature |
|---|---|---|---|
| Factual knowledge | Note a mnemonic sentence that describes all the important characteristics of a reflex. | A reflex is a congenital protective function that is not processed in the cerebrum, follows a fixed scheme, and can rarely be influenced deliberately. | Factual knowledge tasks include questions on specific definitions, terminology, or details in biology [79] |
| Conceptual knowledge | Explain how the human nervous system is adapted to humans’ way of living. Give two examples. | e.g., centralization of the human nervous system, allowing more complex movements. | Conceptual tasks were characterized by formulating relations between single facts [79]; core ideas of the NES in Germany [2] |
| Scientific reasoning | In the figure below, you can see an experiment about hearing. Please formulate one hypothesis about what will be tested in this experiment. | This experiment tests whether and under which preconditions a human has directional hearing. | Generating hypotheses as part of the dimension “scientific inquiry” of the NES [2,7] |

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|
| Class level | | | | | | | | |
| Teacher variable | | | | | | | | |
| 1. Pedagogical content knowledge (PCK) ᵃ | −0.34 | 0.52 | - | | | | | |
| 2. Content knowledge (CK) ᵃ | 0.93 | 0.47 | 0.14 | - | | | | |
| Instructional variable | | | | | | | | |
| 3. ELMO ᵃ | 1.12 | 1.27 | 0.35 * | −0.04 | - | | | |
| Student level | | | | | | | | |
| 4. Student achievement in posttest ᵃ | 0.04 | 0.48 | 0.06 | −0.09 ** | 0.13 *** | - | | |
| 5. Student achievement in pretest ᵃ | −0.44 | 0.61 | −0.12 *** | −0.05 | −0.17 *** | 0.32 *** | - | |
| 6. Willingness to make an effort ᵇ | 3.4 | 0.57 | 0.04 | −0.02 | 0.03 | 0.24 *** | 0.11 ** | - |

| Model ᵃ | Predictor | Achievement in Posttest | |
|---|---|---|---|
| | | β | SE |
| 1a | PCK | 0.20 | 0.17 |
| 1b | CK | −0.20 | 0.17 |
| 1c | PCK | 0.23 | 0.17 |
| | CK | −0.23 | 0.17 |

| | Mediator Variable | | Dependent Variable | |
|---|---|---|---|---|
| | ELMO | | Achievement in Posttest | |
| | β | SE | β | SE |
| Class level | | | | |
| Teacher variables | | | | |
| PCK | 0.36 * | 0.15 | 0.10 | 0.18 |
| CK | −0.09 | 0.16 | −0.19 | 0.16 |
| Instructional variable | | | | |
| ELMO | | | 0.38 * | 0.17 |
| R² | 0.13 | | 0.17 | |
| Student level | | | | |
| Achievement in pretest | | | 0.38 *** | 0.03 |
| Willingness to make an effort | | | 0.19 *** | 0.03 |
| R² | | | 0.19 | |
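The class-level coefficients in the mediation table above can be combined with the product-of-coefficients rule (indirect effect = a × b) to see how much of the PCK effect may run through ELMO. The following Python sketch is a hypothetical illustration, not the authors’ analysis: it assumes the standardized β values can simply be multiplied, takes the ELMO → posttest path (0.38) as b, and compares the implied total effects with Model 1c, which it assumes is the corresponding model without ELMO as a mediator. The function name `decompose` is invented for this example, and the sketch gives point estimates only; it does not test whether the indirect effect is significant.

```python
"""Hypothetical illustration: split the class-level effects of PCK and CK into
an indirect part (via ELMO) and an implied total effect, using the
product-of-coefficients rule. Not the authors' multilevel estimation."""


def decompose(a: float, b: float, direct: float) -> tuple[float, float]:
    """Return (indirect, total) effects for a single-mediator path model.

    a      -- path from predictor to mediator (e.g., PCK -> ELMO)
    b      -- path from mediator to outcome (ELMO -> achievement in posttest)
    direct -- remaining direct path from predictor to outcome
    """
    indirect = a * b
    return indirect, direct + indirect


B_ELMO = 0.38  # ELMO -> achievement in posttest (class level)

# Coefficients copied from the tables; "model_1c" is the assumed comparison model.
paths = {
    "PCK": {"a": 0.36, "direct": 0.10, "model_1c": 0.23},
    "CK": {"a": -0.09, "direct": -0.19, "model_1c": -0.23},
}

for name, p in paths.items():
    indirect, total = decompose(p["a"], B_ELMO, p["direct"])
    print(f"{name}: indirect = {indirect:+.3f}, implied total = {total:+.3f}, "
          f"Model 1c = {p['model_1c']:+.2f}")
```

Run as written, it reports an indirect PCK effect of about 0.14 and implied totals of about 0.24 (PCK) and −0.22 (CK), close to the Model 1c coefficients of 0.23 and −0.23. Small gaps are expected from rounding, and in a multilevel model the decomposition need not hold exactly.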

| | Tom | Robert | Julian | Michael | Maria |
|---|---|---|---|---|---|
| Age (years) | 40 | 37 | 29 | 28 | 29 |
| Teaching experience in years | 9 | 5 | 3 | 2 | 1 |
| Taught subject besides biology | chemistry | chemistry | chemistry | chemistry | chemistry |
| Evaluation of their teacher preparation in biology education | sufficient (D) * | sufficient (D) | satisfactory (C) | good (B) | good (B) |
| Evaluation of their teacher preparation in content (biology) | sufficient (D) | very good (A) | sufficient (D) | good (B) | good (B) |
| Number of lessons per week teaching biology | 6 | 10 | 15 | 6 | 15 |
| Topic of their selected lessons | accommodation in the human eye | structure of the human eye | structure and function of the human spine and spinal cord in the context of paraplegia | reflex | reflex |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Förtsch, S.; Förtsch, C.; Von Kotzebue, L.; Neuhaus, B.J. Effects of Teachers’ Professional Knowledge and Their Use of Three-Dimensional Physical Models in Biology Lessons on Students’ Achievement. Educ. Sci. 2018, 8, 118. https://doi.org/10.3390/educsci8030118
