First, the institutional data for the universities is provided (ranking position, student population and research expenditures), describing the main figures obtained and showing the correlation between the indicators used. Second, the same procedure is followed to display the web data from the universities (page count, mention, impact, and usage indicators). Finally, a correlation between the institutional and web data is provided.

3.1. University Institutional Data

Johns Hopkins University has by far the highest research expenditures ($1,680,927,000; shown in Annex A) in the period analyzed, followed by the University of Wisconsin-Madison ($881,777,000) and the University of Michigan-Ann Arbor ($876,390,000). However, eight universities did not provide this indicator. As for position in university rankings, all 100 universities are ranked in the Forbes college rankings, but five universities (Wayne State University, University of Houston, University of Nevada-Las Vegas, Nova Southeastern University, and University of North Texas) are not positioned in the US News and World Report ranking.

There is a wide range in student population between the smallest university in the sample (Rice University, with 5,576 students) and the largest (Arizona State University at the Tempe Campus, with 68,064). Apart from the latter, only four other universities in the sample surpass 50,000 students (very big universities): Ohio State University-Main Campus (55,014), University of Minnesota-Twin Cities (51,659), the University of Texas at Austin (50,995), and the University of Florida (50,691). After eliminating universities lacking data for any of the four institutional parameters, a correlation matrix is obtained (Table 2).

Table 2 shows a high correlation between the two rankings (r = 0.92) and lower correlations between the rankings and the remaining variables (research expenditures and student population). This effect can be explained by the fact that the two rankings employ many indicators (not only research-oriented ones), so that ranking position is determined not only by research performance but also by influence. The negative correlations shown arise because the top positions in a ranking carry the lowest numerical values (1st is best).

Table 2.

Correlation between institutional data.


| | Rank (US News and World Report) | Rank (Forbes) | Research Expenditures | Student Population |
|---|---|---|---|---|
| Rank (USN) | 1 | | | |
| Rank (FORBES) | 0.923 * | 1 | | |
| R. Expenditures | −0.467 * | −0.332 * | 1 | |
| Student population | 0.368 * | 0.380 * | 0.314 * | 1 |
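The construction of a matrix such as Table 2 can be illustrated with a minimal sketch. It assumes Pearson's r (the text does not state which coefficient was used) and listwise deletion of universities with a missing value. The field names, helper functions, and toy records are hypothetical and are not the values from Annex A.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson's r for two equal-length sequences of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def complete_cases(rows, fields):
    """Keep only rows that have a value for every field (listwise deletion)."""
    return [r for r in rows if all(r.get(f) is not None for f in fields)]


def correlation_matrix(rows, fields):
    """Pairwise Pearson correlations over the complete cases only."""
    kept = complete_cases(rows, fields)
    cols = {f: [r[f] for r in kept] for f in fields}
    return {a: {b: pearson(cols[a], cols[b]) for b in fields} for a in fields}


# Hypothetical toy records (assumption), with one missing expenditure value.
universities = [
    {"rank_usn": 1, "rank_forbes": 2, "expenditures": 900, "students": 20000},
    {"rank_usn": 2, "rank_forbes": 1, "expenditures": 700, "students": 15000},
    {"rank_usn": 3, "rank_forbes": 4, "expenditures": 500, "students": 30000},
    {"rank_usn": 4, "rank_forbes": 3, "expenditures": None, "students": 40000},
    {"rank_usn": 5, "rank_forbes": 5, "expenditures": 200, "students": 45000},
]
FIELDS = ["rank_usn", "rank_forbes", "expenditures", "students"]

matrix = correlation_matrix(universities, FIELDS)
# Rank 1 is the best position, so rank vs. expenditures comes out negative.
print(matrix["rank_usn"]["expenditures"])
```

In this toy data the fourth record is dropped before any coefficient is computed, and the sign of the rank-versus-expenditures coefficient is negative for the same reason given for Table 2.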

In any case, the high correlation between the US News and World Report and Forbes rankings should be interpreted carefully. If the rankings are compared, important differences are found: within the top 50 universities in the US News and World Report ranking, up to 11 universities differ by more than 100 positions in the Forbes ranking, such as the University of Southern California (23rd on US News and World Report and 165th on Forbes), the University of Wisconsin-Madison (42nd on US News and World Report and 165th on Forbes), and the University of Texas-Austin (45th on US News and World Report and 185th on Forbes). Further down the table, the differences increase even more. These discrepancies can be explained by the methodological differences between the rankings. Moreover, the US News and World Report ranking contains ties (for example, first place is shared by Harvard University and Princeton University, and no university is ranked second), which affect the statistical calculation of the correlations. In addition, the total student population is weakly correlated with the remaining indicators, especially with research expenditures (r = 0.31). The sample analyzed is composed of the 100 universities with the highest expenditures on academic libraries, not those with the highest total research expenditures or the largest student populations.
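The effect of ties can be made concrete with a short sketch. The tie described above (two universities in first place, none in second) corresponds to what is commonly called competition ranking, whereas rank-based statistics conventionally assign tied items the average of the positions they occupy. The function names and scores below are hypothetical and serve only to illustrate the two conventions.

```python
def competition_ranks(scores):
    """Ranks with gaps after ties (1, 1, 3, ...); a higher score is better."""
    ordered = sorted(scores, reverse=True)
    return [ordered.index(s) + 1 for s in scores]


def average_ranks(scores):
    """Tied items share the mean of the positions they occupy (1.5, 1.5, 3, ...)."""
    ordered = sorted(scores, reverse=True)
    ranks = []
    for s in scores:
        first = ordered.index(s) + 1   # best position held by this score
        count = ordered.count(s)       # number of items tied at this score
        ranks.append(first + (count - 1) / 2)
    return ranks


# Hypothetical ranking scores (assumption): the first two institutions tie.
scores = [98, 98, 95, 90]
print(competition_ranks(scores))  # [1, 1, 3, 4]
print(average_ranks(scores))      # [1.5, 1.5, 3.0, 4.0]
```

Because the two conventions give tied universities different numerical positions, a coefficient computed on published positions can differ slightly from one computed on average ranks.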

3.2. University Web Data

A total of 104 URLs were gathered from the sample because four universities maintain two active web domains: University of Illinois at Urbana-Champaign (uiuc.edu, illinois.edu), Ohio State University-Main Campus (osu.edu, ohio-state.edu), Southern Illinois University Carbondale (siuc.edu, siu.edu), and Stony Brook University (stonybrook.edu, sunysb.edu). All URLs, with the values gathered for each indicator, are available in Annex B. The web domains with the highest page counts are the following: psu.edu (Pennsylvania State University, 1,400,000 pages), stanford.edu (Stanford University, 1,290,000 pages), umich.edu (University of Michigan-Ann Arbor, 1,050,000 pages), and harvard.edu (Harvard University, 1,000,000 pages). No other university reaches 1,000,000 pages in the period analyzed.

If the number of URL mentions (Yahoo!) is considered, there are some differences in the universities’ rankings. For example, psu.edu moves from first place on the page count list (Yahoo!) to 21st position in URL mentions, and umich.edu moves from second place to 11th. Stanford University (6,030,000 mentions) and MIT (5,810,000 mentions) become the two universities with the most URL mentions.

External links provide other interesting results. The web domain that has the most links is purdue.edu (Purdue University-Main Campus, 16,584,698 links), followed by psu.edu (11,797,997 links) and cornell.edu (Cornell University, 11,195,178 links). Regarding total visits, the data shows that only 11 websites received more than 10,000,000 total visits during the month considered in the study. The most visited website, by far, was umn.edu (University of Minnesota-Twin Cities, 3,826,259 visits), followed by Purdue University (2,103,405 visits) and Harvard University (1,639,311 visits).

Despite some differences in the top rankings (due to statistical noise, especially in the low positions), a high similarity is obtained between the results of the different indicators, as the correlation analysis shows (Table 3). The fact that university web indicators may be strongly related to size (and other organizational characteristics) may account for this consistency. For that reason, all web data indicators were also divided by student population (marked as “p”) so that correlations could additionally be calculated using this ratio instead of the raw values.
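The size normalization described above amounts to a per-student ratio for each indicator. The following is a minimal sketch under that reading; the function name, the indicator values, and the student count are hypothetical and are not taken from Annex B.

```python
def per_student(indicators, students):
    """Divide each raw web indicator by the student population ("p")."""
    if students <= 0:
        raise ValueError("student population must be positive")
    return {f"{name}/p": value / students for name, value in indicators.items()}


# Hypothetical raw values for one web domain (assumption).
raw = {"Count (Y)": 1_000_000, "Links (M)": 5_000_000, "Visits (C)": 250_000}

normalized = per_student(raw, students=50_000)
print(normalized)
# {'Count (Y)/p': 20.0, 'Links (M)/p': 100.0, 'Visits (C)/p': 5.0}
```

Correlations computed on these ratios indicate whether the indicators still move together once institutional size has been factored out.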

Table 3.

Correlation among web data at university level (raw data and normalized by population).


| | Count (Y) | PDF (G) | PDF (Y) | URL (G) | URL (Y) | Links (M) | Links (O) | DmR | Visits (C) |
|---|---|---|---|---|---|---|---|---|---|
| Count (Y) | 1 | | | | | | | | |
| PDF (G) | 0.847* | 1 | | | | | | | |
| PDF (Y) | 0.917* | 0.908* | 1 | | | | | | |
| URL (G) | 0.840* | 0.778* | 0.854* | 1 | | | | | |
| URL (Y) | 0.753* | 0.727* | 0.759* | 0.759* | 1 | | | | |
| Links (M) | 0.856* | 0.752* | 0.832* | 0.788* | 0.765* | 1 | | | |
| Links (O) | 0.767* | 0.673* | 0.781* | 0.698* | 0.659* | 0.816* | 1 | | |
| DmR | 0.914* | 0.819* | 0.900* | 0.893* | 0.777* | 0.883* | 0.769* | 1 | |
| Visits (C) | 0.903* | 0.844* | 0.896* | 0.783* | 0.734* | 0.836* | 0.751* | 0.871* | 1 |

| | Count (Y)/p | PDF (G)/p | PDF (Y)/p | URL (G)/p | URL (Y)/p | Links (M)/p | Links (O)/p | DmR | Visits (C)/p |
|---|---|---|---|---|---|---|---|---|---|
| Count (Y)/p | 1 | | | | | | | | |
| PDF (G)/p | 0.767* | 1 | | | | | | | |
| PDF (Y)/p | 0.895* | 0.848* | 1 | | | | | | |
| URL (G)/p | 0.869* | 0.759* | 0.853* | 1 | | | | | |
| URL (Y)/p | 0.742* | 0.706* | 0.765* | 0.742* | 1 | | | | |
| Links (M)/p | 0.836* | 0.687* | 0.804* | 0.758* | 0.711* | 1 | | | |
| Links (O)/p | 0.675* | 0.519* | 0.688* | 0.628* | 0.621* | 0.813* | 1 | | |
| DmR | 0.729* | 0.636* | 0.733* | 0.693* | 0.621* | 0.715* | 0.602* | 1 | |
| Visits (C)/p | 0.866* | 0.774* | 0.851* | 0.823* | 0.730* | 0.821* | 0.622* | 0.692* | 1 |

The high correlation values obtained show coherence among web-oriented indicators in the analyzed sample, regardless of the type of indicator or the source, both for the raw values and for the values normalized by student population.

As regards raw data, the lowest values were detected for links measured with Open Site Explorer (which has lower coverage than Majestic), specifically when compared with the URL mentions for Google (r = 0.698) and Yahoo! (r = 0.659). The coherence among web indicators also suggests that some could be used as substitutes for others. For example, PDF Count by Google can be used instead of PDF Count by Yahoo! (less coverage) or Global Count by Yahoo! (less accurate). Likewise, links measured by Majestic can substitute for links from Open Site Explorer (less coverage) and URL mentions (less accurate). Finally, and unexpectedly, Count (Y) was highly correlated with both DmR (r = 0.914) and Visits (r = 0.903).

If web data weighted by student population is considered, the correlation values are slightly lower but still statistically significant and show similar patterns, specifically the strong correlation of Count (Y) and Visits (C) with the remaining indicators and the lower correlation achieved by Links (O). These results reinforce the high coherence among different university web indicators, independently of size.

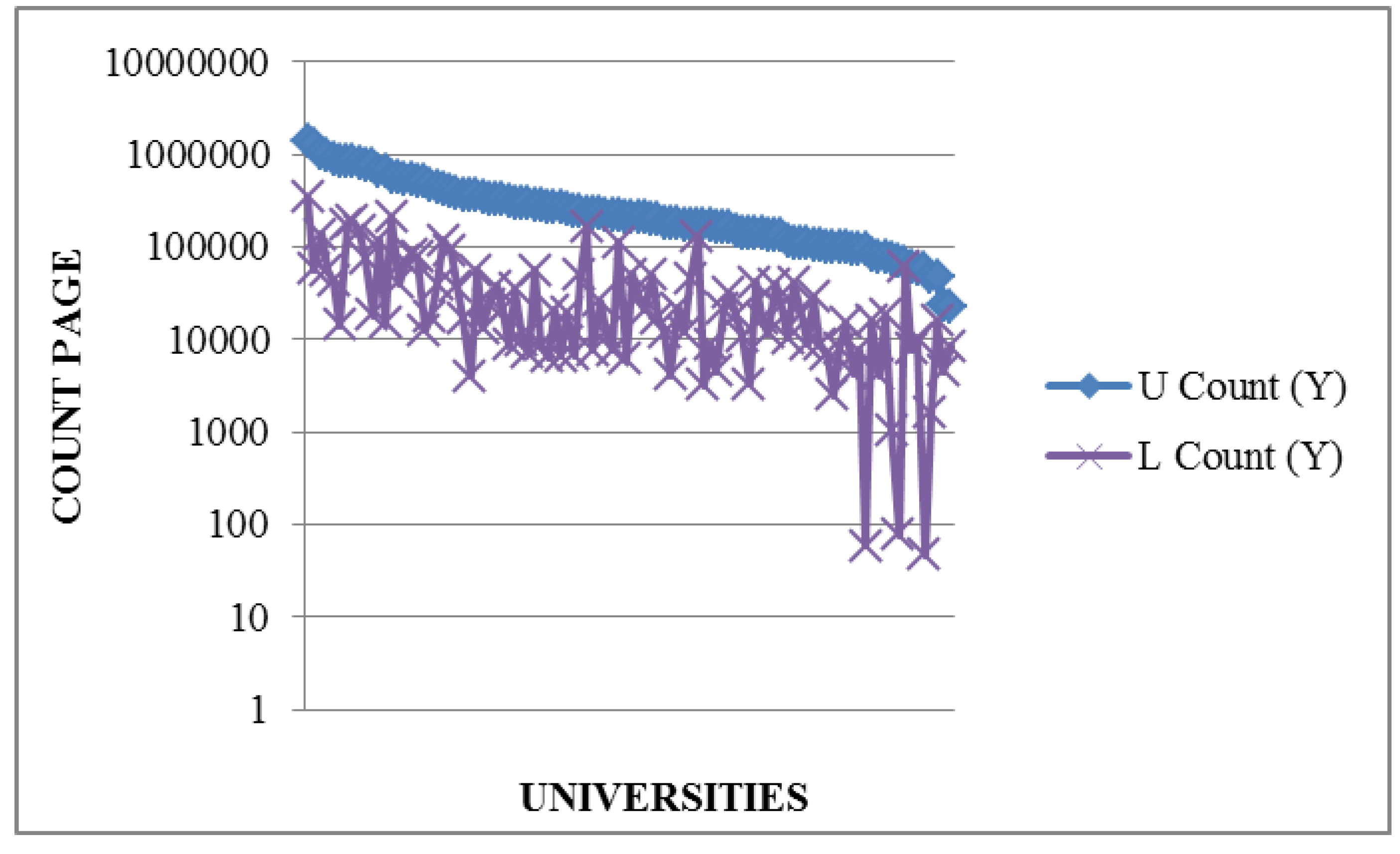

Figure 1 shows the performance distribution for count-like indicators, whereas Figure 2 focuses on impact indicators (mentions and links). In general, the correlation among the indicators is well reflected, except for some URLs that show unexpectedly high or low values. For example, for the Count (Google) indicator, psu.edu, mit.edu, uiowa.edu, and uoregon.edu show elevated values compared with the remaining count-like indicators.

Figure 1.

Distribution of page count indicators for URLs.


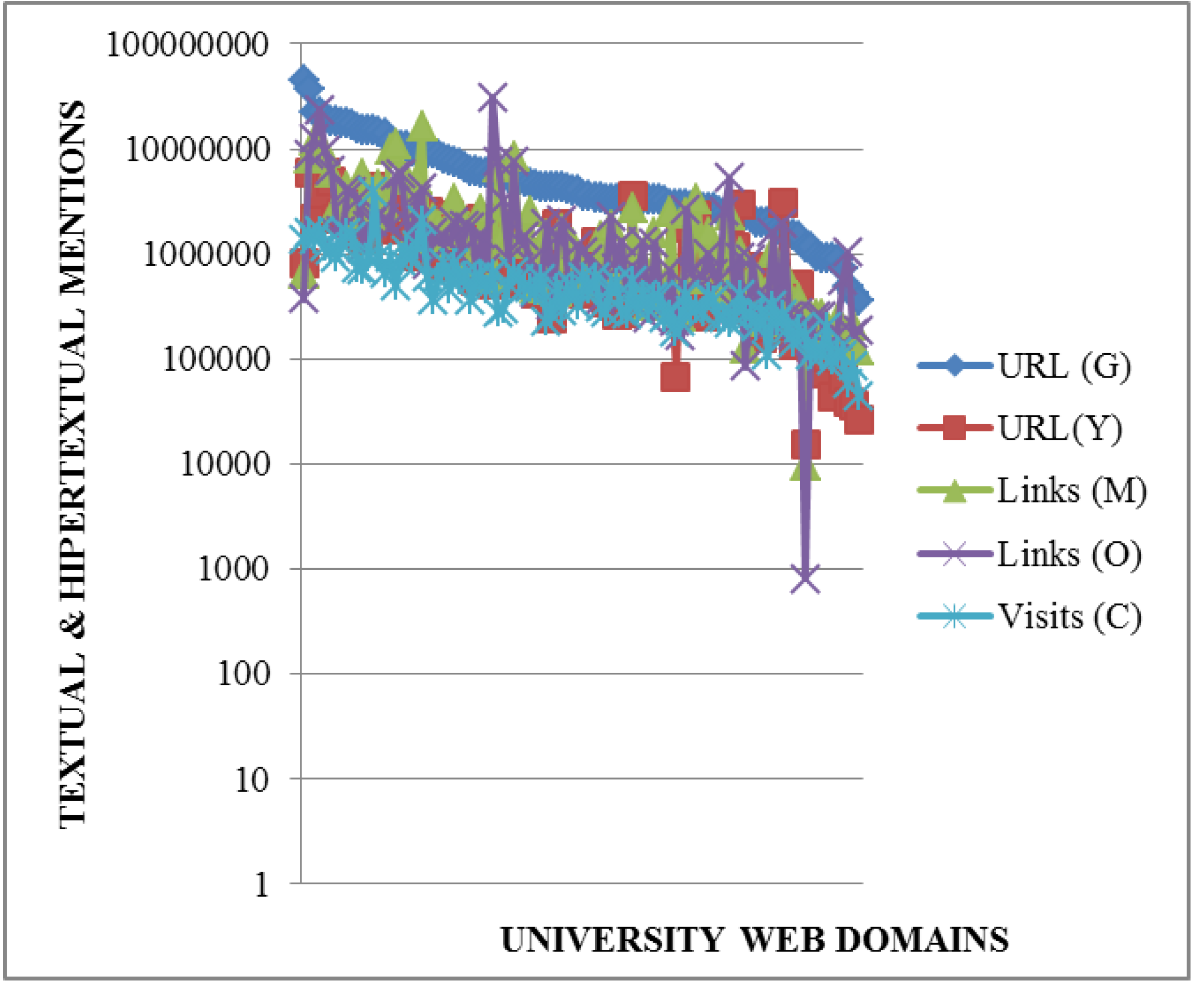

Figure 2.

Distribution of impact indicators for URLs.


Regarding impact (shown on a logarithmic scale to facilitate the display), a high value for buffalo.edu and a low value for manoa.hawaii.edu are identified for Links (Open Site Explorer). Likewise, a low value for URL mentions (Yahoo!), as well as for links (Open Site Explorer), is detected for bc.edu, among other inconsistencies. In addition, there are generally low values for audience in terms of mentions and links indicators (Figure 2).