Bio-Inspired Principles Applied to the Guidance, Navigation and Control of UAS

Abstract

1. Introduction

1.1. Current Limitations of Unmanned Aerial Systems (UAS)

1.1.1. Navigation

1.1.2. Situational Awareness

1.1.3. Intelligent Autonomous Guidance

1.2. Vision-Based Techniques and Biological Inspiration

1.3. Objective and Paper Overview

2. Experimental Platform

2.1. Overview of Platform

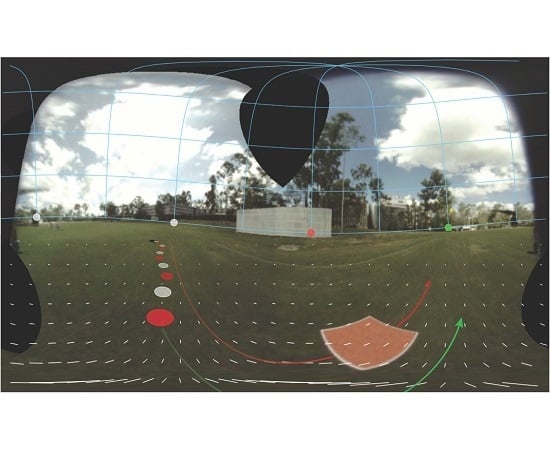

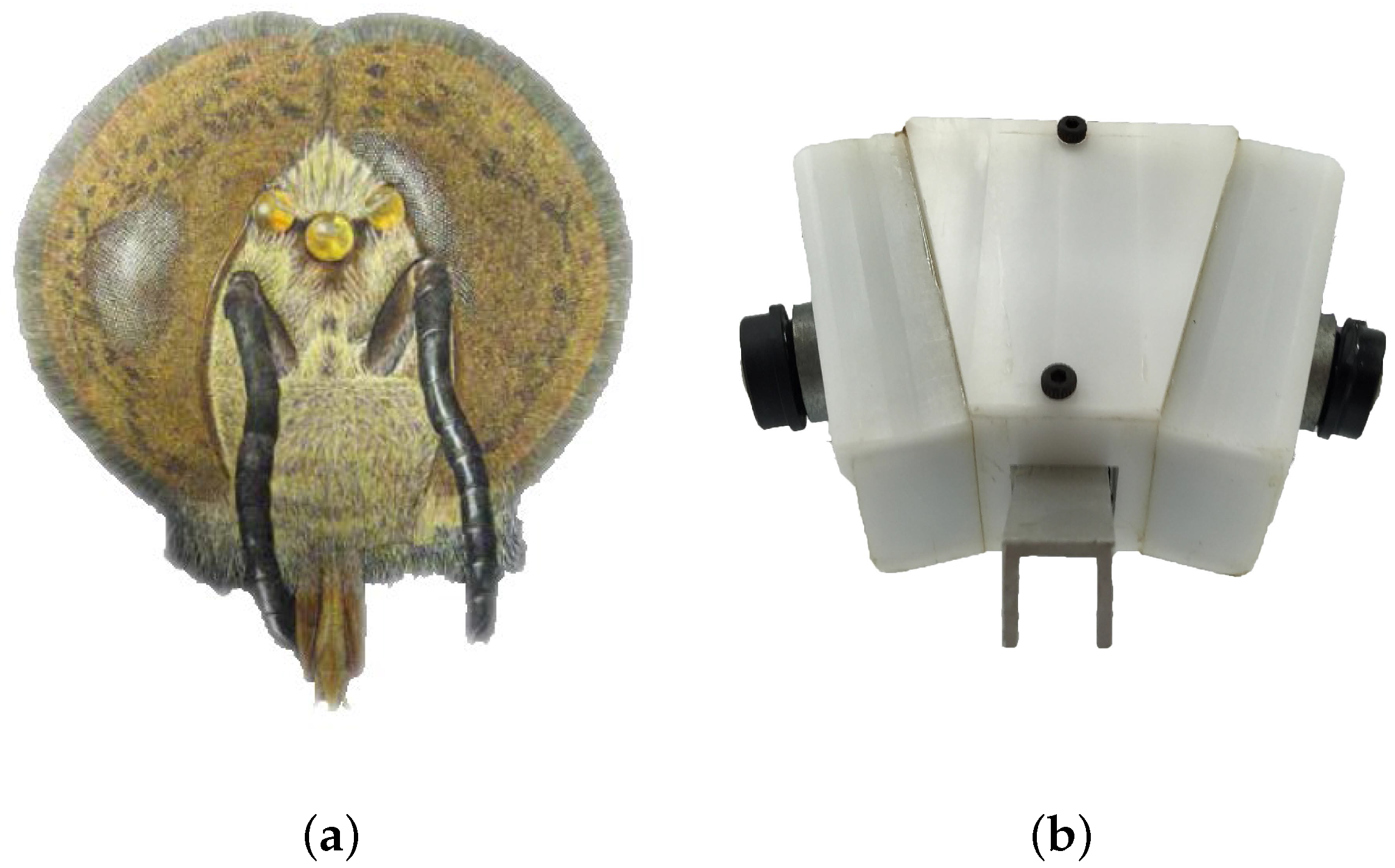

2.2. Biologically Inspired Vision System

2.3. Control Architecture

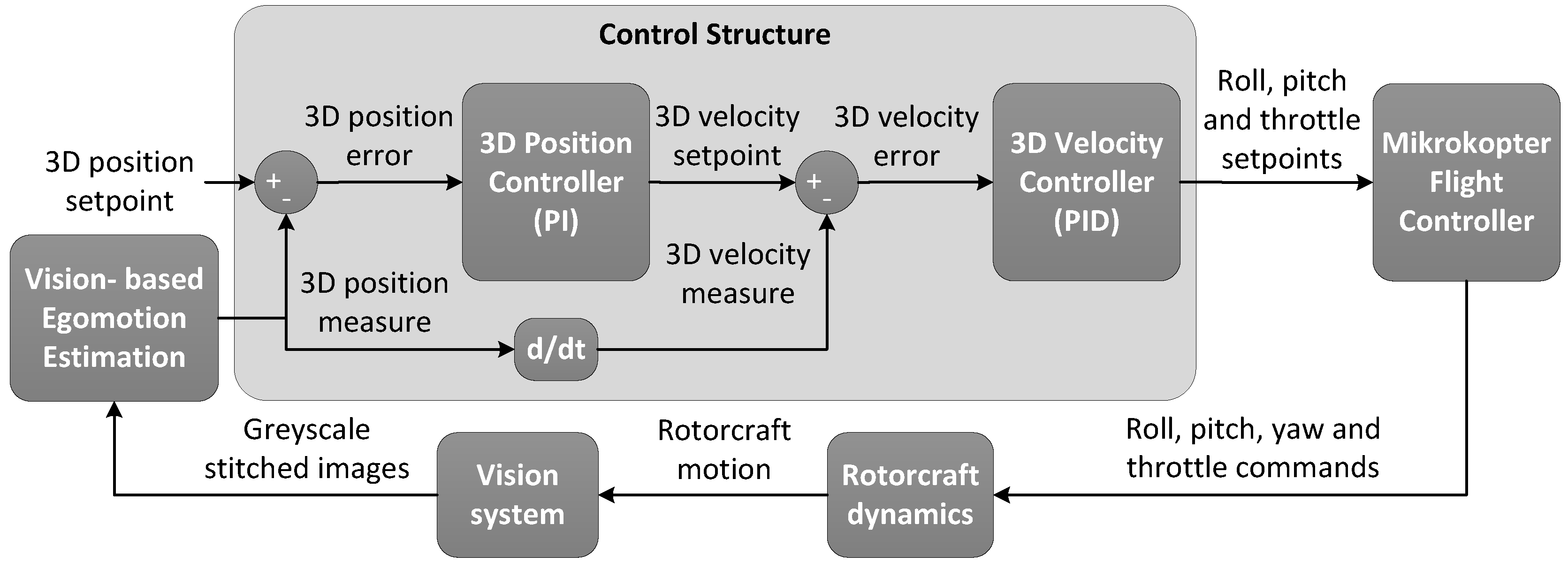

3. Navigation

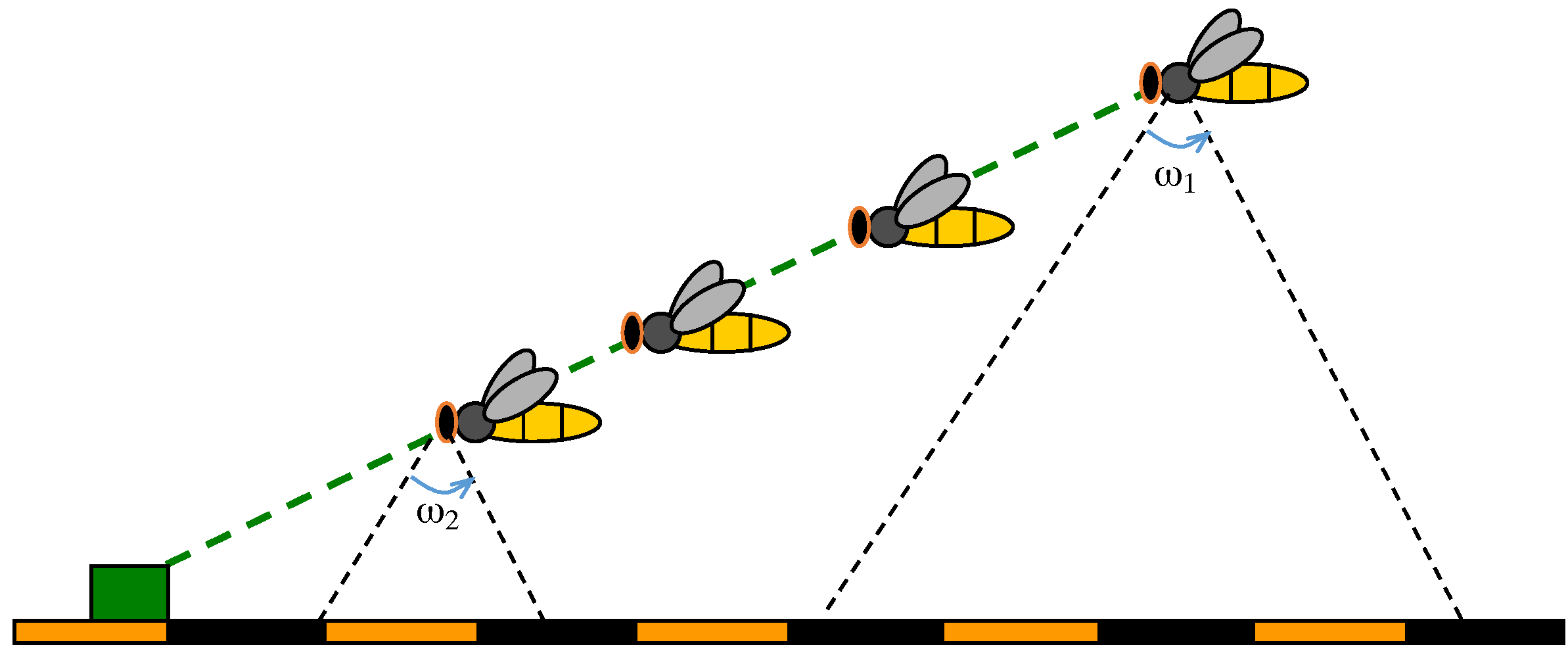

3.1. Optic Flow-Based Navigation

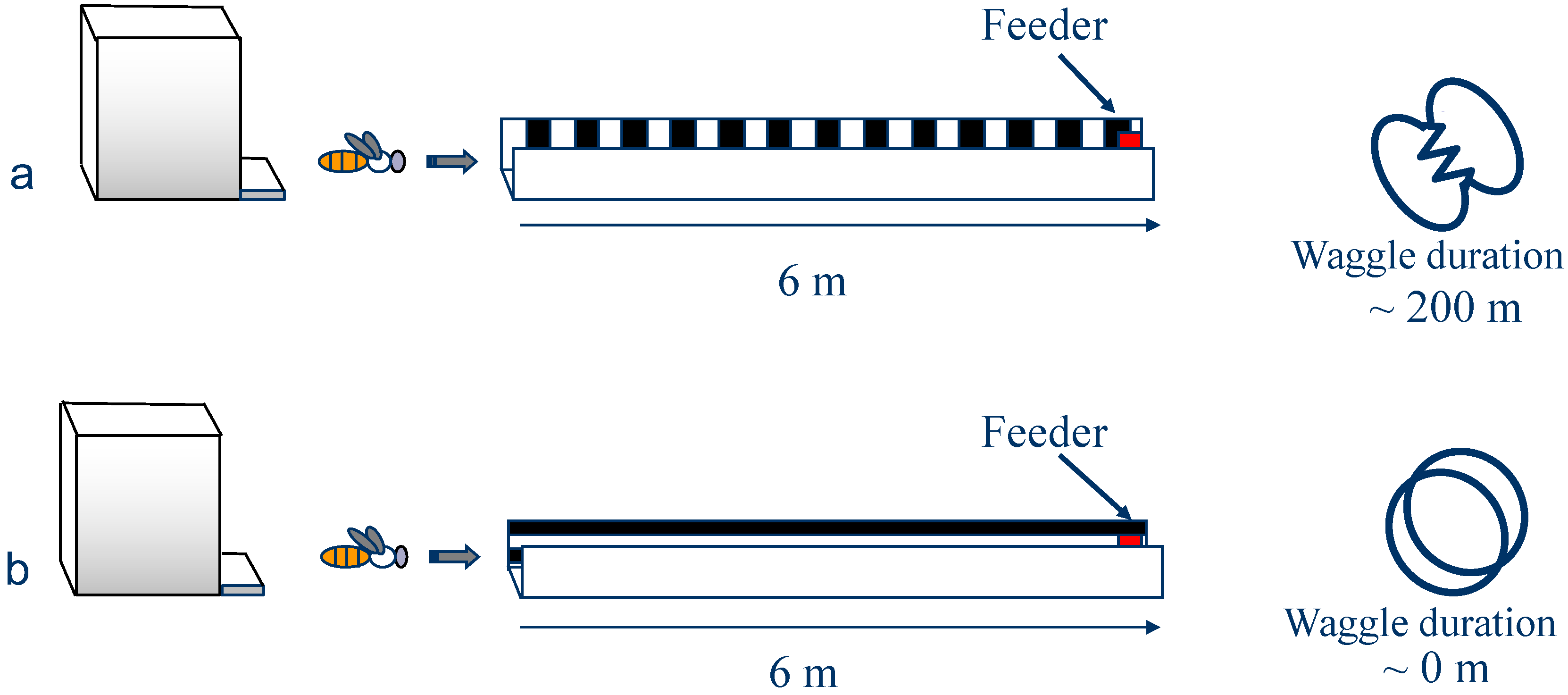

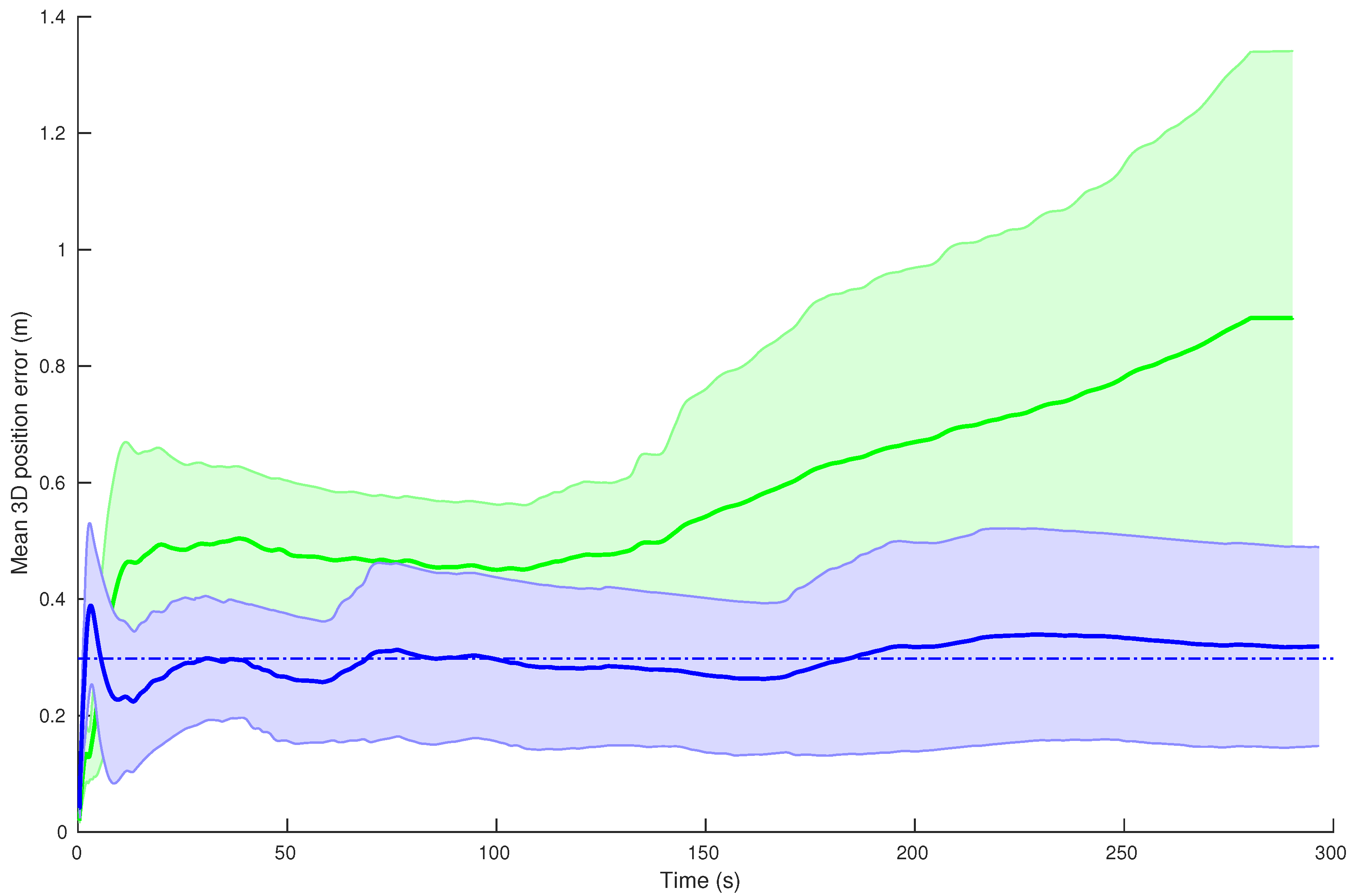

3.1.1. Visual Odometry

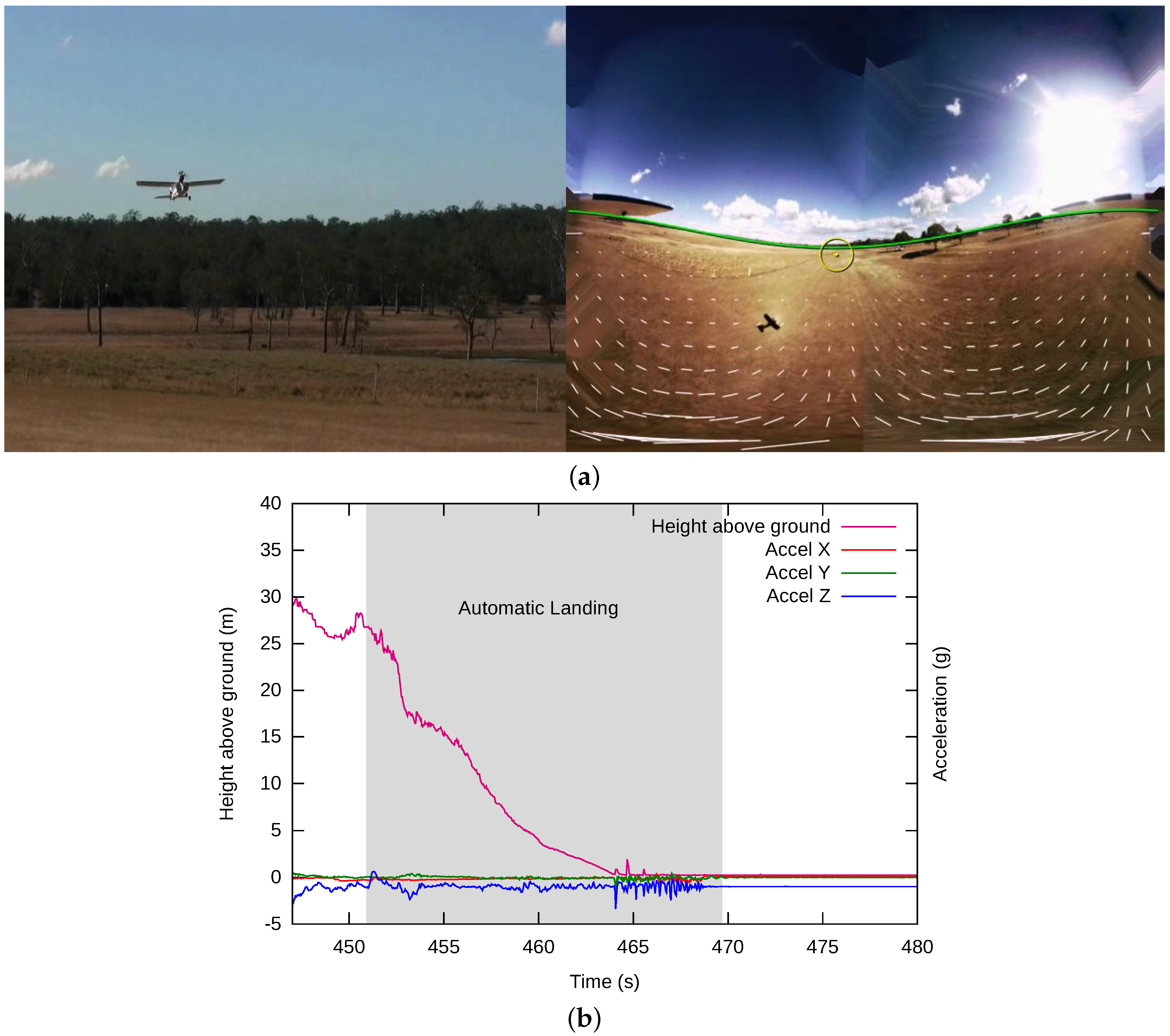

3.1.2. Landing

3.2. Drift-Free Hover Stabilisation and Navigation via Bio-Inspired Snapshot Matching

3.2.1. Control of UAS Hover Through Image Coordinates Extrapolation (ICE)

3.2.2. Control of UAS Hover Through Snapshot Matching

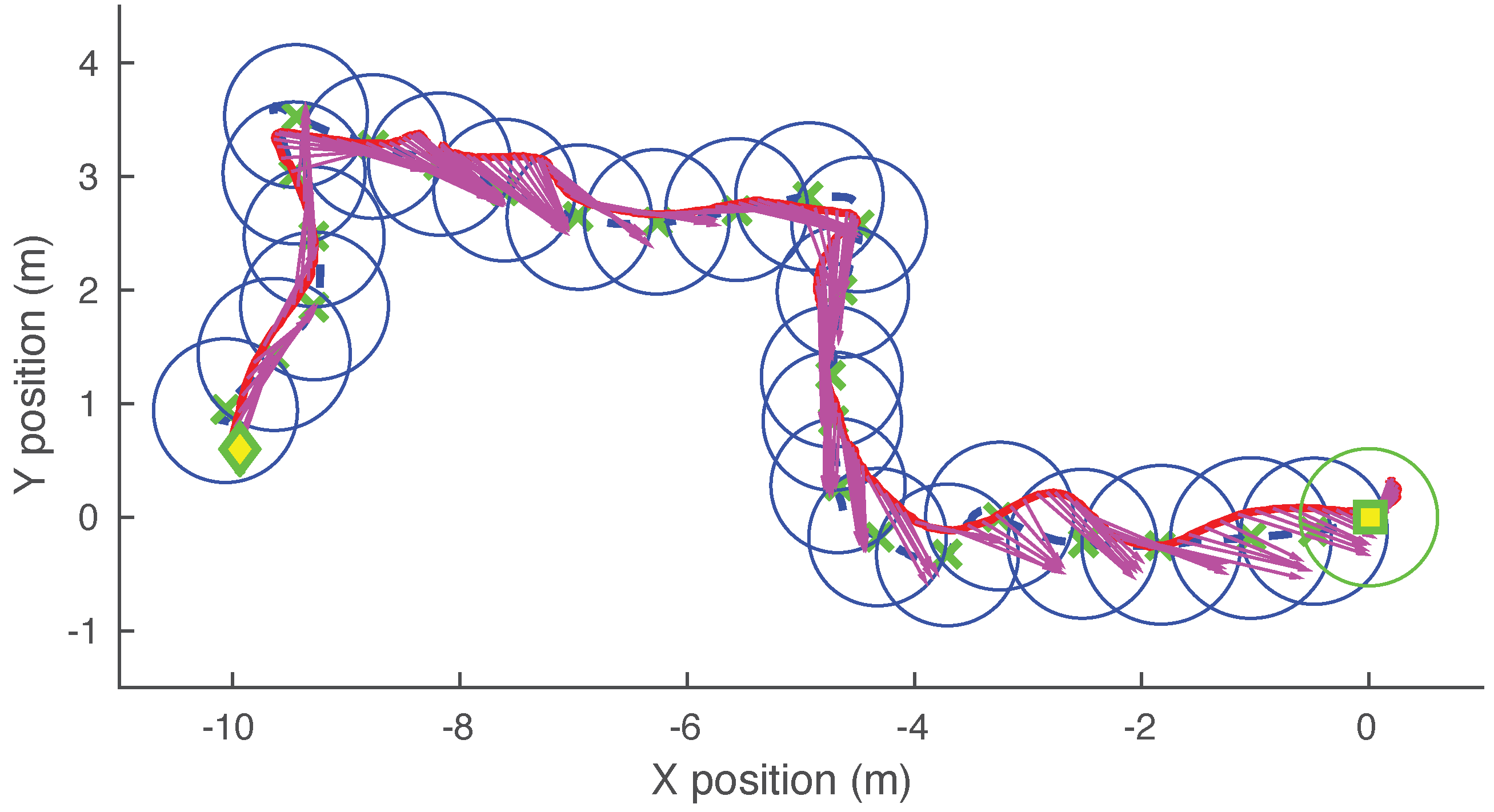

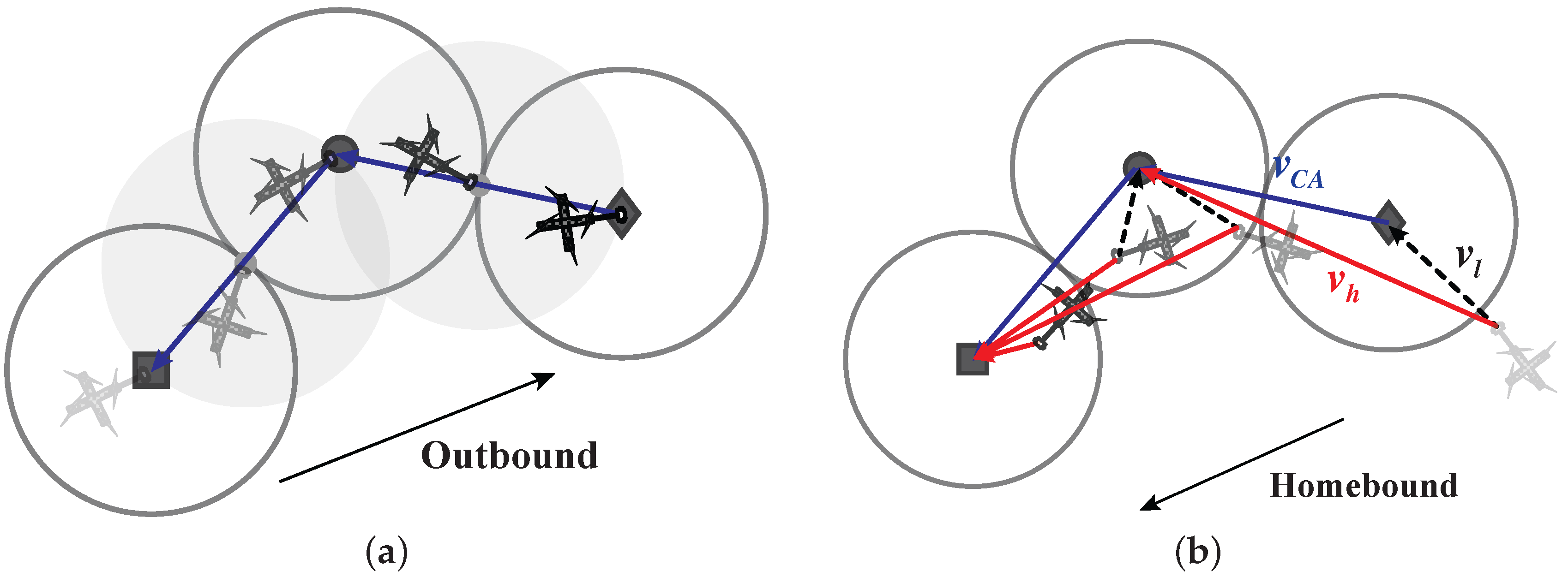

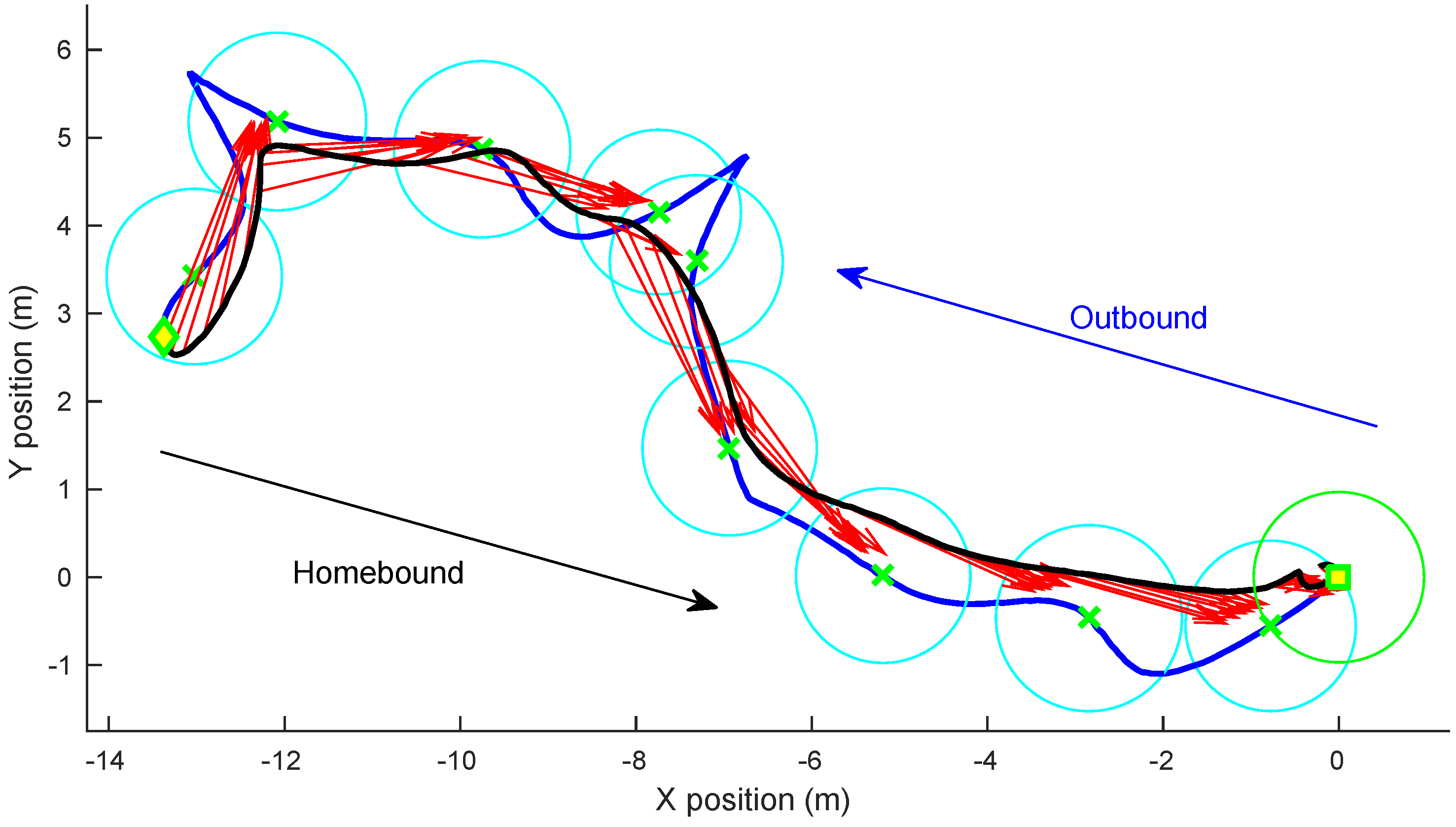

3.2.3. Control of UAS Navigation Through Snapshot Matching

3.3. Vision-Only Navigation

4. Situational Awareness

4.1. Object Detection and Tracking

4.2. Target Motion Classification: Determining Whether an Object Is Moving or Stationary

4.2.1. Using the Epipolar Constraint to Classify Motion

- Compute the egomotion of the aircraft based on the pattern of optic flow in a panoramic image.

- Determine the component of this optic flow pattern that is generated by the aircraft’s translation.

- Finally, flag the object as moving if the direction of its optic flow differs from the direction expected had the object been stationary.
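The three steps above can be illustrated with a short Python/NumPy sketch. It is not the authors' implementation. It assumes the standard spherical optic-flow model, in which a point seen along unit direction d at range r has a flow equal to the component of (V_object - T) tangent to the sphere, divided by r, plus a rotational term -ω × d. It treats the egomotion (angular velocity ω and translation direction) as already estimated by the first step, and the function names, the 10° threshold and the synthetic scene are hypothetical.

```python
import numpy as np


def _tangent(v, d):
    """Component of v lying in the tangent plane of the unit sphere at unit vector d."""
    return v - np.dot(v, d) * d


def synthetic_flow(d, depth, t_vel, omega, obj_vel=None):
    """Spherical optic flow (rad/s) of a point seen along unit direction d at range depth.

    Translational term: tangent projection of (obj_vel - t_vel), divided by range.
    Rotational term: -omega x d. Used here only to generate test data.
    """
    obj_vel = np.zeros(3) if obj_vel is None else np.asarray(obj_vel, dtype=float)
    return _tangent(obj_vel - t_vel, d) / depth - np.cross(omega, d)


def is_moving(d, flow, omega, t_dir, angle_thresh_deg=10.0, eps=1e-9):
    """Epipolar direction test: True = moving, False = stationary, None = undecidable."""
    d = np.asarray(d, dtype=float)
    d = d / np.linalg.norm(d)
    # Step 1 is assumed done: omega and t_dir are the estimated egomotion.
    # Step 2: remove the rotation-induced flow (-omega x d), leaving the translational part.
    derotated = flow + np.cross(omega, d)
    # A stationary point flows away from the focus of expansion, i.e. along the
    # tangent projection of -t_dir (the great circle through the epipole and d).
    expected = _tangent(-np.asarray(t_dir, dtype=float), d)
    if np.linalg.norm(expected) < eps or np.linalg.norm(derotated) < eps:
        return None  # object at the epipole, or no measurable flow
    cos_a = np.dot(derotated, expected) / (np.linalg.norm(derotated) * np.linalg.norm(expected))
    angle = np.degrees(np.arccos(np.clip(cos_a, -1.0, 1.0)))
    # Step 3: flag the object if its flow direction departs from the expected direction.
    return bool(angle > angle_thresh_deg)


if __name__ == "__main__":
    t_vel = np.array([5.0, 0.0, 0.0])     # aircraft flying along +x at 5 m/s
    omega = np.array([0.0, 0.0, 0.2])     # rotation of 0.2 rad/s about z
    t_dir = t_vel / np.linalg.norm(t_vel)
    d = np.array([1.0, 1.0, -0.5]) / 1.5  # unit direction to the object
    for v_obj in (None, [0.0, 0.0, 3.0], [-2.0, 0.0, 0.0]):
        flow = synthetic_flow(d, 30.0, t_vel, omega, v_obj)
        print(v_obj, is_moving(d, flow, omega, t_dir))
```

In the example, the stationary point is accepted and the object moving at 3 m/s along z is flagged. The object moving at 2 m/s along the flight path is reported as stationary: motion that keeps an object in the epipolar plane (the plane containing the translation vector and the viewing direction) keeps its flow on the expected great circle, so a direction-only test can reveal it only if the flow direction reverses.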

4.2.2. The Triangle Closure Method (TCM)

- The translation direction of the UAS.

- The direction to the centroid of the object.

- The change in the size of the object’s image between two frames.
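These three quantities are enough to test whether the geometry "closes" for a stationary object. The Python/NumPy sketch below shows one way to express that test; it is an illustration under stated assumptions rather than the published TCM formulation. It assumes the object's physical size and orientation stay constant, so its apparent (angular) image size is inversely proportional to range. If the UAS translates by T between the two frames and the object is stationary, the ranges r1, r2 and unit bearings d1, d2 satisfy r1 d1 = T + r2 d2; because r2/r1 = s1/s2, the vector d1 - (s1/s2) d2 equals T/r1 and must point along the translation direction. The names `triangle_closes` and `sighting`, the 5° tolerance and the 2 m object width are hypothetical.

```python
import numpy as np


def _unit(v):
    """Return v as a float unit vector."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def triangle_closes(t_dir, d1, d2, size1, size2, angle_thresh_deg=5.0):
    """Hypothetical closure test: True if the two sightings fit a stationary object.

    t_dir        : direction of UAS translation between the two frames
    d1, d2       : directions to the object's centroid in frames 1 and 2
    size1, size2 : apparent (angular) size of the object's image in frames 1 and 2
    """
    t_dir, d1, d2 = _unit(t_dir), _unit(d1), _unit(d2)
    # Apparent size ~ 1 / range, so the size ratio gives the range ratio r2 / r1.
    k = size1 / size2
    # For a stationary object r1*d1 = T + r2*d2, hence d1 - k*d2 = T / r1,
    # which must point along the translation direction.
    closure = d1 - k * d2
    norm = np.linalg.norm(closure)
    if norm < 1e-9:
        return False  # no displacement is explained, yet the UAS moved: not stationary
    cos_a = np.clip(np.dot(closure / norm, t_dir), -1.0, 1.0)
    return bool(np.degrees(np.arccos(cos_a)) <= angle_thresh_deg)


def sighting(uas_pos, obj_pos, width=2.0):
    """Unit direction and small-angle apparent size (rad) of an object of given width (m)."""
    rel = np.asarray(obj_pos, dtype=float) - np.asarray(uas_pos, dtype=float)
    rng = np.linalg.norm(rel)
    return rel / rng, width / rng


if __name__ == "__main__":
    p1, p2 = np.zeros(3), np.array([10.0, 0.0, 0.0])  # UAS flies 10 m along +x
    obj = np.array([40.0, 20.0, -15.0])
    d1, s1 = sighting(p1, obj)
    for shift in ([0.0, 0.0, 0.0], [0.0, 5.0, 0.0], [0.0, 4.0, -3.0]):
        d2, s2 = sighting(p2, obj + np.array(shift))
        print(shift, triangle_closes(p2 - p1, d1, d2, s1, s2))
```

In the example the stationary object passes the test and both displaced objects fail it. The test needs only the direction of translation, not its magnitude; for the same reason, an object moving exactly parallel to the flight path still yields a closure vector along that path and is not flagged by this sketch.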

4.3. Situational Awareness Conclusions

5. Guidance for Pursuit and Interception

5.1. Interception: An Engineering Approach to Pursue Ground Targets

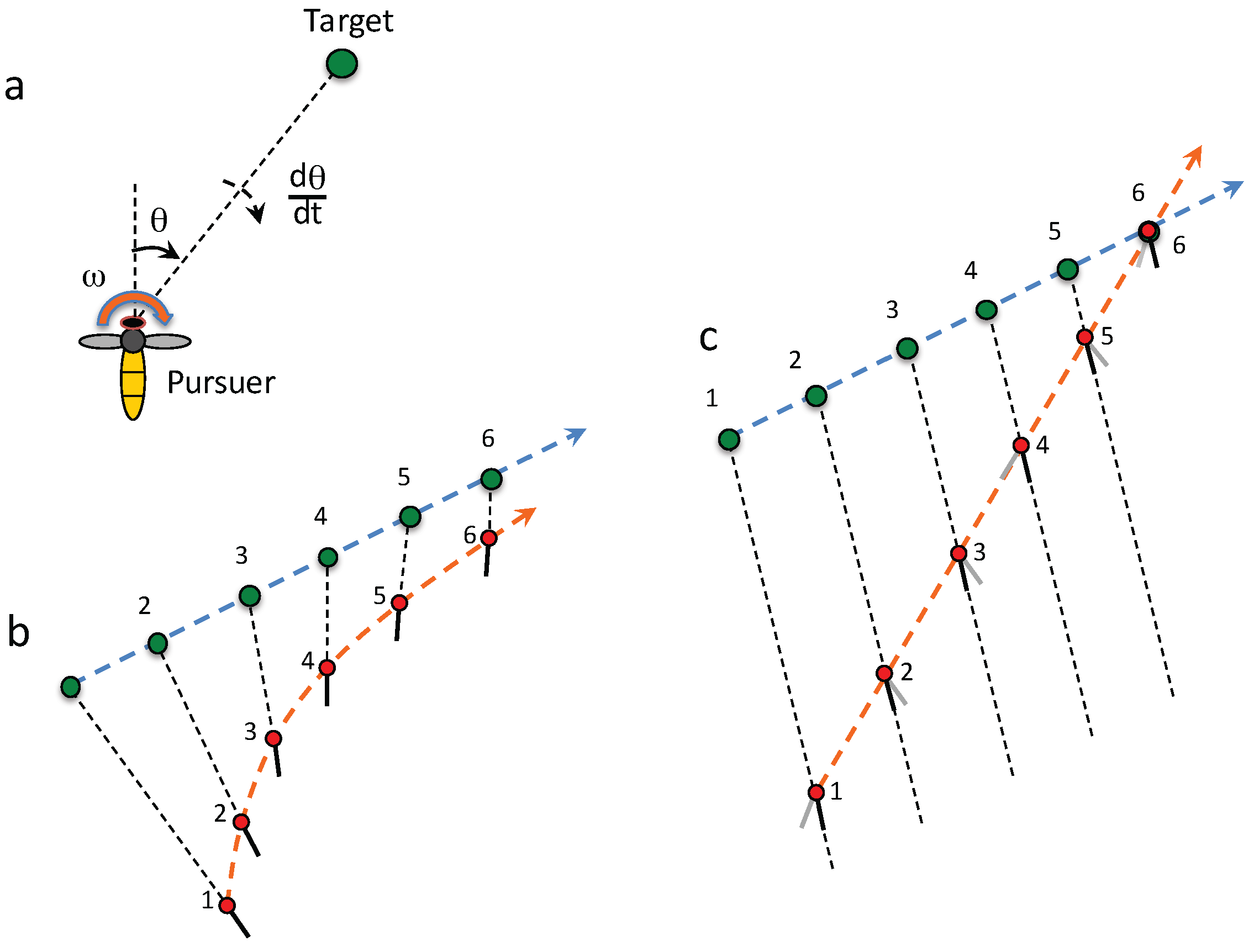

5.2. Interception: A Biological Approach

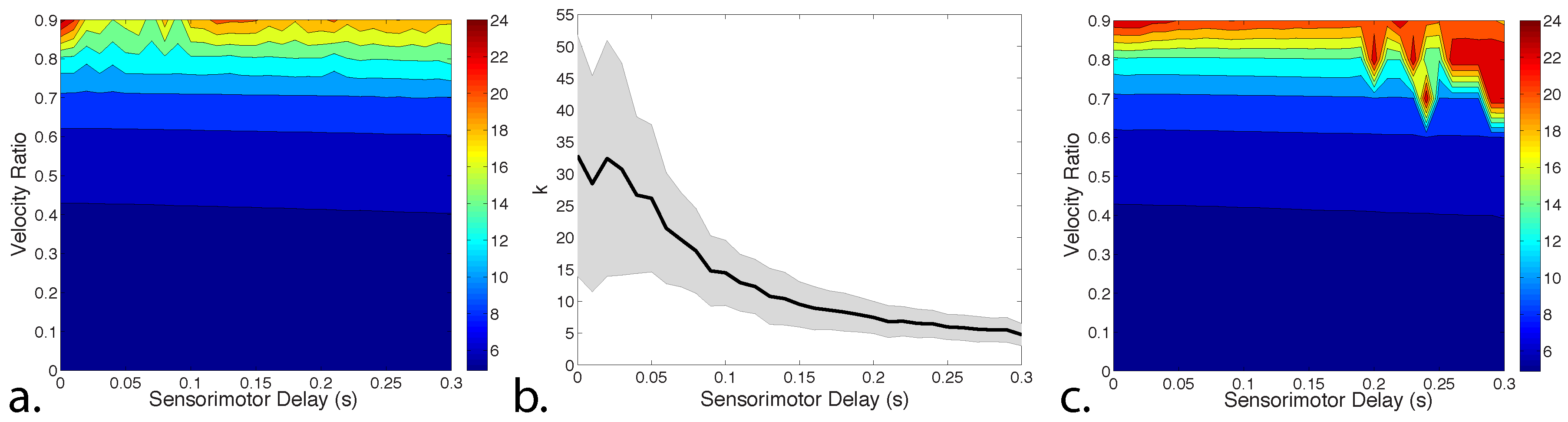

5.3. Comparison of Pursuit and Constant-Bearing Interception Strategies

5.3.1. Evaluation of Pursuit and Interception Performance as a Function of Sensorimotor Delay

5.3.2. Pursuit and Constant-Bearing Interception in the Context of Robotics

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Gibbs, Y. Global Hawk—Performance and Specifications. Available online: http://www.nasa.gov/centers/armstrong/aircraft/GlobalHawk/performance.html (accessed on 9 June 2016).

- Golightly, G. Boeing’s Concept Exploration Pioneers New UAV Development with the Hummingbird and the Maverick. Available online: http://www.boeing.com/news/frontiers/archive/2004/december/tssf04.html (accessed on 9 June 2016).

- MQ-1B Predator. Available online: http://www.af.mil/AboutUs/FactSheets/Display/tabid/224/Article/104469/mq-1b-predator.aspx (accessed on 9 June 2016).

- Insitu. SCANEAGLE; Technical Report; Insitu: Washington, DC, USA, 2016.

- AeroVironment. Raven; Technical Report; AeroVironment: Monrovia, CA, USA, 2016.

- AirRobot. AIRROBOT; Technical Report; AirRobot: Arnsberg, Germany, 2007.

- DJI. Available online: http://www.dji.com/product/phantom-3-pro/info (accessed on 9 June 2016).

- Thrun, S.; Leonard, J.J. Simultaneous localization and mapping. In Springer Handbook of Robotics; Springer: Berlin, Germany; Heidelberg, Germany, 2008; pp. 871–889.

- Clark, S.; Durrant-Whyte, H. Autonomous land vehicle navigation using millimeter wave radar. In Proceedings of the 1998 IEEE International Conference on Robotics and Automation, Leuven, Belgium, 16–20 May 1998; Volume 4, pp. 3697–3702.

- Clark, S.; Dissanayake, G. Simultaneous localisation and map building using millimetre wave radar to extract natural features. In Proceedings of the 1999 IEEE International Conference on Robotics and Automation, Detroit, MI, USA, 10–15 May 1999; Volume 2, pp. 1316–1321.

- Jose, E.; Adams, M.D. Millimetre wave radar spectra simulation and interpretation for outdoor slam. In Proceedings of the 2004 IEEE International Conference on Robotics and Automation, ICRA’04, New Orleans, LA, USA, 26 April–1 May 2004; Volume 2, pp. 1321–1326.

- Clothier, R.; Frousheger, D.; Wilson, M.; Grant, I. The smart skies project: Enabling technologies for future airspace environments. In Proceedings of the 28th Congress of the International Council of the Aeronautical Sciences, International Council of the Aeronautical Sciences, Brisbane, Australia; 2012; pp. 1–12.

- Wilson, M. Ground-Based Sense and Avoid Support for Unmanned Aircraft Systems. In Proceedings of the Congress of the International Council of the Aeronautical Sciences (ICAS), Brisbane, Australia, 23–28 September 2012.

- Korn, B.; Edinger, C. UAS in civil airspace: Demonstrating “sense and avoid” capabilities in flight trials. In Proceedings of the 2008 IEEE/AIAA 27th Digital Avionics Systems Conference, St. Paul, MN, USA, 26–30 October 2008; pp. 4.D.1-1–4.D.1-7.

- Viquerat, A.; Blackhall, L.; Reid, A.; Sukkarieh, S.; Brooker, G. Reactive collision avoidance for unmanned aerial vehicles using doppler radar. In Field and Service Robotics; Springer: Berlin, Germany; Heidelberg, Germany, 2008; pp. 245–254.

- Australian Transport Safety Bureau (ATSB). Review of Midair Collisions Involving General Aviation Aircraft in Australia between 1961 and 2003; ATSB: Canberra, Australia, 2004.

- Hayhurst, K.J.; Maddalon, J.M.; Miner, P.S.; DeWalt, M.P.; McCormick, G.F. Unmanned aircraft hazards and their implications for regulation. In Proceedings of the 2006 IEEE/AIAA 25th Digital Avionics Systems Conference, Portland, OR, USA, 15–19 October 2006; pp. 1–12.

- Srinivasan, M.V. Honeybees as a model for the study of visually guided flight, navigation, and biologically inspired robotics. Physiol. Rev. 2011, 91, 413–460.

- Srinivasan, M.V. Visual control of navigation in insects and its relevance for robotics. Curr. Opin. Neurobiol. 2011, 21, 535–543.

- Mischiati, M.; Lin, H.T.; Herold, P.; Imler, E.; Olberg, R.; Leonardo, A. Internal models direct dragonfly interception steering. Nature 2015, 517, 333–338.

- Franceschini, N. Small brains, smart machines: From fly vision to robot vision and back again. Proc. IEEE 2014, 102, 751–781.

- Roubieu, F.L.; Serres, J.R.; Colonnier, F.; Franceschini, N.; Viollet, S.; Ruffier, F. A biomimetic vision-based hovercraft accounts for bees’ complex behaviour in various corridors. Bioinspir. Biomim. 2014, 9, 036003.

- Ruffier, F.; Franceschini, N. Optic flow regulation: The key to aircraft automatic guidance. Robot. Auton. Syst. 2005, 50, 177–194.

- Floreano, D.; Zufferey, J.C.; Srinivasan, M.V.; Ellington, C. Flying Insects and Robots; Springer: Berlin, Germany; Heidelberg, Germany, 2009.

- Srinivasan, M.V.; Moore, R.J.; Thurrowgood, S.; Soccol, D.; Bland, D.; Knight, M. Vision and Navigation in Insects, and Applications to Aircraft Guidance; The MIT Press: Cambridge, MA, USA, 2014.

- Coombs, D.; Roberts, K. ’Bee-Bot’: Using Peripheral Optical Flow to Avoid Obstacles. In Proc. SPIE 1825, Proceedings of Intelligent Robots and Computer Vision XI: Algorithms, Techniques, and Active Vision, Boston, MA, USA, 15 November 1992; pp. 714–721.

- Santos-Victor, J.; Sandini, G.; Curotto, F.; Garibaldi, S. Divergent stereo in autonomous navigation: From bees to robots. Int. J. Comput. Vis. 1995, 14, 159–177.

- Weber, K.; Venkatesh, S.; Srinivasan, M.V. Insect inspired behaviours for the autonomous control of mobile robots. In Proceedings of the 13th International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; Volume 1, pp. 156–160.

- Conroy, J.; Gremillion, G.; Ranganathan, B.; Humbert, J.S. Implementation of wide-field integration of optic flow for autonomous quadrotor navigation. Auton. Robot. 2009, 27, 189–198.

- Sabo, C.M.; Cope, A.; Gurney, K.; Vasilaki, E.; Marshall, J. Bio-Inspired Visual Navigation for a Quadcopter using Optic Flow. In AIAA Infotech @ Aerospace; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2016; Volume 404, pp. 1–14.

- Zufferey, J.C.; Floreano, D. Fly-inspired visual steering of an ultralight indoor aircraft. IEEE Trans. Robot. 2006, 22, 137–146.

- Garratt, M.A.; Chahl, J.S. Vision-based terrain following for an unmanned rotorcraft. J. Field Robot. 2008, 25, 284–301.

- Moore, R.J.; Thurrowgood, S.; Bland, D.; Soccol, D.; Srinivasan, M.V. UAV altitude and attitude stabilisation using a coaxial stereo vision system. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation (ICRA), Anchorage, AK, USA, 3–7 May 2010; pp. 29–34.

- Cornall, T.; Egan, G.; Price, A. Aircraft attitude estimation from horizon video. Electron. Lett. 2006, 42, 744–745.

- Horiuchi, T.K. A low-power visual-horizon estimation chip. IEEE Trans. Circuits Syst. I Regul. Pap. 2009, 56, 1566–1575.

- Moore, R.; Thurrowgood, S.; Bland, D.; Soccol, D.; Srinivasan, M.V. A fast and adaptive method for estimating UAV attitude from the visual horizon. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011.

- Jouir, T.; Strydom, R.; Srinivasan, M.V. A 3D sky compass to achieve robust estimation of UAV attitude. In Proceedings of the Australasian Conference on Robotics and Automation, Canberra, Australia, 2–4 December 2015.

- Zhang, Z.; Xie, P.; Ma, O. Bio-inspired trajectory generation for UAV perching. In Proceedings of the 2013 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Wollongong, Australia, 9–12 July 2013; pp. 997–1002.

- Zhang, Z.; Zhang, S.; Xie, P.; Ma, O. Bioinspired 4D trajectory generation for a UAS rapid point-to-point movement. J. Bionic Eng. 2014, 11, 72–81.

- Zhang, Z.; Xie, P.; Ma, O. Bio-inspired trajectory generation for UAV perching movement based on tau theory. Int. J. Adv. Robot. Syst. 2014, 11, 141.

- Xie, P.; Ma, O.; Zhang, Z. A bio-inspired approach for UAV landing and perching. In Proceedings of the AIAA GNC, Boston, MA, USA, 19–22 August 2013; Volume 13.

- Kendoul, F. Four-dimensional guidance and control of movement using time-to-contact: Application to automated docking and landing of unmanned rotorcraft systems. Int. J. Robot. Res. 2014, 33, 237–267.

- Beyeler, A.; Zufferey, J.C.; Floreano, D. optiPilot: Control of take-off and landing using optic flow. In Proceedings of the 2009 European Micro Air Vehicle conference and competition (EMAV 09), Delft, The Netherlands, 14–17 September 2009.

- Herissé, B.; Hamel, T.; Mahony, R.; Russotto, F.X. Landing a VTOL unmanned aerial vehicle on a moving platform using optical flow. IEEE Trans. Robot. 2012, 28, 77–89.

- Kendoul, F.; Fantoni, I.; Nonami, K. Optic flow-based vision system for autonomous 3D localization and control of small aerial vehicles. Robot. Auton. Syst. 2009, 57, 591–602.

- Thurrowgood, S.; Moore, R.J.; Soccol, D.; Knight, M.; Srinivasan, M.V. A Biologically Inspired, Vision-based Guidance System for Automatic Landing of a Fixed-wing Aircraft. J. Field Robot. 2014, 31, 699–727.

- Chahl, J. Unmanned Aerial Systems (UAS) Research Opportunities. Aerospace 2015, 2, 189–202.

- Duan, H.; Li, P. Bio-Inspired Computation in Unmanned Aerial Vehicles; Springer: Berlin, Germany; Heidelberg, Germany, 2014.

- Evangelista, C.; Kraft, P.; Dacke, M.; Reinhard, J.; Srinivasan, M.V. The moment before touchdown: Landing manoeuvres of the honeybee Apis mellifera. J. Exp. Biol. 2010, 213, 262–270.

- Kannala, J.; Brandt, S.S. A generic camera model and calibration method for conventional, wide-angle, and fish-eye lenses. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1335–1340.

- Goodman, L. Form and Function in the Honey Bee; International Bee Research Association: Bristol, UK, 2003.

- Koenderink, J.J.; van Doorn, A.J. Facts on optic flow. Biol. Cybern. 1987, 56, 247–254.

- Krapp, H.G.; Hengstenberg, R. Estimation of self-motion by optic flow processing in single visual interneurons. Nature 1996, 384, 463–466.

- Krapp, H.G.; Hengstenberg, B.; Hengstenberg, R. Dendritic structure and receptive-field organization of optic flow processing interneurons in the fly. J. Neurophysiol. 1998, 79, 1902–1917.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the IJCAI, Vancouver, BC, Canada, 24–28 August 1981; Volume 81, pp. 674–679.

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 71, 319–331.

- Expert, F.; Ruffier, F. Flying over uneven moving terrain based on optic-flow cues without any need for reference frames or accelerometers. Bioinspir. Biomim. 2015, 10, 026003.

- Maimone, M.; Cheng, Y.; Matthies, L. Two years of visual odometry on the mars exploration rovers. J. Field Robot. 2007, 24, 169–186.

- Strydom, R.; Thurrowgood, S.; Srinivasan, M.V. Visual Odometry: Autonomous UAV Navigation using Optic Flow and Stereo. In Proceedings of the Australasian Conference on Robotics and Automation, Melbourne, Australia, 2–4 December 2014.

- Von Frisch, K. The Dance Language and Orientation of Bees; Harvard University Press: Cambridge, MA, USA, 1967.

- Srinivasan, M.V.; Zhang, S.W.; Altwein, M.; Tautz, J. Honeybee navigation: Nature and calibration of the “odometer”. Science 2000, 287, 851–853.

- Esch, H.E.; Zhang, S.W.; Srinivasan, M.V.; Tautz, J. Honeybee dances communicate distances measured by optic flow. Nature 2001, 411, 581–583.

- Sünderhauf, N.; Protzel, P. Stereo Odometry—A Review of Approaches; Technical Report; Chemnitz University of Technology: Chemnitz, Germany, 2007.

- Alismail, H.; Browning, B.; Dias, M.B. Evaluating pose estimation methods for stereo visual odometry on robots. In Proceedings of the 2010 11th International Conference on Intelligent Autonomous Systems (IAS-11), Ottawa, ON, Canada, 30 August–1 September 2010.

- Badino, H.; Yamamoto, A.; Kanade, T. Visual odometry by multi-frame feature integration. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 2–8 December 2013; pp. 222–229.

- Nistér, D.; Naroditsky, O.; Bergen, J. Visual odometry. In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, CVPR 2004, Washington, DC, USA, 27 June–2 July 2004; Volume 1, pp. I-652–I-659.

- Nistér, D.; Naroditsky, O.; Bergen, J. Visual odometry for ground vehicle applications. J. Field Robot. 2006, 23, 3–20.

- Warren, M.; Corke, P.; Upcroft, B. Long-range stereo visual odometry for extended altitude flight of unmanned aerial vehicles. Int. J. Robot. Res. 2016, 35, 381–403.

- Caballero, F.; Merino, L.; Ferruz, J.; Ollero, A. Vision-based odometry and SLAM for medium and high altitude flying UAVs. J. Intell. Robot. Syst. 2009, 54, 137–161.

- Scaramuzza, D.; Siegwart, R. Appearance-guided monocular omnidirectional visual odometry for outdoor ground vehicles. IEEE Trans. Robot. 2008, 24, 1015–1026.

- More, V.; Kumar, H.; Kaingade, S.; Gaidhani, P.; Gupta, N. Visual odometry using optic flow for Unmanned Aerial Vehicles. In Proceedings of the 2015 International Conference on Cognitive Computing and Information Processing (CCIP), Noida, India, 3–4 March 2015; pp. 1–6.

- Corke, P.; Strelow, D.; Singh, S. Omnidirectional visual odometry for a planetary rover. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2004 (IROS 2004), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 4007–4012.

- Campbell, J.; Sukthankar, R.; Nourbakhsh, I. Techniques for evaluating optical flow for visual odometry in extreme terrain. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2004 (IROS 2004), Sendai, Japan, 28 September–2 October 2004; Volume 4, pp. 3704–3711.

- Scaramuzza, D.; Fraundorfer, F. Visual Odometry. Part 1: The first 30 years and fundamentals. IEEE Robot. Autom. Mag. 2011, 18, 80–92.

- Olson, C.F.; Matthies, L.H.; Schoppers, M.; Maimone, M.W. Robust stereo ego-motion for long distance navigation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head Island, SC, USA, 13–15 June 2000; Volume 2, pp. 453–458.

- Tardif, J.P.; Pavlidis, Y.; Daniilidis, K. Monocular visual odometry in urban environments using an omnidirectional camera. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 2531–2538.

- Heng, L.; Honegger, D.; Lee, G.H.; Meier, L.; Tanskanen, P.; Fraundorfer, F.; Pollefeys, M. Autonomous Visual Mapping and Exploration with a Micro Aerial Vehicle. J. Field Robot. 2014, 31, 654–675.

- Nourani-Vatani, N.; Borges, P.V.K. Correlation-based visual odometry for ground vehicles. J. Field Robot. 2011, 28, 742–768.

- Lemaire, T.; Berger, C.; Jung, I.K.; Lacroix, S. Vision-based slam: Stereo and monocular approaches. Int. J. Comput. Vis. 2007, 74, 343–364.

- Salazar-Cruz, S.; Escareno, J.; Lara, D.; Lozano, R. Embedded control system for a four-rotor UAV. Int. J. Adapt. Control Signal Process. 2007, 21, 189–204.

- Kendoul, F.; Lara, D.; Fantoni, I.; Lozano, R. Real-time nonlinear embedded control for an autonomous quadrotor helicopter. J. Guid. Control Dyn. 2007, 30, 1049–1061.

- Mori, R.; Kubo, T.; Kinoshita, T. Vision-based hovering control of a small-scale unmanned helicopter. In Proceedings of the International Joint Conference SICE-ICASE, Busan, Korea, 18–21 October 2006; pp. 1274–1278.

- Guenard, N.; Hamel, T.; Mahony, R. A practical visual servo control for an unmanned aerial vehicle. IEEE Trans. Robot. 2008, 24, 331–340.

- Lange, S.; Sunderhauf, N.; Protzel, P. A vision based onboard approach for landing and position control of an autonomous multirotor UAV in GPS-denied environments. In Proceedings of the International Conference on Advanced Robotics, 2009, ICAR 2009, Munich, Germany, 22–26 June 2009.

- Yang, S.; Scherer, S.A.; Zell, A. An onboard monocular vision system for autonomous takeoff, hovering and landing of a micro aerial vehicle. J. Intell. Robot. Syst. 2013, 69, 499–515.

- Masselli, A.; Zell, A. A novel marker based tracking method for position and attitude control of MAVs. In Proceedings of the International Micro Air Vehicle Conference and Flight Competition (IMAV), Braunschweig, Germany, 3–6 July 2012.

- Azrad, S.; Kendoul, F.; Nonami, K. Visual servoing of quadrotor micro-air vehicle using color-based tracking algorithm. J. Syst. Des. Dyn. 2010, 4, 255–268.

- Achtelik, M.; Achtelik, M.; Weiss, S.; Siegwart, R. Onboard IMU and monocular vision based control for MAVs in unknown in-and outdoor environments. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011; pp. 3056–3063.

- Shen, S.; Mulgaonkar, Y.; Michael, N.; Kumar, V. Vision-based state estimation for autonomous rotorcraft mavs in complex environments. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 6–10 May 2013; pp. 1758–1764.

- Zeil, J.; Hofmann, M.I.; Chahl, J.S. Catchment areas of panoramic snapshots in outdoor scenes. JOSA A 2003, 20, 450–469.

- Denuelle, A.; Thurrowgood, S.; Kendoul, F.; Srinivasan, M.V. A view-based method for local homing of unmanned rotorcraft. In Proceedings of the 2015 6th International Conference on Automation, Robotics and Applications (ICARA), Queenstown, New Zealand, 17–19 February 2015; pp. 443–449.

- Denuelle, A.; Thurrowgood, S.; Strydom, R.; Kendoul, F.; Srinivasan, M.V. Biologically-inspired visual stabilization of a rotorcraft UAV in unknown outdoor environments. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 1084–1093.

- Denuelle, A.; Strydom, R.; Srinivasan, M.V. Snapshot-based control of UAS hover in outdoor environments. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 1278–1284.

- Romero, H.; Salazar, S.; Lozano, R. Real-time stabilization of an eight-rotor UAV using optical flow. IEEE Trans. Robot. 2009, 25, 809–817.

- Li, P.; Garratt, M.; Lambert, A.; Pickering, M.; Mitchell, J. Onboard hover control of a quadrotor using template matching and optic flow. In Proceedings of the International Conference on Image Processing, Computer Vision, and Pattern Recognition (IPCV). The Steering Committee of The World Congress in Computer Science, Computer Engineering and Applied Computing (WorldComp), Las Vegas, NV, USA, 22–25 July 2013; p. 1.

- Green, W.E.; Oh, P.Y.; Barrows, G. Flying insect inspired vision for autonomous aerial robot maneuvers in near-earth environments. In Proceedings of the 2004 IEEE International Conference on Robotics and Automation, New Orleans, LA, USA, 26 April–1 May 2004; Volume 3, pp. 2347–2352.

- Colonnier, F.; Manecy, A.; Juston, R.; Mallot, H.; Leitel, R.; Floreano, D.; Viollet, S. A small-scale hyperacute compound eye featuring active eye tremor: Application to visual stabilization, target tracking, and short-range odometry. Bioinspir. Biomim. 2015, 10, 026002.

- Li, P.; Garratt, M.; Lambert, A. Monocular Snapshot-based Sensing and Control of Hover, Takeoff, and Landing for a Low-cost Quadrotor. J. Field Robot. 2015, 32, 984–1003.

- Matsumoto, Y.; Inaba, M.; Inoue, H. Visual navigation using view-sequenced route representation. In Proceedings of the 1996 IEEE International Conference on Robotics and Automation, Minneapolis, MN, USA, 22–28 April 1996; Volume 1, pp. 83–88.

- Jones, S.D.; Andresen, C.; Crowley, J.L. Appearance based process for visual navigation. In Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robots and Systems, Grenoble, France, 7–11 September 1997; Volume 2, pp. 551–557.

- Vardy, A. Long-range visual homing. In Proceedings of the 2006 IEEE International Conference on Robotics and Biomimetics, Kunming, China, 17–20 December 2006; pp. 220–226.

- Smith, L.; Philippides, A.; Graham, P.; Baddeley, B.; Husbands, P. Linked local navigation for visual route guidance. Adapt. Behav. 2007, 15, 257–271.

- Labrosse, F. Short and long-range visual navigation using warped panoramic images. Robot. Auton. Syst. 2007, 55, 675–684.

- Zhou, C.; Wei, Y.; Tan, T. Mobile robot self-localization based on global visual appearance features. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation, Taipei, Taiwan, 14–19 September 2003; Volume 1, pp. 1271–1276.

- Argyros, A.A.; Bekris, K.E.; Orphanoudakis, S.C.; Kavraki, L.E. Robot homing by exploiting panoramic vision. Auton. Robot. 2005, 19, 7–25.

- Courbon, J.; Mezouar, Y.; Guenard, N.; Martinet, P. Visual navigation of a quadrotor aerial vehicle. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 10–15 October 2009; pp. 5315–5320.

- Fu, Y.; Hsiang, T.R. A fast robot homing approach using sparse image waypoints. Image Vis. Comput. 2012, 30, 109–121.

- Smith, L.; Philippides, A.; Husbands, P. Navigation in large-scale environments using an augmented model of visual homing. In From Animals to Animats 9; Springer: Berlin, Germany; Heidelberg, Germany, 2006; pp. 251–262.

- Chen, Z.; Birchfield, S.T. Qualitative vision-based mobile robot navigation. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 2686–2692.

- Ohno, T.; Ohya, A.; Yuta, S.I. Autonomous navigation for mobile robots referring pre-recorded image sequence. In Proceedings of the 1996 IEEE/RSJ International Conference on Intelligent Robots and Systems, Osaka, Japan, 4–8 November 1996; Volume 2, pp. 672–679.

- Sagüés, C.; Guerrero, J.J. Visual correction for mobile robot homing. Robot. Auton. Syst. 2005, 50, 41–49.

- Denuelle, A.; Srinivasan, M.V. Snapshot-based Navigation for the Guidance of UAS. In Proceedings of the Australasian Conference on Robotics and Automation, Canberra, Australia, 2–4 December 2015.

- Denuelle, A.; Srinivasan, M.V. A sparse snapshot-based navigation strategy for UAS guidance in natural environments. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3455–3462.

- Rohac, J. Accelerometers and an aircraft attitude evaluation. In Proceedings of the IEEE Sensors, Irvine, CA, USA, 30 October–3 November 2005; p. 6.

- Thurrowgood, S.; Moore, R.J.; Bland, D.; Soccol, D.; Srinivasan, M.V. UAV attitude control using the visual horizon. In Proceedings of the Australasian Conference on Robotics and Automation, Brisbane, Australia, 1–3 December 2010.

- Lai, J.; Ford, J.J.; Mejias, L.; O’Shea, P. Characterization of Sky-region Morphological-temporal Airborne Collision Detection. J. Field Robot. 2013, 30, 171–193.

- Nussberger, A.; Grabner, H.; Van Gool, L. Aerial object tracking from an airborne platform. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014.

- Wenzel, K.E.; Rosset, P.; Zell, A. Low-cost visual tracking of a landing place and hovering flight control with a microcontroller. J. Intell. Robot. Syst. 2010, 57, 297.

- Choi, J.H.; Lee, D.; Bang, H. Tracking an unknown moving target from UAV: Extracting and localizing an moving target with vision sensor based on optical flow. In Proceedings of the 2011 5th International Conference on Automation, Robotics and Applications (ICARA), Wellington, New Zealand, 6–8 December 2011.

- Pinto, A.M.; Costa, P.G.; Moreira, A.P. Introduction to Visual Motion Analysis for Mobile Robots. In CONTROLO’2014–Proceedings of the 11th Portuguese Conference on Automatic Control; Springer: Berlin, Germany; Heidelberg, Germany, 2015; pp. 545–554.

- Elhabian, S.Y.; El-Sayed, K.M.; Ahmed, S.H. Moving object detection in spatial domain using background removal techniques-state-of-art. Recent Pat. Comput. Sci. 2008, 1, 32–54.

- Spagnolo, P.; Leo, M.; Distante, A. Moving object segmentation by background subtraction and temporal analysis. Image Vis. Comput. 2006, 24, 411–423.

- Hayman, E.; Eklundh, J.O. Statistical background subtraction for a mobile observer. In Proceedings of the Ninth IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003; pp. 67–74.

- Sheikh, Y.; Javed, O.; Kanade, T. Background subtraction for freely moving cameras. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1219–1225.

- Kim, I.S.; Choi, H.S.; Yi, K.M.; Choi, J.Y.; Kong, S.G. Intelligent visual surveillance—A survey. Int. J. Control Autom. Syst. 2010, 8, 926–939.

- Thakoor, N.; Gao, J.; Chen, H. Automatic object detection in video sequences with camera in motion. In Proceedings of the Advanced Concepts for Intelligent Vision Systems, Brussels, Belgium, 31 August–3 September 2004.

- Pinto, A.M.; Moreira, A.P.; Correia, M.V.; Costa, P.G. A flow-based motion perception technique for an autonomous robot system. J. Intell. Robot. Syst. 2014, 75, 475–492.

- Strydom, R.; Thurrowgood, S.; Srinivasan, M.V. Airborne vision system for the detection of moving objects. In Proceedings of the Australasian Conference on Robotics and Automation, Sydney, Australia, 2–4 December 2013.

- Dey, S.; Reilly, V.; Saleemi, I.; Shah, M. Detection of independently moving objects in non-planar scenes via multi-frame monocular epipolar constraint. In Computer Vision–ECCV 2012; Springer: Berlin, Germany; Heidelberg, Germany, 2012; pp. 860–873.

- Strydom, R.; Thurrowgood, S.; Srinivasan, M.V. TCM: A Fast Technique to Determine if an Object is Moving or Stationary from a UAV. In Proceedings of the Australasian Conference on Robotics and Automation, Canberra, Australia, 2–4 December 2015.

- Strydom, R.; Thurrowgood, S.; Denuelle, A.; Srinivasan, M.V. TCM: A Vision-Based Algorithm for Distinguishing Between Stationary and Moving Objects Irrespective of Depth Contrast from a UAS. Int. J. Adv. Robot. Syst. 2016.

- Garratt, M.; Pota, H.; Lambert, A.; Eckersley-Masline, S.; Farabet, C. Visual tracking and lidar relative positioning for automated launch and recovery of an unmanned rotorcraft from ships at sea. Nav. Eng. J. 2009, 121, 99–110.

- Strydom, R.; Thurrowgood, S.; Denuelle, A.; Srinivasan, M.V. UAV Guidance: A Stereo-Based Technique for Interception of Stationary or Moving Targets. In Towards Autonomous Robotic Systems: 16th Annual Conference, TAROS 2015, Liverpool, UK, September 8–10, 2015, Proceedings; Dixon, C., Tuyls, K., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 258–269.

- Hou, Y.; Yu, C. Autonomous target localization using quadrotor. In Proceedings of the 26th Chinese Control and Decision Conference (2014 CCDC), Changsha, China, 31 May–2 June 2014; pp. 864–869.

- Li, W.; Zhang, T.; Kuhnlenz, K. A vision-guided autonomous quadrotor in an air-ground multi-robot system. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation (ICRA), Shanghai, China, 9–13 May 2011.

- Teuliere, C.; Eck, L.; Marchand, E. Chasing a moving target from a flying UAV. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 4929–4934.

- Land, M.F.; Collett, T. Chasing behaviour of houseflies (Fannia canicularis). J. Comp. Physiol. 1974, 89, 331–357.

- Collett, T.S.; Land, M.F. Visual control of flight behaviour in the hoverfly, Syritta pipiens L. J. Comp. Physiol. 1975, 99, 1–66.

- Collett, T.S.; Land, M.F. How hoverflies compute interception courses. J. Comp. Physiol. 1978, 125, 191–204.

- Olberg, R.; Worthington, A.; Venator, K. Prey pursuit and interception in dragonflies. J. Comp. Physiol. A 2000, 186, 155–162.

- Kane, S.A.; Zamani, M. Falcons pursue prey using visual motion cues: New perspectives from animal-borne cameras. J. Exp. Biol. 2014, 217, 225–234.

- Ghose, K.; Horiuchi, T.K.; Krishnaprasad, P.; Moss, C.F. Echolocating bats use a nearly time-optimal strategy to intercept prey. PLoS Biol. 2006, 4, e108.

- Strydom, R.; Singh, S.P.; Srinivasan, M.V. Biologically inspired interception: A comparison of pursuit and constant bearing strategies in the presence of sensorimotor delay. In Proceedings of the 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO), Zhuhai, China, 6–9 December 2015; pp. 2442–2448.

- Lavretsky, E.; Wise, K.A. Optimal Control and the Linear Quadratic Regulator. In Robust and Adaptive Control; Springer: London, UK, 2013; pp. 27–50. [Google Scholar]

- Mattingley, J.; Wang, Y.; Boyd, S. Receding horizon control. IEEE Control Syst. 2011, 31, 52–65. [Google Scholar] [CrossRef]

| UAS Type | Approx. Size (m) | Endurance | Primary Function |
|---|---|---|---|
| Global Hawk [1] | 14 (length), 40 (wingspan) | 32+ h | Surveillance |
| Hummingbird [2] | 11 (length), 11 (wingspan) | 24 h | Military reconnaissance |
| Predator [3] | 8.2 (length), 14.8 (wingspan) | 24 h | Military reconnaissance and air strike |
| Scan Eagle [4] | 1.6 (length), 3.1 (wingspan) | 24+ h | Surveillance |
| Raven [5] | 0.9 (length), 1.4 (wingspan) | 60–90 min | Communications |
| Air Robot [6] | 1.0 (diameter) | 25 min | Surveillance and inspection |
| Phantom 3 [7] | 0.35 (diagonal length excluding propellers) | 23 min (max) | Aerial imaging |

| Method | Platform | Path Length | Number of Tests | Average Error (%) |
|---|---|---|---|---|
| Feature-based stereo matching [75] | Ground vehicle | 20 m | 1 | 1.3 |
| Feature-based monocular/stereo matching [66] | Ground vehicle | 186 m; 266 m; 365 m | 3 | 1.4 |
| OF-FTF and stereo [59] | UAS | 46 m; 50 m | 15 of each path length | 1.7 |
| Maimone [58] | Ground vehicle | 24 m; 29 m | 2 | 2.0 |
| Feature-based monocular/stereo matching [67] | Ground vehicle | 186 m; 266 m; 365 m | 3 | 2.5 |
| Monocular visual SLAM using SIFT features [76] | Ground vehicle | 784 m; 2434 m | 2 | 2.7 |
| Optic flow [77] | UAS | 94 m; 83 m | 2 | 3.2 |
| Template matching [78] | Ground vehicle | 10 m; 20 m; 50 m; 100 m | 8 of each path length | 3.3 |
| Stereo/monocular interest point matching SLAM [79] | UAS/Ground vehicle | 100 m | 2 | 3.5 |
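The "Average Error (%)" column is not defined in the table itself. A common convention in the odometry literature, assumed here, is the end-point position error divided by the distance travelled. Under that assumption, the minimal Python sketch below computes the metric and inverts it to estimate absolute drift; the function names are hypothetical and not taken from the cited works.

```python
def percent_error(endpoint_error_m: float, path_length_m: float) -> float:
    """Odometry error as a percentage of path length (assumed definition)."""
    if path_length_m <= 0:
        raise ValueError("path length must be positive")
    return 100.0 * endpoint_error_m / path_length_m


def average_percent_error(trials):
    """Mean of per-trial percentage errors; trials is a list of (error_m, length_m)."""
    if not trials:
        raise ValueError("need at least one trial")
    return sum(percent_error(err, length) for err, length in trials) / len(trials)


def implied_drift_m(avg_percent: float, path_length_m: float) -> float:
    """Invert the metric: end-point error implied by a percentage over a given path."""
    return avg_percent / 100.0 * path_length_m


# Usage: the 1.7% reported for OF-FTF and stereo [59], applied to its 50 m path.
print(f"{implied_drift_m(1.7, 50.0):.2f} m")  # prints "0.85 m"
```

Under this reading, 1.7% over the 50 m path corresponds to roughly 0.85 m of accumulated drift, which makes the rows easier to compare across very different path lengths.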

| Method Reference | Environment | Static Target: Test Height (m) | Static Target: Mean Error (m) | Static Target: Std. Error or RMSE (m) | Static Target: Angular Error (°) | Moving Target: Test Height (m) | Moving Target: Mean Error (m) | Moving Target: Std. Error or RMSE (m) | Moving Target: Angular Error (°) |
|---|---|---|---|---|---|---|---|---|---|
| Yang et al. [85] | Indoor | 1.00 | 0.03 | NA | 1.97 | NA | NA | NA | NA |
| Hou et al. [134] | Indoor | 0.70 | 0.04 | NA | 2.86 | NA | NA | NA | NA |
| Guenard et al. [83] | Indoor | 1.40 | 0.10 | NA | 4.09 | NA | NA | NA | NA |
| Masselli et al. [86] | Indoor | 0.80 | 0.11 | 0.08 | 5.74 | NA | NA | NA | NA |
| Azrad et al. [87] | Outdoor | 5.00 | 2.00 | NA | 21.80 | NA | NA | NA | NA |
| Lange et al. [84] | Indoor | 0.70 | 0.29 | NA | 22.29 | NA | NA | NA | NA |
| Wenzel et al. [118] | Indoor | NA | NA | NA | NA | 0.25 | 0.02 | 0.07 | 14.90 |
| Li et al. [135] | Indoor | NA | NA | NA | NA | 1.00 | 0.38 | NA | 20.81 |
| Teuliere et al. [136] | Indoor | 2.00 | 0.10 | NA | 2.86 | 0.70 | 0.30 | NA | 23.20 |
| Strydom et al. [133] | Outdoor | 2.00 | 0.01 | 0.32 | 9.09 | 2.00 | 0.14 | 0.24 | 6.84 |
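The table does not state how the angular error is derived, but the tabulated values are consistent, up to rounding of the listed errors, with the angle subtended at the camera by the position error at the test height, θ = arctan(e/h) in degrees. Here e appears to be the Std. Error or RMSE where one is reported and the mean error otherwise; for example, arctan(0.10/2.00) ≈ 2.86° for the static case of Teuliere et al. and arctan(0.32/2.00) ≈ 9.09° for the static case of Strydom et al. The Python sketch below reproduces that reading. The fallback rule and the function name `angular_error_deg` are inferred from the numbers and are hypothetical, not a definition given in the source.

```python
import math
from typing import Optional


def angular_error_deg(height_m: float, mean_err_m: float,
                      spread_err_m: Optional[float] = None) -> float:
    """Angle (degrees) subtended at test height h by a position error e.

    Assumed reading of the table: e is the Std. Error/RMSE when one is
    reported, otherwise the mean error.
    """
    if height_m <= 0:
        raise ValueError("test height must be positive")
    err = spread_err_m if spread_err_m is not None else mean_err_m
    return math.degrees(math.atan2(err, height_m))


rows = [
    # (label, test height m, mean error m, std. error/RMSE m or None)
    ("Teuliere et al. [136], static", 2.00, 0.10, None),
    ("Azrad et al. [87], static", 5.00, 2.00, None),
    ("Strydom et al. [133], static", 2.00, 0.01, 0.32),
    ("Strydom et al. [133], moving", 2.00, 0.14, 0.24),
]
for label, h, mean_e, spread_e in rows:
    # Prints 2.86, 21.80, 9.09 and 6.84 deg respectively.
    print(f"{label}: {angular_error_deg(h, mean_e, spread_e):.2f} deg")
```

Normalising by height in this way makes results obtained at 0.25 m indoors and at 5 m outdoors roughly comparable, since a fixed metric error matters less the farther the camera is from the target.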

| Task | Technique | Section |
|---|---|---|
| Hover | OF-FTF, OF-SM and ICE | 3.2.1 and 3.2.2 |
| Landing | OF-FTF | 3.1.2 |
| Odometry | OF-FTF and Stereo | 3.1.1 |
| Classifying target motion | Optic flow and expansion cues | 4.2 |
| Target pursuit | Stereo | 5.1 |
| Target pursuit in the presence of sensorimotor delay | Simple pursuit and constant bearing | 5.2 and 5.3 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Strydom, R.; Denuelle, A.; Srinivasan, M.V. Bio-Inspired Principles Applied to the Guidance, Navigation and Control of UAS. Aerospace 2016, 3, 21. https://doi.org/10.3390/aerospace3030021

