Towards Sensor-Actuator Coupling in an Automated Order Picking System by Detecting Sealed Seams on Pouch Packed Goods

Abstract

1. Introduction

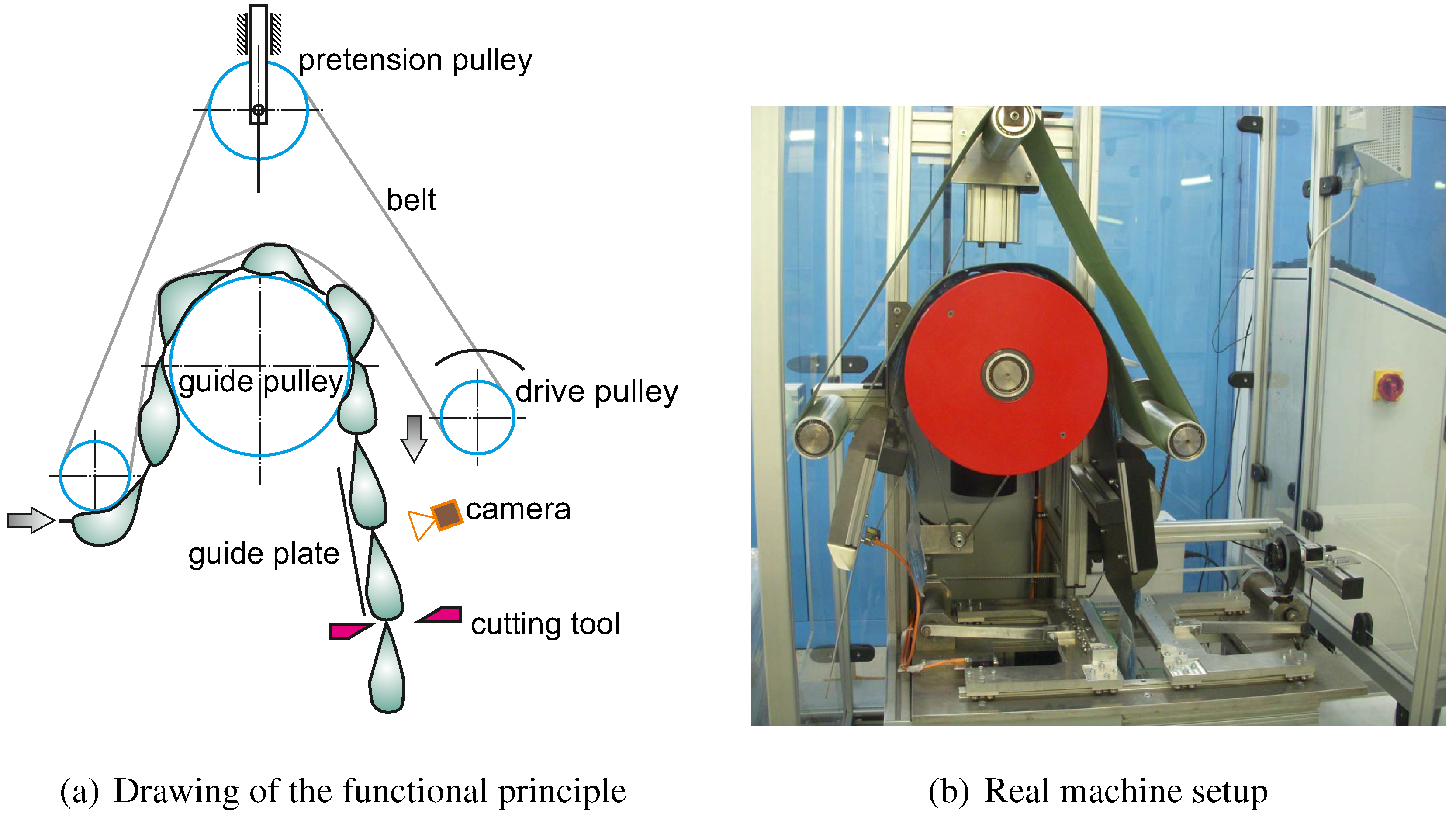

2. State of the Art

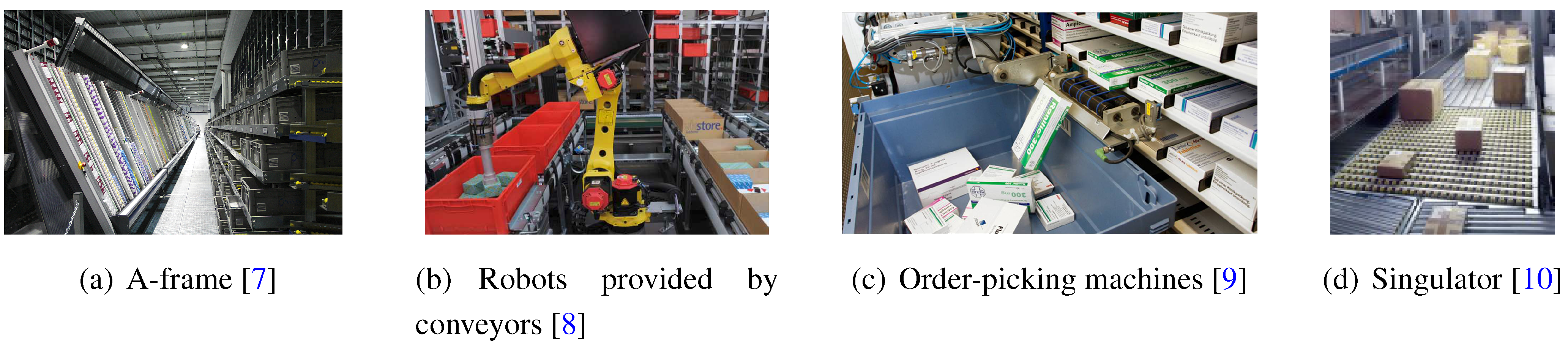

2.1. Fully Automated Order Picking

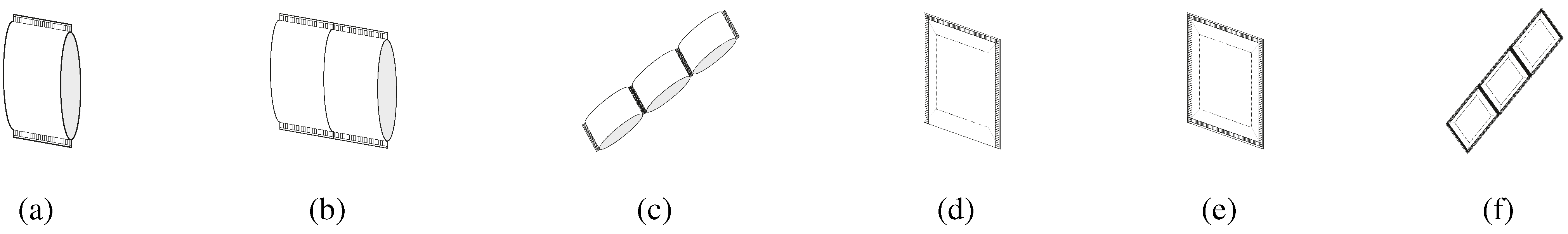

2.2. Types of Pouches in Industrial Systems

2.3. Cutting Line Detection

- binary registration (print) mark sensors based on contrast or color (e.g., SICK KT 10-2),

- binary camera-based sensors,

- camera systems (e.g., SICK IVC-2D or IVC-3D),

- and sensors for detection of streams of marks.
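The principle behind the first sensor type, a binary contrast-based registration mark sensor, can be sketched in a few lines of Python. Reflectance readings sampled along the moving film are thresholded, and the position where each dark run begins is reported as a mark. This is a hypothetical sketch. The function name `detect_marks`, the midpoint threshold between the darkest and brightest readings, and the `min_width` noise filter are assumptions for illustration. They do not describe how the SICK KT 10-2 or any other commercial sensor works internally.

```python
from typing import List, Optional, Sequence


def detect_marks(profile: Sequence[float], min_width: int = 2) -> List[int]:
    """Return start indices of dark marks in a 1-D reflectance profile.

    Hypothetical sketch of a binary contrast sensor: a sample counts as
    "mark" when it is darker than the midpoint between the brightest and
    darkest readings. Dark runs shorter than ``min_width`` samples are
    discarded as noise.
    """
    if not profile:
        return []
    lo, hi = min(profile), max(profile)
    if hi == lo:  # no contrast at all, so the sensor never switches
        return []
    threshold = (lo + hi) / 2.0
    binary = [value < threshold for value in profile]  # True = dark mark

    starts: List[int] = []
    run_start: Optional[int] = None
    # The appended False acts as a sentinel that closes a run at the end.
    for i, is_mark in enumerate(binary + [False]):
        if is_mark and run_start is None:
            run_start = i
        elif not is_mark and run_start is not None:
            if i - run_start >= min_width:
                starts.append(run_start)
            run_start = None
    return starts


if __name__ == "__main__":
    # Bright film (~200) with two dark marks (~30) and a one-sample glitch.
    film = [200] * 5 + [30] * 3 + [200] * 4 + [35] + [200] * 4 + [30] * 3 + [200] * 2
    print(detect_marks(film))  # [5, 17]
```

A fixed midpoint threshold assumes that the sampled profile contains both film and mark. Many commercial contrast sensors instead use a teach-in step on known background and mark colors, which this sketch does not model.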

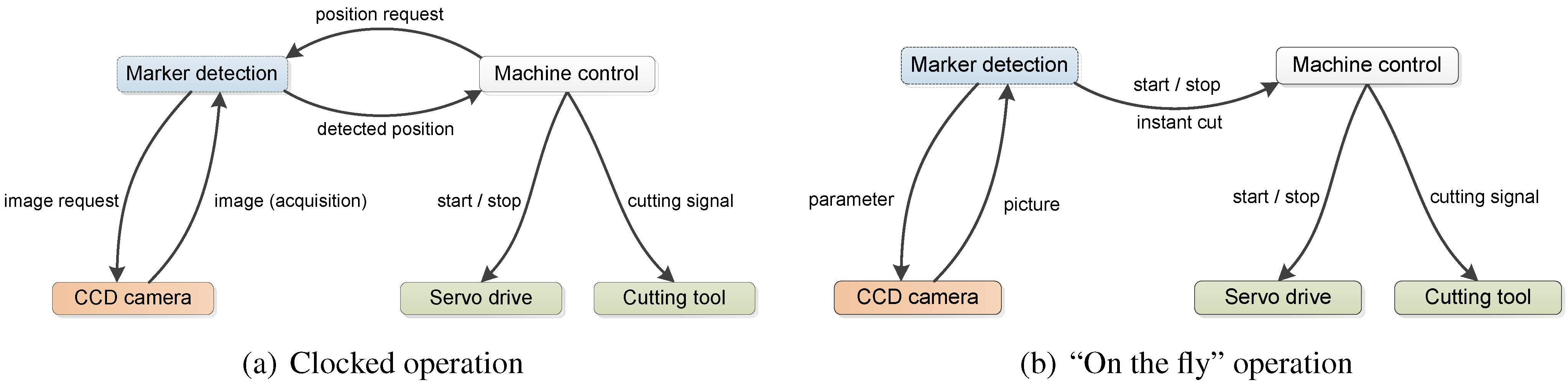

3. Automated Order Picking System

4. Marker Detection System

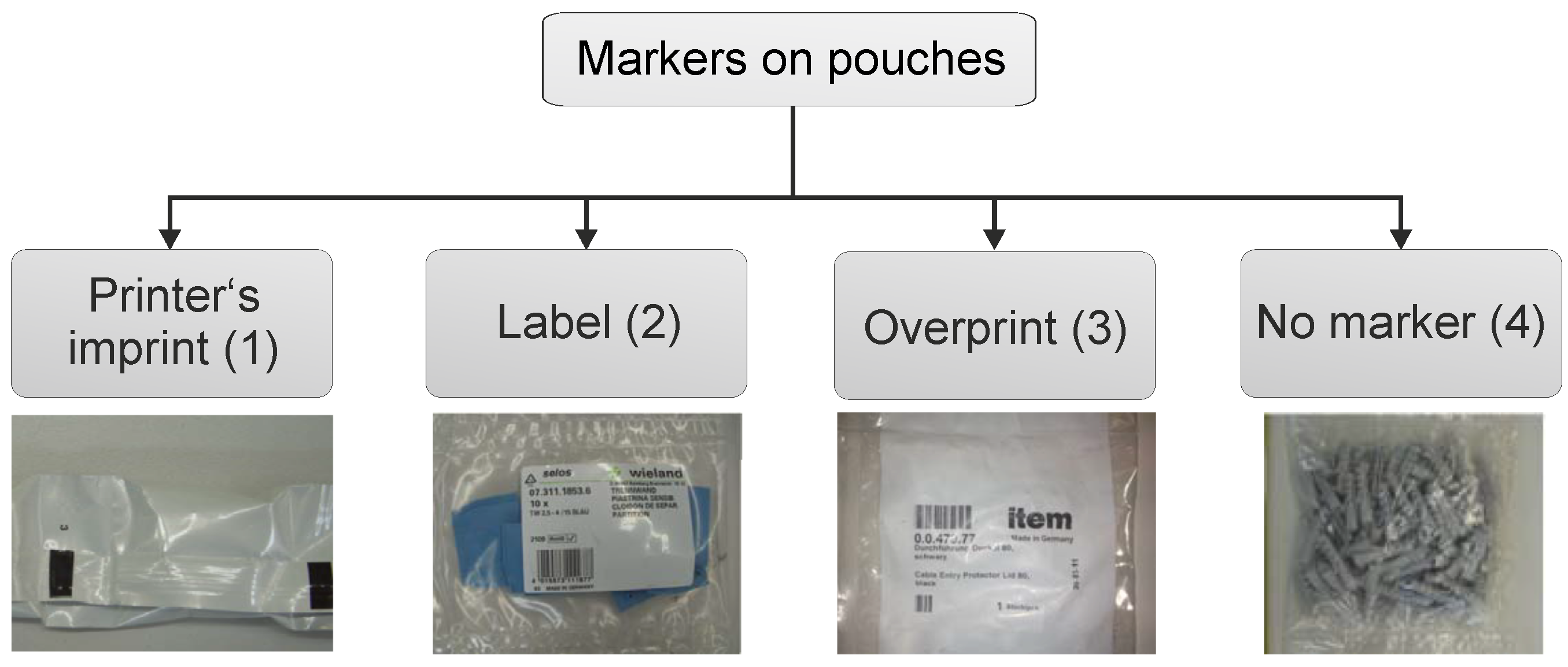

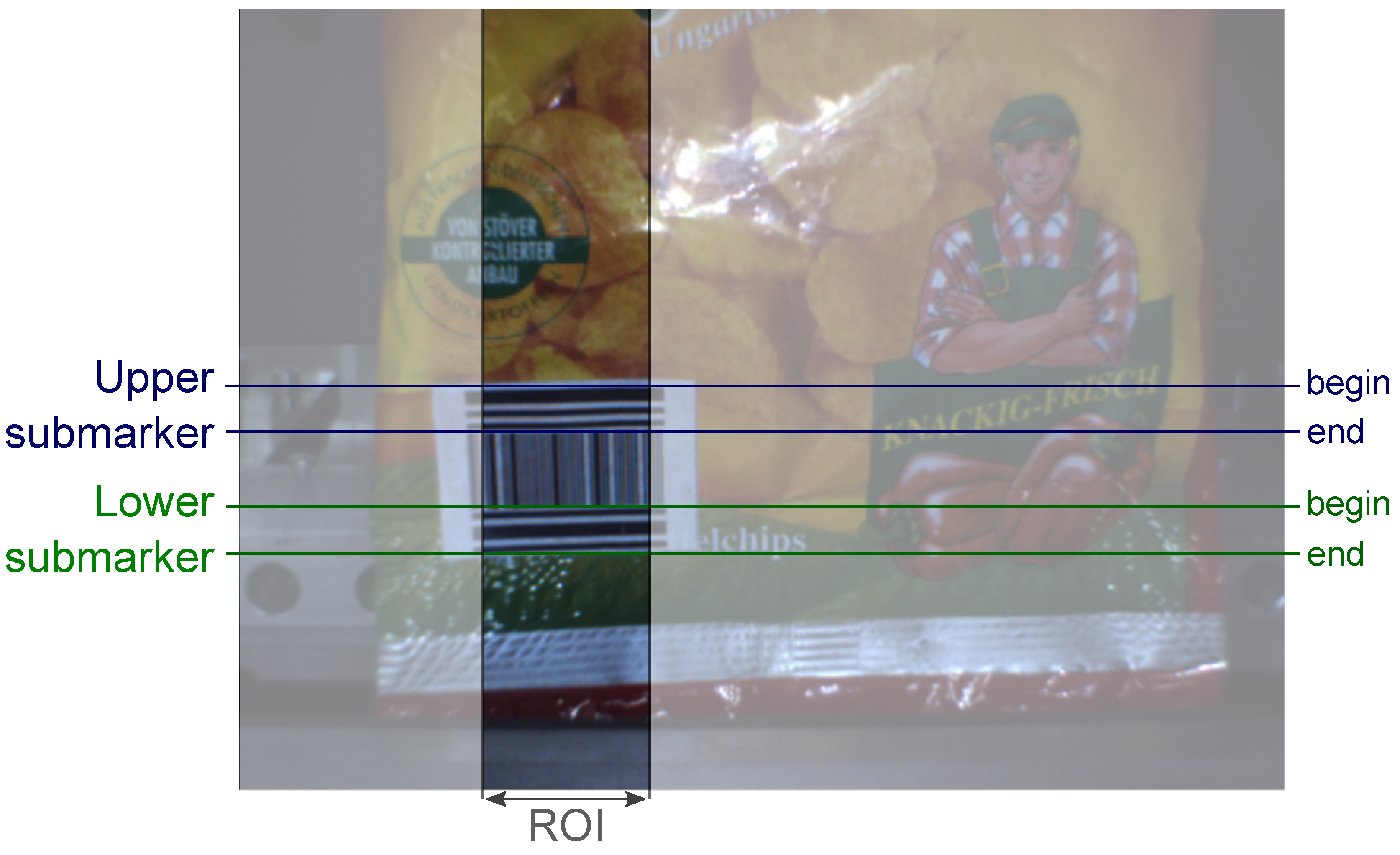

4.1. Marker Detection

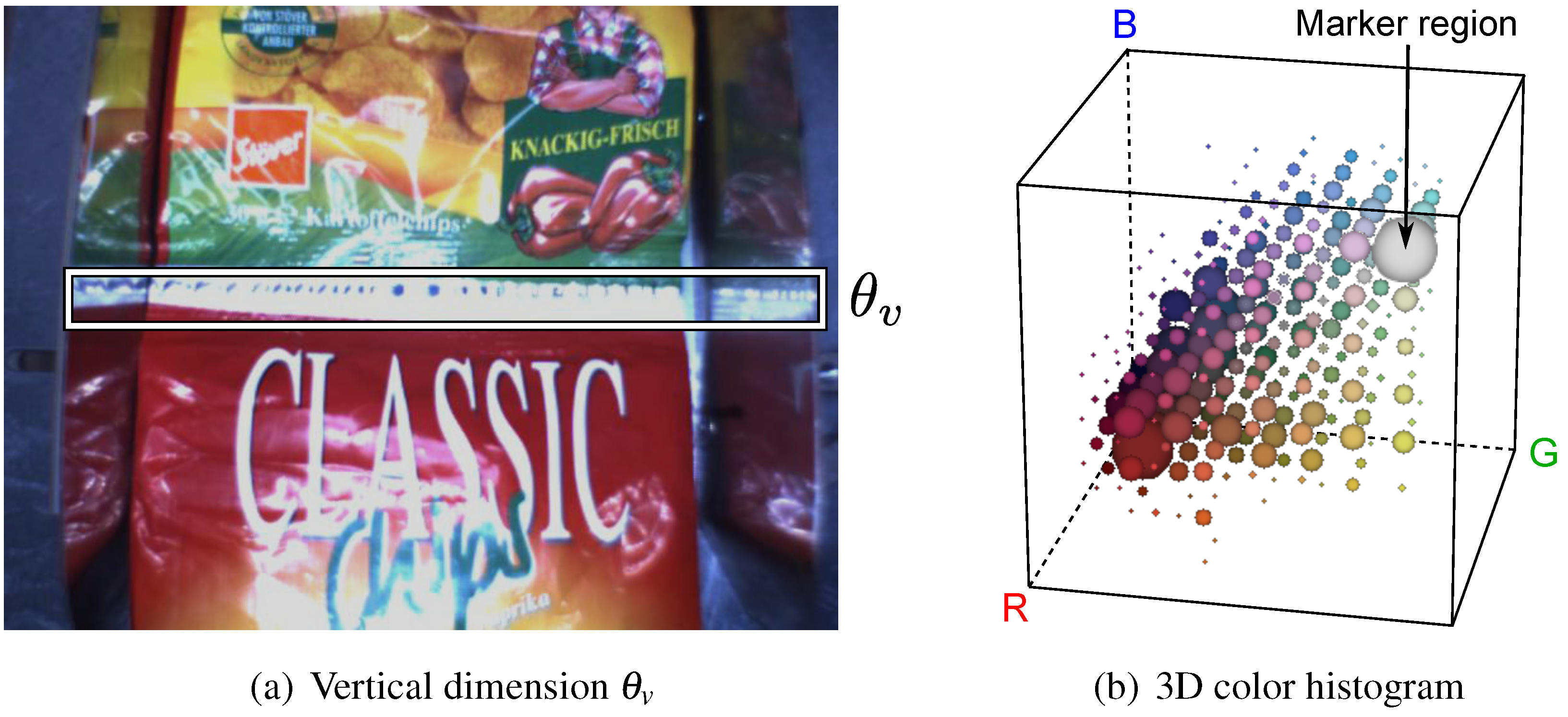

4.2. Color Marker Detection

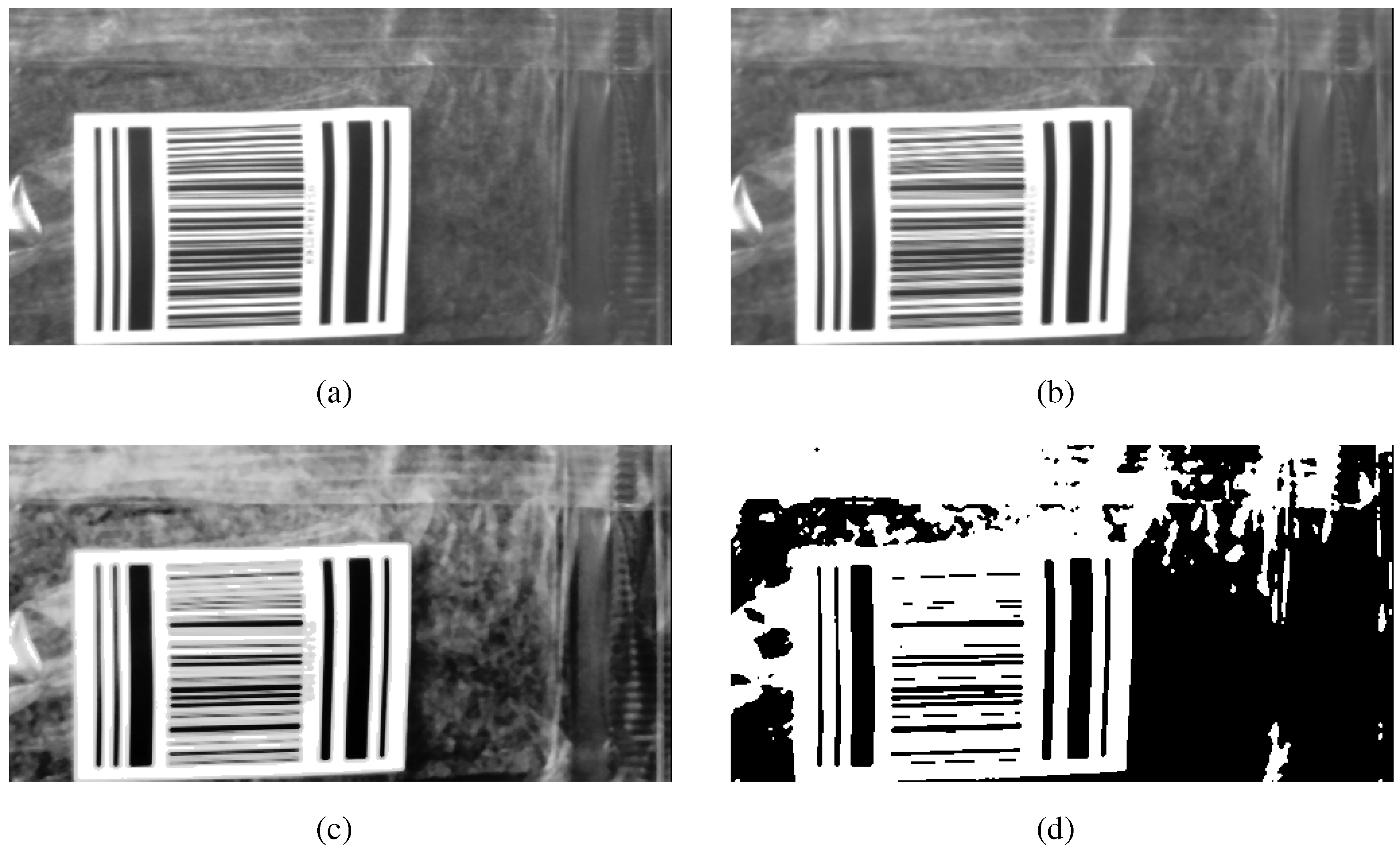

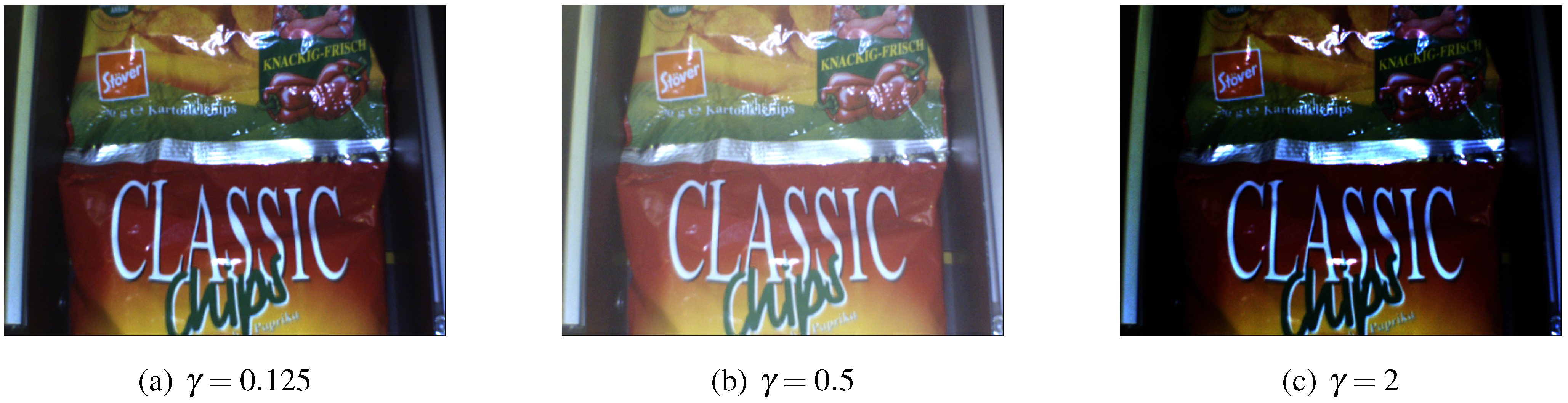

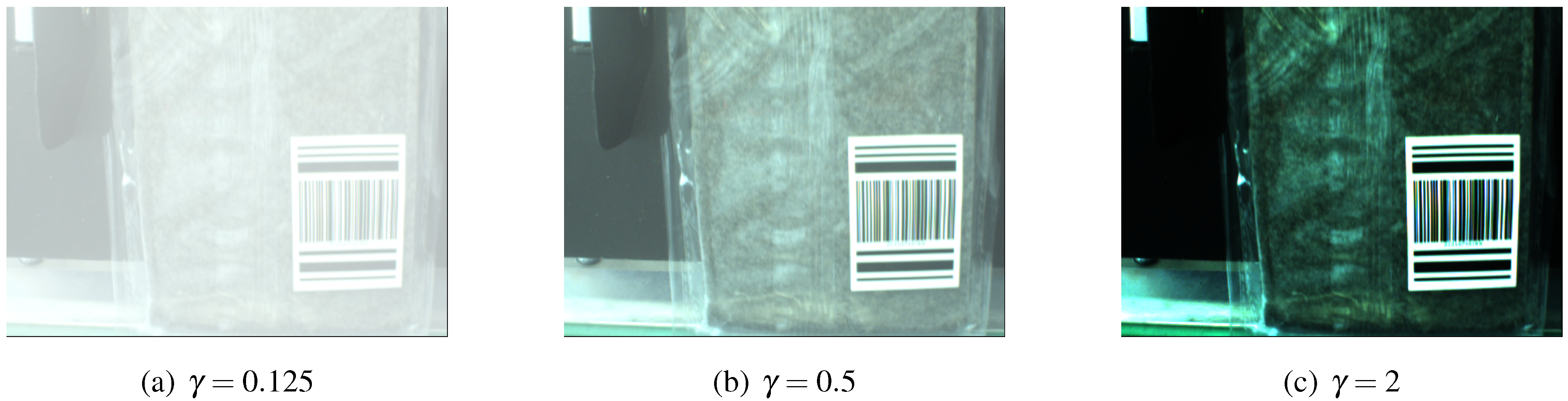

4.2.1. Preprocessing

4.2.2. Histogram-Enhanced Template Matching (Color-Marker_Template)

- red interval: the interval containing the red-channel intensity values of the subquad.

- green interval: the interval containing the green-channel intensity values of the subquad.

- blue interval: the interval containing the blue-channel intensity values of the subquad.
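The interval-based matching idea can be sketched as follows, under clearly stated assumptions. The template is split into a regular `grid` x `grid` layout of subquads, and each subquad is summarized by the min/max interval of its red, green and blue intensities. A candidate image window is then scored by the fraction of its pixels that fall inside all three intervals of the corresponding subquad. The function names (`subquad_intervals`, `interval_score`, `best_match`), the regular grid, the min/max interval definition and the exhaustive search are illustrative choices, not the authors' implementation of Color-Marker_Template.

```python
import numpy as np


def _edges(length: int, grid: int) -> np.ndarray:
    """Integer split points dividing ``length`` into ``grid`` parts."""
    return np.linspace(0, length, grid + 1).astype(int)


def subquad_intervals(template: np.ndarray, grid: int = 2) -> np.ndarray:
    """Per-subquad (low, high) interval of each RGB channel.

    ``template`` is an H x W x 3 array. The result has shape
    (grid, grid, 3, 2); the last axis holds (min, max) per channel.
    """
    h, w, _ = template.shape
    ys, xs = _edges(h, grid), _edges(w, grid)
    out = np.zeros((grid, grid, 3, 2), dtype=template.dtype)
    for r in range(grid):
        for c in range(grid):
            patch = template[ys[r]:ys[r + 1], xs[c]:xs[c + 1]].reshape(-1, 3)
            out[r, c, :, 0] = patch.min(axis=0)
            out[r, c, :, 1] = patch.max(axis=0)
    return out


def interval_score(window: np.ndarray, intervals: np.ndarray) -> float:
    """Mean over subquads of the fraction of pixels whose R, G and B values
    all lie inside that subquad's template intervals (1.0 = perfect fit)."""
    grid = intervals.shape[0]
    h, w, _ = window.shape
    ys, xs = _edges(h, grid), _edges(w, grid)
    scores = []
    for r in range(grid):
        for c in range(grid):
            patch = window[ys[r]:ys[r + 1], xs[c]:xs[c + 1]].reshape(-1, 3)
            low, high = intervals[r, c, :, 0], intervals[r, c, :, 1]
            inside = np.all((patch >= low) & (patch <= high), axis=1)
            scores.append(inside.mean())
    return float(np.mean(scores))


def best_match(image: np.ndarray, template: np.ndarray, grid: int = 2):
    """Exhaustive sliding-window search; returns ((row, col), score)."""
    th, tw, _ = template.shape
    intervals = subquad_intervals(template, grid)
    best = ((0, 0), -1.0)
    for y in range(image.shape[0] - th + 1):
        for x in range(image.shape[1] - tw + 1):
            s = interval_score(image[y:y + th, x:x + tw], intervals)
            if s > best[1]:
                best = ((y, x), s)
    return best


if __name__ == "__main__":
    # 4x4 two-color marker: red top half, blue bottom half.
    marker = np.zeros((4, 4, 3), dtype=np.uint8)
    marker[:2] = (220, 20, 20)
    marker[2:] = (20, 20, 220)
    scene = np.full((10, 12, 3), 128, dtype=np.uint8)  # gray background
    scene[3:7, 5:9] = marker
    print(best_match(scene, marker))  # ((3, 5), 1.0)
```

A plain min/max interval is sensitive to single outlier pixels in the template. Percentile-based bounds would be one way to make it more robust, at the cost of an extra parameter.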

4.3. User-Defined Marker Detection

4.3.1. User-Defined Marker Design

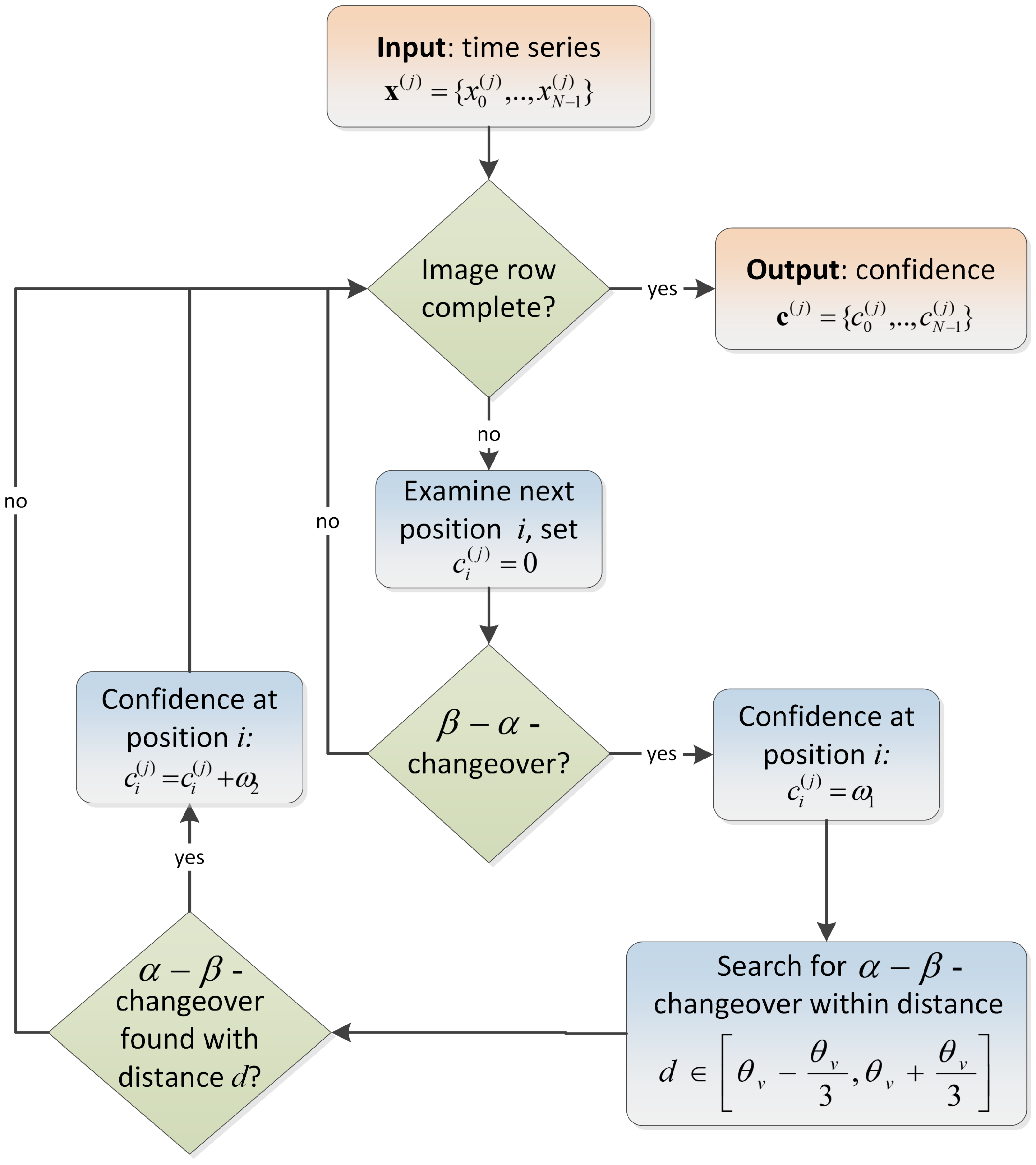

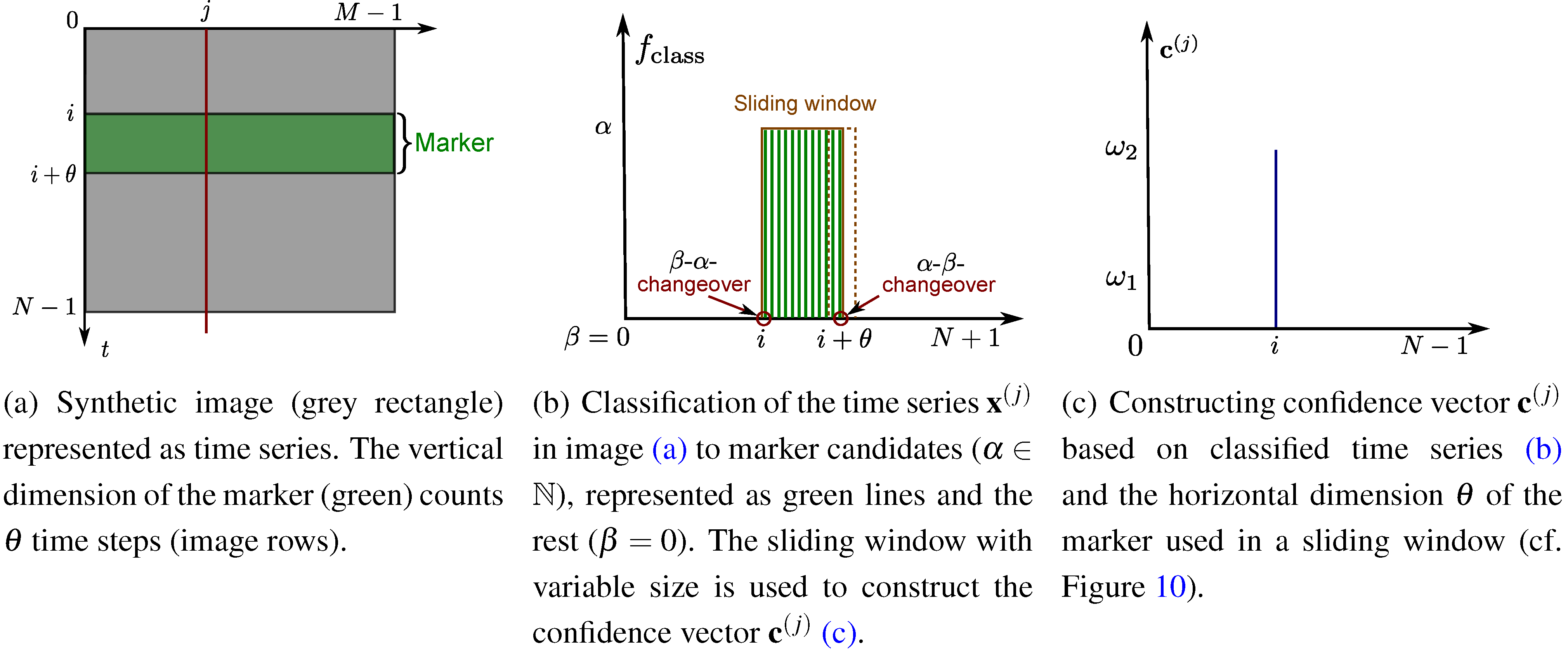

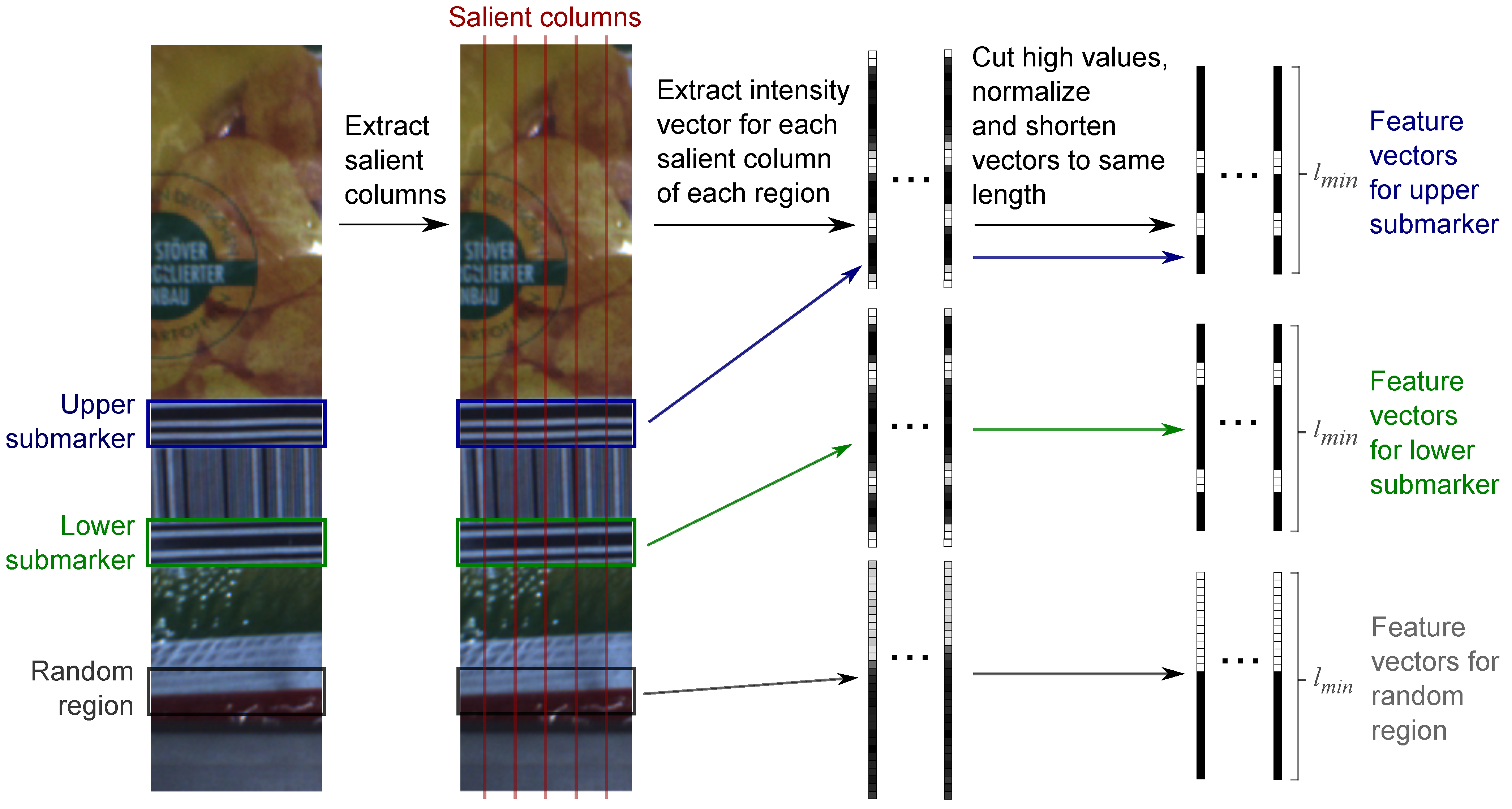

4.3.2. Machine Learning Based Marker Detection

4.3.3. Template Matching Based Marker Detection

5. Evaluation

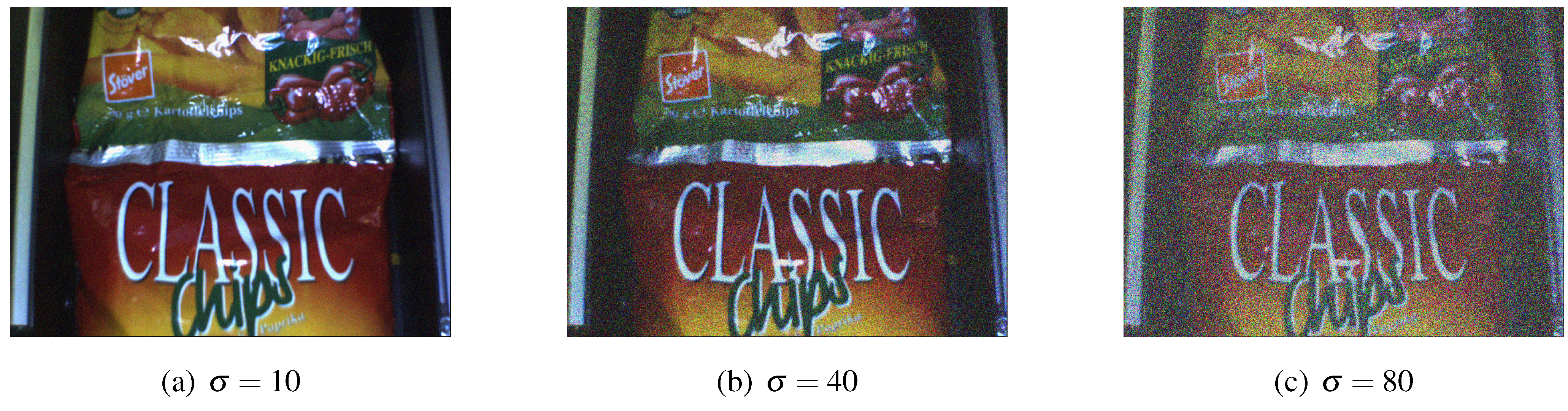

5.1. Vision System Setup

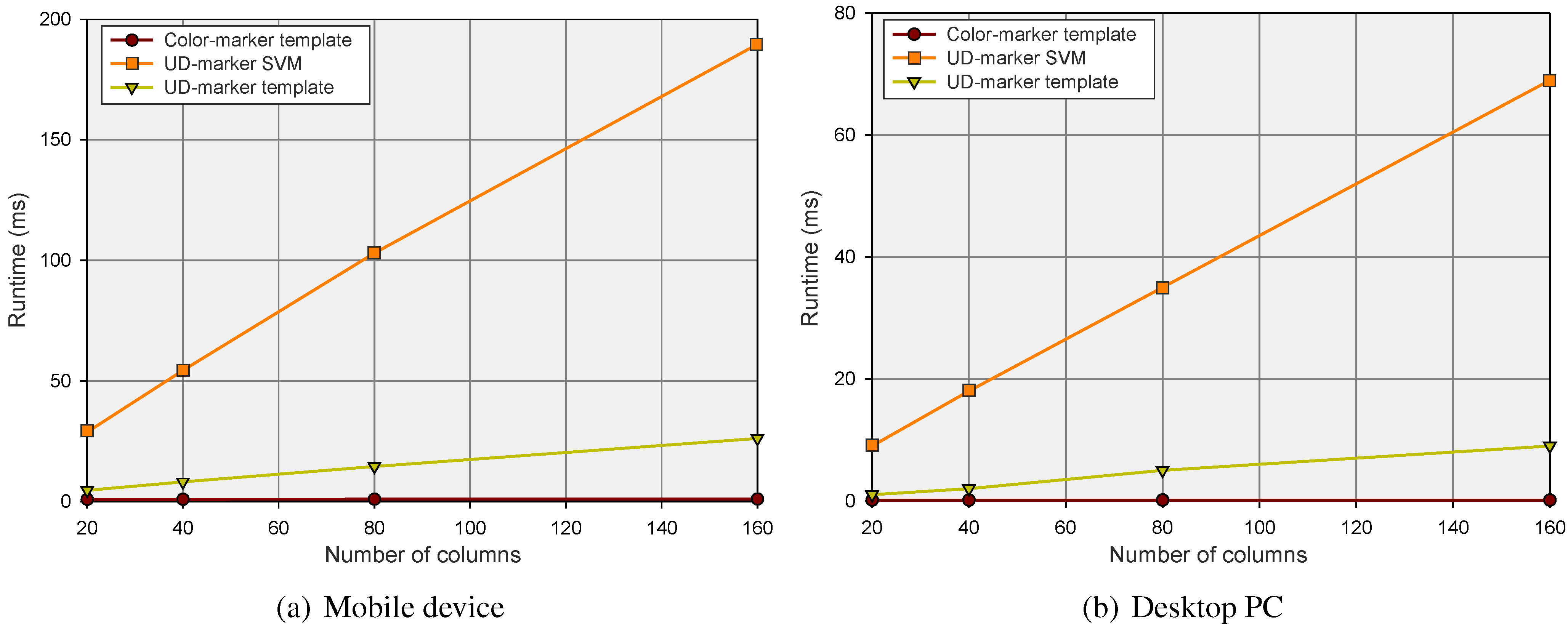

5.2. Detection Quality, Cutting Line Accuracy and Runtime

5.2.1. Detection Quality

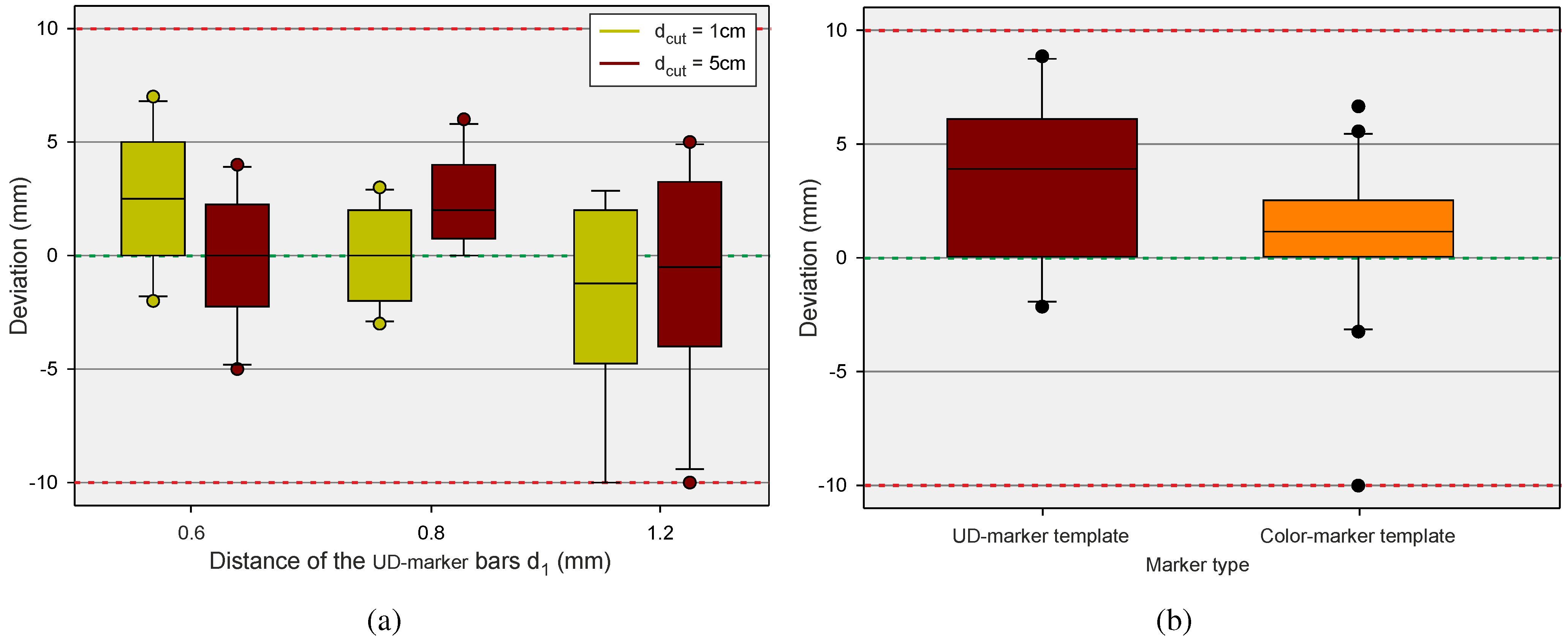

5.2.2. Cutting Line Accuracy

5.2.3. Runtime Performance

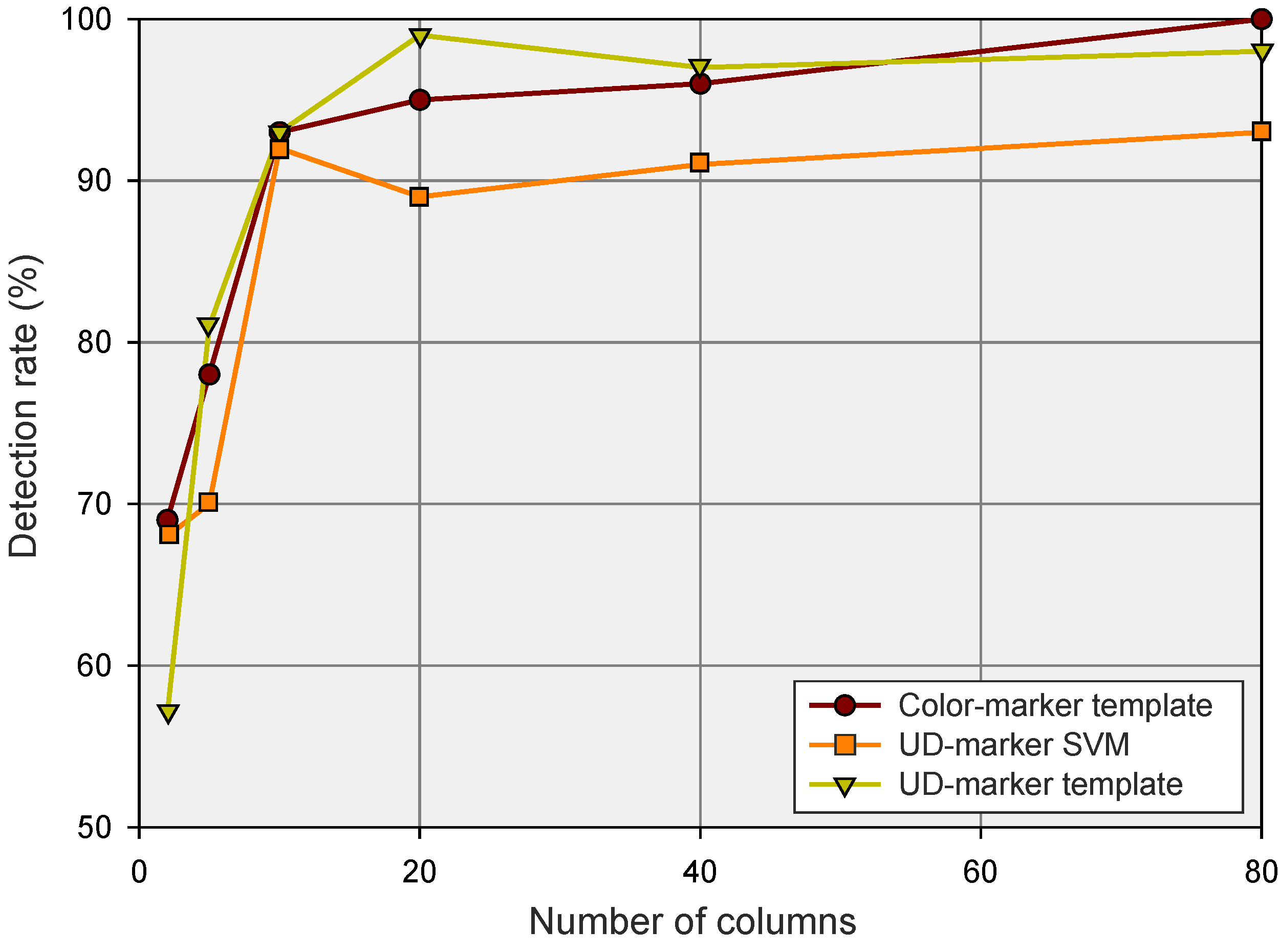

5.3. Trade-off Considerations: Detection Quality vs. Number of Columns

6. Conclusion

Author Contributions

Conflicts of Interest

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Weichert, F.; Böckenkamp, A.; Prasse, C.; Timm, C.; Rudak, B.; Hölscher, K.; Hompel, M.T. Towards Sensor-Actuator Coupling in an Automated Order Picking System by Detecting Sealed Seams on Pouch Packed Goods. J. Sens. Actuator Netw. 2014, 3, 245-273. https://doi.org/10.3390/jsan3040245