Robust Indoor Mobile Localization with a Semantic Augmented Route Network Graph

Abstract

1. Introduction

2. Related Works

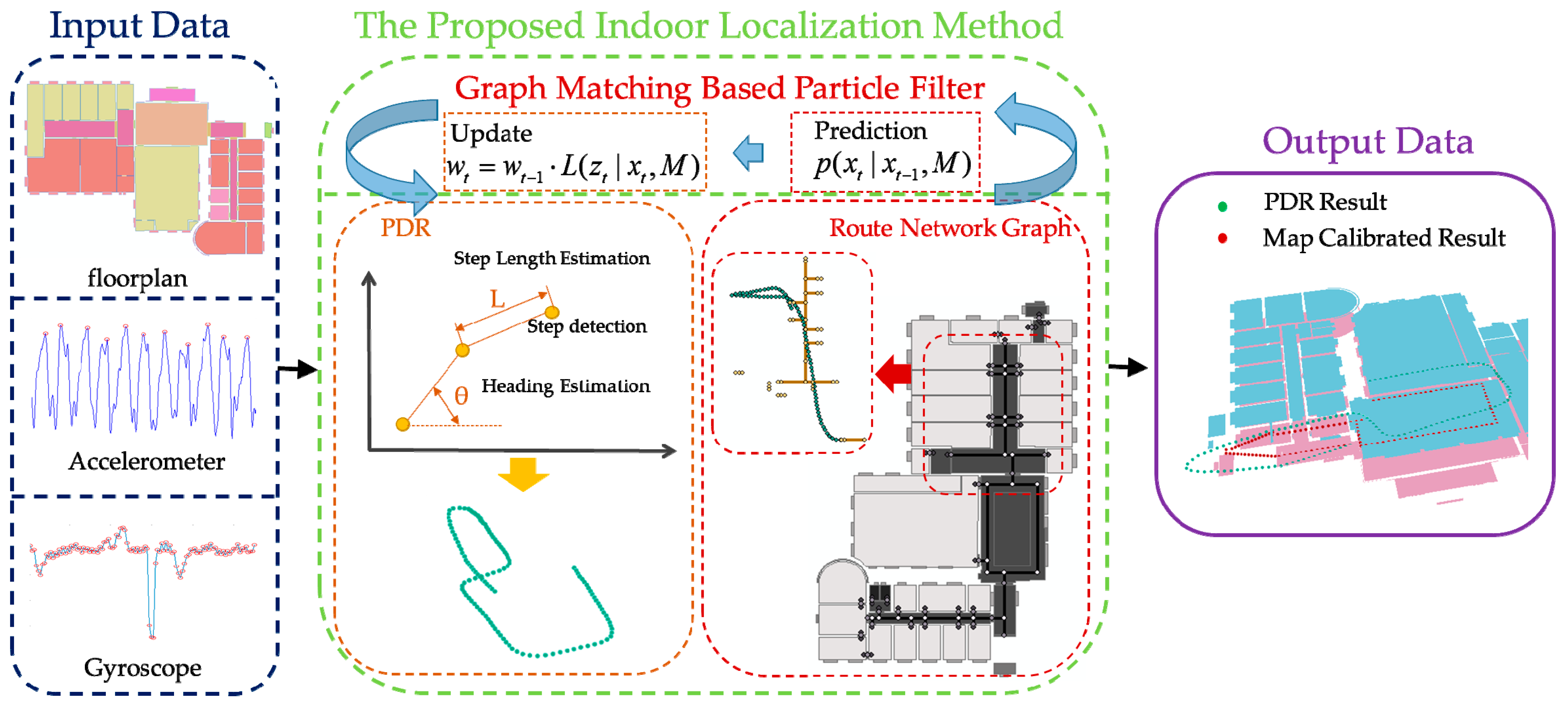

3. Methodology

3.1. PDR Trajectory Estimation

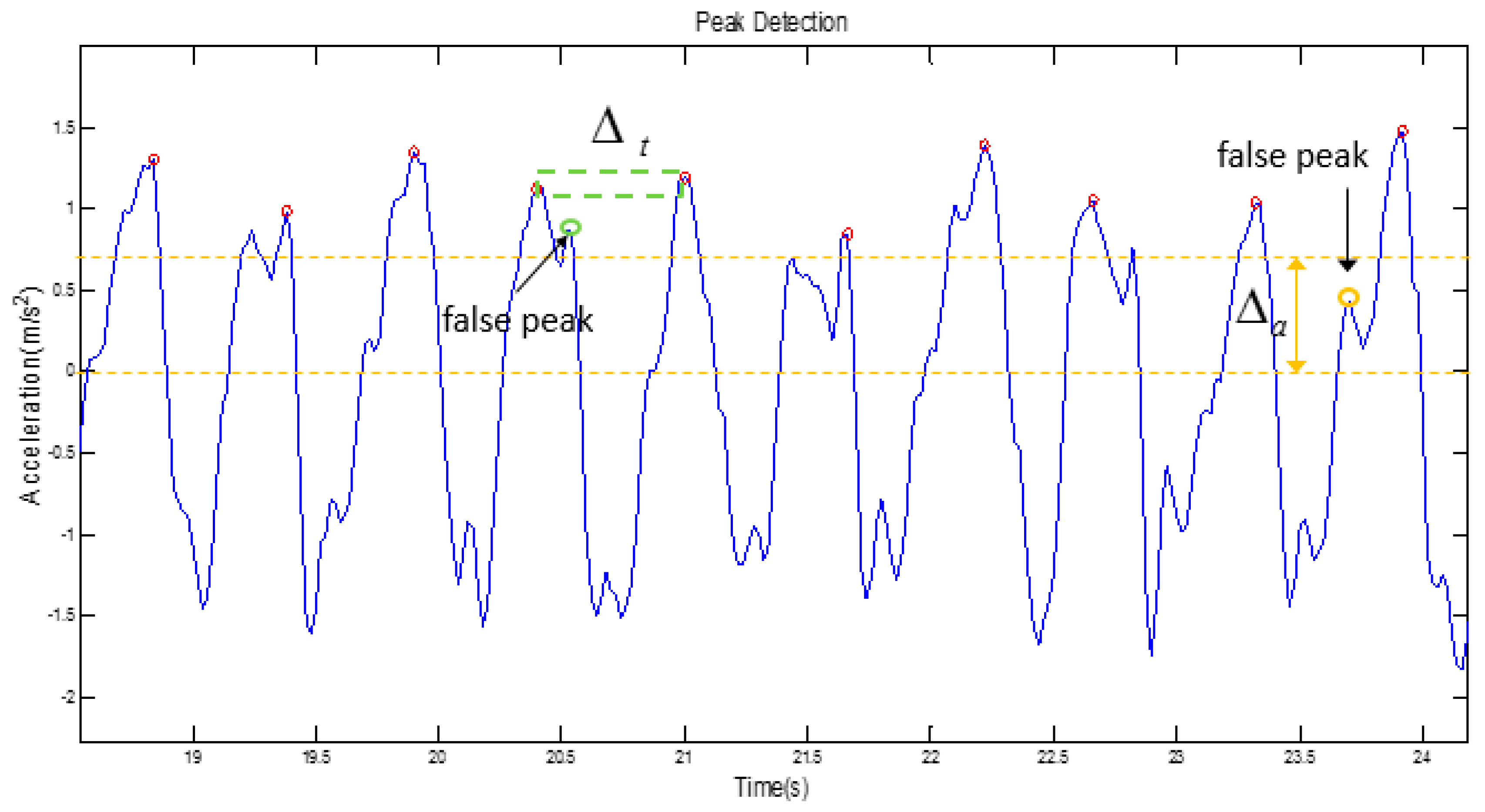

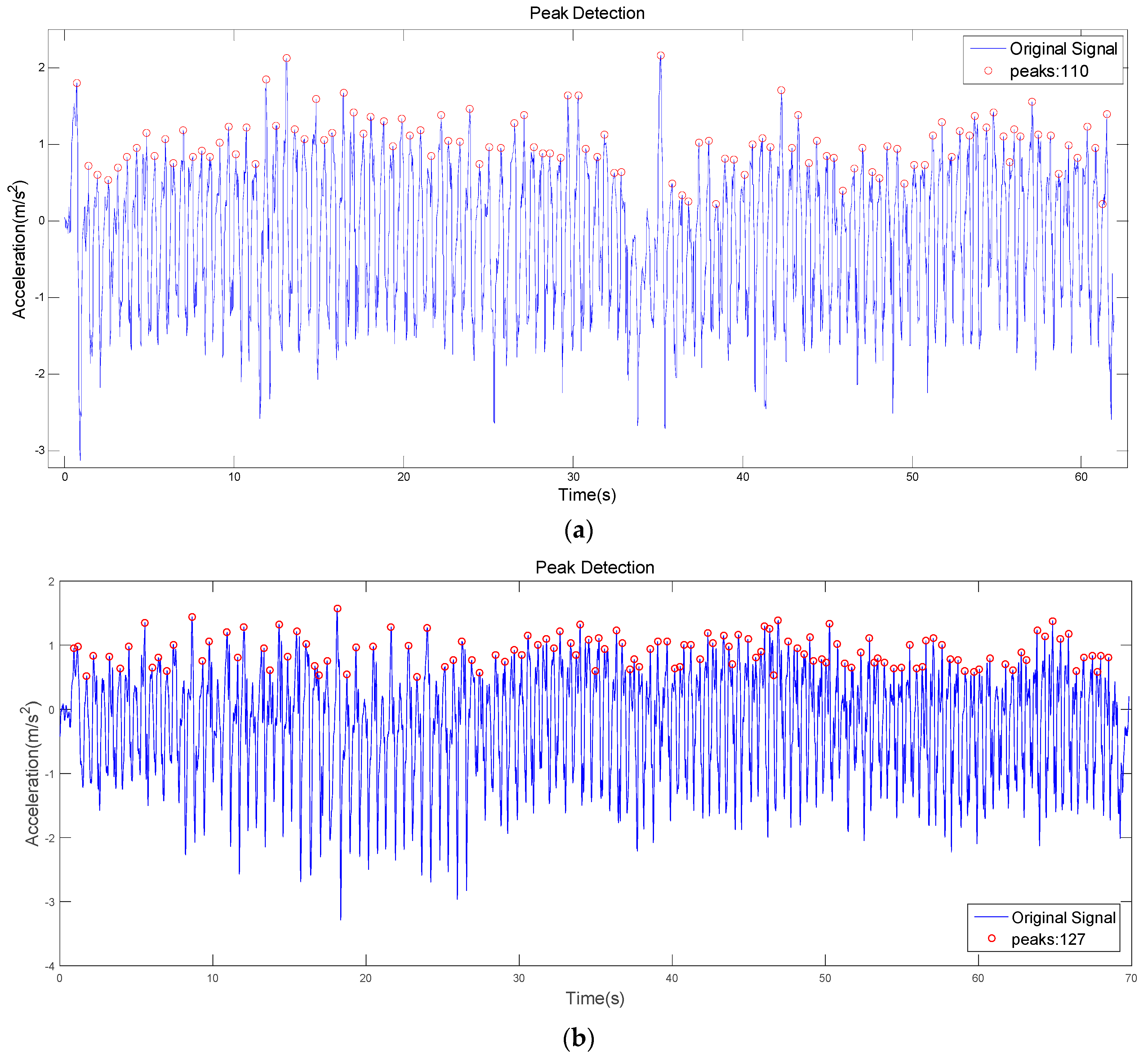

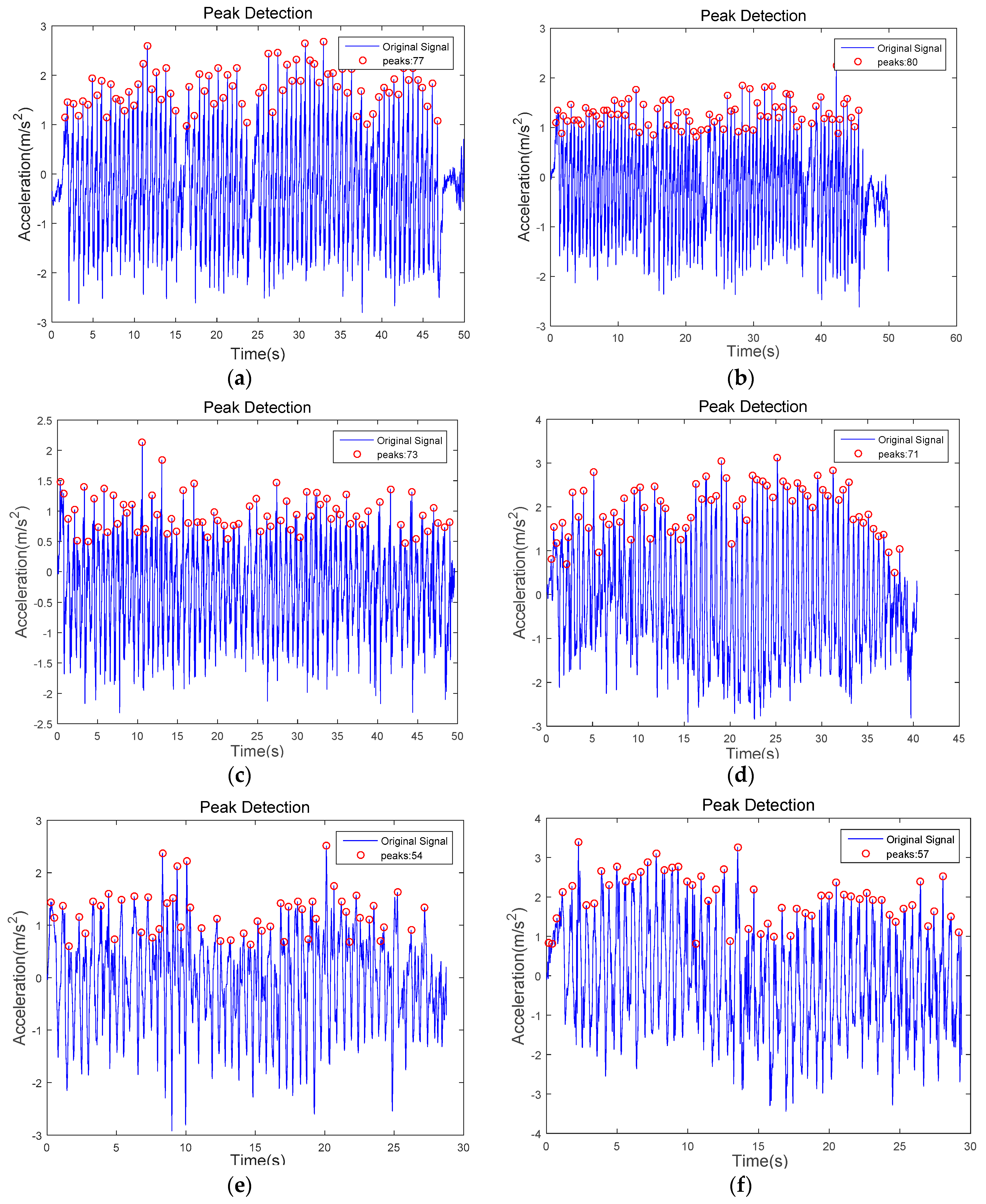

3.1.1. Step Detection

- the minimum acceleration magnitude that qualifies as a peak; and

- the minimum time duration between two consecutive steps.
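The two thresholds above can be illustrated with a minimal peak-picking sketch. It assumes accelerometer samples arrive as `(t, ax, ay, az)` tuples; the function name `detect_steps` and the default values `min_peak=10.5` (m/s²) and `min_interval=0.3` (s) are illustrative assumptions, not values taken from the paper.

```python
import math


def detect_steps(samples, min_peak=10.5, min_interval=0.3):
    """Return timestamps of detected steps.

    samples: iterable of (t_seconds, ax, ay, az) accelerometer readings.
    min_peak: assumed minimum acceleration magnitude (m/s^2) for a peak.
    min_interval: assumed minimum time (s) between two steps.
    """
    # Use the magnitude so the result does not depend on device orientation.
    mags = [(t, math.sqrt(ax * ax + ay * ay + az * az))
            for t, ax, ay, az in samples]
    steps = []
    for i in range(1, len(mags) - 1):
        t, m = mags[i]
        # A local maximum: higher than the previous sample, not lower than the next.
        is_peak = m > mags[i - 1][1] and m >= mags[i + 1][1]
        if not is_peak or m < min_peak:
            continue
        # Reject peaks that follow the last accepted step too closely.
        if steps and t - steps[-1] < min_interval:
            continue
        steps.append(t)
    return steps
```

In this sketch the magnitude threshold suppresses small vibrations, while the time threshold prevents a single stride from being counted twice.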

3.1.2. Step Length Estimation

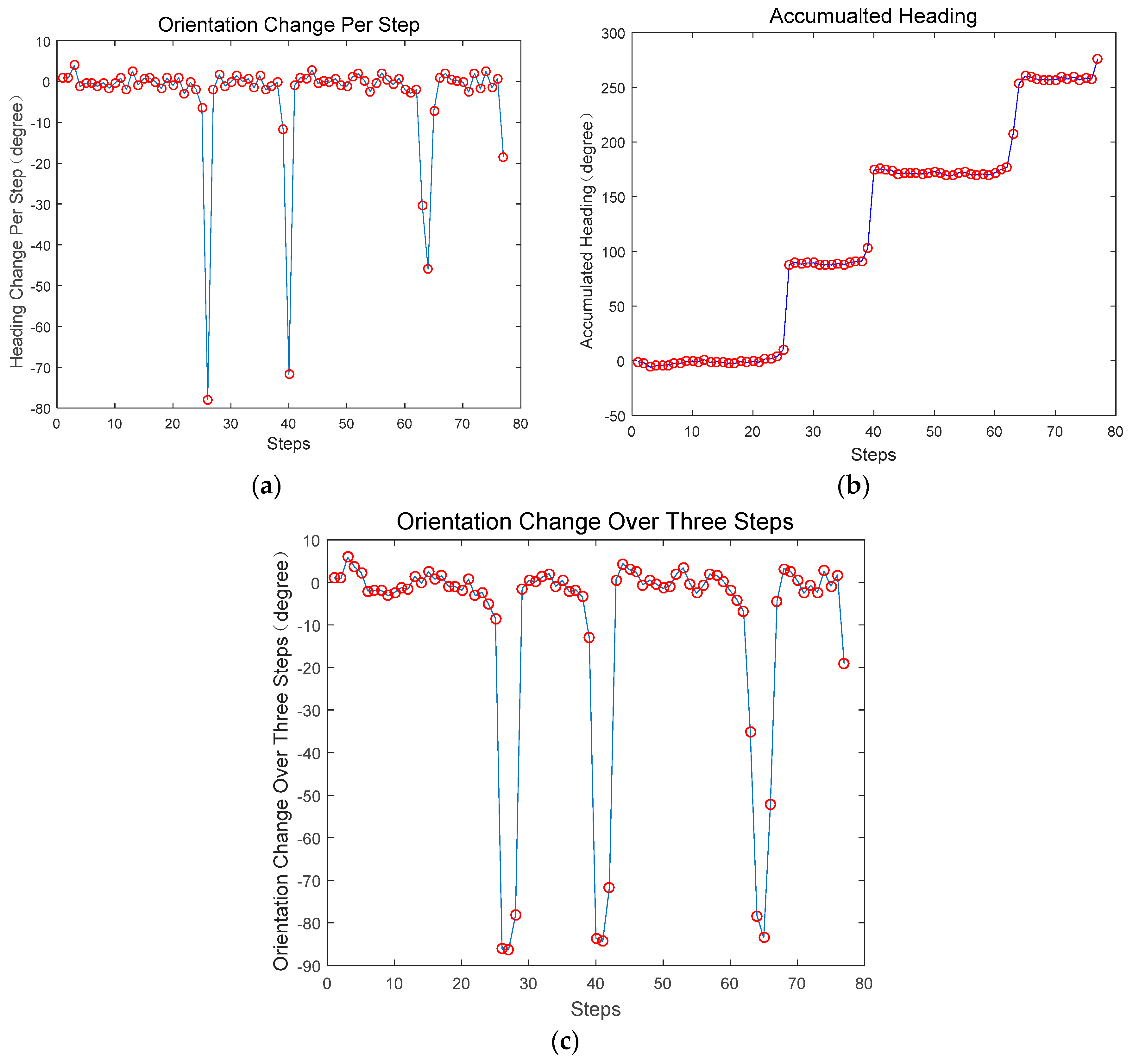

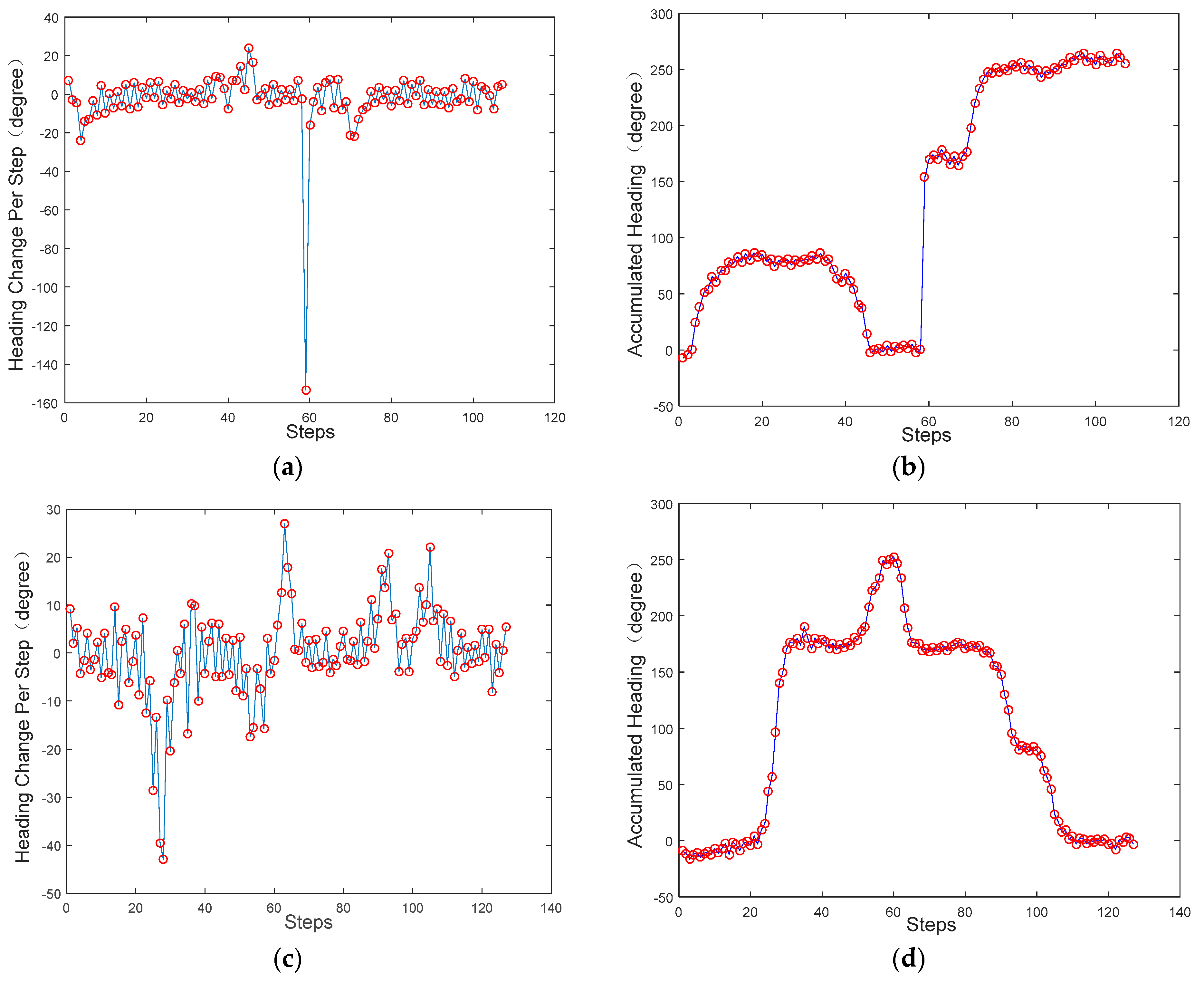

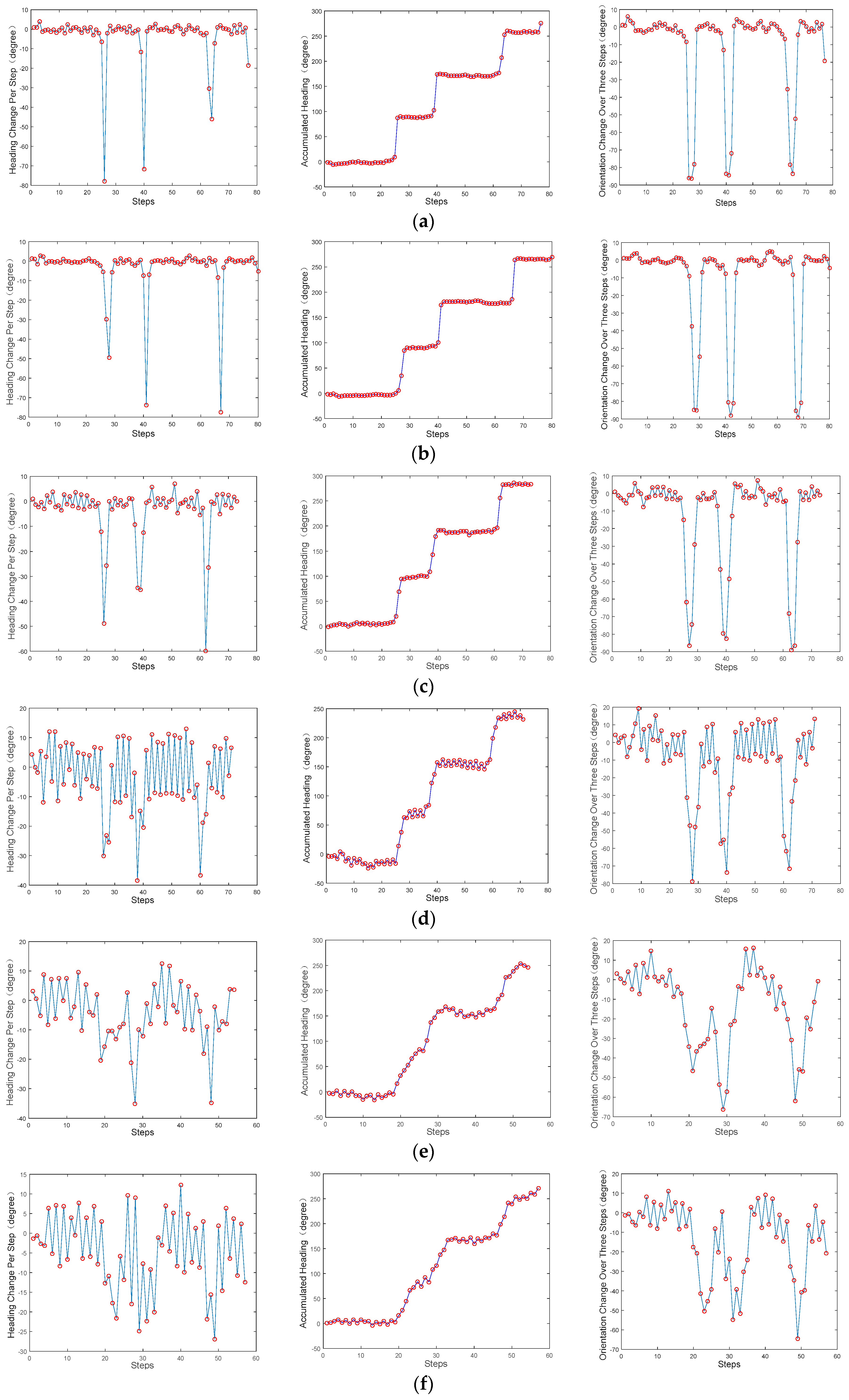

3.1.3. Orientation Estimation

- Initialization: compute the magnitude of the angle rotated within one sample time from the angular rate returned by the gyroscope at timestamp k and the time duration of one sampling.

- Quaternion update: calculate the rotation over one sample time from the device frame to the navigation frame. Rewriting the factor in this update gives an equivalent form of Equation (4).

- Rotation matrix calculation: given the orientation quaternion, express the rotation matrix from the device frame to the navigation frame at the current timestamp.

- Angle calculation: extract the angle at the current timestamp from the rotation matrix.
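The four steps above can be sketched with a standard quaternion integration of gyroscope rates. This is a generic textbook formulation under stated assumptions, not necessarily the exact equations of the paper: quaternions are `(w, x, y, z)` tuples mapping the device frame to the navigation frame, angular rates are in rad/s in the device frame, and the heading is taken as the yaw angle about the navigation z-axis. All function names are hypothetical.

```python
import math


def quat_mul(a, b):
    """Hamilton product of two quaternions given as (w, x, y, z)."""
    w1, x1, y1, z1 = a
    w2, x2, y2, z2 = b
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def integrate_gyro(q, omega, dt):
    """Advance orientation q by one gyroscope sample omega over dt seconds."""
    wx, wy, wz = omega
    rate = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = rate * dt  # magnitude of the angle rotated in one sample
    if angle < 1e-12:
        return q  # no measurable rotation
    s = math.sin(angle / 2.0) / rate
    dq = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
    w, x, y, z = quat_mul(q, dq)
    n = math.sqrt(w * w + x * x + y * y + z * z)
    return (w / n, x / n, y / n, z / n)  # renormalize against drift


def rotation_matrix(q):
    """Rotation matrix (device to navigation) from a unit quaternion."""
    w, x, y, z = q
    return [[1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
            [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
            [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)]]


def heading_from_quaternion(q):
    """Yaw angle (rad) extracted from the rotation matrix."""
    r = rotation_matrix(q)
    return math.atan2(r[1][0], r[0][0])
```

Starting from the identity quaternion `(1.0, 0.0, 0.0, 0.0)` and repeatedly calling `integrate_gyro` with a constant rate about the z-axis accumulates a heading equal to the rate multiplied by the elapsed time.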

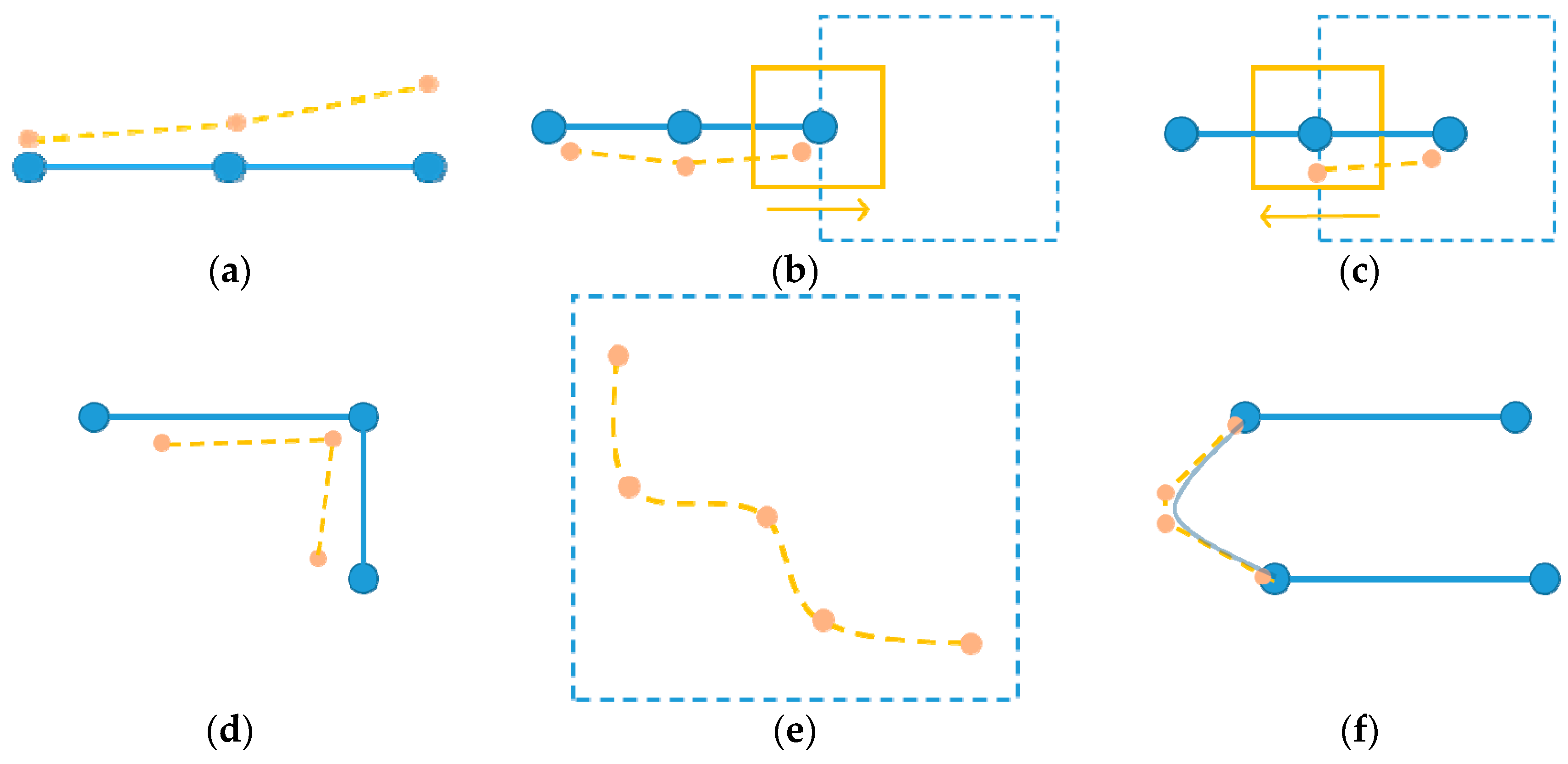

3.1.4. Turn Detection

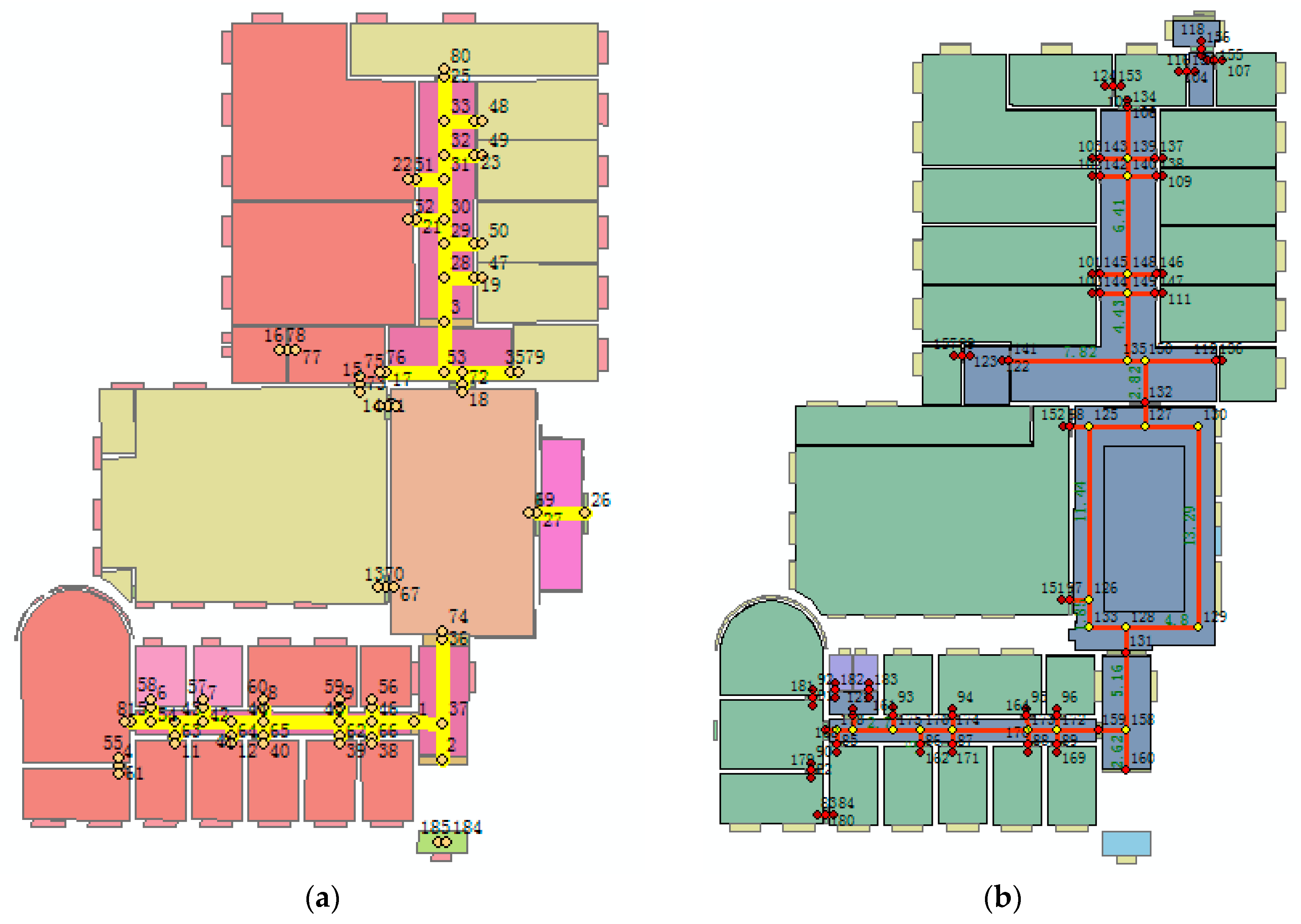

3.2. Semantic Augmented Route Network Graph Generation

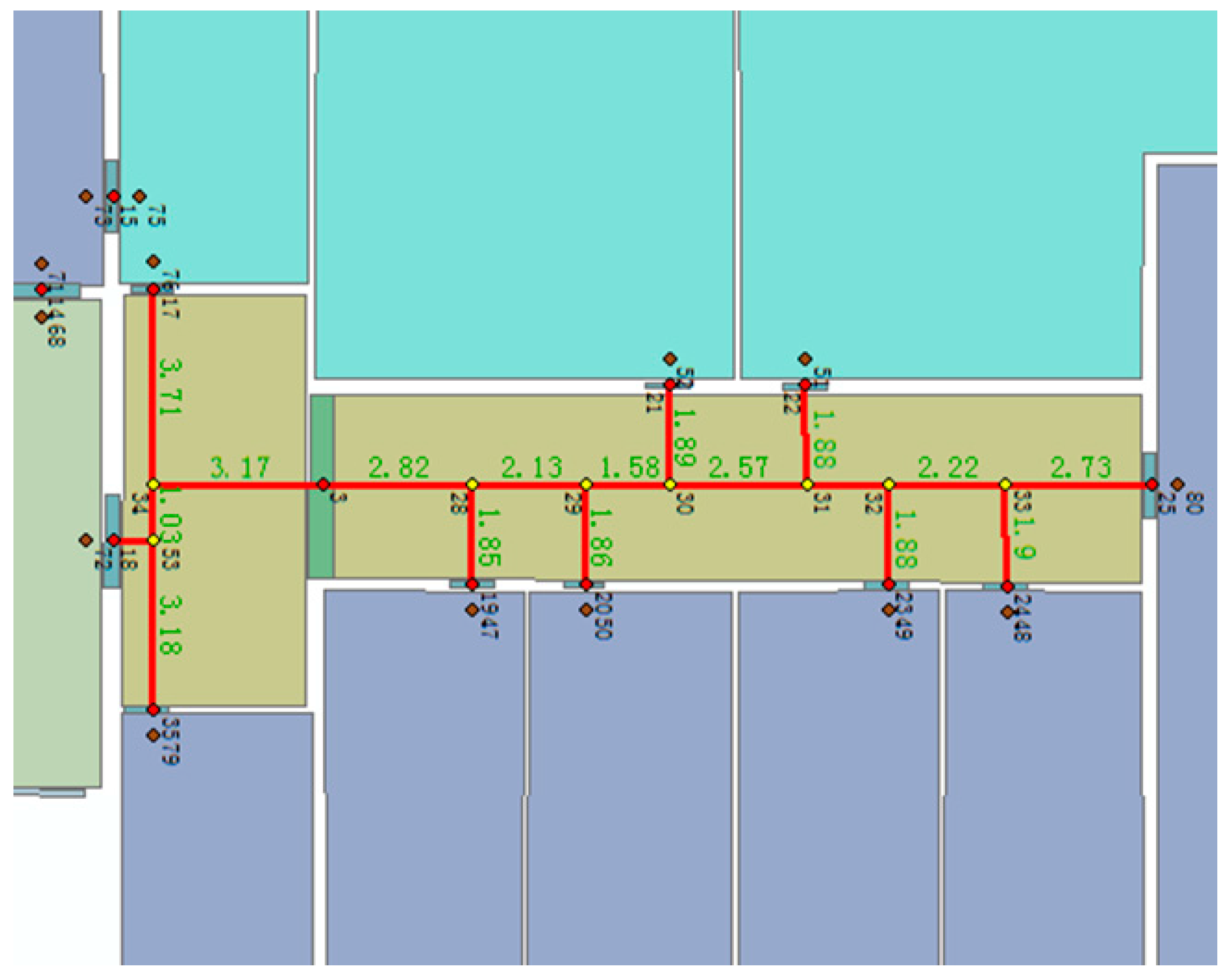

- Semi-metrical. We remove the irrelevant degrees of freedom by extracting a one-dimensional skeleton from the floorplan, while keeping the necessary metrical information for localization by labeling the length of the corridors and representing the rooms as abutting points with range buffers. In this way, the computational complexity is reduced.

- Motion-associated. Given the spatial relationships between indoor entities (e.g., rooms, corridors, and corners) and the user’s activity events (e.g., turning left and turning right), we can narrow the user path to the specific route in the graph or recalibrate the biased trajectory by recognizing the events. The adaptive edge length also extends the graph to be applicable to multiple user modes.

- Semantically-augmented. The route network represents the connectivity and accessibility of indoor space by defining the transition probabilities between connected nodes, and the semantic information attached to each node and edge provides extra semantic accuracy to the calibration result.

3.2.1. Indoor Structure Adaptive Extraction

3.2.2. User Motion Compatible Context

3.3. Graph Matching-Based Particle Filter

- Prediction: for each particle, the new state is predicted by sampling from the state transition probability distribution, given its current state and the control information (for example, map knowledge of the environment).

- Importance sampling: for each measurement, update each particle's importance weight from its previous weight according to the measurement likelihood function, given the particle's new state and the current measurement.

- Resampling and particle updating: resampling is carried out when the effective sample size falls below a specified threshold (50% of the particle number in our case). Particles with qualified (high) weights are duplicated and those with low weights are eliminated, which yields a set of equally-weighted particles.
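The three stages above can be sketched as one generic filter iteration. This is a minimal sketch under stated assumptions: `transition` and `likelihood` are caller-supplied hypothetical functions, the effective sample size is computed as the reciprocal of the sum of squared normalized weights, and plain multinomial resampling (`random.choices`) stands in for the paper's rule of duplicating only particles with qualified weights.

```python
import random


def effective_sample_size(weights):
    """ESS for normalized weights: 1 / sum(w_i^2)."""
    return 1.0 / sum(w * w for w in weights)


def particle_filter_step(particles, weights, transition, likelihood,
                         measurement, ess_ratio=0.5, rng=random):
    """Run prediction, importance weighting, and conditional resampling."""
    n = len(particles)
    # Prediction: sample each new state from the transition model.
    particles = [transition(p) for p in particles]
    # Importance sampling: scale the previous weight by the likelihood.
    weights = [w * likelihood(measurement, p)
               for w, p in zip(weights, particles)]
    total = sum(weights)
    if total <= 0.0:
        # All particles are inconsistent with the measurement; fall back to uniform.
        weights = [1.0 / n] * n
    else:
        weights = [w / total for w in weights]
    # Resampling: only when the ESS drops below the threshold (50% here).
    if effective_sample_size(weights) < ess_ratio * n:
        particles = rng.choices(particles, weights=weights, k=n)
        weights = [1.0 / n] * n
    return particles, weights
```

Resampling only when the effective sample size is low avoids discarding particle diversity on every step while still preventing the weights from collapsing onto a few particles.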

3.3.1. Context-Augmented State Space

- Three-dimensional coordinate of the particle;

- Start node and end node;

- Orientation deviation from the last time step; and

- Pedestrian step length.
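As an illustration, the state components listed above could be held in a small record type. The class and field names below are hypothetical, and the units (metres, radians) are assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ParticleState:
    """One particle of the context-augmented state space (illustrative)."""
    position: Tuple[float, float, float]  # three-dimensional coordinate
    start_node: int                       # index of the edge's start node
    end_node: int                         # index of the edge's end node
    heading_deviation: float              # orientation change since last step (rad, assumed)
    step_length: float                    # pedestrian step length (m, assumed)


# Example: a particle placed on an edge between two graph nodes.
particle = ParticleState(position=(-8.87, 15.03, 1.39),
                         start_node=28, end_node=19,
                         heading_deviation=0.0, step_length=0.55)
```

Keeping the start and end node inside the state ties every particle to a graph edge, so a particle can only move along routes that exist in the network.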

3.3.2. PDR-Based Particle Filter Model

- Orientation: for all edges connected to the current node, calculate their directions relative to the particle's orientation.

- Location: find the node in the graph nearest to the new location, which is calculated from the sampled edge and the particle's displacement.
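The two operations above can be sketched on a toy planar graph. This is a simplified illustration, not the paper's model: it deterministically picks the edge whose bearing is closest to the particle's heading (rather than sampling an edge), and the node names, coordinates, and function names are all hypothetical.

```python
import math


def angle_diff(a, b):
    """Smallest absolute difference between two angles (rad)."""
    return abs((a - b + math.pi) % (2.0 * math.pi) - math.pi)


def pick_edge(node, heading, graph, coords):
    """Return the neighbor whose edge bearing best matches the heading."""
    x0, y0 = coords[node]

    def bearing(neighbor):
        x1, y1 = coords[neighbor]
        return math.atan2(y1 - y0, x1 - x0)

    return min(graph[node], key=lambda n: angle_diff(bearing(n), heading))


def nearest_node(point, coords):
    """Return the graph node closest to a planar point."""
    px, py = point
    return min(coords,
               key=lambda n: math.hypot(coords[n][0] - px, coords[n][1] - py))


# Toy graph: node A connects to B (along +x) and C (along +y).
coords = {"A": (0.0, 0.0), "B": (5.0, 0.0), "C": (0.0, 5.0)}
graph = {"A": ["B", "C"], "B": ["A"], "C": ["A"]}

target = pick_edge("A", heading=0.1, graph=graph, coords=coords)
# Move six steps of 0.7 m along the chosen edge, then snap to the graph.
dx = coords[target][0] - coords["A"][0]
dy = coords[target][1] - coords["A"][1]
length = math.hypot(dx, dy)
new_point = (coords["A"][0] + 6 * 0.7 * dx / length,
             coords["A"][1] + 6 * 0.7 * dy / length)
snapped = nearest_node(new_point, coords)
```

Because displacement is applied along the chosen edge and the result is snapped to a node, heading noise changes which edge is followed but cannot move the particle off the route network.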

4. Experimental Evaluation

4.1. Experimental Setup

4.2. Applicability in a Complicated Indoor Environment

4.3. Applicability for Diverse Users

4.4. Computation Performance

5. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest

| Type | Index | X_cor | Y_cor | Z_cor | Neighbors | Neighbor Direction (N, S, W, E) | Neighbor Distance ($d_N$, $d_S$, $d_W$, $d_E$) |
|---|---|---|---|---|---|---|---|
| Corridor | 28 | −8.87 | 15.03 | 1.39 | 3, 29, 19 | 1, 1, 0, 1 | $d_{28,29}$, $d_{28,3}$, 0, $d_{28,19}$ |
| Area | 47 | −6.52 | 15.03 | 1.39 | 0 | Door direction | Room range |
| Stair | | | | 1.39 | Start and end point | | Height of one step |
| Door | 19 | −7.02 | 15.03 | 1.39 | 28, 47 | 0, 0, 1, 1 | 0, 0, $d_{19,28}$, $d_{19,47}$ |
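The node attributes in the table above map naturally onto a record type. The sketch below is illustrative only: the class and field names are hypothetical, the neighbor distances are assumed to be planar Euclidean distances between the tabulated coordinates, and the assignment of node 28 to the west slot and node 47 to the east slot is inferred from their x-coordinates.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RouteNode:
    """One node of the route network graph (illustrative field names)."""
    node_type: str                                    # Corridor, Area, Stair, or Door
    index: int
    x: float
    y: float
    z: float
    neighbors: Tuple[int, ...]
    direction_flags: Tuple[int, int, int, int]        # N, S, W, E: 1 if a neighbor exists
    distances: Tuple[float, float, float, float]      # d_N, d_S, d_W, d_E


# Planar coordinates of the door node and its two neighbors from the table.
xy = {28: (-8.87, 15.03), 19: (-7.02, 15.03), 47: (-6.52, 15.03)}


def planar_distance(a, b):
    """Euclidean distance between two tabulated nodes (an assumption)."""
    return math.hypot(xy[a][0] - xy[b][0], xy[a][1] - xy[b][1])


door = RouteNode(node_type="Door", index=19, x=-7.02, y=15.03, z=1.39,
                 neighbors=(28, 47),
                 direction_flags=(0, 0, 1, 1),
                 distances=(0.0, 0.0,
                            planar_distance(19, 28),
                            planar_distance(19, 47)))
```

Storing one flag and one distance per compass direction keeps neighbor lookup constant-time when a detected turn has to be matched against the edges that leave the current node.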

| Localization Semantic | User Motion | Algorithm |
|---|---|---|
| Corridor | M1, M2, M3, M4 | Turning detection, buffer analysis |
| Open area | M3, M5 | Turning detection, buffer analysis, range constraint |
| Stair | M6 | Buffer analysis |
| Door | M2, M3 | Turning detection, buffer analysis |

| Participant | F1 (Zhu) | F2 (Zhou) | M1 (Guo) | M2 (Ma) | M3 (Liu) | M4 (He) |
|---|---|---|---|---|---|---|
| Height (cm) | 165 | 165 | 174 | 170 | 175 | 172 |
| Step Length (cm) | 51 | 53 | 55 | 58 | 75 | 72 |

| M1 | M2 | M3 | M4 | M5 | M6 |
|---|---|---|---|---|---|
| 0.32 | 0.77 | 0.36 | 0.42 | 0.36 | 0.47 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, Y.; Zheng, X.; Xiong, H.; Chen, R. Robust Indoor Mobile Localization with a Semantic Augmented Route Network Graph. ISPRS Int. J. Geo-Inf. 2017, 6, 221. https://doi.org/10.3390/ijgi6070221