Combining Hector SLAM and Artificial Potential Field for Autonomous Navigation Inside a Greenhouse

Abstract

1. Introduction

1.1. Related Work

1.2. Proposed Scheme

2. Materials and Methods

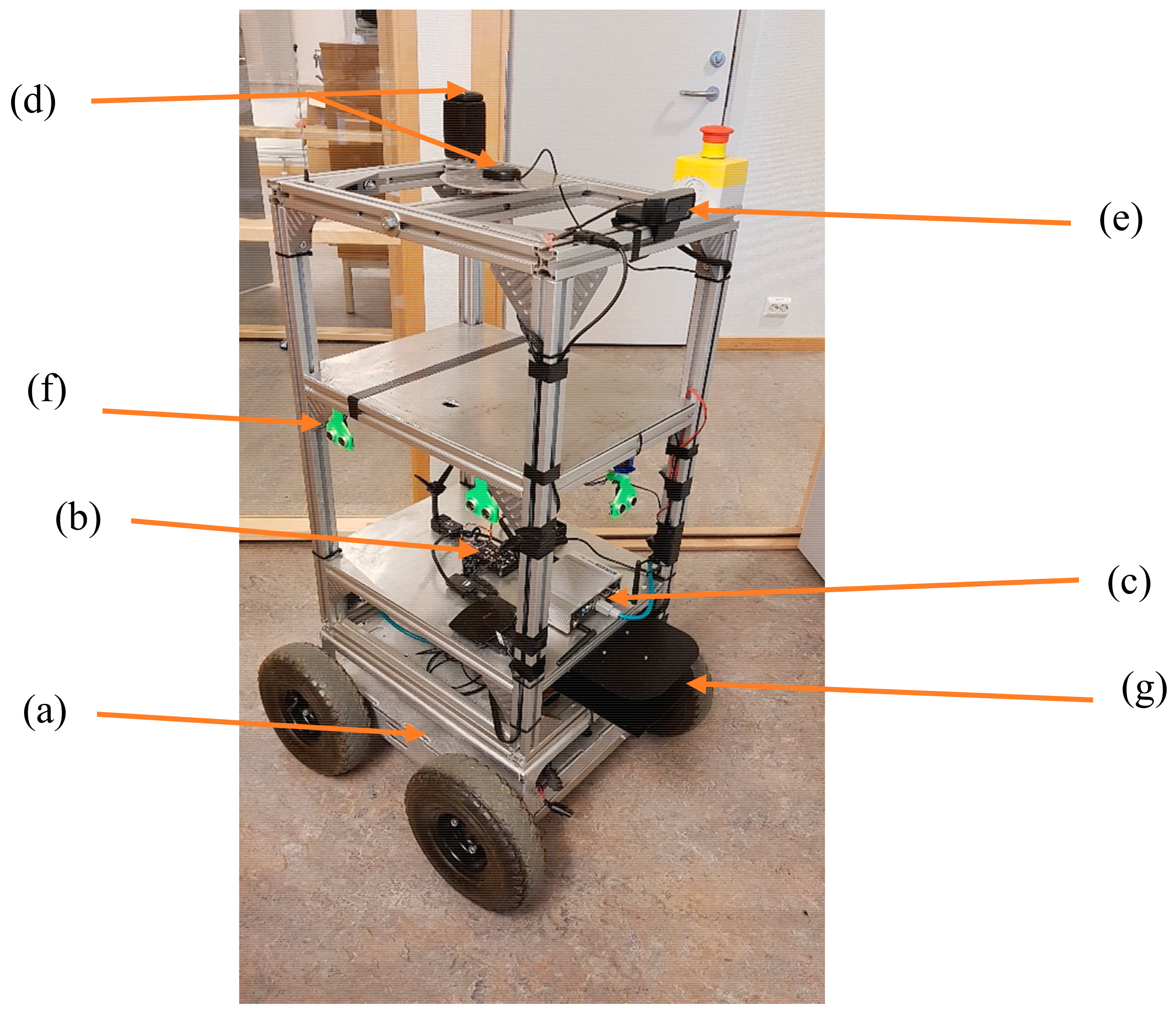

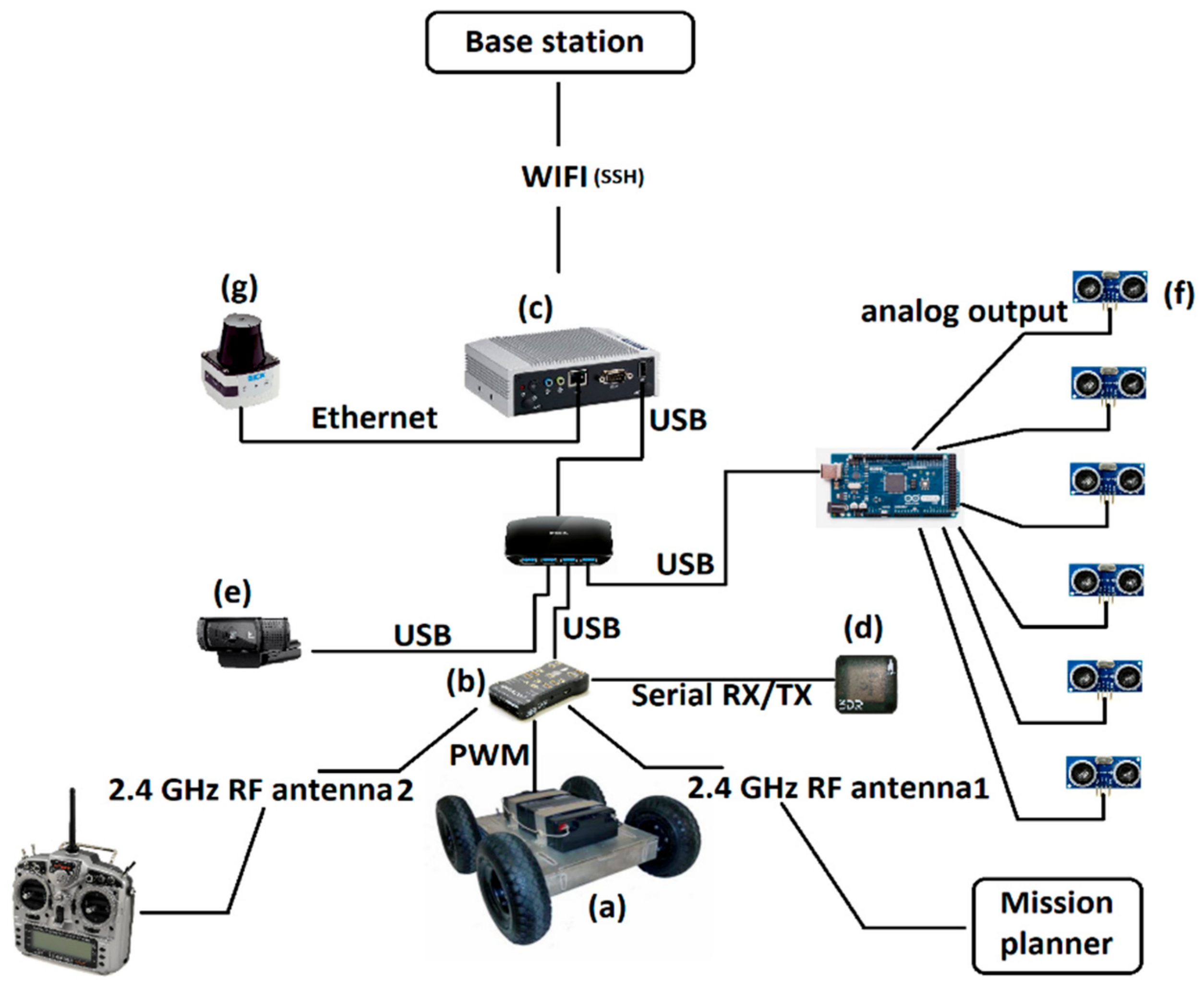

2.1. Hardware Architecture

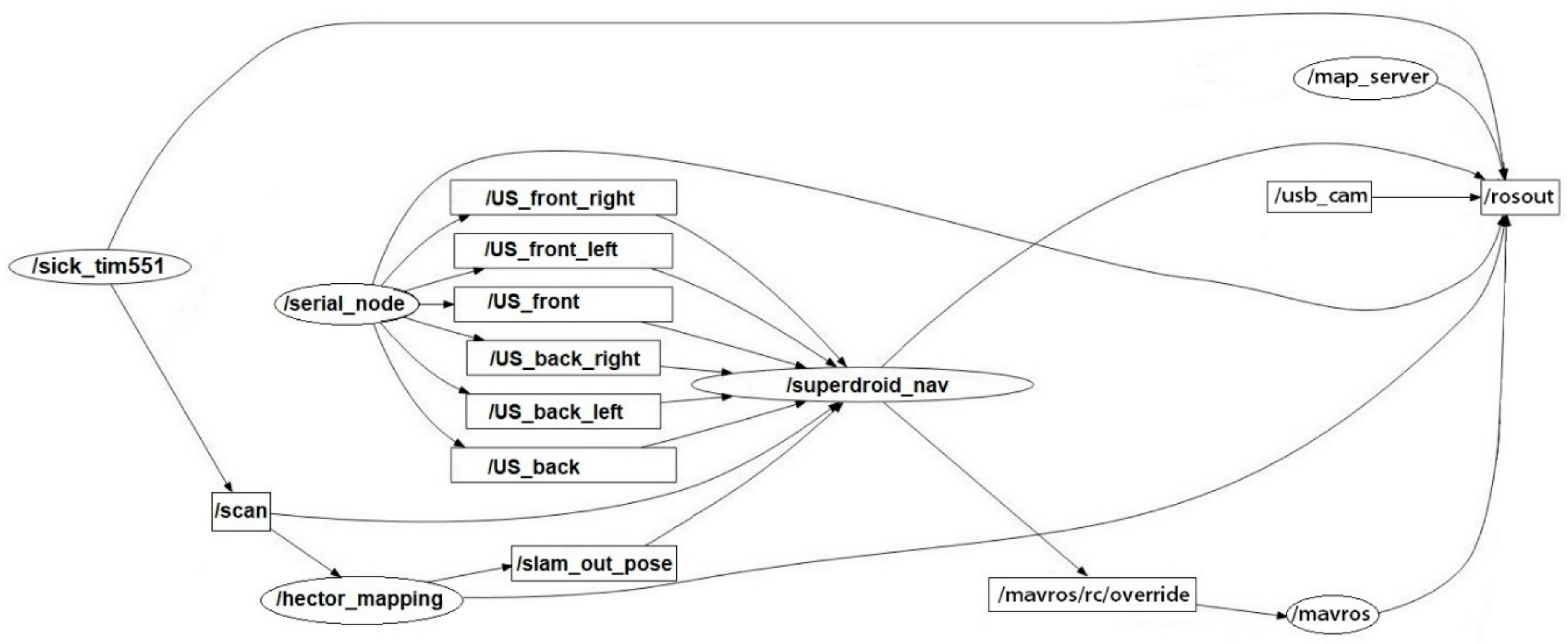

2.2. Software Architecture

- /serial_node ran on the Arduino board and published the readings obtained from the six ultrasonic (US) sensors mounted on the mobile robot.

- /scan was the topic carrying the LIDAR readings published by the /sick_tim551 node (the TiM551 node is compatible with the TiM561 sensor mounted on the mobile robot).

- /hector_mapping subscribed to the /scan topic and published the /slam_out_pose topic, which carries the relative pose (position and orientation) of the mobile robot.

- /mavros/rc/override received velocity commands from /superdroid_nav and emulated the signal generated by an RF controller. The Pixhawk board then converted this signal into PWM signals for the motors of the mobile carrying platform.

- /map_server published the map of the environment, which was used for the a priori selection of navigation waypoints, as well as for overlaying the current position of the mobile robot upon the prebuilt map.

- /usb_cam published the images captured by the C920 camera mounted on the front of the mobile robot.
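The node list above implies a chain from the Hector SLAM pose (/slam_out_pose) to RC-style commands on /mavros/rc/override. The following is a minimal, ROS-free sketch of the kind of per-step computation a navigation node in that position has to perform. It is not the authors' /superdroid_nav implementation. The helper names (`waypoint_command`, `to_pwm`), the 1100 to 1900 µs pulse range, the gains, and the steering sign convention are all hypothetical assumptions made for illustration.

```python
import math
from typing import Tuple

# Hypothetical RC pulse-width convention (microseconds); the real values and
# channel assignment used with the Pixhawk are assumptions, not from the source.
PWM_NEUTRAL = 1500
PWM_SPAN = 400  # full deflection maps to 1100..1900 us


def yaw_from_quaternion(qx: float, qy: float, qz: float, qw: float) -> float:
    """Heading (rad) from an orientation quaternion, e.g. the one in /slam_out_pose."""
    return math.atan2(2.0 * (qw * qz + qx * qy), 1.0 - 2.0 * (qy * qy + qz * qz))


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def to_pwm(u: float) -> int:
    """Map a normalized command in [-1, 1] to a pulse width, with saturation."""
    u = max(-1.0, min(1.0, u))
    return int(round(PWM_NEUTRAL + PWM_SPAN * u))


def waypoint_command(x: float, y: float, yaw: float, waypoint: Tuple[float, float],
                     k_heading: float = 1.0, cruise: float = 0.3,
                     tolerance: float = 0.2) -> Tuple[int, int, bool]:
    """Hypothetical step: pose + waypoint -> (throttle_pwm, steering_pwm, reached)."""
    dx, dy = waypoint[0] - x, waypoint[1] - y
    if math.hypot(dx, dy) < tolerance:
        return PWM_NEUTRAL, PWM_NEUTRAL, True  # stop once the waypoint is reached
    heading_error = wrap_angle(math.atan2(dy, dx) - yaw)
    # Slow down when the waypoint is off to the side; never command reverse.
    throttle = cruise * max(0.0, math.cos(heading_error))
    # Proportional steering, normalized so a heading error of pi is full deflection.
    steering = k_heading * heading_error / math.pi
    return to_pwm(throttle), to_pwm(steering), False


# Usage: robot at (1, 2) facing 45 degrees, waypoint straight ahead along +x.
q = (0.0, 0.0, math.sin(math.pi / 8), math.cos(math.pi / 8))
print(waypoint_command(1.0, 2.0, yaw_from_quaternion(*q), (5.0, 2.0)))
```

In a ROS node, these functions would be called from a /slam_out_pose callback. The two pulse widths would then be written into the `channels` array of a `mavros_msgs/OverrideRCIn` message. Which channel drives throttle and which drives steering depends on how the platform is wired.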

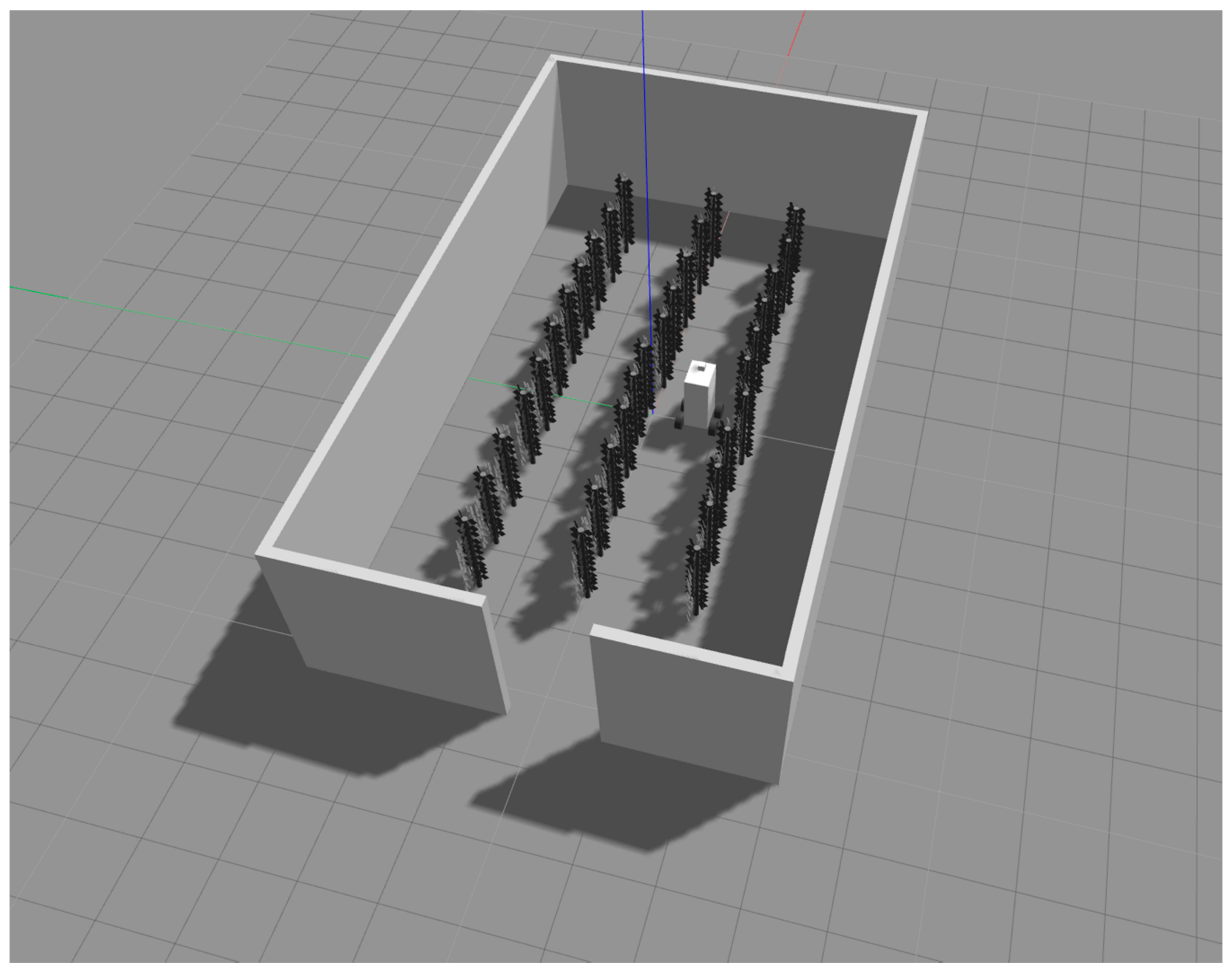

2.3. Simulation Software

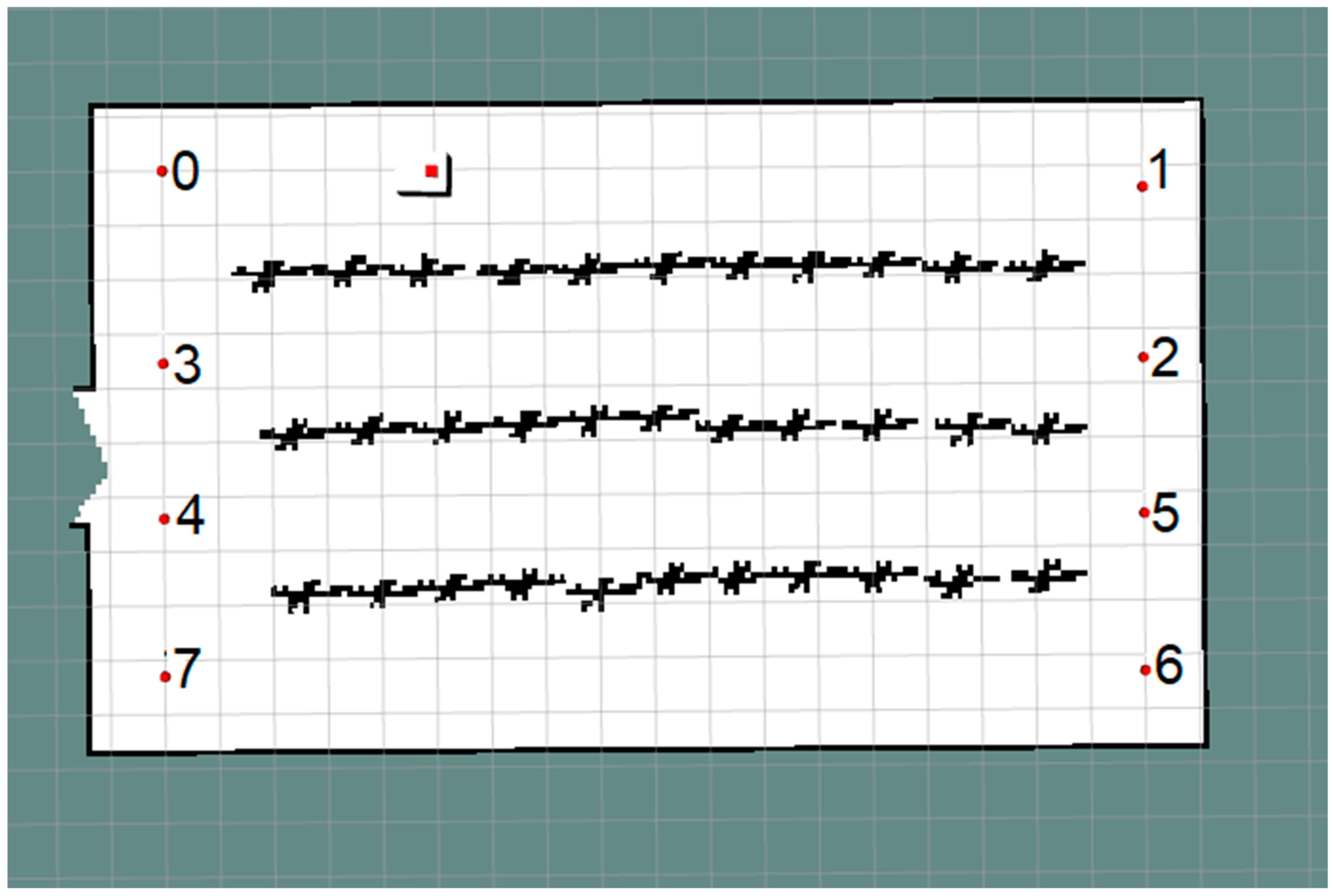

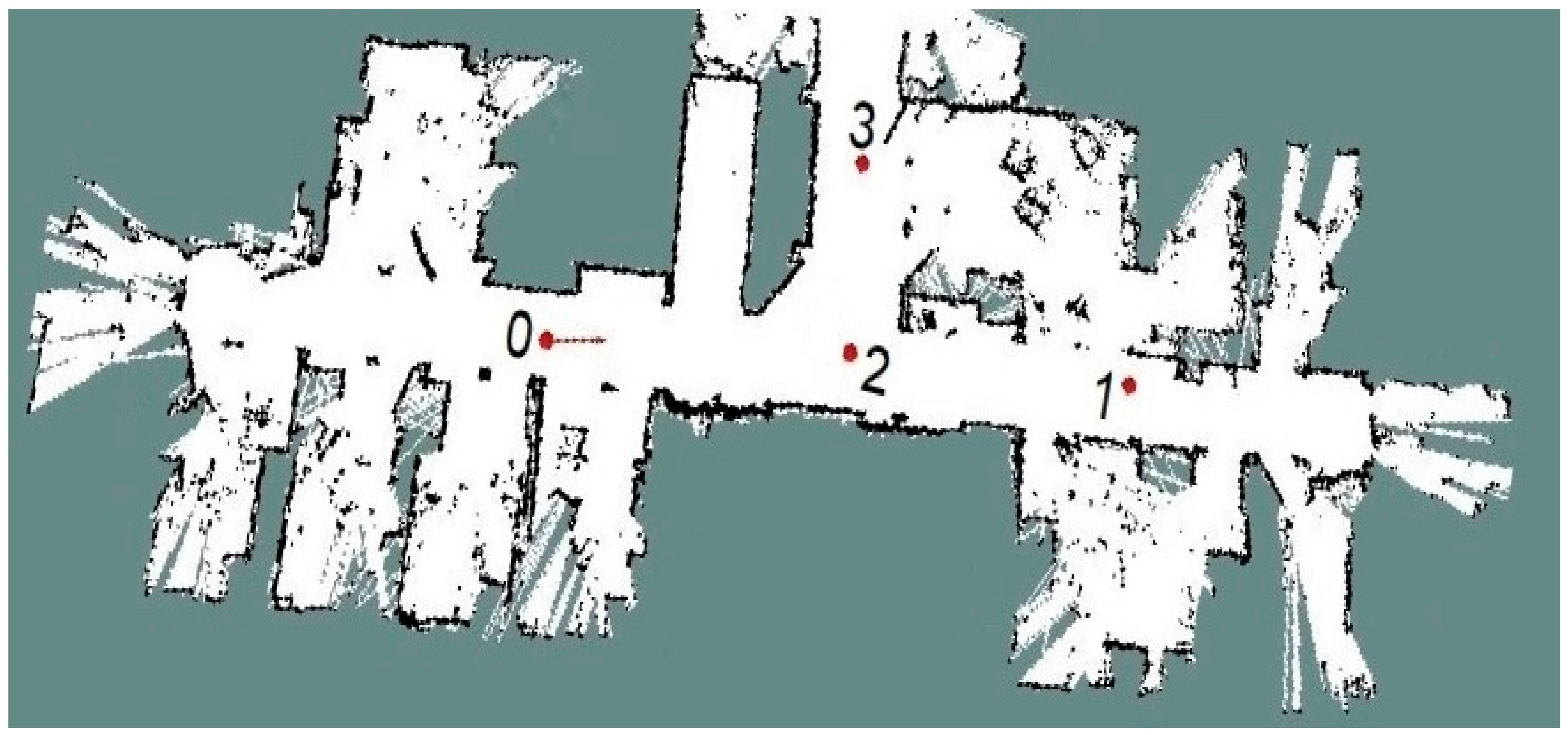

2.4. Experimental Environment

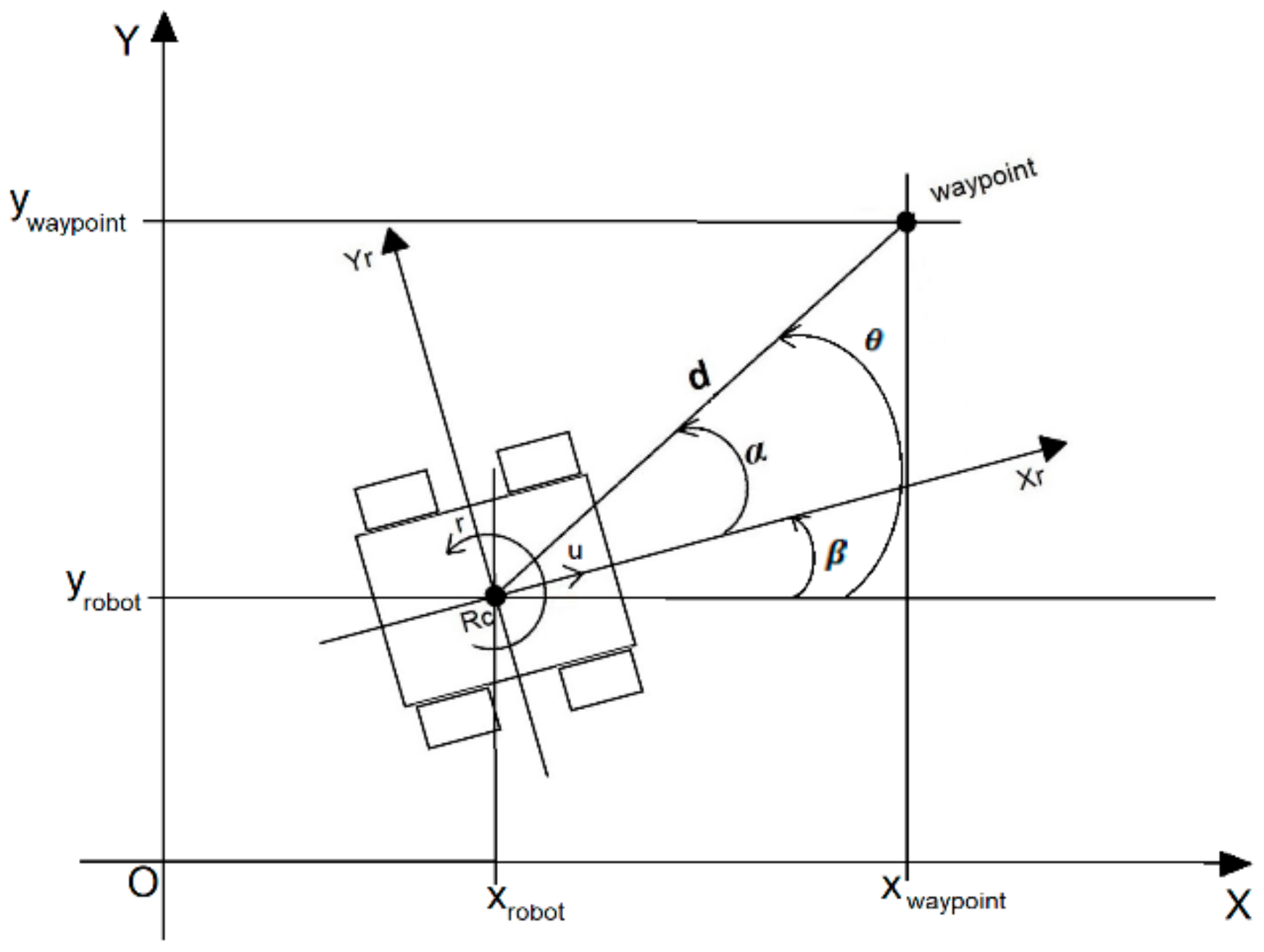

3. Autonomous Navigation

3.1. Artificial Potential Field

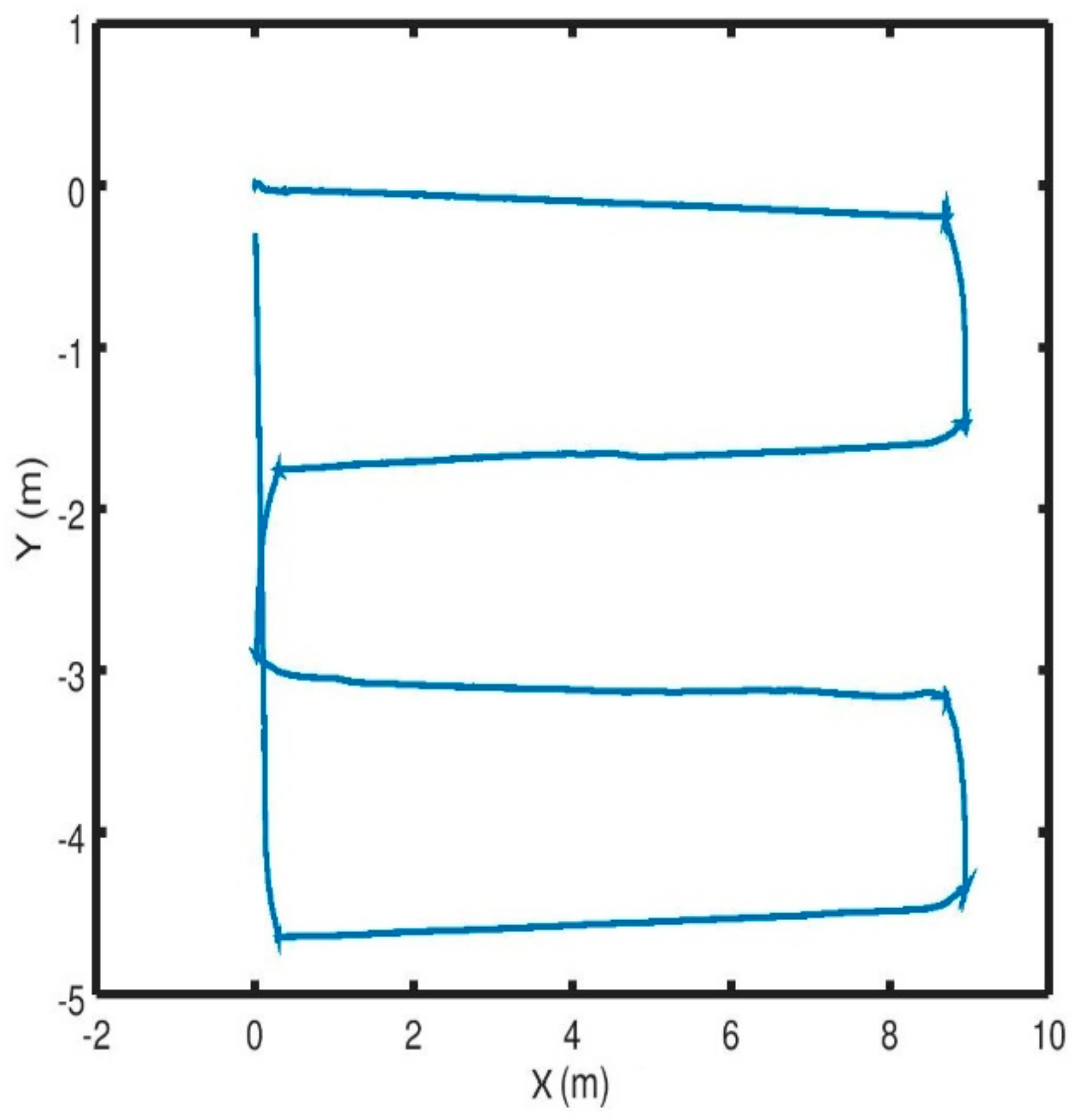

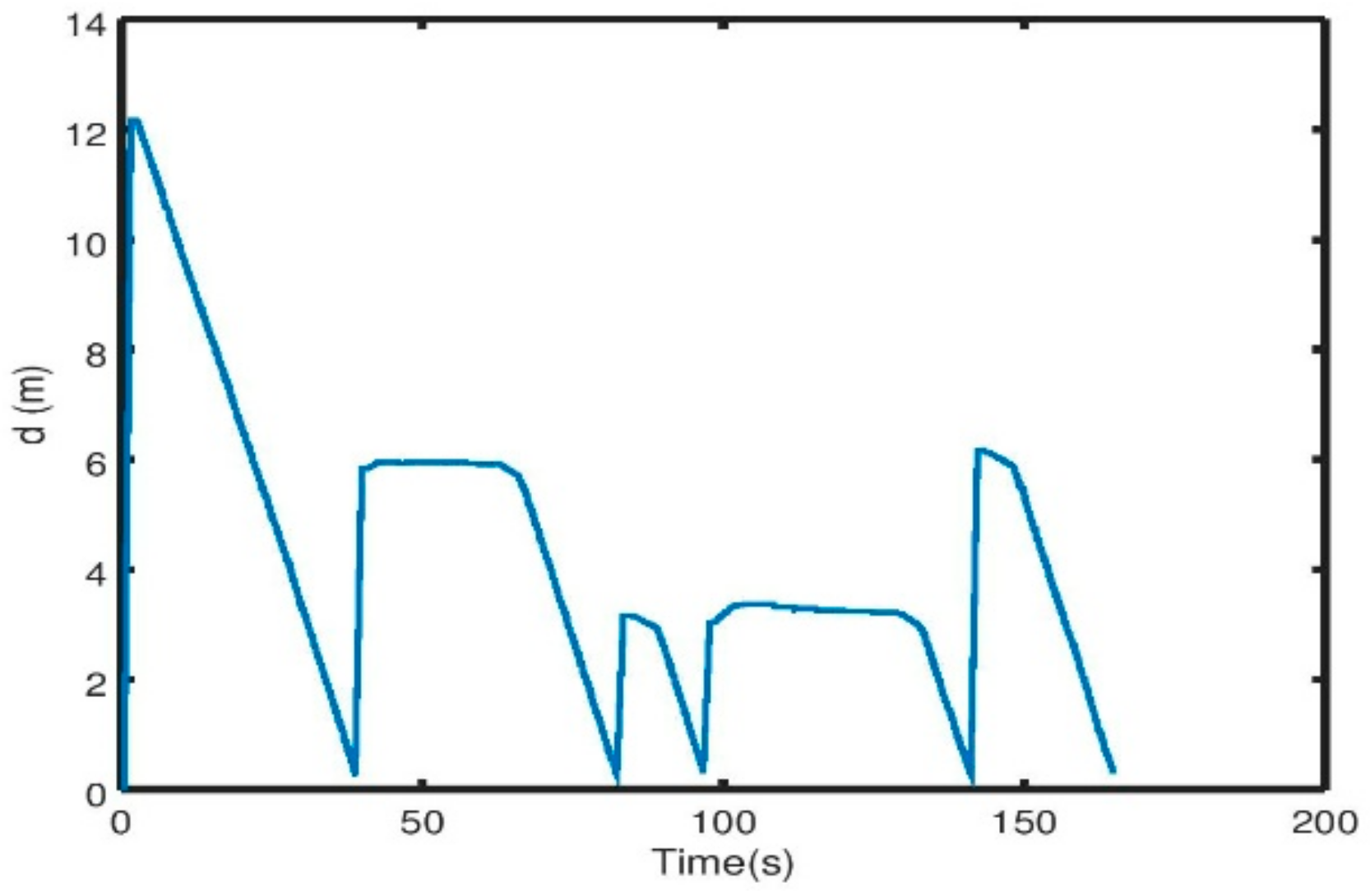

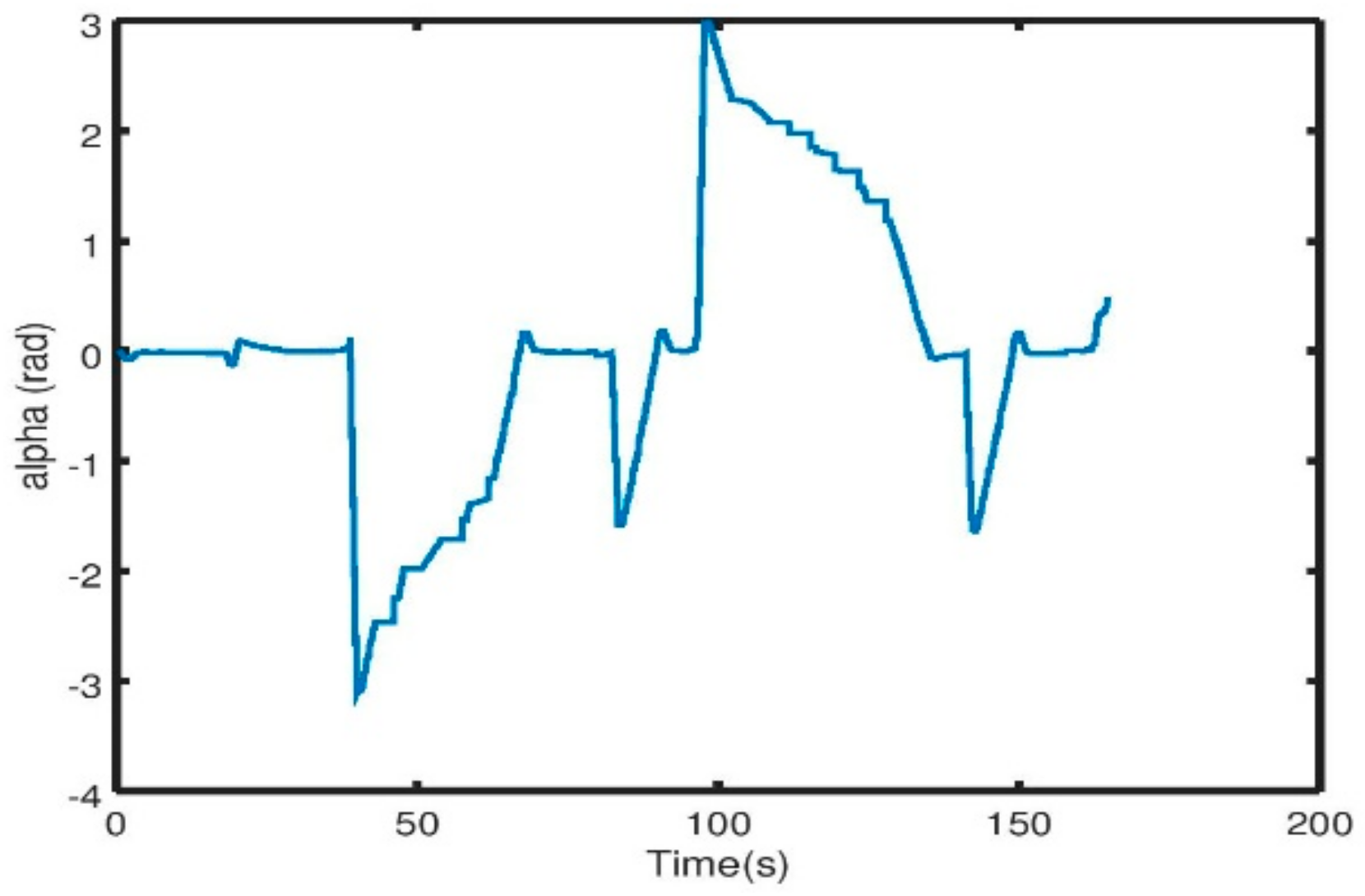

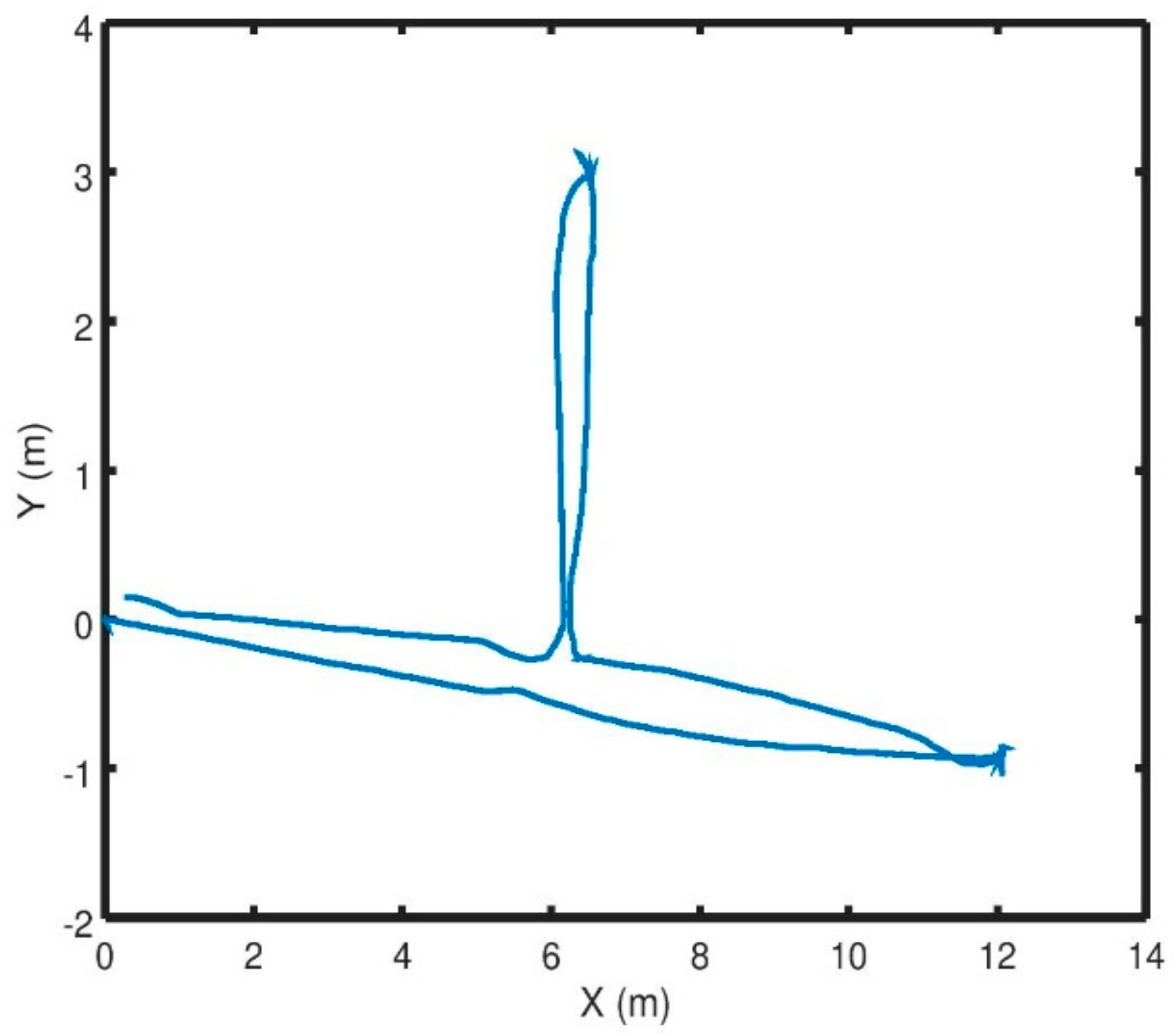
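To illustrate the artificial potential field idea named in this section, here is a minimal sketch of the classic formulation of Khatib (1986). It uses a quadratic attractive potential toward the goal and a repulsive potential that is active only within an influence distance ρ0 of each obstacle point. It is not necessarily the exact formulation or the gains used on the greenhouse robot. The gains `zeta`, `eta`, `rho0`, the range limits, and the assumption that the LIDAR sits at the robot origin are all illustrative choices. `scan_to_points` projects /scan-style ranges into the map frame using the pose estimated by Hector SLAM.

```python
import math
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float]


def attractive_force(q: Point, goal: Point, zeta: float = 1.0) -> Point:
    """-grad of U_att = 0.5 * zeta * ||q - goal||^2 (pulls toward the goal)."""
    return (-zeta * (q[0] - goal[0]), -zeta * (q[1] - goal[1]))


def repulsive_force(q: Point, obstacles: Iterable[Point],
                    eta: float = 1.0, rho0: float = 1.0) -> Point:
    """Sum of -grad U_rep, U_rep = 0.5*eta*(1/rho - 1/rho0)^2 for rho <= rho0, else 0."""
    fx = fy = 0.0
    for ox, oy in obstacles:
        dx, dy = q[0] - ox, q[1] - oy
        rho = math.hypot(dx, dy)
        if 0.0 < rho <= rho0:  # only obstacles inside the influence distance repel
            mag = eta * (1.0 / rho - 1.0 / rho0) / (rho * rho)
            fx += mag * dx / rho  # (dx, dy) / rho is the unit vector away from the obstacle
            fy += mag * dy / rho
    return (fx, fy)


def scan_to_points(ranges: Sequence[float], angle_min: float, angle_increment: float,
                   pose: Tuple[float, float, float],
                   range_min: float = 0.05, range_max: float = 10.0) -> List[Point]:
    """Project LaserScan-style ranges into the map frame (LIDAR assumed at robot origin)."""
    x, y, yaw = pose
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or not (range_min <= r <= range_max):
            continue  # drop invalid or out-of-range returns
        a = yaw + angle_min + i * angle_increment
        points.append((x + r * math.cos(a), y + r * math.sin(a)))
    return points


def apf_heading(q: Point, goal: Point, obstacles: Iterable[Point],
                zeta: float = 1.0, eta: float = 1.0, rho0: float = 1.0) -> float:
    """Direction (rad) of the total force, usable as the desired heading."""
    fa = attractive_force(q, goal, zeta)
    fr = repulsive_force(q, obstacles, eta, rho0)
    return math.atan2(fa[1] + fr[1], fa[0] + fr[0])
```

Because the robot simply follows the negative gradient of the summed potential, this classic form can stall where attractive and repulsive forces cancel (a local minimum). Any practical use has to address this limitation.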

3.2. Simulation Results

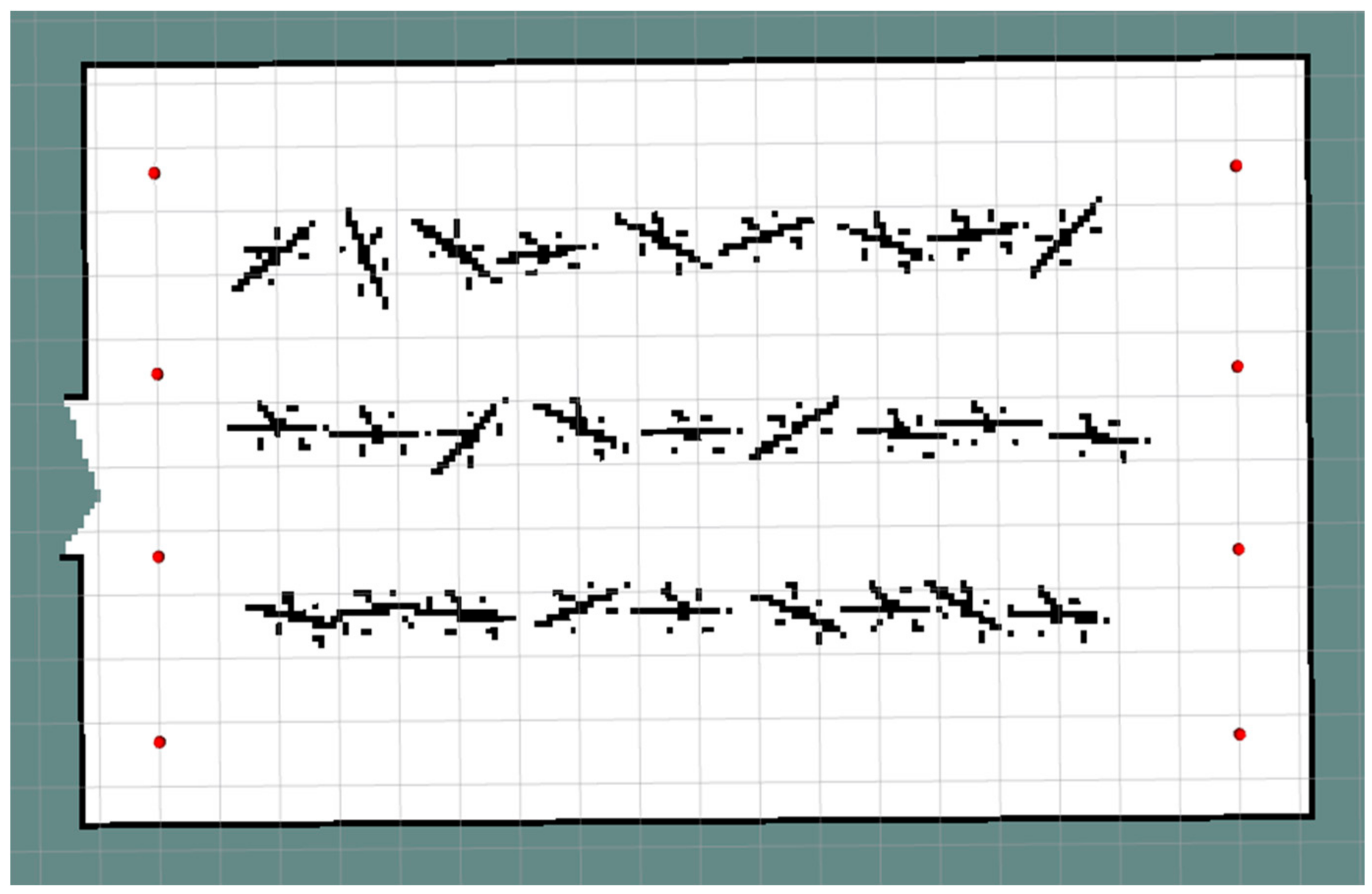

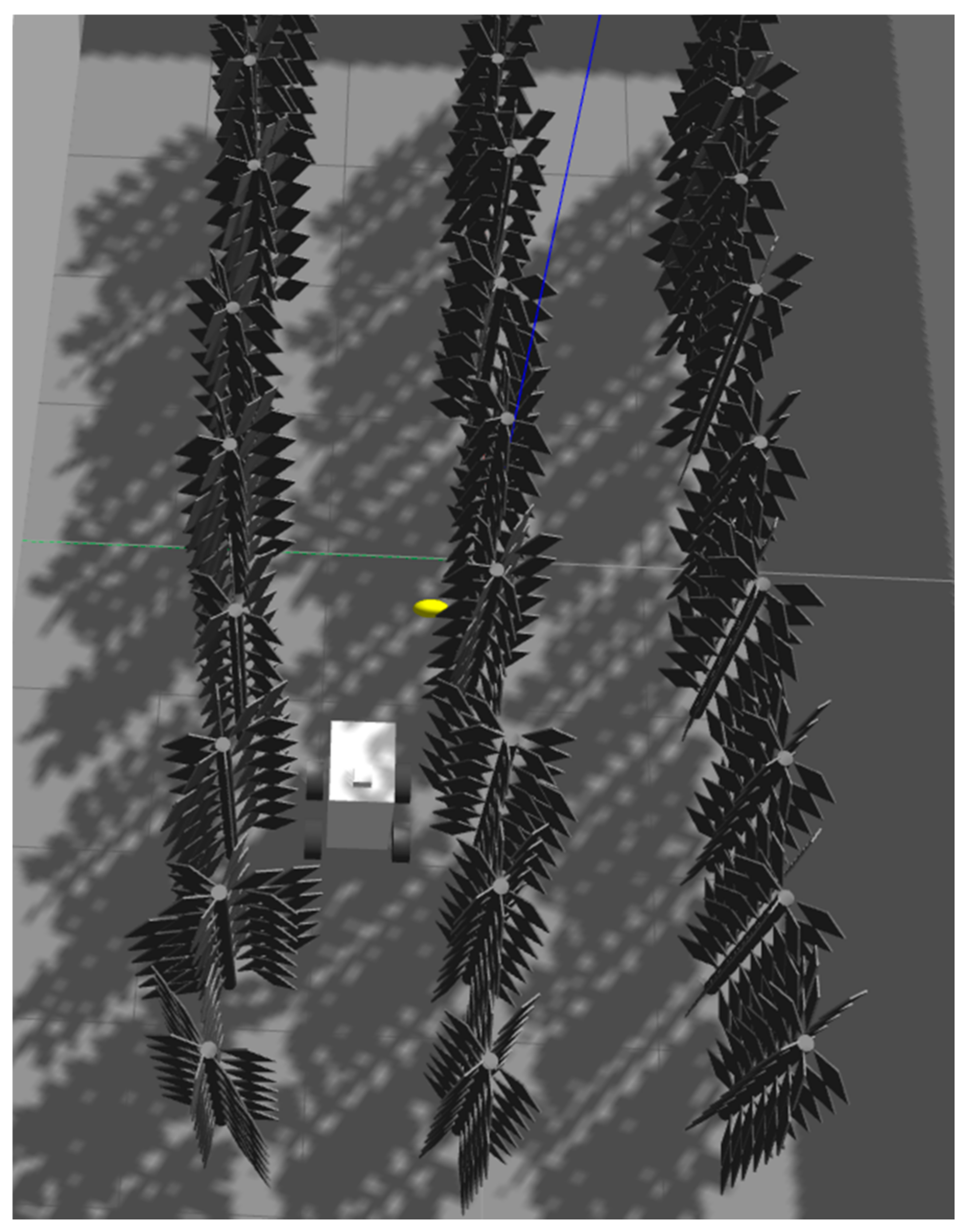

3.3. Dynamic Plant Development Simulation

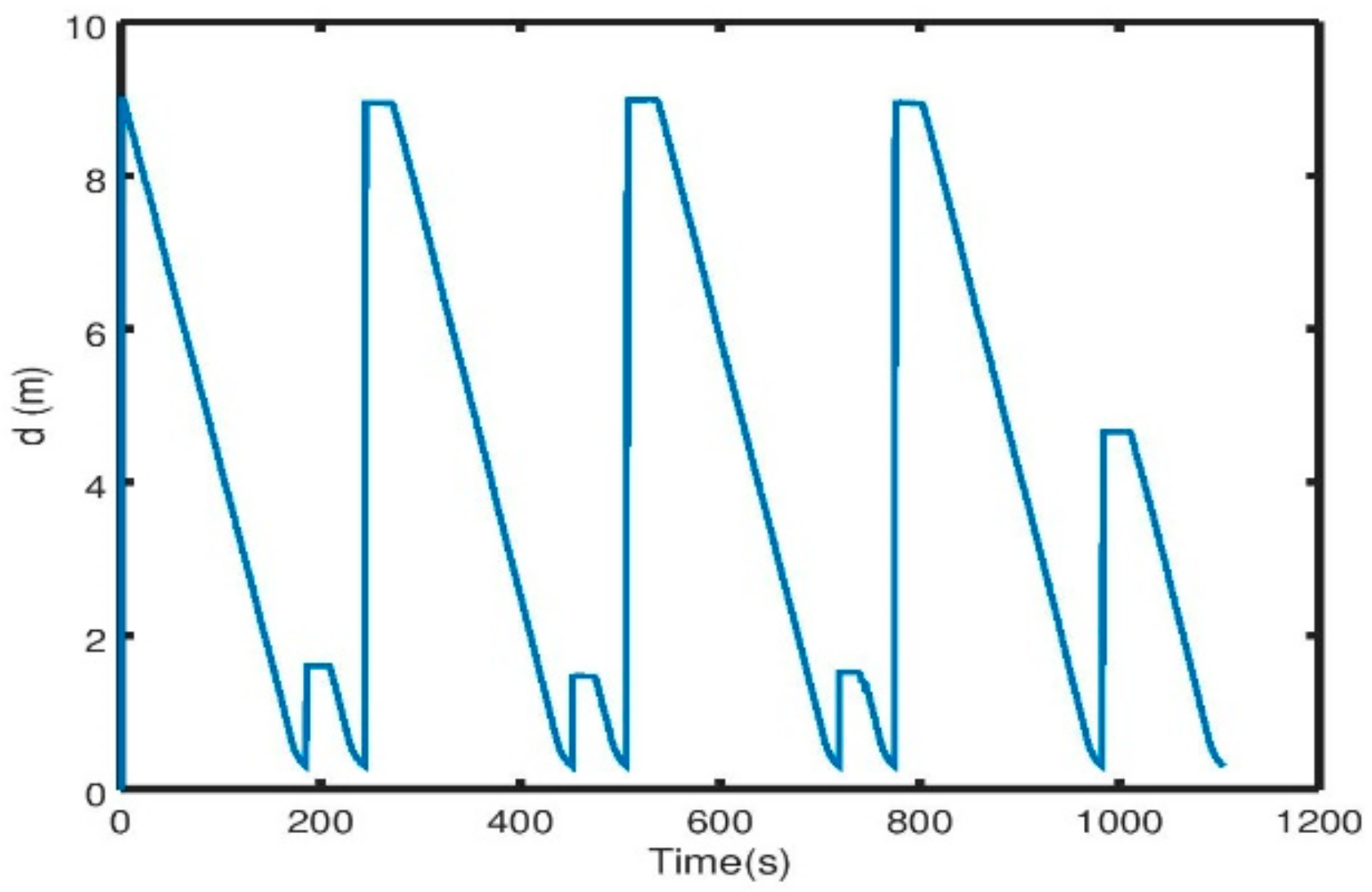

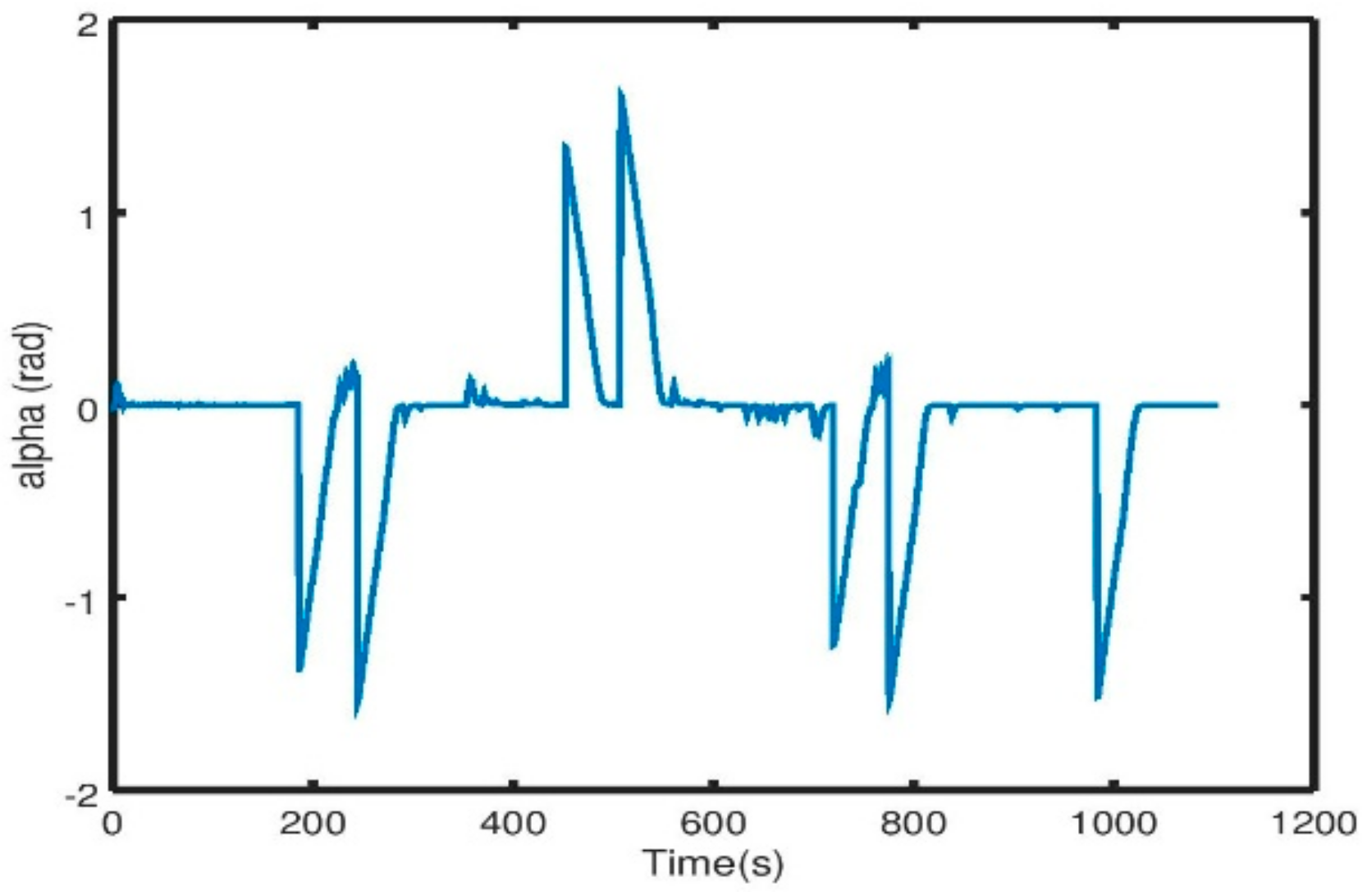

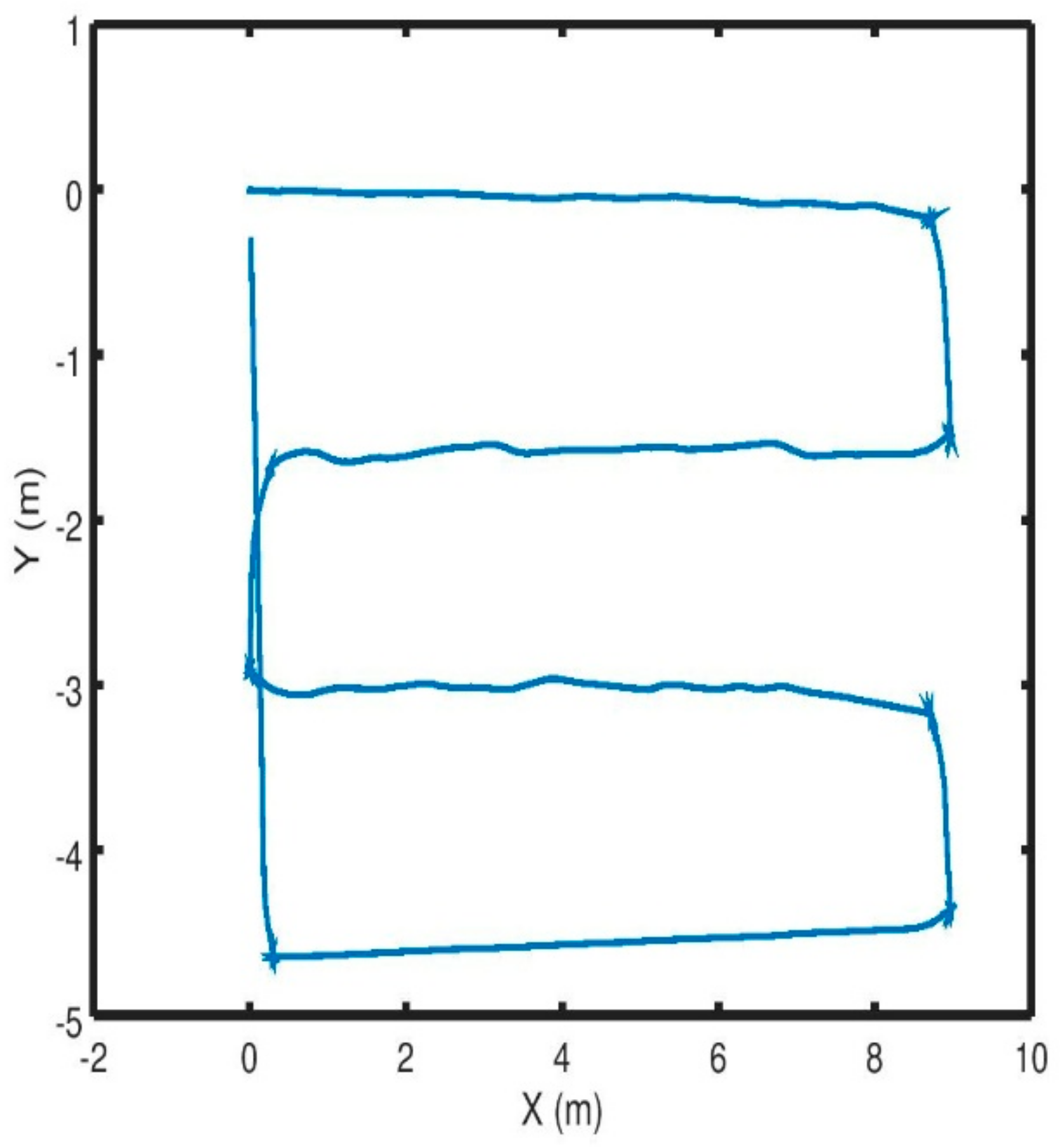

3.4. Experimental Results

3.5. Robustness: Experimental Analysis

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Harik, E.H.C.; Korsaeth, A. Combining Hector SLAM and Artificial Potential Field for Autonomous Navigation Inside a Greenhouse. Robotics 2018, 7, 22. https://doi.org/10.3390/robotics7020022

Harik EHC, Korsaeth A. Combining Hector SLAM and Artificial Potential Field for Autonomous Navigation Inside a Greenhouse. Robotics. 2018; 7(2):22. https://doi.org/10.3390/robotics7020022

Chicago/Turabian Style: Harik, El Houssein Chouaib, and Audun Korsaeth. 2018. "Combining Hector SLAM and Artificial Potential Field for Autonomous Navigation Inside a Greenhouse" Robotics 7, no. 2: 22. https://doi.org/10.3390/robotics7020022