FPGA-Based Real-Time Motion Detection for Automated Video Surveillance Systems

Abstract

1. Introduction

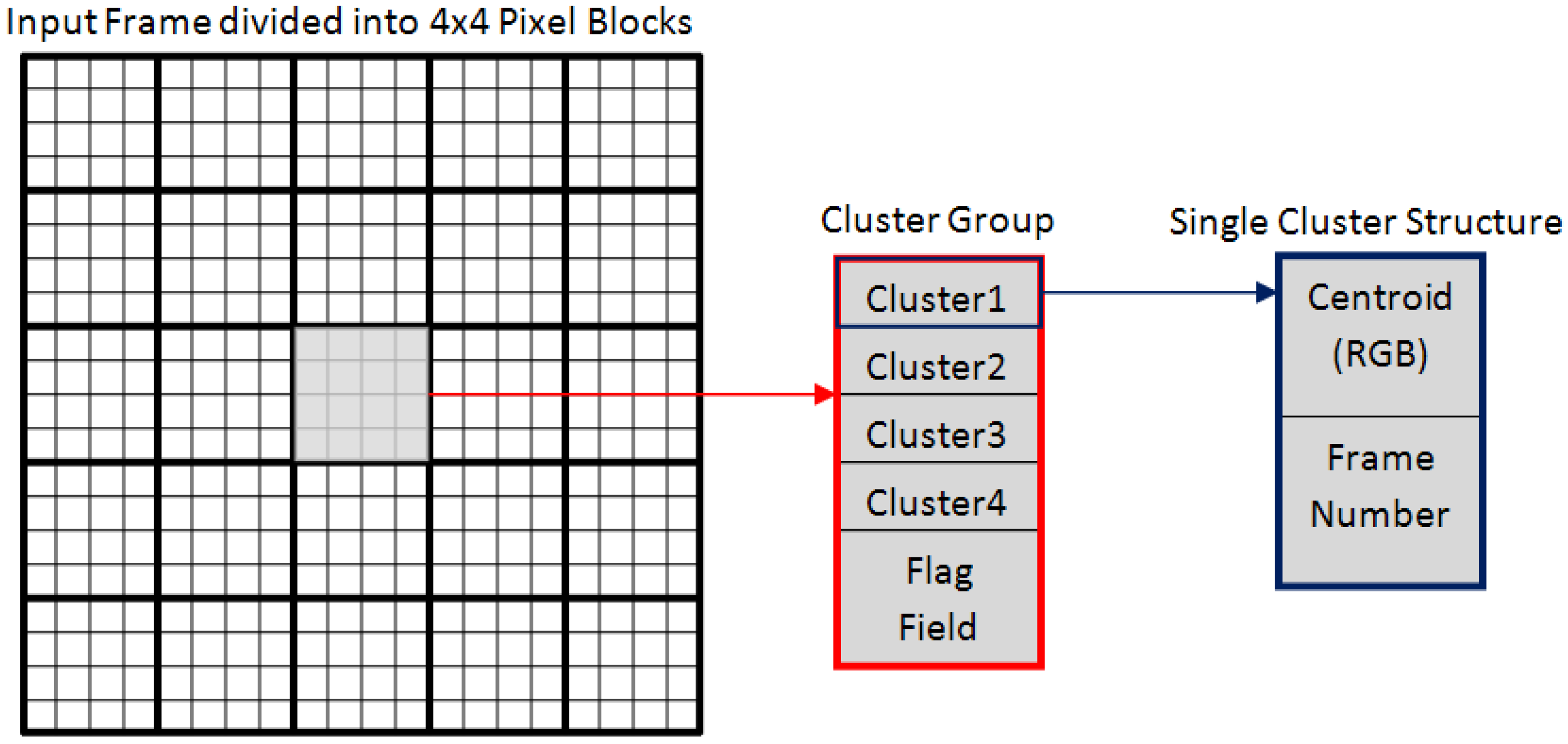

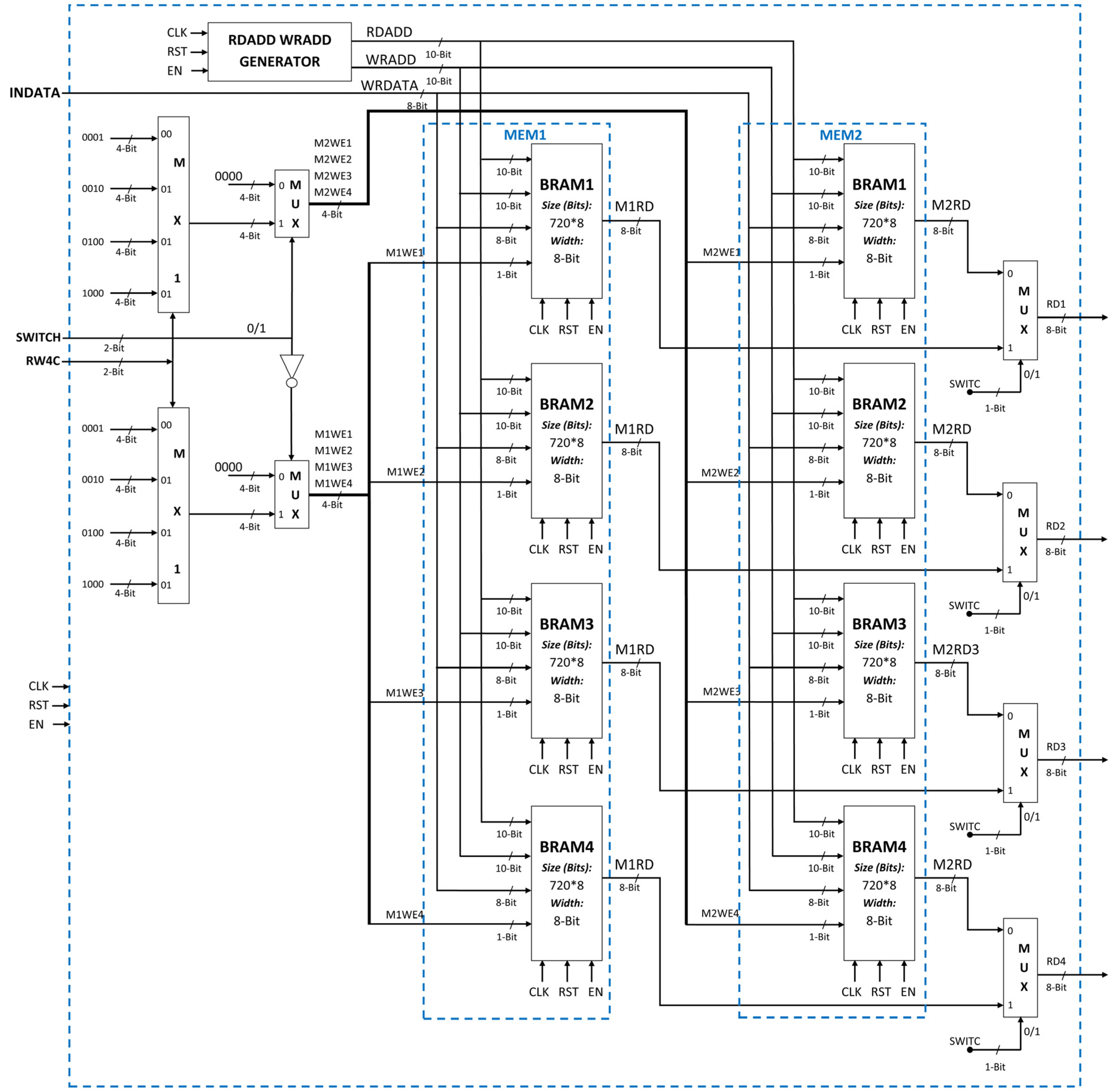

2. Motion Detection Algorithm

- Block Centroid Computation: Each incoming frame is partitioned into 4 × 4 blocks. For each block, the block centroid in RGB color space is computed by averaging the color values of the 16 pixels of that block. The block centroid is 24 bits wide (8 bits for each of the R, G, and B color components).

- Cluster Group Initialization: Initialization is performed during the first four frames. In frame k (k = 1, 2, 3, 4), the k-th cluster of each block is initialized: its centroid is set to the block centroid of the corresponding block of frame k, and its frame number is set to k. In this way, all four clusters of each cluster group are initialized by the end of the fourth frame.

- Cluster Matching: After initialization, each block of an incoming frame is compared against its corresponding cluster group in order to find a matching cluster. For each cluster in the cluster group, the difference between its centroid and the current block centroid is computed. The cluster with the minimum centroid difference is considered the matching cluster, provided that this difference is below the user-defined threshold. To simplify the computation, the Manhattan distance (sum of absolute differences) is used, which avoids the overhead of multiplication in the difference computation [23]. Eliminating multiplications reduces the computational complexity of the algorithm, because multipliers are costly in hardware.

- Cluster Update: If, for a given block, a matching cluster is found within the cluster group, then the matching cluster is updated. The frame number of the matching cluster is replaced by the current frame number and the centroid of the matching cluster is replaced by the average value of the matching cluster centroid and the incoming current block centroid.

- Cluster Replace: If, for a given block, no matching cluster is found within the cluster group, then the oldest cluster, i.e., the one that has not been updated for the longest time (the cluster with the minimum frame number), is deleted. A new cluster is created in its place, with the current block centroid as its centroid and the current frame number as its frame number.

- Classification: For a given block, if no matching cluster is found and the oldest cluster is replaced, the incoming block does not match any of the background models. It is marked as a motion-detected block by setting the motion flag field of the block to “1”. If a matching cluster is found within the cluster group and updated, the incoming block belongs to the background, and the motion flag field of the block is set to “0” (i.e., no motion detected).
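The initialization, matching, update, replace, and classification steps above can be sketched in software for a single block as follows. This is a minimal illustration, not the hardware implementation described in this article: the names `Cluster`, `manhattan`, and `process_block` are hypothetical, the averaging uses truncating integer division, and the motion flag is assumed to be 0 during the four initialization frames.

```python
from dataclasses import dataclass
from typing import List, Tuple

Centroid = Tuple[int, int, int]  # (R, G, B), 8 bits per component
NUM_CLUSTERS = 4                 # clusters per cluster group, as in the text


@dataclass
class Cluster:
    centroid: Centroid
    frame_number: int


def manhattan(a: Centroid, b: Centroid) -> int:
    """Sum of absolute differences over R, G, B (no multiplications)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def process_block(group: List[Cluster], block: Centroid,
                  frame_number: int, threshold: int) -> int:
    """Update one block's cluster group in place and return its motion flag."""
    # Initialization: each of the first four frames fills one cluster.
    if len(group) < NUM_CLUSTERS:
        group.append(Cluster(block, frame_number))
        return 0  # assumption: no motion is flagged during initialization

    # Cluster matching: minimum centroid difference, accepted if below threshold.
    best = min(group, key=lambda c: manhattan(c.centroid, block))
    if manhattan(best.centroid, block) < threshold:
        # Cluster update: average the centroids (truncating, an assumption)
        # and stamp the cluster with the current frame number.
        best.centroid = (
            (best.centroid[0] + block[0]) // 2,
            (best.centroid[1] + block[1]) // 2,
            (best.centroid[2] + block[2]) // 2,
        )
        best.frame_number = frame_number
        return 0  # block belongs to the background

    # Cluster replace: overwrite the cluster with the minimum frame number.
    oldest = min(group, key=lambda c: c.frame_number)
    oldest.centroid = block
    oldest.frame_number = frame_number
    return 1  # motion detected


# Example: four initialization frames, then one background and one motion block.
group: List[Cluster] = []
init = [(10, 10, 10), (12, 11, 10), (200, 200, 200), (50, 60, 70)]
for n, centroid in enumerate(init, start=1):
    process_block(group, centroid, n, threshold=30)
flag_background = process_block(group, (11, 10, 10), 5, threshold=30)
flag_motion = process_block(group, (0, 255, 0), 6, threshold=30)
```

In the example, the frame 5 block lies within the threshold of the first cluster and refreshes it, while the frame 6 block matches nothing and replaces the cluster last touched in frame 2.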

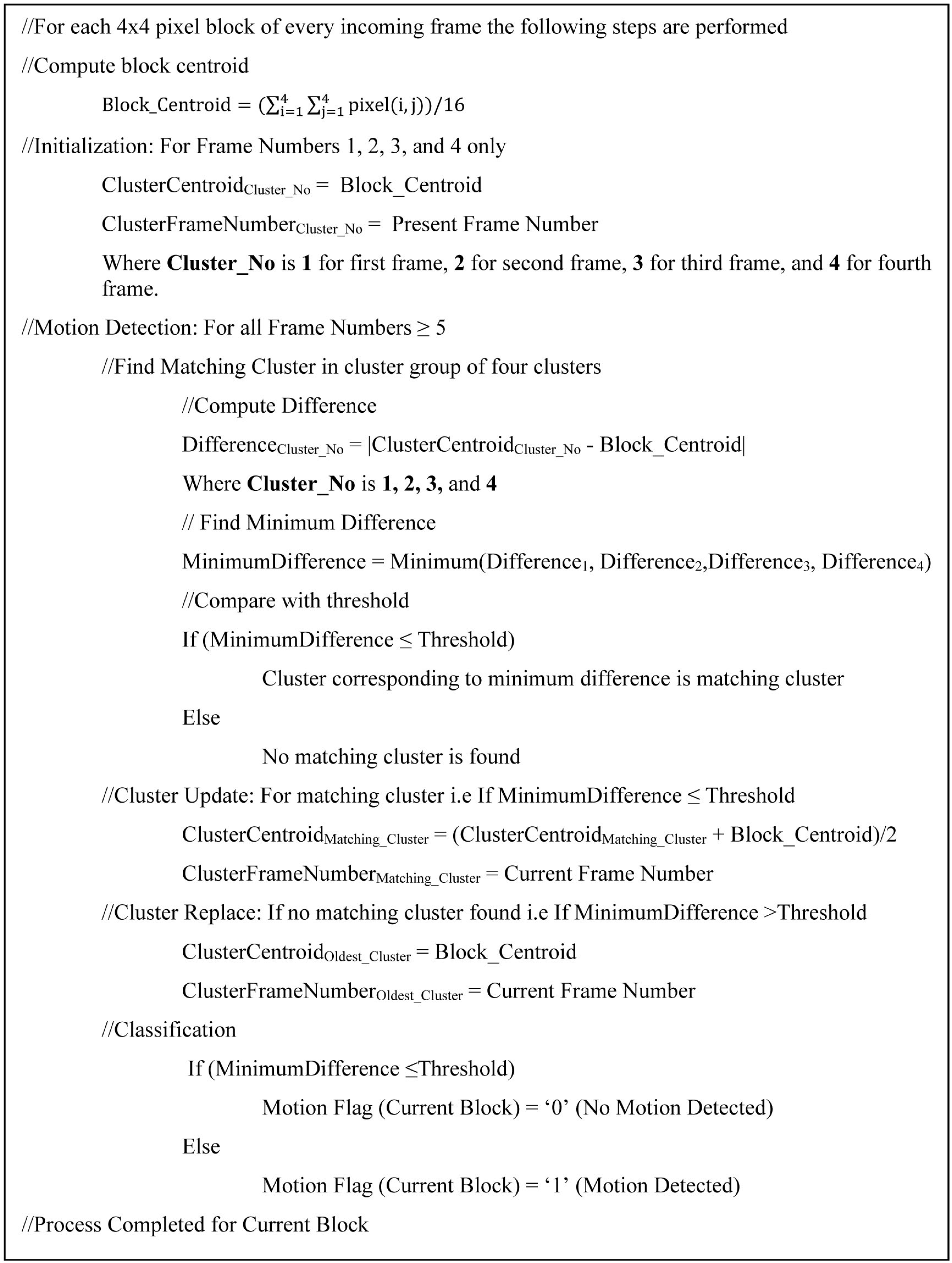
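The block centroid computation that feeds the cluster group can be illustrated separately. The sketch below assumes a frame stored as a list of rows of (R, G, B) tuples whose height and width are multiples of 4; the function name `block_centroids` is hypothetical. Because each block holds 16 pixels, the average is taken as a right shift by 4, which truncates the result (an assumption about rounding).

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), 8 bits per component
BLOCK = 4                     # block edge length in pixels


def block_centroids(frame: List[List[Pixel]]) -> List[List[Pixel]]:
    """Return one averaged (R, G, B) centroid per 4 x 4 block of the frame."""
    rows, cols = len(frame), len(frame[0])
    centroids = []
    for by in range(0, rows, BLOCK):
        row_out = []
        for bx in range(0, cols, BLOCK):
            sums = [0, 0, 0]
            # Accumulate each color component over the 16 pixels of the block.
            for y in range(by, by + BLOCK):
                for x in range(bx, bx + BLOCK):
                    for ch in range(3):
                        sums[ch] += frame[y][x][ch]
            # Divide by 16 with a shift; each result still fits in 8 bits.
            row_out.append((sums[0] >> 4, sums[1] >> 4, sums[2] >> 4))
        centroids.append(row_out)
    return centroids


# Example: a 4 x 8 frame, i.e., two blocks side by side.
example_frame = [
    [((0, 0, 0) if y < 2 else (10, 20, 30)) if x < 4 else (255, 0, 1)
     for x in range(8)]
    for y in range(4)
]
example_centroids = block_centroids(example_frame)
```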

3. Motion Detection System

4. Proposed Architecture

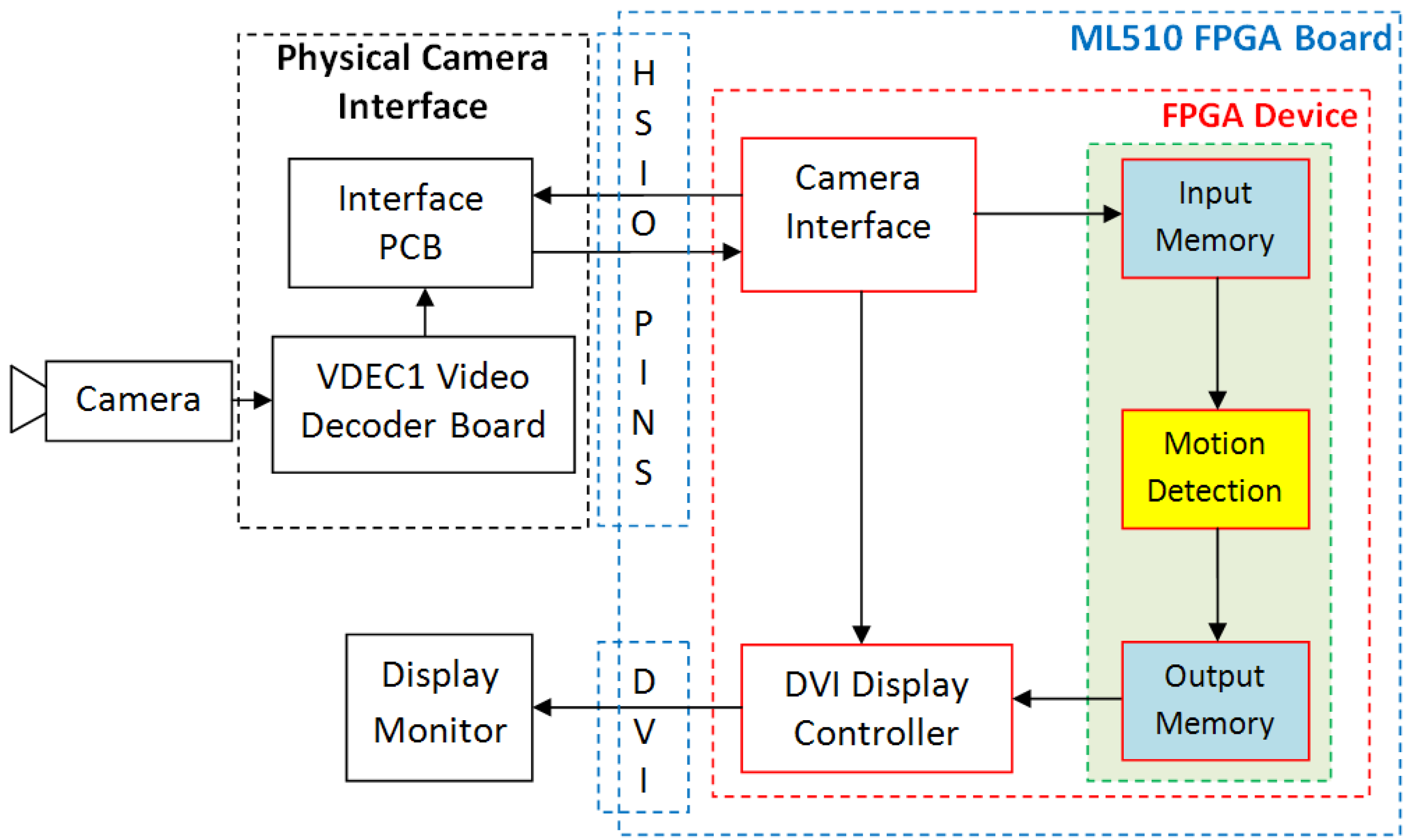

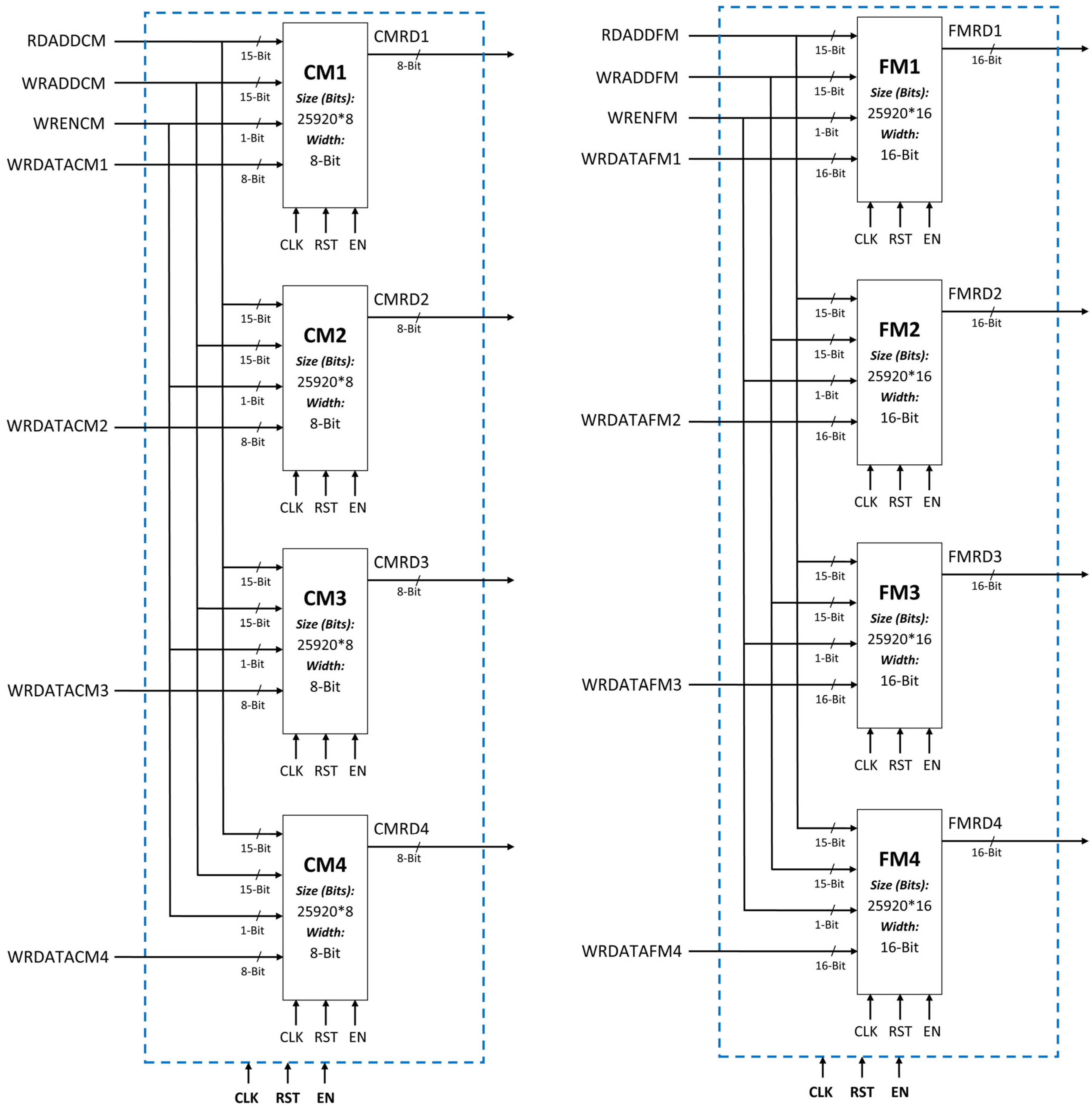

4.1. Input Buffer Memory

4.2. Minimum Centroid Difference Computation

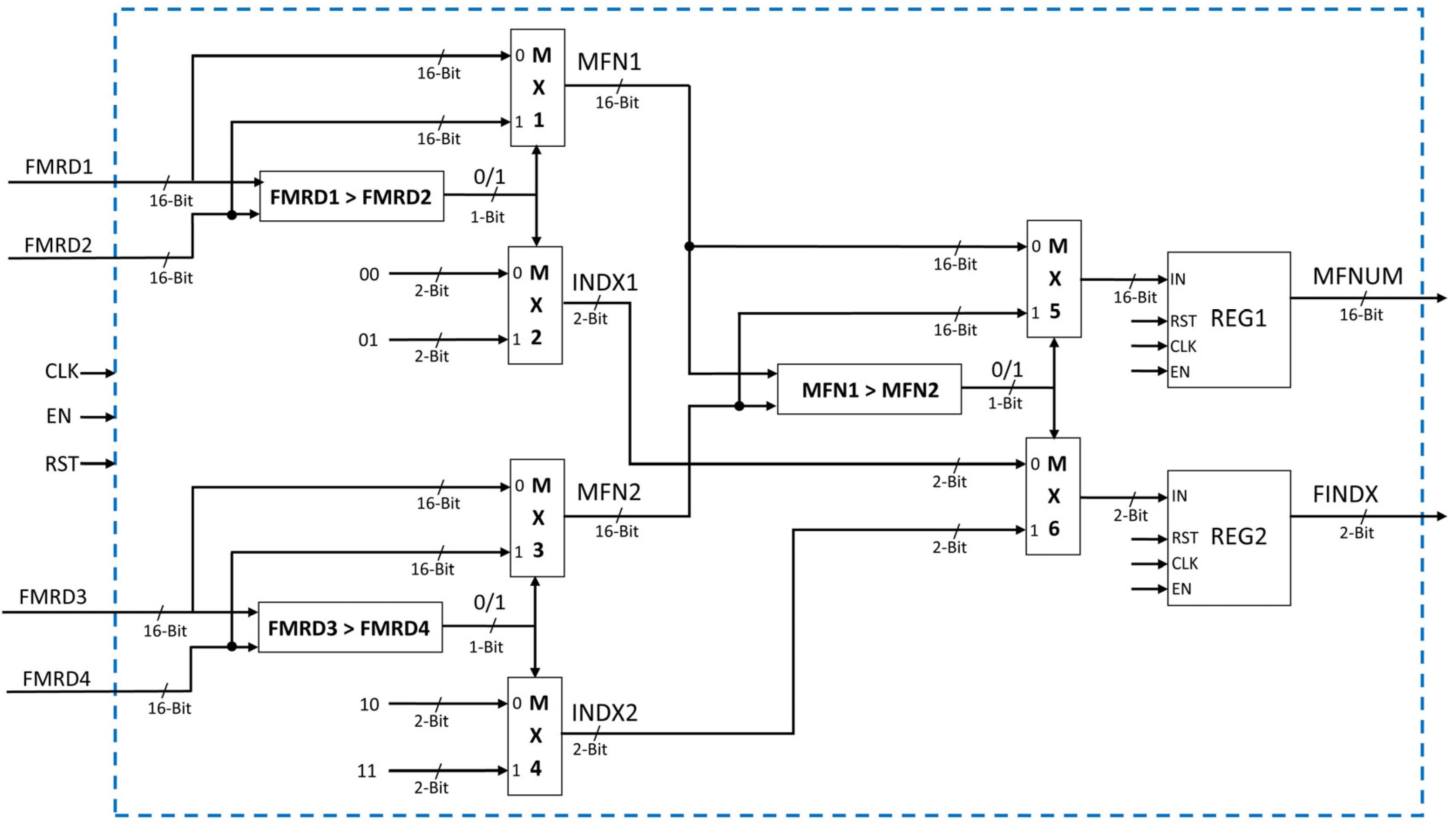

4.3. Minimum Frame Number Computation

4.4. Parameter Memory

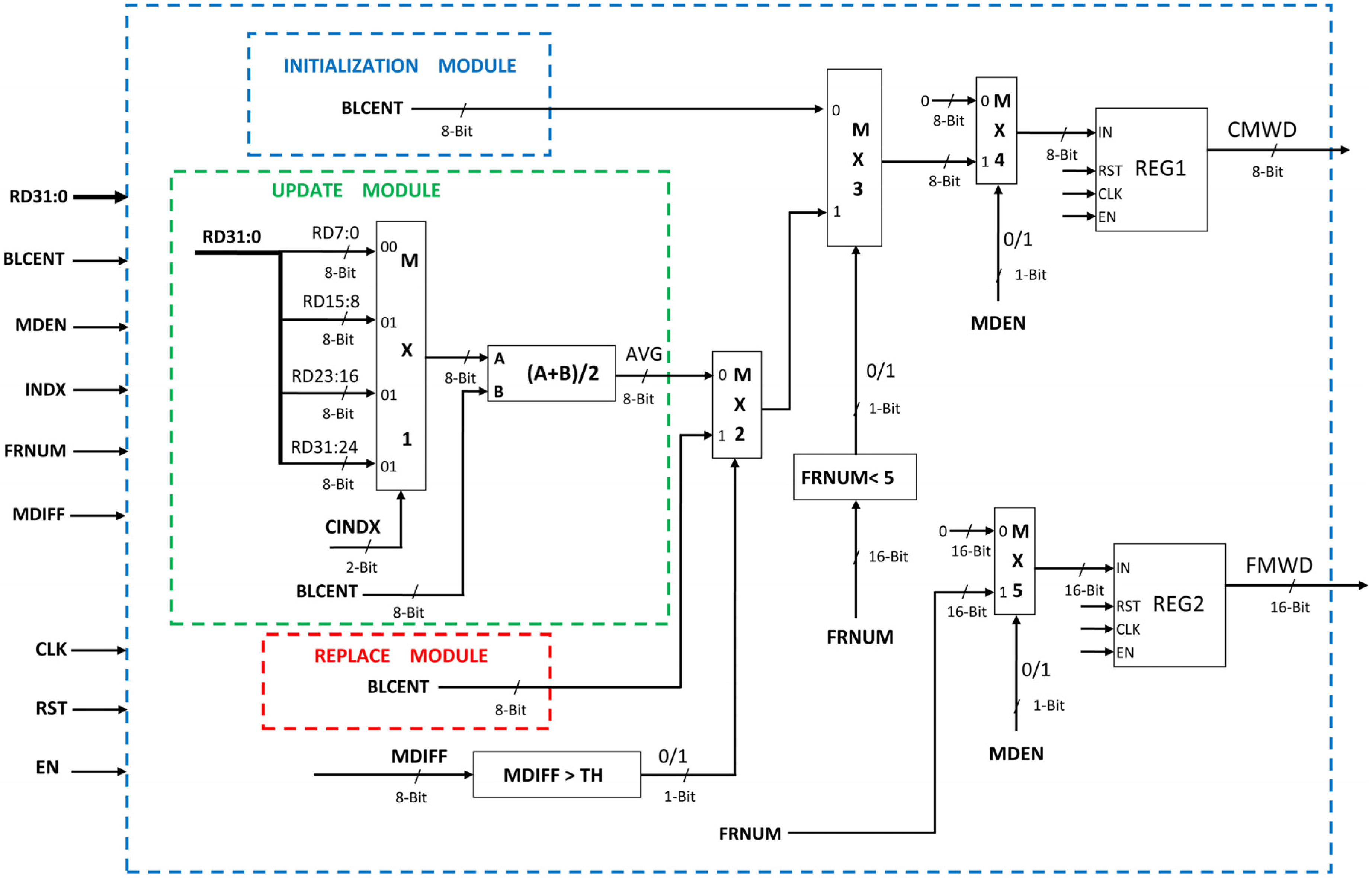

4.5. Initialize-Update-Replace Module

5. Synthesis Results

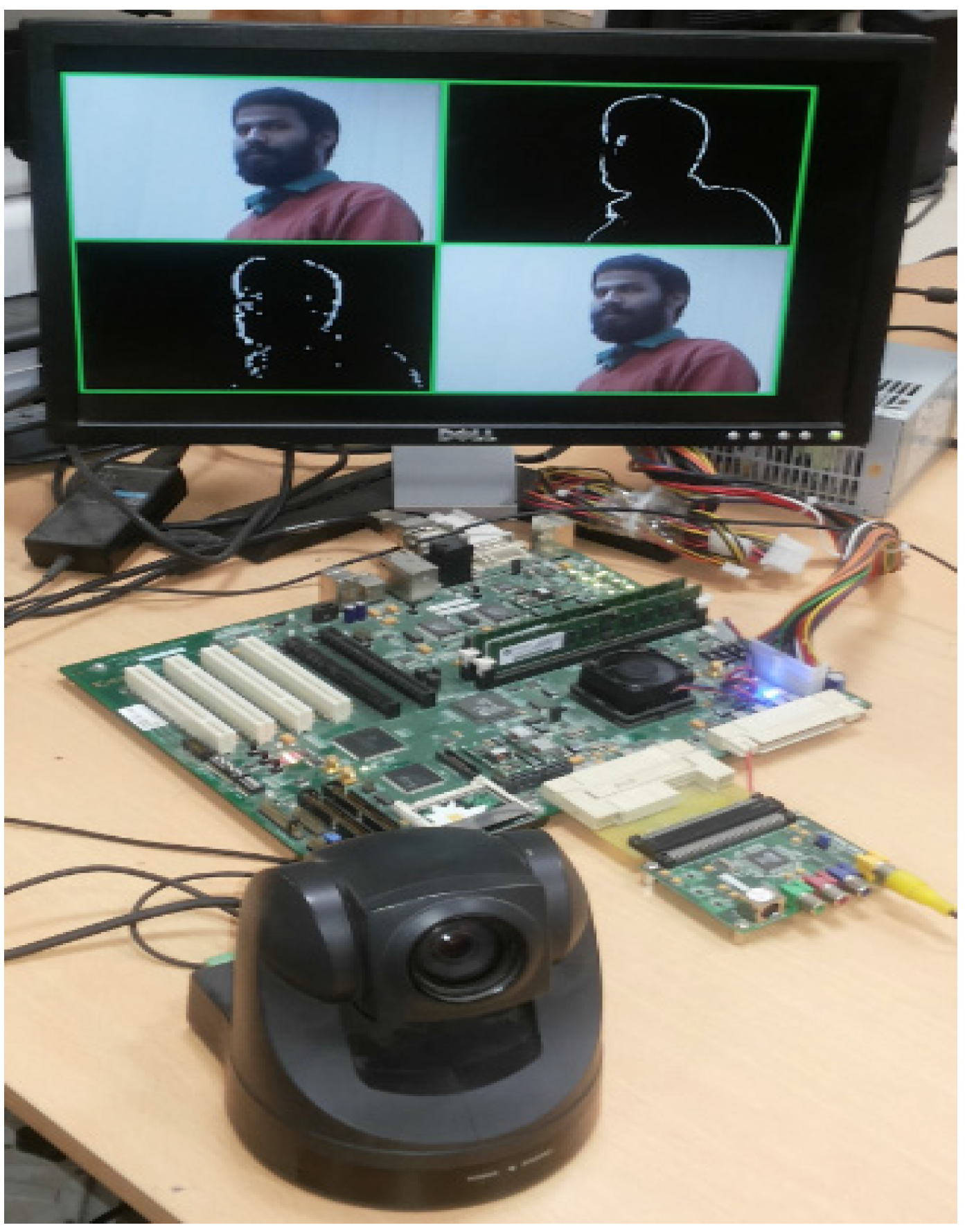

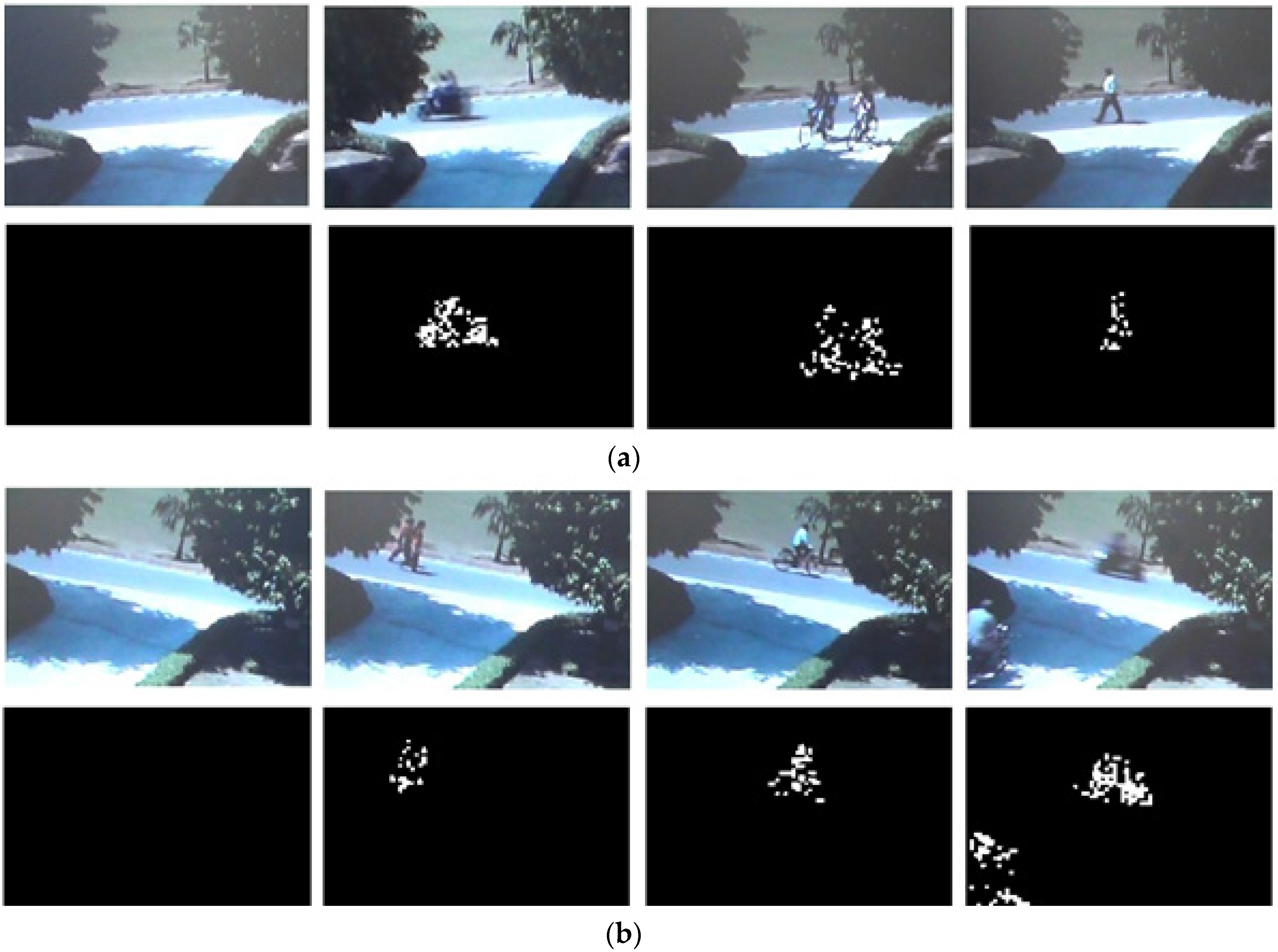

6. Motion Detection Results

7. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Radke, R.J.; Andra, S.; Kofahi, O.A.; Roysam, B. Image Change Detection Algorithms: A Systematic Survey. IEEE Trans. Image Process. 2005, 14, 294–307.

- Rosin, P.L. Thresholding for Change Detection. In Proceedings of the Sixth International Conference on Computer Vision, Bombay, India, 4–7 January 1998; pp. 274–279.

- Rosin, P.L.; Ioannidis, E. Evaluation of Global Image Thresholding for Change Detection. Pattern Recognit. Lett. 2001, 24, 2345–2356.

- Smits, P.C.; Annoni, A. Toward Specification-Driven Change Detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1484–1488.

- Bruzzone, L.; Prieto, D.F. Automatic Analysis of the Difference Image for Unsupervised Change Detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

- Colwell, J.E.; Weber, F.P. Forest Change Detection. In Proceedings of the 15th International Symposium on Remote Sensing of the Environment, Ann Arbor, MI, USA, 11–15 May 1981; pp. 839–852.

- Malila, W.A. Change Vector Analysis: An Approach for Detecting Forest Changes with Landsat. In Proceedings of the Symposium on Machine Processing of Remotely Sensed Data, West Lafayette, IN, USA, 3–6 June 1980; pp. 326–336.

- Singh, A. Review Article: Digital Change Detection Techniques using Remotely-sensed Data. Int. J. Remote Sens. 1989, 10, 989–1003.

- Stefano, L.D.; Mattoccia, S.; Mola, M. A Change-detection Algorithm based on Structure and Color. In Proceedings of the IEEE Conference on Advanced Video and Signal-Based Surveillance, 21–22 July 2003; pp. 252–259.

- Hsu, Y.Z.; Nagel, H.H.; Rekers, G. New Likelihood Test Methods for Change Detection in Image Sequences. Comput. Vision Graph. Image Process. 1984, 26, 73–106.

- Skifstad, K.; Jain, R. Illumination Independent Change Detection for Real World Image Sequences. Comput. Vision Graph. Image Process. 1989, 46, 387–399.

- Elfishawy, A.S.; Kesler, S.B.; Abutaleb, A.S. Adaptive Algorithms for Change Detection in Image Sequence. Signal Process. 1991, 23, 179–191.

- Jain, Z.S.; Chau, Y.A. Optimum Multisensor Data Fusion for Image Change Detection. IEEE Trans. Syst. Man Cybern. 1995, 25, 1340–1347.

- Toyama, K.; Krumm, J.; Brumitt, B.; Meyers, B. Wallflower: Principles and Practice of Background Maintenance. In Proceedings of the Seventh International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 255–261.

- Clifton, C. Change Detection in Overhead Imagery using Neural Networks. Appl. Intell. 2003, 18, 215–234.

- Durucan, E.; Ebrahimi, T. Change Detection and Background Extraction by Linear Algebra. In Proceedings of the IEEE, October 2001; Volume 89, pp. 1368–1381.

- Li, L.; Leung, M.K.H. Integrating Intensity and Texture Differences for Robust Change Detection. IEEE Trans. Image Process. 2002, 11, 105–112.

- Liu, S.C.; Fu, C.W.; Chang, S. Statistical Change Detection with Moments under Time-Varying Illumination. IEEE Trans. Image Process. 1998, 7, 1258–1268.

- Cavallaro, A.; Ebrahimi, T. Video Object Extraction based on Adaptive Background and Statistical Change Detection. In Proceedings of the SPIE Visual Communications and Image Processing, San Jose, CA, USA, 20 January 2001; pp. 465–475.

- Huwer, S.; Niemann, H. Adaptive Change Detection for Real-Time Surveillance Applications. In Proceedings of the Third IEEE International Workshop on Visual Surveillance, Dublin, Ireland, 1 July 2000; pp. 37–46.

- Kanade, T.; Collins, R.T.; Lipton, A.J.; Burt, P.; Wixson, L. Advances in Cooperative Multi-Sensor Video Surveillance. In Proceedings of the DARPA Image Understanding Workshop, San Francisco, USA, 20 November 1998; pp. 3–24.

- Stauffer, C.; Grimson, W.E.L. Learning Patterns of Activity using Real-Time Tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 747–757.

- Butler, D.E.; Bove, V.M.; Sridharan, S. Real-Time Adaptive Foreground/Background Segmentation. EURASIP J. Appl. Signal Process. 2005, 2005, 2292–2304.

- Dumontier, C.; Luthon, F.; Charras, J.P. Real-Time Implementation of An MRF-based Motion Detection Algorithm on a DSP Board. In Proceedings of the IEEE Workshop on Digital Signal Processing, Leon, 1–4 September 1996; pp. 183–186.

- Dumontier, C.; Luthon, F.; Charras, J.P. Real-Time DSP Implementation for MRF-based Video Motion Detection. IEEE Trans. Image Process. 1999, 8, 1341–1347.

- Bassignana, P.; Martina, M.; Masera, G.; Molino, A.; Vacca, F. DSP Implementation of a Low Complexity Motion Detection Algorithm. In Proceedings of the 39th Asilomar Conference on Signals, Systems and Computers, Pacific Grove, USA, 28 October–1 November 2005; pp. 1352–1355.

- Benkhalil, A.K.; Ipson, S.S.; Booth, W. A Novel CPLD based Implementation of a Motion Detection Algorithm for Surveillance Applications. In Proceedings of the International Conference on Custom Integrated Circuits, Santa Clara, CA, USA, 11–14 May 1998; pp. 105–108.

- Liu, Z.; Li, Z.; Li, G.; Zhang, X.; Zhang, H. A Novel Motion-Detection and Edge Detection Algorithm Based on Motion Estimation. In Recent Advances in Computer and Information Engineering; Springer: Berlin, Heidelberg, 2012; Volume 128, pp. 295–308.

- Luthon, F.; Dragomirescu, D. A Cellular Analog Network for MRF Based Video Motion Detection. IEEE Trans. Circuits Syst. I 1999, 46, 281–293.

- Kristensen, F.; Hedberg, H.; Jiang, H.; Nilsson, P.; Öwall, V. An Embedded Real-Time Surveillance System: Implementation and Evaluation. J. Signal Process. Syst. 2008, 52, 75–94.

- Jiang, H.; Ardö, H.; Öwall, V. A Hardware Architecture for Real-time Video Segmentation Utilizing Memory Reduction Techniques. IEEE Trans. Circuits Syst. Video Technol. 2009, 19, 226–236.

- Genovese, M.; Napoli, E.; Petra, N. OpenCV Compatible Real Time Processor for Background Foreground Identification. In Proceedings of the International Conference on Microelectronics, Cairo, Egypt, 19–22 December 2010; pp. 467–470.

- Genovese, M.; Napoli, E. FPGA-Based Architecture for Real Time Segmentation and Denoising of HD Video. J. Real Time Image Process. 2013, 8, 389–401.

- Genovese, M.; Napoli, E. ASIC and FPGA Implementation of the Gaussian Mixture Model Algorithm for Real-time Segmentation of High Definition Video. IEEE Trans. Very Large Scale Integr. 2014, 22, 537–547.

- Yu, N.; Kim, K.; Salcic, Z. A New Motion Estimation Algorithm for Mobile Real-Time Video and Its FPGA Implementation. In Proceedings of the IEEE Region 10 Conference TENCON, Thailand, 21–24 November 2004; pp. 383–386.

- Saad, E.M.; Hamdy, A.; Abutaleb, M.M. Reconfigurable Hardware Implementation of a Fast and Efficient Motion Detection Algorithm. In Proceedings of the 10th International Conference on Mathematical Methods and Computational Techniques in Electrical Engineering, Corfu Island, Greece, 26–28 October 2008; pp. 40–45.

- Zhang, T.; Wu, H.; Borst, A.; Kuhnlenz, K.; Buss, M. An FPGA Implementation of Insect-Inspired Motion Detector for High-Speed Vision Systems. In Proceedings of the International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; pp. 335–340.

- Hussian, S.N.; Rao, K.S.; Ashfaq, S.M. The Hardware Implementation of Motion Object Detection Based on Background Subtraction. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2013, 3, 1297–1302.

- Liang, H.; Morie, T. A Motion Detection Model Inspired by Hippocampal Function and Its FPGA Implementation. In Proceedings of the International Conference on Neural Information Processing, Shanghai, China, 13–17 November 2011; Volume 7064, pp. 522–529.

- McErlean, M. Hierarchical Motion Estimation for Embedded Object Tracking. In Proceedings of the IEEE International Symposium on Signal Processing and Information Technology, Vancouver, BC, Canada, 27–30 August 2006; pp. 797–802.

- Sanchez-Ferreira, C.; Mori, J.Y.; Llanos, C.H. Background Subtraction Algorithm for Moving Object Detection in FPGA. In Proceedings of the Southern Conference on Programmable Logic, Bento Goncalves, Brazil, 20–23 March 2012; pp. 1–6.

- Bing, X.; Charoensak, C. Rapid FPGA Prototyping of Gabor-Wavelet Transform for Applications in Motion Detection. In Proceedings of the 7th International Conference on Control, Automation, Robotics and Vision, Singapore, 2–5 December 2002; Volume 3, pp. 1653–1657.

- Desmouliers, C.; Oruklu, E.; Saniie, J. FPGA-Based Design of a High-Performance and Modular Video Processing Platform. In Proceedings of the International Conference on Electro/Information Technology, Windsor, ON, Canada, 7–9 June 2009; pp. 393–398.

- Singh, S.; Mandal, A.S.; Shekhar, C.; Vohra, A. Real-time Implementation of Change Detection for Automated Video Surveillance System. ISRN Electron. 2013, 2013. Article ID 691930.

- Singh, S.; Dunga, S.M.; Saini, R.; Mandal, A.S.; Shekhar, C.; Vohra, A.; Chaudhary, S. Hardware Accelerator Design for Change Detection in Smart Camera. In Proceedings of the SPIE International Conference on Graphics and Image Processing (ICGIP 2011), Cairo, Egypt, 30 September 2011.

- Bartosinski, R.; Danek, M.; Sykora, J.; Kohout, L.; Honzik, P. Video Surveillance Application Based on Application Specific Vector Processors. In Proceedings of the International Conference on Design and Architecture for Signal and Image Processing, Karlsruhe, Germany, 23–25 October 2012; pp. 1–8.

- Saad, E.M.; Hamdy, A.; Abutaleb, M.M. FPGA Implementation of a Low Cost and Area Real-Time Motion Detection. In Proceedings of the 15th International Conference on Mixed Design of Integrated Circuits and Systems, Poznan, Poland, 19–21 June 2008; pp. 249–254.

- Chowdary, M.K.; Babu, S.S.; Babu, S.S.; Khan, H. FPGA Implementation of Moving Object Detection in Frames by Using Background Subtraction Algorithm. In Proceedings of the International Conference on Communication and Signal Processing, Melmaruvathur, India, 3–5 April 2013; pp. 1032–1036.

- Hao, J.; Shibata, T. A VLSI-Implementation-Friendly EGO-Motion Detection Algorithm Based on Edge-Histogram Matching. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Toulouse, 14–19 May 2006; Volume 2, pp. 245–248.

- Singh, S.; Dunga, S.M.; Mandal, A.S.; Shekhar, C.; Chaudhary, S. FPGA based Embedded Implementation of Video Summary Generation Scheme in Smart Camera Systems. Adv. Mater. Res. 2011, 403–408, 516–521.

- Singh, S.; Dunga, S.M.; Mandal, A.S.; Chaudhary, S. Embedded Implementation of Change Detection Algorithm for Smart Camera Systems. In Proceedings of the IEEE International Conference on Recent Trends in Information, Telecommunication, and Computing, Kochi, Kerala, 12–13 March 2010; pp. 268–270.

- Singh, S.; Saini, R.; Saini, A.K.; Mandal, A.S.; Shekhar, C. Performance Evaluation of Different Memory Components for FPGA based Embedded System Design for Video Processing Application; International Journal of Intelligent Systems and Applications (IJISA), MECS Press: Hong Kong, China, 2013; Volume 5, pp. 113–119.

- Chutani, E.R.; Chaudhury, S. Video Trans-Coding in Smart Camera for Ubiquitous Multimedia Environment. In Proceedings of the International Symposium on Ubiquitous Multimedia Computing, Hobart, Australia, 13–15 October 2008; pp. 185–189.

- Rodriguez-Gomez, R.; Fernandez-Sanchez, E.J.; Diaz, J.; Ros, E. FPGA Implementation for Real-Time Background Subtraction Based on Horprasert Model. Sensors 2012, 12, 585–611.

| Resources | Camera Interface (CI) | Display (DVI) | Proposed Architecture (PA) | Complete System (CI + PA + DVI) | Total Available Resources | Percentage of Utilization |
|---|---|---|---|---|---|---|
| Slice Registers | 391 | 79 | 239 | 701 | 81920 | 0.86% |
| Slice LUTs | 434 | 101 | 1379 | 1884 | 81920 | 2.30% |
| Route-thrus | 42 | 39 | 62 | 122 | 163840 | 0.07% |
| Occupied Slices | 199 | 33 | 467 | 697 | 20840 | 3.34% |
| BRAMs 36K | 3 | 0 | 168 | 171 | 298 | 57.38% |
| Memory (Kb) | 108 | 0 | 6048 | 6156 | 10728 | 57.38% |
| DSP Slices | 3 | 0 | 0 | 3 | 320 | 0.94% |
| IOs | 16 | 22 | 36 | 36 | 840 | 4.28% |
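The two memory rows of the table are consistent with each 36K block RAM contributing 36 Kb, and the utilization column is the used count divided by the available count. The short check below reproduces the memory figures; the values are copied from the table, and the function names are hypothetical.

```python
BRAM_KBITS = 36  # capacity of one "BRAM 36K" block, in Kb


def memory_kb(bram_count: int) -> int:
    """Memory in Kb provided by a given number of 36K block RAMs."""
    return bram_count * BRAM_KBITS


def utilization_percent(used: int, available: int) -> float:
    """Percentage of a resource that is used, rounded to two decimals."""
    return round(100 * used / available, 2)


proposed_architecture_kb = memory_kb(168)      # BRAMs used by the PA column
complete_system_kb = memory_kb(171)            # BRAMs used by the complete system
bram_utilization = utilization_percent(171, 298)
```

Under this reading, almost all of the memory (168 of 171 block RAMs) is used by the proposed architecture rather than by the camera or display interfaces.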

| Target FPGA Device | Implementation | Maximum Clock Frequency (MHz) | Frame Rate for PAL Resolution Video (fps) |
|---|---|---|---|
| Virtex5 (xc5fx130t-2ff1738) | Our Implementation | 73.88 | 178 |
| Virtex5 (xc5vlx50-2ff1153) | Our Implementation | 73.88 | 178 |
| | [33] | 50.50 | 121 |
| | [32] | 47.00 | 113 |
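The frame rates in the table are consistent with a simple throughput estimate: the clock frequency divided by the number of pixels in one PAL frame, rounded down. The sketch below works under two assumptions that are not stated in the table itself: a PAL frame of 720 × 576 pixels, and one pixel processed per clock cycle with no blanking overhead. The function name is hypothetical.

```python
PAL_WIDTH, PAL_HEIGHT = 720, 576  # assumed PAL frame size in pixels


def frames_per_second(clock_mhz: float) -> int:
    """Whole frames per second at one pixel per clock cycle (assumption)."""
    pixels_per_frame = PAL_WIDTH * PAL_HEIGHT  # 414720 pixels
    return int(clock_mhz * 1_000_000 // pixels_per_frame)


rate_ours = frames_per_second(73.88)   # our implementation
rate_ref33 = frames_per_second(50.50)  # implementation from [33]
rate_ref32 = frames_per_second(47.00)  # implementation from [32]
```

With these assumptions the estimate gives 178, 121, and 113 frames per second, matching the three distinct table entries.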

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Singh, S.; Shekhar, C.; Vohra, A. FPGA-Based Real-Time Motion Detection for Automated Video Surveillance Systems. Electronics 2016, 5, 10. https://doi.org/10.3390/electronics5010010