Ability Tests Measure Personality, Personality Tests Measure Ability: Disentangling Construct and Method in Evaluating the Relationship between Personality and Ability

Abstract

1. Introduction

2. Construct-Method Distinction

3. Sources of Test-Score Variance

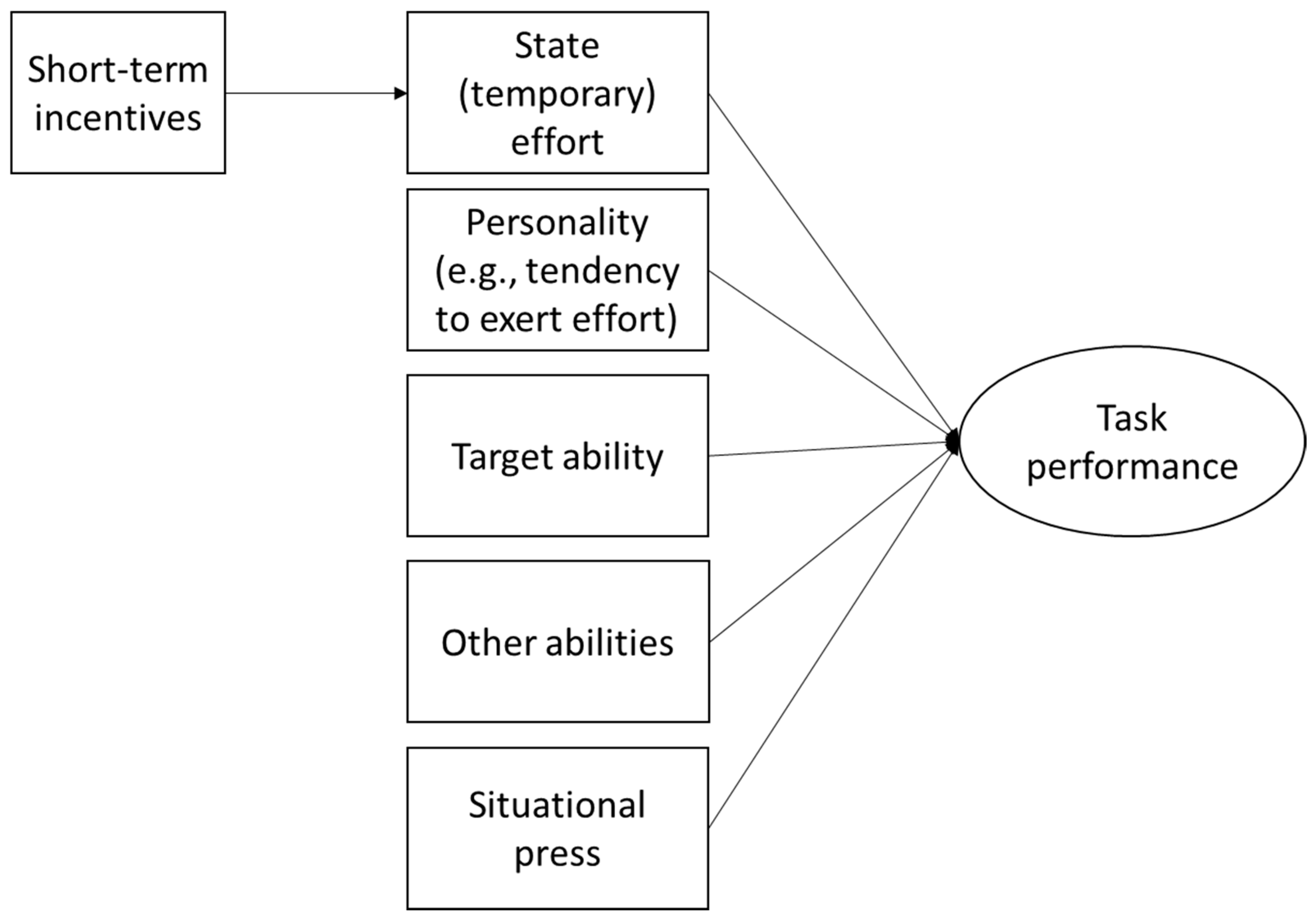

4. Response Model for Cognitive and Personality Tests

5. Maximal–Typical vs. High-Stakes–Low-Stakes Distinction

6. Cognitive Test Performance under Low-Stakes Conditions

7. Personality Measured through Performance Tests

7.1. Objective Personality Tests

7.2. Grit Game

7.3. Coding Speed Test as a Measure of Personality

7.4. Economic Preference Games

7.5. Confidence

8. Personality Measured through Test and Survey Behavior

8.1. Survey Effort

8.2. Item Position Effects

8.3. Response Time

9. Personality Measured through Real-World Behavior

9.1. Study Time

9.2. Registration Latency

9.3. Word Use, Office Appearance, and Facebook Likes as Personality Measures

10. Ability Effects on Personality Measures

10.1. Age Effects

10.2. Cognitive Ability Effects

10.3. Faking on Personality Tests

10.4. Anchoring Vignettes as a Window into Psychological Understanding

11. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Ackerman, P.L. A theory of adult intellectual development: Process, personality, interests, and knowledge. Intelligence 1996, 22, 229–259. [Google Scholar] [CrossRef]

- Ackerman, P.L.; Heggestad, E.D. Intelligence, personality, and interests: Evidence for overlapping traits. Psychol. Bull. 1997, 121, 219–245. [Google Scholar] [CrossRef] [PubMed]

- Ziegler, M.; Danay, E.; Heene, M.; Asendorpf, J.B.; Buehner, M. Openness, fluid intelligence, and crystallized intelligence. J. Res. Personal. 2012, 46, 173–183. [Google Scholar] [CrossRef]

- Ackerman, P.L. The search for personality–intelligence relations: Methodological and conceptual Issues. J. Intell. 2018, 6, 2. [Google Scholar] [CrossRef]

- Rammstedt, B.; Lechner, C.M.; Danner, D. Relationships between personality and cognitive ability: A facet-level analysis. J. Intell. 2018, 6, 28. [Google Scholar] [CrossRef]

- Stankov, L. Low correlations between intelligence and big five personality traits: Need to broaden the domain of personality. J. Intell. 2018, 6, 26. [Google Scholar] [CrossRef]

- Cronbach, L.J. Essentials of Psychological Testing, 3rd ed.; Harper & Row: New York, NY, USA, 1970. [Google Scholar]

- Thorndike, R.L. Personnel Selection: Test and Measurement Techniques; John Wiley & Sons, Inc.: New York, NY, USA, 1949. [Google Scholar]

- Göllner, R.; Roberts, B.W.; Damian, R.I.; Lüdtke, O.; Jonkmann, K.; Trautwein, U. Whose “storm and stress” is it? Parent and child reports of personality development in the transition to early adolescence. J. Personal. 2016, 85, 376–387. [Google Scholar] [CrossRef] [PubMed]

- Roberts, B.W.; Walton, K.E.; Viechtbauer, W. Patterns of mean-level change in personality traits across the life course: A meta-analysis of longitudinal studies. Psychol. Bull. 2006, 132, 1–25. [Google Scholar] [CrossRef] [PubMed]

- Roberts, B.W.; Luo, J.; Briley, D.A.; Chow, P.I.; Su, R.; Hill, P.L. A systematic review of personality trait change through intervention. Psychol. Bull. 2017, 143, 117–141. [Google Scholar] [CrossRef] [PubMed]

- Heckman, J.J.; Kautz, T. Fostering and measuring skills interventions that improve character and cognition. In The Myth of Achievement Tests: The GED and the Role of Character in American Life; Heckman, J.J., Humphries, J.E., Kautz, T., Eds.; The University of Chicago Press: Chicago, IL, USA, 2014; pp. 341–430. [Google Scholar]

- Roberts, B.W. Back to the future: Personality and assessment and personality development. J. Res. Personal. 2009, 43, 137–145. [Google Scholar] [CrossRef] [PubMed]

- Neisser, U.; Boodoo, G.; Bouchard, T.J.; Boykin, A.W.; Brody, N.; Ceci, S.; Halpern, D.; Loehlin, J.C.; Perloff, R.; Sternberg, R.J.; et al. Intelligence: Knowns and unknowns. Am. Psychol. 1996, 51, 77–101. [Google Scholar] [CrossRef]

- Likert, R. A technique for measurement of attitudes. Arch. Psychol. 1932, 140, 5–55. [Google Scholar]

- Rammstedt, B.; Danner, D.; Martin, S. The association between personality and cognitive ability: Going beyond simple effects. J. Res. Personal. 2016, 62, 39–44. [Google Scholar] [CrossRef]

- Boring, E. Intelligence as the Tests Test it; New Republic: Washington, DC, USA, 1923; pp. 35–37. [Google Scholar]

- Stanley, J.C. Reliability. In Educational Measurement, 2nd ed.; Thorndike, R.L., Ed.; American Council on Education: Washington, DC, USA, 1971; pp. 356–442. [Google Scholar]

- Chaplin, W.F.; John, O.P.; Goldberg, L.R. Conceptions of states and traits: Dimensional attributes with ideas as prototypes. J. Personal. Soc. Psychol. 1988, 54, 541–557. [Google Scholar] [CrossRef]

- Alan, S.; Boneva, T.; Ertac, S. Ever Failed, Try Again, Succeed Better: Results from a Randomized Educational Intervention on Grit. HCEO Working Paper. 2016. Available online: https://ssrn.com/abstract=2761390 (accessed on 13 April 2016). [CrossRef]

- Segal, C. Working when no one is watching: Motivation, test scores, and economic success. Manag. Sci. 2012, 58, 1438–1457. [Google Scholar] [CrossRef]

- Beus, J.M.; Whitman, D.S. The relationship between typical and maximum performance: A meta-analtyic examination. J. Hum. Perform. 2012, 25, 355–376. [Google Scholar] [CrossRef]

- Sackett, P.R.; Zedeck, S.; Fogli, L. Relations between measures of typical and maximum job performance. J. Appl. Psychol. 1988, 73, 482–486. [Google Scholar] [CrossRef]

- Hembree, R. Correlates, causes, effects, and treatment of test anxiety. Rev. Educ. Res. 1988, 58, 47–77. [Google Scholar] [CrossRef]

- Van der Linden, W.J. Setting time limits on tests. Appl. Psychol. Meas. 2011, 35, 183–199. [Google Scholar] [CrossRef]

- Liu, O.L.; Bridgeman, B.; Adler, R.M. Measuring learning outcomes in higher education: Motivation matters. Educ. Res. 2012, 41, 352–362. [Google Scholar] [CrossRef]

- Finn, B. Measuring motivation in low-stakes assessment. ETS Res. Rep. Ser. 2015, 2015, 1–17. [Google Scholar] [CrossRef]

- Hembree, R. The Nature, Effects, and Relief of Mathematics Anxiety. J. Res. Math. Educ. 1990, 21, 33–46. [Google Scholar] [CrossRef]

- Steele, C.M.; Aronson, J. Stereotype threat and the intellectual test performance of African-Americans. J. Personal. Soc. Psychol. 1995, 69, 797–811. [Google Scholar] [CrossRef]

- Beilock, S.L. Choke: What the Secrets of the Brain Reveal about Getting It Right When You Have to; Simon & Schuster: New York, NY, USA; Free Press: New York, NY, USA, 2010. [Google Scholar]

- Debeer, D.; Janssen, R. Modeling Item-Position Effects within an IRT Framework. J. Educ. Meas. 2013, 50, 164–185. [Google Scholar] [CrossRef]

- Rabbit, P. Error and error correction in choice-response tasks. J. Exp. Psychol. 1966, 71, 264–272. [Google Scholar] [CrossRef]

- Mueller, C.M.; Dweck, C.S. Intelligence praise can undermine motivation and performance. J. Personal. Soc. Psychol. 1998, 75, 33–52. [Google Scholar] [CrossRef]

- Kane, M.J.; McVay, J.C. What Mind Wandering Reveals About Executive-Control Abilities and Failures. Curr. Dir. Psychol. Sci. 2012, 21, 348–354. [Google Scholar] [CrossRef]

- Terhune, D.B.; Croucher, M.; Marcusson-Clavertz, D.; Macdonald, J.S.P. Time contracts and temporal precision declines when the mind wanders. J. Exp. Psychol. 2017, 43, 1864–1871. [Google Scholar] [CrossRef] [PubMed]

- Powers, D.E.; Rock, D.A. Effects of coaching on SAT I: Reasoning Test scores. J. Educ. Meas. 1999, 36, 93–118. [Google Scholar] [CrossRef]

- Irvine, S.H. Computerised Test Generation for Cross-National Military Recruitment: A Handbook; IOS Press: Amsterdam, The Netherlands, 2014. [Google Scholar]

- Eich, E. Searching for mood dependent memory. Psychol. Sci. 1995, 6, 67–75. [Google Scholar] [CrossRef]

- Borghans, L.; Duckworth, A.L.; Heckman, J.J.; Weel, B.T. The economics and psychology of personality traits. J. Hum. Resour. 2008, 43, 972–1059. [Google Scholar]

- Ackerman, P.L.; Chamorro-Premuzic, T.; Furnham, A. Trait complexes and academic achievement: Old and new ways of examining personality in educational contexts. Br. J. Educ. Psychol. 2011, 81, 27–40. [Google Scholar] [CrossRef] [PubMed]

- Murray, H.A. Explorations in Personality; Oxford University Press: Oxford, UK, 1938. [Google Scholar]

- Mischel, W. On the future of personality measurement. Am. Psychol. 1977, 32, 246–254. [Google Scholar] [CrossRef]

- Sherman, R.A.; Nave, C.S.; Funder, D.C. Properties of persons and situations related to overall and distinctive personality-behavior congruence. J. Res. Personal. 2012, 46, 87–101. [Google Scholar] [CrossRef]

- Sherman, R.A.; Nave, C.S.; Funder, D.C. Situational construal is related to personality and gender. J. Res. Personal. 2013, 47, 1–14. [Google Scholar] [CrossRef]

- Ng, K.-Y.; Ang, S.; Chan, K.-Y. Personality and leadership effectiveness: A moderated mediation model of leadership self-efficacy, job demands, and job autonomy. J. Appl. Psychol. 2008, 93, 733–743. [Google Scholar] [CrossRef] [PubMed]

- Kelly, R.T.; Rawson, H.E.; Terry, R.L. Interaction effects of achievement need and situational press on performance. J. Soc. Psychol. 1973, 89, 141–145. [Google Scholar] [CrossRef] [PubMed]

- Sherman, R.A.; Nave, C.S.; Funder, D.C. Situational similarity and personality predict behavioral consistency. J. Personal. Soc. Psychol. 2010, 99, 330–343. [Google Scholar] [CrossRef] [PubMed]

- Brennan, R.L. Generalizability Theory; Springer: New York, NY, USA, 2001. [Google Scholar]

- Cronbach, L.J.; Gleser, G.C.; Nanda, H.; Rajaratnam, N. The Dependability of Behavioral Measurements: Theory of Generalizability for Scores and Profiles; Wiley: New York, NY, USA, 1972. [Google Scholar]

- Steyer, R.; Mayer, A.; Geiser, C.; Cole, D.A. A theory of states and traits—Revised. Ann. Rev. Clin. Psychol. 2015, 11, 71–98. [Google Scholar] [CrossRef] [PubMed]

- Steyer, R.; Schmitt, M.; Eid, M. Latent state-trait theory and research in personality and individual differences. Eur. J. Personal. 1999, 13, 389–408. [Google Scholar] [CrossRef]

- Vansteenkiste, M.; Sierens, E.; Soenens, B.; Luyckx, K.; Lens, W. Motivational profiles from a self-determination perspective: The quality of motivation matters. J. Educ. Psychol. 2009, 101, 671–688. [Google Scholar] [CrossRef]

- Curran, P.J.; Bauer, D.J. Building path diagrams for multilevel models. Psychol. Methods 2007, 12, 283–297. [Google Scholar] [CrossRef] [PubMed]

- McArdle, J.J.; Boker, S.M. RAMpath: Automatic Path Diagram Software; Erlbaum: Hillsdale, NJ, USA, 1991. [Google Scholar]

- Stagner, R. Psychology of Personality, 3rd ed.; McGraw-Hill Book Company, Inc.: New York, NY, USA, 1961. [Google Scholar]

- Matsumoto, D. (Ed.) The Cambridge Dictionary of Psychology; Cambridge University Press: Cambridge, UK, 2009. [Google Scholar]

- VandenBos, G.R. (Ed.) American Psychological Association Dictionary of Psychology, 2nd ed.; American Psychological Association: Washington, DC, USA, 2015. [Google Scholar]

- Fiske, D.W.; Butler, J.M. The experimental conditions for measuring individual differences. Educ. Psychol. Meas. 1963, 23, 249–266. [Google Scholar] [CrossRef]

- Klesges, R.C.; Mcginley, H.; Jurkovic, G.J.; Morgan, T.J. The predictive validity of typical and maximal personality measures in self-reports and peer reports. Bull. Psychon. Soc. 1979, 13, 401–404. [Google Scholar] [CrossRef] [Green Version]

- Paulhus, D.L.; Martin, C.L. The structure of personality capabilities. J. Personal. Soc. Psychol. 1987, 52, 354–365. [Google Scholar] [CrossRef]

- Turner, R.G. Consistency, self-consciousness, and the predictive validity of typical and maximal personality measures. J. Res. Personal. 1978, 12, 117–132. [Google Scholar] [CrossRef]

- Wallace, J. An abilities conception of personality: Some implications for personality measurement. Am. Psychol. 1966, 21, 132–138. [Google Scholar] [CrossRef]

- Willerman, L.; Turner, R.G.; Peterson, M. A comparison of the predictive validity of typical and maximal personality measures. J. Res. Personal. 1976, 10, 482–492. [Google Scholar] [CrossRef]

- Craik, K.H. Accentuated, revealed, and quotidian personalities. Psychol. Inq. 1993, 4, 278–280. [Google Scholar] [CrossRef]

- Cronbach, L.J. Essentials of Psychological Testing, 5th ed.; Harper & Row: New York, NY, USA, 1990. [Google Scholar]

- Carroll, J.B. Human Cognitive Abilities: A Survey of Factor-Analytic Studies; Cambridge University Press: Cambridge, UK, 1993. [Google Scholar]

- Humphreys, L.G. Intelligence from the standpoint of a (pragmatic) behaviorist. Psychol. Inq. 1994, 5, 179–192. [Google Scholar] [CrossRef]

- Perkins, D.; Tishman, S.; Ritchhart, R.; Donis, K.; Andrade, A. Intelligence in the wild: A dispositional view of intellectual traits. Educ. Psychol. Rev. 2000, 12, 269–293. [Google Scholar] [CrossRef]

- Goff, M.; Ackerman, P.L. Personality-intelligence relations: Assessment of typical intellectual engagement. J. Educ. Psychol. 1992, 84, 537–552. [Google Scholar] [CrossRef]

- Dennis, M.J.; Sternberg, R.J.; Beatty, P. The construction of “user-friendly” tests of cognitive functioning: A synthesis of maximal-and typical-performance measurement philosophies. Intelligence 2000, 28, 193–211. [Google Scholar] [CrossRef]

- Ackerman, P.L. Adult intelligence: The construct and the criterion problem. Perspect. Psychol. Sci. 2017, 12, 987–998. [Google Scholar] [CrossRef] [PubMed]

- Cattell, R.B. Personality traits associated with abilities. II: With verbal and mathematical abilities. J. Educ. Psychol. 1945, 36, 475–486. [Google Scholar] [CrossRef] [PubMed]

- Goodenough, F.L. Mental Testing; Holt, Rinehart, & Winston: New York, NY, USA, 1949. [Google Scholar]

- Wernimont, P.F.; Campbell, J.P. Signs, samples, and criteria. J. Appl. Psychol. 1968, 52, 372–376. [Google Scholar] [CrossRef] [PubMed]

- Aftanas, M.S. Theories, models, and standard systems of measurement. Appl. Psychol. Meas. 1988, 12, 325–338. [Google Scholar] [CrossRef]

- Hunt, E. On the nature of intelligence. Science 1983, 219, 141–146. [Google Scholar] [CrossRef] [PubMed]

- Hunt, E. Science, technology, and intelligence. In The Influence of Cognitive Psychology on Testing; Ronning, R.R., Glover, J.A., Conoley, J.C., Witt, J.C., Eds.; Erlbaum: Hillsdale, NJ, USA, 1987; pp. 11–40. [Google Scholar]

- Reeve, C.L.; Scherbaum, C.; Goldstein, H. Manifestations of intelligence: Expanding the measurement space to reconsider specific cognitive abilities. Hum. Resour. Manag. Rev. 2015, 25, 28–37. [Google Scholar] [CrossRef]

- Cramer, A.O.; Sluis, S.; Noordhof, A.; Wichers, M.; Geschwind, N.; Aggen, S.H.; Kendler, S.H.; Borsboom, D. Dimensions of normal personality as networks in search of equilibrium: You can’t like parties if you don’t like people. Eur. J. Personal. 2012, 26, 414–431. [Google Scholar] [CrossRef]

- Mõttus, R.; Kandler, C.; Bleidorn, W.; Riemann, R.; McCrae, R.R. Personality traits below facets: The consensual validity, longitudinal stability, heritability, and utility of personality nuances. J. Personal. Soc. Psychol. 2017, 112, 474–490. [Google Scholar] [CrossRef] [PubMed]

- Connelly, B.S.; Ones, D.S. Another perspective on personality: Meta-analytic integration of observers’ accuracy and predictive validity. Psychol. Bull. 2010, 136, 1092–1122. [Google Scholar] [CrossRef] [PubMed]

- Freund, P.A.; Kasten, N. How smart do you think you are? A meta-analysis on the validity of self-estimates of cognitive ability. Psychol. Bull. 2012, 138, 296–321. [Google Scholar] [CrossRef] [PubMed]

- Furnham, A.; Monsen, J.; Ahmetoglu, G. Typical intellectual engagement, Big Five personality traits, approaches to learning and cognitive ability predictors of academic performance. Br. J. Educ. Psychol. 2009, 79, 769–782. [Google Scholar] [CrossRef] [PubMed]

- Ellis, A. The validity of personality questionnaires. Psychol. Bull. 1946, 43, 385–440. [Google Scholar] [CrossRef] [PubMed]

- Terman, L.M. The Measurement of Intelligence: An Explanation of and a Complete Guide for the Use of the Stanford Revision and Extension of the Binet-Simon Intelligence Scale; Houghton Mifflin: New York, NY, USA, 1916. [Google Scholar]

- Thorndike, E.L. An Introduction to the Theory of Mental and Social Measurements; Teachers College, Columbia University: New York, NY, USA, 1904. [Google Scholar]

- Revelle, W. Individual differences in personality and motivation: ‘Non-cognitive’ determinants of cognitive performance. In Attention: Selection, Awareness, and Control; Baddeley, A., Weiskrantz, L., Eds.; Oxford University Press: Oxford, UK, 1993; pp. 346–373. [Google Scholar]

- Benton, A.L. Influence of incentives upon intelligence test scores of school children. Pedagog. Semin. J. Genet. Psychol. 1936, 49, 494–497. [Google Scholar] [CrossRef]

- Ferguson, H.H. Incentives and an intelligence tests. Aust. J. Psychol. Philos. 1937, 15, 39–53. [Google Scholar] [CrossRef]

- Klugman, S.F. The effect of money incentive versus praise upon the reliability and obtained scores of the Revised Stanford-Binet Test. J. Gen. Psychol. 1944, 30, 255–269. [Google Scholar] [CrossRef]

- Knight, F.B.; Remmers, H.H. Fluctuations in mental production when motivation is the main variable. J. Appl. Psychol. 1923, 7, 209–223. [Google Scholar] [CrossRef]

- Maller, J.B.; Zubin, J. The effect of motivation upon intelligence test scores. Pedagog. Semin. J. Genet. Psychol. 1932, 41, 135–151. [Google Scholar] [CrossRef]

- Wise, S.L.; Smith, L.F. The validity of assessment when students don’t give good effort. In Handbook of Human and Social Conditions in Assessmen; Brown, G.T.L., Harris, L.R., Eds.; Routledge: New York, NY, USA, 2016; pp. 204–220. [Google Scholar]

- Braun, H.; Kirsch, I.; Yamamoto, K. An experimental study of the effects of monetary incentives on performance on the 12th-grade NAEP reading assessment. Teach. Coll. Rec. 2011, 113, 2309–2344. [Google Scholar]

- Pedulla, J.; Abrams, L.; Madaus, G.; Russell, M.; Ramos, M.; Miao, J. Perceived Effects of State-Mandated Testing Programs on Teaching and Learning: Findings from a National Survey of Teachers; National Board on Educational Testing and Public Policy: Boston, MA, USA, 2003. [Google Scholar]

- Zilberberg, A.; Anderson, R.D.; Finney, S.J.; Marsh, K.R. American college students’ attitudes toward institutional accountability testing: Developing measures. Educ. Assess. 2013, 18, 208–234. [Google Scholar] [CrossRef]

- Sundre, D.L.; Moore, D.L. The student opinion scale: A measure of examinee motivation. Assessment Update 2002, 14, 8–9. [Google Scholar]

- Duckworth, A.L.; Quinn, P.D.; Lynam, D.R.; Loeber, R.; Stouthamer-Loeber, M. Role of test motivation in intelligence testing. Proc. Natl. Acad. Sci. USA 2011, 108, 7716–7720. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Wise, S.L.; Kong, X. Response time effort: A new measure of examinee motivation in computer-based tests. Appl. Meas. Educ. 2005, 18, 163–183. [Google Scholar] [CrossRef]

- Meijer, R.R. Diagnosing item score patterns on a test using item response theory-based person-fit statistics. Psychol. Methods 2003, 8, 72–87. [Google Scholar] [CrossRef] [PubMed]

- Wise, S.L.; DeMars, C.E. Low examinee effort in low-stakes assessment: Problems and potential solutions. Educ. Assess. 2005, 10, 1–17. [Google Scholar] [CrossRef]

- Revelle, W. Personality, motivation, and cognitive performance. In Abilities, Motivation and Methodology; Kanfer, R., Ackerman, P.L., Cudeck, R., Eds.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1989; pp. 297–343. [Google Scholar]

- Richardson, M.; Abraham, C. Conscientiousness and achievement motivation predict performance. Eur. J. Personal. 2009, 23, 589–605. [Google Scholar] [CrossRef]

- Costa, P.T., Jr.; McCrae, R.R.; Dye, D.A. Facet scales for agreeableness and conscientiousness: A revision of the NEO Personality Inventory. Personal. Individ. Differ. 1991, 12, 887–898. [Google Scholar] [CrossRef]

- Dudley, N.M.; Orvis, K.A.; Lebiecki, J.E.; Cortina, J.M. A meta-analytic investigation of conscientiousness in the prediction of job performance: Examining the intercorrelations and the incremental validity of narrow traits. J. Appl. Psychol. 2006, 91, 40–57. [Google Scholar] [CrossRef] [PubMed]

- Hough, L.M.; Johnson, J.W. Use and importance of personality variables in work settings. In Handbook of Psychology, Volume 12: Industrial and Organizational Psychology, 2nd ed.; Schmitt, N.W., Highhouse, S., Weiner, I.B., Eds.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2013; pp. 211–243. [Google Scholar]

- Messick, S. Validity. In Educational Measurement, 3rd ed.; Linn, R.L., Ed.; Macmillan: Old Tappan, NJ, USA, 1989; pp. 13–103. [Google Scholar]

- Richardson, M.; Abraham, C.; Bond, R. Psychological correlates of university students’ academic performance: A systematic review and meta-analysis. Psychol. Bull. 2012, 138, 353–387. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Poropat, A.E. A meta-analysis of the five-factor model of personality and academic performance. Psychol. Bull. 2009, 135, 322–338. [Google Scholar] [CrossRef] [PubMed]

- Noftle, E.E.; Robins, R.W. Personality predictors of academic outcomes: Big five correlates of GPA and SAT scores. J. Personal. Soc. Psychol. 2007, 93, 116–130. [Google Scholar] [CrossRef] [PubMed]

- Judge, T.A.; Higgins, C.A.; Thoresen, C.J.; Barrick, M.R. The big five personality traits, general mental ability, and career success across the life span. Pers. Psychol. 1999, 52, 621–652. [Google Scholar] [CrossRef]

- Batey, M.; Chamorro-Premuzic, T.; Furnham, A. Individual differences in ideational behavior: Can the big five and psychometric intelligence predict creativity scores? Creat. Res. J. 2010, 22, 90–97. [Google Scholar] [CrossRef]

- Lounsbury, J.W.; Sundstrom, E.; Loveland, J.M.; Gibson, L.W. Intelligence,“Big Five” personality traits, and work drive as predictors of course grade. Personal. Individ. Differ. 2003, 35, 1231–1239. [Google Scholar] [CrossRef]

- Robbins, S.B.; Lauver, K.; Le, H.; Davis, D.; Langley, R.; Carlstrom, A. Do psychosocial and study skill factors predict college outcomes? A meta-analysis. Psychol. Bull. 2004, 130, 261–288. [Google Scholar] [CrossRef] [PubMed]

- Murray, A.L.; Johnson, W.; McGue, M.; Iacono, W.G. How are conscientiousness and cognitive ability related to one another? A re-examination of the intelligence compensation hypothesis. Personal. Individ. Differ. 2014, 70, 17–22. [Google Scholar] [CrossRef]

- Cattell, R.B.; Warburton, F.W. Objective Personality and Motivation Tests; University of Illinois Press: Urbana, IL, USA, 1967. [Google Scholar]

- Hartshorne, H.; May, M.A. Studies in the Nature of Character: Studies in Deceit; MacMillan: New York, NY, USA, 1928. [Google Scholar]

- Ortner, T.M.; Schmitt, M. Advances and continuing challenges in objective personality testing. Eur. J. Psychol. Assess. 2014, 30, 163–168. [Google Scholar] [CrossRef]

- Ortner, T.; Proyer, R. Objective personality tests. In Behavior-Based Assessment in Psychology; Ortner, T.M., van de Vijver, F.J.R., Eds.; Hogrefe & Huber: Seattle, DC, USA, 2015; pp. 133–149. ISBN 978-0-88937-437-9. [Google Scholar]

- James, L. Measurement of personality via conditional reasoning. Organ. Res. Methods 1998, 1, 131–163. [Google Scholar] [CrossRef]

- Lejuez, C.W.; Read, J.P.; Kahler, C.W.; Richards, J.B.; Ramsey, S.E.; Stuart, G.L.; Brown, R.A. Evaluation of a behavioral measure of risk taking: The Balloon Analogue Risk Task (BART). J. Exp. Psychol. 2002, 8, 75–84. [Google Scholar] [CrossRef]

- Gugerty, L. Situation awareness during driving: Explicit and implicit knowledge in dynamic spatial memory. J. Exp. Psychol. 1997, 3, 42–66. [Google Scholar] [CrossRef]

- Hurwitz, J.B. Assessing a perceptual model of risky real-time decision making. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 1996, 40, 223–227. [Google Scholar] [CrossRef]

- Jackson, J.J.; Wood, D.; Bogg, T.; Walton, K.E.; Harms, P.D.; Roberts, B.W. What do conscientious people do? Development and validation of the Behavioral Indicators of Conscientiousness (BIC). J. Res. Personal. 2010, 44, 501–511. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Almlund, M.; Duckworth, A.L.; Heckman, J.J.; Kautz, T.D. Personality psychology and economics. (No. w16822). In Handbook of the Economics of Education; Hanushek, E.A., Machin, S., Woessmann, L., Eds.; Elsevier: Amsterdam, The Netherlands, 2011; pp. 1–181. [Google Scholar]

- Goldberg, L.R. The structure of phenotypic personality traits. Am. Psychol. 1993, 48, 26–34. [Google Scholar] [CrossRef] [PubMed]

- Falk, A.; Becker, A.; Dohmen, T.; Enke, B.; Huffman, D.; Sunde, U. The Nature and Predictive Power of Preferences: Global Evidence; Institute for the Study of Labor (IZA), Discussion Paper (DP) No. 9504; IZA: Bonn, Germany, 2015. [Google Scholar]

- Falk, A.; Becker, A.; Dohmen, T.; Huffman, D.; Sunde, U. The Preference Survey Module: A Validated Instrument for Measuring Risk, Time, and Social Preferences; Institute for the Study of Labor (IZA), Discussion Paper (DP) No. 9674; IZA: Bonn, Germany, 2016. [Google Scholar]

- Stankov, L.; Crawford, J.D. Confidence judgments in studies of individual differences. Personal. Individ. Differ. 1996, 21, 971–986. [Google Scholar] [CrossRef]

- Stankov, L.; Morony, S.; Lee, Y.-P. Confidence: The best non-cognitive predictor of academic achievement? Educ. Psychol. 2013, 34, 9–28. [Google Scholar] [CrossRef]

- Stankov, L.; Kleitman, S.; Jackson, S.A. Measures of the trait of confidence. In Measures of Personality and Social Psychological Constructs; Boyle, G.J., Saklofske, D.H., Matthews, G., Eds.; Academic Press: London, UK, 2014; pp. 158–189. [Google Scholar]

- Stankov, L.; Lee, J.; Luo, W.; Hogan, D.J. Confidence: A better predictor of academic achievement than self-efficacy, self-concept and anxiety? Learn. Individ. Differ. 2012, 22, 747–758. [Google Scholar] [CrossRef]

- Jackson, S.A.; Kleitman, S.; Howie, P.; Stankov, L. Cognitive abilities, monitoring confidence, and control thresholds explain individual differences in heuristics and biases. Front. Psychol. 2016, 7, 1559. [Google Scholar] [CrossRef] [PubMed]

- Lee, J. Universals and specifics of math self-concept, math-self-efficacy, and math anxiety across 41 PISA 2003 participating countries. Learn. Individ. Differ. 2009, 19, 355–365. [Google Scholar] [CrossRef]

- Kleitman, S.; Stankov, L. Self-confidence and metacognitive processes. Learn. Individ. Differ. 2007, 17, 161–173. [Google Scholar] [CrossRef] [Green Version]

- Huang, J.L.; Curran, P.G.; Keeney, J.; Poposki, E.M.; DeShon, R.P. Detecting and deterring insufficient effort responding to surveys. J. Bus. Psychol. 2012, 27, 99–114. [Google Scholar] [CrossRef]

- Malhotra, N. Completion time and response order effects in web surveys. Public Opin. Q. 2008, 72, 914–934. [Google Scholar] [CrossRef]

- Meade, A.W.; Bartholomew, C.S. Identifying careless responses survey data. Psychol. Methods 2012, 17, 437–455. [Google Scholar] [CrossRef] [PubMed]

- Hitt, C.; Trivitt, J.; Cheng, A. When you say nothing at all: The predictive power of student effort on surveys. Econ. Educ. Rev. 2016, 52, 105–119. [Google Scholar] [CrossRef]

- Zamarro, G.; Cheng, A.; Shakeel, M.D.; Hitt, C. Comparing and validating measures of non-cognitive traits: Performance task measures and self-reports from a nationally representative internet panel. J. Behav. Exp. Econ. 2018, 72, 51–60. [Google Scholar] [CrossRef]

- Cheng, A.; Zamarro, G.; Orriens, B. Personality as a predictor of unit nonresponse in an internet panel. Sociol. Methods Res. 2018. [Google Scholar] [CrossRef]

- Leary, L.F.; Dorans, N.J. Implications for altering the context in which test items appear: A historical perspective on an immediate concern. Rev. Educ. Res. 1985, 55, 387–413. [Google Scholar] [CrossRef]

- Albano, A.D. Multilevel modeling of item position effects. J. Educ. Meas. 2014, 50, 408–426. [Google Scholar] [CrossRef]

- Debeer, D.; Buchholz, J.; Hartig, J.; Janssen, R. Student, school, and country differences in sustained test-taking effort in the 2009 PISA Reading assessment. J. Educ. Behav. Stat. 2014, 39, 502–523. [Google Scholar] [CrossRef]

- Borgonovi, F.; Biecek, P. An international comparison of students’ ability to endure fatigue and maintain motivation during a low-stakes test. Learn. Individ. Differ. 2016, 49, 128–137. [Google Scholar] [CrossRef]

- Weirich, S.; Hecht, M.; Penk, C.; Roppelt, A.; Bohme, K. Item position effects are moderated by changes in test-taking effort. Appl. Psychol. Meas. 2017, 41, 115–129. [Google Scholar] [CrossRef] [PubMed]

- Kong, X.J.; Wise, S.L.; Bhola, D.S. Setting the response time threshold parameter to differentiate solution behavior from rapid-guessing behavior. Educ. Psychol. Meas. 2007, 67, 606–619. [Google Scholar] [CrossRef]

- Wise, S.L.; Pastor, D.A.; Kong, X.J. Understanding correlates of rapid-guessing behavior in low stakes testing: Implications for test development and measurement practice. Appl. Meas. Educ. 2009, 22, 185–205. [Google Scholar] [CrossRef]

- Lee, Y.-H.; Jia, Y. Using response time to investigate students’ test-taking behaviors in a NAEP computer-based study. Large-Scale Assess. Educ. 2014, 2, 1–24. [Google Scholar] [CrossRef]

- Wise, S.L.; Gao, L. A general approach to measuring test-taking effort on computer-based tests. Appl. Meas. Educ. 2014, 30, 343–354. [Google Scholar] [CrossRef]

- Cooper, H.; Robinson, J.C.; Patall, E.A. Does homework improve academic achievement? A synthesis of research, 1987–2003. Rev. Educ. Res. 2006, 76, 1–62. [Google Scholar] [CrossRef]

- McMullen, S. The Impact of Homework Time on Academic Achievement. Available online: https://www.unc.edu/courses/2007fall/econ/994/040/2007fall/mcmullen.pdf (accessed on 9 July 2018).

- Grodner, A.; Rupp, N.G. The role of homework in student learning outcomes: Evidence from a field experiment. J. Econ. Educ. 2013, 44, 93–109. [Google Scholar] [CrossRef]

- Kalenkoski, C.M.; Pabilonia, S.W. Does High School Homework Increase Academic Achievement? Available online: https://www.bls.gov/osmr/pdf/ec150050.pdf (accessed on 9 July 2018).

- Novarese, M.; Di Giovinazzo, V. Promptness and Academic Performance. MPRA Paper No. 49746, Posted 11. 2013. Available online: http://mpra.ub.uni-muenchen.de/49746/ (accessed on 9 July 2018).

- Fast, L.A.; Funder, D.C. Personality as manifest in word use: Correlations with self-report, acquaintance report, and behavior. J. Personal. Soc. Psychol. 2008, 94, 334–346. [Google Scholar] [CrossRef] [PubMed]

- Gosling, S.D.; Ko, S.J.; Mannarelli, T.; Morris, M.E. A room with a cue: Personality judgments based on offices and bedrooms. J. Personal. Soc. Psychol. 2002, 82, 379–398. [Google Scholar] [CrossRef]

- Kosinski, M.; Stillwell, D.; Graepel, T. Private traits and attributes are predictable from digital records of human behavior. Proc. Natl. Acad. Sci. USA 2013, 110, 5802–5805. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Youyou, W.; Kosinski, M.; Stillwell, D. Computer-based personality judgments are more accurate than those made by humans. Proc. Natl. Acad. Sci. USA 2015, 112, 1036–1040. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Srivastava, S.; John, O.P.; Gosling, S.D.; Potter, J. Development of personality in early and middle adulthood: Set like plaster or persistent change? J. Personal. Soc. Psychol. 2003, 84, 1041–1053. [Google Scholar] [CrossRef] [Green Version]

- Costa, P.T., Jr.; McCrae, R.R. Revised NEO Personality Inventory (NEO-PI-R) and NEO Five-Factor Inventory (NEO-FFI) Professional Manual; Psychological Assessment Center: Odesa, FL, USA, 1992. [Google Scholar]

- Soto, C.J.; John, O.P.; Gosling, S.D.; Potter, J. The developmental psychometrics of Big Five self-reports: Acquiescence, factor structure, coherence, and differentiation from ages 10 to 20. J. Personal. Soc. Psychol. 2008, 94, 718–737. [Google Scholar] [CrossRef] [PubMed]

- Tucker-Drob, E.M. Differentiation of cognitive abilities across the lifespan. Dev. Psychol. 2009, 45, 1097–1118.

- Mõttus, R.; Allik, J.; Pullmann, H. Does personality vary across ability levels? A study using self and other ratings. J. Res. Personal. 2007, 41, 155–170.

- Allik, J.; Laidra, K.; Realo, A.; Pullmann, H. Personality development from 12 to 18 years of age: Changes in mean levels and structure of traits. Eur. J. Personal. 2004, 18, 445–462.

- De Fruyt, F.; Mervielde, I.; Hoekstra, H.A.; Rolland, J.P. Assessing adolescents’ personality with the NEO PI-R. Assessment 2000, 7, 329–345.

- MacCann, C.; Pearce, N.; Jiang, Y. The general factor of personality is stronger and more strongly correlated with cognitive ability under instructed faking. J. Individ. Differ. 2017, 38, 46–54.

- Ellingson, J.E.; Sackett, P.R.; Connelly, B.S. Personality assessment across selection and development contexts: Insights into response distortion. J. Appl. Psychol. 2007, 92, 386–395.

- Birkeland, S.A.; Manson, T.M.; Kisamore, J.L.; Brannick, M.T.; Smith, M.A. A meta-analytic investigation of job applicant faking on personality measures. Int. J. Sel. Assess. 2006, 14, 317–335.

- Griffith, R.L.; Converse, P.D. The rules of evidence and the prevalence of applicant faking. In New Perspectives on Faking in Personality Assessment; Ziegler, M., MacCann, C., Roberts, R., Eds.; Oxford University Press: New York, NY, USA, 2011; pp. 34–52.

- Cao, M. Examining the Fakability of Forced-Choice Individual Differences Measures. Ph.D. Thesis, Psychology. University of Illinois, Urbana, IL, USA, 2016. Available online: http://hdl.handle.net/2142/93064 (accessed on 9 July 2018).

- Viswesvaran, C.; Ones, D.S. Meta-analyses of fakability estimates: Implications for personality measurement. Educ. Psychol. Meas. 1999, 59, 197–210.

- Tett, R.P.; Freund, K.A.; Christiansen, N.D.; Fox, K.E. Faking on self-report emotional intelligence and personality tests: Effects of faking opportunity, cognitive ability, and job type. Personal. Individ. Differ. 2012, 52, 195–201.

- King, G.; Wand, J. Comparing incomparable survey responses: Evaluating and selecting anchoring vignettes. Political Anal. 2007, 15, 46–66.

- Kyllonen, P.C.; Bertling, J.P. Innovative questionnaire assessment methods to increase cross-country comparability. In Handbook of International Large-Scale Assessment: Background, Technical Issues, and Methods of Data Analysis; Rutkowski, L., von Davier, M., Rutkowski, D., Eds.; CRC Press: Boca Raton, FL, USA, 2014; pp. 277–285.

- Bertling, J.; Kyllonen, P.C. Anchoring adjustments of student questionnaire indexes: Possible scenarios for the PISA 2012 international database. In Proceedings of the OECD PISA Technical Advisory Group (TAG) Meeting, Melbourne, Australia, 4–5 April 2013.

- Ziegler, M.; Colom, R.; Horstmann, K.T.; Wehner, C.; Bensch, D. Special Issue: “The Ability-Personality Integration”. Available online: https://www.mdpi.com/journal/jintelligence/special_issues/ability_personality_integration (accessed on 4 June 2018).

- Flake, J. Jeff Flake: We Need Immigrants with Skills. But Working Hard Is a Skill. Available online: https://curranberger.com/nyt-we-need-immigrants-with-skills-but-working-hard-is-a-skill/ (accessed on 7 June 2018).

- Heckman, J.J. Measuring and Assessing Skills: Real-Time Measurement of Cognition, Personality, and Behavior. Available online: https://cehd.uchicago.edu/?page_id=1198 (accessed on 7 June 2018).

- National Research Council. Education for Life and Work: Developing Transferable Knowledge and Skills in the 21st Century; The National Academies Press: Washington, DC, USA, 2012.

| 1 | |

| 2 | See also Roberts [13], who defines personality as “relatively enduring patterns of thoughts, feelings, and behaviors that reflect the tendency to respond in certain ways under certain circumstances”. |

| 3 | It is worth noting that such definitions often refer to “individual differences”. Individual-differences research should be thought of as one method for identifying the factors discussed here, but it is not the only method. Studies of training effects (on intelligence or personality) are non-individual-differences methods, as are artificial intelligence approaches (e.g., building expert systems). |

| 4 | Situational press refers to the reduction in trait variance due to situational constraints [40]. H. A. Murray [41] distinguished alpha (consensual, objective) and beta (subjective) press. Related concepts include situational strength [42], personality-situation congruence [43], situational construal [44], and moderated-mediation models [45], with situations as a moderator, and personality as a mediator. |

| 5 | An anonymous reviewer pointed out that motivation itself may be multidimensional [52]. |

| 6 | |

| 7 | Self-reports of intelligence are also somewhat accurate, as a meta-analytic estimate of the correlation between self-estimates of intelligence and intelligence test scores was r = 0.33 [82]. Similarly, self-reports of typical intellectual engagement and scores on intelligence measures have been found to correlate from r = 0.43 to r = 0.50 [83]. |

| 8 | Other studies report much smaller relations between conscientiousness and scores on cognitive tests given under non-incentivized conditions [83,113]. Further complicating matters, a meta-analysis showed a correlation of 0.14 between achievement motivation and ACT/SAT [114]. Moreover, using ACT and SAT as the “gold standard” for high-stakes cognitive ability tests likely introduces complications due to range restriction and selection bias [115]. |

| 9 | Another type of “objective measure” that could be included here consists of personality measures built from the behaviors that individuals high or low on a trait report engaging in. An example is the Behavioral Indicators of Conscientiousness (BIC) measure [124]. However, these items end up being almost indistinguishable from typical Likert-rating personality items. |

| 10 | The Big 5 is a prominent dimensional analysis of personality, positing that five orthogonal factors—Extraversion, Agreeableness, Conscientiousness, Neuroticism, and Openness—account for item responses on personality surveys and predict real-world outcomes [126]. |

| 11 | Using the standard correction for attenuation, $r_{x'y'} = r_{xy} / (r_{xx'}\,r_{yy'})^{1/2}$, and numbers supplied in Falk et al. [128], we estimate the partly disattenuated correlations to be 0.53, 0.65, 0.77, 0.53, 0.71, and 0.46 for risk taking, time preference, trust, altruism, positive reciprocity, and negative reciprocity, respectively, assuming perfect reliability of the composite. |

| 12 | Survey returners also were higher in cognitive ability and more likely to be female, native born, not employed, and African American, controlling for many background factors. |

| 13 | This is true regardless of whether acquiescence is controlled for or not; acquiescence (“Yea-saying”) can be controlled by within-person standardization, or ipsatizing. |

| 14 | The authors argued that although there were differences of the kind we note here, these did not support the conclusion that “personality differs substantially across ability groups” ([163], p. 155). However, our argument is simply that cognitive ability differences contributed to differences in personality responses, which is what the authors found. |

| 15 | Most of this literature is based on a rating-scale response format. Forced-choice methods appear to limit faking susceptibility [171]. |
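Notes 11 and 13 each rest on a short computation. The Python sketch below illustrates both under stated assumptions. `disattenuate` applies the classical correction for attenuation from note 11. Leaving the second reliability at 1.0 gives the "partly disattenuated" case, in which the composite is treated as perfectly reliable. `ipsatize` performs the within-person standardization mentioned in note 13. The function names and all input values are hypothetical illustrations. They are not numbers from Falk et al. [128] or from any other study cited here.

```python
import math
import statistics


def disattenuate(r_xy: float, r_xx: float, r_yy: float = 1.0) -> float:
    """Correction for attenuation: r_xy / sqrt(r_xx * r_yy).

    With r_yy left at 1.0, only x is corrected for unreliability, i.e. y
    (e.g., a composite) is treated as perfectly reliable.
    """
    if not (0.0 < r_xx <= 1.0 and 0.0 < r_yy <= 1.0):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_xy / math.sqrt(r_xx * r_yy)


def ipsatize(responses: list[list[float]]) -> list[list[float]]:
    """Within-person standardization: z-score each respondent's ratings
    against that respondent's own mean and (population) SD across items."""
    result = []
    for row in responses:
        mean = statistics.fmean(row)
        sd = statistics.pstdev(row)
        if sd == 0:
            # Identical ratings on every item carry no within-person
            # variance; return zeros instead of dividing by zero.
            result.append([0.0] * len(row))
        else:
            result.append([(x - mean) / sd for x in row])
    return result


# Hypothetical values: observed r = .40, reliability of x = .60.
print(round(disattenuate(0.40, 0.60), 2))  # → 0.52

# Two hypothetical respondents with the same relative profile; the second
# rates every item one point higher (a simple form of yea-saying).
plain, yea_sayer = ipsatize([[2, 3, 4, 3], [3, 4, 5, 4]])
print(plain == yea_sayer)
```

Z-scoring each row removes a respondent's overall level together with any uniform acquiescence shift. It is therefore best suited to cases where within-person profiles, not absolute scale means, are of interest. The population SD (`pstdev`) is an assumed choice here; the sample SD would rescale every row by the same constant.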

| Sources of Cognitive Test-Score Variance | Examples | Design Treatment | Analysis Treatment |
|---|---|---|---|
| I. Lasting, general characteristics (lasting person characteristics that pertain to performance on this test and tests like it) | | | |
| 1. Target construct of the test | general cognitive ability, verbal ability | lengthen test to extent feasible | true score variance |
| 2. Other cognitive factors that might influence test scores | reading, vocabulary, related cognitive factors | minimize role of other factors | factor analysis; MTMM |
| 3. General test-taking skills | comprehend instructions, test-wiseness | test coaching, practice tests | typically ignored |
| 4. Skill with the test’s item types | multiple-choice vs. short-answer | vary item type | ignore or model |
| 5. Personality: tendency to typically exert effort | grit game¹; picture-number²; maximal-typical performance distinction³ | - | predict important outcomes |
| 6. Personality: lack of anxiety | (lack of) test anxiety⁴ | anxiety training | ignored |
| 7. Personality: managing time in a time-limit test | running out of time on a standardized test⁵ | provide clocks, warnings | time-accuracy models |
| II. Lasting, specific characteristics (lasting characteristics that pertain only to this test or item subset) | | | |
| 1. Skills required by particular item types | mode (PBT, DBT), response format (MC, CR) | multiple methods | ignore or model |
| 2. Skills required by the particular content sample | form differences | create parallel forms | measurement error |
| 3. Personality: effort-inducing states due to test conditions | computer-based assessments, incentives⁶ | make tests/items engaging | ignored or researched |
| 4. Personality: emotional state induced by test stimuli | math anxiety⁷, stereotype threat⁸ | minimize inducements | ignored or researched |
| III. Temporary, general characteristics of the individual (pertain to the whole test and tests like it, but only for a short while) | | | |
| 1. Temporary health, fatigue, emotional strain | poor performance due to being ill, sad, tired | allow retest | discard all but highest score |
| 2. Environment effects | poor performance due to noisy/hot room | allow retest, venue flexibility | discard all but highest score |
| 3. Level of practice on skills required by tests of this type | novel test format/content | provide pretest practice | model growth/dynamic testing |
| 4. Personality: effort-inducing states | motivation incentives (feedback, payments)⁹ | provide incentives to all | typically ignored |
| 5. Personality: emotional states | stressors (high stakes, fear of failure)¹⁰ | anxiety training | typically ignored |
| IV. Temporary, specific characteristics of the individual (pertain only to this test or item subset, and only this time) | | | |
| 1. Personality: changes in fatigue/motivation over the course of a test | item position effects¹¹ | minimize test length, make test more engaging | error or model |
| 2. Personality: emotional reaction to item response/feedback | discouragement/slowdown after item failure¹², “entity” theory of intelligence¹³ | content, sensitivity, fairness reviews | error |
| 3. Fluctuations in attention and memory | mind wandering¹⁴ | make test/items more engaging | error |
| 4. Unique skill or knowledge of these particular items | effects of special coaching¹⁵, prior exposure¹⁶ | test coaching, practice tests | error or part of construct |
| 5. Mood/emotion state induced by item(s) | test item invokes a negative emotion¹⁷ | content, fairness reviews | error or part of construct |
| 6. Luck in the selection of answers by guessing | guess correct answer | avoid MC or provide many options | error, guessing correction |
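Two treatments in the table correspond to standard classical-test-theory formulas: lengthening the test (row I.1) and applying a guessing correction (row IV.6). The Python sketch below is illustrative only. `spearman_brown` gives the predicted reliability of a test lengthened by a factor k, assuming the added items are parallel to the existing ones. `formula_score` applies the conventional correction R − W/(m − 1) for items with m answer options. Function names and example values are hypothetical.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Spearman-Brown prophecy: reliability after multiplying test length
    by length_factor, assuming the new items are parallel to the old ones."""
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    k, rho = length_factor, reliability
    return k * rho / (1 + (k - 1) * rho)


def formula_score(num_right: int, num_wrong: int, num_options: int) -> float:
    """Correction for guessing: R - W / (m - 1).

    Assumes wrong answers come from blind guessing among m options, so each
    wrong answer implies about 1 / (m - 1) lucky correct guesses, which are
    subtracted. Omitted items are neither credited nor penalized.
    """
    if num_options < 2:
        raise ValueError("each item needs at least two options")
    return num_right - num_wrong / (num_options - 1)


# Hypothetical example: doubling a test whose reliability is .70.
print(round(spearman_brown(0.70, 2), 3))  # → 0.824

# Hypothetical example: 30 right, 8 wrong on five-option items.
print(formula_score(num_right=30, num_wrong=8, num_options=5))  # → 28.0
```

Chance success falls as m grows, so both the expected gain from blind guessing and the penalty needed to offset it shrink. This is consistent with the table's design treatment of providing many options.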

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kyllonen, P.C.; Kell, H. Ability Tests Measure Personality, Personality Tests Measure Ability: Disentangling Construct and Method in Evaluating the Relationship between Personality and Ability. J. Intell. 2018, 6, 32. https://doi.org/10.3390/jintelligence6030032
