1. Introduction

Generalization based on previous learning experiences is an important aspect of intelligence that can, in principle, generate adaptive behaviours. The ability to learn from previous experiences and apply this knowledge to new experiences could rely on a reasoning system that performs computations on mental representations of the perceived events to generate a logical conclusion or judgment [1]. In particular, previous experiences could result in learning the laws, or rules, that seem to generate the perceived events. Such rule learning could be particularly useful for predicting future events that might follow the same pattern. Alternatively, generalization could rely on an associative system that is generally thought to be automatic and less computationally demanding. From this perspective, generalization can be described as resulting from the partial activation of previously learned associations when the current stimuli share some similarities (or features) with stimuli that were experienced in the past (e.g., [2]).

Students of associative learning regularly study rule-based generalization using patterning discriminations [

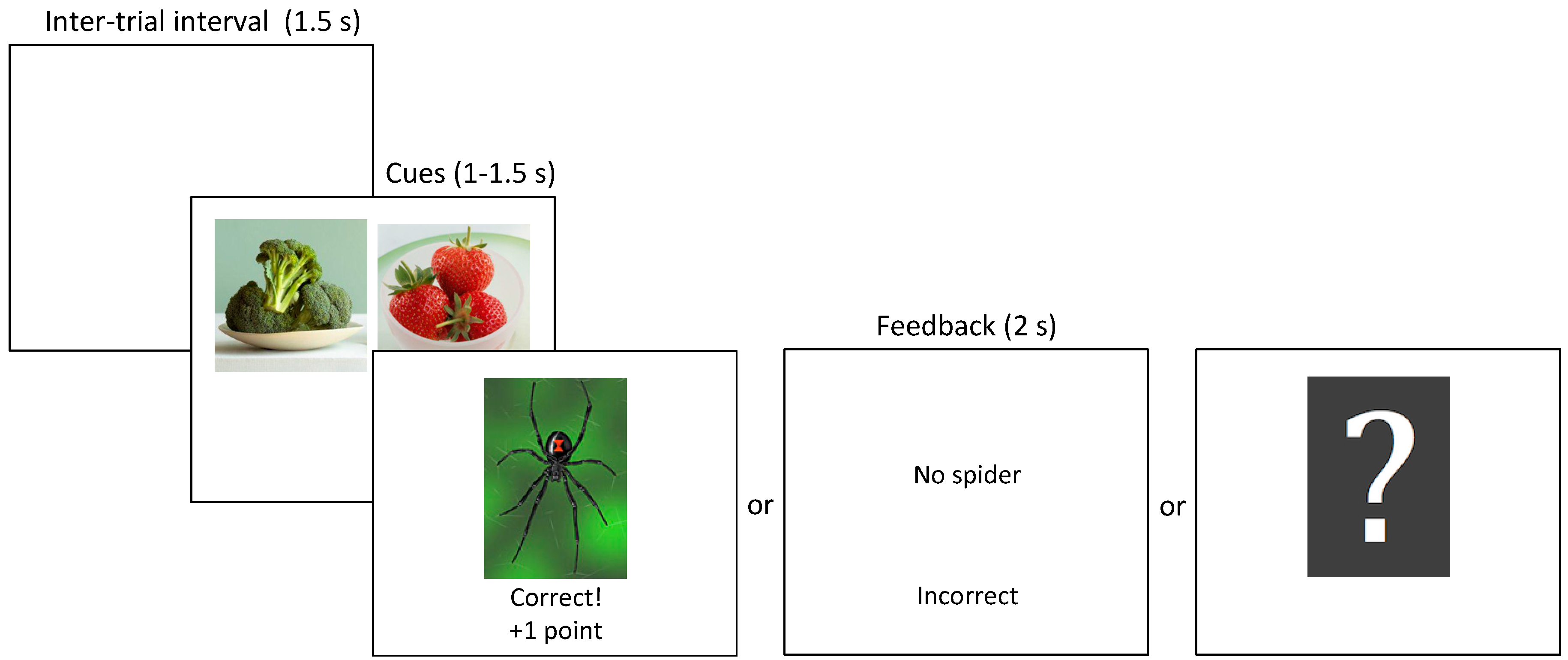

3]. In a positive patterning discrimination, participants learn by trial and error that a combination of two cues, say A and B, is always followed by an outcome (AB+) but when presented separately neither is followed by an outcome (A− and B−). On the other hand, in a negative patterning discrimination the individual cues are followed by the outcome (C+ and D+) but their combination is not (CD−).

Although some associative accounts can explain this type of learning (e.g., [4,5]), positive and negative patterning can, in principle, be solved by applying the ‘opposites’ rule, whereby the outcome on compound trials is the opposite of the outcome that follows the individual elements [6]. If participants learn this rule, then they may transfer or generalize it to other cues. For example, having experienced a positive patterning discrimination (A−, B−, AB+) together with X− and Y− trials, they might predict that the outcome should occur on XY trials even though they have never seen that combination before. Alternatively, when presented with a novel combination of cues, individuals might generalize on the basis of their experience with the individual features of the XY compound, in which case they might expect the outcome on compound trials to be similar to, or even stronger than, the outcome on single-element trials (e.g., they might expect no outcome to occur on an XY trial after experience with X− and Y− trials).
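The two generalization strategies can be contrasted in a short sketch. The trial encoding (each trial as a set of cues mapped to whether the outcome followed) is an illustrative assumption, and the feature-based predictor is a deliberately simplified summation of element outcomes with an arbitrary threshold, not a fitted associative model.

```python
# Trials encoded as {frozenset of cues: outcome followed?}; True = "+", False = "-".
TRAINING = {
    frozenset("A"): False, frozenset("B"): False, frozenset("AB"): True,   # positive patterning
    frozenset("C"): True, frozenset("D"): True, frozenset("CD"): False,    # negative patterning
}
# Transfer elements: X- and Y- are trained, the XY compound is never seen.
TRANSFER = {frozenset("X"): False, frozenset("Y"): False}


def element_outcomes(compound, trials):
    """Outcomes that followed each single cue of the compound."""
    return [trials[frozenset(cue)] for cue in compound]


def predict_opposites(compound, trials):
    """'Opposites' rule: the compound gets the opposite of its elements' shared outcome."""
    outcomes = set(element_outcomes(compound, trials))
    if len(outcomes) != 1:
        raise ValueError("the opposites rule is undefined when the elements disagree")
    return not outcomes.pop()


def predict_features(compound, trials, threshold=0.5):
    """Simplified feature-based account: sum element outcomes (1 = '+', 0 = '-')
    and predict the outcome if the summed strength reaches an assumed threshold."""
    strength = sum(element_outcomes(compound, trials))
    return strength >= threshold


# The rule reproduces every trained compound...
for cues, outcome in TRAINING.items():
    if len(cues) > 1:
        assert predict_opposites(cues, TRAINING) == outcome

# ...and the two accounts disagree on the novel XY compound.
print(predict_opposites(frozenset("XY"), TRANSFER))  # True  (XY+)
print(predict_features(frozenset("XY"), TRANSFER))   # False (XY-)
```

The summation predictor also predicts CD+ after C+ and D+, which illustrates why a purely summative, feature-based learner cannot solve negative patterning.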

People’s performance on these transfer tests is prima facie consistent with rule-based generalization (e.g., [3]), but this type of generalization seems to require more cognitive resources than feature-based generalization. This hypothesis is supported by studies showing that rule-based generalization is more likely to occur when there are no time constraints [7] and when learning occurs under low cognitive load [8]. Individual differences in fluid abilities have also been shown to correlate with generalization patterns. Wills et al. [9] found that individuals with higher working memory capacity had a greater tendency for rule-based generalization. Maes et al. [10] found a marginally significant positive correlation between rule-based generalization and abstract reasoning ability, although this correlation was not statistically significant when performance on the training set was controlled for. Finally, Don et al. [11] found a positive relationship between rule-based generalization and scores on the Cognitive Reflection Test, which estimates the degree to which individuals’ performance relies on deliberative processes.

Although these studies suggest that individual differences in fluid abilities might influence the extent to which individuals engage in rule-based generalization, the evidence for it is relatively sparse and several questions remain. First, it is still unclear which fluid abilities predict rule-based generalization in patterning discriminations. Previous studies [9,10] that have investigated this issue each assessed only one cognitive ability (working memory or reasoning ability) in relatively small samples (60 or fewer participants). But other fluid abilities, such as processing speed and visuospatial ability, might also influence generalization strategies. In the following experiments, we investigated the relationship between generalization strategies and four fluid abilities: reasoning ability, visuospatial ability, working memory and processing speed.

Second, it is unclear whether fluid abilities influence performance only on transfer tests, in which participants are exposed to novel cue combinations, or whether they also play a role when participants are exposed to new discriminations. Previous studies assessed generalization strategies on transfer tests (e.g., participants were asked whether the outcome would follow the compound XY after experience with X− and Y− trials), but fluid abilities might also determine generalization strategies when participants have the opportunity to learn new patterning discriminations. That is, fluid abilities might influence the extent to which a previously learned discrimination affects learning of a discrimination involving new cues. For example, after exposure to positive (A−, B−, AB+) and negative (C+, D+, CD−) patterning discriminations, fluid abilities might influence the acquisition of new patterning discriminations (E−, F−, EF+ and G+, H+, GH−). If so, fluid abilities would influence generalization strategies not only in ambiguous situations (e.g., on a transfer test in which participants are asked to predict the outcome of a combination of cues they have never experienced) but also in unambiguous situations in which participants receive feedback. Indeed, it is known that learning to solve one discrimination influences performance on a subsequent discrimination [12,13,14]. Our aim was to investigate the role of fluid abilities in determining the extent to which learning one discrimination influences the acquisition of new discriminations.

Third, we were interested in whether fluid abilities influence the subsequent acquisition of a discrimination by determining the extent to which participants use a rule learned while solving the first discrimination. Alternatively, fluid abilities might influence other mechanisms that affect performance, such as the tendency to learn about stimulus configurations (configural processing) rather than about the individual elements of the configuration (elemental processing). Configural processing refers to the idea that combinations of stimuli are perceived as configurations rather than as combinations of individual elements (e.g., the configuration AB is different from the sum of its individual elements, A and B). These configurations can become associated with outcomes, and their associations can differ from those between the individual elements and the outcome. Interestingly, associative models that rely on configural processing (e.g., [5]) can explain positive and negative patterning without assuming that individuals learn rules: the single elements and the compound configuration acquire opposite associations. This might explain why individuals are able to solve these discriminations. It has been suggested that configural and elemental processing lie on a continuum and that individuals can flexibly change their processing style depending on their experience [12,15,16]. That is, experience with discriminations that can be solved elementally may increase elemental processing, whereas experience with discriminations that can only be solved configurally may increase configural processing. This can explain why solving a discrimination that has only a configural solution (i.e., one that cannot be solved on the basis of the individual cues alone) facilitates the acquisition of subsequent patterning discriminations [12,13,14]. To investigate whether fluid abilities influence performance on a second discrimination by inducing a shift towards configural processing or by increasing the likelihood that a learned rule will be applied to the new discrimination, we tested whether fluid abilities could predict an improvement in performance in situations in which a rule could be learned and applied to the second discrimination (Experiments 1 and 2). We contrasted this with a situation in which this would not be possible and participants would have to rely on configural processing to solve both discriminations (Experiment 3).
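To make the configural idea concrete, the sketch below uses the Rescorla-Wagner learning rule with an added "unique cue" that is active only when a particular compound is presented. This is a swapped-in textbook illustration of how an elemental learner can acquire a configural component; it is not the configural model cited above, and the learning rate, asymptote and number of epochs are arbitrary assumptions.

```python
def active_units(cues, configural):
    """Units activated on a trial: one per element plus, if configural is True,
    one unique configural unit per compound (labelled '*' + sorted cues)."""
    units = list(cues)
    if configural and len(cues) > 1:
        units.append("*" + "".join(sorted(cues)))
    return units


def train_rw(trials, configural, alpha=0.2, lam=1.0, epochs=500):
    """Rescorla-Wagner: every active unit changes by alpha * (target - summed prediction)."""
    V = {}
    for _ in range(epochs):
        for cues, reinforced in trials:
            units = active_units(cues, configural)
            prediction = sum(V.get(u, 0.0) for u in units)
            error = (lam if reinforced else 0.0) - prediction
            for u in units:
                V[u] = V.get(u, 0.0) + alpha * error
    return V


def predict(V, cues, configural):
    """Summed associative strength of the units a trial activates."""
    return sum(V.get(u, 0.0) for u in active_units(cues, configural))


NEGATIVE_PATTERNING = [("C", True), ("D", True), ("CD", False)]

for configural in (False, True):
    V = train_rw(NEGATIVE_PATTERNING, configural)
    print("configural" if configural else "elemental",
          round(predict(V, "C", configural), 2),
          round(predict(V, "CD", configural), 2))
```

In this sketch the purely elemental learner ends up predicting more outcome on CD than on C, whereas with the unique configural unit the elements approach C+ and D+ while the compound approaches CD−, because the configural unit acquires a strength opposite to that of the elements.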

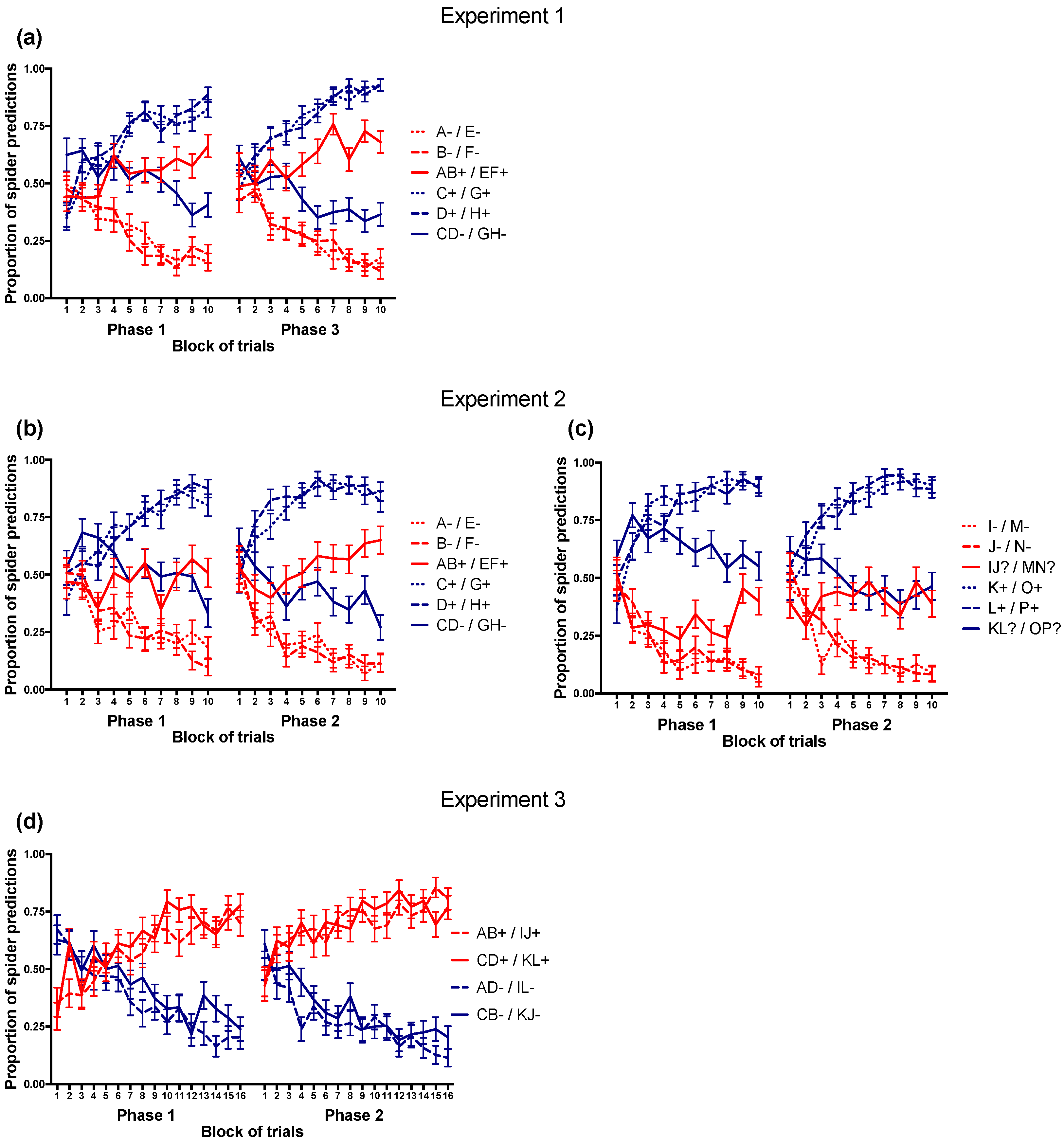

In Experiment 1 we tested whether solving patterning discriminations on one set of cues (A−, B−, AB+ and C+, D+, CD−) could subsequently help solve patterning discriminations on a different set of cues (E−, F−, EF+ and G+, H+, GH−). That is, we asked whether learning one set of discriminations could facilitate learning a new set of discriminations. If participants transfer the opposites rule to the new discriminations, then the second discrimination set should be acquired more rapidly. We measured the extent to which performance benefited from this rule generalization by assessing the improvement on the second discrimination set relative to the first. We further tested whether reasoning ability, visuospatial ability, working memory or processing speed predicted this improvement in performance.
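One way to express "improvement on the second set, controlling for performance on the first" is a multiple regression of Phase 2 discrimination scores on Phase 1 scores plus the four fluid-ability scores. The sketch below assumes that model form and uses a plain least-squares solver; the variable names and the synthetic, noise-free data in the usage example are hypothetical and are neither the study's data nor its analysis pipeline.

```python
import random

PREDICTORS = ["phase1", "reasoning", "visuospatial", "working_memory", "processing_speed"]


def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gaussian elimination with partial pivoting."""
    k = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    b = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, k):
            factor = A[r][col] / A[col][col]
            for c in range(col, k):
                A[r][c] -= factor * A[col][c]
            b[r] -= factor * b[col]
    coef = [0.0] * k
    for i in reversed(range(k)):
        coef[i] = (b[i] - sum(A[i][j] * coef[j] for j in range(i + 1, k))) / A[i][i]
    return coef


def improvement_model(participants):
    """Regress the Phase 2 score on an intercept, the Phase 1 score and the four
    ability scores; each participant is a dict keyed by PREDICTORS plus 'phase2'."""
    X = [[1.0] + [p[name] for name in PREDICTORS] for p in participants]
    y = [p["phase2"] for p in participants]
    return dict(zip(["intercept"] + PREDICTORS, ols(X, y)))


# Synthetic, noise-free data generated from arbitrary coefficients, used only
# to check that the fit recovers them (these are not estimates from the study).
rng = random.Random(1)
true_coef = {"intercept": 0.1, "phase1": 0.5, "reasoning": 0.3,
             "visuospatial": -0.1, "working_memory": 0.2, "processing_speed": 0.05}
sample = []
for _ in range(40):
    p = {name: rng.random() for name in PREDICTORS}
    p["phase2"] = true_coef["intercept"] + sum(true_coef[n] * p[n] for n in PREDICTORS)
    sample.append(p)
print(improvement_model(sample))
```

Including the Phase 1 score as a covariate means each ability coefficient reflects its association with Phase 2 performance beyond what initial performance already predicts.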

In Experiment 2 we replicated Experiment 1. In addition, we included a set of transfer trials in which some individual cues were followed by feedback (e.g., I−, J−) but the compounds were not (e.g., IJ?). Participants were required to guess the outcome on every trial regardless of whether they received feedback. If they transferred the opposites rule from the fully trained set to the transfer set, then they should make opposite outcome predictions on compound trials (i.e., IJ+). This transfer test is similar to that used in previous studies [3,9,10]. We could therefore also investigate which fluid abilities predict performance on transfer trials. On the basis of previous studies [9,10], we expected at least reasoning ability and working memory to predict performance on the transfer test.
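A transfer score of this kind can be computed as the proportion of compound transfer trials on which the response is the opposite of the outcome trained on the compound's elements, so that values above 0.5 indicate rule-based and values below 0.5 feature-based generalization. The sketch assumes this definition and assumes that trials without a response are simply excluded; both are illustrative choices rather than a description of the scoring actually used.

```python
import math


def transfer_score(trials):
    """trials: (element_outcome, response) pairs for compound transfer trials,
    where element_outcome is the outcome trained on the compound's elements
    (True = '+') and response is True/False, or None if no response was made.
    Returns the proportion of answered trials consistent with the opposites rule."""
    answered = [(element, response) for element, response in trials if response is not None]
    if not answered:
        return math.nan
    rule_consistent = sum(response != element for element, response in answered)
    return rule_consistent / len(answered)


def classify(score):
    """Label a score by which generalization strategy it leans toward."""
    if math.isnan(score) or score == 0.5:
        return "undetermined"
    return "rule-based" if score > 0.5 else "feature-based"


# Hypothetical participant: IJ? trials after I- and J- (element outcome False).
trials = [(False, True), (False, False), (False, False), (False, False), (False, None)]
score = transfer_score(trials)
print(score, classify(score))  # 0.25 feature-based
```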

Finally, in Experiment 3 we tested whether the performance advantage on the discriminations trained second was specifically due to rule learning, or whether such an advantage would also occur when there was no obvious rule that could be learned. In this experiment, participants were first trained on a biconditional discrimination (AB+, CD+, AD−, CB−; [17]) before being exposed to a second, similar discrimination (IJ+, KL+, IL−, KJ−). Unlike positive and negative patterning discriminations, which can be solved by applying a simple opposites rule, biconditional discriminations have no simple solution: they cannot be solved on the basis of the individual elements, nor can they be solved by applying a simple rule like the opposites rule. Rather, participants must learn the outcome associated with each configuration of cues. If the improvement in performance on the patterning discriminations trained second in Experiments 1 and 2 was due, at least in part, to factors other than rule learning, such as an increase in configural processing, then the second biconditional discrimination in Experiment 3 should be learned more rapidly than the first despite the fact that participants could not learn an easy rule that would help them solve the second discrimination. Furthermore, if fluid abilities predict an increased shift towards configural processing, then we expected a relationship between fluid abilities and performance improvements on the second biconditional discrimination similar to those found with the patterning discriminations in Experiments 1 and 2. Alternatively, if fluid abilities contribute to an improvement in performance only by facilitating the generalization of a specific rule from one set of discriminations to another, then we expected weaker or absent relationships between fluid abilities and performance improvements on the second biconditional discrimination.
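The claim that biconditional discriminations cannot be solved from the individual elements can be checked directly. In AB+, CD+, AD−, CB− every element is reinforced on exactly half of its trials, and the reinforced and non-reinforced compounds contain the same elements, so any additive combination of element strengths gives the two reinforced compounds the same total as the two non-reinforced ones. The sketch below performs both checks; the function names are hypothetical.

```python
from collections import Counter, defaultdict

FIRST_SET = {"AB": True, "CD": True, "AD": False, "CB": False}
SECOND_SET = {"IJ": True, "KL": True, "IL": False, "KJ": False}


def element_reinforcement_rates(trials):
    """Proportion of trials on which each element is followed by the outcome."""
    counts = defaultdict(lambda: [0, 0])  # cue -> [reinforced trials, all trials]
    for compound, reinforced in trials.items():
        for cue in compound:
            counts[cue][0] += int(reinforced)
            counts[cue][1] += 1
    return {cue: hits / total for cue, (hits, total) in sorted(counts.items())}


def no_additive_solution(trials):
    """Sufficient check: if reinforced and non-reinforced compounds are equally
    numerous and contain the same elements, summed element strengths give both
    groups the same total, so no threshold can place every reinforced compound
    above every non-reinforced one."""
    plus = [c for c, r in trials.items() if r]
    minus = [c for c, r in trials.items() if not r]
    return len(plus) == len(minus) and Counter("".join(plus)) == Counter("".join(minus))


for name, trials in (("first", FIRST_SET), ("second", SECOND_SET)):
    print(name, element_reinforcement_rates(trials), no_additive_solution(trials))
```

The check is only sufficient: positive patterning (A−, B−, AB+) fails it because the trial counts differ, consistent with the fact that a summed-strength learner with a threshold can solve positive patterning.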

Overall, these experiments allowed us to investigate the relationship between fluid abilities and performance improvements that could be explained by rule-based generalization or an increase in configural processing (in Experiments 1 and 2) and improvements that are not easily accounted for by rule-based generalization but may be explained by an increase in configural processing (Experiment 3). Furthermore, we investigated whether fluid abilities influence generalization strategies in unambiguous situations in which participants are required to learn new discriminations (Experiments 1–3), as well as in more ambiguous transfer tests in which participants do not receive feedback (Experiment 2).

4. Discussion

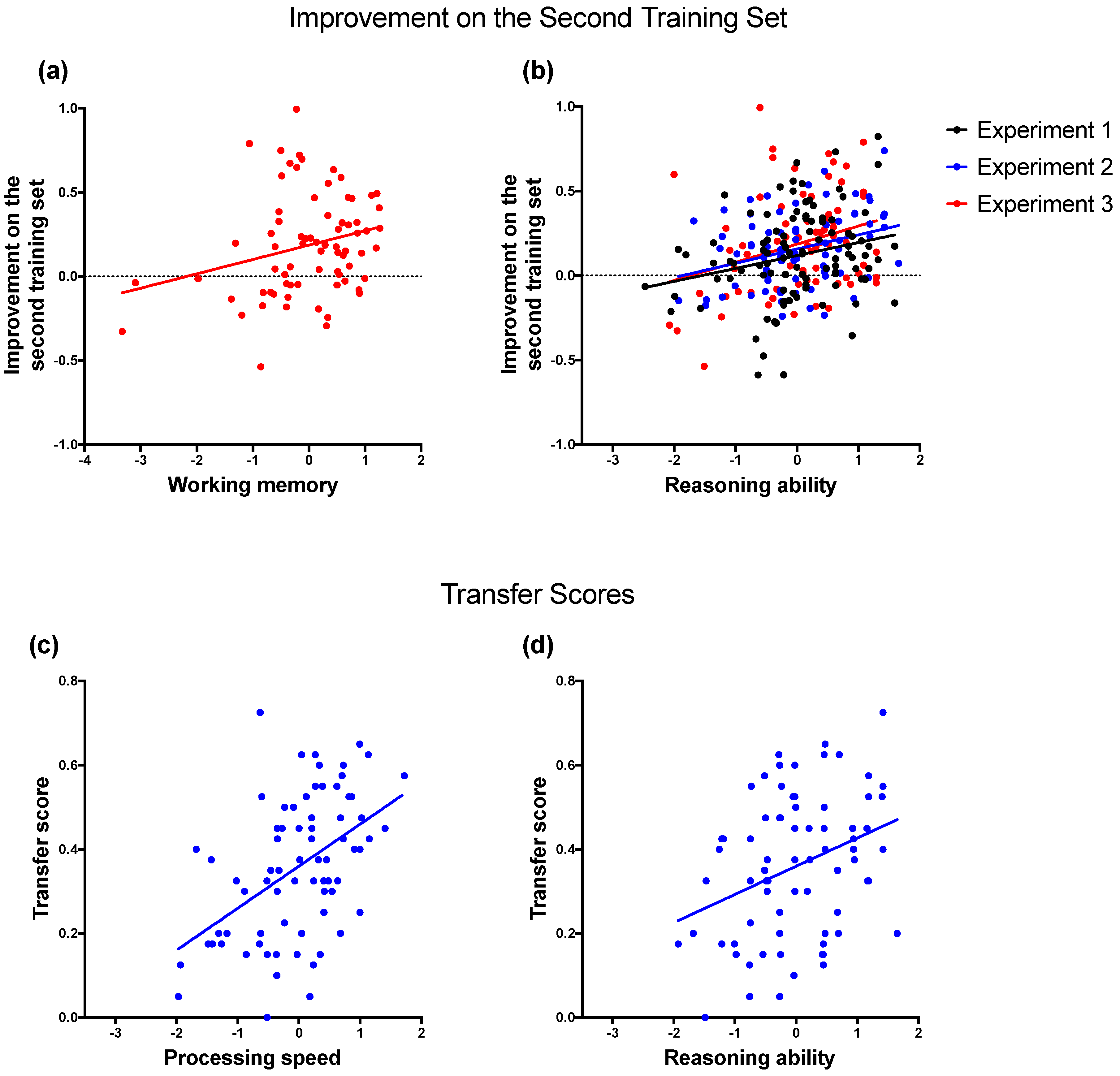

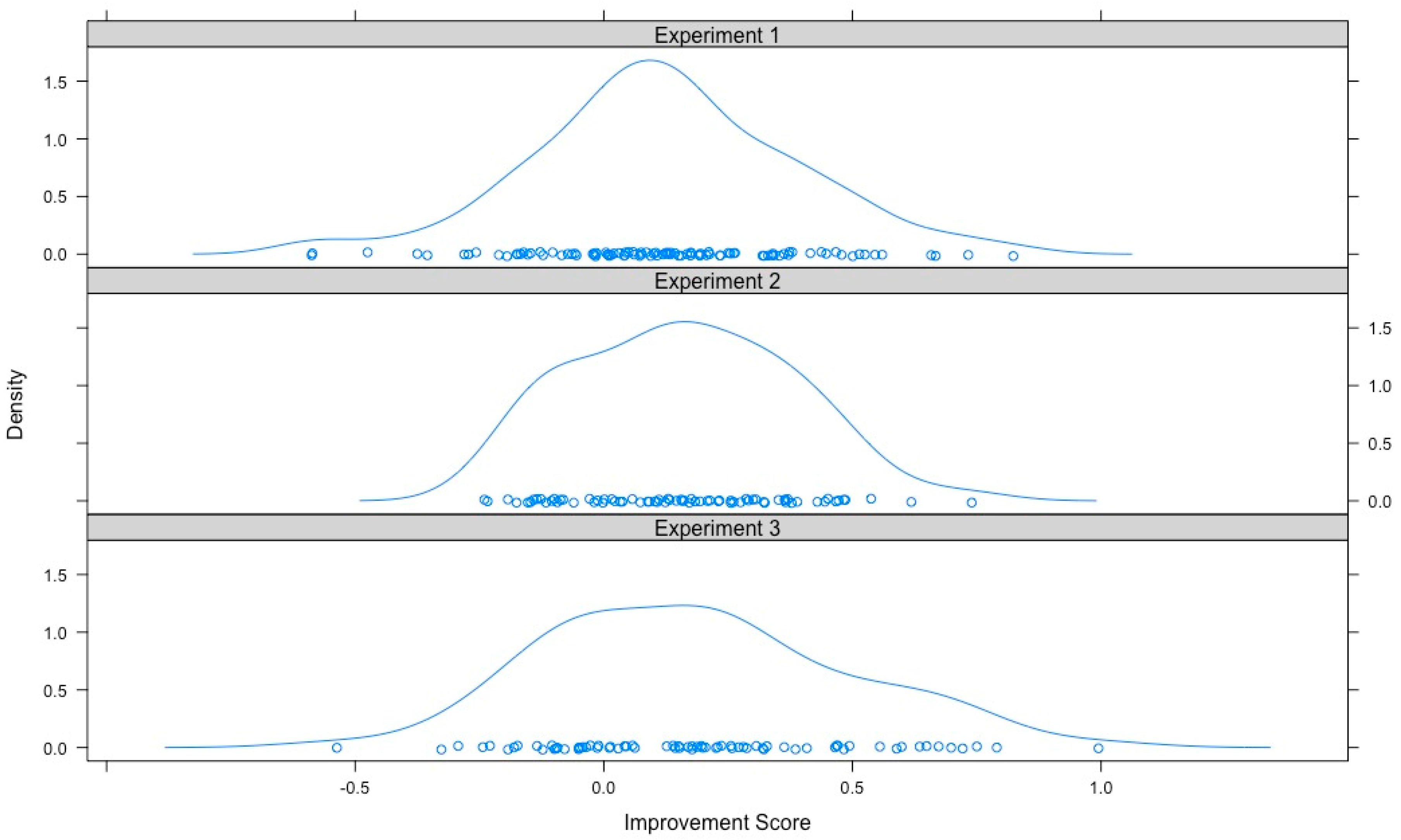

4.1. Improvement on the Second Training Set

Similar to previous studies [12,13,26,30], our results suggest that configural processing is flexible and increases after exposure to configural discriminations. This flexibility is thought to be advantageous, as it allows individuals to adapt their processing style depending on which strategy is more useful for learning contingencies between stimuli in the environment. We extend previous findings by demonstrating that individual differences in fluid abilities predict the extent to which an individual will benefit from previous exposure to configural discriminations, when performance on the original discriminations is controlled for. Because reasoning ability predicted this performance increase both in Experiments 1 and 2, in which participants could have learned and generalized an opposites rule, and in Experiment 3, in which they could not use a simple rule to solve the discriminations, we propose that reasoning ability more likely influences the shift toward configural processing than the generalization of a learned rule. This account explains the results of all three experiments, whereas rule-based generalization only explains the results of Experiments 1 and 2.

There were, however, some differences between experiments. Whereas reasoning ability predicted the performance improvement in all experiments, working memory predicted it only in Experiment 3. One possible explanation is that the biconditional discriminations were more difficult to solve than the patterning discriminations, thus increasing working memory demands and making it more likely that we would observe a relationship between working memory capacity and performance improvements in Experiment 3. Indeed, when discrimination scores were computed from the first 10 blocks of training in each experiment (to equate the amount of training), Phase 1 scores were lower in Experiment 3 than in Experiments 1 and 2, minimum t(145) = 3.06, p = 0.003, CI95 [0.039, 0.180]. Phase 2 discrimination scores, however, did not differ between experiments, maximum t(145) = 0.843, p = 0.401, CI95 [−0.054, 0.135].
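A comparison of this kind can be sketched as an independent-samples t test on discrimination scores averaged over the first 10 training blocks. The sketch assumes a pooled-variance (Student) test, which is consistent with the integer degrees of freedom reported above but is not stated in the text; the per-block score lists and the caller-supplied critical t value are hypothetical inputs.

```python
import math
from statistics import mean, variance


def first_blocks_score(block_scores, n_blocks=10):
    """Mean discrimination score over the first n_blocks blocks (equates training)."""
    return mean(block_scores[:n_blocks])


def pooled_t_test(x, y, t_crit=None):
    """Student's t for two independent samples with pooled variance.
    Returns (t, df, ci); ci is the confidence interval for mean(x) - mean(y)
    when a critical t value for df is supplied, otherwise None."""
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / df
    se = math.sqrt(pooled_var * (1 / nx + 1 / ny))
    diff = mean(x) - mean(y)
    ci = (diff - t_crit * se, diff + t_crit * se) if t_crit is not None else None
    return diff / se, df, ci


# Toy inputs only: t = -3 / sqrt(2/3), df = 4.
print(pooled_t_test([1, 2, 3], [4, 5, 6], t_crit=2.776))
```

With n1 + n2 participants the pooled test has n1 + n2 − 2 degrees of freedom, so t(145) would correspond to 147 participants across the two groups being compared.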

4.2. Transfer Scores in Experiment 2

Our findings regarding performance on the transfer sets in Experiment 2 can be compared to previous studies that used similar transfer tests. First, we found that reasoning ability modestly predicted performance on the transfer sets. This is consistent with the results of Maes et al. [10], who also found that performance on Raven’s Standard Progressive Matrices modestly predicted rule-based generalization following patterning discrimination training, but only if performance on the training sets was not controlled for. Second, we found that working memory did not predict performance on the transfer sets. This is inconsistent with the results of Wills et al. [9], who found that individuals with high working memory capacity showed rule-based generalization, whereas individuals with low working memory capacity showed feature-based generalization. Our failure to replicate their finding could be due to differences in data analysis: whereas we analysed the full range of working memory scores using linear regression, Wills et al. only analysed the upper and lower quartiles of their sample on the working memory task (approximately 10 participants in each group) and consequently omitted the data from the 50% of their participants who fell in the middle range. However, an analysis of our dataset similar to that of Wills et al., using only the extreme quartiles, still did not reveal a significant working memory effect. This was true even when only the Phase 1 transfer scores were analysed, to match Wills et al.’s procedure, which consisted of only one training phase.
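An extreme-groups analysis of the kind described for Wills et al. can be sketched as follows: working memory quartile cut points are computed, only participants at or below the first quartile or at or above the third quartile are kept, and their mean transfer scores are compared. The quartile method (the default "exclusive" method of Python's `statistics.quantiles`) and the data layout are assumptions for illustration, and the scores are hypothetical.

```python
from statistics import mean, quantiles


def extreme_quartile_groups(participants):
    """participants: list of (working_memory_score, transfer_score) pairs.
    Keeps the lower and upper quartiles on working memory and drops the middle."""
    q1, _, q3 = quantiles([wm for wm, _ in participants], n=4)
    low = [ts for wm, ts in participants if wm <= q1]
    high = [ts for wm, ts in participants if wm >= q3]
    excluded = len(participants) - len(low) - len(high)
    return {"low_mean": mean(low), "high_mean": mean(high),
            "n_low": len(low), "n_high": len(high), "n_excluded": excluded}


# Hypothetical scores, for illustration only.
data = [(1, 0.2), (2, 0.4), (3, 0.3), (4, 0.5), (5, 0.4), (6, 0.6), (7, 0.5), (8, 0.7)]
print(extreme_quartile_groups(data))
```

The excluded count makes explicit how much of the sample such an analysis discards compared with a regression over the full range of scores.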

Instead, we found that processing speed strongly predicted transfer scores consistent with rule-based generalization. It is possible that processing speed predicted rule-based generalization in our Experiment 2 because we used a speeded task in which participants had only one second to make a response. This could have placed an additional burden on processing that was not present in previous studies that used self-paced transfer tests, potentially explaining why the transfer scores in Experiment 2 were predicted so well by processing speed.

The speeded response requirement might also explain why the transfer scores were on average below 0.5, which indicates that participants were more likely to respond to the transfer compounds based on feature generalization than on the opposites rule. If feature-based generalization is less cognitively demanding than rule-based generalization, this might explain why we found that, on average, participants engaged in feature-based generalization, whereas other studies that used self-paced tests found a stronger tendency for rule-based generalization [3,7,8,10]; but see [26]. Consistent with the idea that rule-based generalization is more demanding, Cobos et al. [7] found that self-paced ratings on a transfer test reflected rule-based generalization but performance on a cued-response priming test was more consistent with feature-based generalization. The authors argued that feature-based generalization relies on fast associative mechanisms, whereas rule-based generalization relies on slower deliberative processes that require executive control and is thus more likely to occur under untimed conditions. It is possible that the speeded response requirement in our experiments decreased the likelihood that participants could engage in rule-based generalization by preventing these deliberative processes. Furthermore, our results suggest that participants could engage in rule-based generalization if their processing speed was sufficiently high, which would presumably allow more time for rule-based generalization processes.

4.3. Rule-Based Generalization vs. Configural Processing

As explained in the introduction, we were interested in investigating whether fluid abilities increase the likelihood that participants will use a rule to solve new discriminations or whether they contribute to a shift to configural processing following experience with discriminations that can be solved by learning about stimulus configurations. Taken together, the results of the three experiments suggest that reasoning ability is likely to predict a stronger shift towards configural processing following experience with configural problems. This is because reasoning ability consistently predicted the improvement on the second training set in all experiments, including Experiment 3, which ruled out the possibility that the improvement was due to rule-based generalization. Furthermore, reasoning ability only modestly predicted transfer scores consistent with rule-based generalization in Experiment 2, and this effect was not significant when performance on the training sets was controlled for. Thus, overall, these results suggest that reasoning ability is more likely to generate a shift toward configural processing following experience with configural discriminations than to encourage rule-based generalization.

Whereas reasoning ability consistently predicted the improvement on the second training set in all experiments, working memory only did so in Experiment 3, which involved more difficult biconditional discriminations. It is possible, although speculative, that working memory is a less reliable predictor of a shift toward configural processing than reasoning ability and that this effect only reached significance when participants were exposed to more difficult discriminations.

The relationship between fluid abilities and the transfer scores in Experiment 2, however, cannot be explained by the possibility that fluid abilities only increase configural processing. That is, if fluid abilities increase the likelihood that stimuli will be processed configurally after experiencing configural problems, then one would expect subsequent configural problems to be solved more easily but one would not necessarily expect an increase in rule-like responses to the transfer stimuli that were not followed by feedback. This is because an increase in configural processing would merely help participants learn nonlinear discriminations (so it would improve performance on feedback trials) but it would not help them ‘deduce’ which outcome should occur on transfer trials (so it would not influence performance on no-feedback trials). It is therefore more likely that fluid abilities influenced the extent to which participants would use a rule-based strategy to make predictions on the ambiguous trials without feedback.
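The argument can be illustrated with one well-known configural formulation, Pearce's similarity rule, in which a test pattern inherits associative strength from each trained configuration weighted by the product of the proportions of each pattern's elements that the two share. The strengths below are hypothetical asymptotic values (1 for "+", 0 for "−") and the sketch ignores learning dynamics; it only shows that similarity-based generalization to the never-reinforced IJ compound follows the I− and J− elements rather than the opposites rule.

```python
def pearce_similarity(a, b):
    """Product of the proportions of each pattern's elements that are shared."""
    common = len(set(a) & set(b))
    return (common / len(a)) * (common / len(b))


def generalized_strength(pattern, strengths):
    """Summed strength a (possibly novel) pattern receives from trained configurations."""
    return sum(pearce_similarity(pattern, trained) * v for trained, v in strengths.items())


# Hypothetical asymptotic strengths: patterning set plus the I-, J- transfer elements.
STRENGTHS = {"A": 0.0, "B": 0.0, "AB": 1.0, "C": 1.0, "D": 1.0, "CD": 0.0,
             "I": 0.0, "J": 0.0}

print(pearce_similarity("IJ", "I"))           # 0.5
print(generalized_strength("IJ", STRENGTHS))  # 0.0, i.e. predicts IJ-, not IJ+
```

Because IJ shares no elements with the trained patterning cues, nothing learned about AB+ or CD− reaches it, so configural learning alone offers no route to the rule-consistent IJ+ response.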

Once performance on the training sets was controlled for, only processing speed strongly predicted the transfer scores in Experiment 2. However, processing speed did not have a significant effect on the improvement on the second training set in either experiment. This suggests that processing speed might determine the extent to which an individual is capable of applying a learnt rule in ambiguous situations in which feedback is omitted, so that performance cannot rely on de novo learning. Furthermore, if one assumes that such rule-based generalization is computationally demanding [7], this might explain why the effect was particularly evident in our study, in which a speeded response task imposed a relatively heavy burden on processing speed. The difficulty of rule-based generalization might therefore have stemmed from the requirement to inhibit a more automatic feature-based generalization tendency rather than from discovering the simple opposites rule. Thus, rule-based generalization in our task might have depended more heavily on the ability to apply the simple rule within a short timeframe than on the ability to learn the rule. Had we used a more complex rule and given participants more time to respond, reasoning ability rather than processing speed might have predicted rule-based generalization.

Nevertheless, although different procedures likely influence the extent to which specific fluid abilities contribute to performance, our results demonstrate that fluid abilities predict the extent to which an individual will experience a shift toward configural processing following training with configural discriminations. They also predict a stronger tendency to exhibit rule-based rather than feature-based generalization on transfer tests. Future research might further investigate the conditions in which specific fluid abilities play a role in experience-induced shifts in processing strategies or rule-based generalization.