Dissociating Cortical Activity during Processing of Native and Non-Native Audiovisual Speech from Early to Late Infancy

Abstract

1. Introduction

2. Experimental Section

2.1. Participants

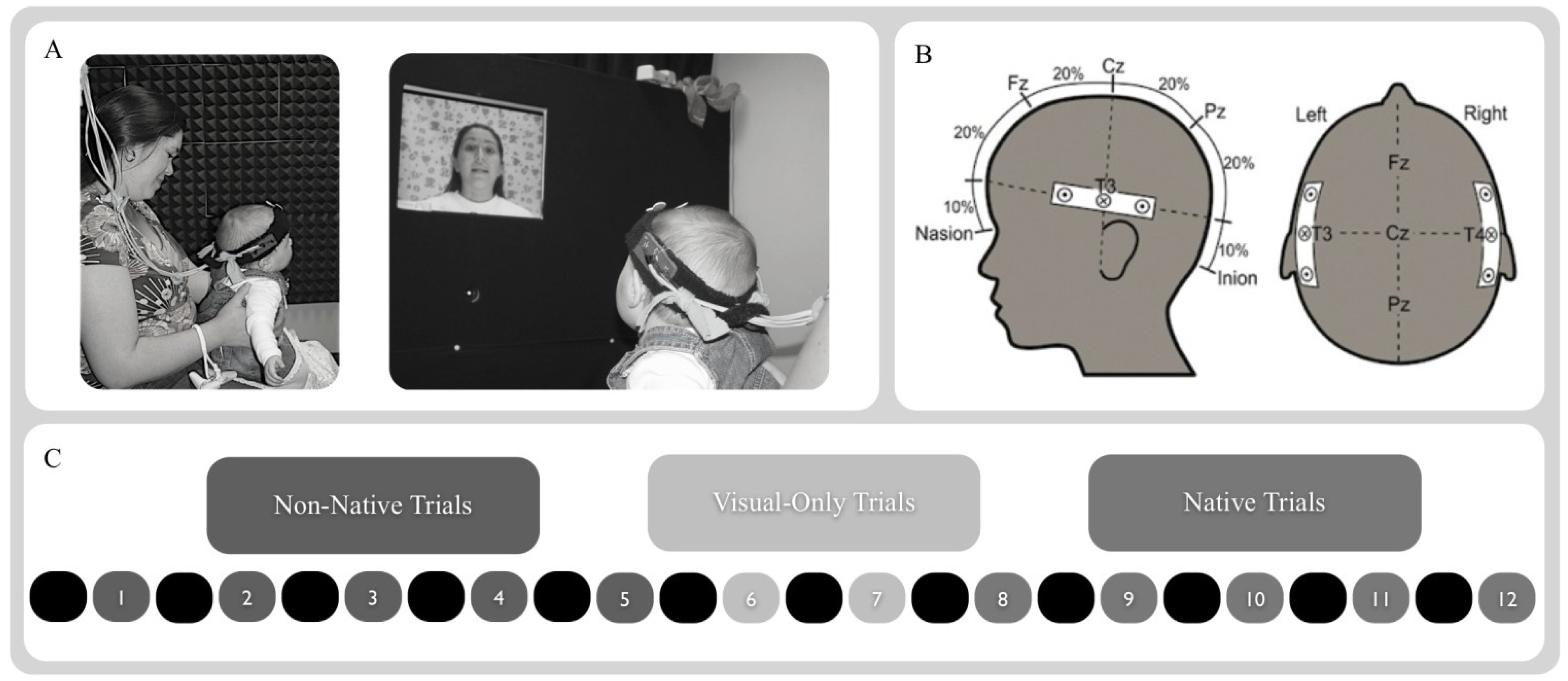

2.2. Stimuli and Design

2.3. Procedure

2.4. NIRS Probe and Apparatus

2.5. Filtering and Motion Artifact Detection and Correction

3. Results

3.1. Looking Time Analysis

3.2. Hemodynamic Analyses

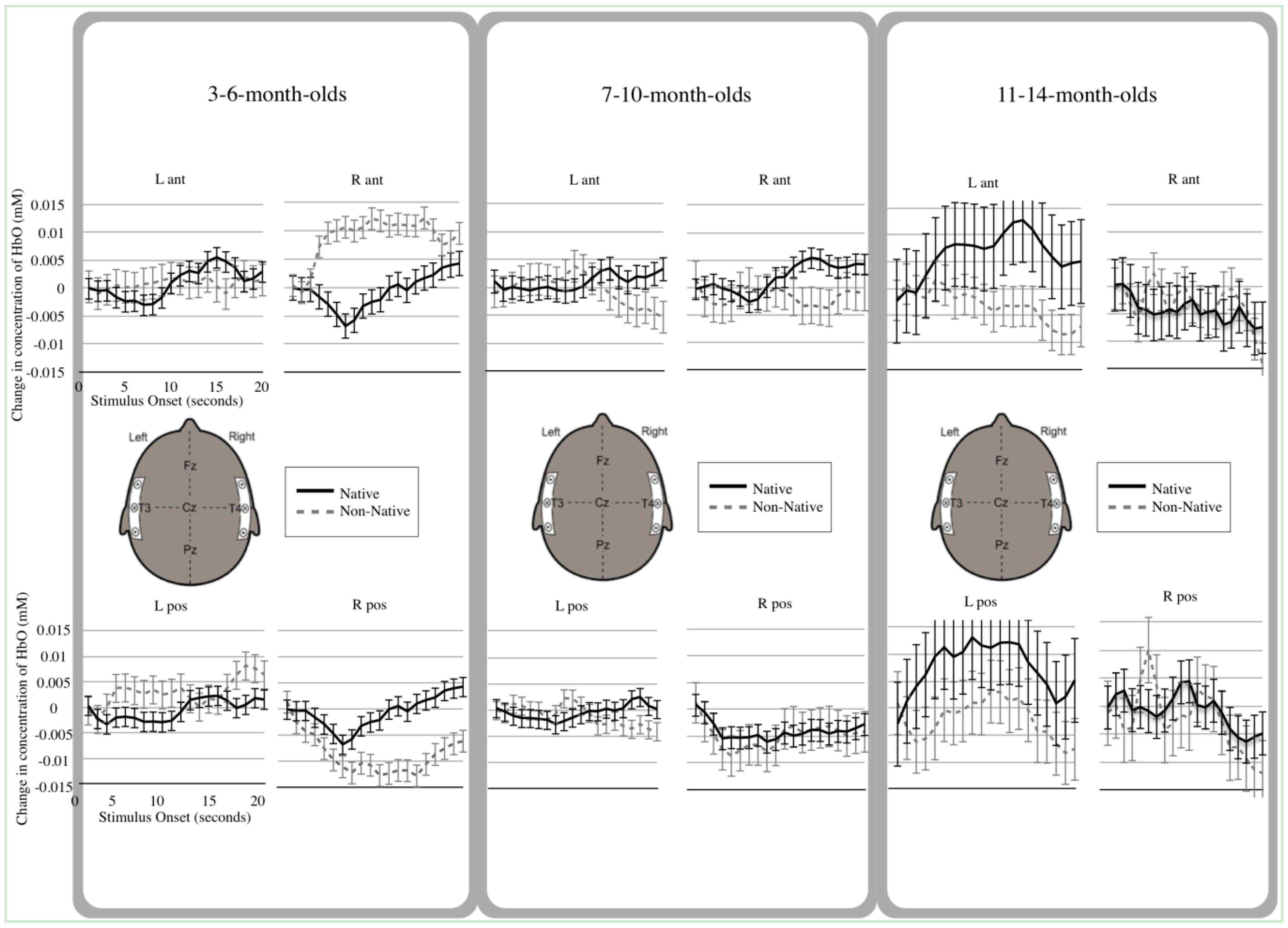

3.3. Main Effects

3.4. Interactions

4. Discussion

4.1. Young Infants’ Differential Native and Non-Native Speech Processing

4.2. Older Infants’ Use of the Left Anterior Temporal Area

4.3. Interpretation of Deactivated Hemodynamic Functions

5. Conclusions

Supplementary Files

Supplementary File 1

Acknowledgments

Author Contributions

Conflicts of Interest

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Fava, E.; Hull, R.; Bortfeld, H. Dissociating Cortical Activity during Processing of Native and Non-Native Audiovisual Speech from Early to Late Infancy. Brain Sci. 2014, 4, 471–487. https://doi.org/10.3390/brainsci4030471