Unconscious Effects of Action on Perception

Abstract

1. Introduction

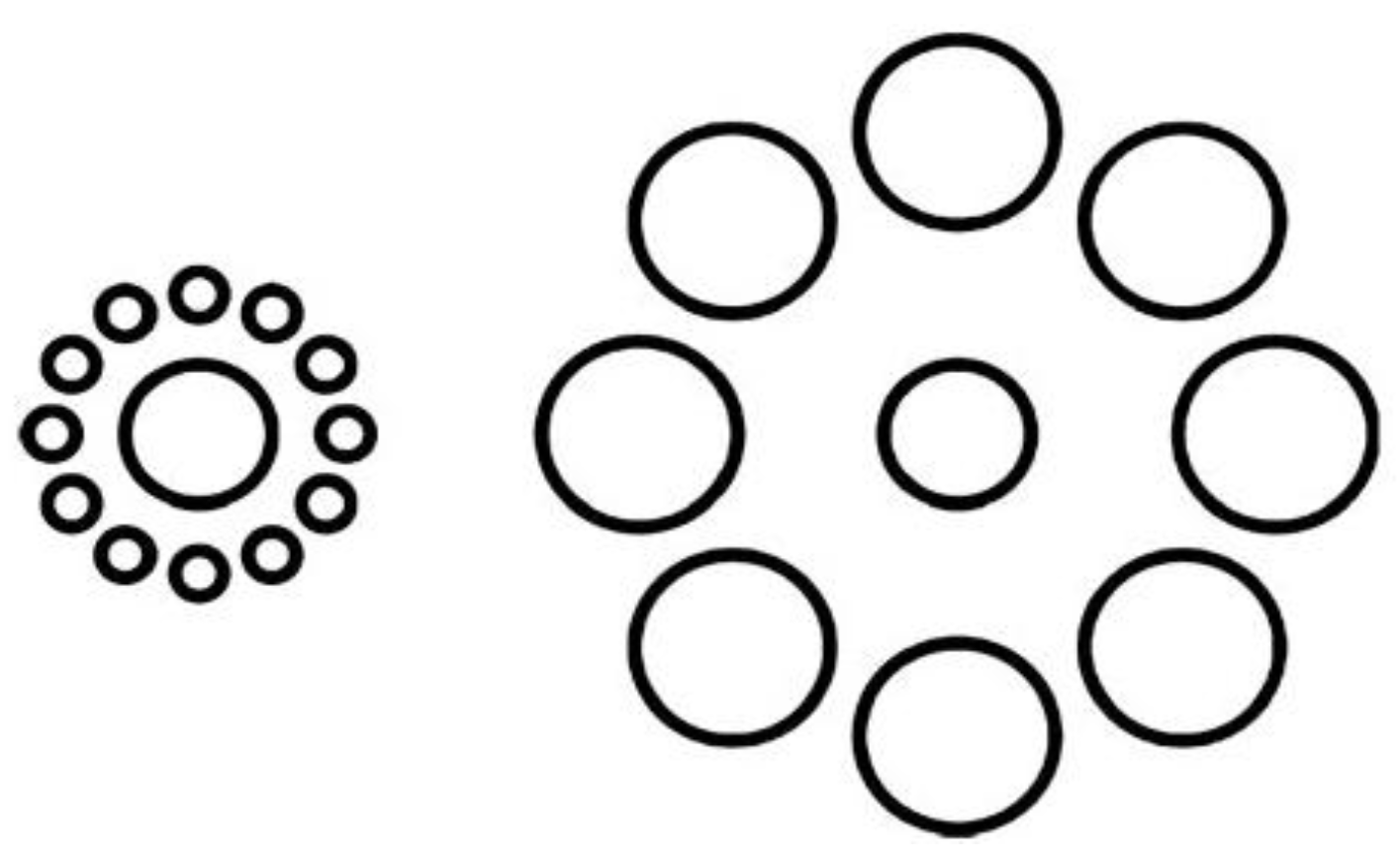

1.1. Common Coding Theory

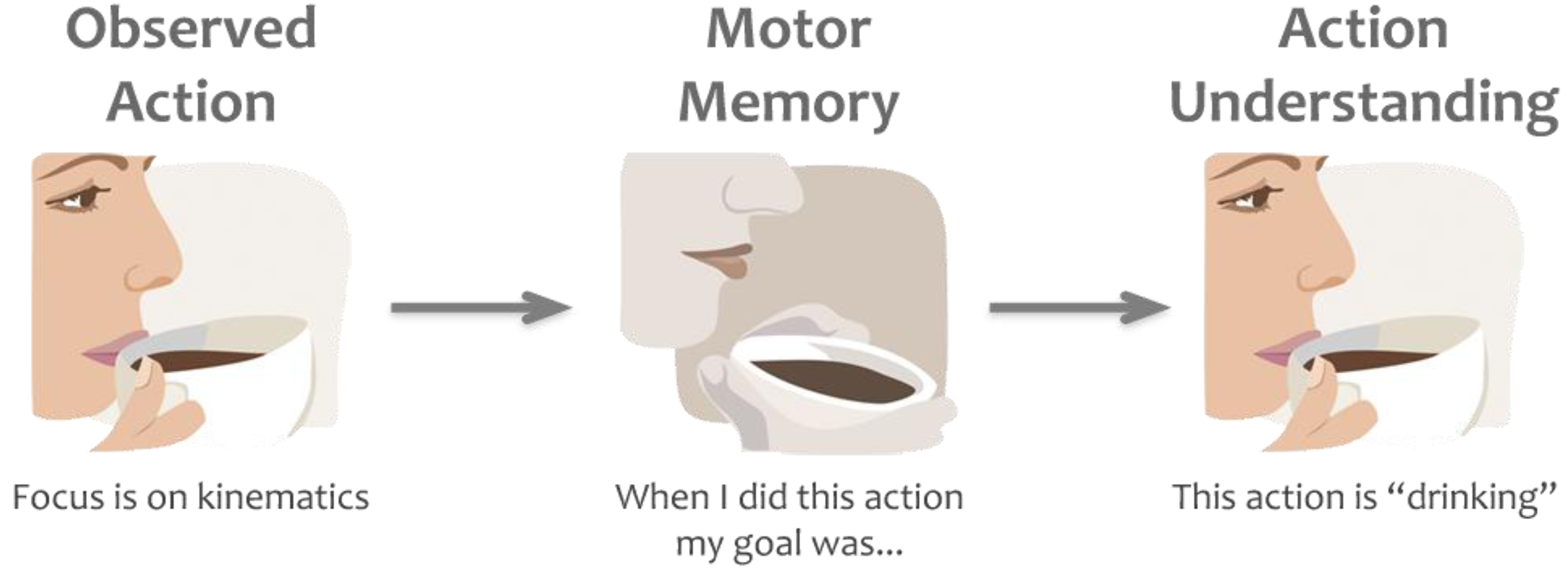

1.2. Direct Matching Hypothesis

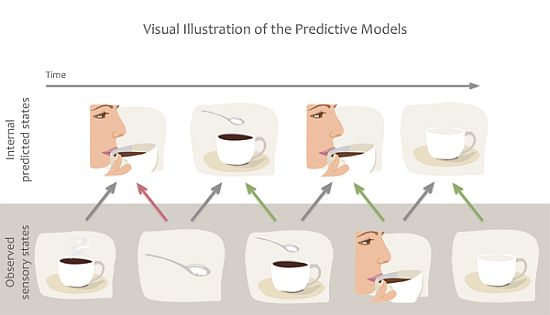

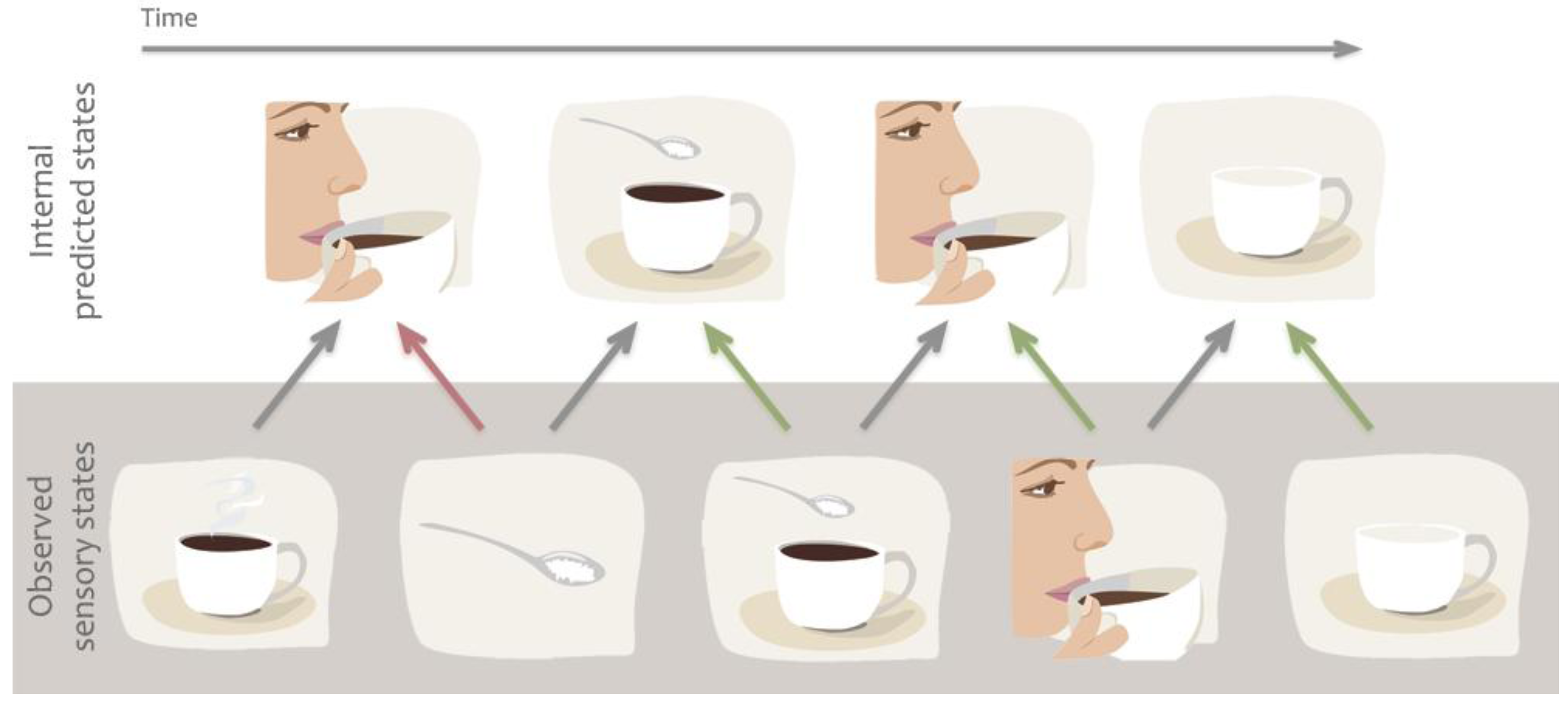

1.3. Predictive Models

2. Actions Influencing Perception

2.1. Effects of Long-Term Changes in the Motor System on Perception

2.1.1. Motor Disorders

2.1.2. Motor Expertise

2.2. Effects of Planned, Intended, or Executed Actions on Perception

2.2.1. Facilitatory Effects

2.2.2. Twisted Illusions

2.2.3. Action-Affected Blindness

2.2.4. When Similar Repels and Different Attracts

2.2.5. Dynamic Systems, Complex Interactions

3. The Case for Predictive Models

| Sensory Information Compared to Motor Plan | Reported Perceptual Effect |
|---|---|
| Same | sensory information does not reach consciousness (“action-blindness effect”) |
| Similar | slow detection of stimuli, somewhat biased towards motor plan |
| Ambiguous | perception biased towards the direction of the motor plan |
| Different | quick detection of stimuli, no bias towards motor plans |
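
The mapping summarised in the table can be read as a simple lookup from the relation between sensory information and motor plan to the reported perceptual effect. The sketch below is only an illustration of that reading: the effect descriptions are copied from the table, while the names `PERCEPTUAL_EFFECT` and `reported_effect` are hypothetical and do not come from the article.

```python
# Illustrative lookup only. The four relations and their effects are taken
# from the table; the identifiers used here are assumptions for this sketch.
PERCEPTUAL_EFFECT = {
    "same": "sensory information does not reach consciousness (action-blindness effect)",
    "similar": "slow detection of stimuli, somewhat biased towards motor plan",
    "ambiguous": "perception biased towards the direction of the motor plan",
    "different": "quick detection of stimuli, no bias towards motor plans",
}


def reported_effect(relation: str) -> str:
    """Return the reported perceptual effect for a stimulus/motor-plan relation."""
    key = relation.strip().lower()
    if key not in PERCEPTUAL_EFFECT:
        raise ValueError(f"unknown relation: {relation!r}")
    return PERCEPTUAL_EFFECT[key]


if __name__ == "__main__":
    # Print every row of the table in its original order.
    for relation in ("Same", "Similar", "Ambiguous", "Different"):
        print(f"{relation}: {reported_effect(relation)}")
```

The categories are treated as discrete labels here purely for readability; the table itself does not say where the boundary between, for example, "similar" and "ambiguous" lies.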

4. Conclusion

Acknowledgments


© 2012 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Halász, V.; Cunnington, R. Unconscious Effects of Action on Perception. Brain Sci. 2012, 2, 130-146. https://doi.org/10.3390/brainsci2020130
