Image Dehazing and Enhancement Using Principal Component Analysis and Modified Haze Features

Abstract

1. Introduction

2. Theoretical Background

Degradation Model of a Haze Image
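The degradation model referred to here is, in the dehazing work this paper builds on (e.g. [1]), the atmospheric scattering model I(x) = J(x)t(x) + A(1 − t(x)) with t(x) = exp(−βd(x)). I is the observed hazy image, J the haze-free scene radiance, A the atmospheric light, t the transmission, β the scattering coefficient and d the scene depth. The sketch below applies the model and its inverse to a single pixel. Pixels are assumed to be (r, g, b) tuples in [0, 1]. The function names are illustrative, and the lower bound t0 = 0.1 follows [1] rather than anything stated for this paper.

```python
import math
from typing import Tuple

Pixel = Tuple[float, float, float]


def transmission_from_depth(depth: float, beta: float) -> float:
    """t(x) = exp(-beta * d(x)): fraction of scene radiance that reaches the camera."""
    return math.exp(-beta * depth)


def add_haze(J: Pixel, t: float, A: Pixel) -> Pixel:
    """Forward model I = J * t + A * (1 - t), applied to each color channel."""
    return tuple(j * t + a * (1.0 - t) for j, a in zip(J, A))


def remove_haze(I: Pixel, t: float, A: Pixel, t0: float = 0.1) -> Pixel:
    """Inverse model J = (I - A) / max(t, t0) + A.

    Clamping t at t0 keeps dense-haze pixels (t close to 0) from amplifying noise.
    """
    t_eff = max(t, t0)
    return tuple((i - a) / t_eff + a for i, a in zip(I, A))


# Usage: haze a pixel at depth 2 with beta = 0.5, then undo it.
J = (0.2, 0.4, 0.6)
A = (0.9, 0.9, 0.9)
t = transmission_from_depth(2.0, 0.5)   # exp(-1), about 0.368
I = add_haze(J, t, A)
print(remove_haze(I, t, A))             # approximately (0.2, 0.4, 0.6)
```

Because t > t0 in this example, the inverse recovers J up to rounding. Once t falls below t0, the clamp trades exact recovery for stability.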

3. Haze Removal Using PCA and Haze-Relevant Features

3.1. Estimation of the Atmospheric Light Using PCA

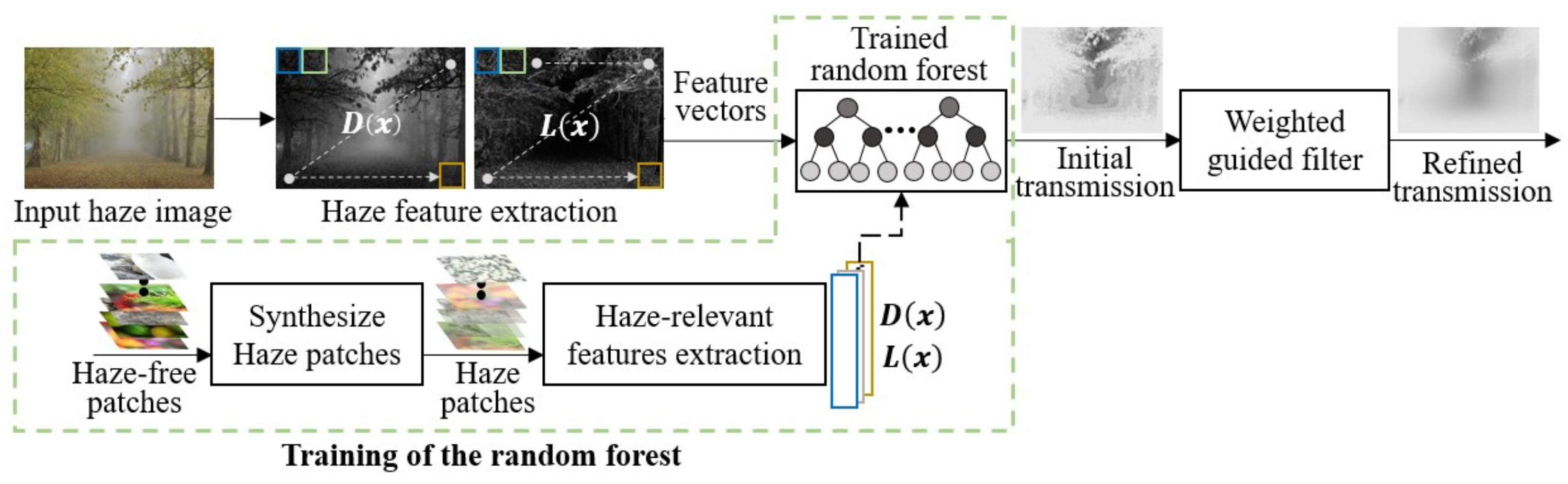

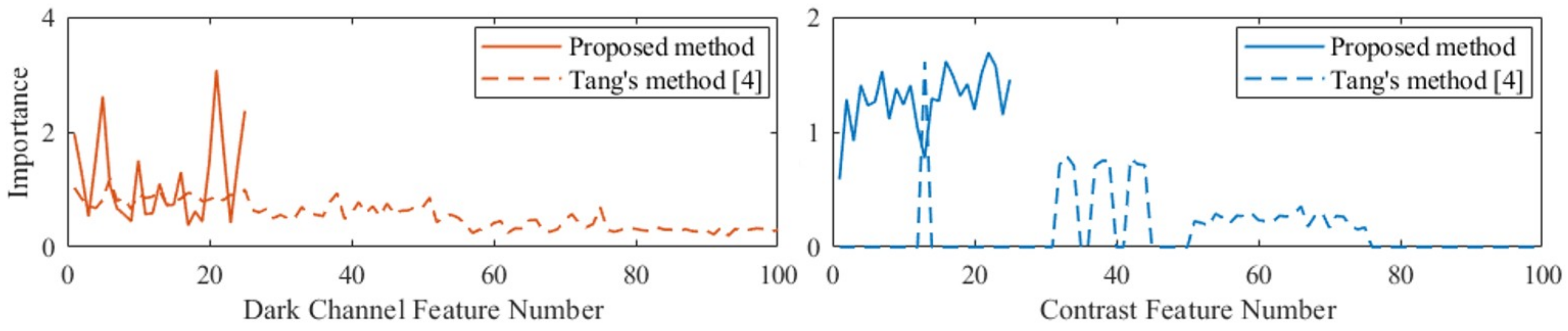
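The text describing how PCA yields the atmospheric light did not survive extraction, so the sketch below illustrates only the PCA step itself. It computes the first principal component of a set of RGB samples by power iteration on their 3 × 3 covariance matrix. The samples could be, for instance, pixels from a candidate hazy region. How the authors select those samples and how they turn the principal axis into the final estimate of A are not reproduced. `principal_axis` and `covariance3` are hypothetical helpers, not the paper's code.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def covariance3(samples: Sequence[Vec3]) -> List[List[float]]:
    """3x3 sample covariance of RGB vectors (normalized by N)."""
    n = len(samples)
    mean = [sum(s[c] for s in samples) / n for c in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for s in samples:
        d = [s[c] - mean[c] for c in range(3)]
        for r in range(3):
            for c in range(3):
                cov[r][c] += d[r] * d[c] / n
    return cov


def principal_axis(samples: Sequence[Vec3], iters: int = 100) -> Vec3:
    """First principal component (unit vector) of the samples via power iteration."""
    cov = covariance3(samples)
    v = [0.6, 0.7, 0.8]  # arbitrary start vector; must not be orthogonal to the answer
    for _ in range(iters):
        w = [sum(cov[r][c] * v[c] for c in range(3)) for r in range(3)]
        norm = sum(x * x for x in w) ** 0.5
        if norm == 0.0:
            raise ValueError("samples have zero variance; no principal axis")
        v = [x / norm for x in w]
    # Eigenvectors are defined only up to sign; orient toward positive intensities.
    if sum(v) < 0:
        v = [-x for x in v]
    return (v[0], v[1], v[2])


# Usage: samples spread along the gray axis give a gray principal direction.
samples = [(0.1 * k, 0.1 * k, 0.1 * k) for k in range(10)]
print(principal_axis(samples))  # each component is about 0.577 (= 1/sqrt(3))
```

Power iteration is used here only to keep the sketch dependency-free. With NumPy, `numpy.linalg.eigh` on the covariance matrix would be the usual choice.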

3.2. Transmission Estimation Using Random Forest
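The learning framework of [4] regresses transmission from haze-relevant patch features, including the dark channel and the maximum saturation. Tang et al. also use maximum contrast and hue disparity. The "modified haze features" of this paper are not described in the surviving text. The sketch below therefore computes only two of the original features from [4] for one patch of (r, g, b) pixels in [0, 1]. The helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]


def dark_channel(patch: Sequence[Pixel]) -> float:
    """Minimum over the patch of the minimum color channel (the dark channel of [1])."""
    return min(min(p) for p in patch)


def max_saturation(patch: Sequence[Pixel]) -> float:
    """Maximum HSV-style saturation 1 - min/max over the patch; haze lowers it."""
    def sat(p: Pixel) -> float:
        hi = max(p)
        return 0.0 if hi == 0.0 else 1.0 - min(p) / hi
    return max(sat(p) for p in patch)


def haze_features(patch: Sequence[Pixel]) -> List[float]:
    """Feature vector for one patch; a trained regressor maps it to a transmission t."""
    return [dark_channel(patch), max_saturation(patch)]


# Usage: the same patch with and without haze (t = 0.3, white atmospheric light).
clear = [(0.1, 0.5, 0.9), (0.2, 0.6, 0.3)]
hazy = [tuple(c * 0.3 + 0.7 for c in p) for p in clear]
print(haze_features(clear))  # low dark channel, high saturation
print(haze_features(hazy))   # higher dark channel, lower saturation
```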

3.3. Training the Random Forest

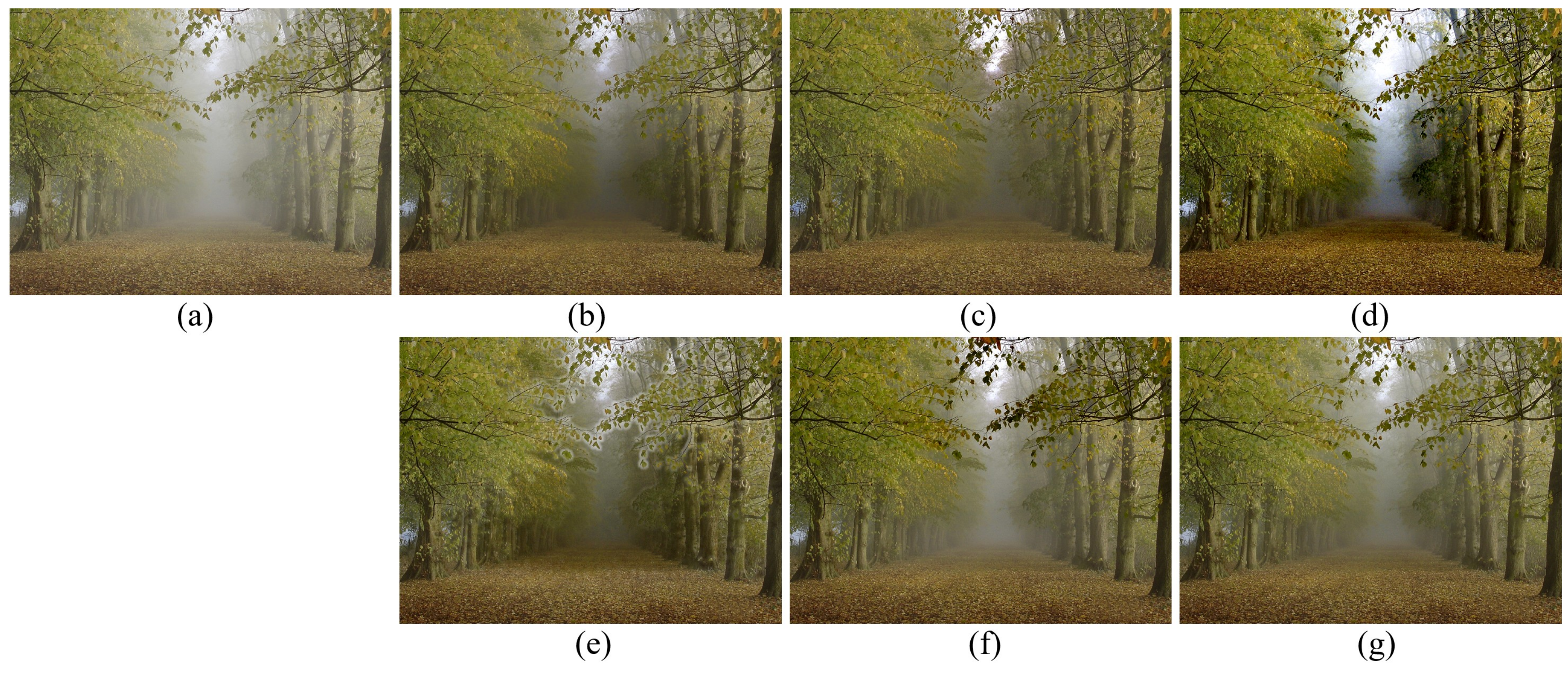
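Reference [4] trains its random forest on synthetic data. Haze-free patches are hazed with the degradation model, assuming white atmospheric light and a random transmission t per patch. The features of the hazy patch become the inputs and t becomes the regression target. The sketch below generates such (hazy patch, t) pairs. It assumes the synthesis strategy of [4]; whether this paper modifies that strategy is not stated in the surviving text. `synthesize_hazy_patch` and `make_training_set` are hypothetical names.

```python
import random
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]
Patch = List[Pixel]


def synthesize_hazy_patch(clear: Sequence[Pixel], t: float,
                          A: Pixel = (1.0, 1.0, 1.0)) -> Patch:
    """Apply I = J * t + A * (1 - t) with one constant t over the whole patch."""
    return [tuple(j * t + a * (1.0 - t) for j, a in zip(p, A)) for p in clear]


def make_training_set(clear_patches: Sequence[Sequence[Pixel]],
                      samples_per_patch: int,
                      seed: int = 0) -> List[Tuple[Patch, float]]:
    """Return (hazy_patch, t) pairs, with t drawn uniformly from [0, 1)."""
    rng = random.Random(seed)  # fixed seed gives a reproducible training set
    pairs = []
    for clear in clear_patches:
        for _ in range(samples_per_patch):
            t = rng.random()
            pairs.append((synthesize_hazy_patch(clear, t), t))
    return pairs


# Usage: four hazy variants of one two-pixel patch.
clear_patches = [[(0.1, 0.5, 0.9), (0.2, 0.6, 0.3)]]
pairs = make_training_set(clear_patches, samples_per_patch=4, seed=1)
print([round(t, 3) for _, t in pairs])
```

Feature vectors computed from each hazy patch, paired with t, would then be used to fit a forest. In scikit-learn that is `sklearn.ensemble.RandomForestRegressor`, whose `n_estimators` parameter sets the number of trees, the quantity varied (200, 100, 50) in the MSE comparison for Figure 12.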

4. Experimental Results

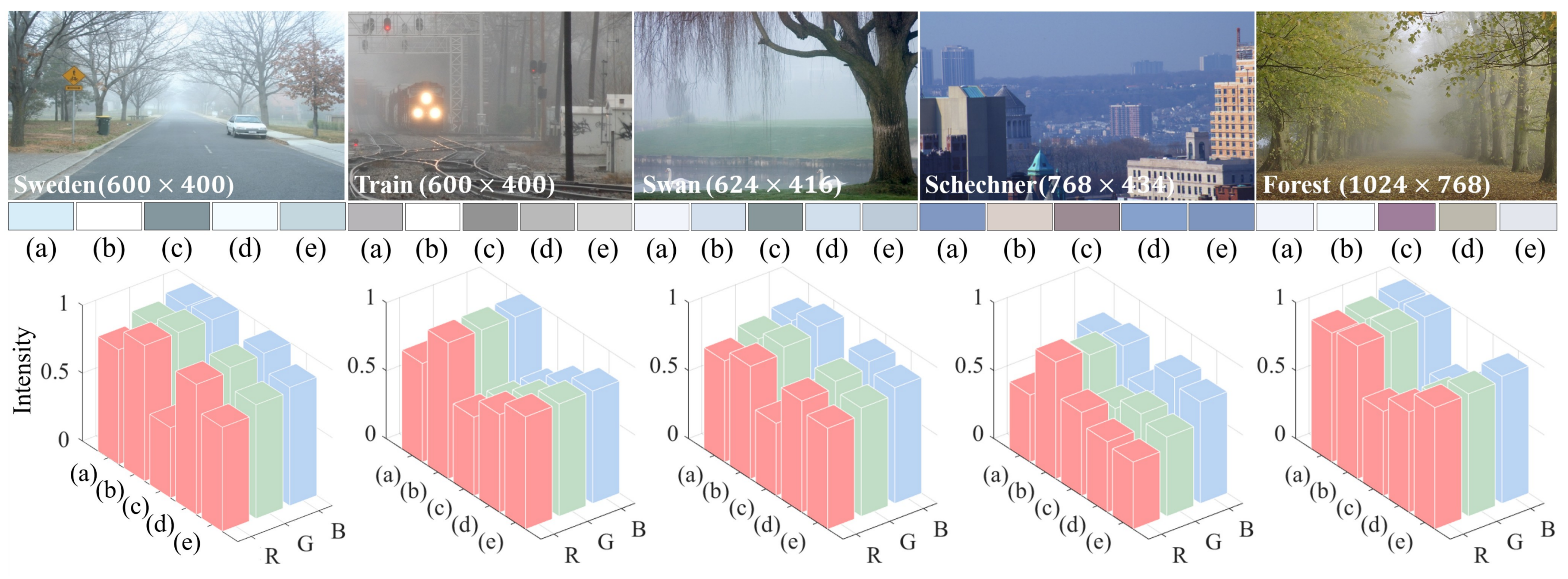

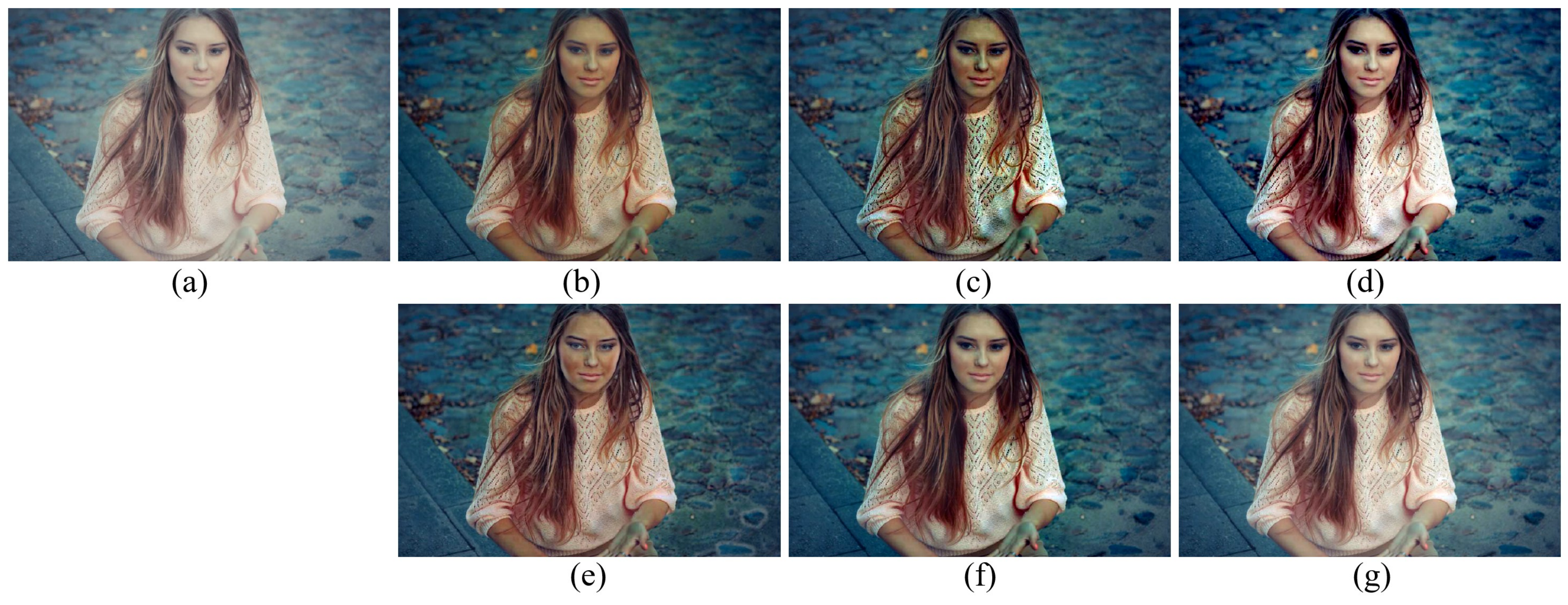

4.1. Comparison of the Estimated Atmospheric Light

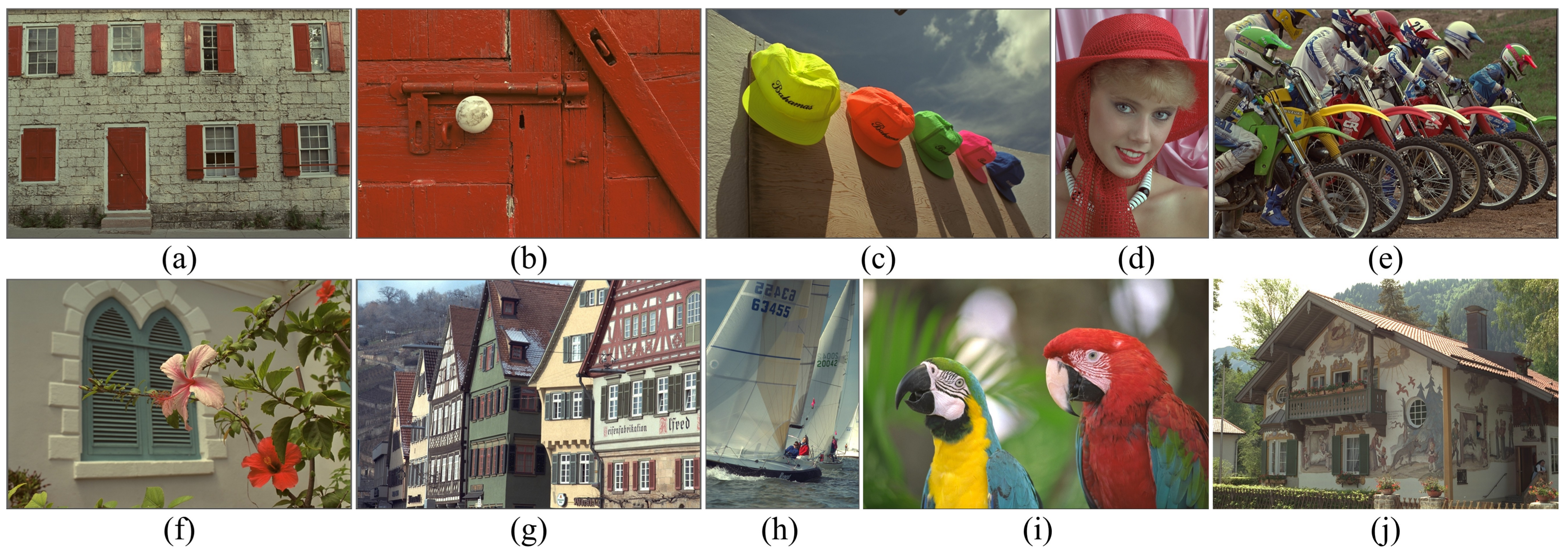

4.2. Objective Assessments

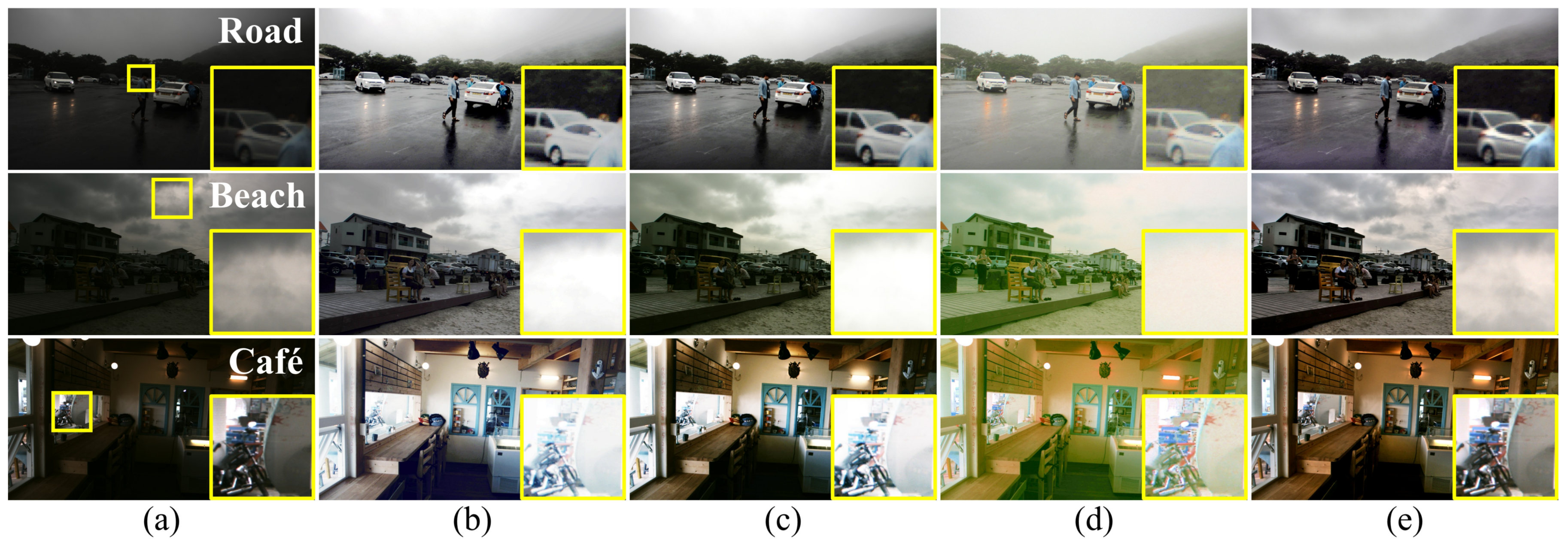
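The objective comparisons below report MSE (for the Figure 12 images) and PSNR and SSIM (for the Figure 13 images). The sketch gives the standard MSE and PSNR definitions on flattened images. The surviving text does not say whether intensities were scaled to [0, 1] or [0, 255], so the peak value is a parameter. SSIM [28] relies on local windowed statistics and is not reproduced here.

```python
import math
from typing import Sequence


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared error between two flattened images of equal size."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def psnr(a: Sequence[float], b: Sequence[float], peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    err = mse(a, b)
    if err == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / err)


# Usage: a uniform error of 0.1 on a [0, 1] scale.
ref = [0.5, 0.5, 0.5, 0.5]
out = [0.6, 0.6, 0.6, 0.6]
print(mse(ref, out))   # about 0.01
print(psnr(ref, out))  # about 20.0 dB for a peak of 1.0
```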

4.3. Application to Low-Light Image Enhancement
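Low-light enhancement in [24] relies on the observation that an inverted low-light image resembles a hazy image. The image is inverted, dehazed, and inverted back: R = 1 − dehaze(1 − L). The sketch below assumes this section follows the same inversion strategy, which the surviving text does not confirm. `toy_dehaze` is a hypothetical stand-in that inverts the degradation model with a constant t and white atmospheric light; any real dehazing function with the same signature could replace it.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[float, float, float]
Image = List[Pixel]


def enhance_low_light(img: Image, dehaze: Callable[[Image], Image]) -> Image:
    """Invert, dehaze, invert back: R = 1 - dehaze(1 - L), clamped to [0, 1]."""
    inverted = [tuple(1.0 - c for c in p) for p in img]
    restored = dehaze(inverted)
    # Clamp because dehazing can push values outside the valid range.
    return [tuple(min(1.0, max(0.0, 1.0 - c)) for c in p) for p in restored]


def toy_dehaze(img: Image, t: float = 0.5, A: float = 1.0) -> Image:
    """Hypothetical dehazer: J = (I - A) / t + A with constant t and white airlight."""
    return [tuple((c - A) / t + A for c in p) for p in img]


# Usage: two dark pixels.
low = [(0.1, 0.2, 0.3), (0.05, 0.6, 0.0)]
print(enhance_low_light(low, toy_dehaze))  # channels roughly doubled, capped at 1.0
```

With a constant t and A = 1, the whole pipeline reduces to a gain of 1/t on the original image. The benefit of the inversion approach therefore comes from a spatially varying transmission estimate, such as the one described in Section 3.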

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

2. Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

3. Ancuti, O.; Ancuti, C.; Hermans, C.; Bekaert, P. A fast semi-inverse approach to detect and remove the haze from a single image. In Proceedings of the Asian Conference on Computer Vision, Queenstown, New Zealand, 8–12 November 2010; pp. 501–514.

4. Tang, K.; Yang, J.; Wang, J. Investigating haze-relevant features in a learning framework for image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 2995–3002.

5. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

6. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 154–169.

7. Fattal, R. Dehazing using color-lines. ACM Trans. Graph. 2014, 34, 13.

8. Berman, D.; Treibitz, T.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

9. Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE International Conference on Computer Vision, Darling Harbour, Sydney, Australia, 3–6 December 2013; pp. 617–624.

10. He, J.; Zhang, C.; Yang, R.; Zhu, K. Convex optimization for fast image dehazing. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; pp. 2246–2250.

11. Sulami, M.; Glatzer, I.; Fattal, R.; Werman, M. Automatic recovery of the atmospheric light in hazy images. In Proceedings of the IEEE International Conference on Computational Photography, Santa Clara, CA, USA, 2–4 May 2014; pp. 1–11.

12. Yoon, I.; Kim, S.; Kim, D.; Hayes, M.H.; Paik, J. Adaptive defogging with color correction in the HSV color space for consumer surveillance system. IEEE Trans. Consum. Electron. 2012, 58, 111–116.

13. Kim, W.; Bae, H.; Kim, T. Fast and efficient haze removal. In Proceedings of the IEEE International Conference on Consumer Electronics, Las Vegas, NV, USA, 9–12 January 2015; pp. 360–361.

14. Kim, H.; Park, J.; Park, H.; Paik, J. Iterative refinement of transmission map for stereo image defogging. In Proceedings of the IEEE International Conference on Consumer Electronics, Las Vegas, NV, USA, 8–11 January 2017; pp. 296–297.

15. Berman, D.; Treibitz, T.; Avidan, S. Air-light estimation using haze-lines. In Proceedings of the IEEE International Conference on Computational Photography, Palo Alto, CA, USA, 12–14 May 2017; pp. 1–9.

16. Liu, P.J.; Horng, S.J.; Lin, J.S.; Li, T. Contrast in haze removal: Configurable contrast enhancement model based on dark channel prior. IEEE Trans. Image Process. 2018.

17. Li, Z.; Zheng, Z.; Zhu, Z.; Yao, W.; Wu, S. Weighted guided image filtering. IEEE Trans. Image Process. 2015, 24, 120–129.

18. Kim, K.; Kim, S.; Sohn, K. Lazy dragging: Effortless bounding-box drawing for touch-screen devices. IEEE Trans. Consum. Electron. 2017, 63, 93–100.

19. Unsplash. Available online: https://unsplash.com/ (accessed on 31 July 2018).

20. Bahat, Y.; Irani, M. Blind dehazing using internal patch recurrence. In Proceedings of the IEEE International Conference on Computational Photography, Evanston, IL, USA, 13–15 May 2016; pp. 1–9.

21. Hautiere, N.; Tarel, J.P.; Aubert, D.; Dumont, E. Blind contrast enhancement assessment by gradient ratioing at visible edges. Image Anal. Stereol. 2011, 27, 87–95.

22. Ancuti, O.; Ancuti, C. Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 2013, 22, 3271–3282.

23. Zhang, Y.; Ding, L.; Sharma, G. HazeRD: An outdoor scene dataset and benchmark for single image dehazing. In Proceedings of the IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 3205–3209.

24. Dong, X.; Wang, G.; Pang, Y.; Li, W.; Wen, J.; Meng, W.; Lu, Y. Fast efficient algorithm for enhancement of low lighting video. In Proceedings of the IEEE International Conference on Multimedia and Expo, Barcelona, Spain, 11–15 July 2011; pp. 1–6.

25. Jiang, X.; Yao, H.; Zhang, S.; Lu, X.; Zeng, W. Night video enhancement using improved dark channel prior. In Proceedings of the IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2013; pp. 553–557.

26. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice-Hall: Upper Saddle River, NJ, USA, 2007.

27. Huang, S.C.; Cheng, F.C.; Chiu, Y.S. Efficient contrast enhancement using adaptive gamma correction with weighting distribution. IEEE Trans. Image Process. 2013, 22, 1032–1041.

28. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

29. Kodak Lossless True Color Image Suite. Available online: http://r0k.us/graphics/kodak/ (accessed on 31 July 2018).

30. Gu, K.; Zhai, G.; Lin, W.; Yang, X.; Zhang, W. No-reference image sharpness assessment in autoregressive parameter space. IEEE Trans. Image Process. 2015, 24, 3218–3231.

| Image | [1] Error | [1] Time | [11] Error | [11] Time | [15] Error | [15] Time | Proposed Error | Proposed Time | Proposed Time (Parallel) |
|---|---|---|---|---|---|---|---|---|---|
| Sweden | 0.24 | 0.85 | 1.03 | 8.41 | 0.19 | 4.40 | 0.28 | 0.18 | 0.15 |
| Train | 0.82 | 0.67 | 0.45 | 5.43 | 0.03 | 2.25 | 0.30 | 0.34 | 0.29 |
| Swan | 0.23 | 1.72 | 0.68 | 8.36 | 0.21 | 5.10 | 0.02 | 0.22 | 0.21 |
| Schechner | 0.65 | 0.96 | 0.32 | 11.44 | 0.14 | 4.26 | 0.01 | 2.61 | 1.56 |
| Forest | 0.07 | 1.63 | 1.17 | 14.16 | 0.74 | 5.49 | 0.16 | 1.50 | 0.93 |
| Avg. | 0.40 | 1.17 | 0.73 | 9.56 | 0.26 | 4.30 | 0.15 | 0.97 | 0.63 |

| Image | [1] | [1] | [9] | [9] | [8] | [8] | [2] | [2] | [6] | [6] | Proposed | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Figure 8a | 0.0000 | 1.0870 | 0.1848 | 1.5752 | 1.8338 | 1.7643 | 0.0011 | 1.4817 | 0.0335 | 1.2680 | 0.0095 | 1.1960 |
| Figure 8b | 0.1664 | 1.6934 | 1.1730 | 2.1021 | 0.2565 | 2.4137 | 0.1468 | 1.7906 | 0.1694 | 1.5785 | 0.2161 | 1.4357 |
| Figure 8c | 0.0451 | 1.3218 | 0.2335 | 2.1207 | 6.8876 | 2.3389 | 0.4164 | 1.5604 | 0.7551 | 1.2884 | 0.3001 | 1.2475 |
| Figure 8d | 0.0000 | 1.1548 | 0.0000 | 1.5989 | 0.1561 | 2.1856 | 0.0000 | 1.3821 | 0.0000 | 1.3001 | 0.0000 | 1.2380 |
| Figure 8e | 0.0168 | 1.6015 | 0.1468 | 2.1761 | 0.2651 | 2.1589 | 0.0485 | 1.7905 | 0.1226 | 1.7027 | 0.1152 | 1.4676 |
| Figure 8f | 0.1256 | 2.2194 | 0.0487 | 2.6322 | 0.0563 | 2.3590 | 0.8070 | 1.9389 | 0.0019 | 1.8372 | 0.4088 | 1.6709 |
| Figure 8g | 0.0285 | 1.0251 | 0.5099 | 1.3173 | 2.0646 | 1.9343 | 0.0801 | 1.2948 | 0.5049 | 1.1967 | 0.2038 | 1.1557 |
| Figure 8h | 0.0003 | 2.6491 | 0.0366 | 3.6266 | 0.1915 | 5.0999 | 0.0000 | 1.7928 | 0.0000 | 1.7705 | 0.0000 | 1.5342 |
| Figure 8i | 0.0000 | 1.2353 | 0.0293 | 2.3817 | 0.3793 | 2.0831 | 0.1233 | 1.3019 | 0.1233 | 1.3019 | 0.0459 | 1.2112 |
| Figure 8j | 0.3083 | 1.1934 | 0.2971 | 1.5665 | 4.7788 | 2.8473 | 54.6675 | 1.6899 | 0.2363 | 1.1427 | 0.2992 | 1.3260 |
| Avg. | 0.0691 | 1.5181 | 0.1660 | 2.1097 | 1.6870 | 2.5185 | 0.7291 | 1.6112 | 0.1947 | 1.4387 | 0.1599 | 1.3469 |

| Image | Metric | [4], 200 trees | Proposed, 200 trees | Proposed, 100 trees | Proposed, 50 trees |
|---|---|---|---|---|---|
| Figure 12a | MSE | 0.0600 | 0.0313 | 0.0316 | 0.0324 |
| Figure 12b | MSE | 0.0500 | 0.0428 | 0.0428 | 0.0430 |
| Figure 12c | MSE | 0.0780 | 0.0314 | 0.0316 | 0.0320 |
| Figure 12d | MSE | 0.0693 | 0.0245 | 0.0241 | 0.0252 |
| Figure 12e | MSE | 0.0612 | 0.0384 | 0.0390 | 0.0390 |

| Image | [26] PSNR (dB) | [26] SSIM | [27] PSNR (dB) | [27] SSIM | [25] PSNR (dB) | [25] SSIM | Proposed PSNR (dB) | Proposed SSIM |
|---|---|---|---|---|---|---|---|---|
| Figure 13a | 14.9340 | 0.6473 | 15.2250 | 0.6990 | 13.7155 | 0.7044 | 17.9683 | 0.8122 |
| Figure 13b | 8.9670 | 0.1310 | 12.7210 | 0.5678 | 15.0965 | 0.8063 | 18.1877 | 0.8205 |
| Figure 13c | 14.2907 | 0.4049 | 15.4260 | 0.5586 | 12.9453 | 0.6143 | 18.1130 | 0.5971 |
| Figure 13d | 13.7084 | 0.2131 | 15.7105 | 0.5963 | 14.2142 | 0.6855 | 16.7344 | 0.6968 |
| Figure 13e | 12.8507 | 0.6044 | 15.6484 | 0.6960 | 14.0895 | 0.6400 | 17.5864 | 0.6429 |
| Figure 13f | 14.4912 | 0.3775 | 14.8379 | 0.5650 | 12.6677 | 0.6375 | 19.3865 | 0.7534 |
| Figure 13g | 20.9754 | 0.8128 | 19.0706 | 0.7980 | 14.4191 | 0.7019 | 16.8107 | 0.7687 |
| Figure 13h | 15.6074 | 0.5902 | 15.8949 | 0.6559 | 12.0559 | 0.6022 | 14.5077 | 0.6213 |
| Figure 13i | 15.0136 | 0.3879 | 17.0851 | 0.5924 | 13.9160 | 0.6787 | 17.8701 | 0.7628 |
| Figure 13j | 15.5462 | 0.5564 | 16.7592 | 0.6522 | 12.7164 | 0.5974 | 18.2142 | 0.7238 |
| Avg. | 14.6384 | 0.4725 | 15.8379 | 0.6381 | 13.8536 | 0.6668 | 17.5379 | 0.7200 |

| ARISM | Input | [26] | [27] | [25] | Proposed |
|---|---|---|---|---|---|
| Road | 3.0858 | 5.0867 | 4.5993 | 4.0872 | 4.8857 |
| Beach | 3.0861 | 4.1843 | 3.4659 | 3.4488 | 4.0736 |
| Café | 3.0882 | 4.5606 | 3.9947 | 4.1014 | 4.2017 |
| Avg. | 3.0867 | 4.6105 | 4.0200 | 3.8791 | 4.3870 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Kim, M.; Yu, S.; Park, S.; Lee, S.; Paik, J. Image Dehazing and Enhancement Using Principal Component Analysis and Modified Haze Features. Appl. Sci. 2018, 8, 1321. https://doi.org/10.3390/app8081321
