Effect of the Temporal Gradient of Vegetation Indices on Early-Season Wheat Classification Using the Random Forest Classifier

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Data

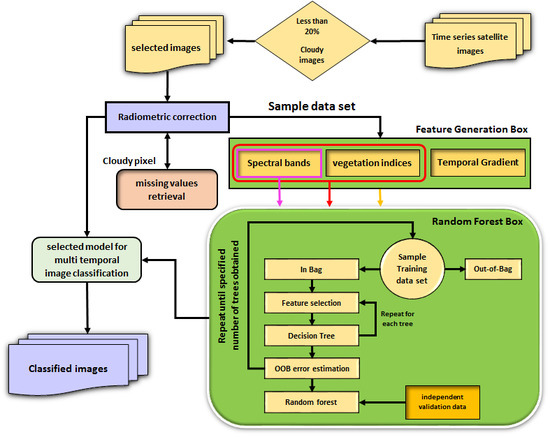

2.2. Methodology

3. Results and Discussion

3.1. Experiment 1: Optimum Training Sample Size

3.2. Experiment 2: Optimum Forest Size

3.3. Experiment 3: The Effect of Missing Data in the Training Data

3.4. Experiment 4: Appropriate Image Acquisition Times and Temporal Resolution

3.5. Experiment 5: The Effect of VIs and Their Gradient

3.6. Experiment 6: The Effect of the Number of Classes

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Pretty, J.; Robinson, S.; Thomas, S.M.; Toulmin, C. Food security: The challenge of feeding 9 billion people. Science 2010, 327, 812–818.

- Padilla, F.; Maas, S.; González-Dugo, M.; Mansilla, F.; Rajan, N.; Gavilán, P.; Domínguez, J. Monitoring regional wheat yield in Southern Spain using the GRAMI model and satellite imagery. Field Crops Res. 2012, 130, 145–154.

- Allen, R.; Hanuschak, G.; Craig, M. History of Remote Sensing for Crop Acreage in USDA’s National Agricultural Statistics Service; FAO: Rome, Italy, 2002.

- Pan, Y.; Li, L.; Zhang, J.; Liang, S.; Zhu, X.; Sulla-Menashe, D. Winter wheat area estimation from MODIS-EVI time series data using the crop proportion phenology index. Remote Sens. Environ. 2012, 119, 232–242.

- Ghamisi, P.; Plaza, J.; Chen, Y.; Li, J.; Plaza, A. Advanced supervised spectral classifiers for hyperspectral images: A review. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–32.

- Vieira, C.; Mather, P.; McCullagh, M. The spectral-temporal response surface and its use in the multi-sensor, multi-temporal classification of agricultural crops. Int. Arch. Photogr. Remote Sens. 2000, 33, 582–589.

- Ghaffari, O.; Zoej, M.J.V.; Mokhtarzade, M. Reducing the effect of the endmembers’ spectral variability by selecting the optimal spectral bands. Remote Sens. 2017, 9, 884.

- Wardlow, B.D.; Egbert, S.L.; Kastens, J.H. Analysis of time-series MODIS 250 m vegetation index data for crop classification in the US Central Great Plains. Remote Sens. Environ. 2007, 108, 290–310.

- Cammarano, D.; Fitzgerald, G.J.; Casa, R.; Basso, B. Assessing the robustness of vegetation indices to estimate wheat N in Mediterranean environments. Remote Sens. 2014, 6, 2827–2844.

- Carrão, H.; Gonçalves, P.; Caetano, M. Contribution of multispectral and multitemporal information from MODIS images to land cover classification. Remote Sens. Environ. 2008, 112, 986–997.

- Chen, J.; Lu, M.; Chen, X.; Chen, J.; Chen, L. A spectral gradient difference based approach for land cover change detection. ISPRS J. Photogr. Remote Sens. 2013, 85, 1–12.

- De Colstoun, E.C.B.; Story, M.H.; Thompson, C.; Commisso, K.; Smith, T.G.; Irons, J.R. National park vegetation mapping using multitemporal Landsat 7 data and a decision tree classifier. Remote Sens. Environ. 2003, 85, 316–327.

- Langley, S.K.; Cheshire, H.M.; Humes, K.S. A comparison of single date and multitemporal satellite image classifications in a semi-arid grassland. J. Arid Environ. 2001, 49, 401–411.

- Zheng, B.; Myint, S.W.; Thenkabail, P.S.; Aggarwal, R.M. A support vector machine to identify irrigated crop types using time-series Landsat NDVI data. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 103–112.

- Bellón, B.; Bégué, A.; Lo Seen, D.; de Almeida, C.A.; Simões, M. A remote sensing approach for regional-scale mapping of agricultural land-use systems based on NDVI time series. Remote Sens. 2017, 9, 600.

- Lobell, D.B.; Asner, G.P. Cropland distributions from temporal unmixing of MODIS data. Remote Sens. Environ. 2004, 93, 412–422.

- Nitze, I.; Schulthess, U.; Asche, H. Comparison of machine learning algorithms random forest, artificial neural network and support vector machine to maximum likelihood for supervised crop type classification. In Proceedings of the 4th GEOBIA, Rio de Janeiro, Brazil, 7–9 May 2012; pp. 7–9.

- Lebourgeois, V.; Dupuy, S.; Vintrou, É.; Ameline, M.; Butler, S.; Bégué, A. A combined random forest and OBIA classification scheme for mapping smallholder agriculture at different nomenclature levels using multisource data (simulated Sentinel-2 time series, VHRS and DEM). Remote Sens. 2017, 9, 259.

- Chen, J.; Jönsson, P.; Tamura, M.; Gu, Z.; Matsushita, B.; Eklundh, L. A simple method for reconstructing a high-quality NDVI time-series data set based on the Savitzky–Golay filter. Remote Sens. Environ. 2004, 91, 332–344.

- Potgieter, A.; Apan, A.; Hammer, G.; Dunn, P. Early-season crop area estimates for winter crops in NE Australia using MODIS satellite imagery. ISPRS J. Photogr. Remote Sens. 2010, 65, 380–387.

- Du, P.; Xia, J.; Zhang, W.; Tan, K.; Liu, Y.; Liu, S. Multiple classifier system for remote sensing image classification: A review. Sensors 2012, 12, 4764–4792.

- Eisavi, V.; Homayouni, S.; Yazdi, A.M.; Alimohammadi, A. Land cover mapping based on random forest classification of multitemporal spectral and thermal images. Environ. Monit. Assess. 2015, 187, 1–14.

- Fletcher, R.S. Using vegetation indices as input into random forest for soybean and weed classification. Am. J. Plant Sci. 2016, 7, 2186.

- Millard, K.; Richardson, M. On the importance of training data sample selection in random forest image classification: A case study in peatland ecosystem mapping. Remote Sens. 2015, 7, 8489–8515.

- Pelletier, C.; Valero, S.; Inglada, J.; Champion, N.; Dedieu, G. Assessing the robustness of random forests to map land cover with high resolution satellite image time series over large areas. Remote Sens. Environ. 2016, 187, 156–168.

- Nitze, I.; Barrett, B.; Cawkwell, F. Temporal optimisation of image acquisition for land cover classification with random forest and MODIS time-series. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 136–146.

- Hao, P.; Zhan, Y.; Wang, L.; Niu, Z.; Shakir, M. Feature selection of time series MODIS data for early crop classification using random forest: A case study in Kansas, USA. Remote Sens. 2015, 7, 5347–5369.

- Inglada, J.; Arias, M.; Tardy, B.; Hagolle, O.; Valero, S.; Morin, D.; Dedieu, G.; Sepulcre, G.; Bontemps, S.; Defourny, P. Assessment of an operational system for crop type map production using high temporal and spatial resolution satellite optical imagery. Remote Sens. 2015, 7, 12356–12379.

- Liu, J.; Feng, Q.; Gong, J.; Zhou, J.; Liang, J.; Li, Y. Winter wheat mapping using a random forest classifier combined with multi-temporal and multi-sensor data. Int. J. Digit. Earth 2017, 11, 1–20.

- Khan, A.; Hansen, M.C.; Potapov, P.; Stehman, S.V.; Chatta, A.A. Landsat-based wheat mapping in the heterogeneous cropping system of Punjab, Pakistan. Int. J. Remote Sens. 2016, 37, 1391–1410.

- Inglada, J.; Vincent, A.; Arias, M.; Marais-Sicre, C. Improved early crop type identification by joint use of high temporal resolution SAR and optical image time series. Remote Sens. 2016, 8, 362.

- Richter, R.; Schläpfer, D. Atmospheric/Topographic Correction for Satellite Imagery, ATCOR-2/3 User Guide, version 8.3.1; ReSe Applications Schläpfer: Wil, Switzerland, 2014.

- Ahmadi, K.; Gholizadeh, H.; Ebad zade, H.; Hoseinpoor, R.; Hatami, F.; Fazli, B.; Kazemian, A.; Rafiee, M. Agricultural Statistics of Crops in 2013–2014 First Volume. Available online: http://www.maj.ir/dorsapax/userfiles/file/amar1007.pdf (accessed on 23 July 2018).

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite Symposium 1, Greenbelt, MD, USA, 10–14 December 1974.

- Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666.

- Ridao, E.; Conde, J.R.; Mínguez, M.I. Estimating fAPAR from nine vegetation indices for irrigated and nonirrigated faba bean and semileafless pea canopies. Remote Sens. Environ. 1998, 66, 87–100.

- Thenkabail, P.S.; Ward, A.D.; Lyon, J.G.; Merry, C.J. Thematic mapper vegetation indices for determining soybean and corn growth parameters. Photogramm. Eng. Remote Sens. 1994, 60, 437–442.

- Jafari, R.; Lewis, M.; Ostendorf, B. Evaluation of vegetation indices for assessing vegetation cover in southern arid lands in South Australia. Rangel. J. 2007, 29, 39–49.

- Liu, H.Q.; Huete, A. A feedback based modification of the NDVI to minimize canopy background and atmospheric noise. IEEE Trans. Geosci. Remote Sens. 1995, 33, 457–465.

- Qi, J.; Chehbouni, A.; Huete, A.; Kerr, Y.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

- Nemani, R.; Pierce, L.; Running, S.; Band, L. Forest ecosystem processes at the watershed scale: Sensitivity to remotely-sensed leaf area index estimates. Int. J. Remote Sens. 1993, 14, 2519–2534.

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309.

- Freund, Y.; Schapire, R.E. Experiments with a New Boosting Algorithm. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.51.6252&rep=rep1&type=pdf (accessed on 23 July 2018).

- Breiman, L. Out-of-Bag Estimation; CiteSeer: Technical Report 513; University of California, Department of Statistics: Berkeley, CA, USA, 1996.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Leistner, C.; Saffari, A.; Santner, J.; Bischof, H. Semi-supervised random forests. In Proceedings of the IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 27 September–4 October 2009; pp. 506–513.

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

- Wang, L.A.; Zhou, X.; Zhu, X.; Dong, Z.; Guo, W. Estimation of biomass in wheat using random forest regression algorithm and remote sensing data. Crop J. 2016, 4, 212–219.

| Image No. | Acquisition Date | Image No. | Acquisition Date |
|---|---|---|---|
| T1 | 11-JAN-15 | T6 | 17-APR-15 |
| T2 | 03-FEB-15 | T7 | 03-MAY-15 |
| T3 | 28-FEB-15 | T8 | 19-MAY-15 |
| T4 | 07-MAR-15 | T9 | 04-JUN-15 |
| T5 | 16-MAR-15 | T10 | 20-JUN-15 |

| Class | # of Training Fields | # of Test Fields | # of Training Pixels | # of Test Pixels |
|---|---|---|---|---|
| Wheat | 128 | 280 | 559 | 1265 |
| Barley | 15 | 51 | 93 | 307 |
| Other | 121 | 252 | 1419 | 3027 |

| VI No. | Index | Formula | Reference |
|---|---|---|---|
| 1 | Normalized Difference Vegetation Index | | [34] |
| 2 | Simple Ratio | | [35] |
| 3 | Stress-related Vegetation Index 1 | | [36] |
| 4 | Stress-related Vegetation Index 3 | | [37] |
| 5 | Stress-related Vegetation Index 4 | | [37,38] |
| 6 | Enhanced Vegetation Index | | [9,39] |
| 7 | Modified Soil Adjusted Vegetation Index | | [40] |
| 8 | Modified Normalized Difference Vegetation Index | | [41] |
| 9 | Soil Adjusted Vegetation Index | | [42] |
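The Formula column is empty in this copy of the table. As a hedged illustration only, the sketch below implements the widely published textbook forms of five of the listed indices (NDVI, SR, SAVI, MSAVI, EVI). The exact variants and coefficients used in the study cannot be recovered here, so the defaults (L = 0.5 for SAVI; G = 2.5, C1 = 6, C2 = 7.5, L = 1 for EVI) and the closed-form MSAVI expression are assumptions, as is the use of surface reflectance in [0, 1] as input. The stress-related indices and MNDVI are left out because their formulas are not given.

```python
import math


def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def simple_ratio(nir: float, red: float) -> float:
    """Simple Ratio (NIR / red)."""
    return nir / red


def savi(nir: float, red: float, soil_factor: float = 0.5) -> float:
    """Soil Adjusted Vegetation Index; soil_factor is the common L = 0.5 (assumed)."""
    return (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def msavi(nir: float, red: float) -> float:
    """Modified SAVI in its closed form (often labelled MSAVI2)."""
    return (2.0 * nir + 1.0 - math.sqrt((2.0 * nir + 1.0) ** 2 - 8.0 * (nir - red))) / 2.0


def evi(nir: float, red: float, blue: float,
        gain: float = 2.5, c1: float = 6.0, c2: float = 7.5, canopy: float = 1.0) -> float:
    """Enhanced Vegetation Index with the commonly quoted MODIS coefficients (assumed)."""
    return gain * (nir - red) / (nir + c1 * red - c2 * blue + canopy)


if __name__ == "__main__":
    # Hypothetical reflectances for a green wheat pixel (illustrative only).
    nir, red, blue = 0.5, 0.1, 0.05
    for name, value in [("NDVI", ndvi(nir, red)), ("SR", simple_ratio(nir, red)),
                        ("SAVI", savi(nir, red)), ("MSAVI", msavi(nir, red)),
                        ("EVI", evi(nir, red, blue))]:
        print(f"{name}: {value:.3f}")
```

Each index is computed per pixel and per acquisition date, so every image contributes one value per VI to the feature vector.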

| Experimental Setup | Tested Settings |
|---|---|
| Forest size (# of trees) | 10–1000 |
| Training sample size | 10–50% |
| Missing data | Yes, No |
| VIs | Yes, No |
| Temporal gradient of VIs and spectral bands | Yes, No |
| # of images | 1–10 |
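The training sample size is varied between 10% and 50% of the labelled data. A minimal sketch of drawing such a class-stratified training subset is given below. It assumes pixel-level sampling with a fixed random seed, which is not necessarily the study's scheme (it may, for instance, have sampled whole fields); the function name `stratified_subset` is hypothetical.

```python
import random
from collections import defaultdict


def stratified_subset(labels, fraction, seed=0):
    """Return sorted indices of a subset holding `fraction` of each class.

    labels   -- sequence of class labels, one per labelled pixel
    fraction -- share of each class to keep, e.g. 0.1 to 0.5
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must lie in (0, 1]")
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    chosen = []
    for indices in by_class.values():
        # Keep at least one pixel so a rare class (e.g. barley) never vanishes.
        k = max(1, round(fraction * len(indices)))
        chosen.extend(rng.sample(indices, k))
    return sorted(chosen)


if __name__ == "__main__":
    # Hypothetical label list: 100 wheat and 20 barley pixels.
    labels = ["wheat"] * 100 + ["barley"] * 20
    for fraction in (0.1, 0.3, 0.5):
        print(fraction, len(stratified_subset(labels, fraction)))
```

Sampling each class separately keeps the class proportions of the full training set, which matters here because barley has far fewer labelled pixels than wheat or other.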

| Case | # of Classes | Desired Classes |
|---|---|---|
| 1 | 3 | wheat, barley, other |
| 2 | 2 | (wheat and barley), other |
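In Case 2, wheat and barley form a single class, so wheat/barley confusions no longer count as errors. The sketch below shows that bookkeeping by collapsing a 3-class confusion matrix into 2 classes. The counts are hypothetical, and the study may instead have retrained the classifier on two classes; this is only an illustration of merging classes after the fact. `merge_classes` is a hypothetical helper name.

```python
def merge_classes(matrix, groups):
    """Collapse a square confusion matrix into coarser classes.

    matrix -- rows are reference classes, columns are predicted classes
    groups -- one list of original class indices per merged class
    """
    return [[sum(matrix[i][j] for i in rows for j in cols) for cols in groups]
            for rows in groups]


if __name__ == "__main__":
    # Hypothetical 3-class counts in the order wheat, barley, other.
    three_class = [[90, 8, 2],
                   [10, 20, 5],
                   [3, 2, 100]]
    # Case 2: (wheat and barley) vs other.
    two_class = merge_classes(three_class, [[0, 1], [2]])
    print(two_class)  # [[128, 7], [5, 100]]
```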

| Forest Size (# of trees) | 10 | 50 | 100 | 200 | 300 | 400 | 500 | 600 | 700 | 800 | 900 | 1000 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Processing time (s) | 88.5 | 165 | 268 | 477 | 688 | 907 | 1117 | 1329 | 1551 | 1758 | 1963 | 2176 |
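The processing times above grow almost linearly with forest size, which is what one would expect when each tree costs roughly the same to build. The short least-squares check below uses only the numbers in the table; the slope approximates the cost of one additional tree and the intercept a fixed overhead. Both depend on the hardware and implementation used in the study, which are not stated here.

```python
trees = [10, 50, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000]
seconds = [88.5, 165, 268, 477, 688, 907, 1117, 1329, 1551, 1758, 1963, 2176]


def least_squares(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    slope, intercept = least_squares(trees, seconds)
    print(f"{slope:.2f} s per tree, {intercept:.0f} s fixed overhead")
```

On these values the fit gives roughly 2.1 s per additional tree, so the choice of forest size is mainly a trade-off between run time and any accuracy gain from more trees.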

| Missing Data Retrieval | OA | Kappa | Producer’s Accuracy Wheat | Producer’s Accuracy Barley | Producer’s Accuracy Other |
|---|---|---|---|---|---|
| No | 93.2 | 85.3 | 91.5 | 51.1 | 97.7 |
| Yes | 95.4 | 90.3 | 96.5 | 62.6 | 98.0 |
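Overall accuracy (OA), kappa, and producer's accuracy (PA) in these tables are standard confusion-matrix measures (see Congalton, 1991, in the reference list); the tables report them multiplied by 100. A minimal sketch, assuming rows hold reference classes and columns hold predicted classes, with hypothetical counts:

```python
def accuracy_metrics(matrix):
    """Overall accuracy, Cohen's kappa and per-class producer's accuracy.

    matrix[i][j] counts pixels of reference class i predicted as class j.
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    ref_totals = [sum(matrix[i]) for i in range(k)]
    pred_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    observed = sum(matrix[i][i] for i in range(k)) / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(ref_totals[i] * pred_totals[i] for i in range(k)) / total ** 2
    kappa = (observed - expected) / (1.0 - expected)
    # Producer's accuracy: share of each reference class labelled correctly.
    producers = [matrix[i][i] / ref_totals[i] for i in range(k)]
    return observed, kappa, producers


if __name__ == "__main__":
    oa, kappa, pa = accuracy_metrics([[50, 10], [5, 35]])  # hypothetical counts
    print(f"OA {100 * oa:.1f}, kappa {100 * kappa:.1f}, PA {[round(100 * p, 1) for p in pa]}")
```

Because PA is normalised by the reference total of each class, a small class such as barley can have a low PA even when OA is high.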

| Comb. No. | 11-JAN-15 | 03-FEB-15 Path 163 | 28-FEB-15 | 07-MAR-15 Path 163 | 16-MAR-15 | 17-APR-15 | 03-MAY-15 | 19-MAY-15 | 04-JUN-15 | 20-JUN-15 | OA | Kappa | PA Wheat | PA Barley |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | √ | - | √ | - | √ | √ | √ | √ | √ | √ | 93.2 | 85.3 | 91.5 | 51.1 |
| 2 | √ | - | √ | - | √ | √ | √ | √ | √ | - | 93.1 | 85.2 | 90.3 | 55.1 |
| 3 | √ | - | √ | - | √ | √ | √ | √ | - | - | 95.5 | 90.4 | 96.3 | 59.2 |
| 4 | √ | - | √ | - | √ | √ | √ | - | - | - | 94.3 | 87.8 | 96 | 46.6 |
| 5 | √ | - | √ | - | √ | √ | - | - | - | - | 91.2 | 81.2 | 92 | 14 |
| 6 | √ | - | √ | - | √ | - | - | - | - | - | 86.8 | 71.5 | 82.4 | 9.8 |
| 7 | √ | - | - | - | - | √ | - | - | - | - | 94.1 | 87.5 | 95.9 | 24.2 |
| 8 | √ | - | - | - | - | - | √ | - | - | - | 94.8 | 88.9 | 96.5 | 33.1 |
| 9 | √ | - | - | - | - | √ | √ | - | - | - | 95.3 | 90.1 | 97.7 | 45.5 |
| 10 | √ | √ | √ | √ | - | - | - | - | - | - | 87.4 | 72.8 | 84.0 | 11.4 |

| Comb. No. | 11-JAN-15 | 03-FEB-15 | 28-FEB-15 | 07-MAR-15 | 16-MAR-15 | 17-APR-15 | 03-MAY-15 | 19-MAY-15 | 04-JUN-15 | 20-JUN-15 | VIs | TGVI&TGSB | OA | Kappa | PA Wheat | PA Barley | PA Other | # of Features | Processing Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | √ | - | √ | - | √ | - | - | - | - | - | - | - | 86.8 | 71.5 | 82.4 | 9.8 | 95.9 | 24 | 264.5 |
| 2 | √ | - | √ | - | √ | - | - | - | - | - | √ | - | 88.1 | 73.9 | 83.9 | 10.9 | 96.7 | 51 | 270.8 |
| 3 | √ | - | √ | - | √ | - | - | - | - | - | √ | √ | 89.9 | 78.1 | 86.8 | 13.0 | 98.5 | 102 | 275.7 |
| 4 | √ | - | - | - | - | √ | √ | - | - | - | - | - | 95.3 | 90.1 | 97.7 | 45.5 | 99.0 | 24 | 264.5 |
| 5 | √ | - | - | - | - | √ | √ | - | - | - | √ | - | 96.4 | 92.3 | 98.2 | 49.3 | 100 | 51 | 270.8 |
| 6 | √ | - | - | - | - | √ | √ | - | - | - | √ | √ | 99.3 | 98.4 | 99.4 | 92.5 | 99.9 | 102 | 275.7 |
| 7 | √ | √ | √ | √ | - | - | - | - | - | - | √ | √ | 90.5 | 79.8 | 90.2 | 13.8 | 98.2 | 170 | 280.8 |
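The # of Features column is consistent with 8 spectral bands per image, the 9 VIs per image, and one temporal gradient of every per-image feature for each pair of acquisition dates: 3 images give 24, 51, and 102 features, and 4 images give 170. This pairwise scheme is inferred from the counts rather than stated in the table. The sketch below additionally assumes that a gradient is the change in value divided by the number of days between the two dates; a plain difference would match the feature counts equally well. Function names are hypothetical.

```python
from itertools import combinations

N_BANDS = 8  # inferred: 24 features from 3 images without VIs
N_VIS = 9    # the nine indices in the VI table


def temporal_gradients(values, days):
    """One gradient per date pair (i < j): (v_j - v_i) / (t_j - t_i).

    values -- one band or VI value per acquisition date
    days   -- matching day-of-year of each acquisition
    """
    return [(values[j] - values[i]) / (days[j] - days[i])
            for i, j in combinations(range(len(values)), 2)]


def feature_count(n_images, use_vis=False, use_gradients=False):
    """Number of classifier inputs under the pairwise-gradient assumption."""
    per_image = N_BANDS + (N_VIS if use_vis else 0)
    total = per_image * n_images
    if use_gradients:
        n_pairs = n_images * (n_images - 1) // 2
        # Gradient of every per-image feature: TGSB, plus TGVI when VIs are used.
        total += per_image * n_pairs
    return total


if __name__ == "__main__":
    # Hypothetical NDVI values on 11-JAN, 17-APR and 03-MAY 2015 (days 11, 107, 123).
    print(temporal_gradients([0.2, 0.5, 0.8], [11, 107, 123]))
    print([feature_count(3), feature_count(3, True), feature_count(3, True, True),
           feature_count(4, True, True)])  # [24, 51, 102, 170]
```

With three dates the number of pairs equals the number of images, which is why adding gradients exactly doubles the feature count in combinations 3 and 6, while four dates give six pairs.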

| Case No. | 11-JAN-15 | 03-FEB-15 | 28-FEB-15 | 07-MAR-15 | 16-MAR-15 | 17-APR-15 | 03-MAY-15 | 19-MAY-15 | 04-JUN-15 | 20-JUN-15 | VIs | TGVI&TGSB | OA | Kappa | PA (Wheat + Barley) | PA Other |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | √ | - | √ | - | √ | - | - | - | - | - | √ | √ | 95.9 | 90.7 | 92.1 | 97.8 |
| 2 | √ | - | - | - | - | √ | √ | - | - | - | √ | √ | 99.6 | 99.0 | 99.0 | 99.8 |
| 3 | √ | √ | √ | √ | - | - | - | - | - | - | √ | √ | 97.1 | 93.5 | 94.9 | 98.2 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Abad, M.S.J.; Abkar, A.A.; Mojaradi, B. Effect of the Temporal Gradient of Vegetation Indices on Early-Season Wheat Classification Using the Random Forest Classifier. Appl. Sci. 2018, 8, 1216. https://doi.org/10.3390/app8081216


Chicago/Turabian Style: Abad, Mousa Saei Jamal, Ali A. Abkar, and Barat Mojaradi. 2018. "Effect of the Temporal Gradient of Vegetation Indices on Early-Season Wheat Classification Using the Random Forest Classifier." Applied Sciences 8, no. 8: 1216. https://doi.org/10.3390/app8081216