Applying Acoustical and Musicological Analysis to Detect Brain Responses to Realistic Music: A Case Study

Abstract

1. Introduction

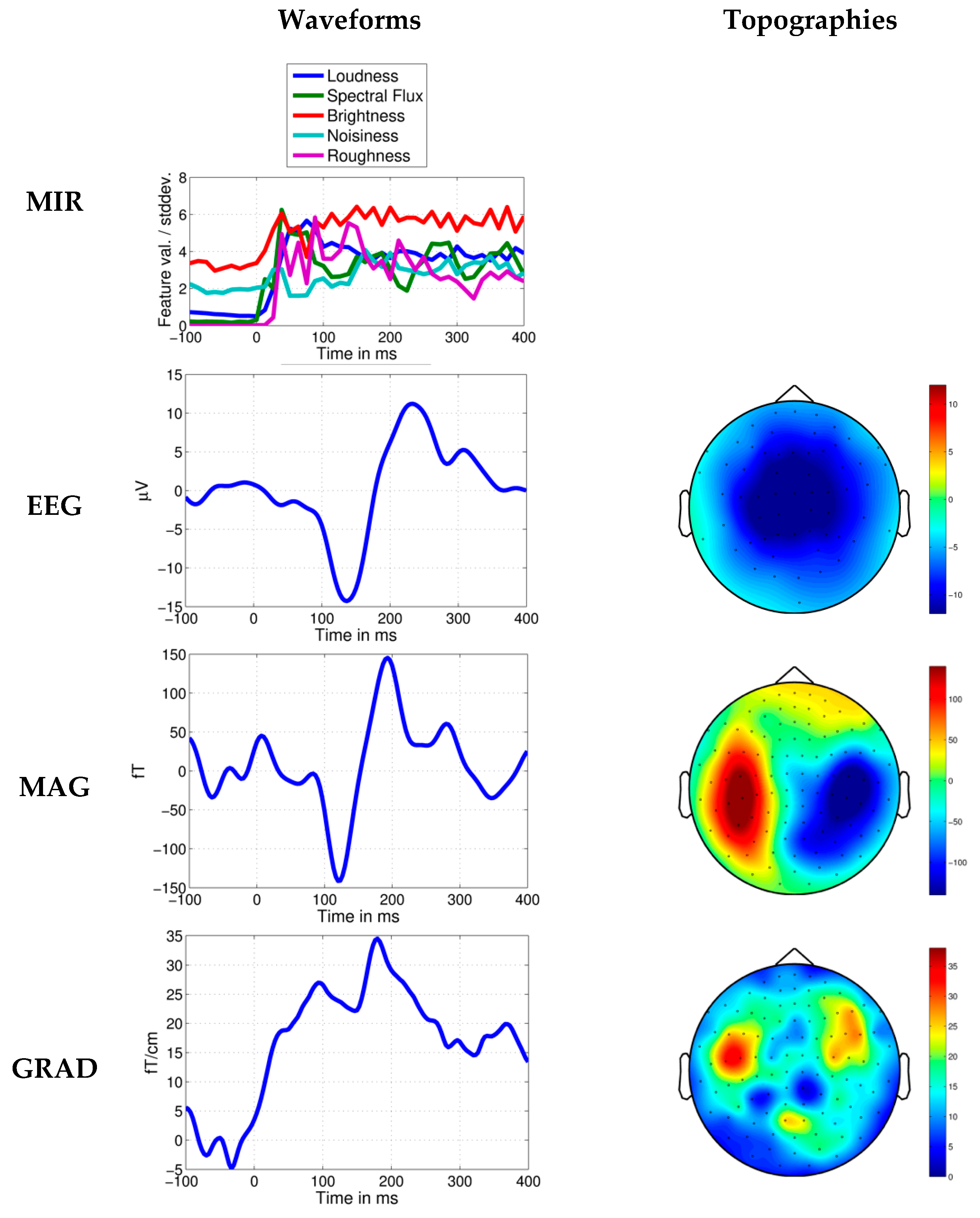

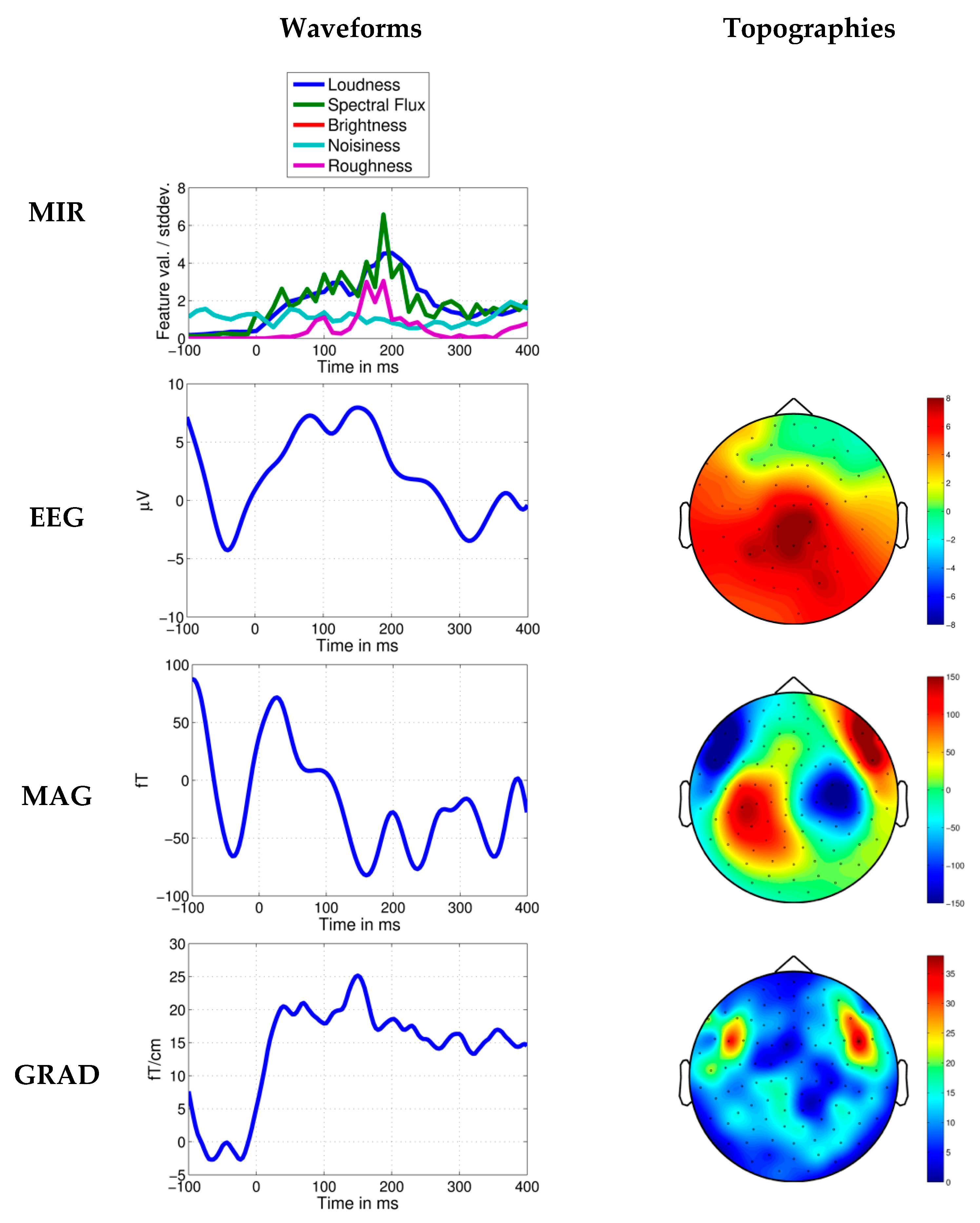

2. Materials and Methods

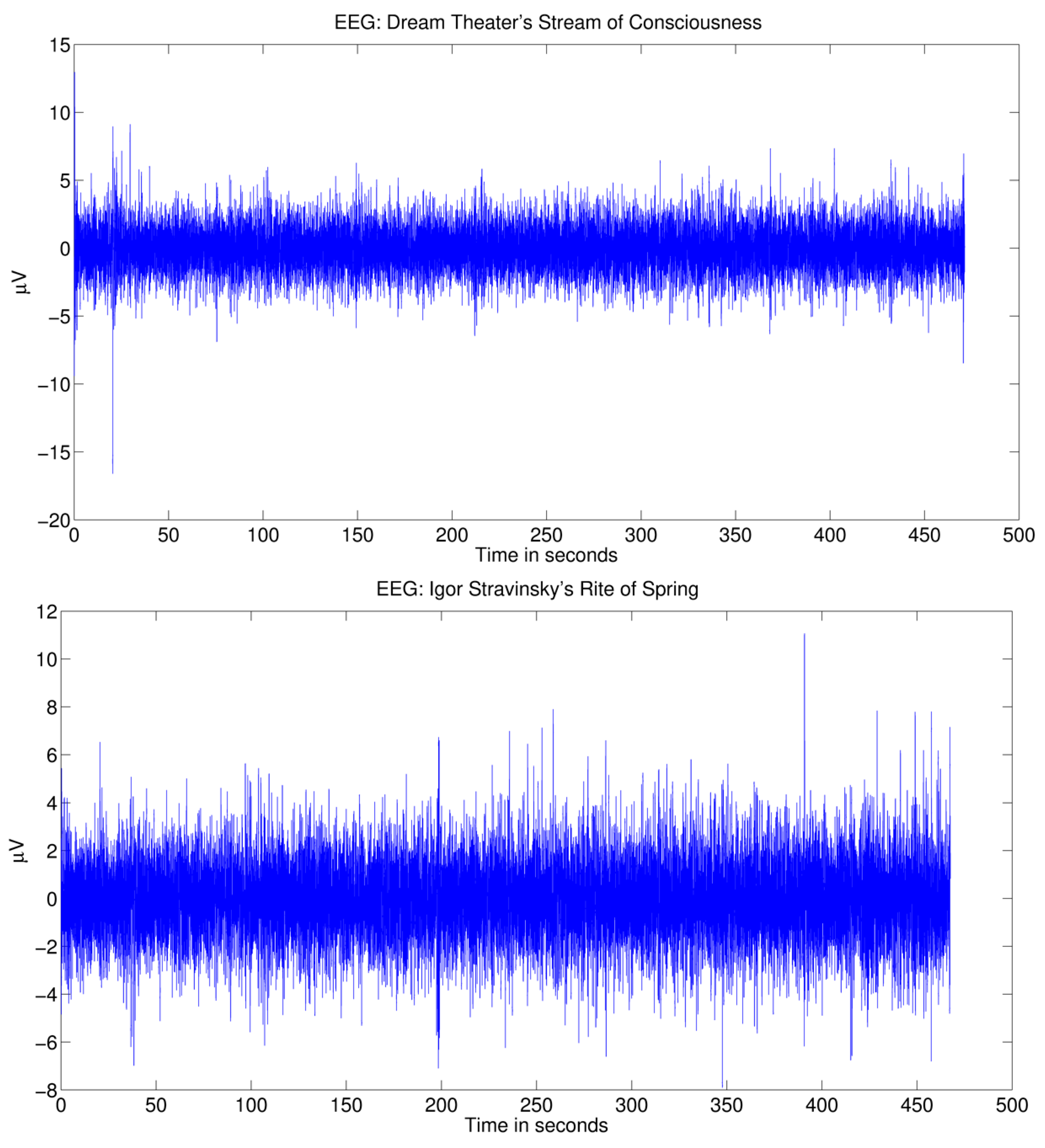

2.1. EEG and MEG Dataset

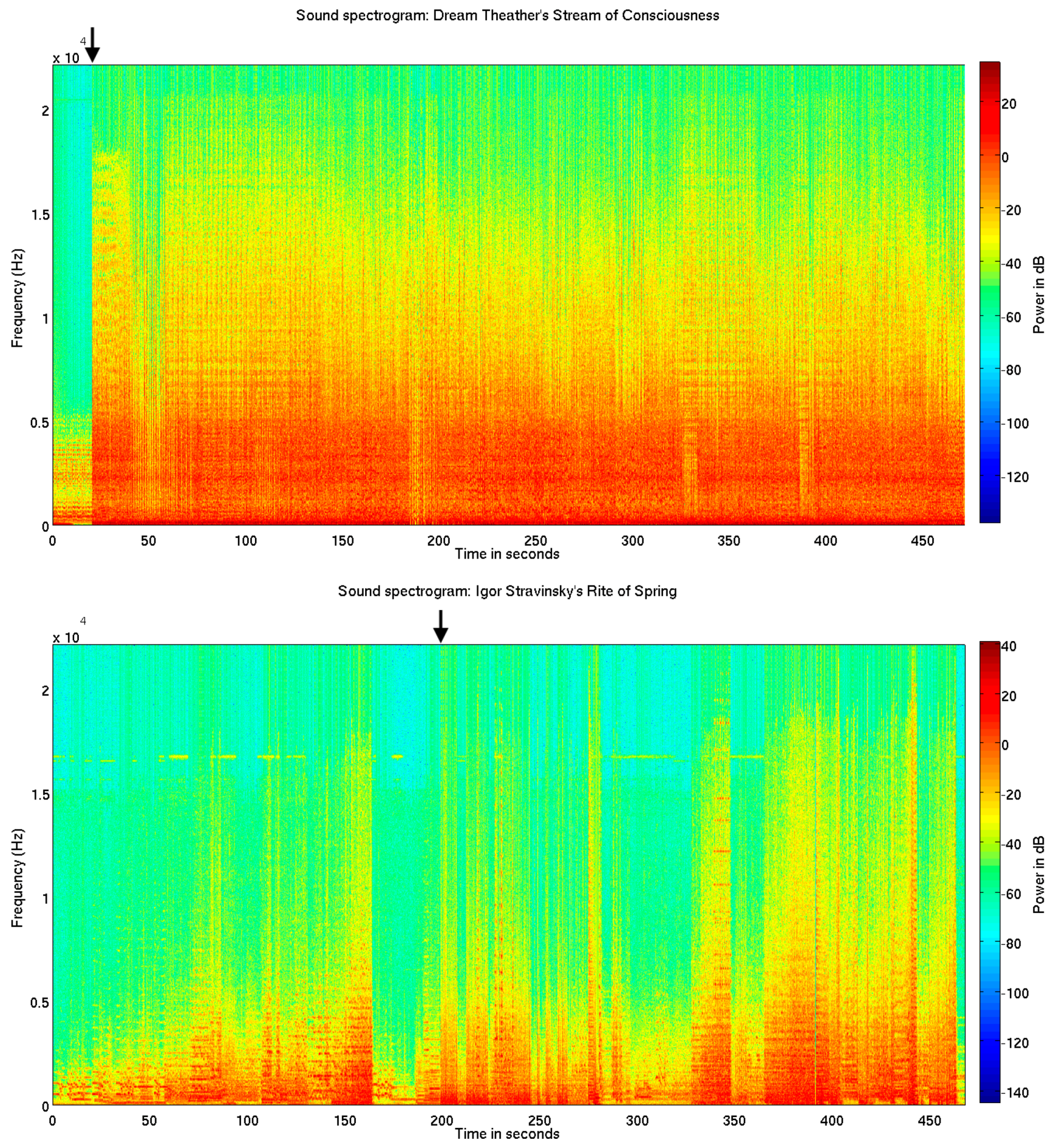

2.2. Stimuli

2.3. Feature Extraction with MIR Toolbox

2.4. Automatic Capture of Acoustical Changes Evoking Cortical Responses

- To keep the number of extracted time points constant, so that signal-to-noise ratios remain comparable when averaging across brain responses, at most 10 time points are extracted from each music piece. These are the time points that satisfy the above criteria and are maximally distributed across time.
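The cap on extracted time points can be illustrated with a minimal sketch. The text does not state how "maximally distributed across time" is computed, so the greedy farthest-point heuristic below is an assumption, not the authors' procedure. The function name `select_spread_time_points` and its parameters are hypothetical and are not part of MIR Toolbox.

```python
from typing import List, Sequence


def select_spread_time_points(
    candidates: Sequence[float], max_points: int = 10
) -> List[float]:
    """Return at most `max_points` candidate times, spread widely in time.

    Assumption: "maximally distributed" is approximated with a greedy
    farthest-point heuristic; the source does not specify the method.
    """
    if max_points <= 0:
        return []
    times = sorted(set(candidates))
    # Fewer candidates than the cap: keep them all.
    if len(times) <= max_points:
        return times
    # Seed with the earliest candidate, then repeatedly add the candidate
    # whose distance to its nearest already-chosen point is largest.
    chosen = [times[0]]
    while len(chosen) < max_points:
        remaining = [t for t in times if t not in chosen]
        best = max(remaining, key=lambda t: min(abs(t - c) for c in chosen))
        chosen.append(best)
    return sorted(chosen)


if __name__ == "__main__":
    # Hypothetical candidate latencies in seconds (not data from the study).
    example = [2.0, 3.5, 10.0, 11.0, 40.0, 41.5, 80.0, 95.0, 96.0, 120.0,
               121.0, 150.0, 151.0, 200.0]
    print(select_spread_time_points(example, max_points=10))
```

A greedy choice does not guarantee the globally optimal spacing, but it is deterministic and keeps the number of averaged responses fixed, which is the property the criterion is meant to secure.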

2.5. Musicological Analysis of Novel Events

2.6. Statistical Analysis of Brain Responses

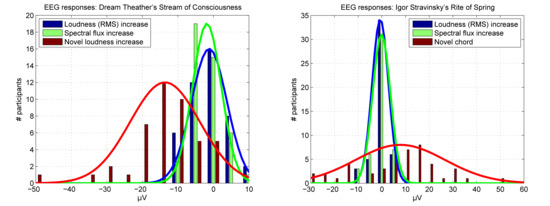

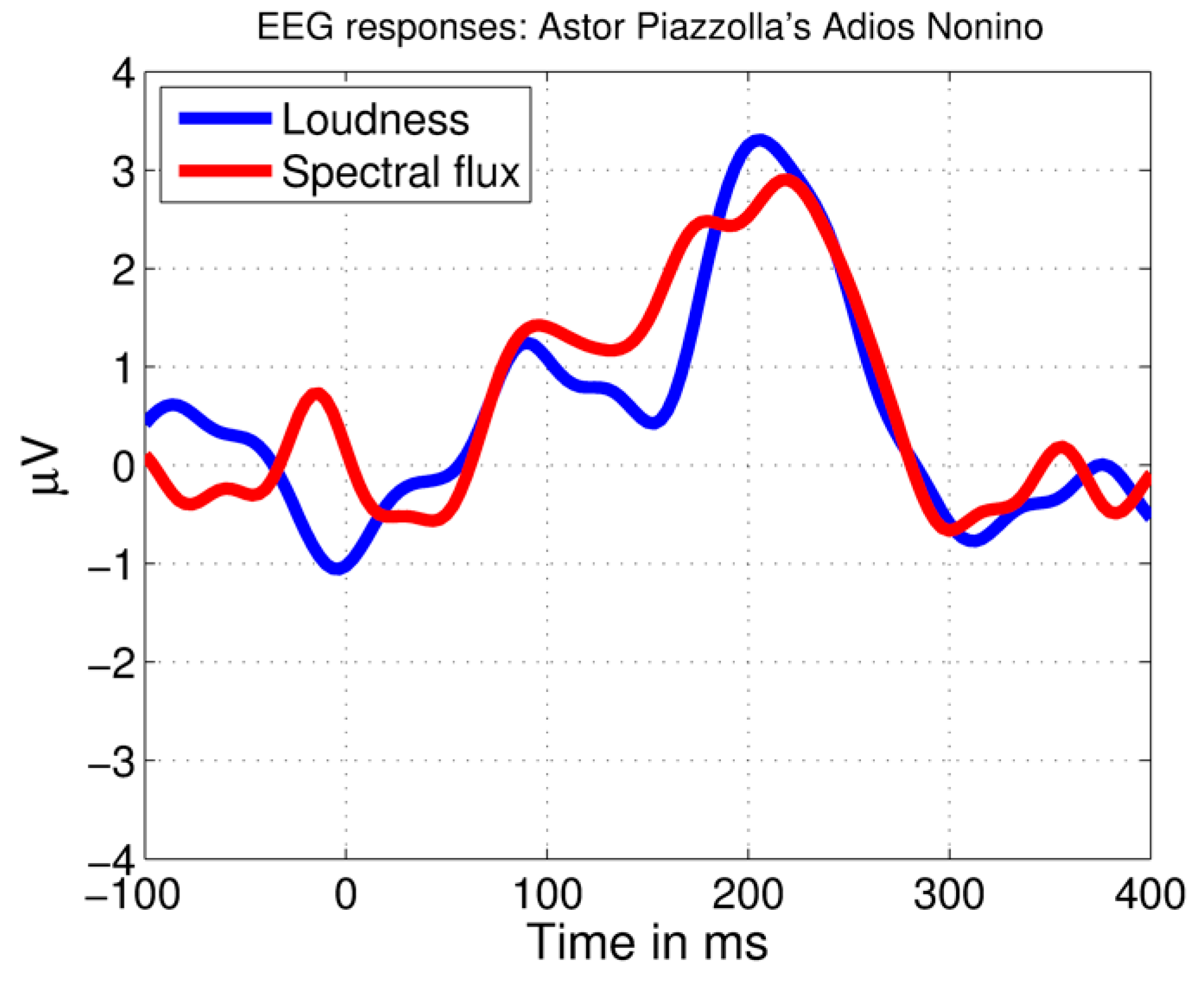

3. Results

4. Discussion

5. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Piece | Feature | n MoRI Peaks | n Time Points | PLIP (s) | Distance to Prev. (s) |
|---|---|---|---|---|---|
| AN (tango) | RMS amplitude | 1773 | 10 | 1.502 (1.009–5.604) | 14.710 (9.574–94.563) |
| | Spectral flux | 3009 | 10 | 2.010 (1.27–5.601) | 13.355 (7.932–130.003) |
| | Brightness | 2737 | 10 | 1.392 (1.069–4.635) | 9.961 (5.917–41.248) |
| | Zero-crossing rate | 2628 | 10 | 1.810 (1.262–3.723) | 11.219 (6.950–50.958) |
| | Roughness | 2649 | 10 | 4.747 (1.056–32.270) | 25.715 (10.576–93.774) |
| SC (metal rock) | RMS amplitude | 2509 | 5 | 1.139 (1.009–14.782) | 7.869 (5.105–91.639) |
| | Spectral flux | 3198 | 5 | 1.239 (1.176–1.881) | 68.7329 (20.286–198.027) |
| | Brightness | 3172 | 0 | - | - |
| | Zero-crossing rate | 2651 | 1 | - | - |
| | Roughness | 3541 | 1 | - | - |
| RS (symphonic) | RMS amplitude | 2235 | 10 | 3.823 (1.049–13.246) | 17.882 (9.084–66.265) |
| | Spectral flux | 3193 | 10 | 2.681 (1.182–6.720) | 10.208 (7.512–53.475) |
| | Brightness | 3078 | 10 | 1.425 (1.079–2.814) | 13.961 (4.675–84.237) |
| | Zero-crossing rate | 2884 | 10 | 1.313 (1.016–1.945) | 22.206 (5.131–61.517) |
| | Roughness | 2820 | 10 | 4.494 (1.062–30.552) | 24.102 (12.947–54.451) |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Haumann, N.T.; Kliuchko, M.; Vuust, P.; Brattico, E. Applying Acoustical and Musicological Analysis to Detect Brain Responses to Realistic Music: A Case Study. Appl. Sci. 2018, 8, 716. https://doi.org/10.3390/app8050716