1. Introduction

This paper concerns one of the challenges in automatic multimodal affect recognition, i.e., mapping between emotion representation models. There are numerous emotion recognition algorithms that differ in input information channels, output labels, representation models and classification methods. The most frequently used emotion recognition techniques that might be considered when designing an emotion monitoring solution include: facial expression analysis, audio (voice) signal analysis in terms of modulation, textual input analysis, and analysis of physiological signals and behavioral patterns. As the literature on emotion recognition methods is very broad and has already been summarized several times, one may refer to Gunes and Piccardi [1] or Zeng et al. [2] for an extensive bibliography.

Hupont et al. claim that multimodal fusion improves robustness and accuracy of human emotion analysis. They observed that current solutions mostly use one input channel only and integration methods are regarded as ad-hoc [3]. Late fusion combines the classification results provided by separate classifiers for every input channel; however, this requires some mapping between emotion representation models used as classifier outputs [3]. Differences in emotion representation models used by emotion recognition solutions are among the key challenges in fusing affect from the input channels.

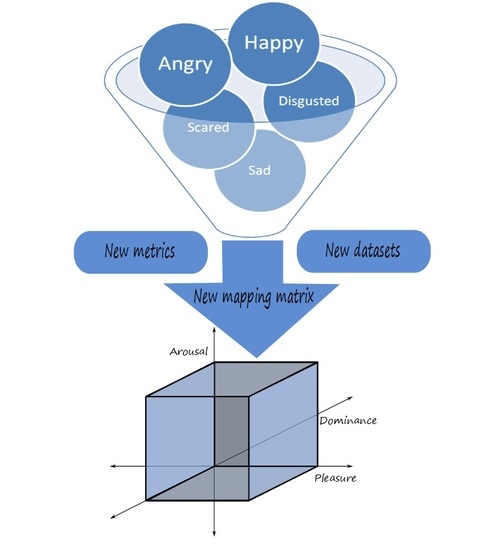

This paper concentrates on the challenge of mapping between emotion representation models. The purpose of the paper is to propose a method (including a set of metrics) for an evaluation of the mapping accuracy, as well as to propose a new mapping. The main research question addressed in this study is as follows: How to compare results from multiple emotion recognition algorithms, especially when they are provided in different affect representation models?

The studies related to this research fall into two categories: (1) emotion representation models, which are used as an output of affect recognition solutions; and (2) research on mapping algorithms between the models.

(1) There are three major model types of emotional state representation: discrete, dimensional and componential [4]. Discrete models distinguish a set of basic emotions (word labels) and describe each affective state as belonging to a certain emotion from the predefined set. A significant group of emotion recognition algorithms uses emotion representation based on labels only, e.g., distinguishing stress from a no-stress condition [5]. The label-based representation causes serious integration problems when the label sets are different. Fuzziness of linking concepts with words and the problem of semantic disambiguation are the key issues causing the difficulty. One of the best known and most extensively adopted discrete representation models is Ekman’s six basic emotions model, which includes joy, anger, disgust, surprise, sadness and fear [6]. Although the model was not initially proposed for emotion recognition, it is the one used most frequently, for example in e-learning affect-aware solutions [7]. Simple affective applications, such as games, frequently use very simple models of two or three labels [8,9]. Furthermore, some more sophisticated solutions that incorporate affect recognition, applied in e-learning, use their own discrete label sets [10,11,12].

Dimensional models represent an emotional state as a point in a multi-dimensional space. The circumplex model of affect, one of the most popular dimensional models, was proposed by Russell [13]. In this model, any emotion might be represented as a point in a space of two continuous dimensions of valence and arousal. The valence (pleasure) dimension differentiates positive from negative emotions, while the dimension of arousal (activation) enables a differentiation between active and passive emotional states. Both dimensions are continuous with neutral affective states represented in the middle of the scale [14]. The model was repeatedly extended with new dimensions, such as dominance and imageability. One of the extended models, the PAD (pleasure-arousal-dominance) model, has found some applications in affective computing [15,16]. Furthermore, Ekman’s six emotions model has been adapted as a dimensional model with each emotion forming one dimension (sometimes a dimension for the neutral state is also added). The dimensional models are frequently used by emotion recognition algorithms and off-the-shelf solutions. The dimensional adaptation of Ekman’s six basic emotions is used for solutions based on facial expressions, and the Facial Action Coding System (FACS) is the most widely implemented technique [17]. Sentiment analysis of textual inputs (used in opinion mining) mainly explores the valence dimension of the Circumplex/PAD model [18]. Emotion elicitation techniques based on physiology mostly report only on the arousal dimension [19], as positive and negative experiences might cause a similar activation of the nervous system.

Componential models use several factors that constitute or influence the resulting emotional state. The OCC model proposed by Ortony, Clore and Collins defines a hierarchy of 22 emotion types representing all possible states which might be experienced [20]. In contrast to discrete or dimensional models of emotions, the OCC model takes into account the process of generating emotions. However, several papers point out that the 22 emotional categories need to be mapped to a (possibly) lower number of different emotional expressions [21,22].

The analysis of emotion recognition solutions reveals that there is no single commonly accepted standard model for emotion representation. The dimensional adaptation of Ekman’s six basic emotions and the Circumplex/PAD model are the ones most widely adopted in emotion recognition solutions. The problem of mapping is multifaceted, including mapping between dimensional and discrete representations, as well as mapping among different dimensional models. There are several solutions to the first issue: using weights, representing discrete labels as points in dimensional spaces and so on. This paper, however, concentrates on the latter issue: while acknowledging the value of label-based and componential models, the remainder of this study focuses on mapping between dimensional models only.

(2) There are few studies on mapping between emotion representation models. The papers provide mapping techniques that enable conversions among a personality model, an OCC model and a PAD model of emotion. The mappings have a significant explanatory value regarding how personality characteristics, moods and emotions are interrelated [16,23]. However, as the personality model is not used in emotion recognition, they are not directly applicable in this study. Exploration of the literature provides one model of mapping between five of Ekman’s basic emotions and the PAD model [24]. The mapping technique reported in [23,24] provides a linear mapping based on a matrix of coefficients provided in Equation (1).

The mapping has been used in several further studies [25,26] and remains the most popular one. The existing matrix was not trained on any dataset; instead, it was derived from an OCC model. It might therefore be considered a theoretical model rather than a model based on evidence. Nevertheless, this is the only known mapping matrix; therefore, we use it as a reference, as there is no other one to compare our solution to. One might notice that, out of the six emotions in Ekman’s basic set, the mapping utilizes only five, excluding surprise. An analysis of late fusion studies reveals that two approaches are applied: either all emotion recognition algorithms use a common representation model as an output [1], or all representations are mapped into a Circumplex/PAD model of emotions. To the best of the author’s knowledge, neither a method nor metrics have been proposed so far for evaluation of the mapping accuracy. No alternative mapping to the one presented in Equation (1) is known to the author.
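A linear mapping of this kind amounts to a matrix-vector product: each PAD dimension is a weighted sum of the emotion intensities. The following is a minimal sketch of that operation only. The function name `map_emotions_to_pad` and the coefficients in `PLACEHOLDER_MATRIX` are hypothetical and chosen for illustration; they are not the coefficients of Equation (1) or of any published mapping.

```python
from typing import List, Sequence


def map_emotions_to_pad(matrix: Sequence[Sequence[float]],
                        emotions: Sequence[float]) -> List[float]:
    """Multiply a coefficient matrix (one row per PAD dimension)
    by a vector of emotion intensities."""
    if any(len(row) != len(emotions) for row in matrix):
        raise ValueError("each matrix row needs one coefficient per emotion")
    return [sum(c * e for c, e in zip(row, emotions)) for row in matrix]


# Placeholder coefficients for five emotions; NOT the published values.
PLACEHOLDER_MATRIX = [
    [0.5, -0.5, 0.0, 0.0, 0.0],   # pleasure
    [0.0, 0.5, 0.5, 0.0, 0.0],    # arousal
    [0.0, 0.0, 0.0, 0.5, -0.5],   # dominance
]

# An input with full intensity on the first emotion only.
pad = map_emotions_to_pad(PLACEHOLDER_MATRIX, [1.0, 0.0, 0.0, 0.0, 0.0])
```

With a real coefficient matrix substituted for the placeholder, the same call would yield the estimated pleasure, arousal and dominance values for one annotated word.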

The study presented in this paper aims at proposing a procedure and metrics to evaluate mapping accuracy, as well as elaborating a new mapping between Ekman’s six basic emotions and the Pleasure-Arousal-Dominance model. The only known mapping, as reported above, is used as a reference in the evaluation procedure. The thesis of the paper was formulated as follows: The proposed metric set and procedure allow the development and evaluation of mappings between dimensional emotion representation models.

2. Materials and Methods

In this study, mapping techniques between two emotion representation models, Ekman’s six basic emotions (dimensional extension) and the PAD model, are explored in detail, using three datasets retrieved from affect-annotated lexicons. In this section, we report: (1) the procedure for obtaining and evaluating the mapping; (2) the construction of the datasets; and (3) the metric set used in the evaluation process.

2.1. The Procedure

The procedure of this study was as follows:

- (1) Preparation of the datasets to train, test and validate the mapping.
- (2) Setting up the metrics and thresholds (mapping precision).
- (3) Estimation using the state-of-the-art mapping matrix (for reference).
- (4) Training new models for mapping with linear regression.
- (5) Within-set and cross-set evaluation of the proposed new mapping.

The procedure proposed in this study, and especially the metric set and the datasets, might be used in further research for creating more mapping models using classifiers and a machine learning approach. The steps are described in detail in the following paragraphs.

2.2. The Datasets

There are at least two approaches that might be considered for obtaining new mappings between emotion representation models. The first one is to use a set of heuristics based on expert (psychological) knowledge, and that approach was the basis for creation of the state-of-the-art matrix. The second approach is to find a dataset that is annotated with both emotion representation models and to use statistical or machine learning techniques to find a mathematical model for the mapping. The latter approach was chosen for this study. The datasets were obtained by pairing lexicons of affect-annotated words.

The evaluation sets were retrieved from affect-annotated lexicons that use PAD and/or a dimensional adaptation of Ekman’s six basic emotions model. The available lexicons might be created by manual, automatic or semi-automatic annotation [27]. As we wanted to use the data retrieved from the lexicons as the “ground truth”, only the lexicons with manual annotations were taken into account. The following lexicons were used in this study (historical order):

- (1) The Mehrabian and Russell lexicon. This lexicon is a relatively old one, as it was developed and published in 1977 by Mehrabian and Russell [28]. It was developed by manual annotation of English words and contains 151 words. The annotators used a PAD model for representation of emotions for the first time, and the PAD model has been a reference model ever since. The annotated sets include mean and standard deviations of evaluations per dimension provided for all participants. Sample words with annotations are presented in Table 1.

There are at least two observations derived from studying the lexicon: firstly, some words are more ambiguous than others; and, secondly, some dimensions are more ambiguous than others (distribution of standard deviations differs).

- (2) The ANEW lexicon. The ANEW lexicon was initially developed for English [29], but has many national adaptations. The lexicon contains the most frequently used words (1040). Annotation is based on a PAD model, and mean as well as standard deviations per dimension are provided. Sample words with annotations are presented in Table 2.

Please note the difference in scaling of the dimensions between the Mehrabian and Russell lexicon and the ANEW lexicon. Re-scaling is required for comparisons.

- (3) The Synesketch lexicon. Synesketch [30] contains 5123 English words annotated manually with overall emotion intensity and Ekman’s six basic emotions (anger, joy, surprise, sadness, disgust and fear). The Synesketch lexicon was found to be partially invalid from the viewpoint of this study, as some words had no annotations in any of the dimensions of the basic six emotion set. The all-zeros vector might be interpreted in two ways: as a neutral annotation or as an error. An additional feature provided within the lexicon (overall emotion intensity) was used to differentiate the two cases. If overall emotion intensity was assigned a non-zero value and all the other dimensions were zero, the vector was considered invalid and excluded from further analysis. Sample words with annotations are presented in Table 3.

- (4) The NAWL lexicon. The fourth of the lexicons, NAWL (Nencki Affective Word List), was manually annotated twice by a significant number of annotators using two different representation models, forming a natural set for this study [31,32]. The models used for annotation were pleasure-arousal-imageability and a subset of Ekman’s six, namely happiness, anger, disgust, sadness and fear. Please note that for this set the dimensions of dominance and surprise are omitted. The additional imageability dimension was not used in this study. The annotated set includes mean and standard deviations of evaluations per dimension provided for all participants and also for males and females separately. The all-participants values are used in this study. The number of words in the lexicon is 2902. Sample words with annotations are presented in Table 4.
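The validity rule described for the Synesketch lexicon can be sketched as a simple filter. The field names (`intensity` and the six emotion keys) and the sample entries below are hypothetical; the actual file layout of the lexicon is not described in this paper.

```python
EMOTIONS = ("anger", "joy", "surprise", "sadness", "disgust", "fear")


def is_valid_entry(entry):
    """Reject an entry whose six emotion values are all zero while its
    overall intensity is non-zero; an all-zero entry with zero intensity
    is kept and read as a neutral annotation."""
    all_zero = all(entry[name] == 0 for name in EMOTIONS)
    return not (all_zero and entry["intensity"] != 0)


def filter_lexicon(entries):
    """Keep only the entries that pass the validity rule."""
    return [entry for entry in entries if is_valid_entry(entry)]


# Hypothetical sample entries, for illustration only.
sample = [
    {"word": "w1", "intensity": 0.8, "anger": 0, "joy": 0.8, "surprise": 0,
     "sadness": 0, "disgust": 0, "fear": 0},   # annotated: kept
    {"word": "w2", "intensity": 0.0, "anger": 0, "joy": 0, "surprise": 0,
     "sadness": 0, "disgust": 0, "fear": 0},   # neutral: kept
    {"word": "w3", "intensity": 0.5, "anger": 0, "joy": 0, "surprise": 0,
     "sadness": 0, "disgust": 0, "fear": 0},   # inconsistent: dropped
]
kept_words = [entry["word"] for entry in filter_lexicon(sample)]
```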

As the lexicons, and sometimes even the dimensions within one lexicon, were annotated with different scales, re-scaling was performed for comparability of the results across sets and dimensions. A scale of <0.1> was chosen for all dimensions. Re-scaling followed the rules for how mean and standard deviation change under addition and multiplication: the mean is shifted and multiplied, while the standard deviation is only multiplied.
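Re-scaling of this kind is a linear transformation, so the mean is shifted and multiplied while the standard deviation is only multiplied. A minimal sketch follows; the function name `rescale_mean_sd` is hypothetical, and the 1 to 9 source range and 0 to 1 target range in the usage line are illustrative assumptions rather than the ranges used for any particular lexicon.

```python
def rescale_mean_sd(mean, sd, src_min, src_max, dst_min, dst_max):
    """Linearly map an annotation mean and its standard deviation
    from the range [src_min, src_max] to [dst_min, dst_max]."""
    if src_max <= src_min or dst_max <= dst_min:
        raise ValueError("ranges must have positive length")
    factor = (dst_max - dst_min) / (src_max - src_min)
    new_mean = dst_min + (mean - src_min) * factor  # shift, then scale
    new_sd = factor * sd                            # a shift does not change spread
    return new_mean, new_sd


# Illustrative ranges only: a rating of 5 with SD 2 on a 1..9 scale.
rescaled = rescale_mean_sd(5.0, 2.0, 1.0, 9.0, 0.0, 1.0)
```

Re-scaling the standard deviations together with the means keeps the SD-based thresholds comparable across lexicons.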

The lexicons were automatically paired, which required finding a common subset of words, then the operation of the pair-wise (same word) concatenation of the two annotations was performed. As a result of this pairing procedure, the following datasets were created for this study:

(1) The ANEW-MEHR dataset

The dataset was a result of pairing the ANEW lexicon and the Mehrabian and Russell lexicon [28,29]. The common subset of words is the same as the latter (smaller) lexicon (151 words). Please note that, in this dataset, two independent annotations in the PAD scale are paired and there is no annotation in Ekman’s dimensions. The set was created purposefully for estimating the residual error that results from pairing two independent mappings. The metric values calculated on this dataset might be treated as marginal accuracies that might be obtained from mappings based on pairing lexicons. The detailed specification of the dataset is provided in Appendix A.

(2) The SYNE-ANEW dataset

The second set used in this study was paired based on the ANEW [29] and Synesketch [30] lexicons. Only a common subset of words was used as an evaluation set (267 words). The set uses all dimensions of the PAD model (including dominance) and uses a complete set of Ekman’s six basic emotions (including surprise). The detailed specification of the dataset is provided in Appendix B.

(3) The NAWL-NAWL dataset

As the NAWL list of words [31,32] was annotated twice, the creation of the dataset was (almost) automatic: the only operation to perform was a pair-wise (same word) concatenation of the two annotations from separate files. The word count equals the size of the lexicon (2902). The size of the lexicon makes it preferable over the other datasets; however, one must note that the dataset does not include the dimensions of dominance and surprise. The detailed specification of the dataset is provided in Appendix C.

As the sets might be considered complementary rather than competitive, all three are employed in this mapping technique elaboration study. The datasets are available as supplementary material.
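The pairing operation used to build the datasets (finding the common subset of words, then concatenating the two annotations of the same word) might be sketched as follows. The function name `pair_lexicons`, the tag prefixes and the sample values are hypothetical. Prefixing the column names is an assumption made here so that two PAD annotations of the same word, as in the ANEW-MEHR dataset, do not overwrite each other.

```python
def pair_lexicons(first, second, first_tag, second_tag):
    """Return one row per word present in both lexicons, holding the
    annotations from both sources under tag-prefixed column names."""
    paired = {}
    for word in sorted(first.keys() & second.keys()):
        row = {f"{first_tag}_{dim}": value
               for dim, value in first[word].items()}
        row.update({f"{second_tag}_{dim}": value
                    for dim, value in second[word].items()})
        paired[word] = row
    return paired


# Hypothetical toy lexicons (word -> annotation), for illustration only.
lexicon_a = {"alpha": {"P": 0.7, "A": 0.4}, "beta": {"P": 0.2, "A": 0.9}}
lexicon_b = {"beta": {"P": 0.3, "A": 0.8}, "gamma": {"P": 0.5, "A": 0.5}}
dataset = pair_lexicons(lexicon_a, lexicon_b, "a", "b")
```

Only words annotated in both sources survive, which is why a paired dataset can be no larger than its smaller lexicon.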

2.3. Metric Set

While evaluating classifiers (and the emotion recognition algorithm is one of these), precision and accuracy are the most popular metrics. Regression models (with continuous outputs) are typically measured by MAE (mean absolute error), RMSE (root mean squared error) or the R² metric. All the typically used metrics are invariant to the required precision of the estimate and prone to misinterpretation if the variance of the estimate error is high. Mapping between two emotion representation models might be very accurate if the differences are calculated using precise numbers. However, with all the fuzziness related to emotion concepts, high precision might be misleading. Mathematically, we could find a difference of 0.01 in the (−1, 1) scale, but this would not make sense in interpreting the emotion recognition result. Therefore, apart from typical measures of regression model evaluation, a number of metrics were proposed within this study that measure accuracy above a given precision threshold. Moreover, two approaches were adopted, treating dimensions independently and jointly (as a 3D space). The definition of the proposed metric set follows. Both the proposed and typical metrics for estimate error are reported within the results section.

2.3.1. Precision in Emotion Recognition

The word annotations with regard to sentiment, as well as the emotional states themselves, are fuzzy and sometimes even ambiguous. One might note that, together with an average annotation of the word, the standard deviation is reported. On the other hand, in most of the applications of affect recognition, the decisions are based on simple models, for example, two or three classes of emotions. Therefore, in most cases, small mathematical differences in emotion estimates are not interpretable within the application context. In this study, I propose to use the following precision estimates: (1) absolute distance (a mathematical concept equivalent to MAE (mean absolute error)); (2) distance smaller than 10% of the scale; (3) distance smaller than 20% of the scale; and (4) distance smaller than the standard deviation of the evaluations (if available). The last approach uses an additional feature available for the sets, i.e., standard deviation. Please note that the metric thresholds for precision might be adjusted whenever necessary.

2.3.2. Metrics for the Dimensions Treated Independently

The independently treated dimensions are operationalized with the following metrics (if variables in consecutive equations are assigned the same definition, the definitions are not repeated):

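MAE: mean absolute distance between the actual and the estimated emotional state per dimension:

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|x_i - \hat{x}_i\right|$$

where

$x_i$ is the dimension value for the $i$th word (retrieved from the lexicon);

$\hat{x}_i$ is the dimension value for the $i$th word (estimated using mapping);

$n$ is the number of words within the set $X$.

$M_{10}$: relative number of estimates that differ from the actual value by less than 10% of the scale:

$$M_{10} = \frac{1}{n}\sum_{i=1}^{n} m_{10,i}$$

where

$$m_{10,i} = \begin{cases} 1 & \text{if } \left|x_i - \hat{x}_i\right| < 10\%\ \text{of the scale} \\ 0 & \text{otherwise} \end{cases}$$

$M_{20}$: relative number of estimates that differ from the actual value by less than 20% of the scale:

$$M_{20} = \frac{1}{n}\sum_{i=1}^{n} m_{20,i}$$

where

$$m_{20,i} = \begin{cases} 1 & \text{if } \left|x_i - \hat{x}_i\right| < 20\%\ \text{of the scale} \\ 0 & \text{otherwise} \end{cases}$$

$M_{SD}$: relative number of estimates that differ from the actual value by less than the standard deviation size:

$$M_{SD} = \frac{1}{n}\sum_{i=1}^{n} m_{SD,i}$$

where

$$m_{SD,i} = \begin{cases} 1 & \text{if } \left|x_i - \hat{x}_i\right| < SD_i \\ 0 & \text{otherwise} \end{cases}$$

and $SD_i$ is the standard deviation of the evaluations for the $i$th word in the given dimension (retrieved from the lexicon).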
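The per-dimension metrics can be computed directly from paired lists of actual and estimated values. The sketch below is an illustration rather than the implementation used in the study; the function names and the sample values are hypothetical, and `scale_length` (the length of the annotation scale) is passed explicitly as an assumption.

```python
def mae(actual, estimated):
    """Mean absolute distance between actual and estimated values."""
    return sum(abs(x - y) for x, y in zip(actual, estimated)) / len(actual)


def share_within(actual, estimated, thresholds):
    """Share of words whose absolute error is below the word's threshold."""
    hits = sum(1 for x, y, t in zip(actual, estimated, thresholds)
               if abs(x - y) < t)
    return hits / len(actual)


def m_fixed(actual, estimated, fraction, scale_length):
    """M10 or M20: the same threshold (a fraction of the scale) for every word."""
    threshold = fraction * scale_length
    return share_within(actual, estimated, [threshold] * len(actual))


def m_sd(actual, estimated, sds):
    """M_SD: the threshold is the per-word standard deviation of annotations."""
    return share_within(actual, estimated, sds)


# Hypothetical values for four words on a scale of length 1.
actual = [0.2, 0.5, 0.9, 0.4]
estimated = [0.25, 0.75, 0.75, 0.4]
sds = [0.1, 0.3, 0.1, 0.05]

m10 = m_fixed(actual, estimated, 0.10, 1.0)
m20 = m_fixed(actual, estimated, 0.20, 1.0)
msd = m_sd(actual, estimated, sds)
```

In this toy example the second word misses both fixed thresholds but falls within its own (large) standard deviation, which illustrates how the SD-based metric accounts for ambiguous words.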

2.3.3. Metrics for the Joint Dimensions Accuracy

The joint dimensions accuracy was operationalized with the following metrics:

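$\mathrm{PAD}_{abs}$: mean absolute distance between the actual and the estimated emotional state in PAD space (calculated using the Pythagorean theorem):

$$\mathrm{PAD}_{abs} = \frac{1}{n}\sum_{i=1}^{n} dd_i$$

where

$$dd_i = \sqrt{\left(p_i - \hat{p}_i\right)^2 + \left(a_i - \hat{a}_i\right)^2 + \left(d_i - \hat{d}_i\right)^2}$$

$dd_i$ is the direct distance between the estimated and the actual emotion, calculated for all dimensions using the Pythagorean theorem;

$p_i, a_i, d_i$ are the dimension values for the $i$th word (retrieved from the lexicon);

$\hat{p}_i, \hat{a}_i, \hat{d}_i$ are the dimension values for the $i$th word (estimated using mapping);

$n$ is the number of words within the set.

$\mathrm{PAD}_{10}$: relative number of estimates that differ from the actual value by less than 10% of the scale in each dimension:

$$\mathrm{PAD}_{10} = \frac{1}{n}\sum_{i=1}^{n} pad_{10,i}$$

where

$$pad_{10,i} = \begin{cases} 1 & \text{if } \left|p_i - \hat{p}_i\right|,\ \left|a_i - \hat{a}_i\right|,\ \left|d_i - \hat{d}_i\right| \text{ are all} < 10\%\ \text{of the scale} \\ 0 & \text{otherwise} \end{cases}$$

$\mathrm{PAD}_{20}$: relative number of estimates that differ from the actual value by less than 20% of the scale in each dimension:

$$\mathrm{PAD}_{20} = \frac{1}{n}\sum_{i=1}^{n} pad_{20,i}$$

where

$$pad_{20,i} = \begin{cases} 1 & \text{if } \left|p_i - \hat{p}_i\right|,\ \left|a_i - \hat{a}_i\right|,\ \left|d_i - \hat{d}_i\right| \text{ are all} < 20\%\ \text{of the scale} \\ 0 & \text{otherwise} \end{cases}$$

$\mathrm{PAD}_{SD}$: relative number of estimates that differ from the actual value by less than the standard deviation size in each dimension:

$$\mathrm{PAD}_{SD} = \frac{1}{n}\sum_{i=1}^{n} pad_{SD,i}$$

where

$$pad_{SD,i} = \begin{cases} 1 & \text{if } \left|p_i - \hat{p}_i\right| < SD_{p,i},\ \left|a_i - \hat{a}_i\right| < SD_{a,i} \text{ and } \left|d_i - \hat{d}_i\right| < SD_{d,i} \\ 0 & \text{otherwise} \end{cases}$$

and $SD_{p,i}, SD_{a,i}, SD_{d,i}$ are the standard deviations of the evaluations for the $i$th word per dimension (retrieved from the lexicon).

$\mathrm{DD}_{10}$: relative number of estimates whose direct distance from the actual value is smaller than 10% of the scale:

$$\mathrm{DD}_{10} = \frac{1}{n}\sum_{i=1}^{n} d_{10,i}$$

where

$$d_{10,i} = \begin{cases} 1 & \text{if } dd_i < 10\%\ \text{of the scale} \\ 0 & \text{otherwise} \end{cases}$$

$\mathrm{DD}_{20}$: relative number of estimates whose direct distance from the actual value is smaller than 20% of the scale:

$$\mathrm{DD}_{20} = \frac{1}{n}\sum_{i=1}^{n} d_{20,i}$$

where

$$d_{20,i} = \begin{cases} 1 & \text{if } dd_i < 20\%\ \text{of the scale} \\ 0 & \text{otherwise} \end{cases}$$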

The set of metrics presented above (four per-dimension metrics and six joint-dimension metrics) is used in the further analysis as accuracy measures.
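The difference between the per-dimension joint metrics (PAD10, PAD20) and the direct-distance metrics (DD10, DD20) is easiest to see in code. The sketch below uses hypothetical function names and sample points, and takes the threshold as an absolute value on a scale of length 1 (an assumption). An SD-based variant would replace the single threshold with per-word, per-dimension standard deviations.

```python
import math


def direct_distance(actual, estimated):
    """Euclidean distance between two points in PAD space."""
    return math.sqrt(sum((a - e) ** 2 for a, e in zip(actual, estimated)))


def pad_abs(actual_points, estimated_points):
    """Mean direct distance over all words."""
    distances = [direct_distance(a, e)
                 for a, e in zip(actual_points, estimated_points)]
    return sum(distances) / len(distances)


def pad_within(actual_points, estimated_points, threshold):
    """Share of words whose error is below the threshold in every dimension."""
    hits = sum(1 for a, e in zip(actual_points, estimated_points)
               if all(abs(x - y) < threshold for x, y in zip(a, e)))
    return hits / len(actual_points)


def dd_within(actual_points, estimated_points, threshold):
    """Share of words whose direct distance is below the threshold."""
    hits = sum(1 for a, e in zip(actual_points, estimated_points)
               if direct_distance(a, e) < threshold)
    return hits / len(actual_points)


# Hypothetical (P, A, D) points for three words.
actual = [(0.5, 0.5, 0.5), (0.5, 0.5, 0.5), (0.2, 0.8, 0.4)]
estimated = [(0.5, 0.5, 0.5), (0.58, 0.58, 0.58), (0.5, 0.4, 0.4)]

pad_10 = pad_within(actual, estimated, 0.1)
dd_10 = dd_within(actual, estimated, 0.1)
```

The second word is off by 0.08 in each dimension, so it counts towards PAD10, but its direct distance is about 0.14, so it does not count towards DD10. The direct-distance metrics are therefore the stricter of the two for the same threshold.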

2.4. Evaluation Calculations

Firstly, the lexicons were re-scaled and paired (details are provided in Section 2.2), forming datasets for further study. Then, the calculations were performed with the KNIME analytical tool as described below.

For estimating the residual error of pairing lexicons, the ANEW-MEHR dataset was processed with the following steps: (1) calculation of absolute difference for valence, arousal and dominance dimensions per word; (2) calculation of typical metrics (MAE, RMSE, and R²) for the dataset; (3) calculation of absolute distance in the 3D model of emotions per word; (4) comparison with thresholds per word; and (5) calculation of frequency- and threshold-based metrics. The results are reported in Section 3.1.
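For completeness, the typical metrics named in step (2) can be sketched as below. The paper does not state which definition of R² was used; the sketch assumes the common coefficient of determination (one minus the ratio of the residual to the total sum of squares), and the function names are hypothetical.

```python
import math


def rmse(actual, estimated):
    """Root mean squared error."""
    squared = [(x - y) ** 2 for x, y in zip(actual, estimated)]
    return math.sqrt(sum(squared) / len(squared))


def r_squared(actual, estimated):
    """Coefficient of determination (assumed definition)."""
    mean_actual = sum(actual) / len(actual)
    ss_res = sum((x - y) ** 2 for x, y in zip(actual, estimated))
    ss_tot = sum((x - mean_actual) ** 2 for x in actual)
    if ss_tot == 0:
        raise ValueError("R squared is undefined for constant actual values")
    return 1.0 - ss_res / ss_tot


# Hypothetical values: a perfect estimate and a constant (mean) estimate.
actual = [1.0, 2.0, 3.0, 4.0]
perfect = r_squared(actual, actual)
mean_only = r_squared(actual, [2.5, 2.5, 2.5, 2.5])
```

Under this definition an estimate no better than the mean of the actual values scores 0, and a mapping that is worse than the mean scores below 0.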

For reference model mapping evaluation, two datasets, SYNE-ANEW and NAWL-NAWL, were processed with the same procedure: (1) application of the reference mapping to the dataset, adding columns of predicted valence, arousal and dominance; (2) calculation of absolute difference between the actual and predicted values of dimension per word; (3) calculation of typical metrics (MAE, RMSE, and R²) for the dataset; (4) calculation of absolute distance in the 3D model of emotions per word; (5) comparison with thresholds per word; and (6) calculation of frequency- and threshold-based metrics. The results are reported in Section 3.2.

For obtaining and evaluating new mapping matrices, two datasets, SYNE-ANEW and NAWL-NAWL, were processed independently using the same procedure: (1) training of a linear regression model and obtaining a mapping matrix for the set, using a ten-fold cross-validation scheme with a random selection of words to separate training and validation subsets (the obtained result sets included both the actual and the estimated value of dimensions per word); (2) calculation of absolute difference between the actual and predicted values of dimension per word; (3) calculation of typical metrics (MAE, RMSE, and R²) for the dataset; (4) calculation of absolute distance in a 3D model of emotions per word; (5) comparison with thresholds per word; and (6) calculation of frequency- and threshold-based metrics. The proposed matrices are reported in Section 3.3, while the 10-fold cross-validation results are reported in Section 3.4.
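Step (1) was performed in KNIME; the sketch below is not that workflow but a plain illustration of how a mapping matrix can be obtained by ordinary least squares, solving the normal equations separately for each target dimension. It assumes a model without an intercept term (whether the study's regression included one is not stated here), and the function names and toy data are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system; features may be collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):  # back substitution
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


def fit_mapping(features, targets):
    """Least-squares fit without intercept (an assumption).
    Returns one row of coefficients per target dimension."""
    k = len(features[0])
    xtx = [[sum(f[i] * f[j] for f in features) for j in range(k)]
           for i in range(k)]
    matrix = []
    for d in range(len(targets[0])):
        xty = [sum(f[i] * t[d] for f, t in zip(features, targets))
               for i in range(k)]
        matrix.append(solve_linear_system(xtx, xty))
    return matrix


# Toy data generated from a known matrix, to check that it is recovered.
true_matrix = [[0.5, -0.25], [0.1, 0.3]]
features = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
targets = [[sum(c * v for c, v in zip(row, f)) for row in true_matrix]
           for f in features]
fitted = fit_mapping(features, targets)
```

In a cross-validation setting, `fit_mapping` would be called on the training subset of each fold and the resulting matrix applied to the validation subset.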

Additionally, cross-set validation was performed for the evaluation of generalizability using the following scheme: (1) application of the new mapping matrix obtained from SYNE-ANEW to the NAWL-NAWL dataset, adding columns of predicted valence, arousal and dominance; (2) calculation of absolute difference between the actual and predicted values of dimension per word; (3) calculation of typical metrics (MAE, RMSE, and R²) for the dataset; (4) calculation of absolute distance in a 3D model of emotions per word; (5) comparison with thresholds per word; and (6) calculation of frequency- and threshold-based metrics. The results are reported in Section 3.5.

3. Results

Study results are reported for the evaluation sets defined in Section 2.2 and with the metrics operationalized in Section 2.3.

3.1. The Margin Accuracies—Calculations for the ANEW-MEHR Dataset

The ANEW lexicon and the Mehrabian and Russell lexicon were created independently and use the same PAD model for annotation. The differences (averaged over all words) between the annotations derived from the two lexicons are used in this study as an estimate of the residual error that results from pairing two independent mappings. The metric values are provided in Table 5.

The observations derived from pairing the annotations using the same PAD model are the following:

- There is a non-zero residual error for mapping based on pairing independent affect-annotated lexicons.
- The residual error differs among the dimensions within the PAD model: it is lowest for valence (P), higher for arousal (A) and the highest for dominance (D). This observation is compliant with results on the understanding of dimensions reported in the literature (dominance is the least understood dimension, resulting in more ambiguous annotation results).
- For a threshold of 10% of the scale, 80.6% of words for valence, 57.1% of words for arousal, and 62.5% for dominance have consistent annotations; for a threshold of 20% of the scale, 95.5% of words for valence, 92% of words for arousal, and 90.2% for dominance have consistent annotations. This observation is compliant with a typical precision-accuracy trade-off.
- Considering the ambiguity of annotations, it is advisable to use the metrics based on the standard deviation for annotated words, as using the same set threshold value for every dimension might cause misinterpretation of accuracy results.
- The traditional metrics (MAE, RMSE and R²) allow for the interpretation of dimensions independently; however, in practical settings, it would be important to have a mapping that deals with all dimensions within the set threshold. The proposed metrics PAD10, PAD20, PADSD, DD10 and DD20 allow for the interpretation of the mapping accuracies for all dimensions together (treating the PAD model as a typical 3D space).

The residual error obtained from the comparison will be used for reference in an interpretation of the results obtained in the study.

3.2. The Reference Mapping Accuracies for the SYNE-ANEW and NAWL-NAWL Datasets

The mapping matrix derived from the literature and reported in Equation (1) was used as a reference model for the model proposed in this study. The results of mapping using the known matrix for the two datasets, SYNE-ANEW and NAWL-NAWL, are provided in Table 6 and Table 7, respectively.

The absolute measures for the dataset show small differences between the dimensions. A relatively high mean distance is obtained for the joint dimensions, which is partially explained by the accumulation of errors from all dimensions, as the PADabs metric is calculated based on the geometrical distance in 3D space.

It seems that setting a proper threshold is crucial and has an enormous influence on the resulting metrics (precision-accuracy trade-off). For a high precision (10% of the scale), accuracy results are around 25% for valence, 24% for arousal, and 23% for dominance. With lower precision requirements, the accuracies increase (46% for valence, 48% for arousal, and 45% for dominance). Setting the accuracy threshold based on standard deviation per word increases accuracies above 55% for the dimensions of arousal and dominance.

The joint-dimension accuracies are lower than for separate dimensions, which could have been expected. If the mapping is precise for one dimension, it might not be for another and, as a result, the errors accumulate over dimensions. Therefore, we have only 1.5% accuracy for an expected precision of 10% of the scale, 14.6% accuracy for 20% precision and 25.8% for SD-based precision.

For the second dataset, the mapping accuracies are higher for all precision thresholds. This might be a result of the set size and quality (the set is derived from one lexicon purposefully annotated twice); however, one must notice the lack of Surprise and Dominance dimensions among the annotation labels. Please note that, due to the latter limitation, the results for the two sets are comparable for the single-dimension metrics, but not for the joint-dimension metrics. Accuracies are still dependent on the set precision threshold.

As precision can be traded against accuracy, one might go further in proposing new thresholds. Perhaps the acceptable precision should be set case by case, as this might depend on the context of the emotion recognition.

The accuracy results obtained for the known matrix show some room for improvement, especially for higher precision requirements and regarding all dimensions. The results justify undertaking this study towards new mappings.

3.3. The Proposed Mapping Matrices

Two mapping matrices were obtained using linear regression learning with the SYNE-ANEW and NAWL-NAWL datasets. The procedure involved a 10-fold cross-validation with linear regression coefficients averaged over repetitions. Cross-validation is a validation technique for assessing how the results of a prediction model would generalize to an independent data set. It is a well-established alternative to the hand-made partitioning of a dataset into training and validation subsets for regression models [33]. One round of cross-validation involves partitioning a sample of data into complementary subsets, performing the analysis on one subset (training set), and validating the analysis on the other subset (validation set). Multiple rounds of partitioning are performed and the validation results are averaged over the rounds to evaluate the model. There are several methods of performing cross-validation [34]. In this procedure, a k-fold technique was used, with 10 folds. In 10-fold cross-validation, the original sample is partitioned randomly into 10 sub-samples of equal size. In a single fold, a single sub-sample is retained as the validation set, and the remaining nine sub-samples are used as training data. As a result, each of the 10 sub-samples is used exactly once as the validation data. The partitioning was randomized, and the cross-validation procedure was performed using the KNIME analytical tool.
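The partitioning logic of k-fold cross-validation can be sketched in a few lines. This is an illustration of the technique, not the KNIME workflow used in the study; the function name `k_fold_indices` and the seed value are hypothetical. When the number of words is not divisible by k (as with 267 words), the fold sizes in this sketch differ by at most one.

```python
import random


def k_fold_indices(n_items, k=10, seed=0):
    """Yield (training, validation) index lists for k-fold cross-validation.
    Every index appears in exactly one validation fold."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)          # randomized partitioning
    folds = [indices[i::k] for i in range(k)]     # k near-equal sub-samples
    for i, validation in enumerate(folds):
        training = [idx for j, fold in enumerate(folds) if j != i
                    for idx in fold]
        yield training, validation


# Example: ten folds over 267 items (the size of the SYNE-ANEW dataset).
splits = list(k_fold_indices(267, k=10, seed=1))
```

A model would be trained on each `training` list and evaluated on the matching `validation` list, with the validation results then averaged over the ten rounds.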

The cross-validation procedure was repeated 10 times. The matrix coefficients obtained via repetition were similar (with differences <0.03 for all matrix coefficients) and were averaged over the 10 resulting models.
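Averaging the matrices over repetitions, and checking how far the coefficients spread, might look as follows. The function names and the two small sample matrices are hypothetical; real input would be the ten matrices obtained from the repeated procedure.

```python
def average_matrices(matrices):
    """Element-wise mean of equally shaped coefficient matrices."""
    count = len(matrices)
    rows, cols = len(matrices[0]), len(matrices[0][0])
    return [[sum(m[r][c] for m in matrices) / count for c in range(cols)]
            for r in range(rows)]


def max_spread(matrices):
    """Largest difference between the highest and lowest value
    of any single coefficient across the repetitions."""
    rows, cols = len(matrices[0]), len(matrices[0][0])
    return max(max(m[r][c] for m in matrices) - min(m[r][c] for m in matrices)
               for r in range(rows) for c in range(cols))


# Hypothetical coefficients from two repetitions, for illustration only.
repetitions = [
    [[0.10, 0.20], [0.30, -0.40]],
    [[0.12, 0.22], [0.29, -0.41]],
]
averaged = average_matrices(repetitions)
spread = max_spread(repetitions)
```

A small spread, such as the differences below 0.03 reported above, suggests that the fitted coefficients are stable with respect to the random partitioning.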

The two resulting matrices are provided as Equations (13) and (14), respectively. For comparison, the existing mapping matrix from the literature is repeated in Equation (15) with its columns reordered.

3.4. The Proposed Mapping Accuracies for the SYNE-ANEW and NAWL-NAWL Datasets

For evaluation of the new mapping matrices, a 10-fold cross-validation procedure was performed, as described in Section 3.3, for the two datasets SYNE-ANEW and NAWL-NAWL independently. Cross-validation results for the datasets are presented in Table 8 and Table 9.

The proposed mapping matrix validation results on the SYNE-ANEW dataset are relatively high. For the dimension of valence, over 33% accuracy was obtained for the 10% precision threshold, while, for the 20% and SD-based precision thresholds, the accuracy was around 60%. For the dimension of arousal and dominance, over 58% accuracy was obtained for the 10% precision threshold, while, for the 20% and SD-based precision thresholds, the accuracy exceeded 90%. Single-dimension results are very promising. However, the joint dimension accuracies are lower. Setting a 10% precision threshold resulted in 17.8% accuracy, while the 20% and SD-based thresholds resulted in 54.9% and 59.9% accuracies, respectively. The observed accuracies are higher than for the reference mapping for the same dataset; however, these might still be insufficient for high precision requirements.

The proposed mapping matrix validation results on the NAWL-NAWL dataset are very high. For the dimension of valence, over 90% accuracy was obtained for all precision thresholds. For the dimension of arousal, over 74% accuracy was obtained for the 10% precision threshold, while, for the 20% and SD-based precision thresholds, the accuracy exceeded 97%. Single-dimension results are satisfactory. The joint dimension accuracies are slightly lower, but still exceed 97% for the 20% and SD-based precision thresholds. The observed accuracies are higher than for the reference mapping for the same dataset.

3.5. Cross-Set Accuracies for the Proposed Mapping

Additionally, cross-set validation was performed to evaluate generalizability, following the procedure described in Section 2.4. The results are presented in Table 10.

The cross-set validation results on the NAWL-NAWL dataset are moderate: higher than for the reference mapping, but lower than in cross-validation on the same dataset. This result was expected, as cross-validation procedures usually provide higher accuracies. For the dimension of valence, over 56% accuracy was obtained for the 10% precision threshold, while, for the 20% and SD-based precision thresholds, the accuracy exceeded 87%. For the dimension of arousal, over 50% accuracy was obtained for the 10% precision threshold, while, for the 20% and SD-based precision thresholds, the accuracy exceeded 84%. Single-dimension results are lower than in cross-validation, but still satisfactory for lower precision requirements. The joint dimension accuracies are lower. Setting a 10% precision threshold resulted in 30% accuracy, while the 20% and SD-based thresholds resulted in accuracies of about 81%. The observed accuracies are higher than for the reference mapping for the same dataset; however, for high precision requirements this might still be insufficient.

4. Summary of Results and Discussion

The mapping technique using the linear transformation based on a coefficient matrix was evaluated in this study. The results may be summarized in the following statements.

Obtaining both accurate and precise mapping results is a challenge. The results confirm standard observations of precision-accuracy conflict. The mapping model provided is better than a reference model, but still might be insufficient for high precision requirements. The summary of results is provided in Table 11.

In the SYNE-ANEW dataset, the accuracy results are better for the proposed mapping than for the reference mapping. The same applies to the NAWL-NAWL results. In the latter, the results in cross-validation are even better than for the ANEW-MEHR dataset. Interpretation of the cross-set evaluation results is limited because the datasets (and the obtained matrices) differed in dimension count (for one of the datasets, surprise and dominance were missing).

The proposed mapping matrices might be applied in the comparison and fusion of emotion recognition results; however, the required precision must be taken into account. Acceptable precision should be set based on the emotion recognition goals and application context, and especially on the significance of type I and type II errors. Using standard deviation as a threshold for precision is one of the promising directions.

The proposed method and metrics for accuracy proved useful and allowed the mapping technique to be evaluated. Note that the proposed metrics are:

- dataset-independent: might be applied to any dataset constructed

- scale-independent: due to setting precision thresholds as a percentage of the scale or SD-based

- model-dependent: they have been proposed specifically for the pleasure-arousal-dominance model; however, they might be adapted to any dimensional emotion representation model

- ambiguity-robust: valid for metrics based on the standard deviation threshold: MSD, PADSD

The author acknowledges that this study is not free from some limitations. The most important threats to its validity are listed below.

- (1)

Only three mapping matrices were explored in detail. Perhaps more models might be proposed or retrieved from the datasets available. A comparison of the mapping accuracies obtained from a different mapping model and the same datasets would provide more insight.

- (2)

The 10%, 20% and SD measures for threshold were chosen arbitrarily. Other thresholds might be considered for other contexts and input channels. The required precision is dependent on the context of application. Although 20% of the scale might seem a broad precision margin, in most cases, it might be sufficient, as frequently only two classes of emotions are analyzed (e.g., a stress and no-stress condition). Our previous study showed, for example, that for word annotations an intra-rater inconsistency of up to 15% of the scale was encountered in 89.1%, 74.3% and 79.4% of the ratings for valence, arousal and dominance, respectively [35]. Some inconsistency in annotations with affect is inevitable due to the ambiguous nature of emotion; therefore, in this study we decided to report results for 10% as well as 20% of the scale, followed by the standard-deviation-based metric.

- (3)

The evaluation is based only on sets retrieved from affect-annotated lexicons. It is expected that the results might be different for sets based on alternative input channels. However, training a mapping model requires a double annotation of some media (the same word/image/sound/video being assigned values in two emotion representation models). The first challenge in this research was to actually find datasets that are annotated twice, using two different emotion representation models, and this was the case only for the presented lexicons. Basing the mapping on lexicons only might limit the generalizability of the obtained solution, but currently there are no other twice-annotated datasets available.

The new matrices were obtained using the 10-fold cross-validation method for a regression model. Cross-validation, although well-established, is also reported as having both advantages and disadvantages. More recent reviews suggest that traditional cross-validation needs to be supplemented with other studies [36]. Therefore, in this study, we report both cross-validation and cross-set evaluation results.
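The k-fold procedure mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the study: the coefficient matrix is assumed to be fitted by ordinary least squares without an intercept, folds are assigned by interleaving rather than by random shuffling, and the held-out score is the joint accuracy within a fixed tolerance. The helper names, the matrix `true_w` and the synthetic data are hypothetical.

```python
import random


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    aug = [list(ra) + list(rb) for ra, rb in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * p for v, p in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_matrix(x, y):
    """Least-squares W with y ~ x @ W, via the normal equations."""
    k, m = len(x[0]), len(y[0])
    xtx = [[sum(r[i] * r[j] for r in x) for j in range(k)] for i in range(k)]
    xty = [[sum(rx[i] * ry[j] for rx, ry in zip(x, y)) for j in range(m)]
           for i in range(k)]
    return solve(xtx, xty)


def predict(w, row):
    """Apply the coefficient matrix to one input vector."""
    return [sum(row[i] * w[i][j] for i in range(len(w)))
            for j in range(len(w[0]))]


def cross_validate(x, y, k, tolerance):
    """Return the joint accuracy on the held-out part of each of k folds."""
    n = len(x)
    scores = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))  # interleaved fold assignment
        train = [i for i in range(n) if i not in test_idx]
        w = fit_matrix([x[i] for i in train], [y[i] for i in train])
        hits = sum(
            all(abs(p - t) <= tolerance
                for p, t in zip(predict(w, x[i]), y[i]))
            for i in test_idx
        )
        scores.append(hits / len(test_idx))
    return scores


# Synthetic, noise-free toy data (assumed): 3 input intensities, 2 outputs.
rng = random.Random(0)
true_w = [[1.0, -0.5], [0.5, 2.0], [-1.0, 0.25]]
x = [[rng.random() for _ in range(3)] for _ in range(40)]
y = [predict(true_w, row) for row in x]
scores = cross_validate(x, y, k=10, tolerance=0.1)
print(scores)  # noise-free data, so every fold scores 1.0
```

With real annotations the per-fold scores would vary, and cross-set evaluation would replace the held-out fold with items from a different dataset.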

The purpose of the paper was to propose a method and a set of metrics for the evaluation of the mapping accuracy and to propose a new mapping according to the procedure. Despite the limitations, the purpose was achieved: the new mapping was formed, and the thesis that the proposed metric set allows for the evaluation of emotion model mapping accuracy might be accepted.

Implications of the study include the following:

- (1)

Affective state recognition algorithms provide estimates that might be wrong or imprecise. Additionally, any mapping between the emotion representation models might increase the uncertainty related to emotion recognition, as every mapping has a non-zero error. Therefore, it is always worth considering an emotion recognition solution that provides its estimate directly in the representation model that best fits your application context.

- (2)

The new mapping matrices might be applied when you need emotions described with a PA or PAD model and get a vector of Ekman’s six basic emotions from the affect recognition system. The mapping might also be found useful in a late fusion of hypotheses on emotional states obtained from multiple affect recognition algorithms. In this study, two matrices were proposed (Equations (13) and (14)) and it is important to emphasize the differences between them. The first one was derived from a smaller dataset, and is therefore less accurate, but it applies to a complete list of dimensions. However, if you expect dominance and surprise not to play an important role in your application context, you might consider the latter one, as it was derived from a bigger dataset and therefore achieved higher accuracies for all precision thresholds.

- (3)

One of the crucial issues in applying the mapping is setting a precision threshold, which might be context-dependent. A comparison of the metrics used in this study showed that using standard deviation as a threshold might be advisable in some contexts, as it takes into account the ambiguity of the annotated words.

- (4)

The proposed procedure and metrics were validated and proven useful in the study of mapping between the emotion representation models. The metrics might also prove useful in a comparison of the two annotations. The datasets developed for this study will be shared. The procedure, metrics and datasets set a framework for future works on mappings between the emotion representation models.
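Implication (2) can be illustrated with a short sketch of applying a coefficient matrix to a vector of basic-emotion intensities and then fusing the result with a second estimate. The coefficients below are hypothetical placeholders chosen for readability; they are not the values of Equations (13) or (14). The emotion ordering, the function names and the use of a plain average as the late-fusion rule are likewise assumptions made for this example.

```python
# Assumed ordering of the six basic emotions in the input vector.
EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise")

# HYPOTHETICAL placeholder coefficients, NOT the matrices from the paper.
# One row per output dimension, one column per basic emotion.
PLACEHOLDER_MATRIX = [
    [-0.5, -0.5, -0.5, 1.0, -0.5, 0.5],  # pleasure
    [0.5, 0.0, 0.5, 0.5, -0.5, 1.0],     # arousal
    [0.5, 0.0, -0.5, 0.5, -0.5, 0.0],    # dominance
]


def map_to_pad(matrix, intensities):
    """Linear mapping: each output is a weighted sum of the intensities."""
    return [sum(c * v for c, v in zip(row, intensities)) for row in matrix]


def fuse(estimates):
    """Late fusion by plain averaging of estimates in the same model."""
    n = len(estimates)
    return [sum(values) / n for values in zip(*estimates)]


# A recognizer reporting pure happiness, mapped into the PAD space.
face_pad = map_to_pad(PLACEHOLDER_MATRIX, [0, 0, 0, 1, 0, 0])
# A second recognizer that already reports PAD values (toy numbers).
voice_pad = [0.5, 0.5, 0.0]

fused = fuse([face_pad, voice_pad])
print(face_pad)  # [1.0, 0.5, 0.5]
print(fused)     # [0.75, 0.5, 0.25]
```

In practice the placeholder matrix would be replaced by one of the proposed matrices, and the fusion rule could weight each source by the accuracy observed for it.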