4.2.1. Experimental Setups

● Rotation stage

We implemented an experiment in which an object was attached to a motorized rotation stage (CR1-Z7) controlled by a DC motor controller (TDC001), both from Thorlabs. The stage provides continuous 360° travel, with a wobble and a repeatability of less than 2 arcsec and 1 arcmin, respectively. We set a trajectory consisting of a 5° rotation, with a maximum angular acceleration αm = 0.5 deg/s² and a maximum angular velocity ωm = 1 deg/s. The motion follows a trapezoidal velocity profile: the stage is accelerated at a constant rate up to the maximum velocity, moves at that velocity, and, as the destination is approached, is decelerated so that the final position is reached slowly.
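The nominal timing of this trapezoidal profile can be checked by integrating it in closed form. The Python sketch below assumes an ideal symmetric profile that starts at t = 0 and ignores any controller-specific smoothing. The function names are hypothetical. With the stated parameters (5°, 0.5 deg/s², 1 deg/s), the profile has three phases:

- 2 s of acceleration, covering 1°;
- 3 s at constant velocity, covering 3°;
- 2 s of deceleration, covering the final 1°.

```python
import math

def trapezoid_phases(total_deg, alpha_max, omega_max):
    """Durations (t_acc, t_const, t_dec) of an ideal symmetric trapezoidal profile."""
    t_acc = omega_max / alpha_max               # time needed to reach omega_max
    theta_acc = 0.5 * alpha_max * t_acc ** 2    # angle covered while accelerating
    if 2 * theta_acc > total_deg:
        # Move too short to reach omega_max: the profile degenerates to a triangle.
        t_acc = math.sqrt(total_deg / alpha_max)
        return t_acc, 0.0, t_acc
    t_const = (total_deg - 2 * theta_acc) / omega_max
    return t_acc, t_const, t_acc

def trapezoid_angle(t, total_deg, alpha_max, omega_max):
    """Angular position (deg) at time t (s) for the ideal profile starting at t = 0."""
    t_acc, t_const, t_dec = trapezoid_phases(total_deg, alpha_max, omega_max)
    omega_peak = alpha_max * t_acc              # equals omega_max unless triangular
    theta_acc = 0.5 * alpha_max * t_acc ** 2
    t_end = t_acc + t_const + t_dec
    if t <= 0:
        return 0.0
    if t <= t_acc:                              # uniformly accelerated phase
        return 0.5 * alpha_max * t ** 2
    if t <= t_acc + t_const:                    # constant-velocity phase
        return theta_acc + omega_peak * (t - t_acc)
    if t <= t_end:                              # uniformly decelerated phase
        tau = t_end - t                         # time remaining until stop
        return total_deg - 0.5 * alpha_max * tau ** 2
    return total_deg                            # stage at rest at the target

print(trapezoid_phases(5.0, 0.5, 1.0))  # (2.0, 3.0, 2.0)
```

For a move too short to reach ωm, the sketch falls back to a triangular profile with no constant-velocity phase.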

The object was a sheet with a calibrated grid and the printed logo of our University. The grid was used to compute the pixel-to-millimetre ratio. The video sequence was recorded with a Nikon 5100 camera and a Nikkor 18–55 objective, at 25 fps and a resolution of 1920 × 1080 px, placed 1 m from the object. This provided a spatial resolution of 4.4 px/mm. The whole scene was illuminated with a halogen projector.
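The pixel-to-millimetre ratio follows from the grid: it is the mean pixel distance between consecutive grid lines divided by their known spacing. This is a minimal sketch. The grid-line positions and the 10 mm spacing in the usage line are hypothetical values, chosen only to reproduce 4.4 px/mm.

```python
def px_per_mm(line_positions_px, spacing_mm):
    """Mean pixel gap between consecutive grid lines divided by their known spacing (mm)."""
    if len(line_positions_px) < 2:
        raise ValueError("need at least two grid lines")
    pos = sorted(line_positions_px)
    gaps = [b - a for a, b in zip(pos, pos[1:])]
    return (sum(gaps) / len(gaps)) / spacing_mm

# Hypothetical grid-line columns (px) for a 10 mm grid pitch.
ratio = px_per_mm([0, 44, 88, 132], 10.0)
print(ratio)  # 4.4
```

At 4.4 px/mm, one pixel spans roughly 0.23 mm in the object plane.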

● Mechanical torsion and bending in a beam

We implemented another experiment aimed at measuring the torsion and bending of a cantilever steel beam with a 50 × 25 × 4 mm U cross-section and a length of 775 mm. Figure 6 shows a scheme of the experimental setup. A perpendicular plate is welded to the free end. Different weights can be hung from a hole in that plate located 296.5 mm from point O; in our case, we used a weight w = 5 N. A displacement measurer was placed over the plate at xD = 200 mm from point O in the X direction. It measures the vertical displacement resulting from the combined torsion and bending of the system. We used two Basler AC800-510uc video cameras with 25 mm Pentax TV lenses, recording at 300 frames per second (fps). One camera captured the displacement measurer and the other recorded the profile of the U-shaped beam, specifically point A (0, −25) mm. The spatial resolution obtained with the latter camera was 9.5 px/mm.

The bending and torsion of the experimental structure caused by a load of known weight w can be theoretically characterized using the moment-area theorems [17]. From these theorems, we obtain three quantities for point D, following:

- the vertical displacement due to bending in the U cross-section beam;
- the vertical displacement due to bending in the perpendicular plate;
- the torsional angle of the beam cross-section.

In these expressions, all distances are in millimetres, w is in newtons, and the torsional angle is in radians. In our case, we obtained … and … . Due to the eccentric load, the section rotates around its shear center S, located at xS = 6.56 mm. Because of this rotation of the U section, all points undergo an additional displacement, given by

where … and … are the distance of a point (x, y) to the shear center, and γ indicates the direction from S to the point.
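Equation (9) is not reproduced here, but the geometry it describes can be sketched with a rigid-rotation model. A point at distance r from S in direction γ, rotated by θ about S, moves by:

- r[cos(γ + θ) − cos γ] in x;
- r[sin(γ + θ) − sin γ] in y.

The sketch below rests on three assumptions. It takes θ positive counter-clockwise, places S at (xS, 0) (the yS = 0 coordinate is our assumption), and uses a rotation angle in the usage line that is hypothetical rather than measured.

```python
import math

def rotation_displacement(point, shear_center, theta):
    """Displacement (dx, dy) of `point` when the section rotates rigidly by
    `theta` radians (counter-clockwise assumed positive) about `shear_center`."""
    rx = point[0] - shear_center[0]
    ry = point[1] - shear_center[1]
    r = math.hypot(rx, ry)          # distance from S to the point
    gamma = math.atan2(ry, rx)      # direction from S to the point
    dx = r * (math.cos(gamma + theta) - math.cos(gamma))
    dy = r * (math.sin(gamma + theta) - math.sin(gamma))
    return dx, dy

A = (0.0, -25.0)   # point A (mm) from the setup description
S = (6.56, 0.0)    # shear center; y_S = 0 is an assumption
dx, dy = rotation_displacement(A, S, theta=0.02)  # hypothetical angle (rad)
print(round(dx, 3), round(dy, 3))  # 0.501 -0.126
```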

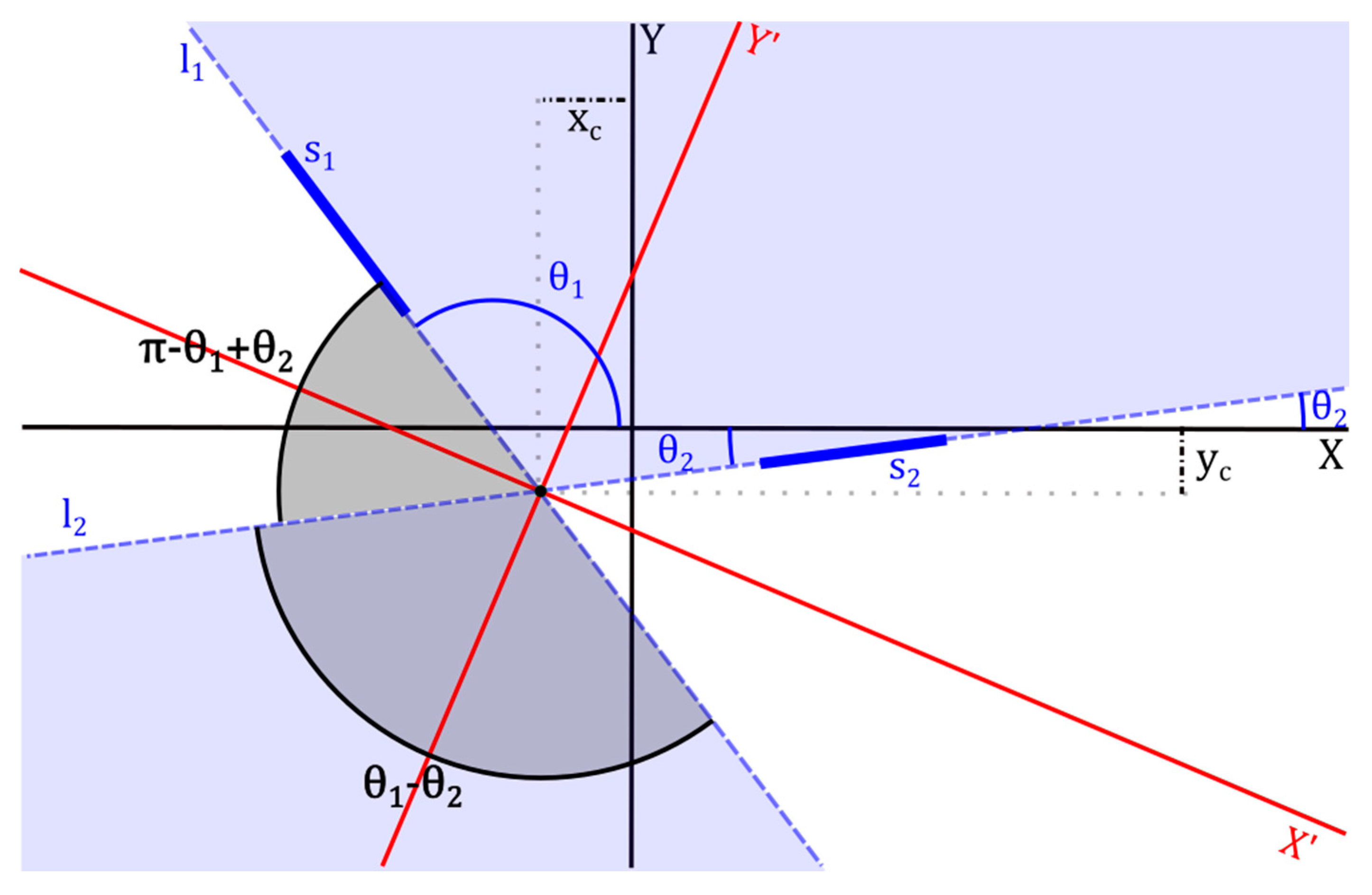

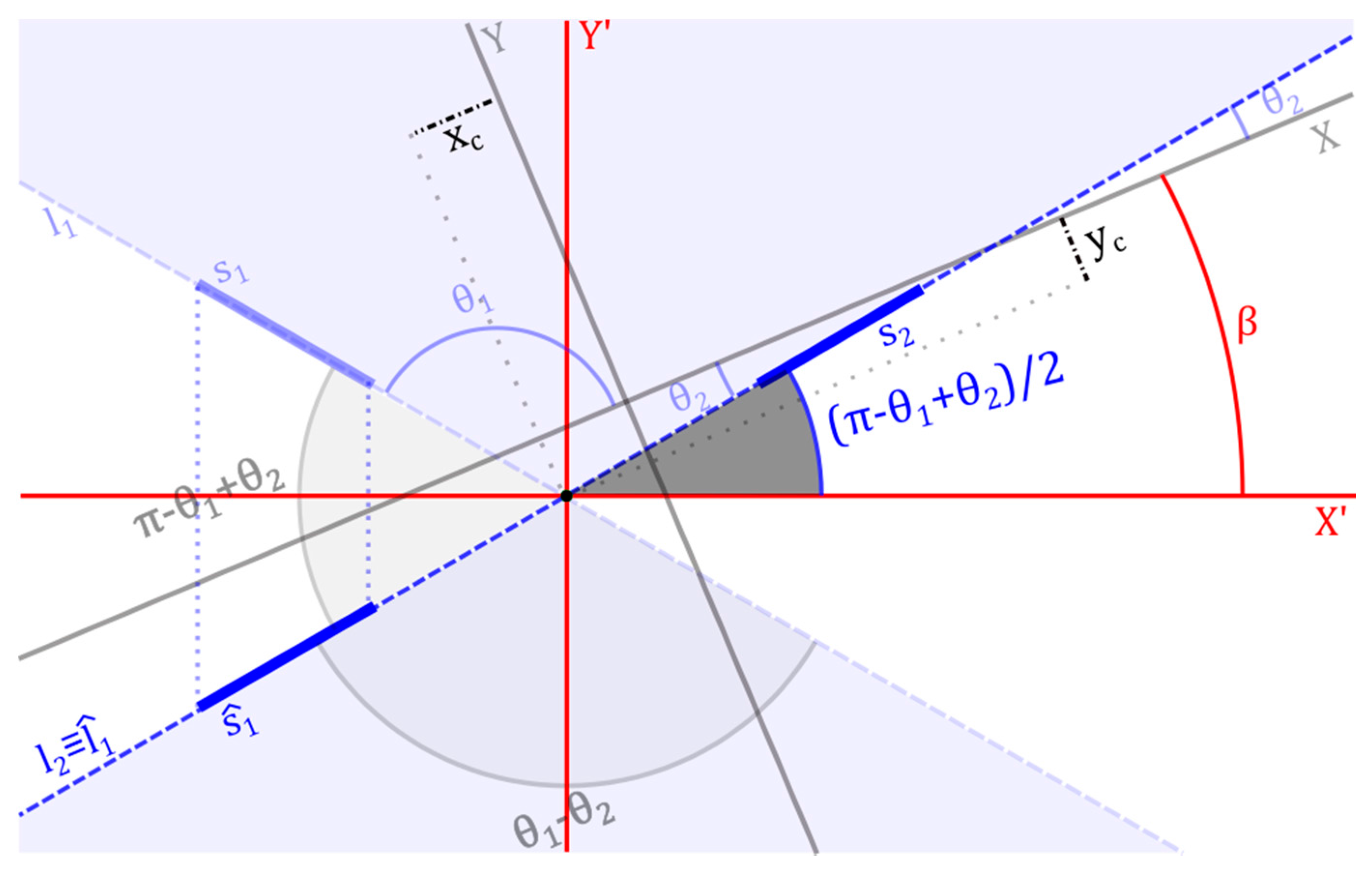

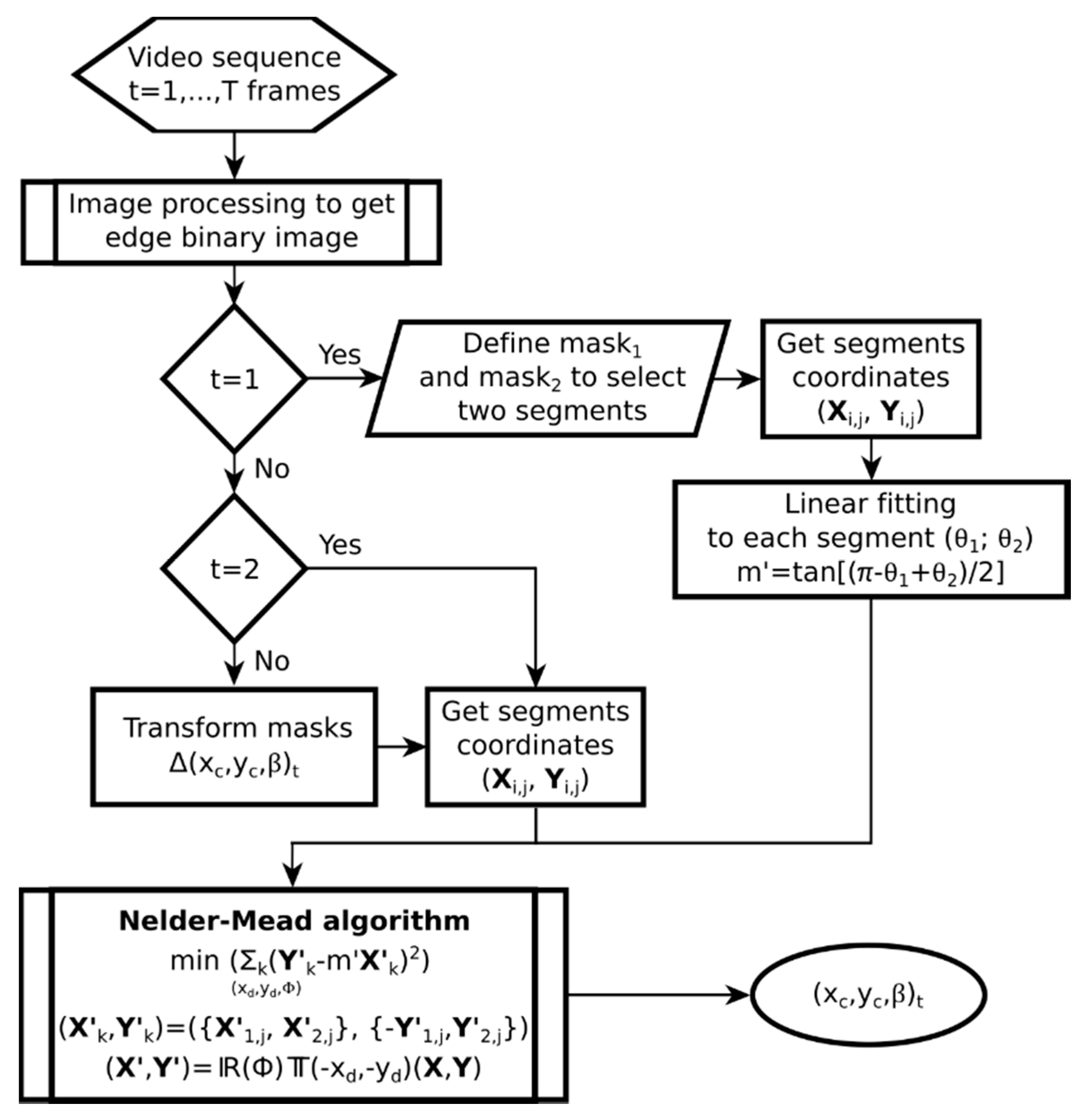

4.2.2. Video Processing and Object Tracking Calculation

The video sequences were processed offline using our own algorithm, implemented in Matlab, as explained in the Method section.

● Rotation stage

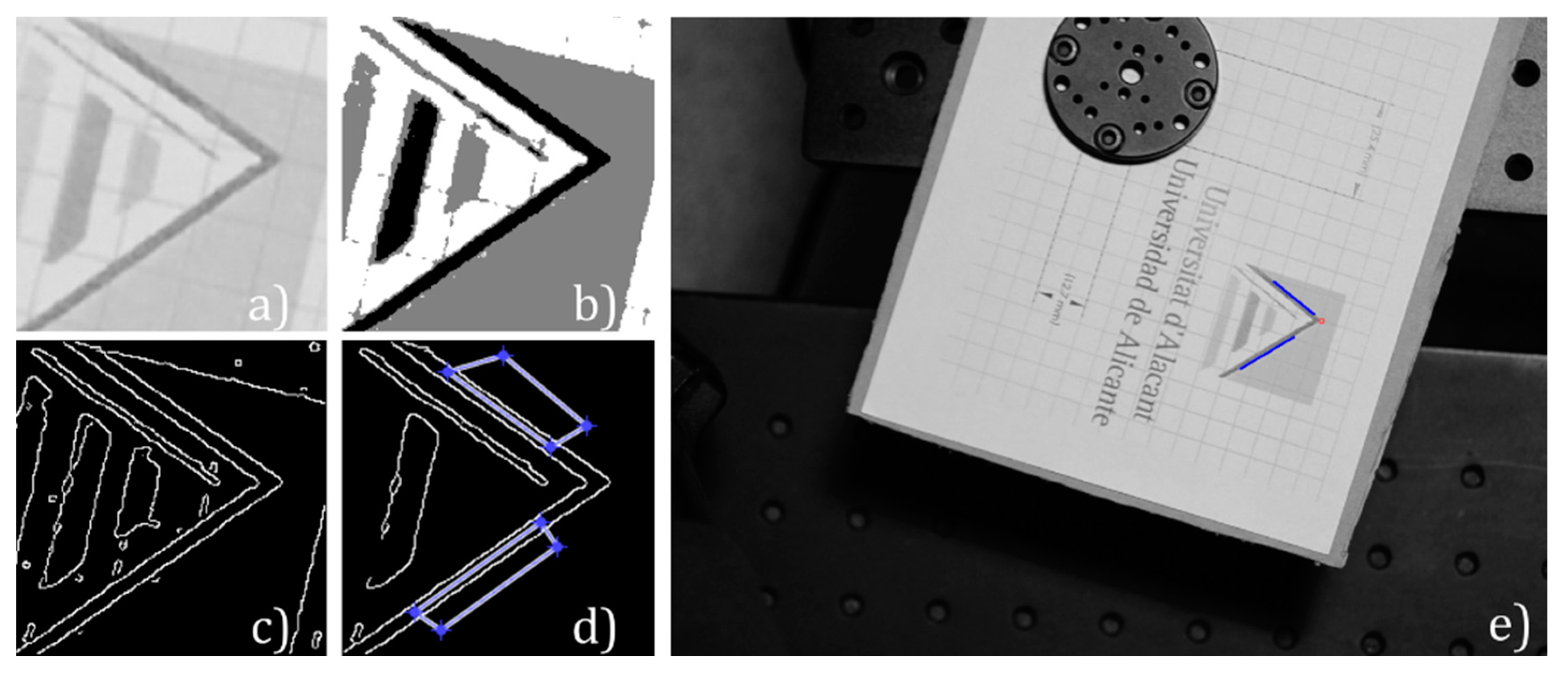

Figure 7 shows the images resulting from the main stages of the image-processing algorithm used in the rotation stage experiment. The logo of our University was the object to be tracked. The processing steps were as follows:

1. From each frame, we cropped a region where we expected to extract two nonparallel segments (Figure 7a).
2. We quantized the image using two quantization levels (Figure 7b).
3. We extracted the edges (Figure 7c) using the Canny method [18].
4. We applied a binary opening filter to remove objects smaller than 200 px, thus avoiding undesired interference.
5. We manually selected two regions of the processed image containing the two nonparallel segments s1 and s2 (Figure 7d), and obtained the coordinates of both segments from these regions.

The two masks were then automatically transformed from frame to frame following the relative movement, and we computed a location vector for each frame of the sequence.

Figure 7e shows a frame of the sequence with the selected segments (blue) and the computed intersection (red square).
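Once the edge pixels of s1 and s2 are available, a location vector can be derived from their intersection and bisector. The sketch below is a simpler closed-form stand-in, not the minimization method described in the Method section. It works in three steps:

1. Fit each segment with a total-least-squares line.
2. Intersect the two lines.
3. Take β as the mean of the two line orientations, which gives one of the two possible bisectors.

Coordinates are assumed to be (x, y) pixel positions, and the function names are hypothetical.

```python
import math

def fit_line(points):
    """Total-least-squares line through (x, y) points: (centroid, unit direction)."""
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    sxx = sum((p[0] - cx) ** 2 for p in points)
    syy = sum((p[1] - cy) ** 2 for p in points)
    sxy = sum((p[0] - cx) * (p[1] - cy) for p in points)
    phi = 0.5 * math.atan2(2 * sxy, sxx - syy)   # orientation of the principal axis
    return (cx, cy), (math.cos(phi), math.sin(phi))

def location_vector(seg1, seg2):
    """(xc, yc, beta): intersection of the two fitted lines and a bisector angle (rad)."""
    (p1, d1), (p2, d2) = fit_line(seg1), fit_line(seg2)
    cross = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(cross) < 1e-12:
        raise ValueError("segments are (nearly) parallel")
    wx, wy = p2[0] - p1[0], p2[1] - p1[1]
    t = (wx * d2[1] - wy * d2[0]) / cross         # parameter along line 1
    xc, yc = p1[0] + t * d1[0], p1[1] + t * d1[1]
    beta = (math.atan2(d1[1], d1[0]) + math.atan2(d2[1], d2[0])) / 2
    return xc, yc, beta

# Two perpendicular segments meeting at the origin: beta is pi/4.
print(location_vector([(1, 0), (2, 0), (3, 0)], [(0, 1), (0, 2), (0, 3)]))
```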

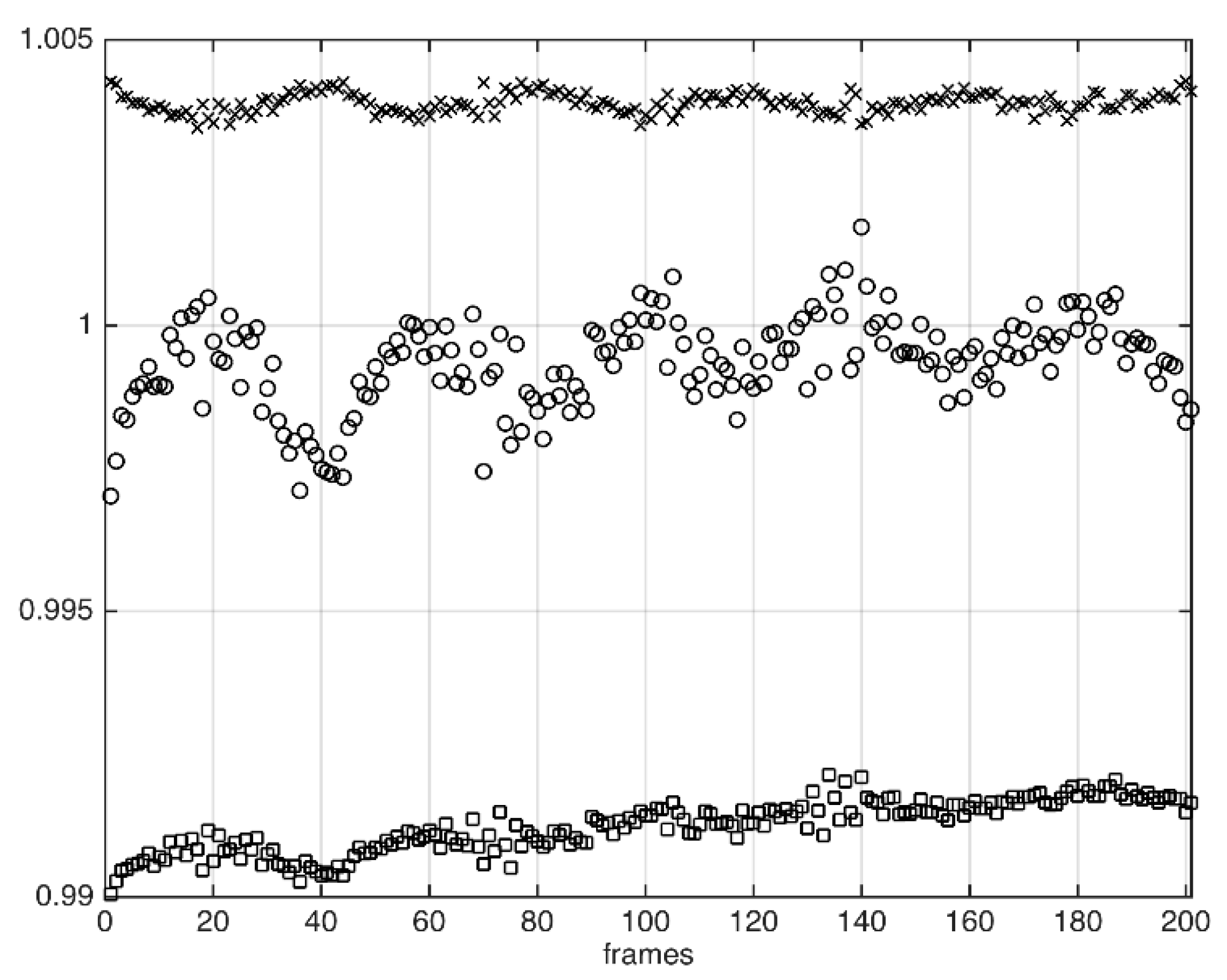

Figure 8 presents the variation in time of the angle β of the computed location vector throughout the video. Since we could not compare the position with a theoretical value, we only evaluated the rotation stage parameters provided by the manufacturer. As stated above, the trajectory was a 5° rotation, in agreement with the registered rotation. The start and end of the curve correspond to the time intervals when the object is stationary. Next to them, one can see the two end zones where the stage motor accelerates and decelerates, consistent with the trapezoidal velocity profile employed. Between these zones, the stage theoretically reaches a constant maximum angular velocity of 1 deg/s.

We approximately cropped these three zones of the curve, fitted them to the equations of uniformly accelerated and constant-velocity motion, and obtained significant correlations (p-value < 0.05). The three fits were as follows:

- Acceleration phase. The first interval runs from the start of the motor until it reached constant velocity at around t = 2 s. It was fitted assuming uniformly accelerated motion. The initial acceleration resulted in … deg/s², with a Pearson coefficient R = 0.89.
- Constant-velocity phase. Approximately between t = 2 s and t = 6 s, the curve was fitted to a straight line with R = 0.99, assuming constant angular velocity. The slope was 0.978 ± 0.004 deg/s, which deviates by 2% from the theoretical maximum, and the fitted time parameter was … s. At this instant, the angle in the uniformly accelerated phase would be … degrees.
- Deceleration phase. We assumed that the rotation stage decelerated uniformly and fitted the data from t = 6 s to the end of the curve, constraining the angular velocity to … deg/s. We obtained … deg/s², … s, and … degrees, with R = 0.94.
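The phase fits described above can be sketched with ordinary least squares. Because the exact fitting equations are not reproduced here, the sketch makes two modelling assumptions:

- the accelerated phase follows θ(t) = θ0 + ½·α·(t − t0)², which is linear in (t − t0)²;
- the constant-velocity phase follows θ(t) = θ0 + ω·t.

Each regression also returns its Pearson R. The function names and the start time t0 are hypothetical, and p-values are not computed.

```python
import math

def linear_fit(x, y):
    """Ordinary least squares y = a + b*x; returns (a, b, Pearson R)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = my - b * mx
    r = sxy / math.sqrt(sxx * syy)
    return a, b, r

def fit_accelerated(t, theta, t0=0.0):
    """Fit theta = theta0 + 0.5*alpha*(t - t0)**2 by regressing theta on (t - t0)**2."""
    u = [(ti - t0) ** 2 for ti in t]
    theta0, half_alpha, r = linear_fit(u, theta)
    return theta0, 2 * half_alpha, r

# Synthetic check: theta = 0.25 t^2 implies alpha = 0.5 deg/s^2.
t = [0.0, 0.5, 1.0, 1.5, 2.0]
print(fit_accelerated(t, [0.25 * ti ** 2 for ti in t]))
# Constant-velocity phase: the slope of linear_fit(t, theta) is omega.
print(linear_fit(t, [1.0 + 0.978 * ti for ti in t]))
```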

Therefore, the proposed method allowed us to obtain the angular acceleration and velocity with accuracies of hundredths of deg/s² and thousandths of deg/s, respectively, in addition to tracking the position of the object (see Visualization 2). Assuming a motion consisting of a constant-velocity phase between two uniformly accelerated ones, we were able to determine the instants and angular positions delimiting each motion phase.

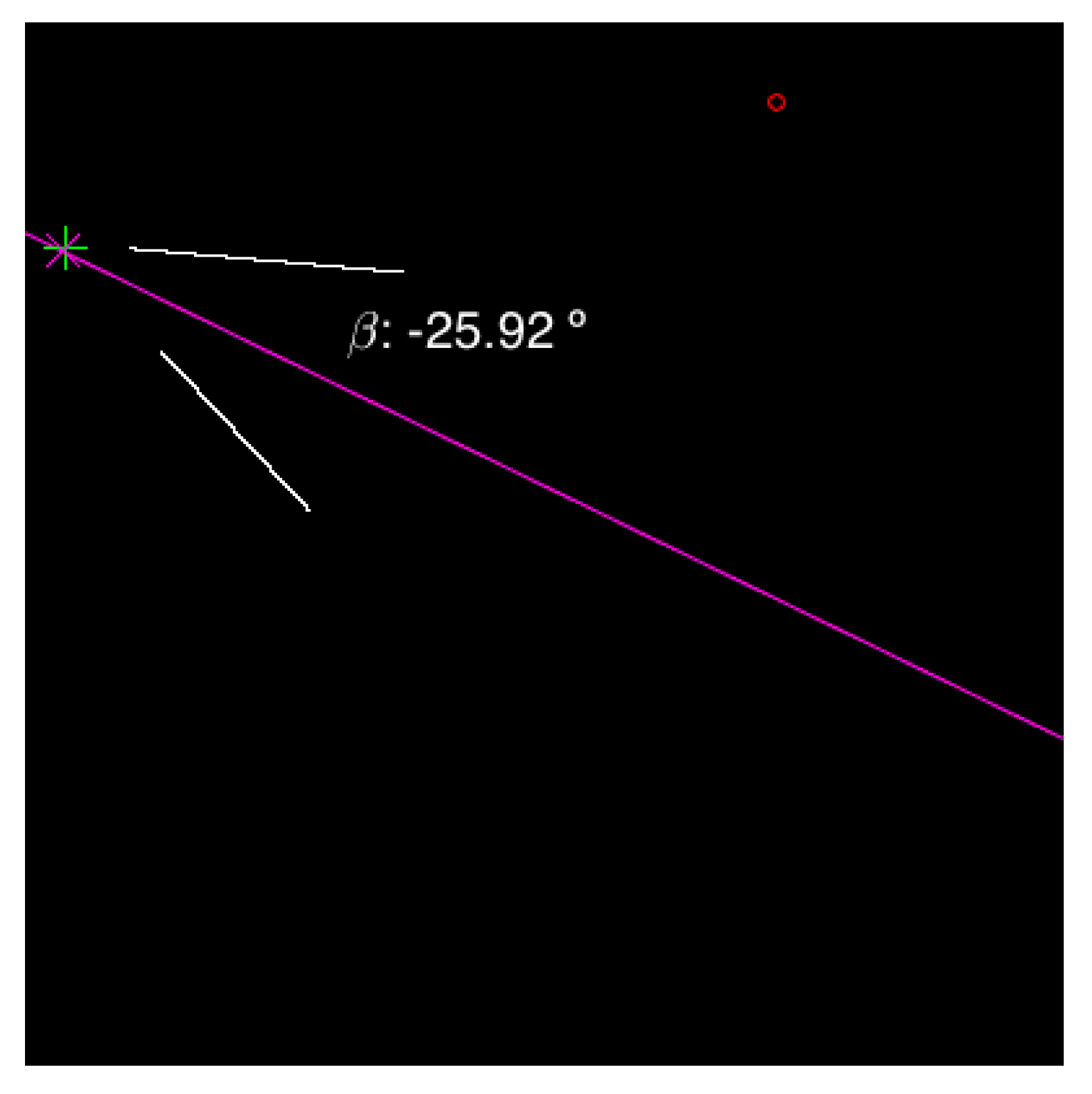

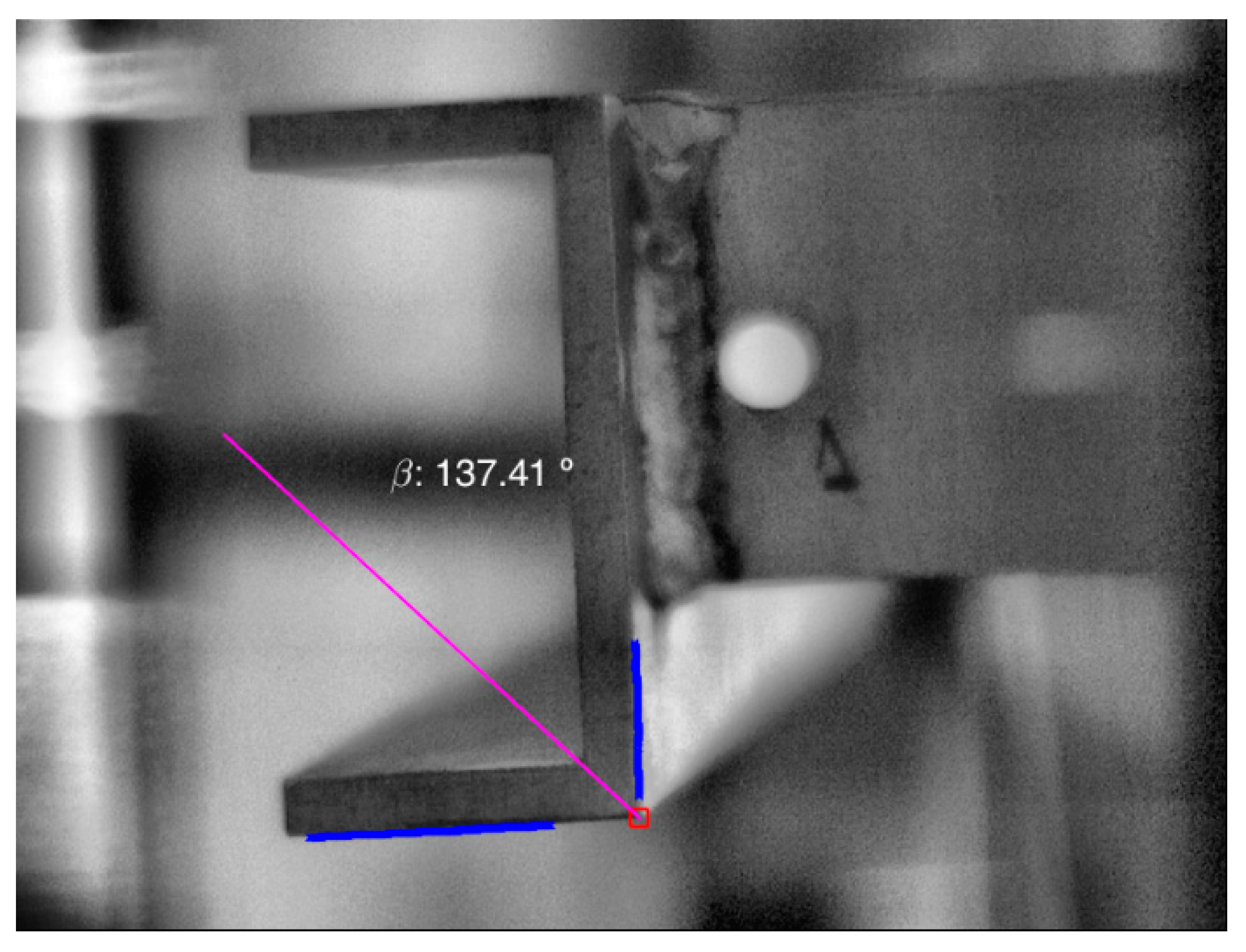

● Mechanical torsion and bending in a beam

In this experiment, we obtained two video sequences: one from the camera recording the beam and another from the camera recording the contact distance measurer. On the one hand, the processing of the first video again aims to obtain a binary edge image. The object to be tracked is the U-shaped beam profile. The processing steps were as follows:

1. From each frame, we cropped a region around point A, where we expected to extract two intersecting segments.
2. We equalized the histogram of the image to enhance contrast and binarized it.
3. We extracted the edges using the Canny method [18].
4. We applied an opening filter to remove small objects (those shorter than 200 px, in this case).
5. In the final processed image, we manually selected two regions containing the two nonparallel segments s1 and s2, and obtained the coordinates of both segments.

These regions were automatically transformed from frame to frame following the relative movement. With them, we computed the location vector (xc, yc, β) for each frame of the sequence using the presented minimization method. Figure 9 shows a frame of the sequence with the selected segments (blue), the computed intersection (red square), and the bisector (pink). The computed intersection coincided with the corner of the beam, as expected.
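The small-object removal used in both experiments can be sketched as an area opening. The binary edge image is labelled into 8-connected components, and components with fewer than a given number of pixels (200 px in the text) are discarded. Reading the "opening filter" this way is an assumption. In MATLAB, `bwareaopen` performs this operation, though the text does not say which function was used. The pure-Python version below works on a list-of-lists mask and is only meant for illustration.

```python
from collections import deque

def remove_small_objects(mask, min_size):
    """Copy of binary `mask` (list of lists of 0/1) without the 8-connected
    components that contain fewer than `min_size` pixels."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    seen = [[False] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if not mask[i][j] or seen[i][j]:
                continue
            # Breadth-first search collects one connected component.
            comp, queue = [], deque([(i, j)])
            seen[i][j] = True
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            if len(comp) < min_size:
                for y, x in comp:
                    out[y][x] = 0
    return out

edges = [[1, 1, 0, 0, 0, 0],
         [0, 0, 0, 1, 1, 1],
         [0, 0, 0, 1, 1, 0]]
print(remove_small_objects(edges, 3))  # the 2-pixel blob is removed
```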

In Table 1, we compare three sets of location vector parameters:

- those computed by separately solving (5);
- those obtained by applying the proposed minimization method;
- the theoretical values obtained from (8) and (9).

This allows us to compare the errors and deviations of both methods at point A with respect to the theoretical values. The torsional angle coincides with the variation of β. The value obtained with the proposed method is quite close to the theoretical one, with an absolute difference of 4 × 10⁻³ rad and a relative deviation of 18%. In contrast, the result obtained by solving (5) differed by around 0.02 rad, a relative deviation of 78%. The errors of the fittings in (5) probably accumulate, leading to a poor angle estimate, so we dismissed that approach.

Comparing the theory and the minimization method in terms of displacements, the deviation in the x-direction is similar to that of the angle. However, the deviation in the y-direction is considerably higher. Note that the displacement magnitudes are small, so the signal-to-noise ratio is low and the measurements are inaccurate, although the estimate is reasonable. Comparing the displacement values is of limited use, because these magnitudes are derived from the torsional angle through Equation (9) and are therefore more sensitive to errors in the theoretical calculations. Note also that the structure is not ideal (welds, holes, pieces not welded squarely, irregularities in the shape of the pieces, inhomogeneities in the material, …).

Besides the rotation and the total displacement of points once the applied load has stabilized, the proposed method allows these quantities to be measured instantaneously over time. This includes the period in which the load is being transferred from the operator's hand to the structure and the stresses and strains redistribute throughout it. The load was applied slowly enough to avoid an additional impact factor on the structure, but fast enough to minimize the transfer time from the operator's hand to the structure. Even so, the transfer took a short but finite time, so both effects (load transfer and stress-strain redistribution) are mixed in the time-resolved results.

Figure 10 shows (a) the x-direction and (b) the y-direction relative displacements of point A, and (c) the torsional angle, computed both by separately solving (5) and with the proposed minimization method for the video of Figure 9. Separately solving Equation (5) seems to work well at first; however, it suddenly provides an inaccurate solution from around 0.8 s, possibly because the errors of both fittings accumulate. The results provided by the minimization method show a smooth behaviour, which allows us to assess the dynamic process. Note that the accuracy of the method (0.01 mm, equivalent to 0.1 px) and the theoretical total displacement in the y-direction (0.07 mm) are of the same order. This is why the curve in Figure 10b looks noisier, and the results obtained for this displacement are therefore not reliable.

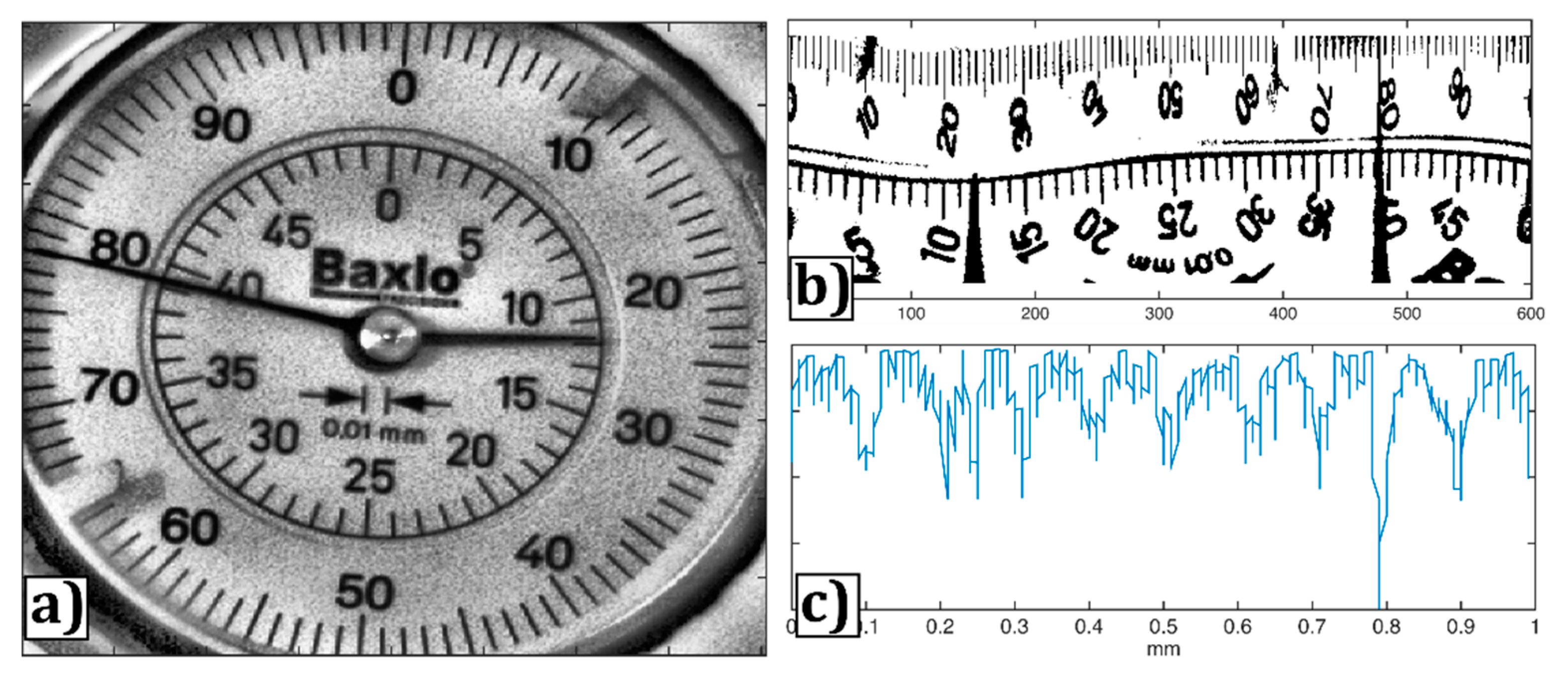

On the other hand, we processed the video from the camera recording the contact distance measurer (Figure 11a) to obtain the vertical displacement of the beam over time. This measurement serves as a reference for evaluating the results of the minimization method during the dynamic deflection process, so we compare it with the results of the proposed technique. The processing steps were as follows:

1. Each frame was cropped and binarized.
2. Each frame was transformed to polar coordinates around the centre of the dial (Figure 11b). The centre was manually selected in the first frame of the sequence.
3. In the polar-coordinate image, the column intensity sum is minimum where the needle is, so we located the column of minimum sum.
4. We quantized the column index to one hundred discrete levels between 0 and 1, corresponding to the hundredths of a millimetre resolved by the device (Figure 11c).
5. Each time the needle completed a whole lap, we added 1 mm.
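The dial-reading step can be sketched as follows. The sketch assumes the frame has already been cropped, binarized, and unwrapped to polar coordinates, with angle along the columns. The needle is the column of minimum intensity sum, its index is quantized to 100 levels, and laps are counted from wrap-around jumps. Detecting a lap as a jump of more than half a revolution between consecutive frames is an assumption: it requires the needle to move less than half a turn per frame. The function names are hypothetical.

```python
def needle_level(polar_img, levels=100):
    """Quantized needle position (0..levels-1) from a polar image given as rows
    (radius) x columns (angle); the needle is the column of minimum sum."""
    ncols = len(polar_img[0])
    sums = [sum(row[c] for row in polar_img) for c in range(ncols)]
    col = min(range(ncols), key=sums.__getitem__)
    return col * levels // ncols

def unwrap_readings(level_seq, levels=100):
    """Convert per-frame levels into mm, adding (or removing) 1 mm per full lap."""
    laps, prev, out = 0, None, []
    for lv in level_seq:
        if prev is not None:
            jump = lv - prev
            if jump < -levels // 2:      # wrapped forwards past zero
                laps += 1
            elif jump > levels // 2:     # wrapped backwards past zero
                laps -= 1
        out.append((laps * levels + lv) / levels)
        prev = lv
    return out

print(unwrap_readings([90, 95, 2, 10]))  # [0.9, 0.95, 1.02, 1.1]
```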

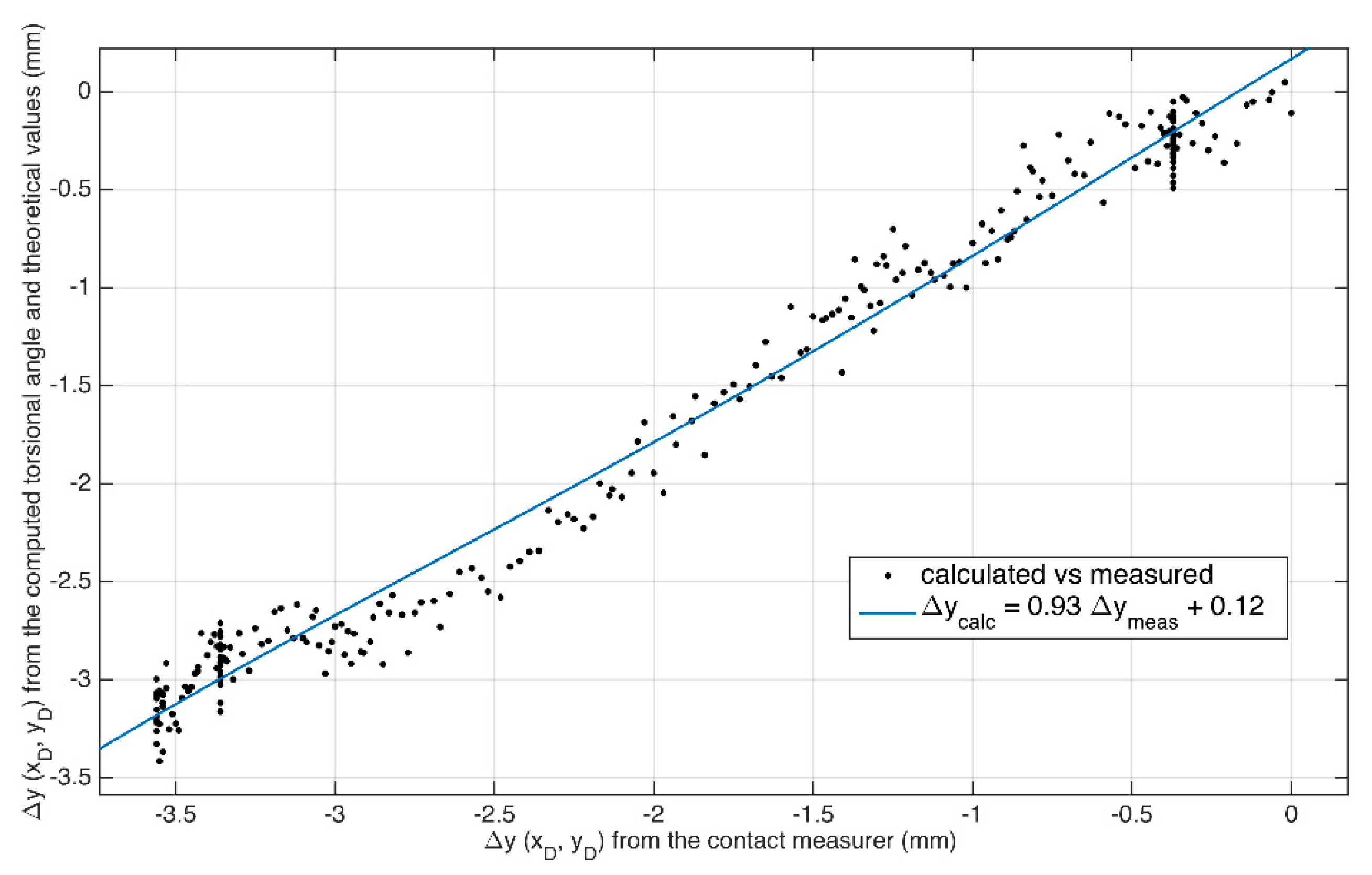

Figure 12 plots the displacement measured with the contact measurer at point D versus the torsional angle computed at point A from the orientation of the bisector obtained with the proposed method. These two quantities are related through (9), which can be simplified into:

We compared the contact measurer data with the angles computed with the proposed technique through Equation (10). We imposed the bending values, the positions, and the angle given by the theory, and computed the linear least-squares fit. The results of the fit, shown in Figure 12, have a p-value < 0.05, a root-mean-square error of 0.16 mm, and R = 0.99.
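Equation (10) is not reproduced here, so the following is only a hypothetical sketch of a least-squares fit in which theory fixes part of the model. It assumes a linear relation δD ≈ c + k·θA. The offset c is imposed (for instance, from the theoretical bending terms) and only the slope k is fitted. The function also returns the root-mean-square error used above to summarize the fit. The model form and names are assumptions.

```python
import math

def fit_slope_fixed_offset(theta, delta, offset):
    """Least-squares slope k for delta ~ offset + k*theta with `offset` imposed.
    Returns (k, rmse)."""
    den = sum(t * t for t in theta)
    if den == 0:
        raise ValueError("all angles are zero; slope is undetermined")
    k = sum(t * (d - offset) for t, d in zip(theta, delta)) / den
    residuals = [d - (offset + k * t) for t, d in zip(theta, delta)]
    rmse = math.sqrt(sum(r * r for r in residuals) / len(residuals))
    return k, rmse

# Synthetic data generated with offset -1 mm and slope -100 mm/rad.
print(fit_slope_fixed_offset([0.0, 0.01, 0.02], [-1.0, -2.0, -3.0], -1.0))
```

Fixing the offset reduces the fit to a single free parameter with a closed-form solution, k = Σθ(δ − c) / Σθ².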

Theoretically, the total deflection at point D is … . Again, this theoretical value differs from the measured values shown in Figure 12 (around −3.50 mm), which led us to question the accuracy of the parameters used in the theoretical calculations. Some inhomogeneities (density, shape, welds) in the steel pieces of the structure probably affect the moments of inertia. As a result, the position of the shear center and/or the torsional angle may not be accurately predicted.