Piecewise-Linear Frequency Shifting Algorithm for Frequency Resolution Enhancement in Digital Hearing Aids

Abstract

1. Introduction

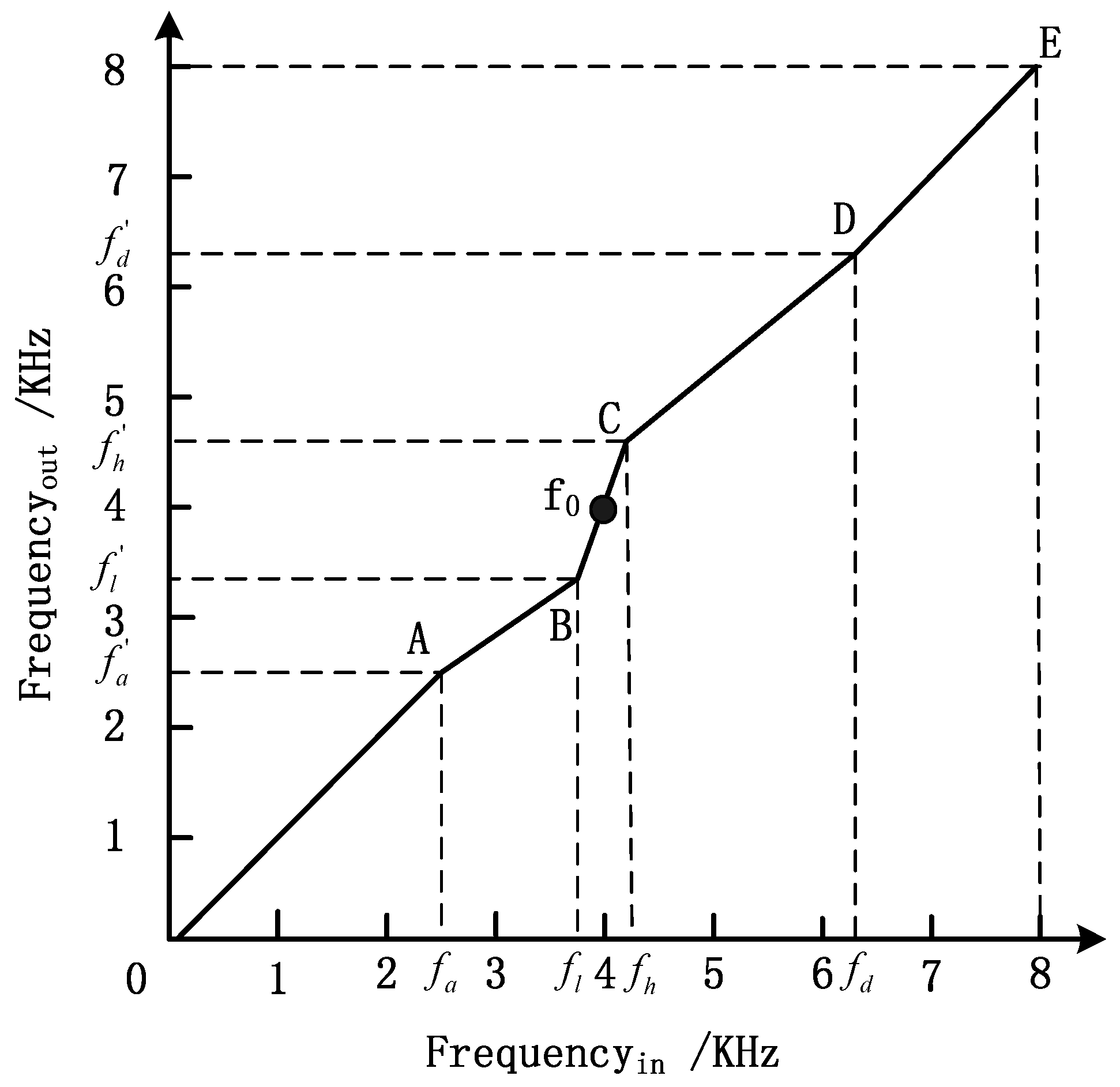

2. Piecewise-Linear Frequency Shifting Algorithm

3. Implementation of the Piecewise-Linear Frequency Shifting Algorithm

4. Experimental Results

4.1. Subjects and Their Pure Tone Audiograms

4.2. Measurements of the Frequency Discrimination

- Step 1

- The software plays an audio sequence at the testing frequency. The sequence consists of two 1-second pure tones separated by a 100 ms pause, i.e., “pure tone 1, pause, pure tone 2”. Pure tone 1 is the probe signal at the testing frequency, and pure tone 2 is either a tone at the offset frequency or a repetition of the probe signal.

- Step 2

- Each sequence is played once, and the listener may optionally trigger a second playback. The listener then decides whether the two tones are identical.

- Step 3

- The software adaptively adjusts the frequency offset according to whether the subject’s response is correct. The frequency offset values can be set as , with initial frequency offset , where is the frequency of the probe pure tone.

- Step 4

- When the subject has given N correct responses (N is configurable in the software), the frequency offset is halved; when the subject has given N incorrect responses, the frequency offset is doubled. Otherwise, the frequency offset is held constant and testing continues.

- Step 5

- When the offset has switched back and forth between the two adjacent values [Δf1 Δf2] M times, or the same offset has already been tested M times, the frequency discrimination threshold at the probe frequency is taken as the geometric mean of the last two adjacent offset values, √(Δf1·Δf2). The measurement then moves to the next probe frequency and returns to Step 1.
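The adaptive procedure in Steps 1 to 5 can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' test software. The exact offset ladder and initial-offset formula are missing from this copy, so `initial_offset_hz` is a hypothetical free parameter. "N correct indications" is read as N correct responses in a row, in the manner of transformed up-down staircases, and "tested M times" is read as M presentations of the same offset value. Tone 2 is assumed to lie above the probe, and `respond` is a hypothetical listener model that reports whether a same/different judgement was correct. `make_trial` builds the Step 1 stimulus without onset/offset ramps, which a real test would normally add.

```python
import math
from typing import Callable, Dict, List, Optional, Tuple


def make_trial(probe_hz: float, offset_hz: float, same: bool,
               fs: int = 16000) -> List[float]:
    """Step 1 stimulus: 1 s tone, 100 ms silence, 1 s tone (no ramps).

    Tone 2 repeats the probe when `same` is True; otherwise it sits at
    probe_hz + offset_hz (an upward offset is an assumption).
    """
    def tone(freq_hz: float) -> List[float]:
        return [math.sin(2 * math.pi * freq_hz * n / fs) for n in range(fs)]

    tone2_hz = probe_hz if same else probe_hz + offset_hz
    return tone(probe_hz) + [0.0] * (fs // 10) + tone(tone2_hz)


def staircase_threshold(
    probe_hz: float,
    respond: Callable[[float, float], bool],
    initial_offset_hz: float,
    n_required: int = 2,
    m_stop: int = 6,
    max_trials: int = 1000,
) -> Tuple[float, int]:
    """Steps 3-5: halve or double the offset; return (threshold_hz, trials).

    `respond(probe_hz, offset_hz)` is a hypothetical listener model that
    returns True when the same/different judgement is correct.
    """
    offset = initial_offset_hz
    prev_offset: Optional[float] = None  # offset before the latest change
    last_step = 0                        # -1 halved, +1 doubled, 0 none yet
    reversals = 0                        # back-and-forth switches so far
    tests_at: Dict[float, int] = {}      # presentations per offset value
    run_correct = run_wrong = 0
    trial = 0

    for trial in range(1, max_trials + 1):
        tested = offset
        tests_at[tested] = tests_at.get(tested, 0) + 1
        if respond(probe_hz, tested):
            run_correct, run_wrong = run_correct + 1, 0
        else:
            run_correct, run_wrong = 0, run_wrong + 1

        step = 0
        if run_correct == n_required:    # N correct in a row: halve
            step = -1
        elif run_wrong == n_required:    # N incorrect in a row: double
            step = 1
        if step:
            run_correct = run_wrong = 0
            if last_step and step != last_step:
                reversals += 1
            last_step = step
            prev_offset, offset = offset, offset * 2.0 ** step

        # Step 5 stopping rule (both readings are assumptions, see above).
        if reversals >= m_stop or tests_at[tested] >= m_stop:
            break

    if prev_offset is None:              # the offset never changed
        return offset, trial
    # Geometric mean of the last two adjacent offset values.
    return math.sqrt(prev_offset * offset), trial


if __name__ == "__main__":
    def ideal_listener(probe_hz: float, offset_hz: float) -> bool:
        # Hypothetical listener: correct whenever the offset is >= 100 Hz.
        return offset_hz >= 100.0

    threshold, trials = staircase_threshold(
        4000.0, ideal_listener, 256.0, n_required=1, m_stop=4)
    print(f"threshold ~ {threshold:.1f} Hz after {trials} trials")
```

With the ideal listener above and N = 1, M = 4, the offset steps from 256 to 128 to 64 Hz and then alternates between 64 and 128 Hz, so the sketch returns √(64·128) ≈ 90.5 Hz after 6 trials. Halving and doubling keep the offsets on a geometric ladder, which is why a geometric rather than arithmetic mean of the last two values is the natural estimate.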

4.3. The Performance of the Frequency Shifting Algorithm

4.4. Experiment Results of SDS for Monosyllabic Vocabulary

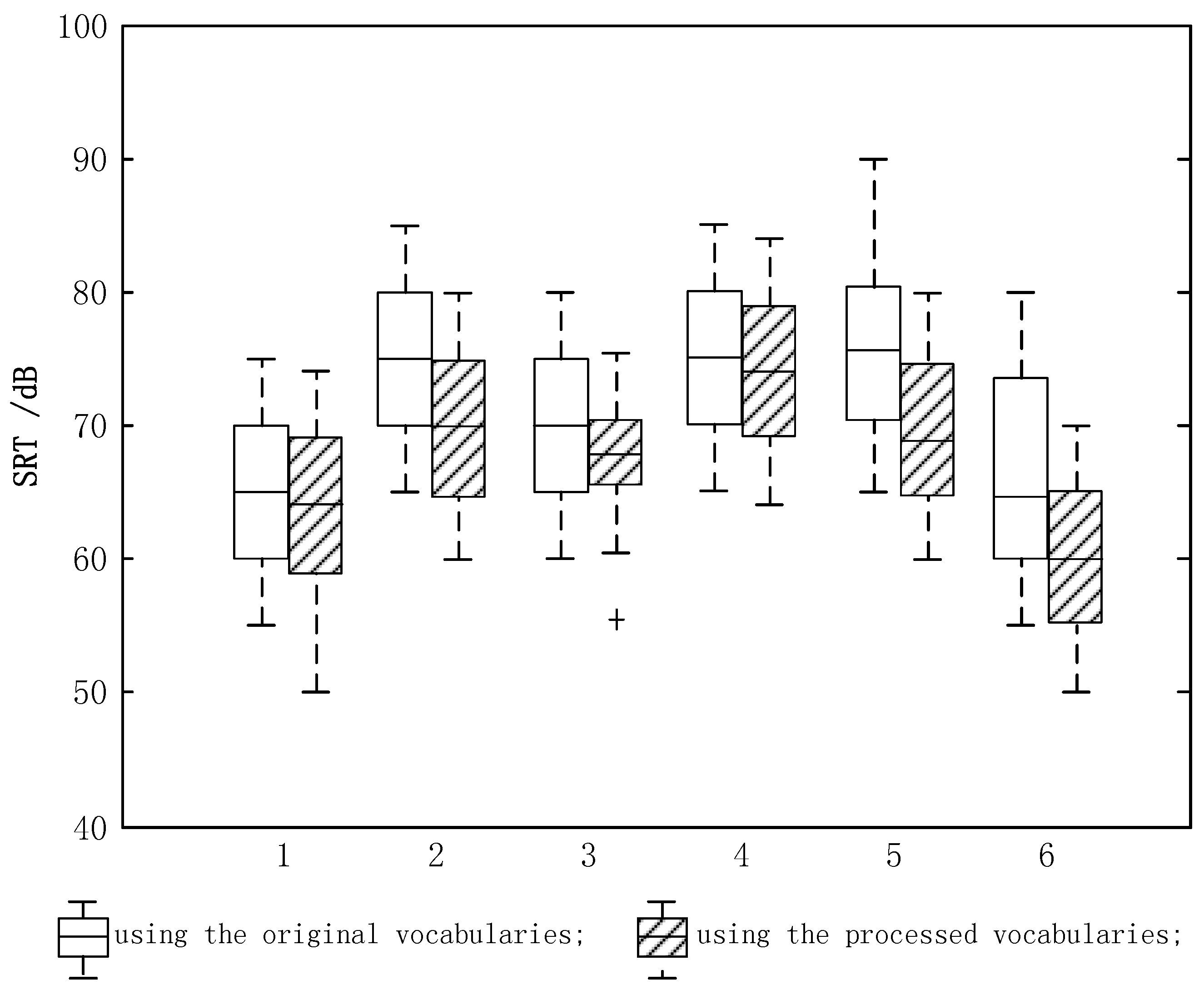

4.5. Speech Reception Threshold (SRT) Measurement Using Disyllabic Vocabulary in a Noisy Environment

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Subject | Age | Gender | Cause of Deafness | Duration of Deafness | Degree of Deafness | Hearing Aid Experience | Daily Wearing Time |
|---|---|---|---|---|---|---|---|
| S1 | 42 | Male | Noise-induced | 2 years | Moderate | 12 months | ≥8 h |
| S2 | 25 | Male | Hereditary | 1 year | Moderate | 3 months | 2–8 h |
| S3 | 47 | Male | Noise-induced | 4 years | Moderate | 12 months | ≥8 h |
| S4 | 60 | Female | Mixed | 5 years | Severe | 28 months | ≥8 h |
| S5 | 38 | Female | Noise-induced | 4 years | Severe | 20 months | ≥8 h |
| S6 | 40 | Female | Noise-induced | 4 years | Moderate | 24 months | 4–8 h |

| | S1 | S2 | S3 | S4 | S5 | S6 |
|---|---|---|---|---|---|---|
| Probe frequency/Hz | 4000 | 3000 | 4000 | 6000 | 4000 | 3000 |
| Frequency discrimination threshold | | | | | | |

| Subject | Frequency discrimination threshold, lower/Hz | Frequency discrimination threshold, upper/Hz | | | | The Number of Interpolated and Sampled |
|---|---|---|---|---|---|---|
| S1 | 3936 | 4064 | 4 | 0.95 | 0.95 | 24 |
| S2 | 2904 | 3096 | 3 | 0.94 | 0.96 | 24 |
| S3 | 3872 | 4128 | 3 | 0.94 | 0.94 | 32 |
| S4 | 5625 | 6375 | 2 | 0.93 | 0.81 | 48 |
| S5 | 3750 | 4250 | 2 | 0.93 | 0.93 | 32 |
| S6 | 2952 | 3048 | 4 | 0.97 | 0.98 | 18 |
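In the table above, each pair of /Hz values appears to sit symmetrically about the probe frequency listed for the same subject in the probe-frequency table; for S1, 3936 Hz and 4064 Hz lie 64 Hz either side of 4000 Hz. The short Python check below is a sketch that assumes the subject labels of the two tables line up. It recovers that half-width and expresses it relative to the probe. Reading the half-width as the measured discrimination offset Δf is an interpretation, not something this copy states.

```python
from typing import Dict, Tuple

# Values transcribed from the probe-frequency table and the bounds table.
PROBE_HZ: Dict[str, int] = {"S1": 4000, "S2": 3000, "S3": 4000,
                            "S4": 6000, "S5": 4000, "S6": 3000}
BOUNDS_HZ: Dict[str, Tuple[int, int]] = {
    "S1": (3936, 4064), "S2": (2904, 3096), "S3": (3872, 4128),
    "S4": (5625, 6375), "S5": (3750, 4250), "S6": (2952, 3048),
}


def implied_offset(subject: str) -> Tuple[float, float, bool]:
    """Return (half-width in Hz, half-width / probe, bounds centred on probe?)."""
    lo, hi = BOUNDS_HZ[subject]
    half_width = (hi - lo) / 2
    centred = (lo + hi) / 2 == PROBE_HZ[subject]
    return half_width, half_width / PROBE_HZ[subject], centred


for s in sorted(PROBE_HZ):
    df_hz, rel, centred = implied_offset(s)
    print(f"{s}: ±{df_hz:g} Hz ({rel:.2%} of probe), symmetric={centred}")
```

Under that reading, the relative thresholds come out at 1.6% of the probe frequency for S1 and S6, 3.2% for S2 and S3, and 6.25% for S4 and S5.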

| Frequency Range/Hz | Contribution Score % |
|---|---|
| | 2 |
| | 3 |
| | 35 |
| | 35 |
| | 13 |
| | 12 |

| Subject | SRT/dB (original) | SRT/dB (processed) |
|---|---|---|
| S1 | 46 | 42 |
| S2 | 62 | 54 |
| S3 | 57 | 54 |
| S4 | 65 | 62 |
| S5 | 71 | 67 |
| S6 | 49 | 45 |
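Because a lower SRT means a subject needs less level to reach the speech recognition criterion, the benefit of processing for each subject is the original SRT minus the processed SRT. The Python snippet below is a minimal sketch that uses only the values in the table. It computes those per-subject differences and their mean.

```python
from statistics import mean

# (original, processed) SRT in dB, transcribed from the table above.
SRT_DB = {"S1": (46, 42), "S2": (62, 54), "S3": (57, 54),
          "S4": (65, 62), "S5": (71, 67), "S6": (49, 45)}

# Positive values mean the processed condition reached the SRT at a lower level.
improvement_db = {s: orig - proc for s, (orig, proc) in SRT_DB.items()}
mean_improvement_db = mean(improvement_db.values())

print(improvement_db)
print(f"mean improvement: {mean_improvement_db:.2f} dB")
```

Every subject improves, by between 3 dB (S3, S4) and 8 dB (S2), and the mean improvement is 26/6 ≈ 4.3 dB.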

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, Q.; Liang, R.; Rahardja, S.; Zhao, L.; Zou, C.; Zhao, L. Piecewise-Linear Frequency Shifting Algorithm for Frequency Resolution Enhancement in Digital Hearing Aids. Appl. Sci. 2017, 7, 335. https://doi.org/10.3390/app7040335