A Photometric Stereo Using Re-Projected Images for Active Stereo Vision System

Abstract

1. Introduction

2. Related Works

2.1. Active Stereo Vision Using Structured Light

2.2. Photometric Stereo

2.3. Data Combining Method

2.4. Previous Works

3. Our Approach

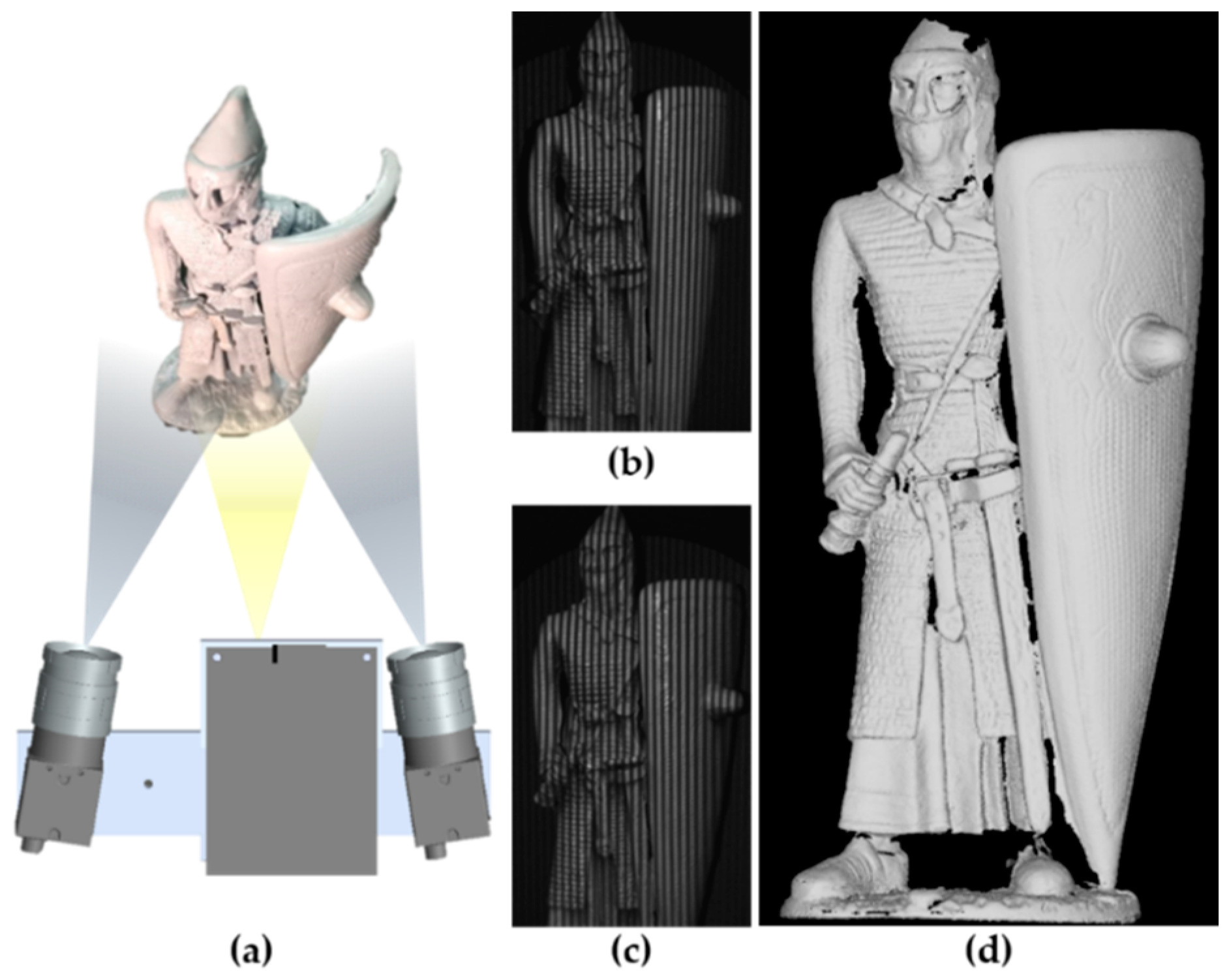

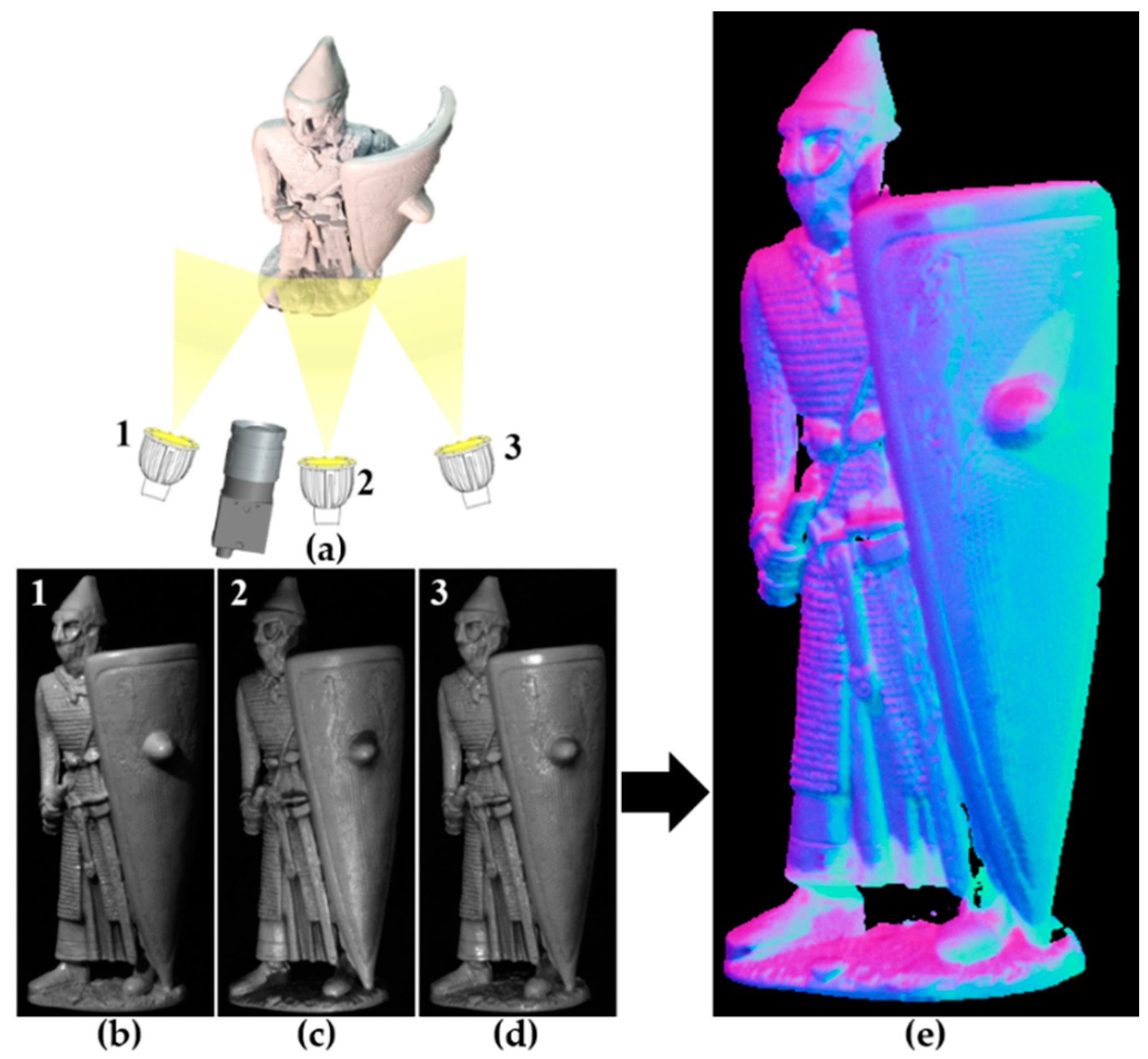

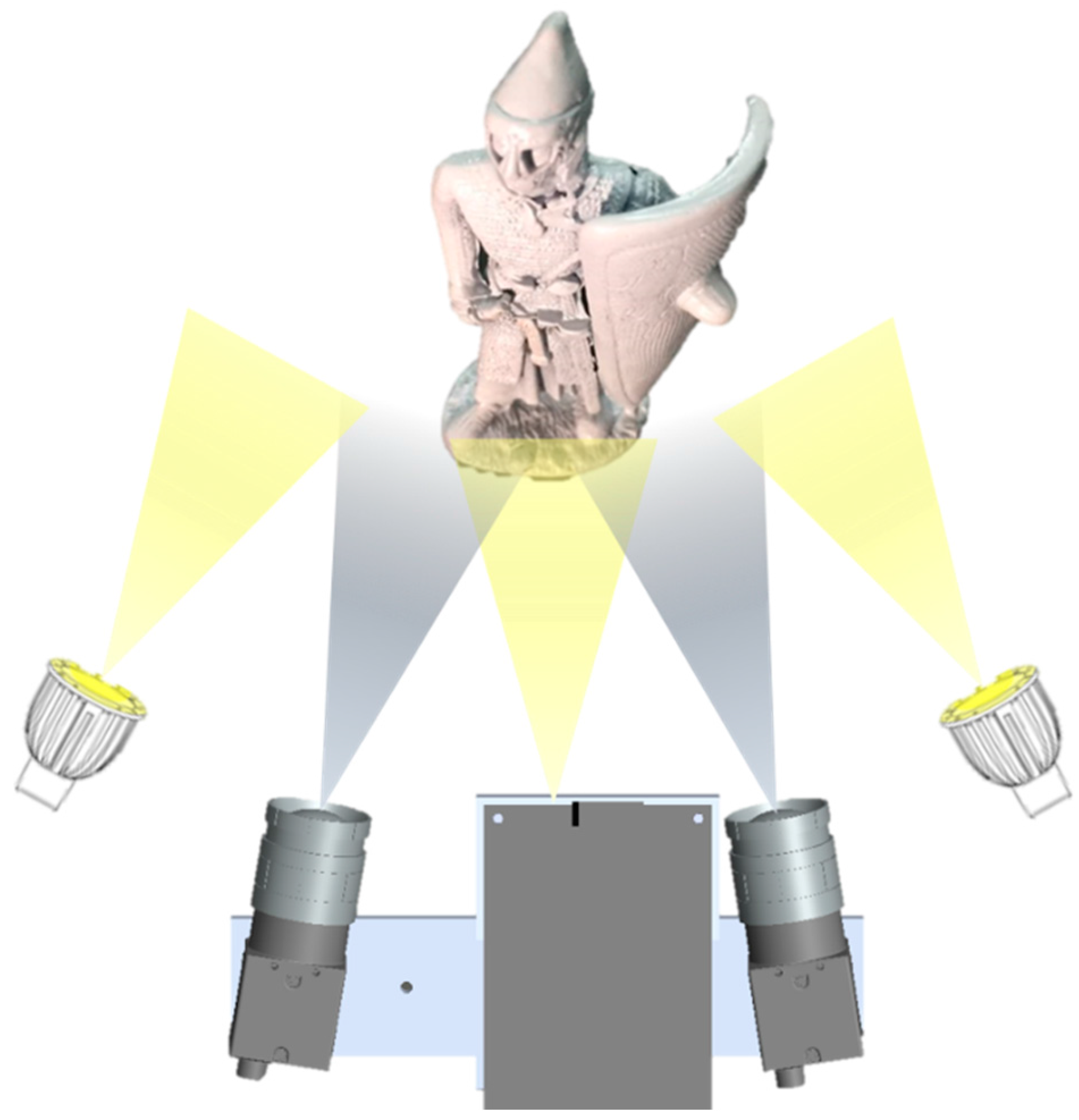

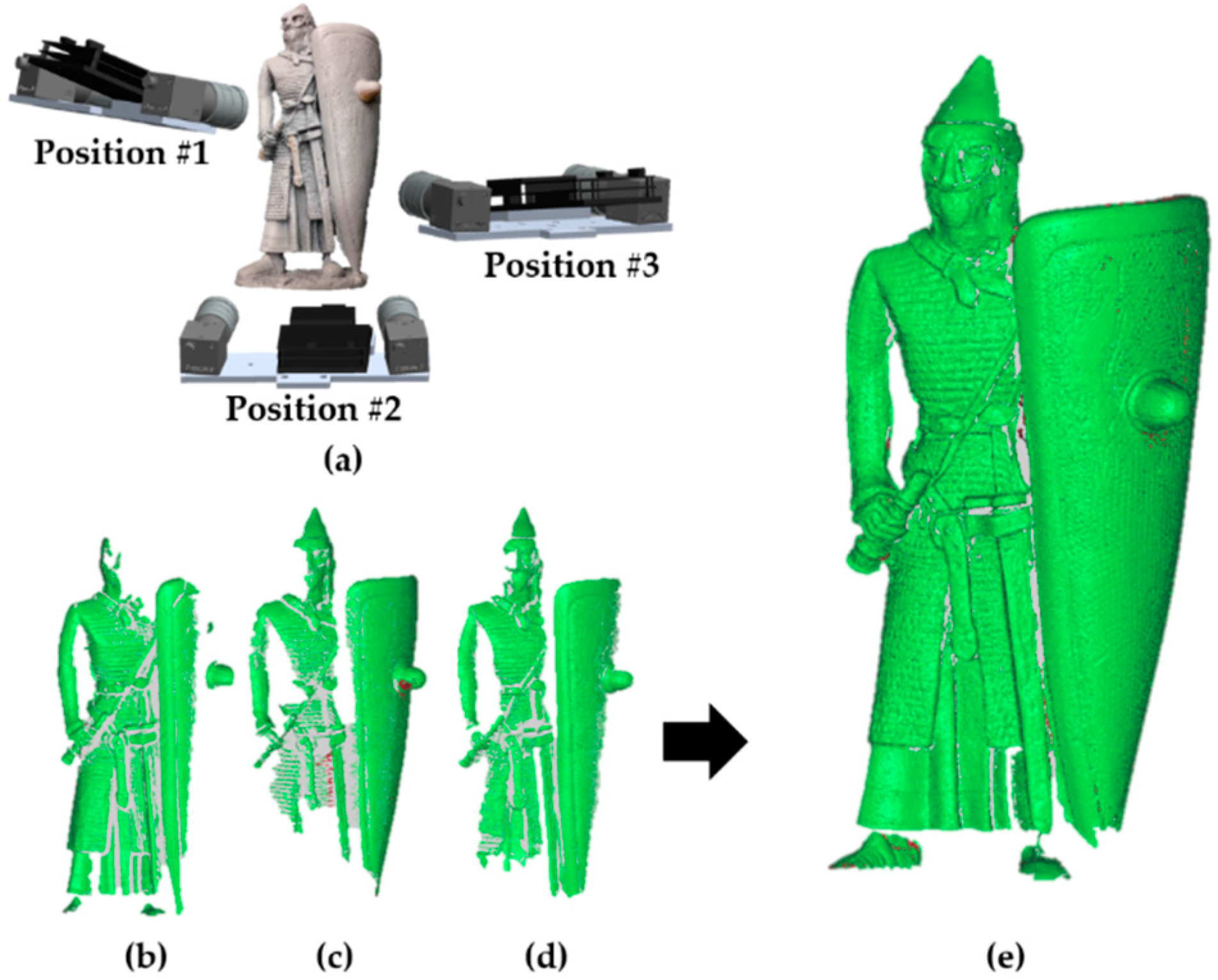

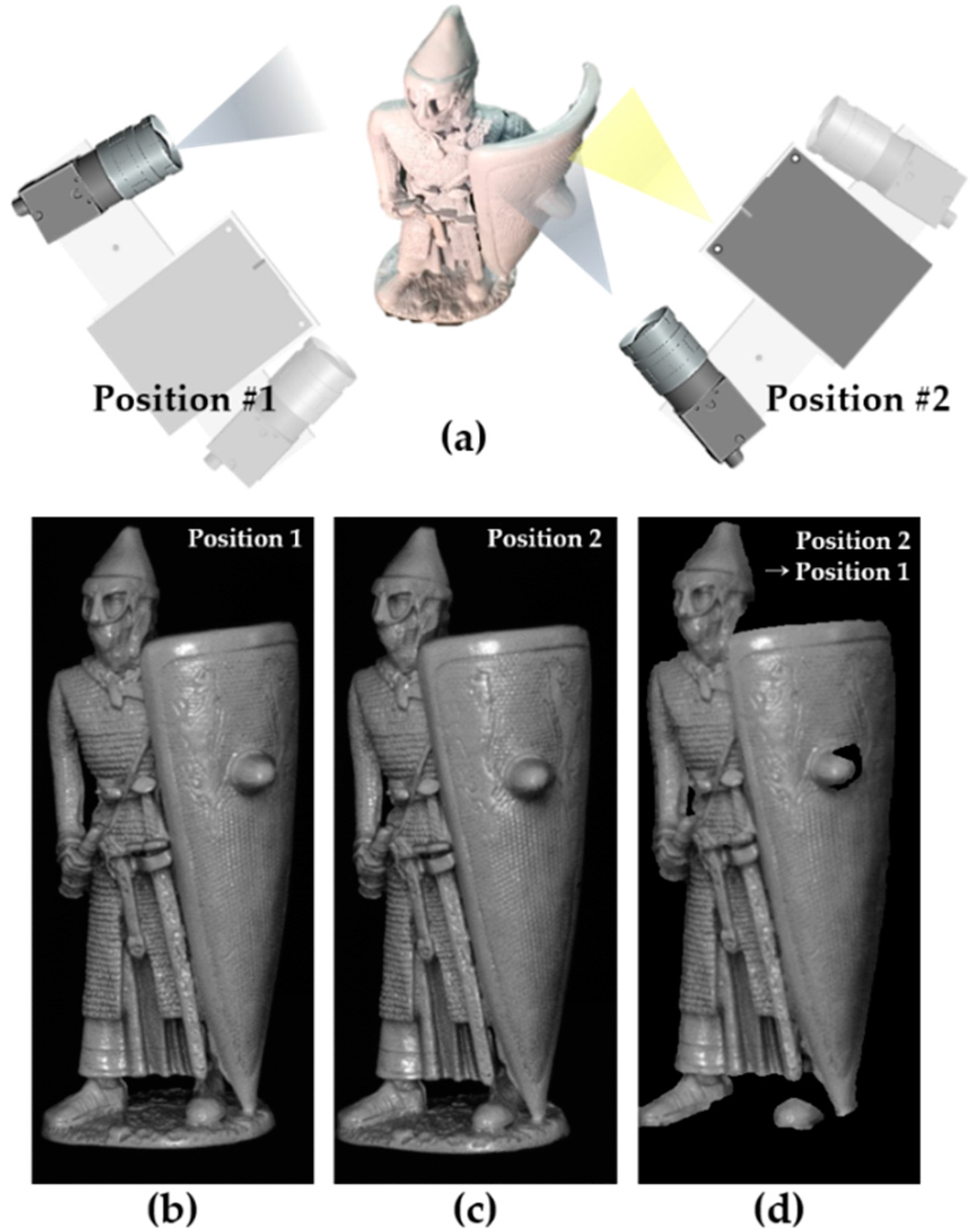

3.1. Active Stereo Vision Measurement System Analysis

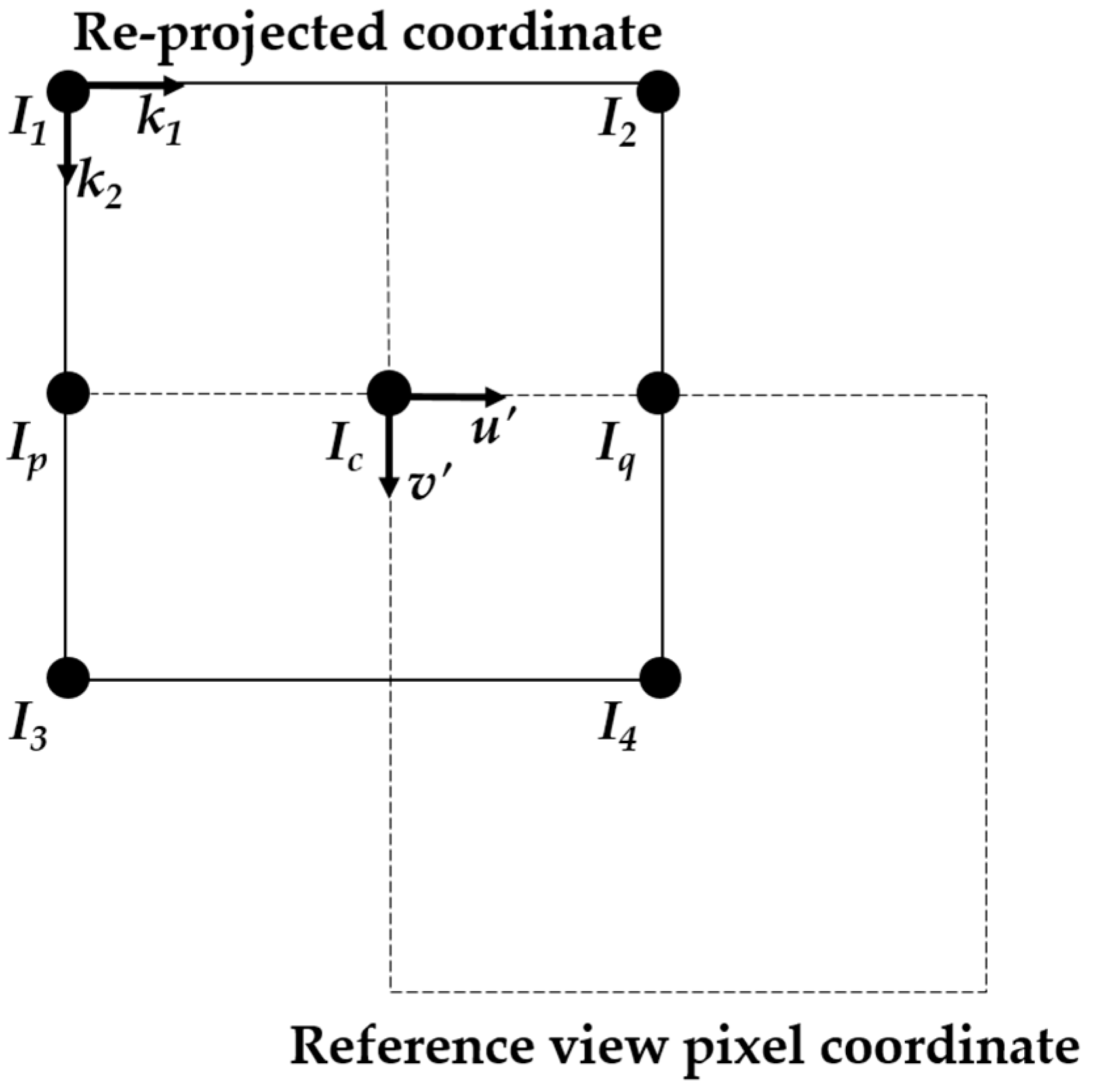

3.2. Re-Projection Method

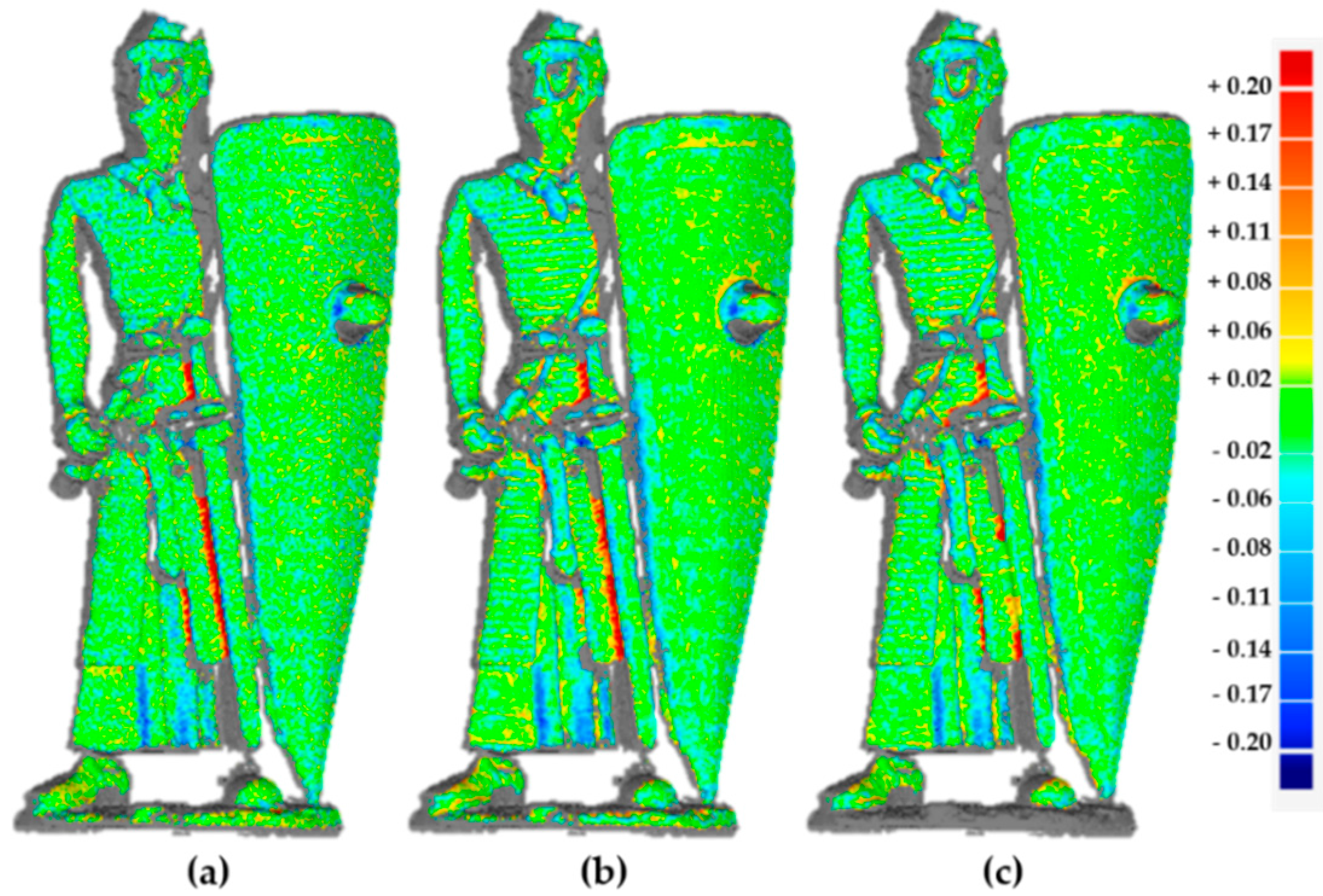

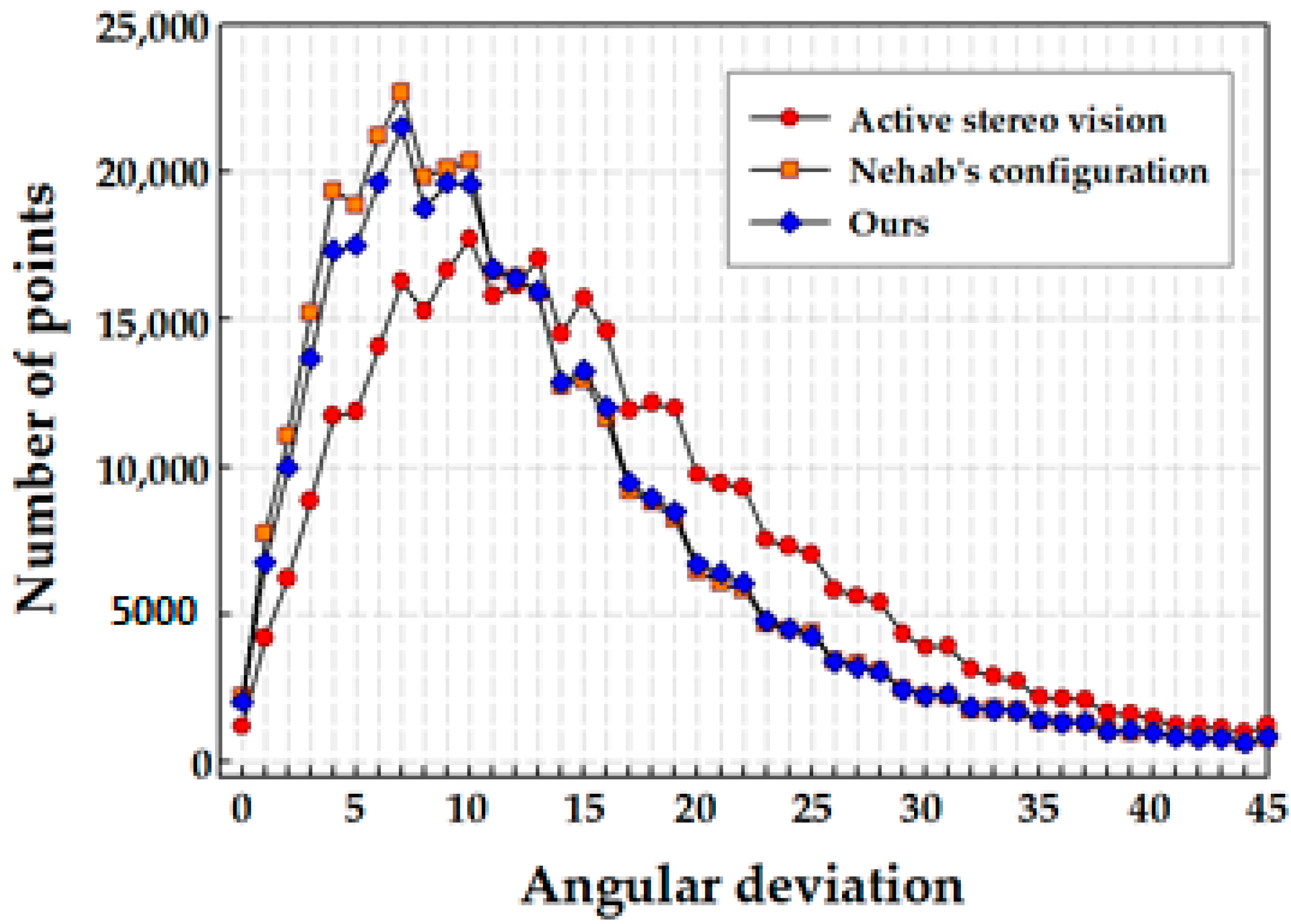

4. Experimental Results and Evaluation

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Chen, F.; Brown, G.M.; Song, M. Overview of three-dimensional shape measurement using optical methods. Opt. Eng. 2000, 39, 10–22.

- Bernardini, F.; Rushmeier, H.; Ioana, M. Building a digital model of Michelangelo’s Florentine Pietà. IEEE Comput. Graph. Appl. 2002, 22, 59–67.

- Seno, T.; Ohtake, Y.; Kikuchi, Y.; Saito, N.; Suzuki, H.; Nagai, Y. 3D scanning based mold correction for planar and cylindrical parts in aluminum die casting. J. Comput. Des. Eng. 2015, 2, 96–104.

- Betta, G.; Capriglione, D.; Corvino, M.; Lavatelli, A.; Liguori, C.; Sommella, P.; Zappa, E. Metrological characterization of 3D biometric face recognition systems in actual operating conditions. ACTA IMEKO 2017, 6, 33–42.

- Fu, X.; Peng, C.; Li, Z.; Liu, S.; Tan, M.; Song, J. The application of multi-baseline digital close-range photogrammetry in three-dimensional imaging and measurement of dental casts. PLoS ONE 2017, 12.

- He, L.; Yang, J.; Kong, B.; Wang, C. An automatic measurement method for absolute depth of objects in two monocular images based on sift feature. Appl. Sci. 2017, 7, 517.

- Li, Y.; Li, Z. A multi-view stereo algorithm based on homogeneous direct spatial expansion with improved reconstruction accuracy and completeness. Appl. Sci. 2017, 7, 446.

- Besl, P.J. Active optical range imaging sensors. Mach. Vis. Appl. 1989, 1, 127–152.

- Valkenburg, R.J.; McIvor, A.M. Accurate 3D measurement using a structured light system. Image Vis. Comput. 1998, 16, 99–110.

- Geng, J. Structured-light 3D surface imaging: A tutorial. Adv. Opt. Photonics 2011, 3, 128–160.

- Woodham, R.J. Photometric method for determining surface orientation from multiple images. Opt. Eng. 1980, 19, 139–144.

- Hernandez, C.; Vogiatzis, G.; Cipolla, R. Multiview photometric stereo. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 548–554.

- Basri, R.; Jacobs, D.; Kemelmacher, I. Photometric stereo with general, unknown lighting. Int. J. Comput. Vis. 2007, 72, 239–257.

- Nehab, D.; Rusinkiewicz, S.; Davis, J.; Ramamoorthi, R. Efficiently combining positions and normals for precise 3D geometry. ACM Trans. Graph. (TOG) 2005, 24, 536–543.

- Malzbender, T.; Wilburn, B.; Gelb, D.; Ambrisco, B. Surface enhancement using real-time photometric stereo and reflectance transformation. In Proceedings of the 17th Eurographics Conference on Rendering Techniques, Nicosia, Cyprus, 26–28 June 2006; pp. 245–250.

- Herbort, S.; Gerken, B.; Schugk, D.; Wöhler, C. 3D range scan enhancement using image-based methods. ISPRS J. Photogramm. Remote Sens. 2013, 84, 69–84.

- Park, J.; Sinha, S.N.; Matsushita, Y.; Tai, Y.W.; Kweon, I.S. Multiview photometric stereo using planar mesh parameterization. Proc. IEEE Int. Conf. Comput. Vis. 2013, 39, 1161–1168.

- Im, S.; Lee, Y.; Kim, J.; Chang, M. A solution for camera occlusion using a repaired pattern from a projector. Int. J. Precis. Eng. Manuf. 2016, 17, 1443–1450.

- Geomagic Control X. Available online: http://www.geomagic.com/en/products/control/overview (accessed on 25 August 2017).

| Error (mm) | Active Stereo Vision | Nehab’s Configuration | Ours |
|---|---|---|---|
| RMS (root mean square) estimate | 0.0490 | 0.0450 | 0.0454 |
| Standard deviation | 0.0489 | 0.0450 | 0.0454 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jung, K.; Kim, S.; Im, S.; Choi, T.; Chang, M. A Photometric Stereo Using Re-Projected Images for Active Stereo Vision System. Appl. Sci. 2017, 7, 1058. https://doi.org/10.3390/app7101058
