Technology-Facilitated Diagnosis and Treatment of Individuals with Autism Spectrum Disorder: An Engineering Perspective

Abstract

1. Introduction

Research Contribution

2. Inclusion of Literature

3. Presentation to the Participants

3.1. Computers, Game Consoles and Mobile Devices

- Strong customizability. The game must be customizable to fit the needs and preferences of each child with autism. Unlike many other conditions, autism presents very differently from child to child: children with autism may have drastically different strengths and skill deficits.

- Increasing levels of complexity of game tasks. As a child acquires more skills, progressively more challenging tasks should be made available. This is the case for virtually all serious games. For example, Chua et al. [14] reported the development of an iPad-based game for children with ASD to learn emotion recognition. At the top level, the game is organized into three worlds, with six difficulty levels within each world. Each level contains one scenario depicted by a video of a human actor. At the end of the video, a headshot of the actor with a facial expression appropriate for the scenario is shown, and the participant is asked to identify the emotion expressed by the headshot.

- Clear and easy-to-understand task goals. Each task should have a clear goal that the participant can easily understand.

- Multiple means of communicating game instructions, such as text, voice, and visual cues. Children with low-functioning ASD, in particular, may need visual cues.

- Positive reinforcement with rewards. A game score alone might not be enough to motivate children with ASD. Hence, other forms of reward, such as video or audio effects, should be provided to encourage and motivate participants. In [13], a smiley face is shown at the completion of each game regardless of the score. This is referred to as reward-based intervention.

- Repeatability and predictability of game play. Unpredictability may cause anxiety in children with ASD, and repeatability is needed for participants to learn.

- Smooth transitions. The game must make it easy to repeat a level and to move on to a higher level without noticeable delay, so that children with ASD are not discouraged.

- Minimalistic graphics and sound/music. Every graphical and sound element should serve the game goal, because children with ASD may be subject to sensory overload. Even the choice of colors may play an important role in improving usability for children with ASD [14].

- Dynamic stimuli. Prolonged static scenes should be avoided because they may trigger motor rigidity.
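The progression, reward, and repeatability guidelines above can be made concrete with a small state machine. The sketch below is a minimal illustration under stated assumptions, not the implementation used in [14] or [13]: it borrows only the three-world, six-level hierarchy described for the game in [14], while the mastery rule (two consecutive correct answers to advance), the class name `Progress`, and the `"smiley"` feedback token are hypothetical choices made for illustration.

```python
from dataclasses import dataclass

# World/level counts follow the hierarchy described for the game in [14].
NUM_WORLDS = 3
LEVELS_PER_WORLD = 6
# Hypothetical mastery rule: consecutive correct answers needed to advance.
MASTERY_STREAK = 2


@dataclass
class Progress:
    world: int = 0        # 0-based index of the current world
    level: int = 0        # 0-based difficulty level within the world
    streak: int = 0       # consecutive correct answers at the current level
    finished: bool = False

    def _advance(self) -> None:
        """Move to the next level, rolling over into the next world."""
        self.streak = 0
        if self.level + 1 < LEVELS_PER_WORLD:
            self.level += 1
        elif self.world + 1 < NUM_WORLDS:
            self.world += 1
            self.level = 0
        else:
            self.finished = True

    def record_answer(self, answer: str, target: str) -> str:
        """Score one trial and return the (hypothetical) feedback token.

        A reward is returned after every trial regardless of correctness,
        echoing the reward-based approach described in [13]. A wrong answer
        resets the streak and keeps the child at the same level, so play
        stays repeatable and predictable.
        """
        if self.finished:
            return "done"
        if answer == target:
            self.streak += 1
            if self.streak >= MASTERY_STREAK:
                self._advance()
        else:
            self.streak = 0
        return "smiley"


if __name__ == "__main__":
    p = Progress()
    p.record_answer("happy", "happy")
    p.record_answer("happy", "happy")
    print(p.world, p.level)
```

Running the usage example prints `0 1`: two correct answers satisfy the assumed mastery rule and move the child from the first to the second level of the first world. Keeping a child at the same level after a wrong answer, rather than demoting them, is one way to honor the predictability guideline; in a real system the mastery rule would presumably be tuned per child, in line with the customizability guideline.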

3.2. Virtual Reality Systems/Devices

3.3. Social Robots

4. Input/Reactions from Participants

4.1. Keyboard, Mouse, Touch Screen

4.2. Facial Motion/Expression Tracking

4.3. Eye Gaze Tracking

4.4. Human Motion Tracking via Depth Sensors

4.5. Speech Recognition

4.6. Physiological Data Collection

4.7. Video/Voice Recording

5. Program Customizability and Adaptability

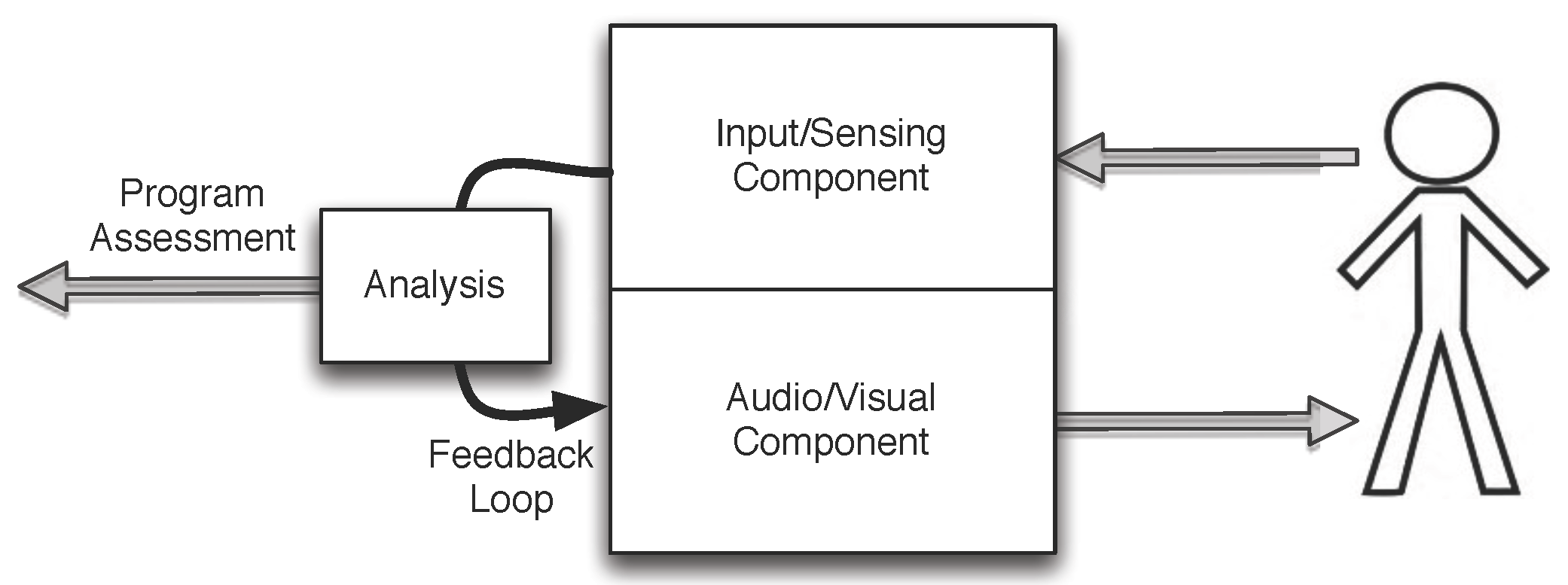

5.1. Automatic Adaptation of Programs

5.2. Manual Control

6. Program Evaluation

6.1. Behavioral Pattern Assessment

6.2. Evaluation of Treatment Effectiveness

6.3. Usability Assessment

7. Discussion

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ASD | Autism Spectrum Disorder |
| DOF | Degrees of Freedom |
| ECG | Electrocardiography |
| EDA | Electrodermal Activity |
| EEG | Electroencephalography |
| ES | Engagement-Sensitive System |
| GSR | Galvanic Skin Response |
| ITC-SoPI | Independent Television Commission-Sense of Presence Inventory |
| PPG | Pulse Plethysmogram |
| PS | Performance-Sensitive System |
| RSP | Respiration |
| SKT | Skin Temperature |

References

- Wieckowski, A.T.; White, S.W. Application of technology to social communication impairment in childhood and adolescence. Neurosci. Biobehav. Rev. 2017.

- Aresti-Bartolome, N.; Garcia-Zapirain, B. Technologies as support tools for persons with autistic spectrum disorder: A systematic review. Int. J. Environ. Res. Public Health 2014, 11, 7767–7802.

- Scassellati, B.; Admoni, H.; Matarić, M. Robots for use in autism research. Ann. Rev. Biomed. Eng. 2012, 14, 275–294.

- Diehl, J.J.; Schmitt, L.M.; Villano, M.; Crowell, C.R. The clinical use of robots for individuals with autism spectrum disorders: A critical review. Res. Autism Spectr. Disord. 2012, 6, 249–262.

- Cabibihan, J.J.; Javed, H.; Ang, M.; Aljunied, S.M. Why robots? A survey on the roles and benefits of social robots in the therapy of children with autism. Int. J. Soc. Robot. 2013, 5, 593–618.

- Pennisi, P.; Tonacci, A.; Tartarisco, G.; Billeci, L.; Ruta, L.; Gangemi, S.; Pioggia, G. Autism and social robotics: A systematic review. Autism Res. 2015, 9, 165–183.

- Begum, M.; Serna, R.W.; Yanco, H.A. Are robots ready to deliver autism interventions? a comprehensive review. Int. J. Soc. Robot. 2016, 8, 157–181.

- Parsons, S. Authenticity in Virtual Reality for assessment and intervention in autism: A conceptual review. Educ. Res. Rev. 2016, 19, 138–157.

- Zakari, H.M.; Ma, M.; Simmons, D. A review of serious games for children with autism spectrum disorders (ASD). In Proceedings of the International Conference on Serious Games Development and Applications, Berlin, Germany, 9–10 October 2014; Springer: Cham, Switzerland, 2014; pp. 93–106.

- Grossard, C.; Grynspan, O.; Serret, S.; Jouen, A.L.; Bailly, K.; Cohen, D. Serious games to teach social interactions and emotions to individuals with autism spectrum disorders (ASD). Comput. Educ. 2017, 113, 195–211.

- Chuah, M.C.; Coombe, D.; Garman, C.; Guerrero, C.; Spletzer, J. Lehigh instrument for learning interaction (lili): An interactive robot to aid development of social skills for autistic children. In Proceedings of the IEEE 11th International Conference on Mobile Ad Hoc and Sensor Systems, Philadelphia, PA, USA, 28–30 October 2014; pp. 731–736.

- Marwecki, S.; Rädle, R.; Reiterer, H. Encouraging Collaboration in Hybrid Therapy Games for Autistic Children. In CHI ’13 Extended Abstracts on Human Factors in Computing Systems; ACM: New York, NY, USA, 2013; pp. 469–474.

- Jouen, A.L.; Narzisi, A.; Xavier, J.; Tilmont, E.; Bodeau, N.; Bono, V.; Ketem-Premel, N.; Anzalone, S.; Maharatna, K.; Chetouani, M.; et al. GOLIAH (Gaming Open Library for Intervention in Autism at Home): A 6-month single blind matched controlled exploratory study. Child Adolesc. Psychiatry Ment. Health 2017, 11, 17.

- Chua, L.; Goh, J.; Nay, Z.T.; Huang, L.; Cai, Y.; Seah, R. ICT-Enabled Emotional Learning for Special Needs Education. In Simulation and Serious Games for Education; Springer: Cham, Switzerland, 2017; pp. 29–45.

- Zhang, L.; Gabriel-King, M.; Armento, Z.; Baer, M.; Fu, Q.; Zhao, H.; Swanson, A.; Sarkar, M.; Warren, Z.; Sarkar, N. Design of a Mobile Collaborative Virtual Environment for Autism Intervention. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Toronto, ON, Canada, 17–21 July 2016; Springer: Cham, Switzerland, 2016; pp. 265–275.

- Vullamparthi, A.J.; Nelaturu, S.C.B.; Mallaya, D.D.; Chandrasekhar, S. Assistive learning for children with autism using augmented reality. In Proceedings of the 2013 IEEE Fifth International Conference on Technology for Education (T4E), Kharagpur, India, 18–20 December 2013; pp. 43–46.

- Simões, M.; Mouga, S.; Pedrosa, F.; Carvalho, P.; Oliveira, G.; Branco, M.C. Neurohab: a platform for virtual training of daily living skills in autism spectrum disorder. Procedia Technol. 2014, 16, 1417–1423.

- Aresti-Bartolome, N.; Garcia-Zapirain, B. Cognitive rehabilitation system for children with autism spectrum disorder using serious games: A pilot study. Bio-Med. Mater. Eng. 2015, 26, S811–S824.

- Fridenson-Hayo, S.; Berggren, S.; Lassalle, A.; Tal, S.; Pigat, D.; Meir-Goren, N.; O’Reilly, H.; Ben-Zur, S.; Bölte, S.; Baron-Cohen, S. Emotiplay: A serious game for learning about emotions in children with autism: Results of a cross-cultural evaluation. Eur. Child Adolesc. Psychiatry 2017, 26, 979–992.

- Zhao, H.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. A Novel Collaborative Virtual Reality Game for Children with ASD to Foster Social Interaction. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Toronto, ON, Canada, 17–21 July 2016; Springer: Cham, Switzerland, 2016; pp. 276–288.

- Bono, V.; Narzisi, A.; Jouen, A.L.; Tilmont, E.; Hommel, S.; Jamal, W.; Xavier, J.; Billeci, L.; Maharatna, K.; Wald, M.; et al. GOLIAH: A gaming platform for home-based intervention in autism–principles and design. Front. Psychiatry 2016, 7, 70.

- Bartoli, L.; Corradi, C.; Garzotto, F.; Valoriani, M. Exploring motion-based touchless games for autistic children’s learning. In Proceedings of the 12th International Conference on Interaction Design and Children, New York, NY, USA, 24–27 June 2013; pp. 102–111.

- Garzotto, F.; Gelsomini, M.; Oliveto, L.; Valoriani, M. Motion-based touchless interaction for ASD children: A case study. In Proceedings of the 2014 International Working Conference on Advanced Visual Interfaces, Como, Italy, 27–29 May 2014; pp. 117–120.

- Ge, Z.; Fan, L. Social Development for Children with Autism Using Kinect Gesture Games: A Case Study in Suzhou Industrial Park Renai School. In Simulation and Serious Games for Education; Springer: Cham, Switzerland, 2017; pp. 113–123.

- Bartoli, L.; Garzotto, F.; Gelsomini, M.; Oliveto, L.; Valoriani, M. Designing and Evaluating Touchless Playful Interaction for ASD Children. In Proceedings of the 2014 Conference on Interaction Design and Children, Aarhus, Denmark, 17–20 June 2014; ACM: New York, NY, USA, 2014; pp. 17–26.

- Barajas, A.O.; Al Osman, H.; Shirmohammadi, S. A Serious Game for children with Autism Spectrum Disorder as a tool for play therapy. In Proceedings of the 2017 IEEE 5th International Conference on Serious Games and Applications for Health (SeGAH), Perth, WA, Australia, 2–4 April 2017; pp. 1–7.

- Whyte, E.M.; Smyth, J.M.; Scherf, K.S. Designing serious game interventions for individuals with autism. J. Autism Dev. Disord. 2015, 45, 3820–3831.

- Jarrold, W.; Mundy, P.; Gwaltney, M.; Bailenson, J.; Hatt, N.; McIntyre, N.; Kim, K.; Solomon, M.; Novotny, S.; Swain, L. Social attention in a virtual public speaking task in higher functioning children with autism. Autism Res. 2013, 6, 393–410.

- Ip, H.H.; Lai, C.H.Y.; Wong, S.W.; Tsui, J.K.; Li, R.C.; Lau, K.S.Y.; Chan, D.F. Visuospatial attention in children with Autism Spectrum Disorder: A comparison between 2-D and 3-D environments. Cogent Educ. 2017, 4, 1307709.

- Lorenzo, G.; Lledó, A.; Pomares, J.; Roig, R. Design and application of an immersive virtual reality system to enhance emotional skills for children with autism spectrum disorders. Comput. Educ. 2016, 98, 192–205.

- Bozgeyikli, L.; Bozgeyikli, E.; Raij, A.; Alqasemi, R.; Katkoori, S.; Dubey, R. Vocational training with immersive virtual reality for individuals with autism: Towards better design practices. In Proceedings of the 2016 IEEE 2nd Workshop on Everyday Virtual Reality (WEVR), Greenville, SC, USA, 20 March 2016; pp. 21–25.

- Bozgeyikli, E.; Raij, A.; Katkoori, S.; Dubey, R. Locomotion in Virtual Reality for Individuals with Autism Spectrum Disorder. In Proceedings of the 2016 Symposium on Spatial User Interaction, Tokyo, Japan, 15–16 October 2016; pp. 33–42.

- Ip, H.H.; Wong, S.W.; Chan, D.F.; Byrne, J.; Li, C.; Yuan, V.S.; Lau, K.S.; Wong, J.Y. Virtual reality enabled training for social adaptation in inclusive education settings for school-aged children with autism spectrum disorder (ASD). In Proceedings of the International Conference on Blending Learning, Beijing, China, 19–21 July 2016; Springer: Cham, Switzerland, 2016; pp. 94–102.

- Cheng, Y.; Huang, C.L.; Yang, C.S. Using a 3D immersive virtual environment system to enhance social understanding and social skills for children with autism spectrum disorders. Focus Autism Other Dev. Disabil. 2015, 30, 222–236.

- Maskey, M.; Lowry, J.; Rodgers, J.; McConachie, H.; Parr, J.R. Reducing specific phobia/fear in young people with autism spectrum disorders (ASDs) through a virtual reality environment intervention. PLoS ONE 2014, 9, e100374.

- Bozgeyikli, L.; Bozgeyikli, E.; Clevenger, M.; Gong, S.; Raij, A.; Alqasemi, R.; Sundarrao, S.; Dubey, R. VR4VR: Towards vocational rehabilitation of individuals with disabilities in immersive virtual reality environments. In Proceedings of the 2014 2nd Workshop on Virtual and Augmented Assistive Technology (VAAT), Minneapolis, MN, USA, 30 March 2014; pp. 29–34.

- Lahiri, U.; Bekele, E.; Dohrmann, E.; Warren, Z.; Sarkar, N. Design of a virtual reality based adaptive response technology for children with autism. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 55–64.

- Carter, E.J.; Williams, D.L.; Hodgins, J.K.; Lehman, J.F. Are children with autism more responsive to animated characters? A study of interactions with humans and human-controlled avatars. J. Autism Dev. Disord. 2014, 44, 2475–2485.

- Amaral, C.P.; Simões, M.A.; Castelo-Branco, M.S. Neural Signals Evoked by Stimuli of Increasing Social Scene Complexity Are Detectable at the Single-Trial Level and Right Lateralized. PLoS ONE 2015, 10, e0121970.

- Kuriakose, S.; Lahiri, U. Understanding the psycho-physiological implications of interaction with a virtual reality-based system in adolescents with autism: a feasibility study. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 23, 665–675.

- Lahiri, U.; Bekele, E.; Dohrmann, E.; Warren, Z.; Sarkar, N. A physiologically informed virtual reality based social communication system for individuals with autism. J. Autism Dev. Disord. 2015, 45, 919–931.

- Yang, Y.D.; Allen, T.; Abdullahi, S.M.; Pelphrey, K.A.; Volkmar, F.R.; Chapman, S.B. Brain responses to biological motion predict treatment outcome in young adults with autism receiving Virtual Reality Social Cognition Training: Preliminary findings. Behav. Res. Ther. 2017, 93, 55–66.

- Wallace, S.; Parsons, S.; Bailey, A. Self-reported sense of presence and responses to social stimuli by adolescents with ASD in a collaborative virtual reality environment. J. Intellect. Dev. Disabil. 2017, 42, 131–141.

- Forbes, P.A.; Pan, X.; Hamilton, A.F. Reduced mimicry to virtual reality avatars in Autism Spectrum Disorder. J. Autism Dev. Disord. 2016, 46, 3788–3797.

- Didehbani, N.; Allen, T.; Kandalaft, M.; Krawczyk, D.; Chapman, S. Virtual reality social cognition training for children with high functioning autism. Comput. Hum. Behav. 2016, 62, 703–711.

- Ke, F.; Im, T. Virtual-reality-based social interaction training for children with high-functioning autism. J. Educ. Res. 2013, 106, 441–461.

- Wang, M.; Reid, D. Using the virtual reality-cognitive rehabilitation approach to improve contextual processing in children with autism. Sci. World J. 2013, 2013, 716890.

- Newbutt, N.; Sung, C.; Kuo, H.J.; Leahy, M.J.; Lin, C.C.; Tong, B. Brief report: A pilot study of the use of a virtual reality headset in autism populations. J. Autism Dev. Disord. 2016, 46, 3166–3176.

- Miller, H.L.; Bugnariu, N.L. Level of immersion in virtual environments impacts the ability to assess and teach social skills in autism spectrum disorder. Cyberpsychol. Behav. Soc. Netw. 2016, 19, 246–256.

- Wang, X.; Desalvo, N.; Gao, Z.; Zhao, X.; Lerman, D.C.; Gnawali, O.; Shi, W. Eye Contact Conditioning in Autistic Children Using Virtual Reality Technology. In Proceedings of the International Symposium on Pervasive Computing Paradigms for Mental Health, Tokyo, Japan, 8–9 May 2014; Springer: Cham, Switzerland, 2014; pp. 79–89.

- Li, Y.; Elmaghraby, A.S.; El-Baz, A.; Sokhadze, E.M. Using physiological signal analysis to design affective VR games. In Proceedings of the 2015 IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Abu Dhabi, UAE, 7–10 December 2015; pp. 57–62.

- Carter, E.J.; Hyde, J.; Williams, D.L.; Hodgins, J.K. Investigating the influence of avatar facial characteristics on the social behaviors of children with autism. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 140–151.

- Hopkins, I.M.; Gower, M.W.; Perez, T.A.; Smith, D.S.; Amthor, F.R.; Casey Wimsatt, F.; Biasini, F.J. Avatar assistant: improving social skills in students with an ASD through a computer-based intervention. J. Autism Dev. Disord. 2011, 41, 1543–1555.

- Cai, Y.; Chiew, R.; Fan, L.; Kwek, M.K.; Goei, S.L. The Virtual Pink Dolphins Project: An International Effort for Children with ASD in Special Needs Education. In Simulation and Serious Games for Education; Springer: Cham, Switzerland, 2017; pp. 1–11.

- Cai, Y.; Chia, N.K.; Thalmann, D.; Kee, N.K.; Zheng, J.; Thalmann, N.M. Design and development of a virtual dolphinarium for children with autism. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 208–217.

- Feng, Y.; Cai, Y. A Gaze Tracking System for Children with Autism Spectrum Disorders. In Simulation and Serious Games for Education; Springer: Cham, Switzerland, 2017; pp. 137–145.

- Kuriakose, S.; Lahiri, U. Design of a Physiology-Sensitive VR-Based Social Communication Platform for Children With Autism. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1180–1191.

- Halabi, O.; El-Seoud, S.A.; Alja’am, J.M.; Alpona, H.; Al-Hemadi, M.; Al-Hassan, D. Design of Immersive Virtual Reality System to Improve Communication Skills in Individuals with Autism. Int. J. Emerg. Technol. Learn. 2017, 12.

- Kim, K.; Rosenthal, M.Z.; Gwaltney, M.; Jarrold, W.; Hatt, N.; McIntyre, N.; Swain, L.; Solomon, M.; Mundy, P. A virtual joy-stick study of emotional responses and social motivation in children with autism spectrum disorder. J. Autism Dev. Disord. 2015, 45, 3891–3899.

- Bekele, E.; Crittendon, J.; Zheng, Z.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Assessing the utility of a virtual environment for enhancing facial affect recognition in adolescents with autism. J. Autism Dev. Disord. 2014, 44, 1641–1650.

- Serret, S.; Hun, S.; Iakimova, G.; Lozada, J.; Anastassova, M.; Santos, A.; Vesperini, S.; Askenazy, F. Facing the challenge of teaching emotions to individuals with low-and high-functioning autism using a new Serious game: a pilot study. Mol. Autism 2014, 5, 37.

- Blain, S.D.; Peterman, J.S.; Park, S. Subtle cues missed: Impaired perception of emotion from gait in relation to schizotypy and autism spectrum traits. Schizophr. Res. 2017, 183, 157–160.

- Roether, C.L.; Omlor, L.; Christensen, A.; Giese, M.A. Critical features for the perception of emotion from gait. J. Vis. 2009, 9, 15.

- Hsu, C.W.; Teoh, Y.S. Investigating Event Memory in Children with Autism Spectrum Disorder: Effects of a Computer-Mediated Interview. J. Autism Dev. Disord. 2017, 47, 359–372.

- Kandalaft, M.R.; Didehbani, N.; Krawczyk, D.C.; Allen, T.T.; Chapman, S.B. Virtual reality social cognition training for young adults with high-functioning autism. J. Autism Dev. Disord. 2013, 43, 34–44.

- Li, C.; Jia, Q.; Feng, Y. Human-Robot Interaction Design for Robot-Assisted Intervention for Children with Autism Based on ES Theory. In Proceedings of the 2016 8th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 27–28 August 2016; Volume 2, pp. 320–324.

- Good, J.; Parsons, S.; Yuill, N.; Brosnan, M. Virtual reality and robots for autism: moving beyond the screen. J. Assist. Technol. 2016, 10, 211–216.

- De Haas, M.; Aroyo, A.M.; Barakova, E.; Haselager, W.; Smeekens, I. The effect of a semi-autonomous robot on children. In Proceedings of the 2016 IEEE 8th International Conference on Intelligent Systems (IS), Sofia, Bulgaria, 4–6 September 2016; pp. 376–381. [Google Scholar]

- Bernardo, B.; Alves-Oliveira, P.; Santos, M.G.; Melo, F.S.; Paiva, A. An Interactive Tangram Game for Children with Autism. In Proceedings of the International Conference on Intelligent Virtual Agents, Los Angeles, CA, USA, 20–23 September 2016; Springer: Cham, Switzerland, 2016; pp. 500–504. [Google Scholar]

- Hong, T.S.; Mohamaddan, S.; Shazali, S.T.S.; Mohtadzar, N.A.A.; Bakar, R.A. A review on assistive tools for autistic patients. In Proceedings of the 2016 IEEE EMBS Conference on Biomedical Engineering and Sciences (IECBES), Kuala Lumpur, Malaysia, 4–8 December 2016; pp. 51–56. [Google Scholar]

- Mengoni, S.E.; Irvine, K.; Thakur, D.; Barton, G.; Dautenhahn, K.; Guldberg, K.; Robins, B.; Wellsted, D.; Sharma, S. Feasibility study of a randomised controlled trial to investigate the effectiveness of using a humanoid robot to improve the social skills of children with autism spectrum disorder (Kaspar RCT): A study protocol. BMJ Open 2017, 7, e017376. [Google Scholar] [CrossRef] [PubMed]

- Sartorato, F.; Przybylowski, L.; Sarko, D.K. Improving therapeutic outcomes in autism spectrum disorders: Enhancing social communication and sensory processing through the use of interactive robots. J. Psychiatr. Res. 2017, 90, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Wong, H.; Zhong, Z. Assessment of robot training for social cognitive learning. In Proceedings of the 2016 16th International Conference on Control, Automation and Systems (ICCAS), Gyeongju, Korea, 16–19 October 2016; pp. 893–898. [Google Scholar]

- So, W.C.; Wong, M.Y.; Cabibihan, J.J.; Lam, C.Y.; Chan, R.Y.; Qian, H.H. Using robot animation to promote gestural skills in children with autism spectrum disorders. J. Comput. Assist. Learn. 2016, 32, 632–646. [Google Scholar] [CrossRef]

- Bharatharaj, J.; Huang, L.; Al-Jumaily, A.M.; Krageloh, C.; Elara, M.R. Effects of Adapted Model-Rival Method and parrot-inspired robot in improving learning and social interaction among children with autism. In Proceedings of the 2016 International Conference on Robotics and Automation for Humanitarian Applications (RAHA), Kollam, India, 18–20 December 2016; pp. 1–5. [Google Scholar]

- Tennyson, M.F.; Kuester, D.A.; Casteel, J.; Nikolopoulos, C. Accessible robots for improving social skills of individuals with autism. J. Artif. Intell. Soft Comput. Res. 2016, 6, 267–277. [Google Scholar] [CrossRef]

- Suzuki, R.; Lee, J. Robot-play therapy for improving prosocial behaviours in children with Autism Spectrum Disorders. In Proceedings of the 2016 International Symposium on Micro-NanoMechatronics and Human Science (MHS), Nagoya, Japan, 28–30 November 2016; pp. 1–5. [Google Scholar]

- Wainer, J.; Dautenhahn, K.; Robins, B.; Amirabdollahian, F. A pilot study with a novel setup for collaborative play of the humanoid robot KASPAR with children with autism. Int. J. Soc. Robot. 2014, 6, 45–65. [Google Scholar] [CrossRef] [Green Version]

- Wainer, J.; Robins, B.; Amirabdollahian, F.; Dautenhahn, K. Using the humanoid robot KASPAR to autonomously play triadic games and facilitate collaborative play among children with autism. IEEE Trans. Auton. Ment. Dev. 2014, 6, 183–199. [Google Scholar] [CrossRef]

- Vanderborght, B.; Simut, R.; Saldien, J.; Pop, C.; Rusu, A.S.; Pintea, S.; Lefeber, D.; David, D.O. Using the social robot probo as a social story telling agent for children with ASD. Interact. Stud. 2012, 13, 348–372. [Google Scholar] [CrossRef]

- Kajopoulos, J.; Wong, A.H.Y.; Yuen, A.W.C.; Dung, T.A.; Kee, T.Y.; Wykowska, A. Robot-assisted training of joint attention skills in children diagnosed with autism. In Proceedings of the International Conference on Social Robotics, Paris, France, 26–30 October 2015; Springer: Cham, Switzerland, 2015; pp. 296–305. [Google Scholar]

- Bharatharaj, J.; Huang, L.; Al-Jumaily, A.M.; Krageloh, C.; Elara, M.R. Experimental evaluation of parrot-inspired robot and adapted model-rival method for teaching children with autism. In Proceedings of the 2016 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1–6. [Google Scholar]

- Bharatharaj, J.; Huang, L.; Mohan, R.E.; Al-Jumaily, A.; Krägeloh, C. Robot-Assisted Therapy for Learning and Social Interaction of Children with Autism Spectrum Disorder. Robotics 2017, 6, 4. [Google Scholar] [CrossRef]

- Koch, S.A.; Stevens, C.E.; Clesi, C.D.; Lebersfeld, J.B.; Sellers, A.G.; McNew, M.E.; Biasini, F.J.; Amthor, F.R.; Hopkins, M.I. A Feasibility Study Evaluating the Emotionally Expressive Robot SAM. Int. J. Soc. Robot. 2017, 9, 601–613. [Google Scholar] [CrossRef]

- Warren, Z.E.; Zheng, Z.; Swanson, A.R.; Bekele, E.; Zhang, L.; Crittendon, J.A.; Weitlauf, A.F.; Sarkar, N. Can robotic interaction improve joint attention skills? J. Autism Dev. Disord. 2015, 45, 3726. [Google Scholar] [CrossRef] [PubMed]

- Wiese, E.; Müller, H.J.; Wykowska, A. Using a gaze-cueing paradigm to examine social cognitive mechanisms of individuals with autism observing robot and human faces. In Proceedings of the International Conference on Social Robotics, Sydney, Australia, 27–29 October 2014; Springer: Cham, Switzerland, 2014; pp. 370–379. [Google Scholar]

- Hirokawa, M.; Funahashi, A.; Pan, Y.; Itoh, Y.; Suzuki, K. Design of a robotic agent that measures smile and facing behavior of children with Autism Spectrum Disorder. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 843–848. [Google Scholar]

- Yun, S.S.; Choi, J.; Park, S.K. Robotic behavioral intervention to facilitate eye contact and reading emotions of children with autism spectrum disorders. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 694–699. [Google Scholar]

- Yun, S.S.; Park, S.K.; Choi, J. A robotic treatment approach to promote social interaction skills for children with autism spectrum disorders. In Proceedings of the 2014 RO-MAN: The 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 130–134. [Google Scholar]

- Hirose, J.; Hirokawa, M.; Suzuki, K. Robotic gaming companion to facilitate social interaction among children. In Proceedings of the 2014 RO-MAN: The 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 63–68. [Google Scholar]

- Mavadati, S.M.; Feng, H.; Gutierrez, A.; Mahoor, M.H. Comparing the gaze responses of children with autism and typically developed individuals in human-robot interaction. In Proceedings of the 2014 14th IEEE-RAS International Conference on Humanoid Robots (Humanoids), Madrid, Spain, 18–20 November 2014; pp. 1128–1133. [Google Scholar]

- Anzalone, S.M.; Boucenna, S.; Cohen, D.; Chetouani, M. Autism assessment through a small humanoid robot. In Proceedings of the HRI: A Bridge between Robotics and Neuroscience, Workshop of the 9th ACM/IEEE International Conference on Human-Robot Interaction, Bielefeld, Germany, 3–6 March 2014; pp. 1–2. [Google Scholar]

- Anzalone, S.M.; Tilmont, E.; Boucenna, S.; Xavier, J.; Jouen, A.L.; Bodeau, N.; Maharatna, K.; Chetouani, M.; Cohen, D.; Group, M.S.; et al. How children with autism spectrum disorder behave and explore the 4-dimensional (spatial 3D+ time) environment during a joint attention induction task with a robot. Res. Autism Spectr. Disord. 2014, 8, 814–826. [Google Scholar] [CrossRef]

- Conti, D.; Di Nuovo, S.; Buono, S.; Trubia, G.; Di Nuovo, A. Use of robotics to stimulate imitation in children with Autism Spectrum Disorder: A pilot study in a clinical setting. In Proceedings of the 2015 24th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Kobe, Japan, 31 August–4 September 2015; pp. 1–6. [Google Scholar]

- Srinivasan, S.M.; Park, I.K.; Neelly, L.B.; Bhat, A.N. A comparison of the effects of rhythm and robotic interventions on repetitive behaviors and affective states of children with Autism Spectrum Disorder (ASD). Res. Autism Spectr. Disord. 2015, 18, 51–63. [Google Scholar] [CrossRef] [PubMed]

- Srinivasan, S.M.; Eigsti, I.M.; Gifford, T.; Bhat, A.N. The effects of embodied rhythm and robotic interventions on the spontaneous and responsive verbal communication skills of children with Autism Spectrum Disorder (ASD): A further outcome of a pilot randomized controlled trial. Res. Autism Spectr. Disord. 2016, 27, 73–87.

- Srinivasan, S.M.; Kaur, M.; Park, I.K.; Gifford, T.D.; Marsh, K.L.; Bhat, A.N. The effects of rhythm and robotic interventions on the imitation/praxis, interpersonal synchrony, and motor performance of children with autism spectrum disorder (ASD): A pilot randomized controlled trial. Autism Res. Treat. 2015, 2015, 736516.

- Mavadati, S.M.; Feng, H.; Salvador, M.; Silver, S.; Gutierrez, A.; Mahoor, M.H. Robot-based therapeutic protocol for training children with Autism. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 855–860.

- Chevalier, P.; Martin, J.C.; Isableu, B.; Bazile, C.; Iacob, D.O.; Tapus, A. Joint Attention using Human-Robot Interaction: Impact of sensory preferences of children with autism. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 849–854.

- Boucenna, S.; Anzalone, S.; Tilmont, E.; Cohen, D.; Chetouani, M. Learning of social signatures through imitation game between a robot and a human partner. IEEE Trans. Auton. Ment. Dev. 2014, 6, 213–225.

- Ranatunga, I.; Torres, N.A.; Patterson, R.; Bugnariu, N.; Stevenson, M.; Popa, D.O. RoDiCA: a human-robot interaction system for treatment of childhood autism spectrum disorders. In Proceedings of the 5th International Conference on PErvasive Technologies Related to Assistive Environments, Heraklion, Greece, 6–8 June 2012; p. 50.

- Costa, S.C.; Soares, F.O.; Pereira, A.P.; Moreira, F. Constraints in the design of activities focusing on emotion recognition for children with ASD using robotic tools. In Proceedings of the 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics, Rome, Italy, 24–27 June 2012; pp. 1884–1889.

- Salvador, M.J.; Silver, S.; Mahoor, M.H. An emotion recognition comparative study of autistic and typically-developing children using the Zeno robot. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 6128–6133.

- Chevalier, P.; Martin, J.C.; Isableu, B.; Bazile, C.; Tapus, A. Impact of sensory preferences of individuals with autism on the recognition of emotions expressed by two robots, an avatar, and a human. Auton. Robot. 2017, 41, 613–635.

- Khosla, R.; Nguyen, K.; Chu, M.T. Socially Assistive Robot Enabled Home-Based Care for Supporting People with Autism. In Proceedings of the Pacific Asia Conference on Information Systems, Singapore, 5–9 July 2015; p. 12.

- Dehkordi, P.S.; Moradi, H.; Mahmoudi, M.; Pouretemad, H.R. The design, development, and deployment of RoboParrot for screening autistic children. Int. J. Soc. Robot. 2015, 7, 513–522.

- Mazzei, D.; Greco, A.; Lazzeri, N.; Zaraki, A.; Lanata, A.; Igliozzi, R.; Mancini, A.; Stoppa, F.; Scilingo, E.P.; Muratori, F.; et al. Robotic social therapy on children with autism: preliminary evaluation through multi-parametric analysis. In Proceedings of the International Conference on Social Computing, Amsterdam, The Netherlands, 3–5 September 2012; pp. 766–771.

- Cao, H.L.; Esteban, P.G.; De Beir, A.; Simut, R.; Van De Perre, G.; Vanderborght, B. A platform-independent robot control architecture for multiple therapeutic scenarios. arXiv, 2016; arXiv:1607.04971.

- Cootes, T.F.; Edwards, G.J.; Taylor, C.J. Active appearance models. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 681–685.

- Cazzato, D.; Mazzeo, P.L.; Spagnolo, P.; Distante, C. Automatic joint attention detection during interaction with a humanoid robot. In Proceedings of the International Conference on Social Robotics, Paris, France, 26–30 October 2015; Springer: Cham, Switzerland, 2015; pp. 124–134.

- Nunez, E.; Matsuda, S.; Hirokawa, M.; Suzuki, K. Humanoid robot assisted training for facial expressions recognition based on affective feedback. In Proceedings of the International Conference on Social Robotics, Paris, France, 26–30 October 2015; Springer: Cham, Switzerland, 2015; pp. 492–501.

- Leo, M.; Del Coco, M.; Carcagni, P.; Distante, C.; Bernava, M.; Pioggia, G.; Palestra, G. Automatic emotion recognition in robot-children interaction for ASD treatment. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 7–13 December 2015; pp. 145–153.

- Ponce, P.; Molina, A.; Grammatikou, D. Design based on fuzzy signal detection theory for a semi-autonomous assisting robot in children autism therapy. Comput. Hum. Behav. 2016, 55, 28–42.

- Yun, S.S.; Kim, H.; Choi, J.; Park, S.K. A robot-assisted behavioral intervention system for children with autism spectrum disorders. Robot. Auton. Syst. 2016, 76, 58–67.

- Simut, R.; Van de Perre, G.; Costescu, C.; Saldien, J.; Vanderfaeillie, J.; David, D.; Lefeber, D.; Vanderborght, B. Probogotchi: A novel edutainment device as a bridge for interaction between a child with ASD and the typically developed sibling. J. Evid.-Based Psychother. 2016, 16, 91.

- Boccanfuso, L.; Scarborough, S.; Abramson, R.K.; Hall, A.V.; Wright, H.H.; O’Kane, J.M. A low-cost socially assistive robot and robot-assisted intervention for children with autism spectrum disorder: Field trials and lessons learned. Auton. Robot. 2017, 41, 637–655.

- Liu, R.; Salisbury, J.P.; Vahabzadeh, A.; Sahin, N.T. Feasibility of an autism-focused augmented reality smartglasses system for social communication and behavioral coaching. Front. Pediatr. 2017, 5, 145.

- Barakova, E.I.; Bajracharya, P.; Willemsen, M.; Lourens, T.; Huskens, B. Long-term LEGO therapy with humanoid robot for children with ASD. Expert Syst. 2015, 32, 698–709.

- Boccanfuso, L.; Barney, E.; Foster, C.; Ahn, Y.A.; Chawarska, K.; Scassellati, B.; Shic, F. Emotional robot to examine different play patterns and affective responses of children with and without ASD. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016; pp. 19–26.

- Rudovic, O.; Lee, J.; Mascarell-Maricic, L.; Schuller, B.W.; Picard, R.W. Measuring Engagement in Robot-Assisted Autism Therapy: A Cross-Cultural Study. Front. Robot. AI 2017, 4, 36.

- KB, P.R.; Lahiri, U. Design of Eyegaze-sensitive Virtual Reality Based Social Communication Platform for Individuals with Autism. In Proceedings of the 2016 7th International Conference on Intelligent Systems, Modelling and Simulation (ISMS), Bangkok, Thailand, 25–27 January 2016; pp. 301–306.

- Noris, B.; Nadel, J.; Barker, M.; Hadjikhani, N.; Billard, A. Investigating gaze of children with ASD in naturalistic settings. PLoS ONE 2012, 7, e44144.

- Courgeon, M.; Rautureau, G.; Martin, J.C.; Grynszpan, O. Joint attention simulation using eye-tracking and virtual humans. IEEE Trans. Affect. Comput. 2014, 5, 238–250.

- Esubalew, T.; Lahiri, U.; Swanson, A.R.; Crittendon, J.A.; Warren, Z.E.; Sarkar, N. A step towards developing adaptive robot-mediated intervention architecture (ARIA) for children with autism. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 21, 289–299.

- Bekele, E.; Crittendon, J.A.; Swanson, A.; Sarkar, N.; Warren, Z.E. Pilot clinical application of an adaptive robotic system for young children with autism. Autism 2014, 18, 598–608.

- Caruana, N.; McArthur, G.; Woolgar, A.; Brock, J. Detecting communicative intent in a computerised test of joint attention. PeerJ 2017, 5, e2899.

- Grynszpan, O.; Nadel, J.; Martin, J.C.; Simonin, J.; Bailleul, P.; Wang, Y.; Gepner, D.; Le Barillier, F.; Constant, J. Self-monitoring of gaze in high functioning autism. J. Autism Dev. Disord. 2012, 42, 1642–1650.

- Zheng, Z.; Fu, Q.; Zhao, H.; Swanson, A.R.; Weitlauf, A.S.; Warren, Z.E.; Sarkar, N. Design of an Autonomous Social Orienting Training System (ASOTS) for Young Children With Autism. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 668–678.

- Zheng, Z.; Nie, G.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Longitudinal Impact of Autonomous Robot-Mediated Joint Attention Intervention for Young Children with ASD. In Proceedings of the International Conference on Social Robotics, Kansas City, MO, USA, 1–3 November 2016; Springer: Cham, Switzerland, 2016; pp. 581–590.

- Zhang, L.; Wade, J.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Cognitive state measurement from eye gaze analysis in an intelligent virtual reality driving system for autism intervention. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 532–538.

- Gyori, M.; Borsos, Z.; Stefanik, K.; Csákvári, J. Data Quality as a Bottleneck in Developing a Social-Serious-Game-Based Multi-modal System for Early Screening for High Functioning Cases of Autism Spectrum Condition. In Proceedings of the International Conference on Computers Helping People with Special Needs, Linz, Austria, 13–15 July 2016; Springer: Cham, Switzerland, 2016; pp. 358–366.

- Lun, R.; Zhao, W. A Survey of Applications and Human Motion Recognition with Microsoft Kinect. Int. J. Pattern Recognit. Artif. Intell. 2015, 29, 1555008.

- Zhao, W. A concise tutorial on human motion tracking and recognition with Microsoft Kinect. Sci. China Inf. Sci. 2016, 59, 93101.

- Christinaki, E.; Vidakis, N.; Triantafyllidis, G. A novel educational game for teaching emotion identification skills to preschoolers with autism diagnosis. Comput. Sci. Inf. Syst. 2014, 11, 723–743.

- Zheng, Z.; Das, S.; Young, E.M.; Swanson, A.; Warren, Z.; Sarkar, N. Autonomous robot-mediated imitation learning for children with autism. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2707–2712.

- Ge, B.; Park, H.W.; Howard, A.M. Identifying Engagement from Joint Kinematics Data for Robot Therapy Prompt Interventions for Children with Autism Spectrum Disorder. In Proceedings of the International Conference on Social Robotics, Kansas City, MO, USA, 1–3 November 2016; Springer: Cham, Switzerland, 2016; pp. 531–540.

- Yun, S.S.; Choi, J.; Park, S.K.; Bong, G.Y.; Yoo, H. Social skills training for children with autism spectrum disorder using a robotic behavioral intervention system. Autism Res. 2017, 10, 1306–1323.

- Uzuegbunam, N.; Wong, W.H.; Cheung, S.C.S.; Ruble, L. MEBook: Kinect-based self-modeling intervention for children with autism. In Proceedings of the 2015 IEEE International Conference on Multimedia and Expo (ICME), Turin, Italy, 29 June–3 July 2015; pp. 1–6.

- Kuriakose, S.; Kunche, S.; Narendranath, B.; Jain, P.; Sonker, S.; Lahiri, U. A step towards virtual reality based social communication for children with Autism. In Proceedings of the 2013 International Conference on Control, Automation, Robotics and Embedded Systems (CARE), Jabalpur, India, 16–18 December 2013; pp. 1–6.

- Di Palma, S.; Tonacci, A.; Narzisi, A.; Domenici, C.; Pioggia, G.; Muratori, F.; Billeci, L.; The MICHELANGELO study group. Monitoring of autonomic response to sociocognitive tasks during treatment in children with autism spectrum disorders by wearable technologies: A feasibility study. Comput. Biol. Med. 2017, 85, 143–152.

- Zhang, L.; Wade, J.; Bian, D.; Fan, J.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Cognitive load measurement in a virtual reality-based driving system for autism intervention. IEEE Trans. Affect. Comput. 2017, 8, 176–189.

- White, S.W.; Richey, J.A.; Gracanin, D.; Coffman, M.; Elias, R.; LaConte, S.; Ollendick, T.H. Psychosocial and Computer-Assisted Intervention for College Students with Autism Spectrum Disorder: Preliminary Support for Feasibility. Educ. Train. Autism Dev. Disabil. 2016, 51, 307.

- Bekele, E.; Wade, J.; Bian, D.; Fan, J.; Swanson, A.; Warren, Z.; Sarkar, N. Multimodal adaptive social interaction in virtual environment (MASI-VR) for children with Autism spectrum disorders (ASD). In Proceedings of the 2016 IEEE Virtual Reality (VR), Greenville, SC, USA, 19–23 March 2016; pp. 121–130.

- Fan, J.; Wade, J.W.; Bian, D.; Key, A.P.; Warren, Z.E.; Mion, L.C.; Sarkar, N. A Step towards EEG-based brain computer interface for autism intervention. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3767–3770.

- Özcan, B.; Caligiore, D.; Sperati, V.; Moretta, T.; Baldassarre, G. Transitional wearable companions: A novel concept of soft interactive social robots to improve social skills in children with autism spectrum disorder. Int. J. Soc. Robot. 2016, 8, 471–481.

- Bian, D.; Wade, J.; Warren, Z.; Sarkar, N. Online Engagement Detection and Task Adaptation in a Virtual Reality Based Driving Simulator for Autism Intervention. In Proceedings of the International Conference on Universal Access in Human-Computer Interaction, Toronto, ON, Canada, 17–22 July 2016; Springer: Cham, Switzerland, 2016; pp. 538–547.

- Bakeman, R. Behavioral observation and coding. In Handbook of Research Methods in Social and Personality Psychology; Cambridge University Press: New York, NY, USA, 2000; pp. 138–159.

- Weick, K.E. Systematic observational methods. In The Handbook of Social Psychology; John Wiley and Sons: Hoboken, NJ, USA, 1968; Volume 2, pp. 357–451.

- Khosla, R.; Nguyen, K.; Chu, M.T. Service personalisation of assistive robot for autism care. In Proceedings of the IECON 2015-41st Annual Conference of the IEEE Industrial Electronics Society, Yokohama, Japan, 9–12 November 2015; pp. 002088–002093.

- Mourning, R.; Tang, Y. Virtual reality social training for adolescents with high-functioning autism. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 004848–004853.

- Bekele, E.; Zheng, Z.; Swanson, A.; Crittendon, J.; Warren, Z.; Sarkar, N. Understanding how adolescents with autism respond to facial expressions in virtual reality environments. IEEE Trans. Vis. Comput. Graph. 2013, 19, 711–720.

- Kaboski, J.R.; Diehl, J.J.; Beriont, J.; Crowell, C.R.; Villano, M.; Wier, K.; Tang, K. Brief report: A pilot summer robotics camp to reduce social anxiety and improve social/vocational skills in adolescents with ASD. J. Autism Dev. Disord. 2015, 45, 3862–3869.

- Newbutt, N.; Sung, C.; Kuo, H.J.; Leahy, M.J. The potential of virtual reality technologies to support people with an autism condition: A case study of acceptance, presence and negative effects. Ann. Rev. Cyber Ther. Telemed. (ARCTT) 2016, 14, 149–154.

- Ekman, P.; Friesen, W.V. Unmasking the Face: A Guide to Recognizing Emotions from Facial Clues; ISHK: Los Altos, CA, USA, 2003.

- Benton, A.L. The neuropsychology of facial recognition. Am. Psychol. 1980, 35, 176.

- Gresham, F.M.; Elliott, S.N. Social Skills Rating System: Manual; American Guidance Service: Circle Pines, MN, USA, 1990.

- Suskind, R.; Nguyen, J.; Patterson, S.; Springer, S.; Fanty, M. Guided Personal Companion. U.S. Patent 9,710,613, 18 July 2017.

- Liu, X.; Zhao, W. Buddy: A Virtual Life Coaching System for Children and Adolescents with High Functioning Autism. In Proceedings of the IEEE Cyber Science and Technology Congress, Orlando, FL, USA, 5–9 November 2017.

- Bernardini, S.; Porayska-Pomsta, K.; Smith, T.J. ECHOES: An intelligent serious game for fostering social communication in children with autism. Inf. Sci. 2014, 264, 41–60.

- Esteban, P.G.; Baxter, P.; Belpaeme, T.; Billing, E.; Cai, H.; Cao, H.L.; Coeckelbergh, M.; Costescu, C.; David, D.; De Beir, A.; et al. How to build a supervised autonomous system for robot-enhanced therapy for children with autism spectrum disorder. Paladyn J. Behav. Robot. 2017, 8, 18–38.

- Luo, X.; Deng, J.; Liu, J.; Wang, W.; Ban, X.; Wang, J.H. A quantized kernel least mean square scheme with entropy-guided learning for intelligent data analysis. China Commun. 2017, 14, 1–10.

- Luo, X.; Deng, J.; Wang, W.; Wang, J.H.; Zhao, W. A Quantized Kernel Learning Algorithm Using a Minimum Kernel Risk-Sensitive Loss Criterion and Bilateral Gradient Technique. Entropy 2017, 19, 365.

- Luo, X.; Lv, Y.; Zhou, M.; Wang, W.; Zhao, W. A Laguerre neural network-based ADP learning scheme with its application to tracking control in the Internet of Things. Pers. Ubiquitous Comput. 2016, 20, 361–372.

- Luo, X.; Xu, Y.; Wang, W.; Yuan, M.; Ban, X.; Zhu, Y.; Zhao, W. Towards Enhancing Stacked Extreme Learning Machine With Sparse Autoencoder by Correntropy. J. Frankl. Inst. 2017.

- Luo, X.; Zhang, D.; Yang, L.T.; Liu, J.; Chang, X.; Ning, H. A kernel machine-based secure data sensing and fusion scheme in wireless sensor networks for the cyber-physical systems. Future Gener. Comput. Syst. 2016, 61, 85–96.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, X.; Wu, Q.; Zhao, W.; Luo, X. Technology-Facilitated Diagnosis and Treatment of Individuals with Autism Spectrum Disorder: An Engineering Perspective. Appl. Sci. 2017, 7, 1051. https://doi.org/10.3390/app7101051