RBF-Based Monocular Vision Navigation for Small Vehicles in Narrow Space below Maize Canopy

Abstract

1. Introduction

2. Materials and Methods

2.1. System Overview

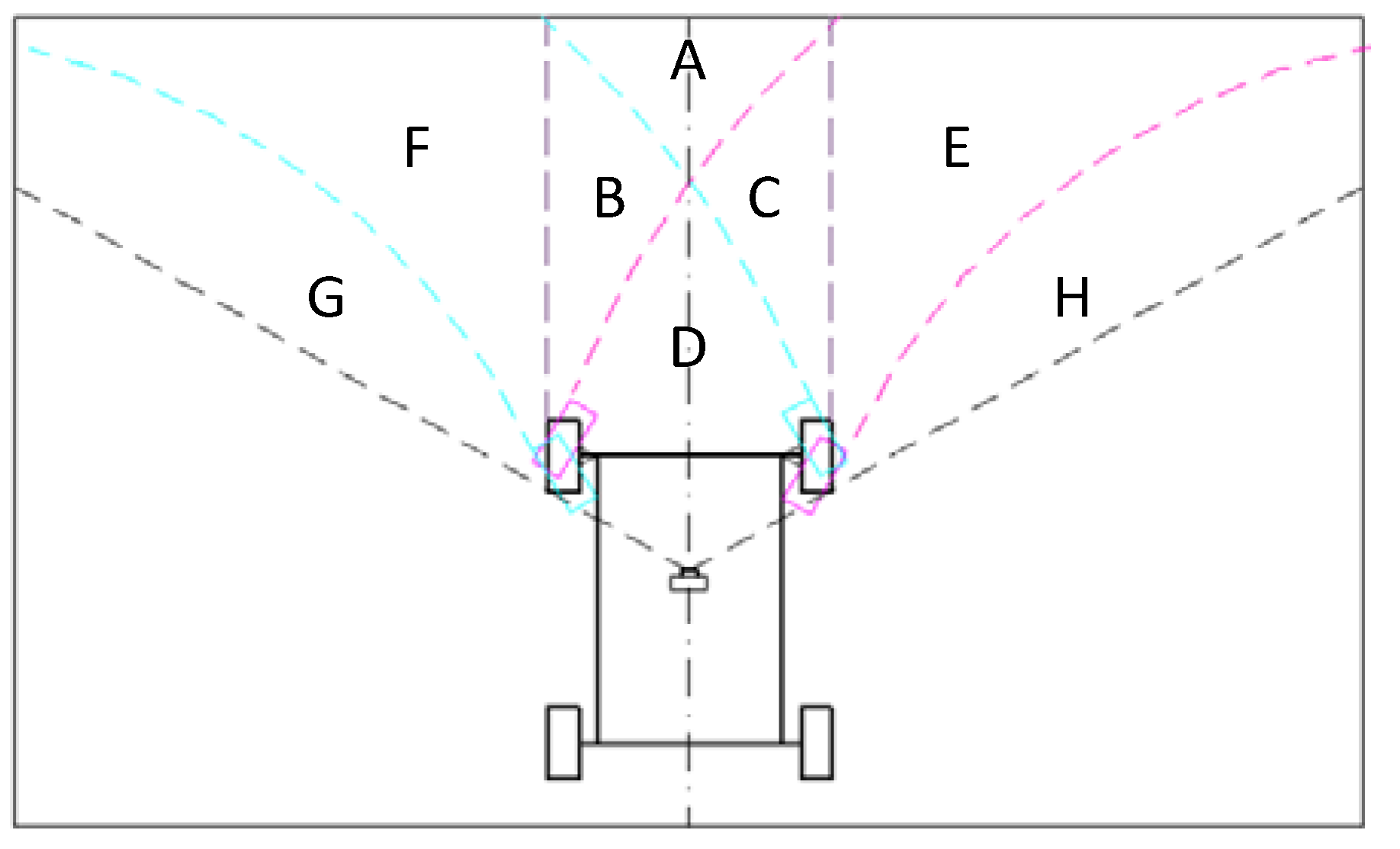

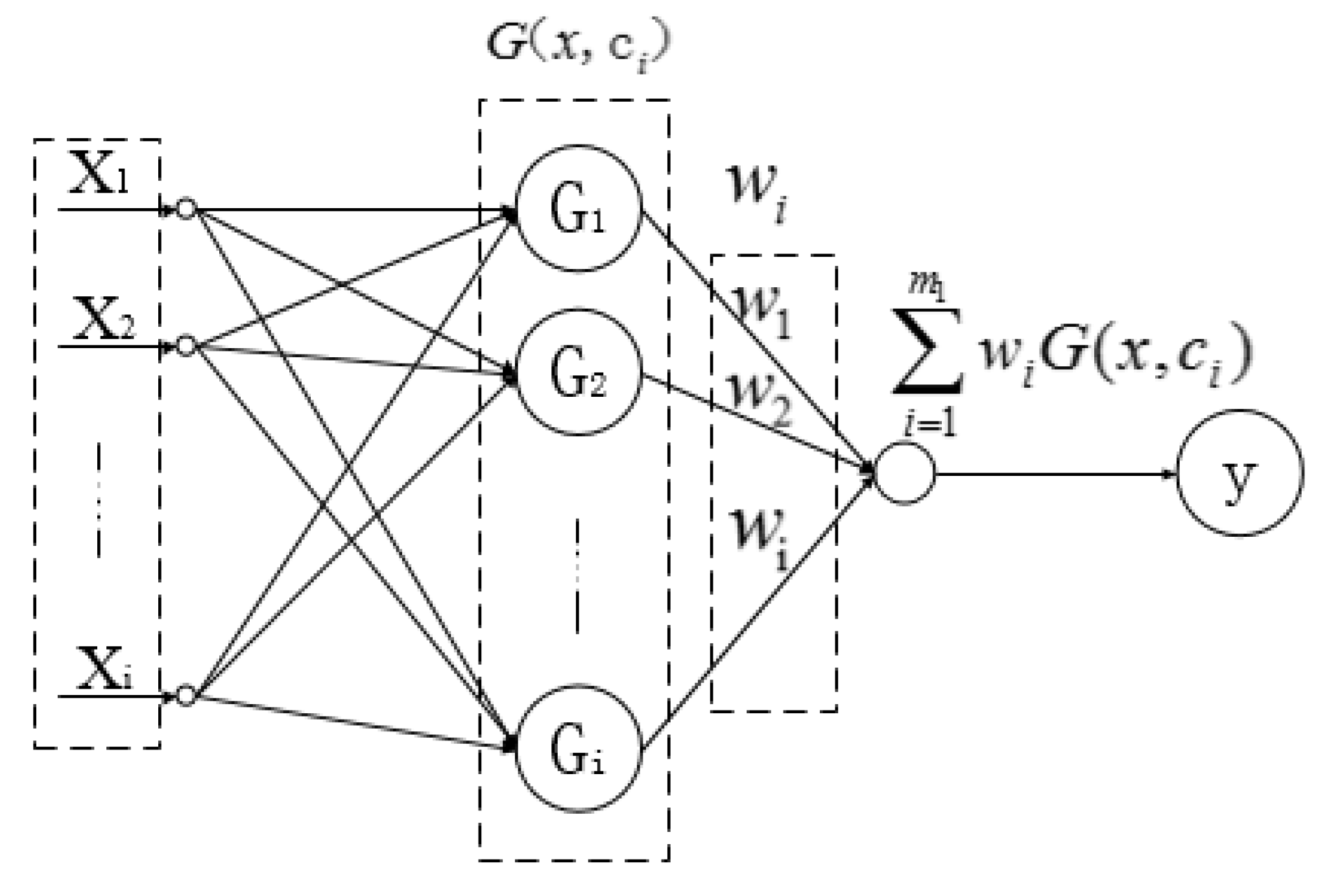

2.2. Navigation Method

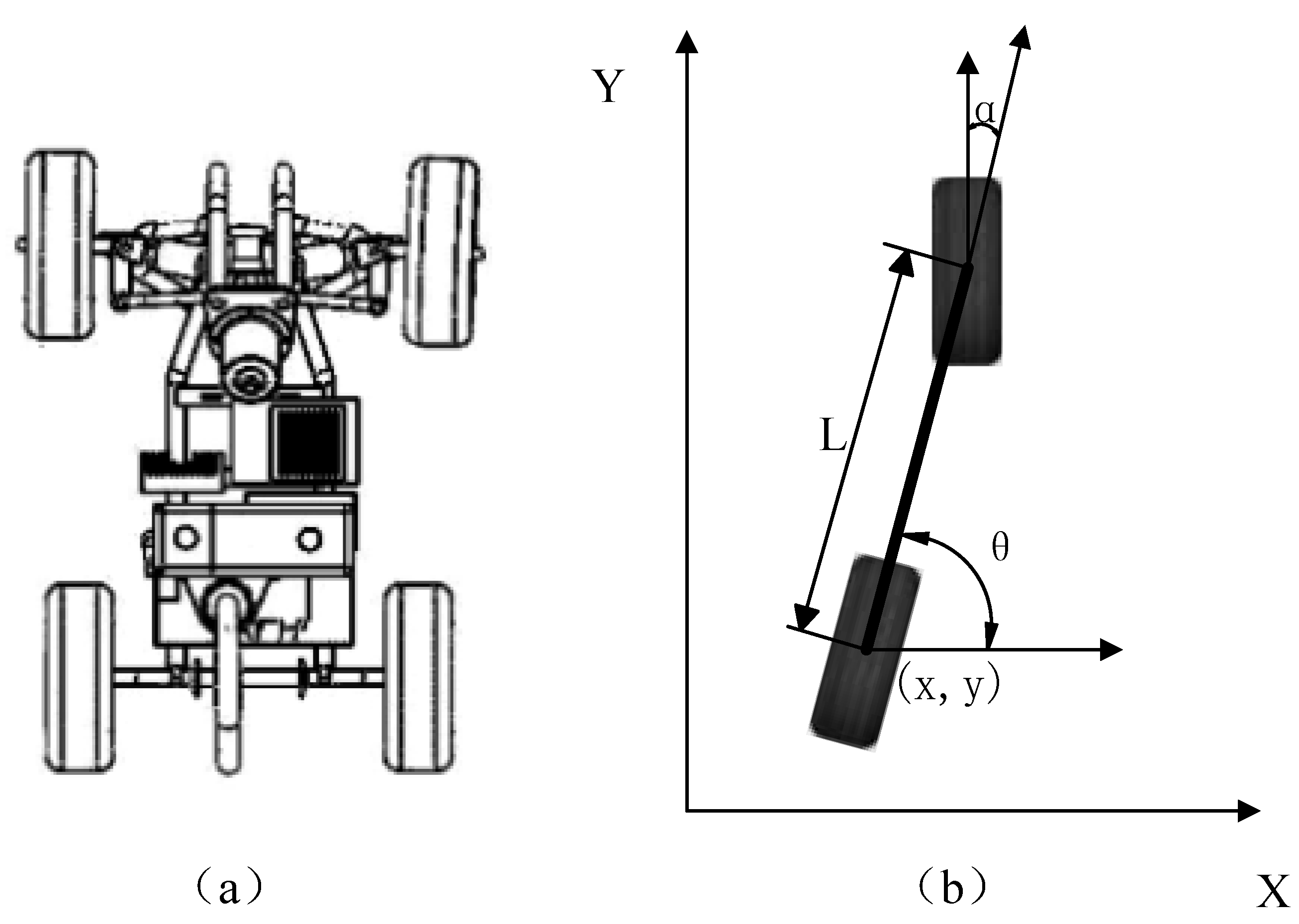

2.2.1. Vehicle Kinematic Model

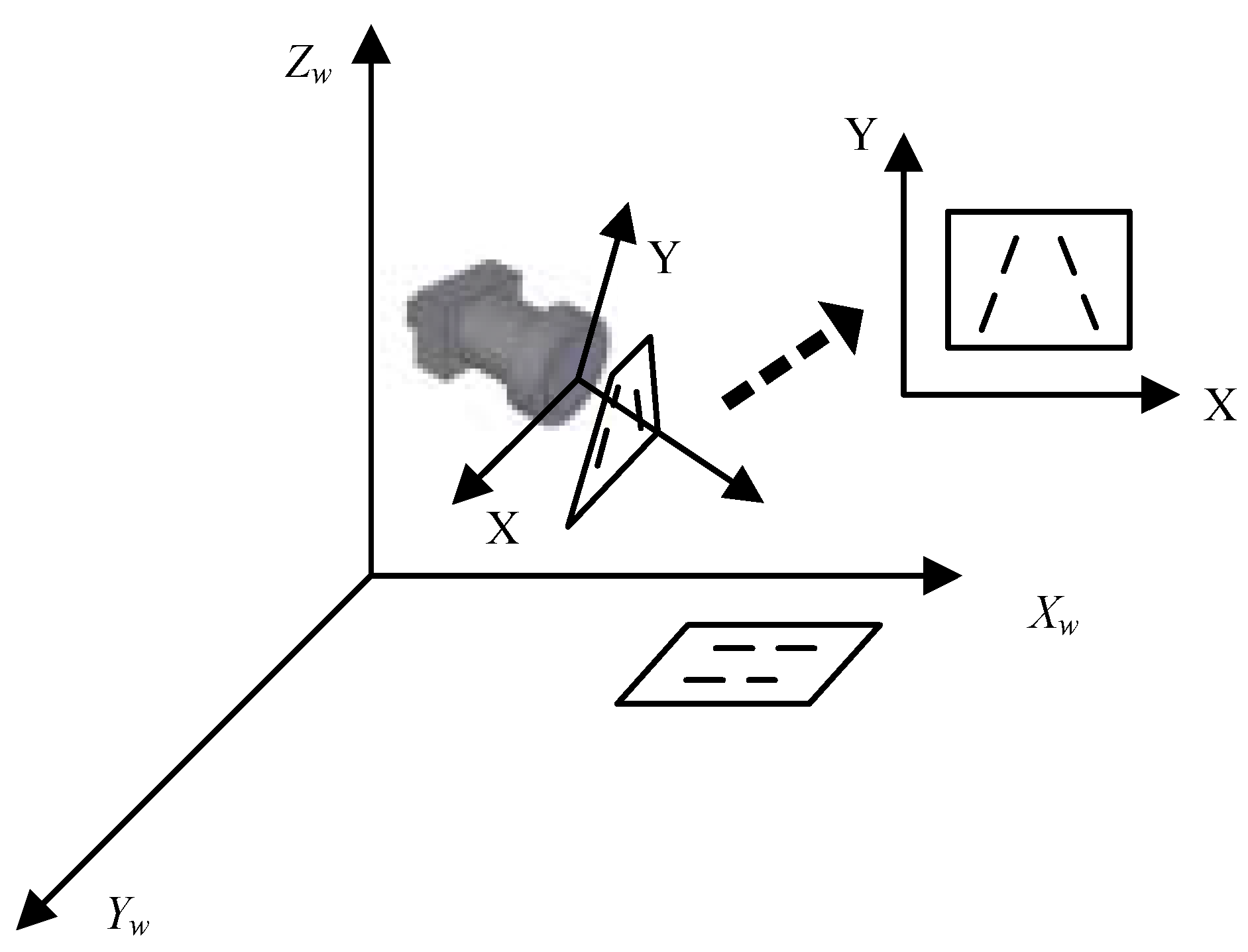

2.2.2. Camera Calibration

2.2.3. Image Recognition

- (i) RGB threshold analysis: the RGB values of the target stalk region are separated from those of the other regions, and the threshold range for the image is determined.

- (ii) Otsu threshold analysis: this study uses the Otsu algorithm for image binarization. The method selects the threshold that maximizes the between-class variance of the foreground and background. It is sensitive to noise and target size, and it segments best when the between-class variance has a single peak (is unimodal), as shown in Figure 7.

- (iii) Image block filtering: a neighborhood segmentation method is used to filter out the noise points that binarization produces from weeds and from corn stubble left over from the previous season.
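
Steps (ii) and (iii) can be illustrated with a minimal, pure-Python sketch. It is not the authors' implementation: the function names `otsu_threshold` and `remove_isolated`, the 8-neighbor rule, the `min_neighbors` parameter, and the tiny test image are all assumptions made for illustration, and the RGB range step (i) is skipped by starting from grayscale values.

```python
def otsu_threshold(gray):
    """Return the threshold t (0..255) that maximizes between-class variance.

    `gray` is a flat iterable of integer intensities in 0..255.
    Pixels with value <= t form the background class.
    """
    hist = [0] * 256
    for v in gray:
        hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # background pixel count so far
    sum0 = 0  # background intensity sum so far
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Proportional to the between-class variance (constant factor dropped).
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def remove_isolated(mask, min_neighbors=1):
    """Clear foreground pixels with fewer than `min_neighbors` foreground
    pixels among their 8 neighbors (an assumed, simple neighborhood filter)."""
    h, w = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            n = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    if (dy or dx) and 0 <= y + dy < h and 0 <= x + dx < w:
                        n += mask[y + dy][x + dx]
            if n < min_neighbors:
                out[y][x] = 0
    return out


# Hypothetical 5x5 grayscale patch: a bright one-pixel-wide "stalk" in
# column 2 plus one isolated bright noise pixel at row 3, column 4.
image = [
    [20, 20, 210, 20, 20],
    [20, 20, 220, 20, 20],
    [20, 20, 215, 20, 20],
    [20, 20, 205, 20, 200],
    [20, 20, 210, 20, 20],
]
t = otsu_threshold([v for row in image for v in row])
mask = [[1 if v > t else 0 for v in row] for row in image]
cleaned = remove_isolated(mask)
```

In this toy patch the threshold falls between the dark background and the bright pixels, and the filter removes only the isolated noise pixel while keeping the connected stalk column. A real field image would need a larger neighborhood or a minimum blob area, which this sketch does not attempt.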

2.2.4. Detection of the Crop Row Line

3. Experiments and Discussion

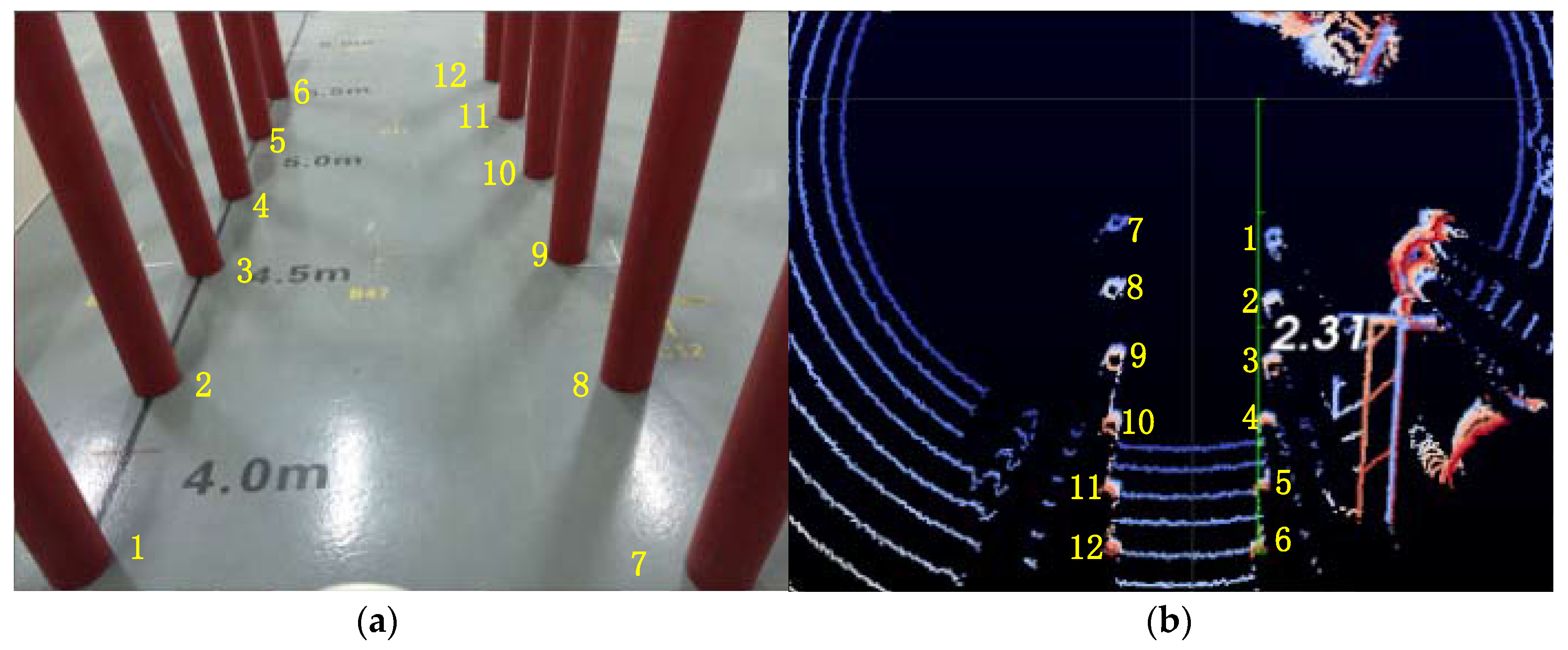

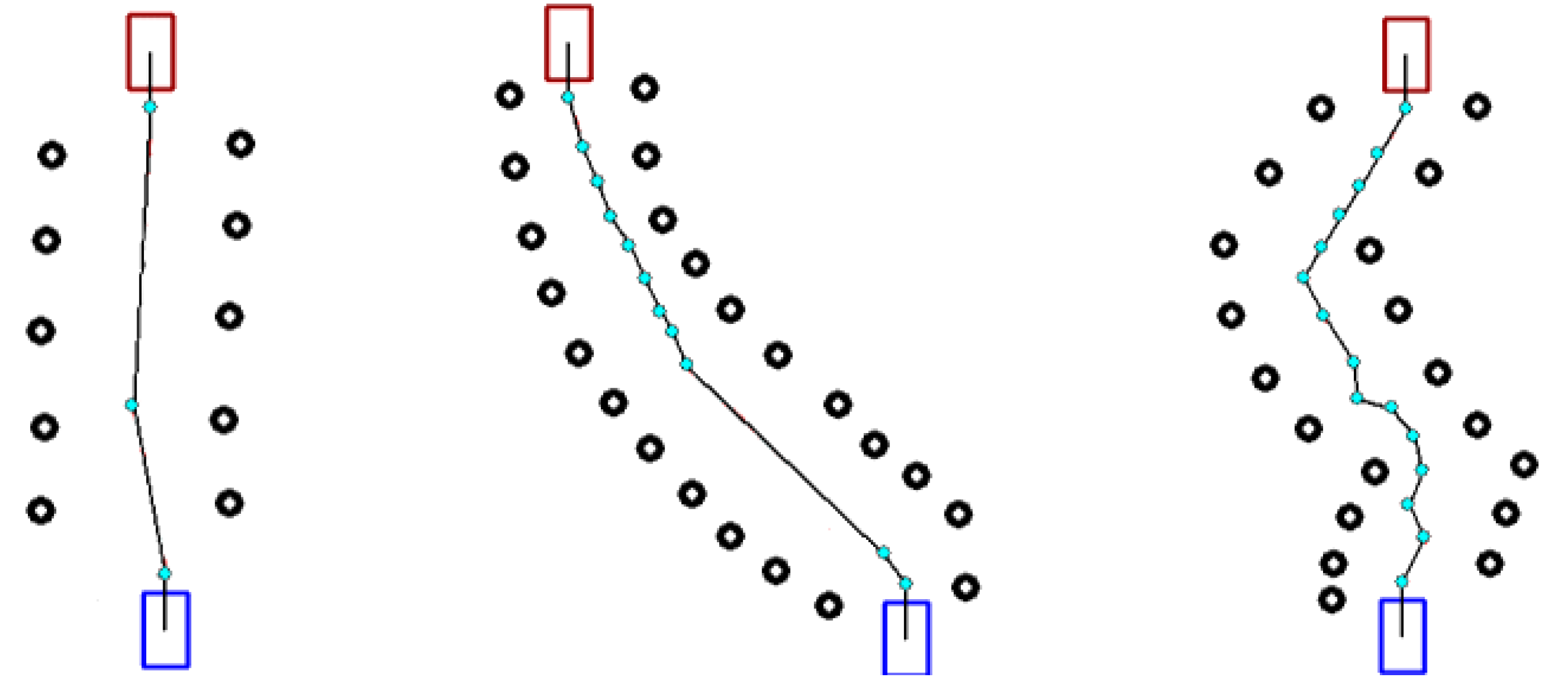

3.1. Laboratory Tests

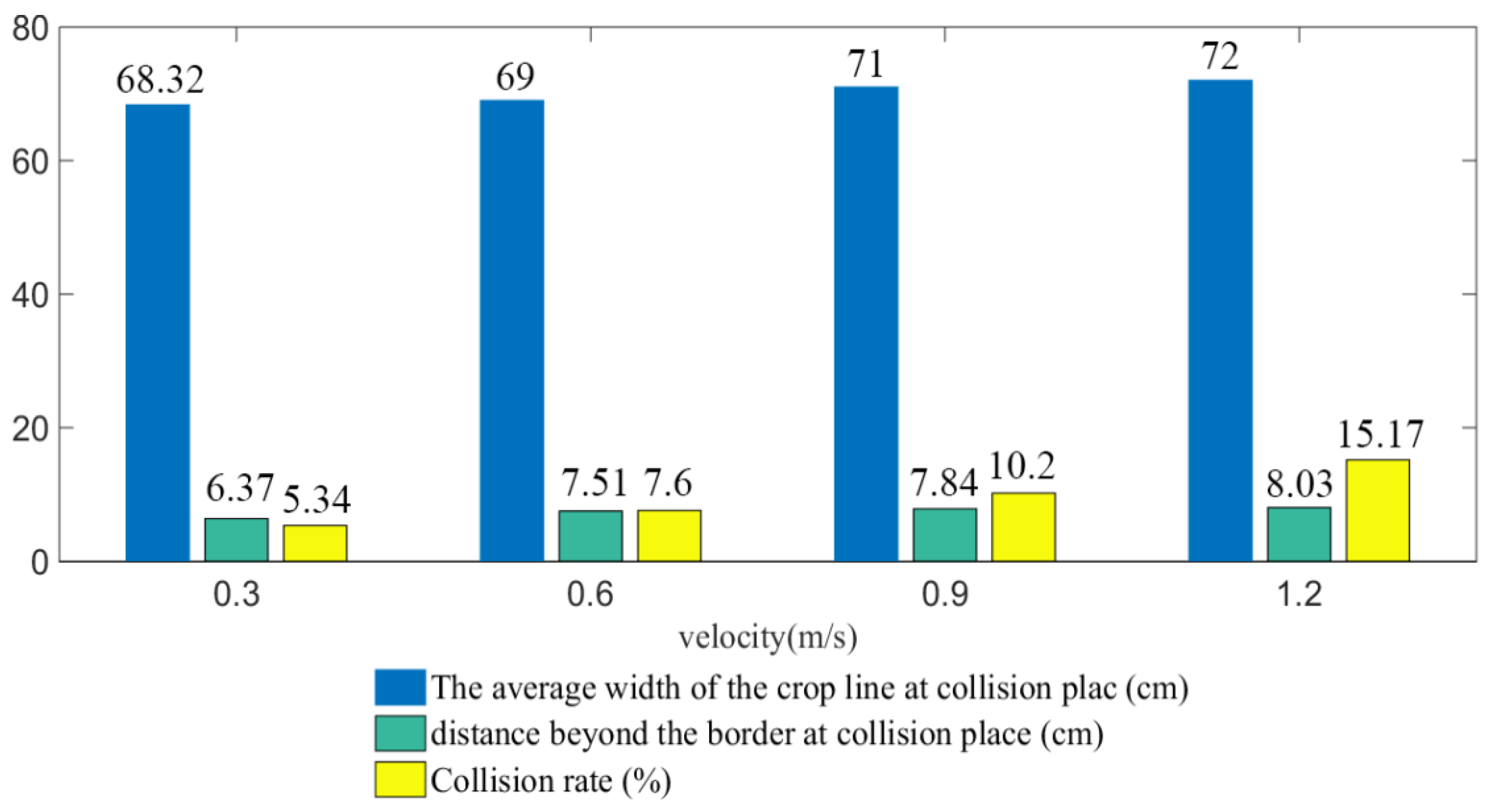

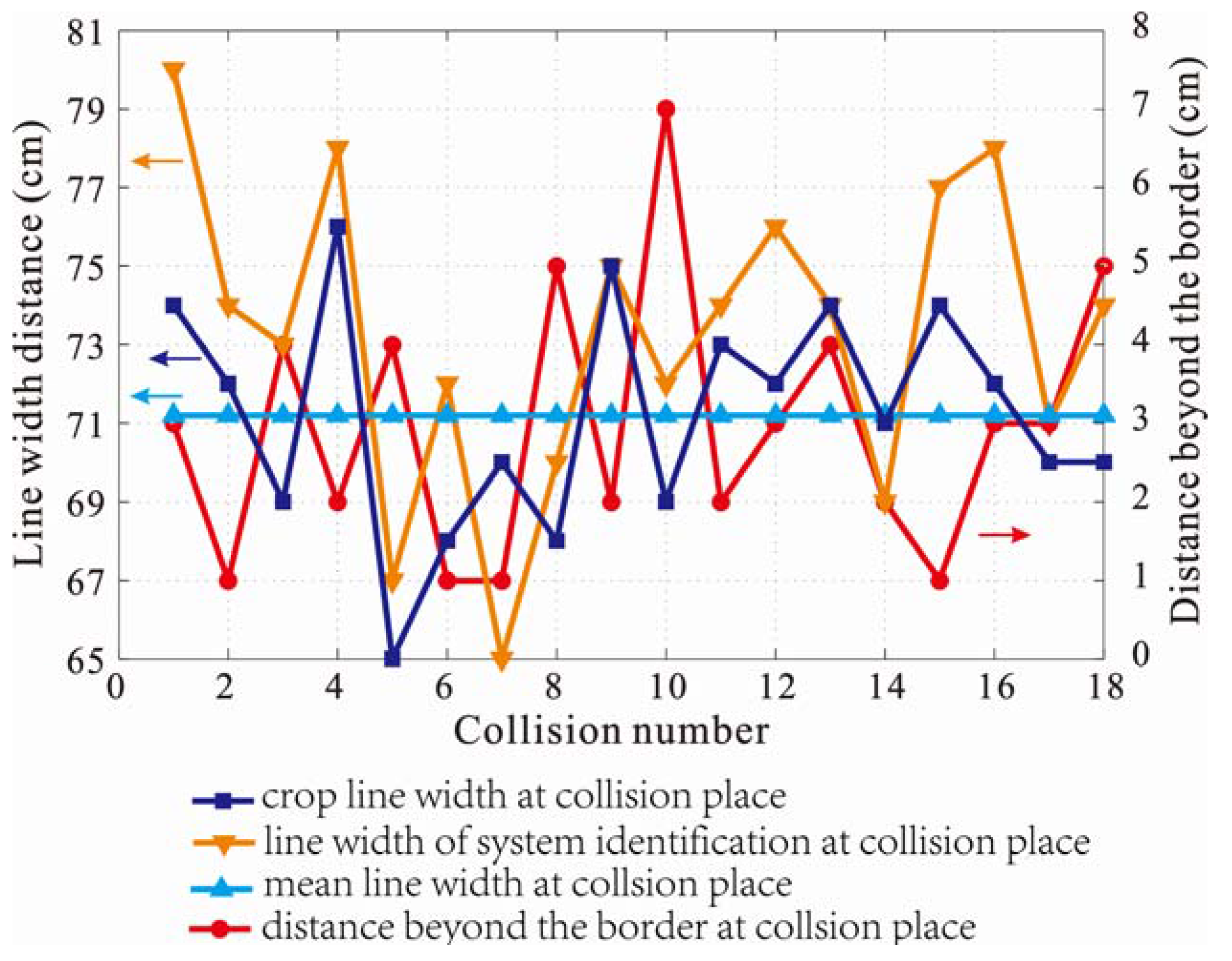

3.2. Field Trials

- (i) When the line width is small, the possibility of a vehicle collision with stalks is very high. For example, collisions occurred where the line width averaged about 68 cm, whereas the average measured line width was about 74 cm.

- (ii) When the system inaccurately recognizes the root position above the ground, a collision between the vehicle and a stalk can easily follow.

- (iii) Crop density also matters: when the vehicle drives through a sparsely planted area, a collision is likely.

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Velocity (m/s) | RBF (%): Straight | RBF (%): Left Turn | RBF (%): Right Turn | RBF (%): S-Turn | Hough Transform (%): Straight | Hough Transform (%): Left Turn | Hough Transform (%): Right Turn | Hough Transform (%): S-Turn |
|---|---|---|---|---|---|---|---|---|
| 0.3 | 0.00 | 0.03 | 0.07 | 0.17 | 0.00 | 0.13 | 0.17 | 0.30 |
| 0.5 | 0.00 | 0.10 | 0.07 | 0.20 | 0.00 | 0.17 | 0.13 | 0.33 |
| 0.7 | 0.00 | 0.07 | 0.10 | 0.13 | 0.00 | 0.17 | 0.20 | 0.43 |
| 0.9 | 0.03 | 0.13 | 0.07 | 0.20 | 0.00 | 0.27 | 0.27 | 0.43 |
| 1.1 | 0.00 | 0.10 | 0.13 | 0.23 | 0.00 | 0.30 | 0.33 | 0.50 |
| 1.3 | 0.07 | 0.10 | 0.10 | 0.27 | 0.03 | 0.40 | 0.37 | 0.60 |

| Algorithm | Straight: Time (ms) | Straight: L (cm) | Left-Turn: Time (ms) | Left-Turn: L (cm) | Right-Turn: Time (ms) | Right-Turn: L (cm) | S-Turn: Time (ms) | S-Turn: L (cm) |
|---|---|---|---|---|---|---|---|---|
| Hough | 25.16 | 25.4 | 25.70 | 22.7 | 25.76 | 21.5 | 26.31 | 16.4 |
| RBF | 28.07 | 27.6 | 30.64 | 26.3 | 30.22 | 27.7 | 31.40 | 28.1 |
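
As a rough reading aid for the Time (ms) columns above, the short sketch below computes how much longer the RBF row is than the Hough row for each path type. The numbers are copied from the table. The dictionary names and the assumption that both rows report the same kind of processing time are mine, since the table itself does not say.

```python
# Time (ms) values copied from the table above; names are illustrative only.
hough_ms = {"Straight": 25.16, "Left-Turn": 25.70, "Right-Turn": 25.76, "S-Turn": 26.31}
rbf_ms = {"Straight": 28.07, "Left-Turn": 30.64, "Right-Turn": 30.22, "S-Turn": 31.40}

# Absolute difference in ms and relative difference as a fraction of Hough time.
extra_ms = {k: rbf_ms[k] - hough_ms[k] for k in hough_ms}
extra_rel = {k: extra_ms[k] / hough_ms[k] for k in hough_ms}

for path in hough_ms:
    print(f"{path}: +{extra_ms[path]:.2f} ms ({100 * extra_rel[path]:.1f}% slower)")
```

Under that assumption, the RBF times are roughly 3 to 5 ms longer than the Hough times, which is about 12% to 19% in relative terms.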

| Trial | Total Number of Maize Plants | Number of Collisions with Robot | Collision Rate | Average Line Width (cm) | Average Line Width at Collision (cm) | Distance Beyond the Border at Collision Place (cm) |
|---|---|---|---|---|---|---|
| 1 | 357 | 18 | 5.04% | 74.5 | 68.1 | 5.8 |
| 2 | 329 | 21 | 6.38% | 72.8 | 67.4 | 6.4 |
| 3 | 336 | 19 | 5.65% | 74.2 | 68.8 | 7.2 |
| 4 | 341 | 16 | 4.69% | 76.4 | 69.0 | 6.1 |
| Total | 1363 | 74 | 5.43% | - | - | - |
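
The collision rates in the table above follow directly from the counts: each rate is the number of collisions divided by the total number of maize plants in that trial. The sketch below recomputes them as a consistency check. The variable names are illustrative, and the data are copied from the table.

```python
# (total maize plants, collisions with robot) per trial, copied from the table.
trials = [(357, 18), (329, 21), (336, 19), (341, 16)]

# Per-trial collision rate in percent, rounded to two decimals as in the table.
rates = [round(100 * c / n, 2) for n, c in trials]

# The last table row pools the counts rather than averaging the four rates.
total_plants = sum(n for n, _ in trials)
total_collisions = sum(c for _, c in trials)
overall = round(100 * total_collisions / total_plants, 2)

print(rates, total_plants, total_collisions, overall)
```

The recomputed values match the table: 5.04%, 6.38%, 5.65% and 4.69% per trial, and 74 collisions among 1363 plants give the pooled rate of 5.43%.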

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, L.; Mei, T.; Niu, R.; Wang, J.; Liu, Y.; Chu, S. RBF-Based Monocular Vision Navigation for Small Vehicles in Narrow Space below Maize Canopy. Appl. Sci. 2016, 6, 182. https://doi.org/10.3390/app6060182
