On the Use of the Humanoid Bioloid System for Robot-Assisted Transcription of Mexican Spanish Speech

Abstract

1. Introduction

- (a)

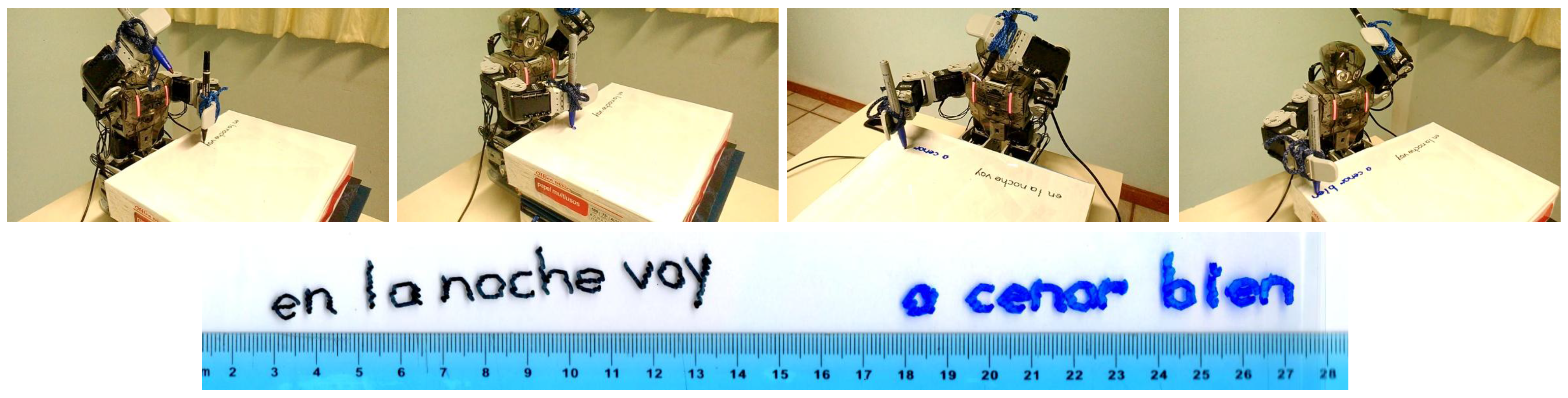

- development of a physical speech transcription system by means of robot-assisted handwriting;

- (b)

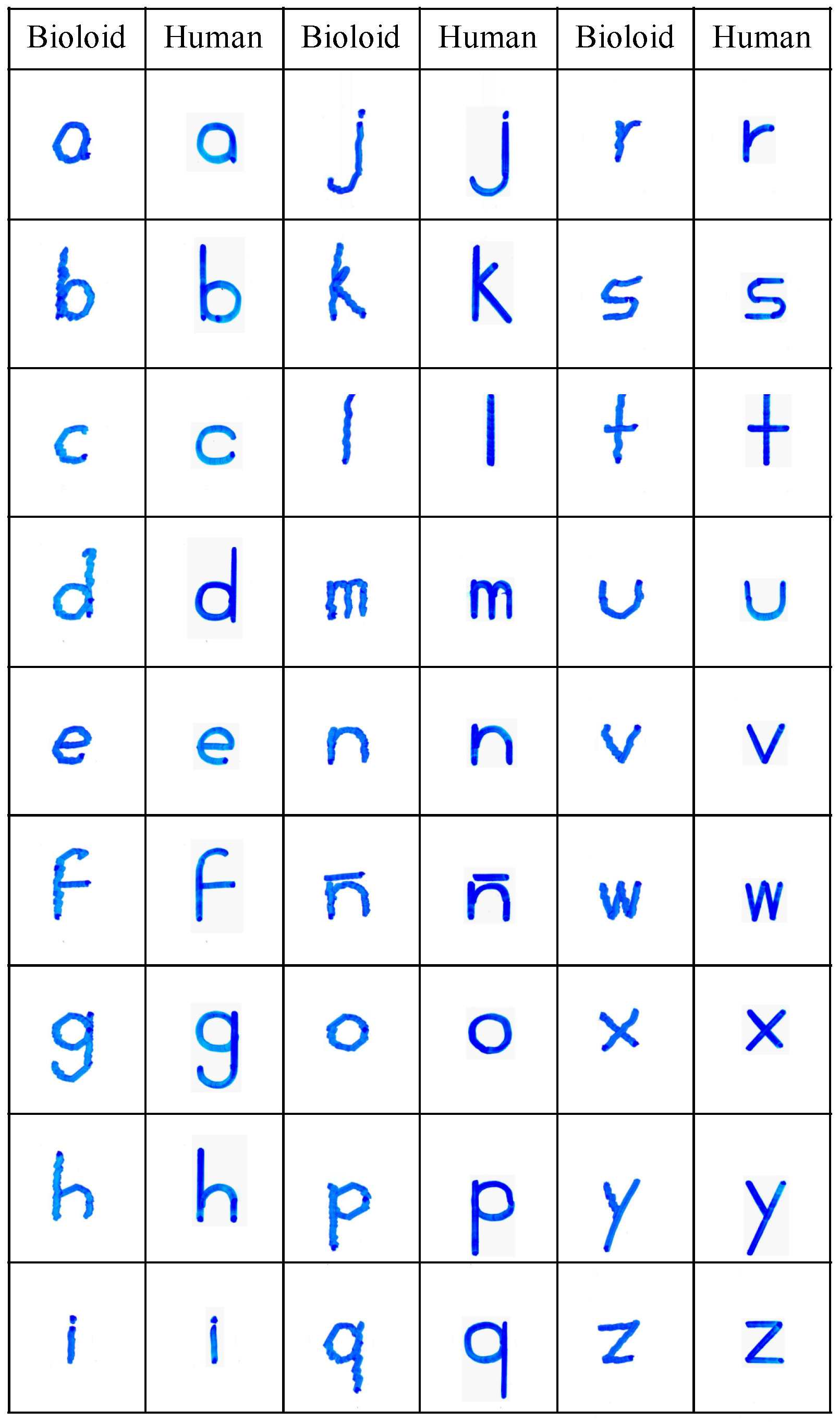

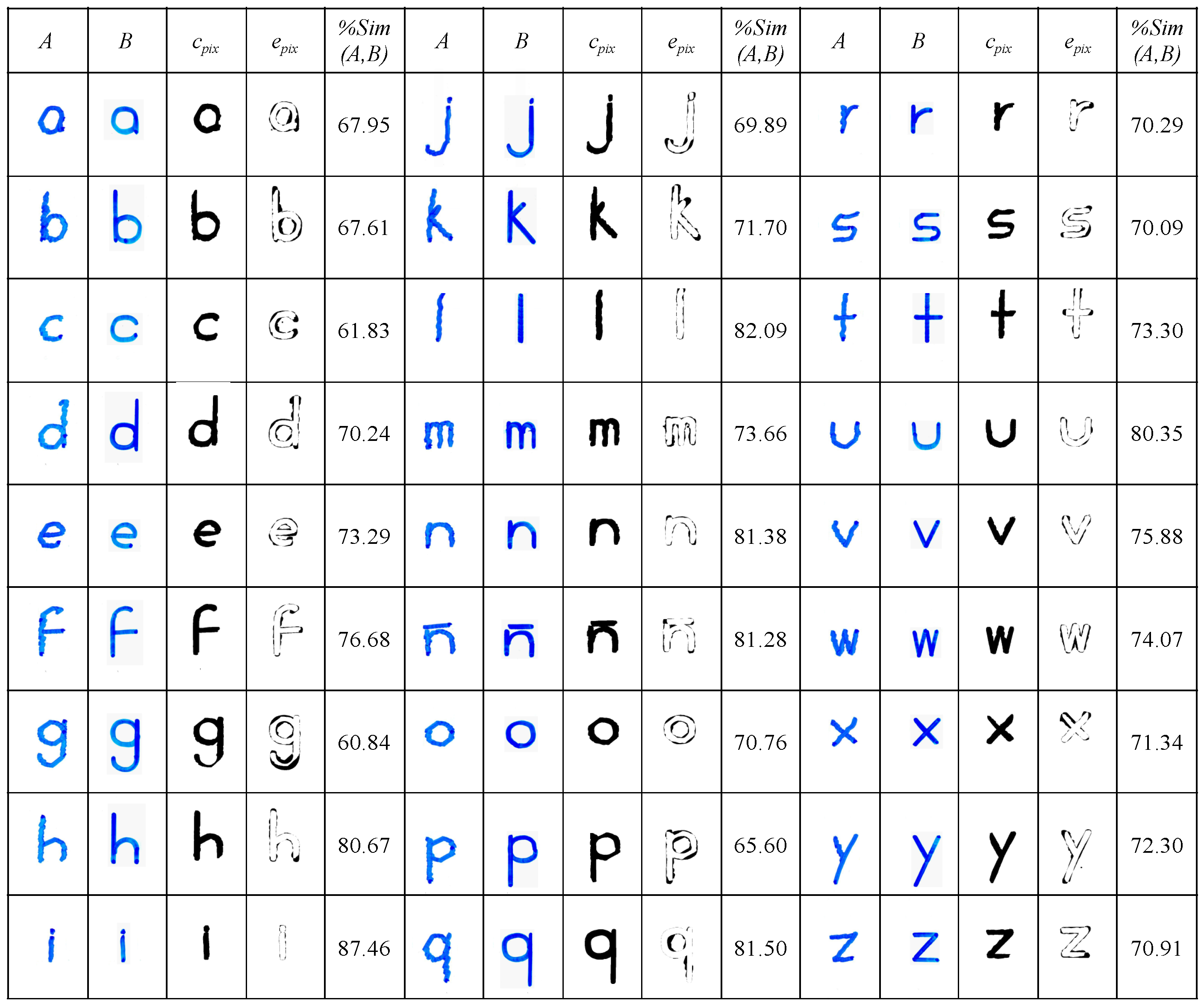

- performance evaluation of the humanoid Bioloid to draw small (1.0 cm width) alphabet characters in curved lowercase format;

- (c)

- development of the kinematic models for the humanoid Bioloid to perform the drawing of the Mexican Spanish alphabet with both arms;

- (d)

- development of a two-arm writing scheme for the transcription (handwriting) of large spoken sentences (multiple words).

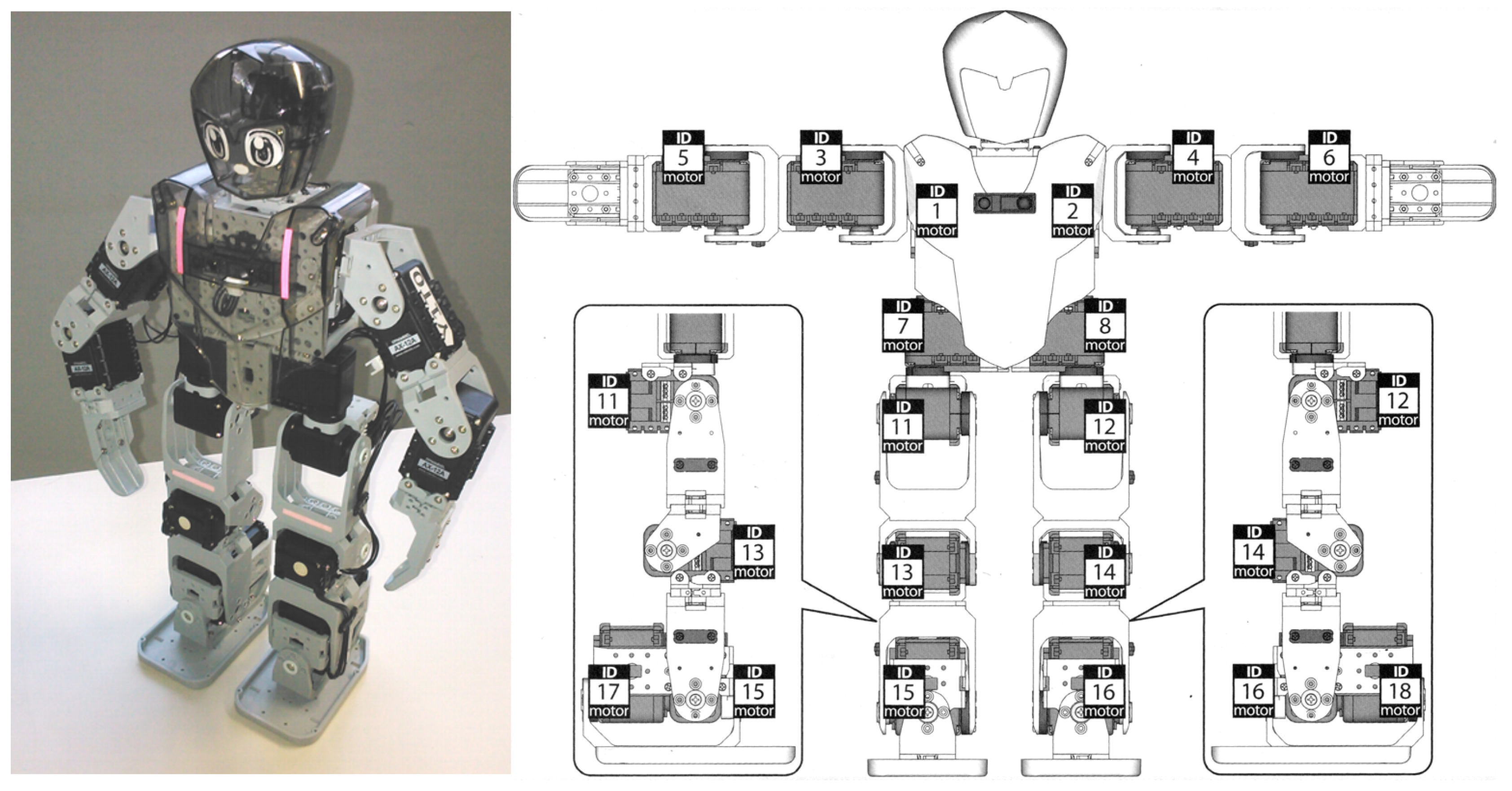

2. The Bioloid System

Programming Platform

- (a) RoboPlus Task: This utility is used for the development of “task code” (i.e., program code) for management and control of the Bioloid’s hardware resources. Sequences of motions can be coordinated by specific task codes, and complex functions or actions can be accomplished by the coordination of task codes.

- (b) RoboPlus Manager: This utility is used for the maintenance of the Bioloid’s hardware components. Its major functions are updating and restoring the controller’s firmware and testing the controller and peripheral devices (servomechanisms, sensors).

- (c) RoboPlus Motion: This utility is used for the development of “step” motions for the Bioloid. These motions can then be managed by task code.

- (a) It only allows the development of standalone applications.

- (b) There is no direct integration with other programming languages.

- (c) Motions created with RoboPlus Motion can only be accessed via RoboPlus Task code.

- (d) The creation scheme for motions, which is based on “steps”, is suitable for the realization of medium-to-large straight or curved trajectories, such as those described in [28]. However, this scheme is not suitable for the realization of fine movements or trajectories required to draw small and curved lowercase alphabet characters.

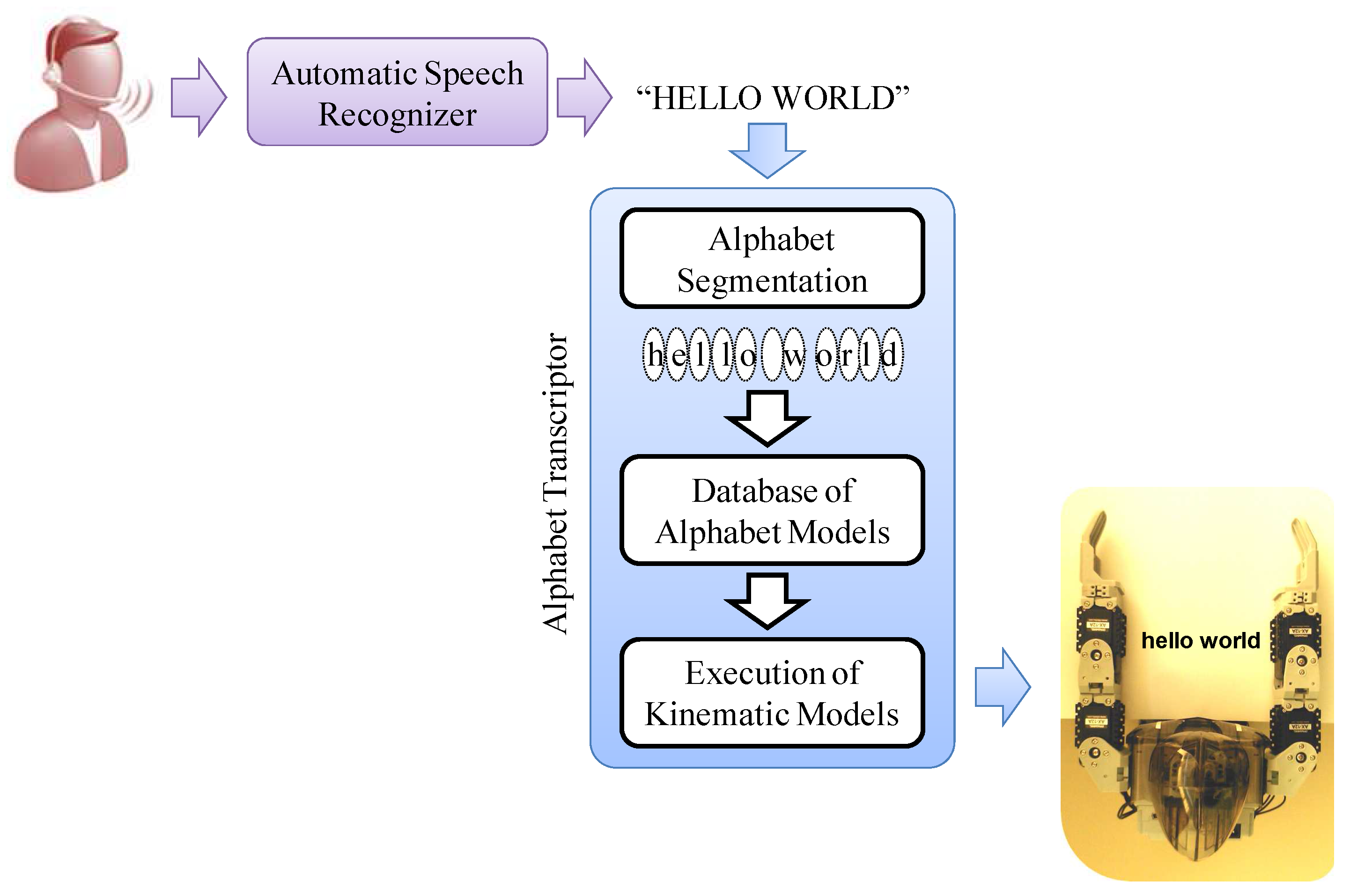

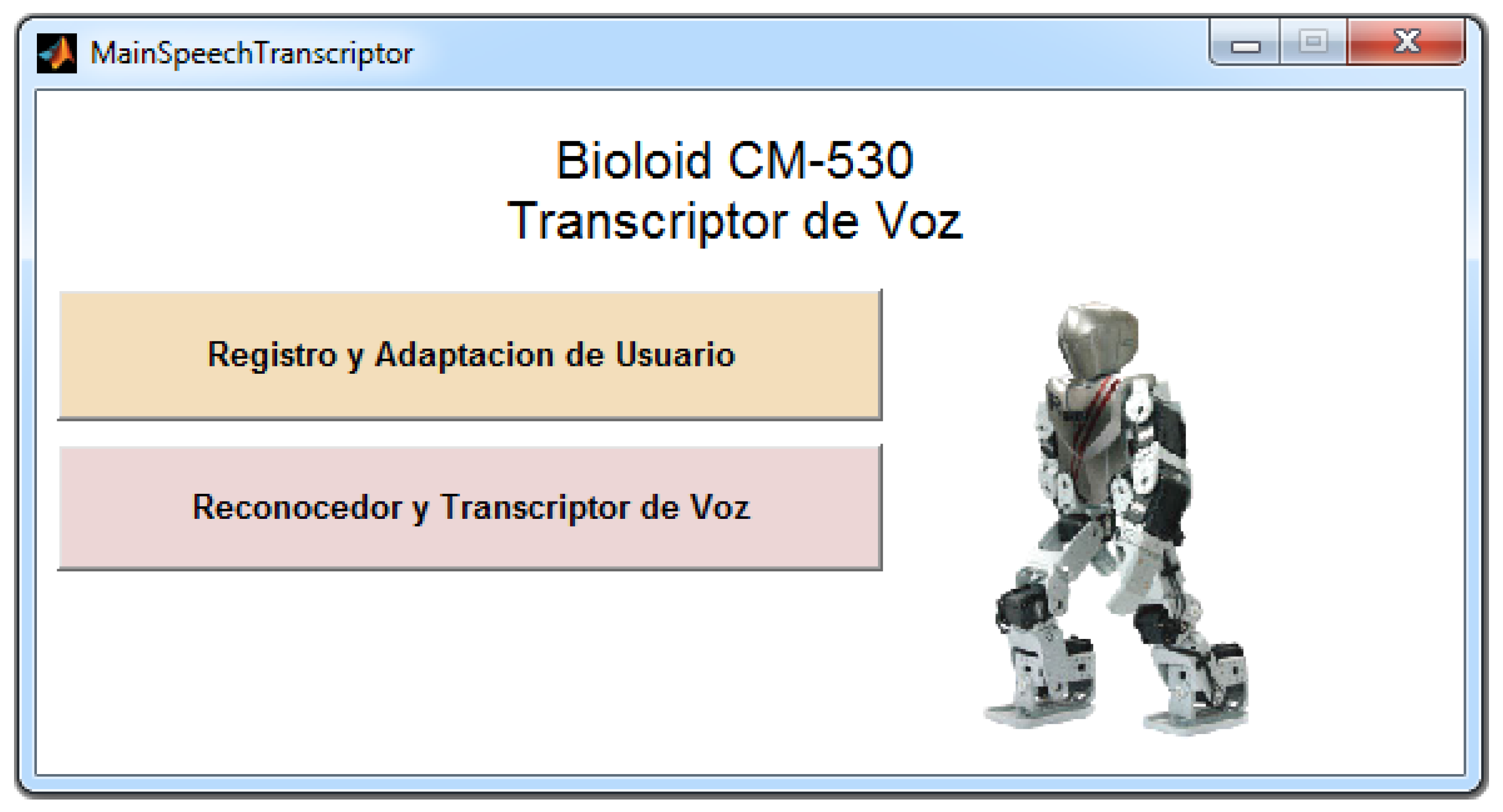

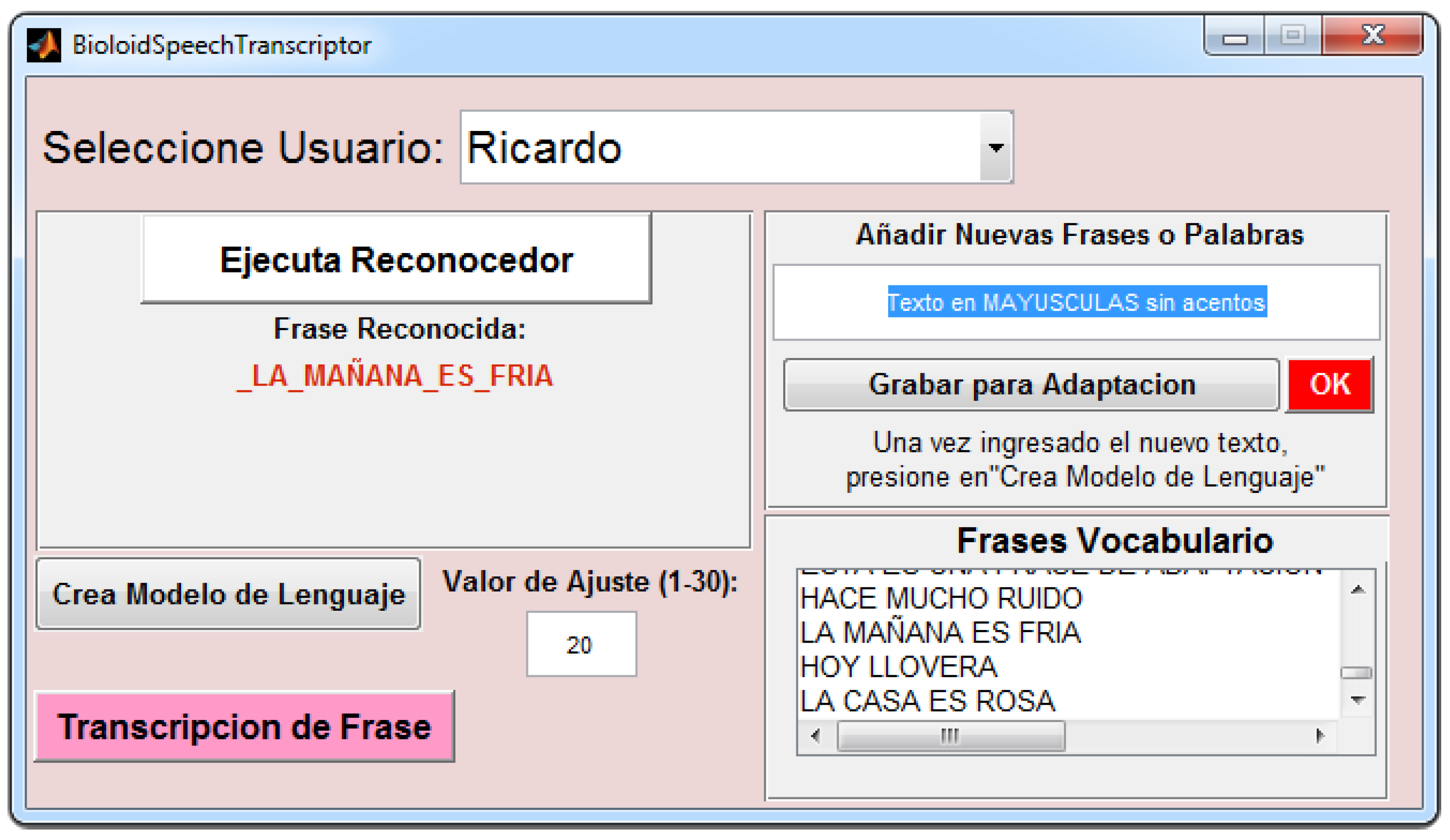

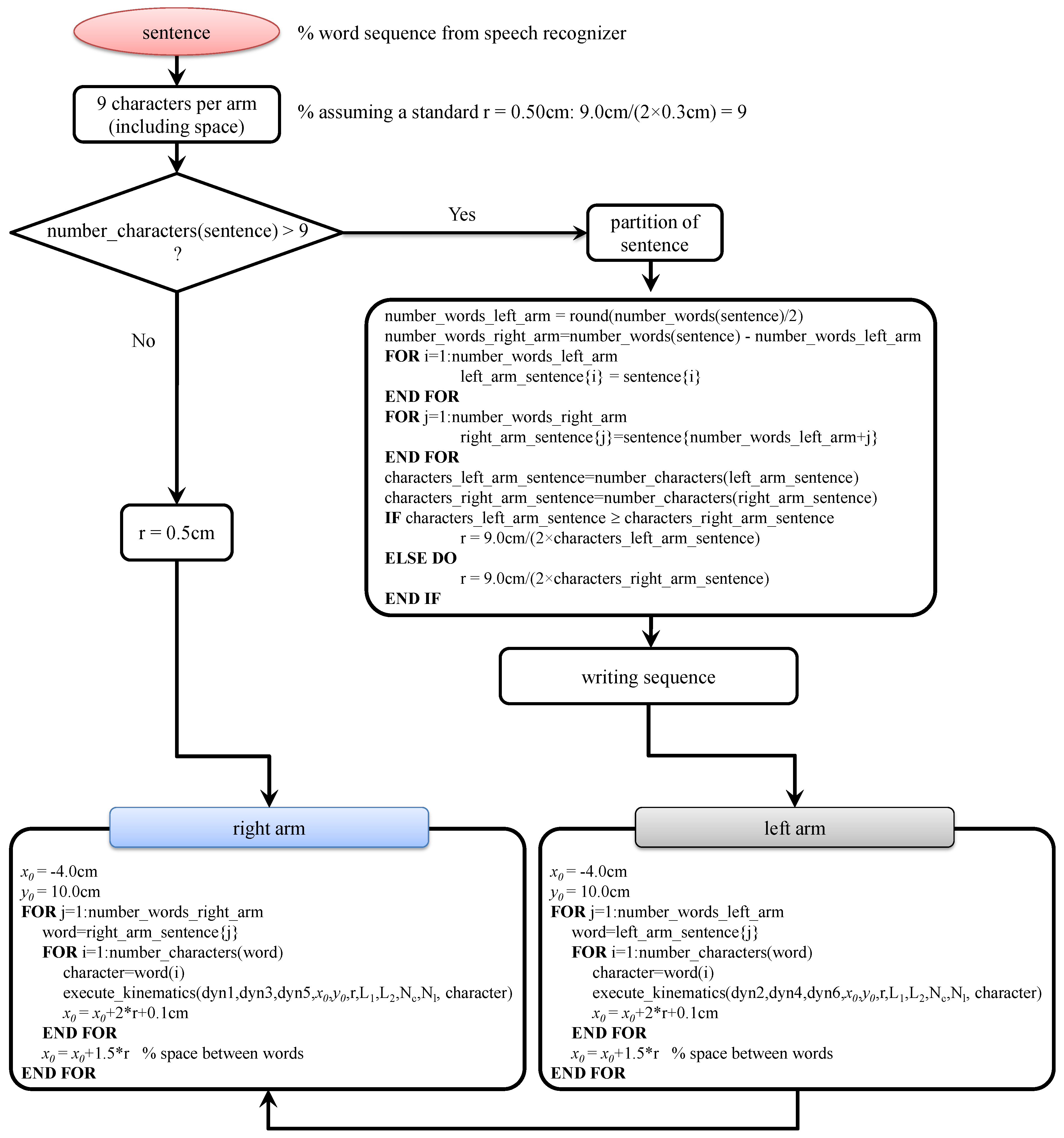

3. Speech-to-Text Transcriptor

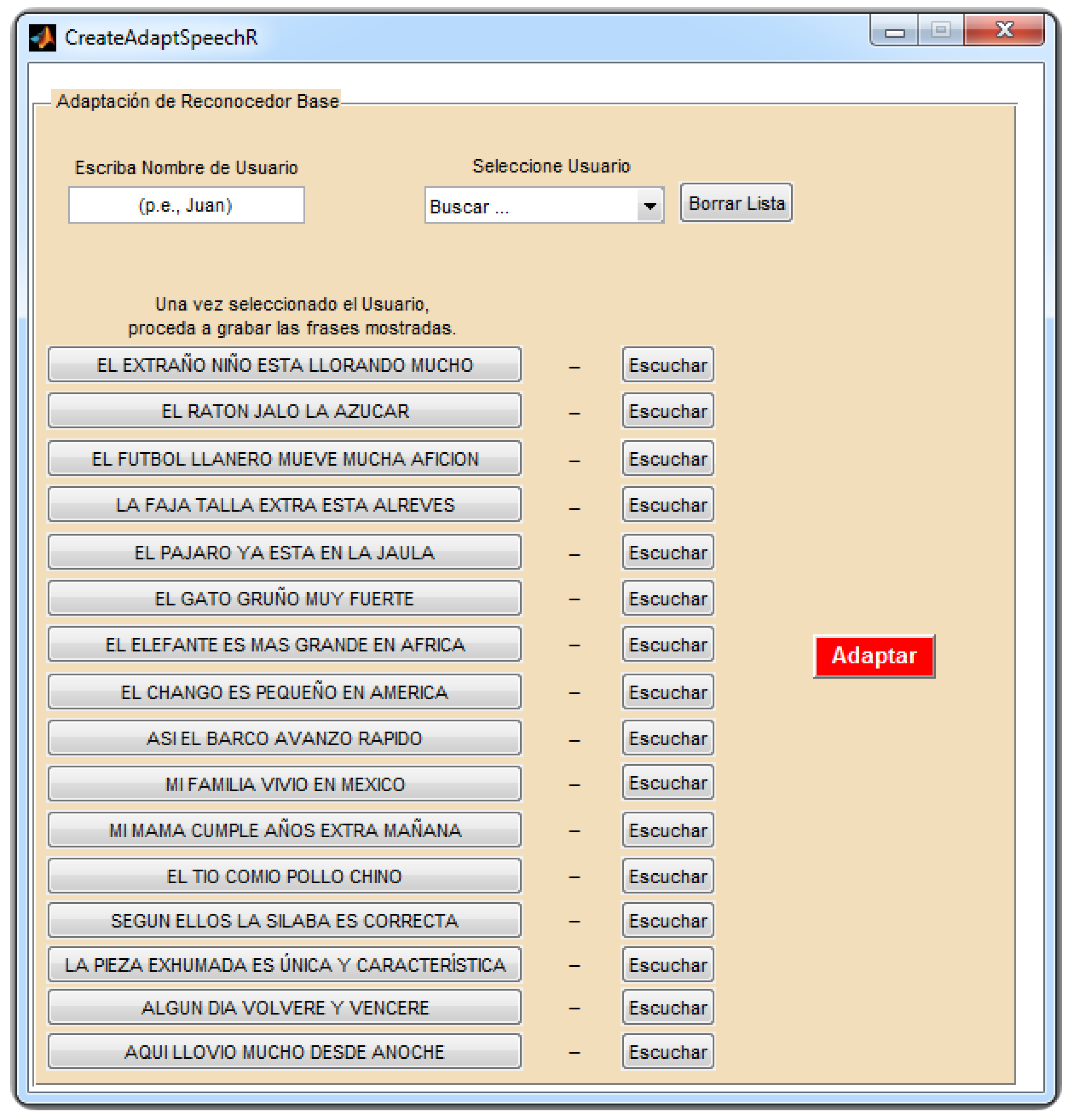

3.1. Speech Recognizer and Alphabet Segmentation

3.2. Database of Alphabet Models

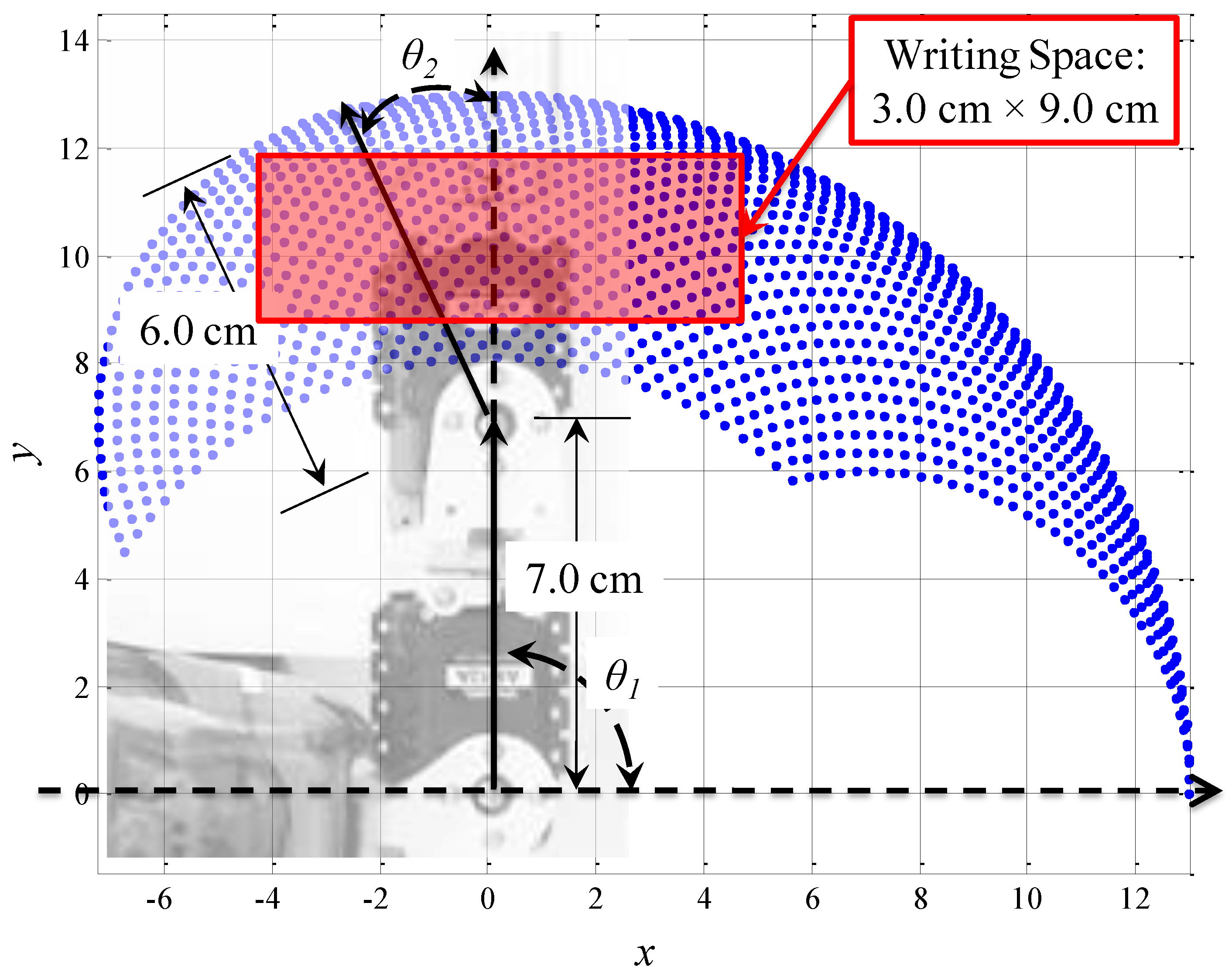

3.2.1. Writing Space

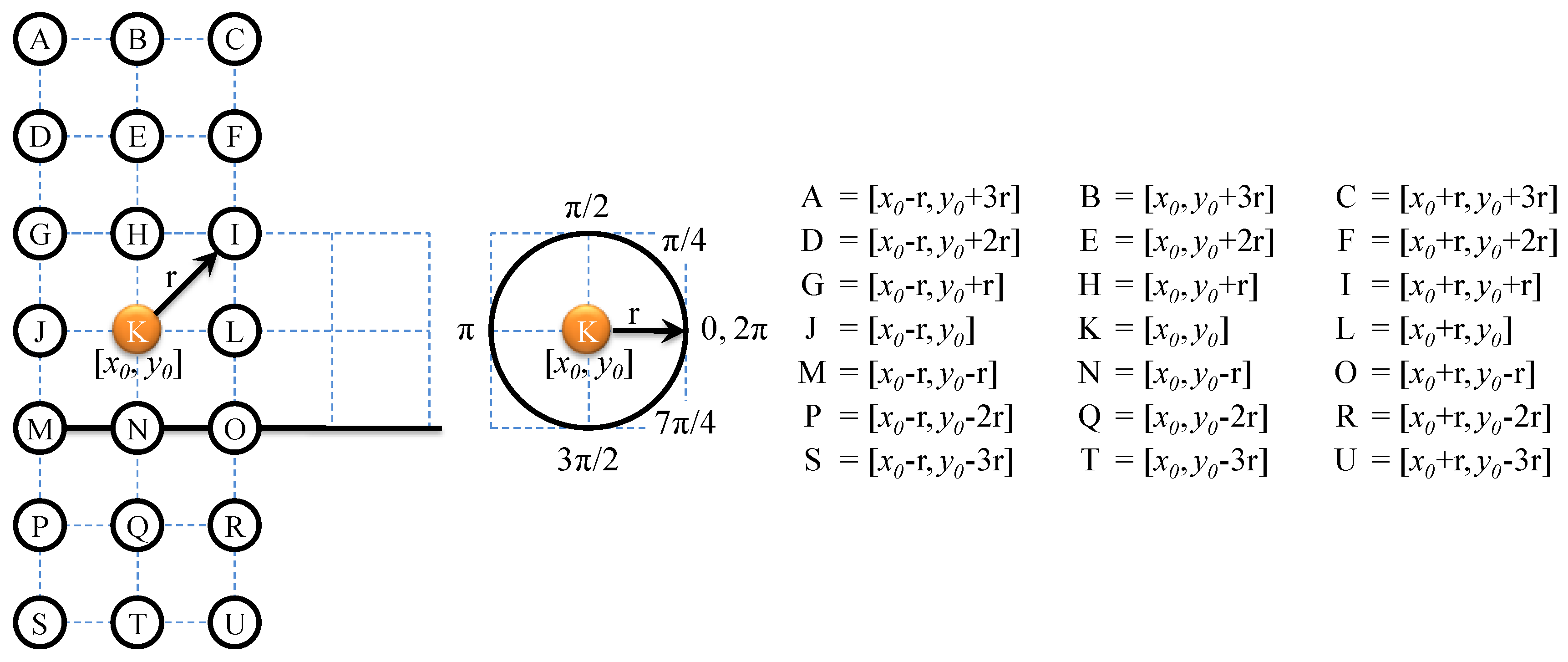

3.2.2. Inverse Kinematics

| Alphabet | Straight Lines: Start Point | Straight Lines: End Point | Curved Lines-Circumferences: Center, Radius | Curved Lines-Circumferences: Angular Range |
|---|---|---|---|---|
| a | I, where I(2) = I(2) − r/2 | O | K, r | |
| b | A | M | K, r | |
| c | | | K, r | |
| d | C | O | K, r | |
| e | J | L | K, r | |
| f | D | M | E, r | |
| | G | I | | |
| g | R | I | Q, r | |
| | | | K, r | |
| h | O | L | K, r | |
| | M | A | | |
| i | N | H | | |
| | H, where H(2) = H(2) + 0.2 | H, where H(2) = H(2) + 0.3 | | |
| j | R | I | Q, r | |
| | I, where I(2) = I(2) + 0.2 | I, where I(2) = I(2) + 0.3 | | |
| k | A | M | | |
| | E | G | | |
| | G | O | | |
| l | B | N | | |
| ll | B, where B(1) = B(1) − 0.3 | N, where N(1) = N(1) − 0.3 | | |
| | B, where B(1) = B(1) + 0.3 | N, where N(1) = N(1) + 0.3 | | |
| m | O | I, where I(2) = I(2) − r/2 | K, where K(1) = K(1) + r/2 and K(2) = K(2) + r/2, r = r/2 | |
| | N | H, where H(2) = H(2) − r/2 | K, where K(1) = K(1) − r/2 and K(2) = K(2) + r/2, r = r/2 | |
| | G | M | | |
| n | O | L | K, r | |
| | G | M | | |
| ñ | O | L | K, r | |
| | G | M | | |
| | G, where G(2) = G(2) + 0.2 | I, where I(2) = I(2) + 0.2 | | |
| o | | | K, r | |
| p | G | S | K, r | |
| q | I | U | K, r | |
| r | G | M | K, r | |
| s | I | G, where G(1) = G(1) + r/2 | K, where K(1) = K(1) − r/2 and K(2) = K(2) + r/2, r = r/2 | |
| | J, where J(1) = J(1) + r/2 | L, where L(1) = L(1) − r/2 | K, where K(1) = K(1) + r/2 and K(2) = K(2) − r/2, r = r/2 | |
| | M | O, where O(1) = O(1) − r/2 | | |
| t | B | N | | |
| | G | I | | |
| u | G | J | K, r | |
| | L | I | | |
| v | G | N | | |
| | N | I | | |
| w | G | N, where N(1) = N(1) − r/2 | | |
| | N, where N(1) = N(1) − r/2 | H | | |
| | H | O, where O(1) = O(1) − r/2 | | |
| | O, where O(1) = O(1) − r/2 | I | | |
| x | G | O | | |
| | I | M | | |
| y | G | N | | |
| | I | S | | |
| z | G | I | | |
| | I | M | | |
| | M | O | | |
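As an illustration of how rows of the table above could be held in a program, the following minimal sketch encodes three characters ("t", "o" and "ll") as lists of strokes. The `Stroke` class, the `shifted` helper, the grid coordinates in `GRID` and the radius value `R` are all hypothetical and chosen only for this example; the actual positions of the reference points A to U are defined in the writing-space figure and are not reproduced here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class Stroke:
    """One table row: an optional straight line and an optional curve."""
    start: Optional[Point] = None    # straight line: start point
    end: Optional[Point] = None      # straight line: end point
    center: Optional[Point] = None   # curve: center point
    radius: Optional[float] = None   # curve: radius


# Hypothetical values (cm); the real grid comes from the writing-space figure.
R = 0.5
GRID = {
    "B": (0.5, 2.0),
    "G": (0.0, 1.0),
    "I": (1.0, 1.0),
    "K": (0.5, 0.5),
    "N": (0.5, 0.0),
}


def shifted(name: str, dx: float = 0.0, dy: float = 0.0) -> Point:
    """Return a grid point with offsets, e.g. 'B, where B(1) = B(1) - 0.3'."""
    x, y = GRID[name]
    return (x + dx, y + dy)


ALPHABET = {
    # t: B -> N, then G -> I
    "t": [Stroke(start=GRID["B"], end=GRID["N"]),
          Stroke(start=GRID["G"], end=GRID["I"])],
    # o: a single curve centered at K with radius r
    "o": [Stroke(center=GRID["K"], radius=R)],
    # ll: two vertical lines shifted by -0.3 and +0.3 on the first coordinate
    "ll": [Stroke(start=shifted("B", dx=-0.3), end=shifted("N", dx=-0.3)),
           Stroke(start=shifted("B", dx=0.3), end=shifted("N", dx=0.3))],
}
```

Keeping each row as one record means a character is drawn by iterating over its strokes in table order, which mirrors how the rows are listed per character.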

- (a) Define the start (s) and end (e) x-y points: $(x_s, y_s)$, $(x_e, y_e)$.

- (b) Define the vector X with equally-spaced points between $x_s$ and $x_e$.

- (c) Define the vector Y with equally-spaced points between $y_s$ and $y_e$.

- (d) Because X and Y are equally-sized vectors, each [X(i), Y(i)], where i = 1, ..., n and n is the number of points, represents the coordinate of the i-th x-y point between the start and end points.

- (e) For each [X(i), Y(i)], compute the inverse kinematics to obtain the associated joint angles, using the expressions for the right arm or for the left arm as appropriate. The arctangent function is used in this computation.
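The straight-line steps above can be sketched as follows. This is a minimal illustration that assumes a generic planar two-link arm, which is not necessarily the kinematic model used for the Bioloid in this work. The link lengths `l1` and `l2`, the function names and the `elbow_sign` parameter (used here as a stand-in for the difference between the right and left arm) are assumptions made for the example.

```python
import math


def linspace(a, b, n):
    """Return n equally-spaced values from a to b (inclusive)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    step = (b - a) / (n - 1)
    return [a + i * step for i in range(n)]


def two_link_ik(x, y, l1, l2, elbow_sign=1.0):
    """Inverse kinematics of a generic planar two-link arm (assumed model).

    Returns (theta1, theta2) in radians; elbow_sign (+1 or -1) selects
    one of the two mirror-image elbow configurations.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target point is out of reach")
    c2 = max(-1.0, min(1.0, c2))            # guard against rounding error
    s2 = elbow_sign * math.sqrt(1.0 - c2 * c2)
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def line_joint_angles(start, end, n, l1, l2, elbow_sign=1.0):
    """Steps (a)-(e): interpolate the line, then solve IK at each point."""
    xs = linspace(start[0], end[0], n)      # vector X
    ys = linspace(start[1], end[1], n)      # vector Y
    return [two_link_ik(x, y, l1, l2, elbow_sign) for x, y in zip(xs, ys)]


# Example with hypothetical link lengths (cm) and a 2 cm horizontal line.
angles = line_joint_angles((6.0, 4.0), (8.0, 4.0), n=5, l1=7.0, l2=7.0)
```

Increasing `n` gives more intermediate points per line, so each servo moves in smaller increments between consecutive targets.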

- (a) Define the reference (center) x-y point for the curve (e.g., K).

- (b) Define the vector α with equally-spaced angular points between zero and 2π. This vector defines the angular/rotation range for the radius r, which is used as follows: to draw the “o” character, a complete circle must be performed; thus, α must vary from zero to 2π (angular range = $[0, 2\pi]$). For other characters, fractions of this range may be used: to draw the “c” character, α should vary from π/4 to 7π/4 (angular range = $[\pi/4, 7\pi/4]$). By changing the location of the reference point, the scale for r and the ranges for α, the smaller curves required for characters such as “m” can be drawn.

- (c) For each α(i), where i = 1, ..., n, a coordinate point [X(i), Y(i)] on the curve can be computed from the center point, the radius r and α(i).

- (d) For each [X(i), Y(i)], the inverse kinematics to obtain the associated joint angles can be computed as in the case for straight lines.
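The curve steps above can be illustrated with the standard parametric form of a circle, X(i) = K(1) + r·cos α(i) and Y(i) = K(2) + r·sin α(i). This form is an assumption, since the sign and orientation conventions of the original formulation are not reproduced here; the function name `arc_points`, the center coordinates and the radius are likewise hypothetical.

```python
import math


def arc_points(center, radius, alpha_start, alpha_end, n):
    """Return n points on a circular arc, with alpha equally spaced.

    Assumes the standard parametrization:
        X(i) = center_x + radius * cos(alpha_i)
        Y(i) = center_y + radius * sin(alpha_i)
    """
    if n < 2:
        raise ValueError("n must be at least 2")
    step = (alpha_end - alpha_start) / (n - 1)
    points = []
    for i in range(n):
        alpha = alpha_start + i * step
        points.append((center[0] + radius * math.cos(alpha),
                       center[1] + radius * math.sin(alpha)))
    return points


K = (0.5, 0.5)   # hypothetical center point (cm)
r = 0.5          # hypothetical radius (cm)

# "o": a complete circle, alpha from 0 to 2*pi
o_points = arc_points(K, r, 0.0, 2.0 * math.pi, 9)

# "c": alpha from pi/4 to 7*pi/4, leaving the right side open
c_points = arc_points(K, r, math.pi / 4.0, 7.0 * math.pi / 4.0, 7)
```

Each resulting point can then be passed to the same inverse kinematics routine used for straight lines.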

3.2.3. Angular Conversion

- (a) For the right arm:

- (b) For the left arm:
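As a rough illustration of this kind of conversion, the sketch below maps an angle in radians to a Dynamixel position value. It assumes AX-12-class servos, whose position register spans 0 to 1023 over 300° with 512 at the center. The `reference` and `direction` parameters are hypothetical placeholders for the per-arm reference values and rotation senses, which are not reproduced here.

```python
import math

# AX-12-class servos: 1023 position units cover 300 degrees.
UNITS_PER_RADIAN = 1023.0 / math.radians(300.0)


def radians_to_position(theta, reference=512, direction=1):
    """Convert an angle (radians) to a servo position value in 0..1023.

    reference: position value that corresponds to theta = 0 (assumed).
    direction: +1 or -1, the rotation sense of the joint (assumed).
    The result is rounded, so the conversion is only approximate.
    """
    value = round(reference + direction * theta * UNITS_PER_RADIAN)
    return max(0, min(1023, value))       # clamp to the valid range


# A 30-degree rotation in each sense (hypothetical example values).
pos_a = radians_to_position(math.radians(30.0), direction=1)
pos_b = radians_to_position(math.radians(30.0), direction=-1)
```

Because one position unit corresponds to roughly 0.29°, the rounding step alone can introduce small angular errors at every trajectory point.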

3.3. Execution of the Kinematic Models

4. Performance

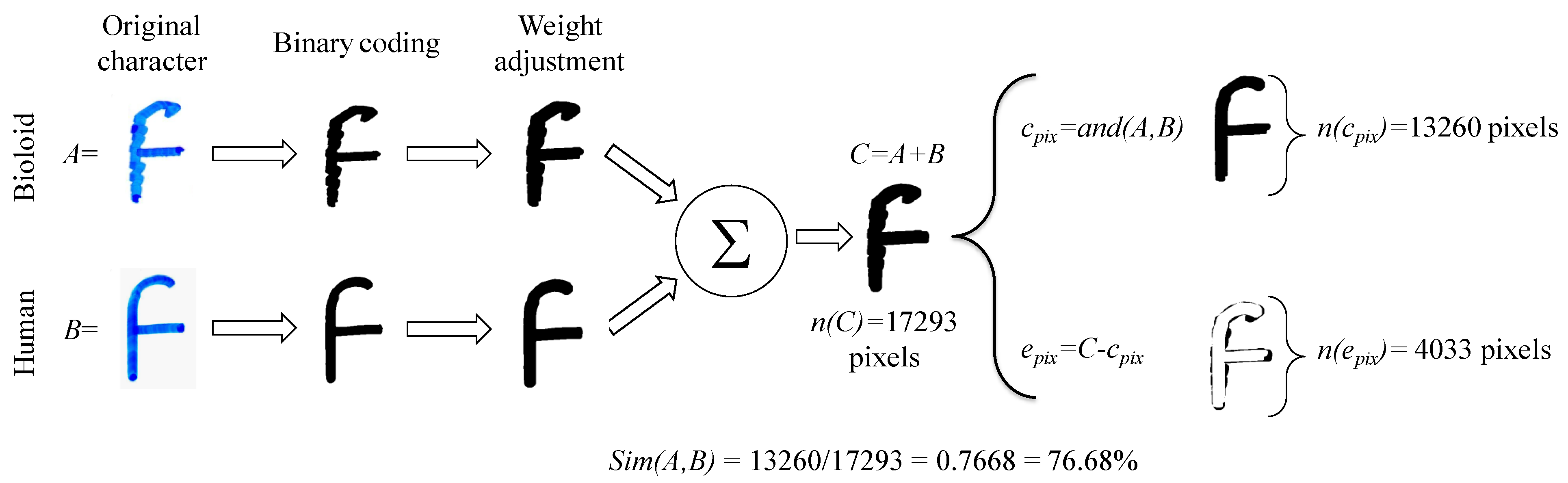

- (a) Initially, the characters that were drawn by the Bioloid robot and the human user (A and B, respectively) are coded into binary format.

- (b) Then, the stroke weight of the characters is adjusted to reduce its effect on the comparison process. This adjustment was performed with the bwmorph function in MATLAB.

- (c) An integrated character is obtained by performing the sum C = A + B. Due to the binary coding, the sum of all values in C represents the total number of pixels in A and B. These pixels include “correct” pixels (those in A that match B) and “error” pixels (those in A that do not match B).

- (d) The numbers of “correct” and “error” pixels are computed from C. Then, the similarity between A and B is estimated by considering the number of “correct” pixels within C.
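The comparison steps can be sketched as follows. The exact similarity formula is not reproduced here, so this example assumes a Dice-style ratio (twice the number of matching pixels divided by the total number of pixels in A and B), which may differ from the measure used in this work. The function name `similarity` is hypothetical, and the stroke-weight adjustment performed with bwmorph is omitted.

```python
def similarity(a, b):
    """Estimate the similarity of two binary images given as lists of rows.

    Assumed measure: 2 * correct / (total pixels in A and B), where a
    "correct" pixel is set in both images (value 2 in C = A + B) and an
    "error" pixel is set in only one of them (value 1 in C).
    Returns (correct, error, similarity).
    """
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("images must have the same shape")
    correct = 0
    error = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            c = pa + pb                   # one cell of C = A + B
            if c == 2:
                correct += 1
            elif c == 1:
                error += 1
    total = 2 * correct + error           # equals the sum of all values in C
    score = (2 * correct / total) if total else 1.0
    return correct, error, score


# Tiny hypothetical 2x3 example.
A = [[1, 1, 0],
     [0, 1, 0]]
B = [[1, 0, 0],
     [0, 1, 1]]
correct, error, score = similarity(A, B)
```

With this measure, identical characters score 1.0 and characters with no overlapping pixels score 0.0.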

5. Discussion and Future Work

- (a) Stability of the robot’s pose: Several poses were considered for the robot in order to have proper stability for the writing task. Initially, the kneeling position was considered; however, significant instability was observed. Then, the standing position was considered, which provided more stability. In this work, however, the kneeling position was adopted for the task, because it is a more recognizable pose for writing. Stability was improved by disabling the knee Dynamixels (dyn13, dyn14) to allow the whole body to “rest” on the “back-feet” Dynamixels (dyn17, dyn18). Then, by enabling the thigh Dynamixels (dyn11, dyn12), a straight pose of the upper body was achieved. This was also helpful to produce a “reaction force” in case of significant friction between the pen and the writing plane (e.g., paper or transparency sheet). Nevertheless, due to the nature and characteristics of the Bioloid’s different elements and moving parts, the robot’s structure is not rigid, and it is susceptible to vibrations and instability.

- (b) Trajectory accuracy and feedback frequency: The Dynamixels have a relatively low trajectory accuracy and low feedback frequency [25], which reduces the precision of the required movements. As such, there are disparities between the straight and curved lines that were planned and the actual trajectory executed by the robotic arm [25].

- (c) Friction: During the writing task, there is a friction force caused by the texture of the pen and the writing plane, which restricts the smooth movements of the Dynamixels. This may also depend on the type of ink and pen. As a consequence, the tip of the pen may get stuck at a trajectory point. This leads to the following events: (1) the arm’s Dynamixels continue the movement required to complete the trajectory, which increases the force on the pen; (2) when this force becomes large enough, the tip of the pen is released abruptly, leading to an alteration in the drawing pattern.

- (d) Axis deviation of the writing line: As presented in Figure 14 and Figure 15, a small deviation on the y-axis was observed in both arms while writing the short and long sentences. This may be caused by the approximate conversion from radians to 0–1024 values and the reference values considered for the coordinate axes on the 0–1024 range (see Figure 9).

- (a) Develop the dynamic models required for the handwriting task [11].

- (b) Integrate a visual feedback system to perform compensatory actions to correct alterations (and deviations) in the trajectories.

- (c) Adapt the speech-to-text transcriptor to other humanoid systems, such as the NAO robot.

- (d) Integrate a sensor to adjust the force of the arm on the writing plane to reduce friction with the tip of the pen.

- (e) Reduce the gap between the writing spaces of the left and right arms.

- (f) Perform transcription of sentences into paragraphs. The visual feedback is fundamental for this implementation.

Conflicts of Interest

References

1. Han, J. Robot-Aided Learning and r-Learning Services. In Human-Robot Interaction; Chugo, D., Ed.; Intech: Rijeka, Croatia, 2010; pp. 247–266.

2. Chang, C.; Lee, J.; Chao, P.; Wang, C.; Chen, G. Exploring the Possibility of Using Humanoid Robots as Instructional Tools for Teaching a Second Language in Primary School. Educ. Technol. Soc. 2010, 13, 13–24.

3. Mubin, O.; Stevens, C.; Shahid, S.; Al-Mahmud, A.; Dong, J. A Review of the Applicability of Robots in Education. Technol. Educ. Learn. 2013, 1, 1–7.

4. Hood, D.; Lemaignan, S.; Dillenbourg, P. When Children Teach a Robot to Write: An Autonomous Teachable Humanoid Which Uses Simulated Handwriting. In Proceedings of the 10th Annual ACM/IEEE International Conference on Human-Robot Interaction, Portland, OR, USA, 2–5 March 2015; pp. 1–8.

5. Bainbridge, W.; Hart, J.; Kim, E.; Scassellati, B. The benefits of interactions with physically present robots over video-displayed agents. Int. J. Soc. Robot. 2011, 3, 41–52.

6. Kidd, C.; Breazeal, C. Robots at home: Understanding long-term human-robot interaction. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, IROS 2008, Nice, France, 22–26 September 2008; pp. 3230–3235.

7. Leyzberg, D.; Spaulding, S.; Toneva, M.; Scassellati, B. The physical presence of a robot tutor increases cognitive learning gains. In Proceedings of the 34th Annual Conference of the Cognitive Science Society, Sapporo, Japan, 1–4 August 2012; pp. 1–6.

8. Medwell, J.; Wray, D. Handwriting—A Forgotten Language Skill? Lang. Educ. 2008, 22, 34–47.

9. Malloy-Miller, T.; Polatajko, H.; Anstett, B. Handwriting error patterns of children with mild motor difficulties. Can. J. Occup. Ther. 1995, 62, 258–267.

10. Christensen, C. The Role of Orthographic Motor Integration in the Production of Creative and Well-Structured Written Text for Students in Secondary School. Educ. Psychol. 2005, 25, 441–453.

11. Potkonjak, V.; Popović, M.; Lazarević, M.; Sinanović, J. Redundancy Problem in Writing: From Human to Anthropomorphic Robot Arm. IEEE Trans. Syst. Man Cybern. Part B Cybern. 1998, 28, 790–805.

12. Potkonjak, V.; Tzafestas, S.; Kostic, D.; Djordjevic, G. Human-like behavior of robot arms: General considerations and the handwriting task—Part I: Mathematical description of human-like motion: Distributed positioning and virtual fatigue. Robot. Comput. Integr. Manuf. 2001, 17, 305–315.

13. Teo, C.; Burdet, E.; Lim, H. A robotic teacher of Chinese handwriting. In Proceedings of the 10th Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Orlando, FL, USA, 24–25 March 2002; pp. 335–341.

14. Basteris, A.; Bracco, L.; Sanguineti, V. Robot-assisted intermanual transfer of handwriting skills. Hum. Mov. Sci. 2012, 31, 1175–1190.

15. Hood, D.; Lemaignan, S.; Dillenbourg, P. The CoWriter Project: Teaching a Robot how to Write. In Proceedings of the 10th Annual ACM/IEEE International Conference on Human-Robot Interaction, HRI’15 Extended Abstracts, Portland, OR, USA, 2–5 March 2015; p. 269.

16. Matsui, A.; Katsura, S. A method of motion reproduction for calligraphy education. In Proceedings of the 2013 IEEE International Conference on Mechatronics (ICM), Vicenza, Italy, 27 February–1 March 2013; pp. 452–457.

17. Franke, K.; Schomaker, L.; Koppen, M. Pen Force Emulating Robotic Writing Device and its Application. In Proceedings of the 2005 IEEE Workshop on Advanced Robotics and its Social Impacts, Hsinchu, Taiwan, 12–15 June 2005; pp. 36–46.

18. Pérez-Marcos, D.; Buitrago, J.; Giraldo-Velásquez, F. Writing through a robot: A proof of concept for a brain-machine interface. Med. Eng. Phys. 2011, 33, 1314–1317.

19. Man, Y.; Bian, C.; Zhao, H.; Xu, C. A Kind of Calligraphy Robot. In Proceedings of the 3rd International Conference on Information Sciences and Interaction Sciences (ICIS 2010), Chengdu, China, 23–25 June 2010; pp. 635–638.

20. Huebel, N.; Mueggler, E.; Waibel, M.; D’Andrea, R. Towards Robotic Calligraphy. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7–12 October 2012; pp. 5165–5166.

21. Fujioka, H.; Kano, H. Design of Cursive Characters Using Robotic Arm Dynamics as Generation Mechanism. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 3195–3200.

22. Florez-Escobar, J. Writing SCARA Robot. Available online: http://www.youtube.com/watch?v=DmUlbw8kiX8 (accessed on 22 October 2014).

23. Calzada, F. NAO Robot Writer. Available online: http://www.youtube.com/watch?v=5hqNi2S4rlM (accessed on 22 October 2014).

24. Potkonjak, V.; Tzafestas, S.; Kostic, D.; Djordjevic, G. Human-like behavior of robot arms: General considerations and the handwriting task—Part II: The robot arm in handwriting. Robot. Comput. Integr. Manuf. 2001, 17, 317–327.

25. Tresset, P.; Leymarie, F. Portrait drawing by Paul the robot. Comput. Graph. 2013, 37, 348–363.

26. Han, J.; Ha, I. Educational Robotic Construction Kit: Bioloid. In Proceedings of the 17th World Congress of the International Federation of Automatic Control (IFAC 2008), Seoul, Korea, 6–10 July 2008; pp. 3035–3036.

27. Röfer, T.; Laue, T.; Burchardt, A.; Damrose, E.; Fritsche, M.; Müller, J.; Rieskamp, A. B-Human: Team Description for RoboCup 2008. In RoboCup 2008: Robot Soccer World Cup XII Preproceedings; Iocchi, L., Matsubara, H., Weitzenfeld, A., Zhou, C., Eds.; RoboCup: Suzhou, China, 2008; pp. 1–6.

28. Caballero-Morales, S. Development of motion models for writting of the Spanish alphabet on the humanoid Bioloid robotic platform. In Proceedings of the 24th International Conference on Electronics, Communications and Computers (CONIELECOMP 2014), Puebla, Mexico, 26–28 February 2014; pp. 217–224.

29. Incognite: Cognitive Modeling and Bio-Inspired Robotics. Bioloid Toolbox for Matlab. Available online: http://incognite.felk.cvut.cz/index.php?page=downloads&cat=downloads (accessed on 22 October 2014).

30. Bonilla-Enríquez, G.; Caballero-Morales, S. Communication Interface for Mexican Spanish Dysarthric Speakers. Acta Univ. 2012, 22, 98–105.

31. Young, S.; Woodland, P. The HTK Book; Version 3.4; Cambridge University Engineering Department: Cambridge, UK, 2006.

32. Cuétara, J. Fonética de la Ciudad de México: Aportaciones desde las Tecnologías del Habla. Master’s Thesis, National Autonomous University of México (UNAM), Mexico City, Mexico, 2004.

33. Kucuk, S.; Bingul, Z. Robot Kinematics: Forward and Inverse Kinematics. In Industrial Robotics: Theory, Modelling and Control; Cubero, S., Ed.; InTech: Rijeka, Croatia, 2006; pp. 117–148.

34. Nunez, J.; Briseno, A.; Rodriguez, D.; Ibarra, J.; Rodriguez, V. Explicit analytic solution for inverse kinematics of Bioloid humanoid robot. In Proceedings of the 2012 Brazilian Robotics Symposium and Latin American Robotics Symposium, Fortaleza, Brazil, 16–19 October 2012; pp. 33–38.

35. Bioloid Premium. In Quick Start: Assembly and Program Download Manual; Robotis Co. Ltd.: Seoul, South Korea, 2012.

© 2015 by the author; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Caballero-Morales, S.-O. On the Use of the Humanoid Bioloid System for Robot-Assisted Transcription of Mexican Spanish Speech. Appl. Sci. 2016, 6, 1. https://doi.org/10.3390/app6010001