Fuzzy-System-Based Detection of Pupil Center and Corneal Specular Reflection for a Driver-Gaze Tracking System Based on the Symmetrical Characteristics of Face and Facial Feature Points

Abstract

1. Introduction

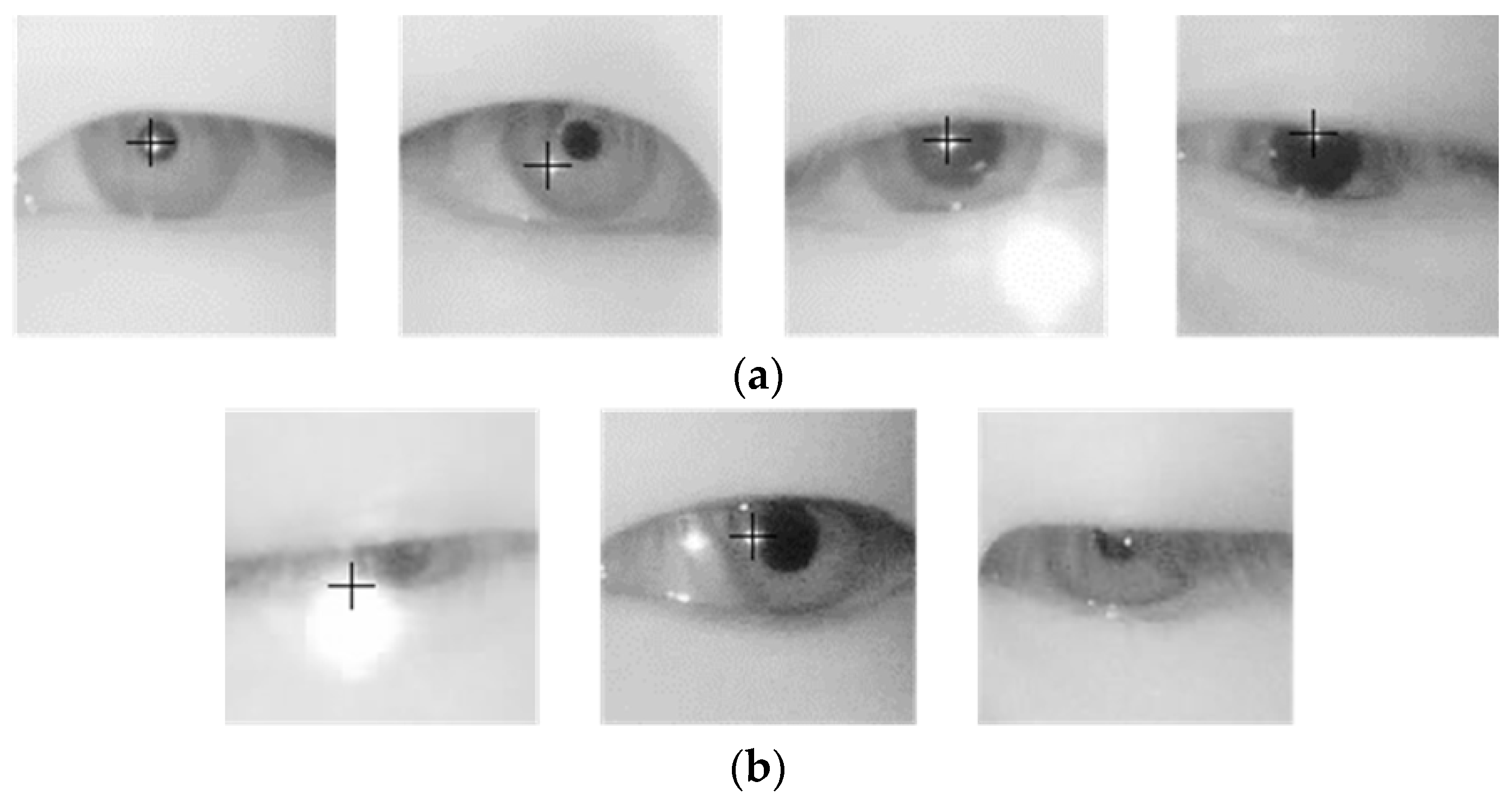

- We calculated a focus value for the eye region detected in each input image and excluded optically blurred and motion-blurred images from the detection of the pupil and corneal specular reflection (SR), which decreased the error rate of gaze tracking.

- Considering the symmetrical characteristics of the face and facial feature points, we computed two distance ratios between the detected facial feature points as measures of head rotation and used these two features as the two inputs of the fuzzy system.

- We used a fuzzy system to measure the driver’s head rotation. Pupil and corneal SR positions detected in the eye’s region of interest (ROI) while the head is turning lead to a high gaze-tracking error rate, so we excluded this information from the gaze calculation. In this way, the gaze-tracking accuracy could be improved.

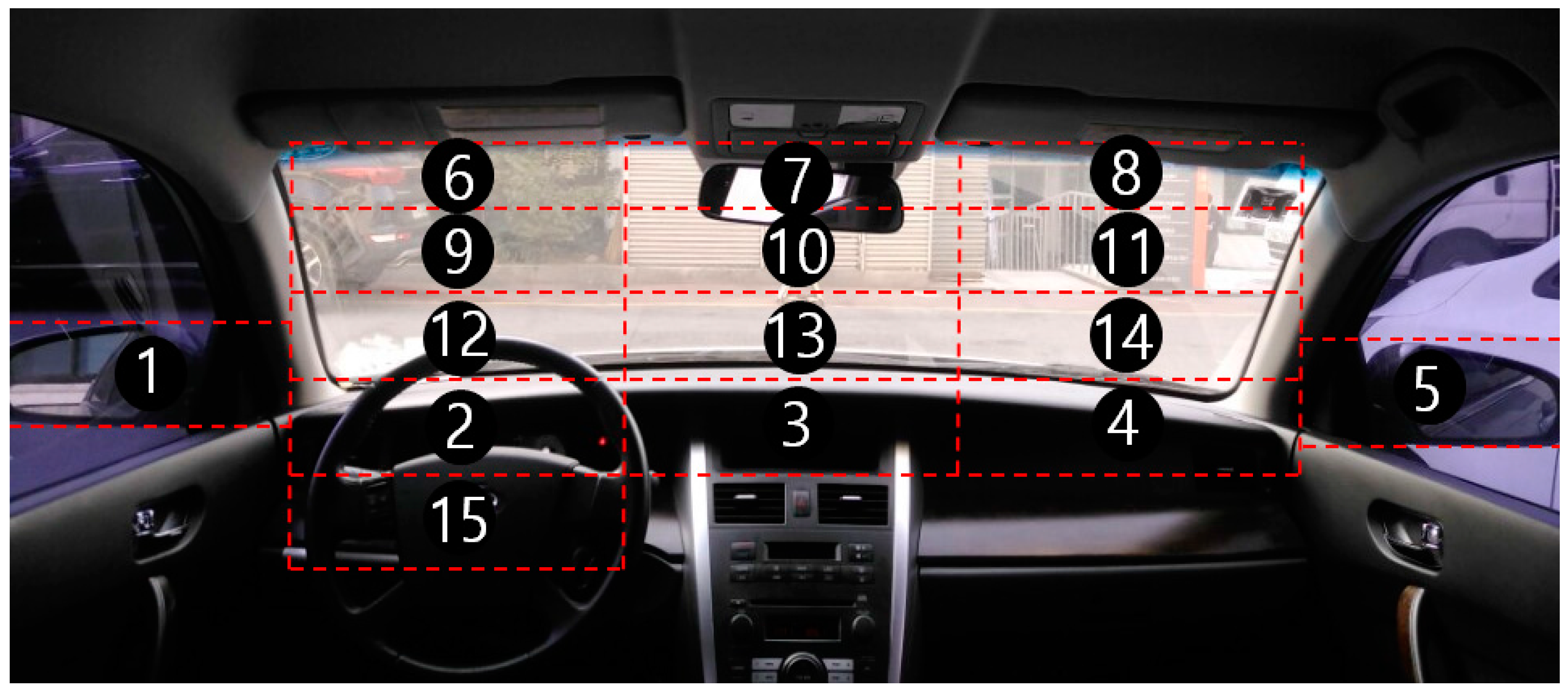

- We made our algorithm code and driver-gaze-tracking database (DB) available to other researchers, who can freely request the database by emailing the authors. The DB was constructed for experimental purposes from drivers looking at fifteen points in a real vehicle. We expect this to support fair performance comparisons with the methods of other studies.
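The first contribution above gates pupil and corneal SR detection on a focus value of the eye region. This excerpt does not say which focus measure is used, so the following is only a minimal sketch under an assumption: it substitutes a common stand-in, the variance of a 4-neighbor Laplacian response. The function names and the threshold value are hypothetical.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbor Laplacian over the interior pixels.

    `gray` is a list of equal-length rows of grayscale intensities
    (at least 3 x 3). This is an assumed focus measure, not necessarily
    the one used in the paper.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x]
                   + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def is_sharp_enough(eye_roi, threshold=100.0):
    # `threshold` is a hypothetical value; it would need tuning per camera.
    return laplacian_variance(eye_roi) >= threshold


# Synthetic eye ROIs: a checkerboard (strong edges) and a flat patch (no edges).
sharp = [[255 if (x + y) % 2 == 0 else 0 for x in range(8)] for y in range(8)]
blurred = [[128] * 8 for _ in range(8)]

print(is_sharp_enough(sharp), is_sharp_enough(blurred))
```

A frame whose eye ROI fails this check would simply be skipped before pupil and corneal SR detection.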

2. Related Works

3. Materials and Methods

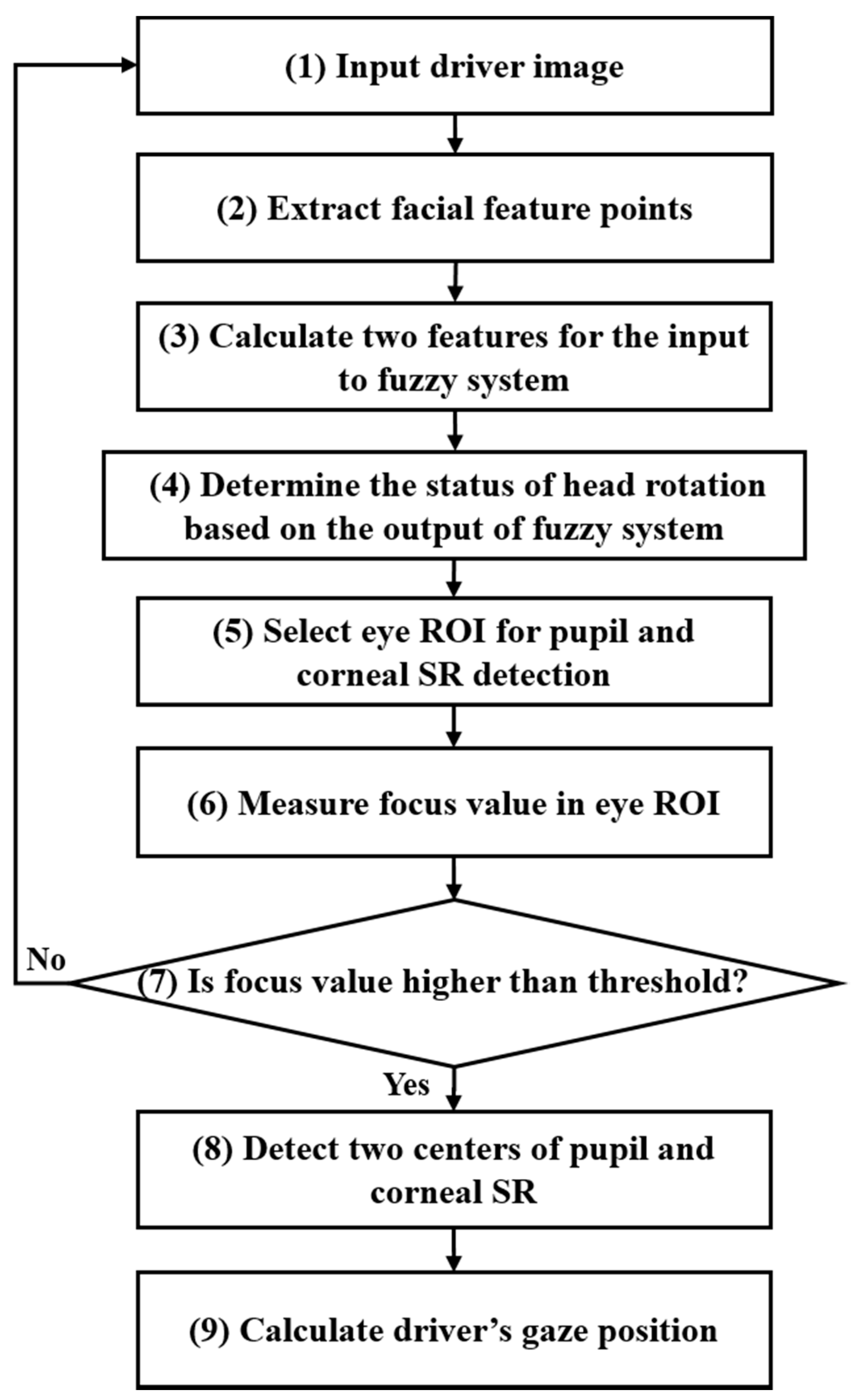

3.1. Overview of the Proposed Method

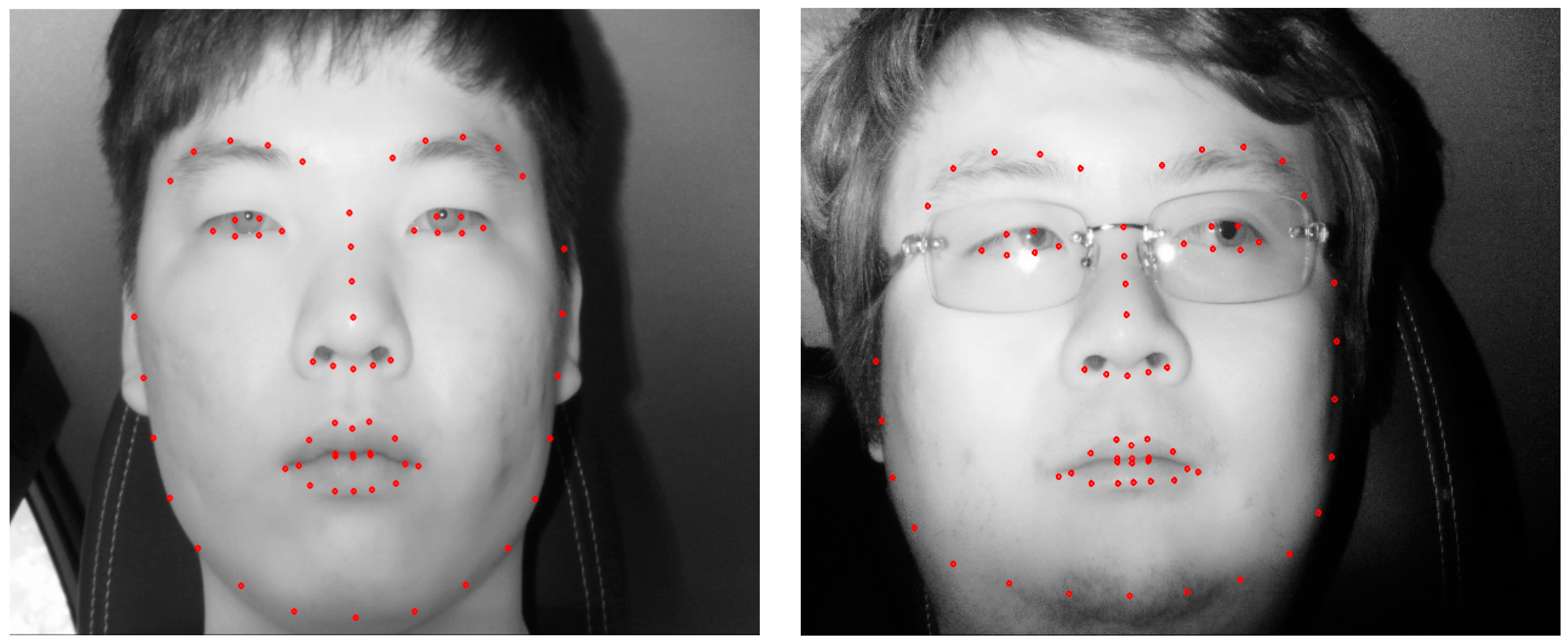

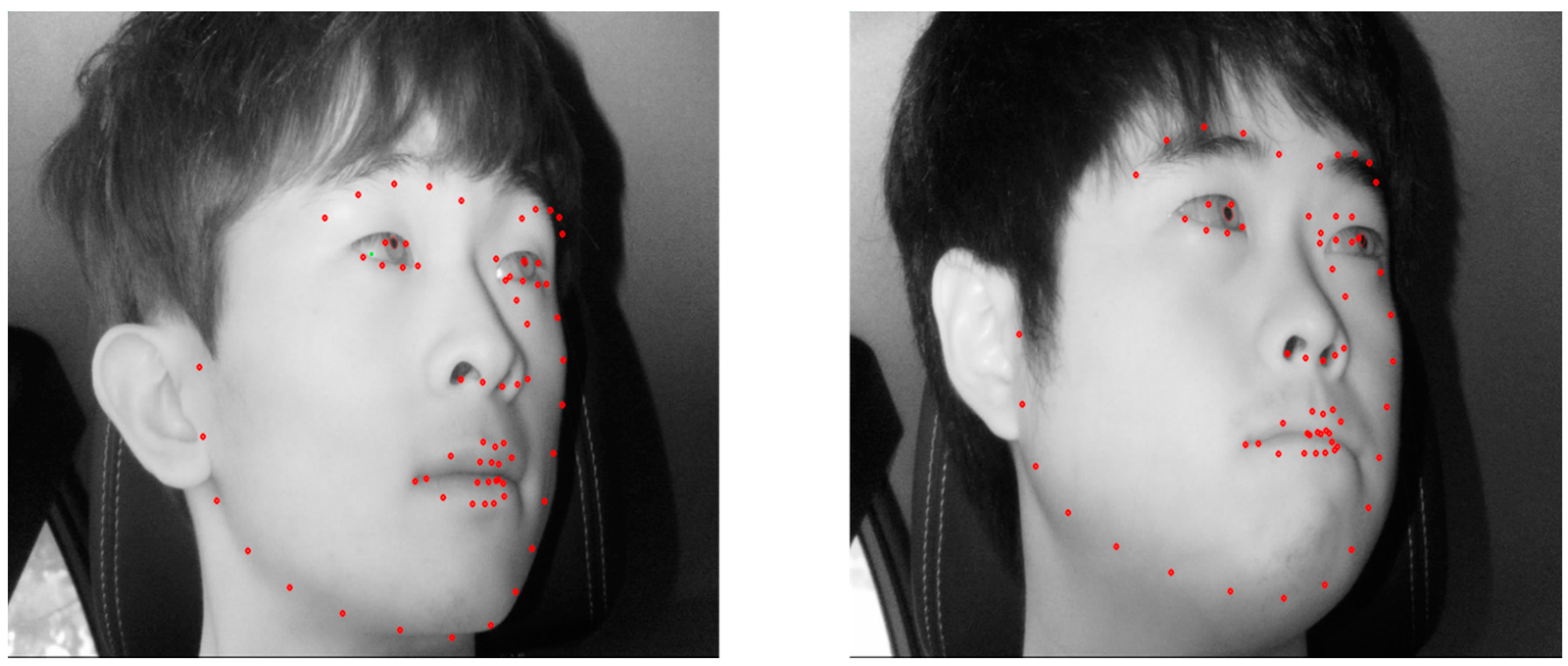

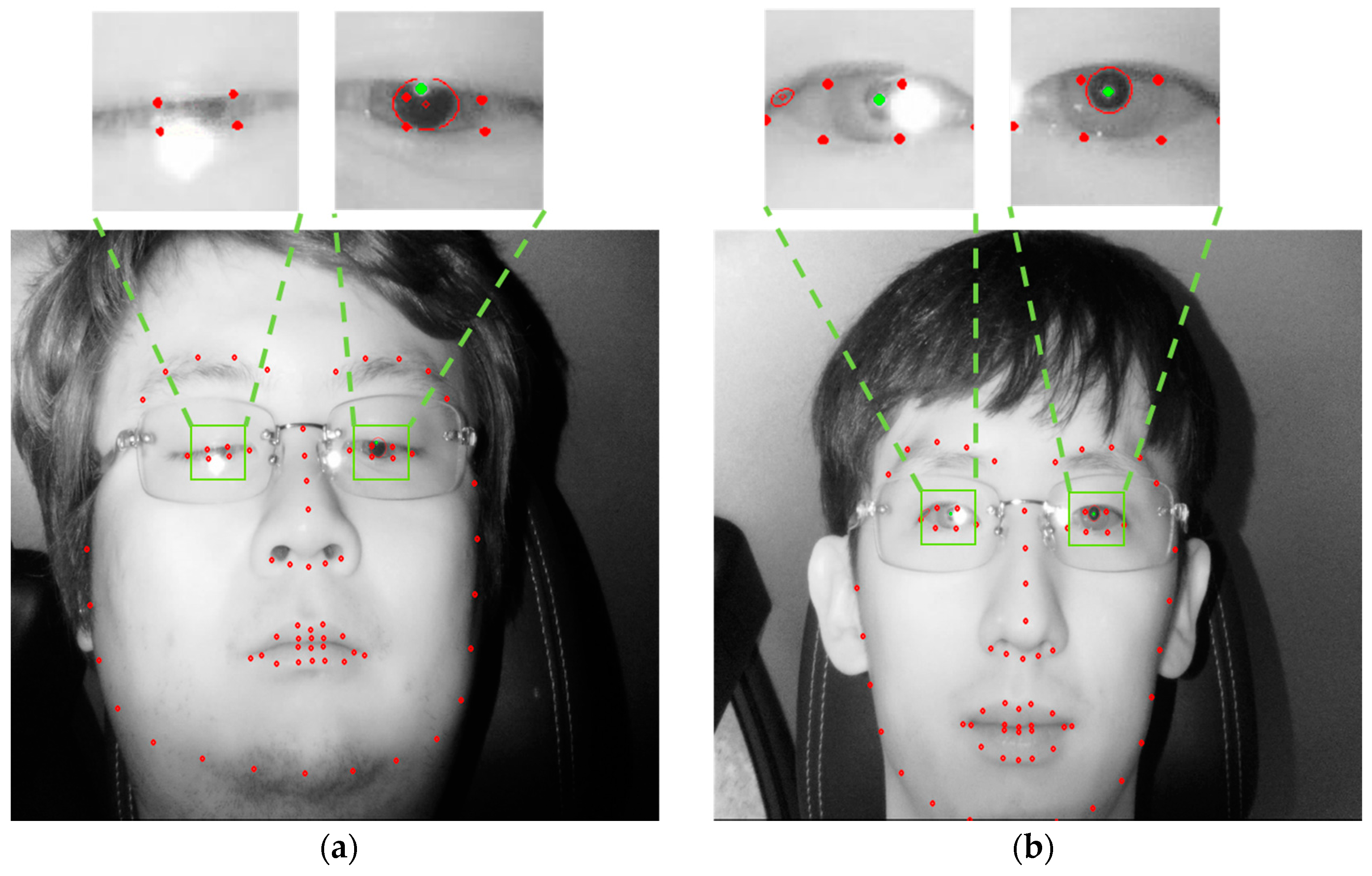

3.2. Detection of Facial Feature Points

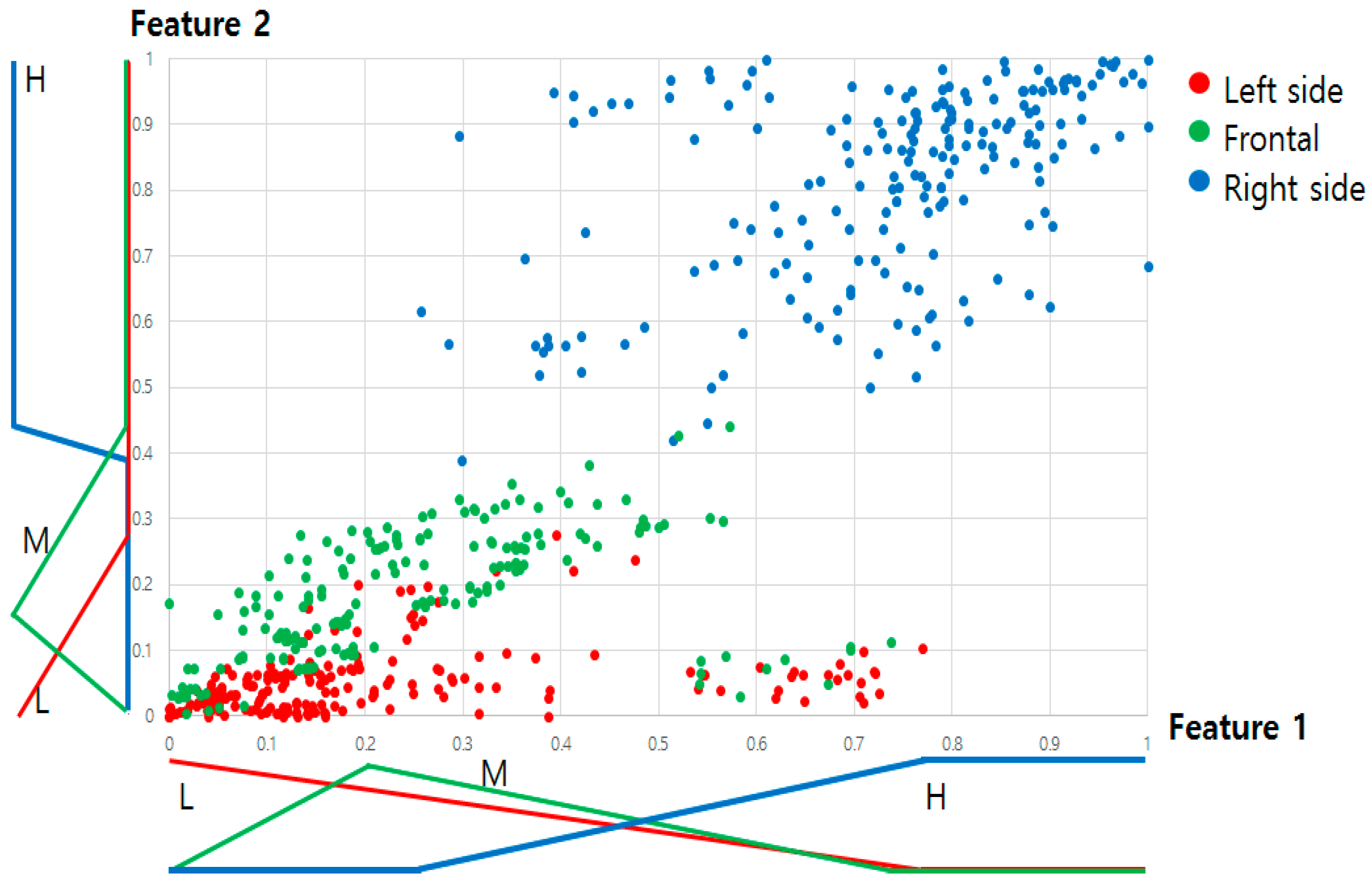

3.3. Determination of the Head Rotation Status Based on the Output of the Fuzzy System

3.3.1. Calculation of Two Features Considering the Symmetrical Characteristics of Face and Facial Feature Points for the Inputs to the Fuzzy System

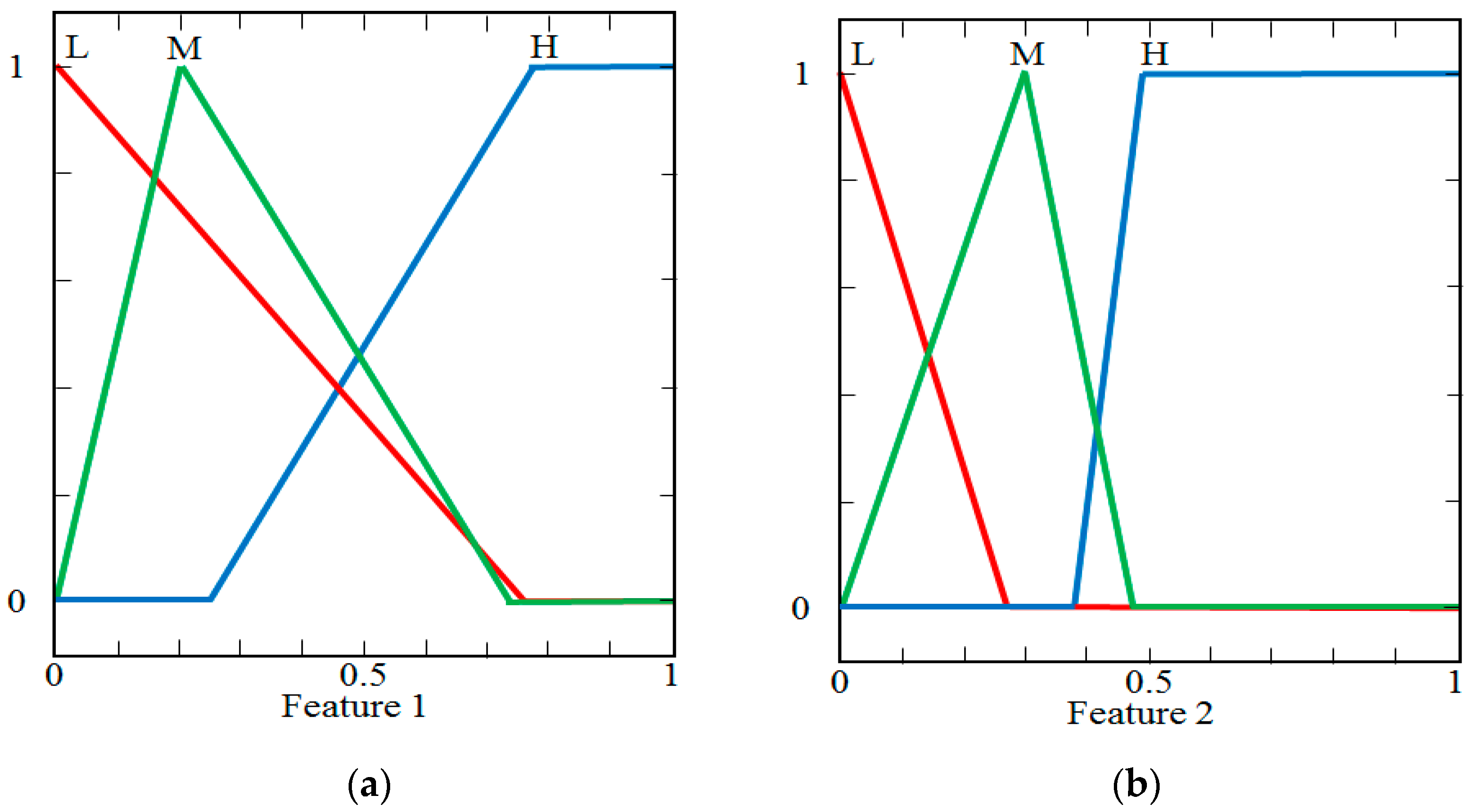

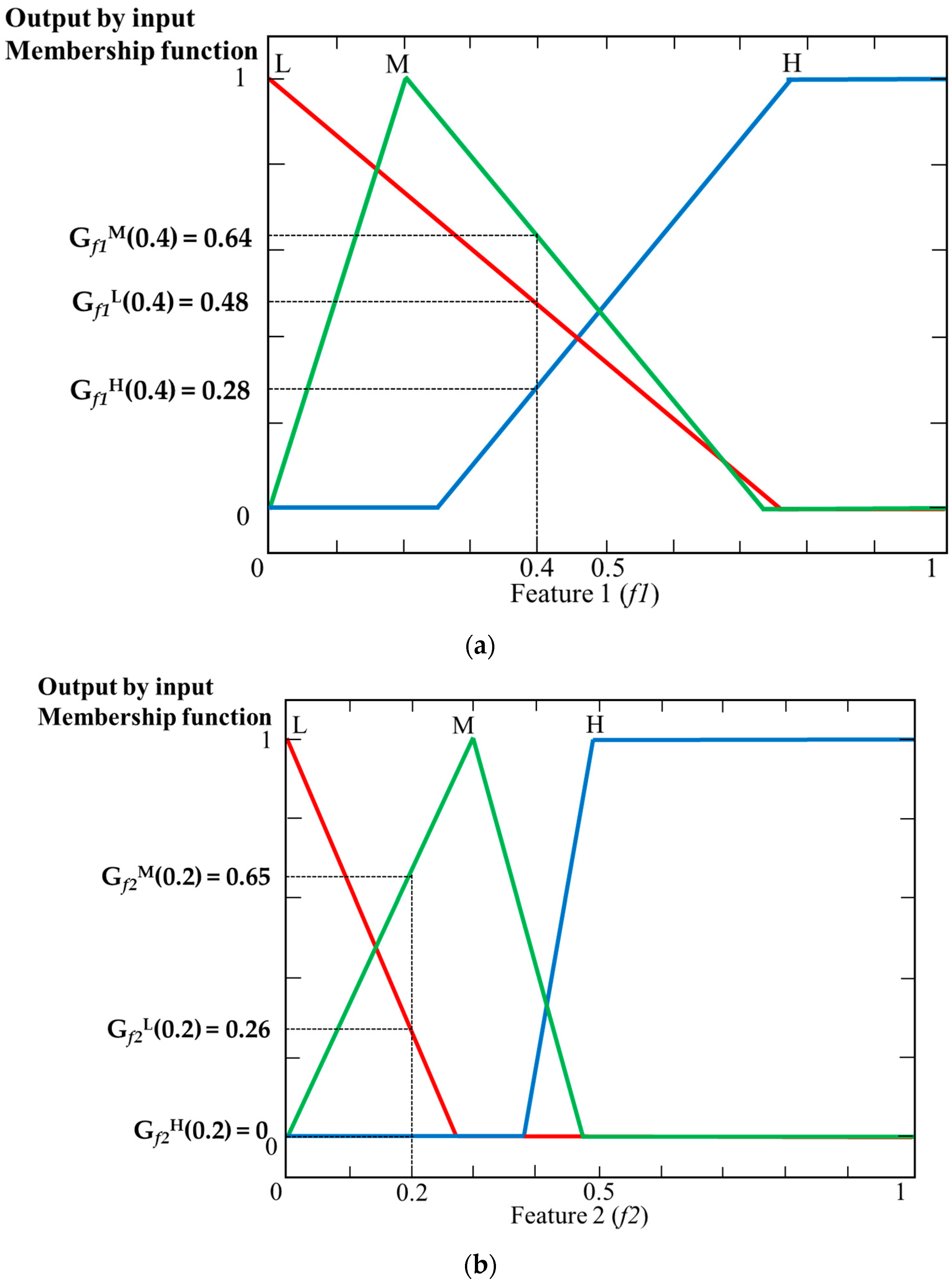

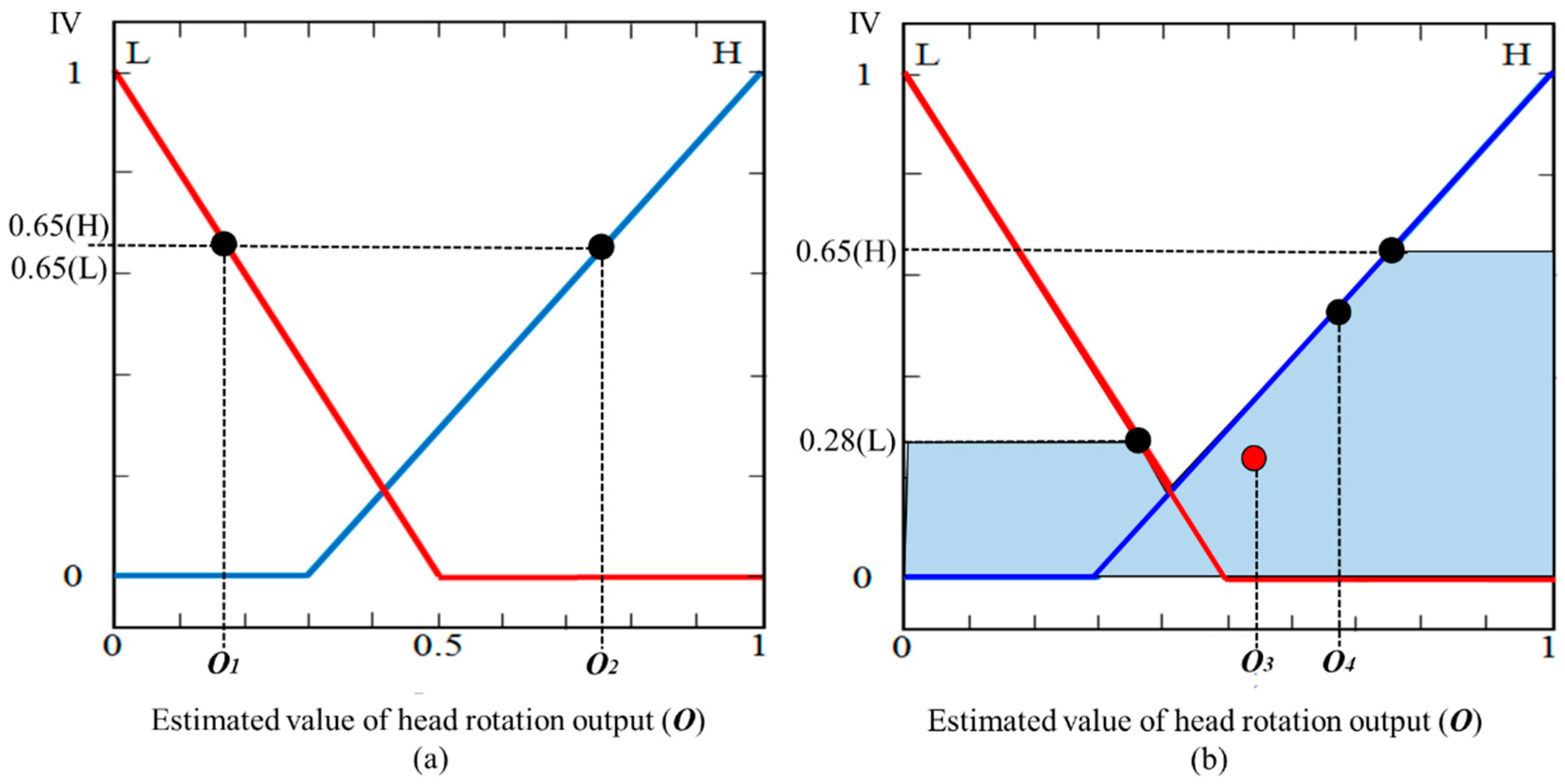
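As a rough illustration of the two symmetry-based features named in this subsection, the sketch below computes two left/right distance ratios from facial landmarks. Which feature points and distances the method actually uses is not stated in this excerpt, so the landmark names (`left_eye`, `right_eye`, `nose_tip`, `left_mouth`, `right_mouth`) and the normalization to the range [0, 1] are assumptions made only for illustration.

```python
import math


def symmetry_ratio(left_pt, right_pt, center_pt):
    """Ratio of the shorter to the longer of two left/right distances.

    Returns 1.0 for a perfectly symmetric layout and a smaller value as the
    layout becomes asymmetric (for example, when the head rotates).
    """
    d_left = math.dist(left_pt, center_pt)
    d_right = math.dist(right_pt, center_pt)
    longer = max(d_left, d_right)
    if longer == 0:
        return 1.0
    return min(d_left, d_right) / longer


def head_rotation_features(landmarks):
    # Hypothetical choice of feature points; the paper's choice may differ.
    feature_1 = symmetry_ratio(landmarks["left_eye"], landmarks["right_eye"],
                               landmarks["nose_tip"])
    feature_2 = symmetry_ratio(landmarks["left_mouth"], landmarks["right_mouth"],
                               landmarks["nose_tip"])
    return feature_1, feature_2


frontal = {"left_eye": (30, 40), "right_eye": (70, 40), "nose_tip": (50, 60),
           "left_mouth": (38, 80), "right_mouth": (62, 80)}
turned = dict(frontal, nose_tip=(60, 60))  # nose shifted toward one side

print(head_rotation_features(frontal))
print(head_rotation_features(turned))
```

Under these assumptions a frontal face yields ratios near 1, and both ratios drop as the nose tip moves toward one side of the face.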

3.3.2. Design of Fuzzy Membership Function and Rule Table

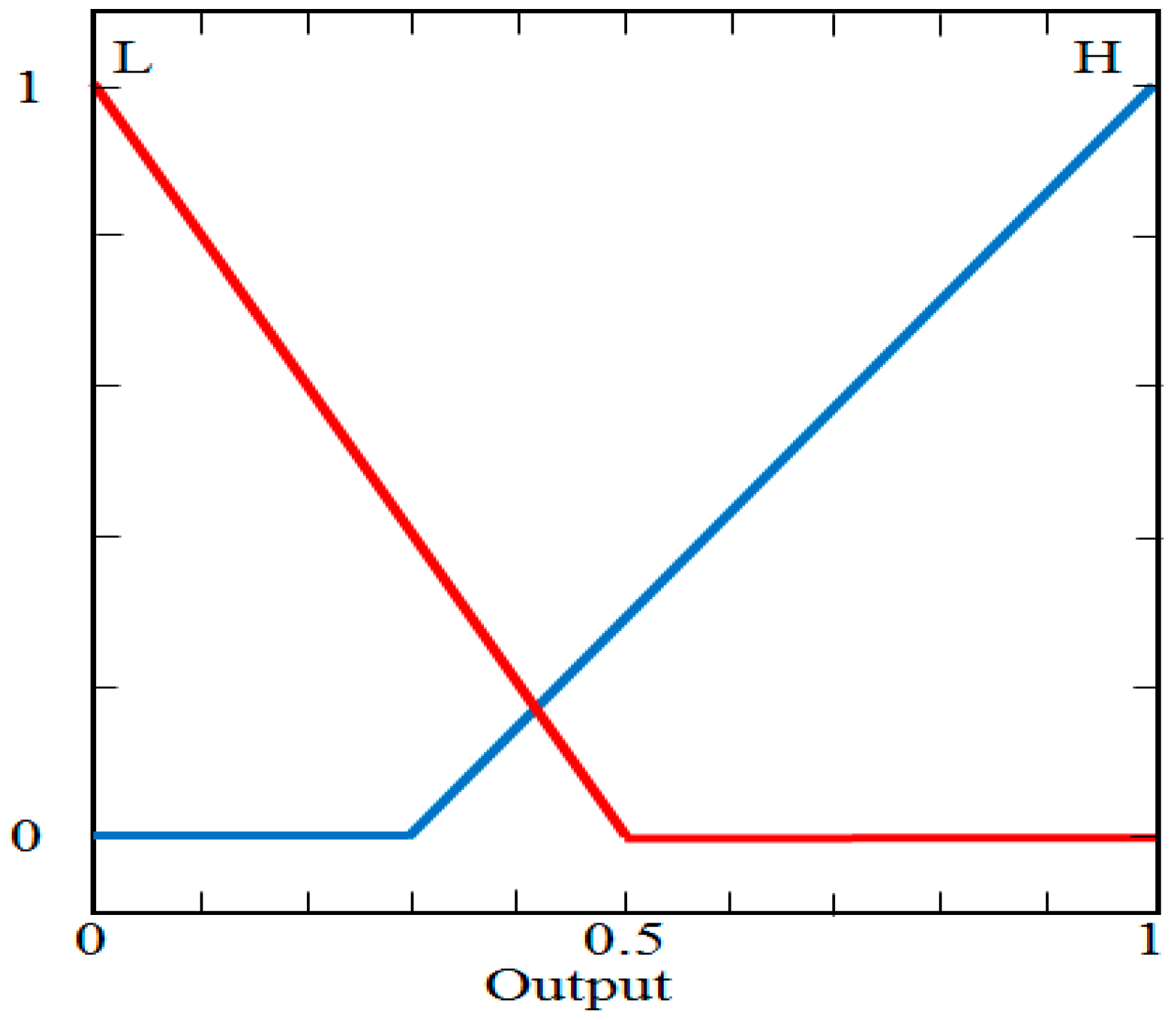

3.3.3. Acquisition of the Output of the Fuzzy System by Defuzzification

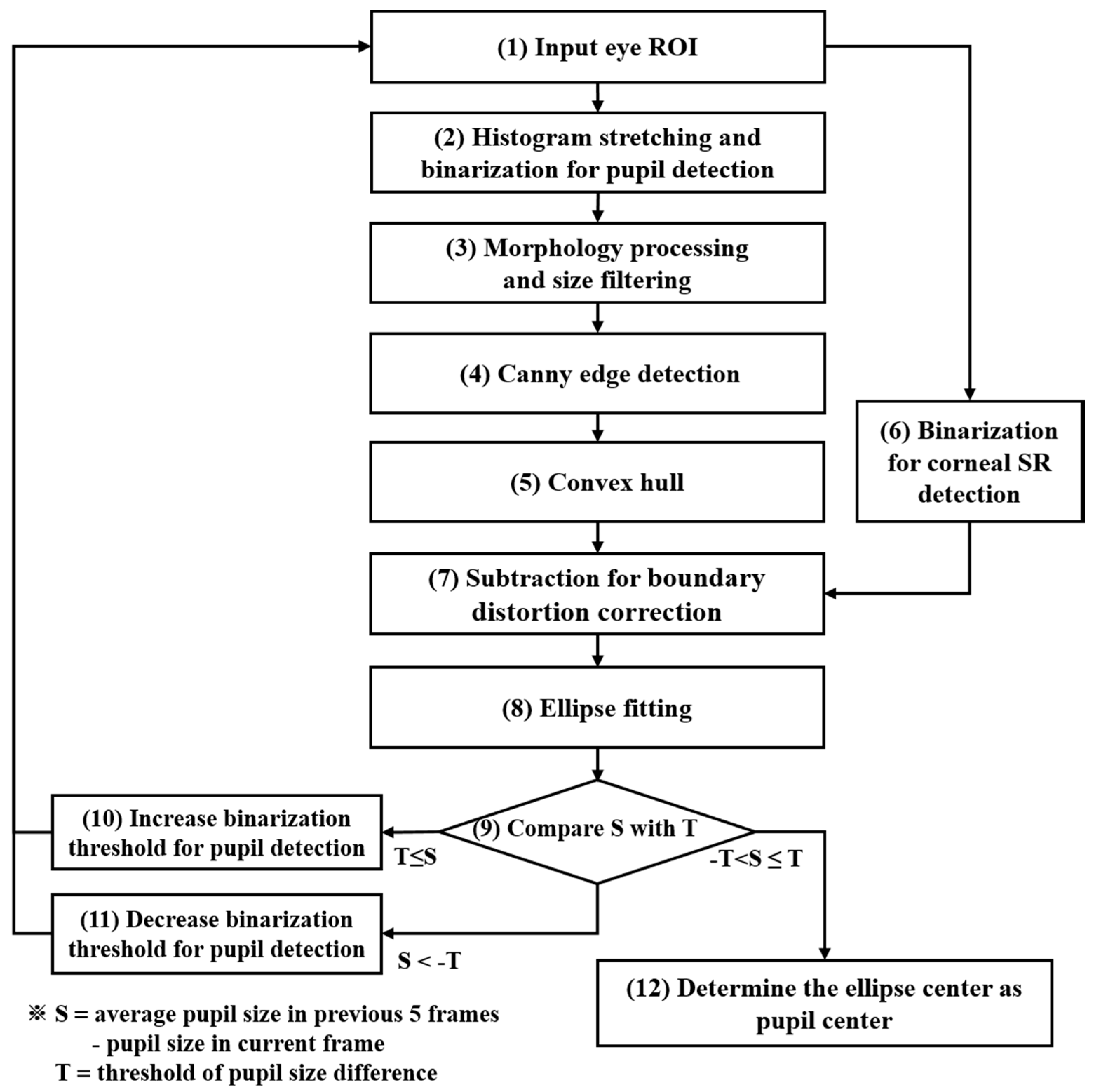

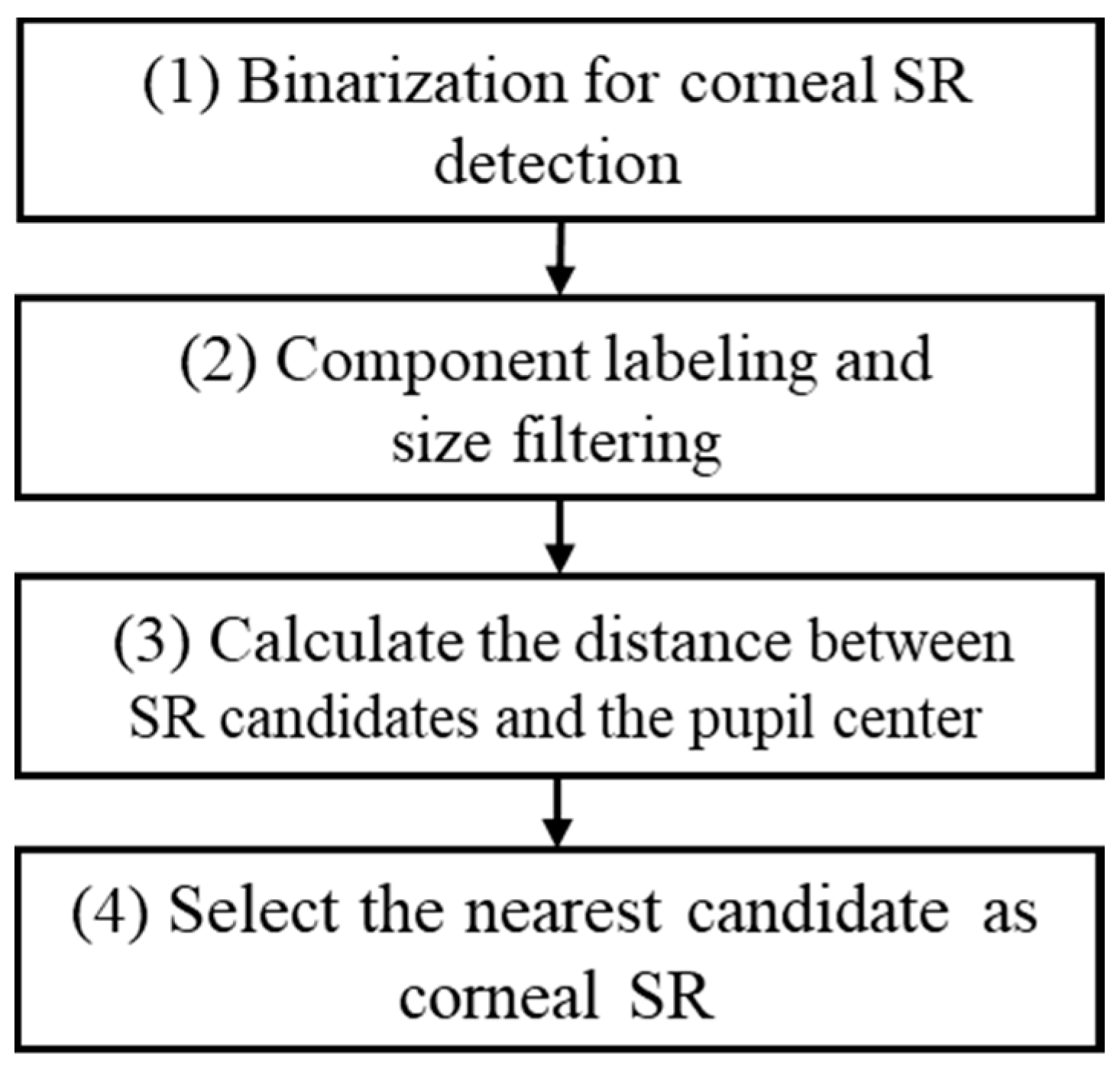

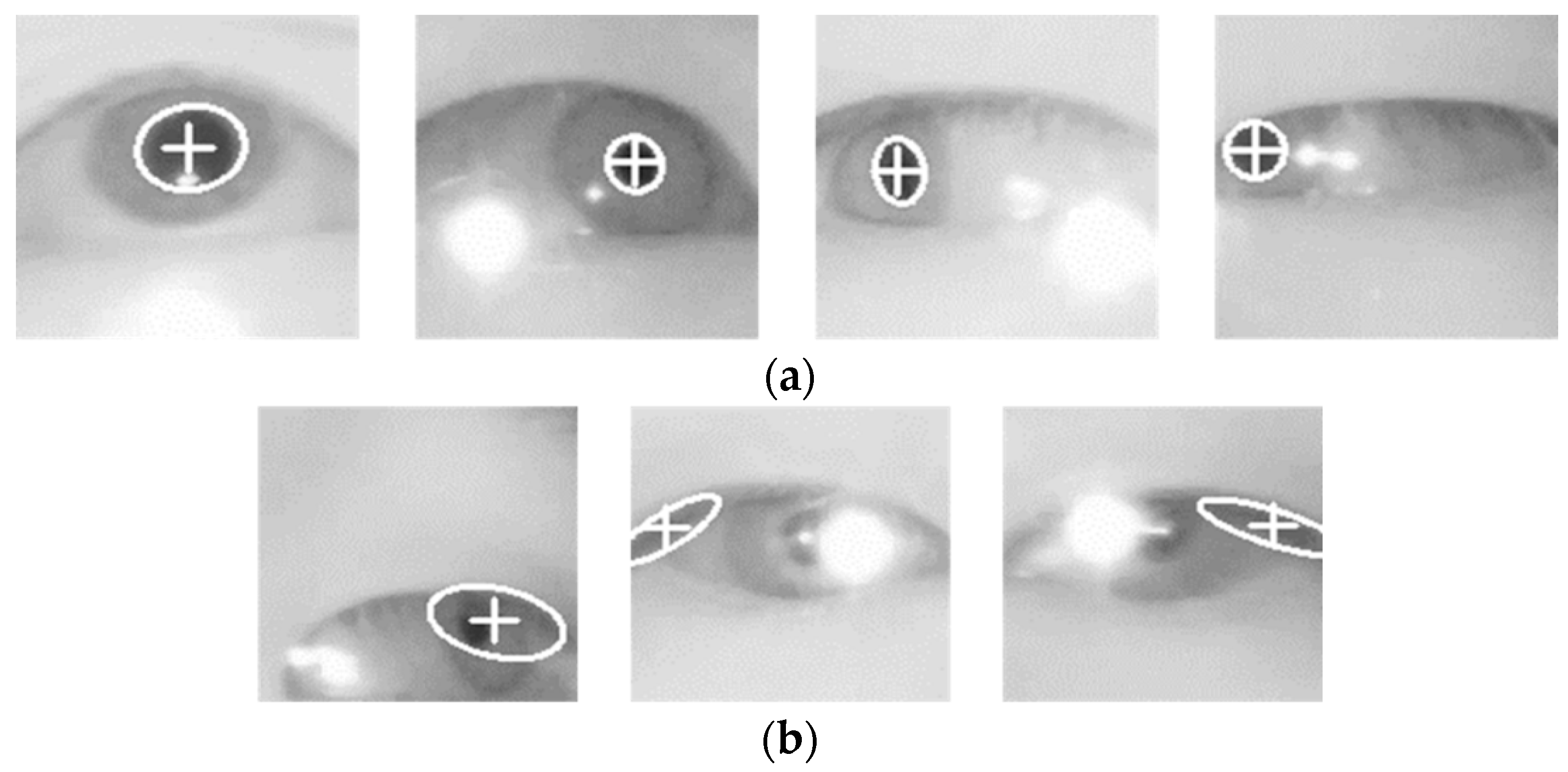

3.4. Detection of the Two Centers of the Pupil and Corneal SR, and Calculating Gaze Position

4. Results

4.1. Experimental Data and Environment

4.2. Performance Evaluation

5. Discussion and Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Singh, S. Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey; Traffic Safety Facts Crash Stats. Report No. DOT HS 812 115; National Highway Traffic Safety Administration: Washington, DC, USA, February 2015.

- Li, Z.; Li, S.E.; Li, R.; Cheng, B.; Shi, J. Online detection of driver fatigue using steering wheel angles for real driving conditions. Sensors 2017, 17, 495.

- Diddi, V.K.; Jamge, S.B. Head pose and eye state monitoring (HEM) for driver drowsiness detection: Overview. Int. J. Innov. Sci. Eng. Technol. 2014, 1, 504–508.

- Schneider, E.; Dera, T.; Bartl, K.; Boening, G.; Bardins, S.; Brandt, T. Eye movement driven head-mounted camera: It looks where the eyes look. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Waikoloa, HI, USA, 10–12 October 2005; pp. 2437–2442.

- Ji, Q.; Zhu, Z.; Lan, P. Real-time nonintrusive monitoring and prediction of driver fatigue. IEEE Trans. Veh. Technol. 2004, 53, 1052–1068.

- Hansen, D.W.; Ji, Q. In the eye of the beholder: A survey of models for eyes and gaze. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 478–500.

- Guestrin, E.D.; Eizenman, M. General theory of remote gaze estimation using the pupil center and corneal reflections. IEEE Trans. Biomed. Eng. 2006, 53, 1124–1133.

- Noureddin, B.; Lawrence, P.D.; Man, C.F. A non-contact device for tracking gaze in a human computer interface. Comput. Vis. Image Underst. 2005, 98, 52–82.

- Morimoto, C.H.; Koons, D.; Amir, A.; Flickner, M.; Zhai, S. Keeping an eye for HCI. In Proceedings of the 12th Brazilian Symposium on Computer Graphics and Image Processing, Campinas, Brazil, 17–20 October 1999; pp. 171–176.

- Yoo, D.H.; Chung, M.J. A novel non-intrusive eye gaze estimation using cross-ratio under large head motion. Comput. Vis. Image Underst. 2005, 98, 25–51.

- Cho, C.W.; Lee, H.C.; Gwon, S.Y.; Lee, J.M.; Jung, D.; Park, K.R.; Kim, H.-C.; Cha, J. Binocular gaze detection method using a fuzzy algorithm based on quality measurements. Opt. Eng. 2014, 53, 053111-1–053111-22.

- Morimoto, C.H.; Mimica, M.R.M. Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 2005, 98, 4–24.

- Shih, S.-W.; Liu, J. A novel approach to 3-D gaze tracking using stereo cameras. IEEE Trans. Syst. Man Cybern. Part B 2004, 34, 234–245.

- Tobii. Available online: http://www.tobii.com (accessed on 7 March 2017).

- SMI. Available online: http://www.smivision.com/ (accessed on 7 March 2017).

- Zhu, Z.; Ji, Q. Novel eye gaze tracking techniques under natural head movement. IEEE Trans. Biomed. Eng. 2007, 54, 2246–2260.

- Ohno, T.; Mukawa, N. A free-head, simple calibration, gaze tracking system that enables gaze-based interaction. In Proceedings of the Symposium on Eye Tracking Research & Applications, San Antonio, TX, USA, 22–24 March 2004; pp. 115–122.

- Talmi, K.; Liu, J. Eye and gaze tracking for visually controlled interactive stereoscopic displays. Signal Process. Image Commun. 1999, 14, 799–810.

- Cho, D.-C.; Kim, W.-Y. Long-range gaze tracking system for large movements. IEEE Trans. Biomed. Eng. 2013, 60, 3432–3440.

- Huang, Y.; Wang, Z.; Tu, X. A real-time compensation strategy for non-contact gaze tracking under natural head movement. Chin. J. Electron. 2010, 19, 446–450.

- Lee, J.M.; Lee, H.C.; Gwon, S.Y.; Jung, D.; Pan, W.; Cho, C.W.; Park, K.R.; Kim, H.-C.; Cha, J. A new gaze estimation method considering external light. Sensors 2015, 15, 5935–5981.

- Lee, H.C.; Luong, D.T.; Cho, C.W.; Lee, E.C.; Park, K.R. Gaze tracking system at a distance for controlling IPTV. IEEE Trans. Consum. Electron. 2010, 56, 2577–2583.

- Jung, D.; Lee, J.M.; Gwon, S.Y.; Pan, W.; Lee, H.C.; Park, K.R.; Kim, H.-C. Compensation method of natural head movement for gaze tracking system using an ultrasonic sensor for distance measurement. Sensors 2016, 16, 110.

- Villanueva, A.; Cabeza, R.; Porta, S. Eye tracking: Pupil orientation geometrical modeling. Image Vis. Comput. 2006, 24, 663–679.

- Baluja, S.; Pomerleau, D. Non-intrusive gaze tracking using artificial neural networks. Adv. Neural Inf. Process. Syst. 1993, 6, 753–760.

- Williams, O.; Blake, A.; Cipolla, R. Sparse and semi-supervised visual mapping with the S3GP. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 230–237.

- Xu, L.-Q.; Machin, D.; Sheppard, P. A novel approach to real-time non-intrusive gaze finding. In Proceedings of the British Machine Vision Conference, Southampton, UK, 14–17 September 1998; pp. 428–437.

- Morimoto, C.H.; Koons, D.; Amir, A.; Flickner, M. Pupil detection and tracking using multiple light sources. Image Vis. Comput. 2000, 18, 331–335.

- Bozomitu, R.G.; Păsărică, A.; Cehan, V.; Rotariu, C.; Barabasa, C. Pupil centre coordinates detection using the circular Hough transform technique. In Proceedings of the 38th IEEE International Spring Seminar on Electronics Technology, Eger, Hungary, 6–10 May 2015; pp. 462–465.

- Leimberg, D.; Vester-Christensen, M.; Ersbøll, B.K.; Hansen, L.K. Heuristics for speeding up gaze estimation. In Proceedings of the Svenska Symposium i Bildanalys, Malmø, Sweden, 10–11 March 2005; pp. 1–4.

- Hansen, D.W.; Pece, A.E.C. Iris tracking with feature free contours. In Proceedings of the IEEE International Workshop on Analysis and Modeling of Faces and Gestures, Nice, France, 17 October 2003; pp. 208–214.

- Zhu, D.; Moore, S.T.; Raphan, T. Robust pupil center detection using a curvature algorithm. Comput. Methods Programs Biomed. 1999, 59, 145–157.

- Fitzgibbon, A.; Pilu, M.; Fisher, R.B. Direct least square fitting of ellipses. IEEE Trans. Pattern Anal. Mach. Intell. 1999, 21, 476–480.

- Ahlstrom, C.; Kircher, K.; Kircher, A. A gaze-based driver distraction warning system and its effect on visual behavior. IEEE Trans. Intell. Transp. Syst. 2013, 14, 965–973.

- Liang, Y.; Reyes, M.L.; Lee, J.D. Real-time detection of driver cognitive distraction using support vector machines. IEEE Trans. Intell. Transp. Syst. 2007, 8, 340–350.

- Tawari, A.; Trivedi, M.M. Robust and continuous estimation of driver gaze zone by dynamic analysis of multiple face videos. In Proceedings of the IEEE Intelligent Vehicles Symposium, Dearborn, MI, USA, 8–11 June 2014; pp. 344–349.

- Smith, P.; Shah, M.; da Vitoria Lobo, N. Determining driver visual attention with one camera. IEEE Trans. Intell. Transp. Syst. 2003, 4, 205–218.

- Smith, P.; Shah, M.; da Vitoria Lobo, N. Monitoring head/eye motion for driver alertness with one camera. In Proceedings of the International Conference on Pattern Recognition, Barcelona, Spain, 3–7 September 2000; pp. 636–642.

- Bergen, J.R.; Anandan, P.; Hanna, K.J.; Hingorani, R. Hierarchical model-based motion estimation. In Proceedings of the European Conference on Computer Vision, Santa Margherita Ligure, Italy, 19–22 May 1992; pp. 237–252.

- Vicente, F.; Huang, Z.; Xiong, X.; De la Torre, F.; Zhang, W.; Levi, D. Driver gaze tracking and eyes off the road detection system. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2014–2027.

- Batista, J.P. A real-time driver visual attention monitoring system. In Proceedings of the 2nd Iberian Conference on Pattern Recognition and Image Analysis, Estoril, Portugal, 7–9 June 2005; pp. 200–208.

- Fridman, L.; Lee, J.; Reimer, B.; Victor, T. “Owl” and “Lizard”: Patterns of head pose and eye pose in driver gaze classification. IET Comput. Vis. 2016, 10, 308–313.

- Sigut, J.; Sidha, S.-A. Iris center corneal reflection method for gaze tracking using visible light. IEEE Trans. Biomed. Eng. 2011, 58, 411–419.

- ELP-USB500W02M-L36. Available online: http://www.elpcctv.com/5mp-ultra-wide-angle-hd-usb-camera-board-with-mpeg-format-p-83.html (accessed on 11 September 2017).

- 850 nm CWL, 10 nm FWHM, 25 mm Mounted Diameter. Available online: https://www.edmundoptics.com/optics/optical-filters/bandpass-filters/850nm-cwl-10nm-fwhm-25mm-mounted-diameter (accessed on 11 September 2017).

- Dlib C++ Library. Real-Time Face Pose Estimation. Available online: http://blog.dlib.net/2014/08/real-time-face-pose-estimation.html (accessed on 7 March 2017).

- Kazemi, V.; Sullivan, J. One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1867–1874.

- Zhao, J.; Bose, B.K. Evaluation of membership functions for fuzzy logic controlled induction motor drive. In Proceedings of the IEEE Annual Conference of the Industrial Electronics Society, Sevilla, Spain, 5–8 November 2002; pp. 229–234.

- Bayu, B.S.; Miura, J. Fuzzy-based illumination normalization for face recognition. In Proceedings of the IEEE Workshop on Advanced Robotics and Its Social Impacts, Tokyo, Japan, 7–9 November 2013; pp. 131–136.

- Barua, A.; Mudunuri, L.S.; Kosheleva, O. Why trapezoidal and triangular membership functions work so well: Towards a theoretical explanation. J. Uncertain Syst. 2014, 8, 164–168.

- Precup, R.-E.; Preitl, S.; Petriu, E.M.; Tar, J.K.; Tomescu, M.L.; Pozna, C. Generic two-degree-of-freedom linear and fuzzy controllers for integral processes. J. Frankl. Inst. 2009, 346, 980–1003.

- Medina, J.; Ojeda-Aciego, M. Multi-adjoint t-concept lattices. Inf. Sci. 2010, 180, 712–725.

- Nowaková, J.; Prílepok, M.; Snášel, V. Medical image retrieval using vector quantization and fuzzy S-tree. J. Med. Syst. 2017, 41, 1–16.

- Kumar, A.; Kumar, D.; Jarial, S.K. A hybrid clustering method based on improved artificial bee colony and fuzzy C-Means algorithm. Int. J. Artif. Intell. 2017, 15, 40–60.

- Wei, Y.; Qiu, J.; Lam, H.-K. A novel approach to reliable output feedback control of fuzzy-affine systems with time-delays and sensor faults. IEEE Trans. Fuzzy Syst. 2016.

- Wei, Y.; Qiu, J.; Karimi, H.R. Reliable output feedback control of discrete-time fuzzy affine systems with actuator faults. IEEE Trans. Circuits Syst. I 2017, 64, 170–181.

- Qiu, J.; Wei, Y.; Karimi, H.R.; Gao, H. Reliable control of discrete-time piecewise-affine time-delay systems via output feedback. IEEE Trans. Reliab. 2017, 1–13.

- Wei, Y.; Qiu, J.; Lam, H.-K.; Wu, L. Approaches to T–S fuzzy-affine-model-based reliable output feedback control for nonlinear Itô stochastic systems. IEEE Trans. Fuzzy Syst. 2017, 25, 569–583.

- Klir, G.J.; Yuan, B. Fuzzy Sets and Fuzzy Logic—Theory and Applications; Prentice-Hall: Upper Saddle River, NJ, USA, 1995.

- Defuzzification Methods. Available online: https://kr.mathworks.com/help/fuzzy/examples/defuzzification-methods.html (accessed on 7 September 2017).

- Leekwijck, W.V.; Kerre, E.E. Defuzzification: Criteria and classification. Fuzzy Sets Syst. 1999, 108, 159–178.

- Broekhoven, E.V.; Baets, B.D. Fast and accurate center of gravity defuzzification of fuzzy system outputs defined on trapezoidal fuzzy partitions. Fuzzy Sets Syst. 2006, 157, 904–918.

- Lee, H.C.; Lee, W.O.; Cho, C.W.; Gwon, S.Y.; Park, K.R.; Lee, H.; Cha, J. Remote gaze tracking system on a large display. Sensors 2013, 13, 13439–13463.

- Renault Samsung SM5. Available online: https://en.wikipedia.org/wiki/Renault_Samsung_SM5 (accessed on 7 September 2017).

- Visual Studio 2013. Available online: https://www.visualstudio.com/en-us/vs (accessed on 11 September 2017).

- OpenCV. Available online: http://opencv.org (accessed on 11 September 2017).

- Boost C++ Library. Available online: http://www.boost.org (accessed on 11 September 2017).

- Choi, I.-H.; Hong, S.K.; Kim, Y.-G. Real-time categorization of driver’s gaze zone using the deep learning techniques. In Proceedings of the International Conference on Big Data and Smart Computing, Hong Kong, China, 18–20 January 2016; pp. 143–148.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25; Curran Associates, Inc.: New York, NY, USA, 2012; pp. 1097–1105.

| Environment | Methods | Advantage | Disadvantage |
|---|---|---|---|
| Indoor | Dark pupil & bright pupil effect [28] | Robust against changes in extraneous light | |
| Indoor | Circular HT [29] | Robust against noise | Driver’s head rotation decreases the detection accuracy for an elliptical pupil |
| Indoor | Deformable template [30], active contours and particle filtering [31] | Accurate pupil center can be detected | Real-time detection is difficult because of low processing speed |
| Indoor | Logical AND operation of two binarized images [43] | Fast detection through binarization and labeling | Vulnerable to changes in extraneous light |
| Indoor | Binarization, boundary segmentation, and ellipse fitting [4] | High-resolution eye image can be obtained | |
| Vehicle | Dual-camera [5,35,36,37] | The combination of two image data improves the system’s accuracy | |
| Vehicle | Single-camera, 3D-based [37,38,40] (3D modeling of head or eyeball) | Lower computational complexity than that using dual cameras | Gaze tracking is based not on the pupil center, but on the iris center, which constrains the improvement of accuracy |
| Vehicle | Single-camera, 2D-based (Purkinje-image [41], iris-center [42]) | Lower computational complexity than the 3D-based method | |
| Vehicle | Single-camera, 2D-based (fuzzy-system, and proposed method) | | Fuzzy membership function and rule table need to be defined |

| Feature 1 | Feature 2 | Output of Fuzzy System |
|---|---|---|
| L | L | L |
| L | M | L |
| L | H | H |
| M | L | L |
| M | M | H |
| M | H | L |
| H | L | H |
| H | M | L |
| H | H | L |

| Feature 1 | Feature 2 | IV (Min Rule) | IV (Max Rule) |
|---|---|---|---|
| 0.48(L) | 0.26(L) | 0.26(L) | 0.48(L) |
| 0.48(L) | 0.65(M) | 0.48(L) | 0.65(L) |
| 0.48(L) | 0(H) | 0(H) | 0.48(H) |
| 0.64(M) | 0.26(L) | 0.26(L) | 0.64(L) |
| 0.64(M) | 0.65(M) | 0.64(H) | 0.65(H) |
| 0.64(M) | 0(H) | 0(L) | 0.64(L) |
| 0.28(H) | 0.26(L) | 0.26(H) | 0.28(H) |
| 0.28(H) | 0.65(M) | 0.28(L) | 0.65(L) |
| 0.28(H) | 0(H) | 0(L) | 0.28(L) |
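The IV values in the table above can be reproduced from the rule table and the listed membership degrees. The sketch below is a minimal illustration: each rule takes the min (or max) of the two input degrees as its IV and the rule table's output label as its label. The names `RULES` and `inference_values` are illustrative and not taken from the authors' code.

```python
# Rule table: (Feature 1 label, Feature 2 label) -> output label.
RULES = {
    ("L", "L"): "L", ("L", "M"): "L", ("L", "H"): "H",
    ("M", "L"): "L", ("M", "M"): "H", ("M", "H"): "L",
    ("H", "L"): "H", ("H", "M"): "L", ("H", "H"): "L",
}


def inference_values(f1, f2, combine):
    """Return one (IV, output label) pair per rule.

    f1, f2: dicts mapping a label (L, M, H) to a membership degree.
    combine: `min` for the Min rule or `max` for the Max rule.
    """
    return [
        (combine(f1[a], f2[b]), RULES[(a, b)])
        for a in "LMH" for b in "LMH"
    ]


# Membership degrees listed in the table.
feature_1 = {"L": 0.48, "M": 0.64, "H": 0.28}
feature_2 = {"L": 0.26, "M": 0.65, "H": 0.0}

min_ivs = inference_values(feature_1, feature_2, min)
max_ivs = inference_values(feature_1, feature_2, max)

for (m, label_min), (x, label_max) in zip(min_ivs, max_ivs):
    print(f"min: {m:.2f}({label_min})  max: {x:.2f}({label_max})")
```

The nine printed pairs follow the row order of the table, for example 0.26(L) and 0.48(L) for the first row.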

| | COG | BOA | MOM | FOM | LOM |
|---|---|---|---|---|---|
| Min rule | 98.4 | 92.6 | 75.1 | 52.1 | 75.1 |
| Max rule | 73.3 | 73.3 | 71 | 65.7 | 71 |

| | COG | BOA | MOM | FOM | LOM |
|---|---|---|---|---|---|
| Min rule | 84.2 | 82.5 | 67.1 | 48.1 | 67.1 |
| Max rule | 62.5 | 62.5 | 61 | 54.3 | 61 |
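The two tables above compare five defuzzification methods. The sketch below shows how such outputs can be computed on a sampled output membership curve. It rests on several assumptions: the acronyms are read as center of gravity (COG), bisector of area (BOA), middle of maxima (MOM), first of maxima (FOM), and last of maxima (LOM), since this excerpt does not expand them; the output membership functions are taken to be linear (L falling from 1 to 0 and H rising from 0 to 1 over [0, 1]); and the 101-point sampling grid is arbitrary. The paper's actual shapes may differ.

```python
import math


def aggregate(xs, iv_low, iv_high):
    """Clip the assumed linear L and H output functions at their IVs, then take the max."""
    return [max(min(1.0 - x, iv_low), min(x, iv_high)) for x in xs]


def defuzzify(xs, mus):
    """Compute five crisp outputs from a sampled membership curve."""
    total = sum(mus)
    if total == 0:
        raise ValueError("membership curve is all zero")
    cog = sum(x * m for x, m in zip(xs, mus)) / total

    # BOA: first sample at which the accumulated area reaches half the total.
    running, boa = 0.0, xs[-1]
    for x, m in zip(xs, mus):
        running += m
        if running >= total / 2:
            boa = x
            break

    peak = max(mus)
    maxima = [x for x, m in zip(xs, mus) if math.isclose(m, peak)]
    fom, lom = maxima[0], maxima[-1]
    return {"COG": cog, "BOA": boa, "MOM": (fom + lom) / 2, "FOM": fom, "LOM": lom}


xs = [i / 100 for i in range(101)]
# Largest Min-rule IVs per output label from the IV table: 0.48 for L, 0.64 for H.
result = defuzzify(xs, aggregate(xs, iv_low=0.48, iv_high=0.64))
print(result)
```

Because FOM and LOM pick the two ends of the plateau of maxima while COG and BOA use the whole curve, the five outputs can differ noticeably for the same IVs.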

| Target Zone | Previous Method [23] | Proposed Method (Without Fuzzy System) | Proposed Method (With Fuzzy System) |
|---|---|---|---|
| 1 | 5.35 | 5.35 | 3.03 |
| 2 | 22.40 | 12.42 | 12.11 |
| 3 | 7.78 | 7.16 | 4.63 |
| 4 | 8.59 | 3.79 | 2.52 |
| 5 | 8.34 | 3.95 | 3.08 |
| 6 | 11.48 | 3.37 | 2.90 |
| 7 | 6.26 | 3.92 | 3.92 |
| 8 | 12.25 | 7.43 | 3.80 |
| 9 | 4.92 | 2.36 | 2.26 |
| 10 | 3.22 | 2.66 | 2.65 |
| 11 | 5.27 | 3.80 | 3.13 |
| 12 | 6.18 | 5.26 | 5.16 |
| 13 | 3.47 | 2.28 | 2.17 |
| 14 | 4.06 | 2.05 | 1.31 |
| 15 | 8.72 | 8.40 | 8.31 |
| Average | 7.88 | 4.94 | 4.06 |

| Target Zone | Previous Method [23] | Proposed Method (Without Fuzzy System) | Proposed Method (With Fuzzy System) |
|---|---|---|---|
| 1 | 5.35 | 5.35 | 3.03 |
| 4 | 8.59 | 3.79 | 2.52 |
| 5 | 8.34 | 3.95 | 3.08 |
| 8 | 12.25 | 7.43 | 3.80 |
| 11 | 5.27 | 3.80 | 3.13 |
| 14 | 4.06 | 2.05 | 1.31 |
| Average | 7.31 | 4.39 | 2.81 |

| Target Zone | Previous Method [23] | Proposed Method (Without Fuzzy System) | Proposed Method (With Fuzzy System) |
|---|---|---|---|
| 1 | 7.09 | 5.35 | 4.45 |
| 2 | 5.35 | 1.93 | 1.73 |
| 3 | 4.10 | 2.76 | 2.76 |
| 4 | 6.15 | 3.34 | 2.63 |
| 5 | 6.17 | 3.77 | 3.01 |
| 6 | 2.50 | 1.66 | 1.54 |
| 7 | 5.35 | 3.36 | 3.36 |
| 8 | 9.73 | 7.43 | 3.80 |
| 9 | 1.79 | 1.52 | 1.46 |
| 10 | 6.91 | 2.83 | 2.83 |
| 11 | 5.67 | 4.51 | 3.71 |
| 12 | 1.94 | 1.60 | 1.60 |
| 13 | 2.00 | 1.54 | 1.04 |
| 14 | 6.02 | 2.05 | 1.98 |
| 15 | 13.66 | 3.19 | 1.40 |
| Average | 5.62 | 3.12 | 2.48 |

| Target Zone | Previous Method [23] | Proposed Method (Without Fuzzy System) | Proposed Method (With Fuzzy System) |
|---|---|---|---|
| 1 | 7.09 | 5.35 | 4.45 |
| 4 | 6.15 | 3.34 | 2.63 |
| 5 | 6.17 | 3.77 | 3.01 |
| 8 | 9.73 | 7.43 | 3.80 |
| 11 | 5.67 | 4.51 | 3.71 |
| 14 | 6.02 | 2.05 | 1.98 |
| Average | 6.80 | 4.40 | 3.26 |

| Target Zone | Neighbors | Previous Method [23] SCER | Previous Method [23] LCER | Proposed Method (Without Fuzzy System) SCER | Proposed Method (Without Fuzzy System) LCER | Proposed Method (With Fuzzy System) SCER | Proposed Method (With Fuzzy System) LCER |
|---|---|---|---|---|---|---|---|
| 1 | 2, 12, 15 | 81.38 | 93.94 | 93.94 | 95.57 | 98.90 | 100 |
| 2 | 1, 3, 12, 13, 15 | 61.87 | 92.8 | 62.81 | 98.37 | 62.95 | 98.37 |
| 3 | 2, 4, 12, 13, 14, 15 | 70.54 | 88.65 | 90.92 | 94.72 | 90.92 | 94.72 |
| 4 | 3, 5, 13, 14 | 45.4 | 78.06 | 55 | 86.63 | 57.79 | 87.3 |
| 5 | 4, 14 | 76.46 | 78.28 | 73.8 | 80.41 | 87.71 | 89.73 |
| 6 | 7, 9, 10 | 85.02 | 92.94 | 92.63 | 97.73 | 92.99 | 97.96 |
| 7 | 6, 8, 9, 10, 11 | 55.53 | 84.57 | 69.59 | 86.98 | 71.45 | 88.28 |
| 8 | 7, 10, 11 | 45.72 | 73.39 | 66.89 | 88.27 | 79.02 | 94.12 |
| 9 | 6, 7, 10, 12, 13 | 84.22 | 98.04 | 77.19 | 99.5 | 77.28 | 99.5 |
| 10 | 6, 7, 8, 9, 11, 12, 13, 14 | 70.47 | 96.03 | 94 | 99.02 | 94.33 | 99.02 |
| 11 | 7, 8, 10, 13, 14 | 50.43 | 95.76 | 68.45 | 97.91 | 70.09 | 99.08 |
| 12 | 1, 2, 3, 9, 10, 13 | 56.32 | 90.04 | 76.01 | 96.94 | 76.12 | 96.94 |
| 13 | 2, 3, 4, 9, 10, 11, 12, 14 | 78.61 | 95.07 | 84.22 | 99.67 | 84.32 | 99.78 |
| 14 | 3, 4, 5, 10, 11, 13 | 51.01 | 91.03 | 71.37 | 96.38 | 74.64 | 99.65 |
| 15 | 1, 2, 3 | 26.9 | 66.24 | 51.16 | 91.17 | 51.16 | 91.49 |
| Average | | 62.66 | 87.65 | 75.19 | 93.95 | 77.97 | 95.72 |

| Target Zone | Neighbors | Previous Method [23] SCER | Previous Method [23] LCER | Proposed Method (Without Fuzzy System) SCER | Proposed Method (Without Fuzzy System) LCER | Proposed Method (With Fuzzy System) SCER | Proposed Method (With Fuzzy System) LCER |
|---|---|---|---|---|---|---|---|
| 1 | 2, 12, 15 | 81.38 | 93.94 | 93.94 | 95.57 | 98.90 | 100 |
| 4 | 3, 5, 13, 14 | 45.4 | 78.06 | 55 | 86.63 | 57.79 | 87.3 |
| 5 | 4, 14 | 76.46 | 78.28 | 73.8 | 80.41 | 87.71 | 89.73 |
| 8 | 7, 10, 11 | 45.72 | 73.39 | 66.89 | 88.27 | 79.02 | 94.12 |
| 11 | 7, 8, 10, 13, 14 | 50.43 | 95.76 | 68.45 | 97.91 | 70.09 | 99.08 |
| 14 | 3, 4, 5, 10, 11, 13 | 51.01 | 91.03 | 71.37 | 96.38 | 74.64 | 99.65 |
| Average | | 58.4 | 85.07 | 71.57 | 90.86 | 78.02 | 94.98 |
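The SCER and LCER columns, together with the Neighbors column, suggest two correctness criteria. The sketch below assumes that SCER counts only estimates falling in the target zone itself, while LCER also accepts estimates falling in one of the listed neighbor zones. This excerpt does not define the acronyms, so this reading is an assumption, and the estimate sequence in the example is made-up illustration data, not data from the paper.

```python
# Neighbor zones copied from three rows of the table above.
NEIGHBORS = {
    1: {2, 12, 15},
    5: {4, 14},
    8: {7, 10, 11},
}


def scer_lcer(target, estimated_zones, neighbors):
    """Return (SCER, LCER) in percent for one target zone.

    SCER: share of estimates equal to the target zone (assumed definition).
    LCER: share of estimates equal to the target or one of its neighbors.
    """
    n = len(estimated_zones)
    strict = sum(1 for zone in estimated_zones if zone == target)
    loose = sum(1 for zone in estimated_zones
                if zone == target or zone in neighbors[target])
    return 100.0 * strict / n, 100.0 * loose / n


# Hypothetical per-frame zone estimates while the driver looks at zone 5.
estimates = [5, 5, 4, 14, 9]
scer, lcer = scer_lcer(5, estimates, NEIGHBORS)
print(scer, lcer)
```

Under this reading LCER can never be lower than SCER for the same zone, which matches every row of the tables.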

| Target Zone | Neighbors | Previous Method [68] SCER | Previous Method [68] LCER | Proposed Method SCER | Proposed Method LCER |
|---|---|---|---|---|---|
| 1 | 2, 12, 15 | 68.6 | 94 | 98.90 | 100 |
| 2 | 1, 3, 12, 13, 15 | 65.55 | 98.05 | 62.95 | 98.37 |
| 3 | 2, 4, 12, 13, 14, 15 | 57.75 | 84.8 | 90.92 | 94.72 |
| 4 | 3, 5, 13, 14 | 68.35 | 96.9 | 57.79 | 87.3 |
| 5 | 4, 14 | 68.2 | 87.65 | 87.71 | 89.73 |
| 6 | 7, 9, 10 | 55.5 | 91.75 | 92.99 | 97.96 |
| 7 | 6, 8, 9, 10, 11 | 62.45 | 79.8 | 71.45 | 88.28 |
| 8 | 7, 10, 11 | 62.55 | 78.25 | 79.02 | 94.12 |
| 9 | 6, 7, 10, 12, 13 | 64.75 | 87 | 77.28 | 99.5 |
| 10 | 6, 7, 8, 9, 11, 12, 13, 14 | 51.5 | 90 | 94.33 | 99.02 |
| 11 | 7, 8, 10, 13, 14 | 68.55 | 94.35 | 70.09 | 99.08 |
| 12 | 1, 2, 3, 9, 10, 13 | 60.7 | 77.8 | 76.12 | 96.94 |
| 13 | 2, 3, 4, 9, 10, 11, 12, 14 | 68.5 | 81.25 | 84.32 | 99.78 |
| 14 | 3, 4, 5, 10, 11, 13 | 72.7 | 95 | 74.64 | 99.65 |
| 15 | 1, 2, 3 | 62 | 80.55 | 51.16 | 91.49 |
| Average | | 63.84 | 87.81 | 77.97 | 95.72 |

| | Tobii System [14] | Proposed Method |
|---|---|---|
| Average SCER | 73 | 80.99 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lee, D.E.; Yoon, H.S.; Hong, H.G.; Park, K.R. Fuzzy-System-Based Detection of Pupil Center and Corneal Specular Reflection for a Driver-Gaze Tracking System Based on the Symmetrical Characteristics of Face and Facial Feature Points. Symmetry 2017, 9, 267. https://doi.org/10.3390/sym9110267
