1. Introduction

Agriculture is thought to have begun around 10,000 B.C. with the domestication of crops such as rice. Civilization subsequently developed on the basis of agriculture, and agricultural technology has continued to advance throughout human history. Agriculture is becoming increasingly intensive and is moving toward the use of high technology. Agriculture is essential to human activity and to the maintenance of society [1,2]. Governments around the world are increasingly investing in agricultural technology development, and private sector spending is catching up with public sector spending. Achieving the higher productivity needed to feed the wealthier and more urbanized population of the future will require significant investment in agricultural research and development [3].

As part of this trend, attention to smart farming and precision agriculture is increasing. By automating agricultural machinery with information and communications technology (ICT), sensor technology, and information processing technology, precision agriculture can improve the predictability of crop production and crop spacing, and it supports environment-friendly farming through variable-rate fertilization [4].

The basic concept of precision agriculture is defined as “apply the right treatment in the right place at the right time” [5]. Differences in yield and quality arise from differences in soil characteristics and crop growth characteristics across locations in a field. In other words, precision agriculture is information-based farming that applies variable prescriptions suited to the characteristics of each small area, unlike mechanized agriculture, which performs uniform work over a large area. To realize this, technologies such as sensing, ICT, and information management are integrated, so that productivity can be maintained even with a small labor force.

The development of precision agriculture also provides a new way to grow and harvest orchard crops. In particular, the use of orchard agricultural robotic technology enables regular and quantitative spraying, as well as orchard irrigation, thereby reducing farmers’ work and increasing productivity [

6,

7]. As a result, farmers can avoid excessive use of pesticides, chemical fertilizers, and other resources, and prevent environmental pollution [

8]. The use of these orchard agricultural robots can help produce high quality apples, grapes, and other fruits [

9,

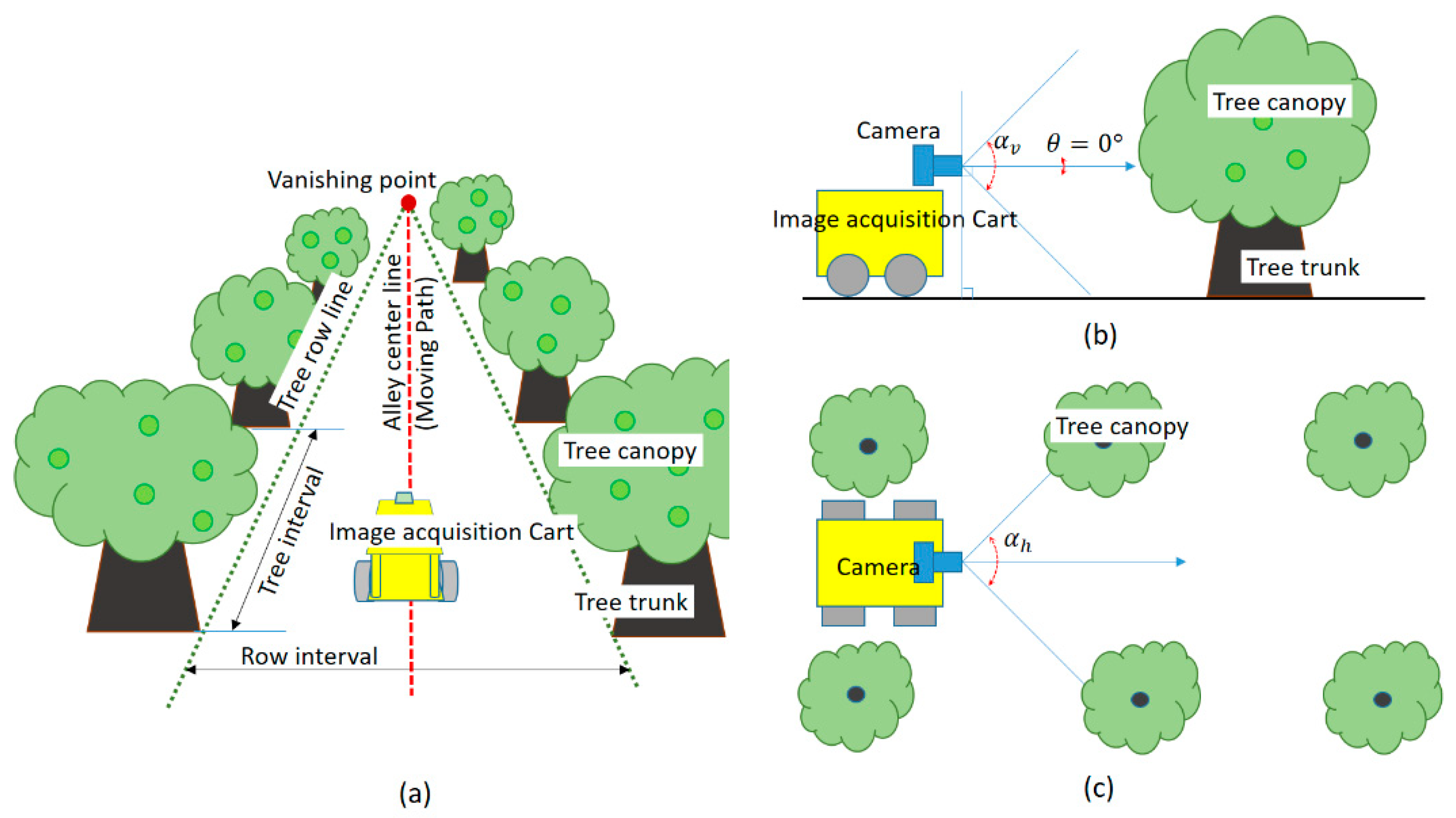

10]. The basic technology for the development of typical orchard farming robots is the recognition of location, that is, localization technology. Especially, in the case of apple orchards, it is an outdoor natural environment and the apple trees are planted in straight, parallel lines, and the distance between two row lines is almost the same and the distance between each tree in a row is also almost the same. Therefore, it is suitable for location recognition and movement path generation of a mobile robot. However, planting locations are not accurate; therefore, tree recognition is needed for the localization of orchard mobile robots. In addition, because the shape of the tree is varied, it is difficult to accurately detect the tree and obtain location information. For this reason, an outdoor apple orchard environment is usually called a “semi-structured” environment.

Recently, researchers developing mobile robots for orchards have applied SLAM (simultaneous localization and mapping) [11] to realize precision agriculture in orchards. When SLAM is applied, the positions of trees in a semi-structured orchard can serve as landmarks, providing information for localization and for path planning during autonomous movement [12]. Shalal et al. proposed a method combining multiple sensors and built SLAM maps in a real orchard under the limited condition that the tree trunks were not covered by branches and leaves [9,10]. Cheein et al. extracted histogram of oriented gradients (HOG) features of tree trunks to optimize recognition performance and used these features to train support vector machine (SVM) classifiers that recognize tree trunks [13]. Garcia-Alegre et al. (2011) and Ali et al. (2008) proposed several methods for separating images into tree trunks and background objects using clustering algorithms based on color and texture features [14,15]. Laser sensors have also been used to localize moving machines. However, these studies show that trunk detection is limited when trunks are covered by fallen branches and leaves [9,13,16]. For an algorithm that generates autonomous paths in an apple orchard from a monocular camera, path estimation performance cannot be guaranteed when the ground pattern is complicated by weeds and soil [17]. To address this problem, a sky-based machine vision technique has been proposed, but it is difficult to apply in seasons when the trees have few leaves [18]. In short, accurately identifying and locating tree trunks in an orchard environment remains difficult.

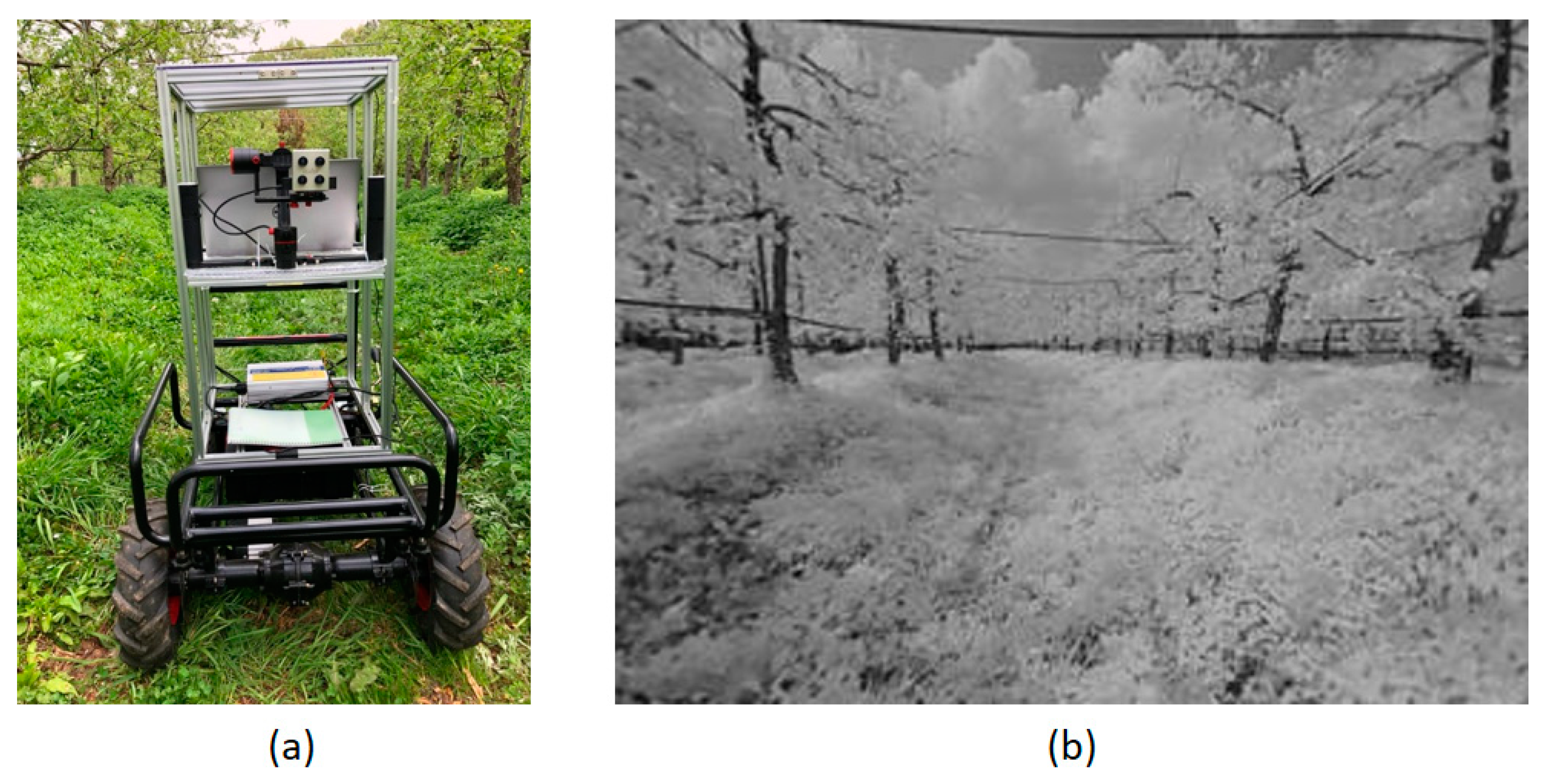

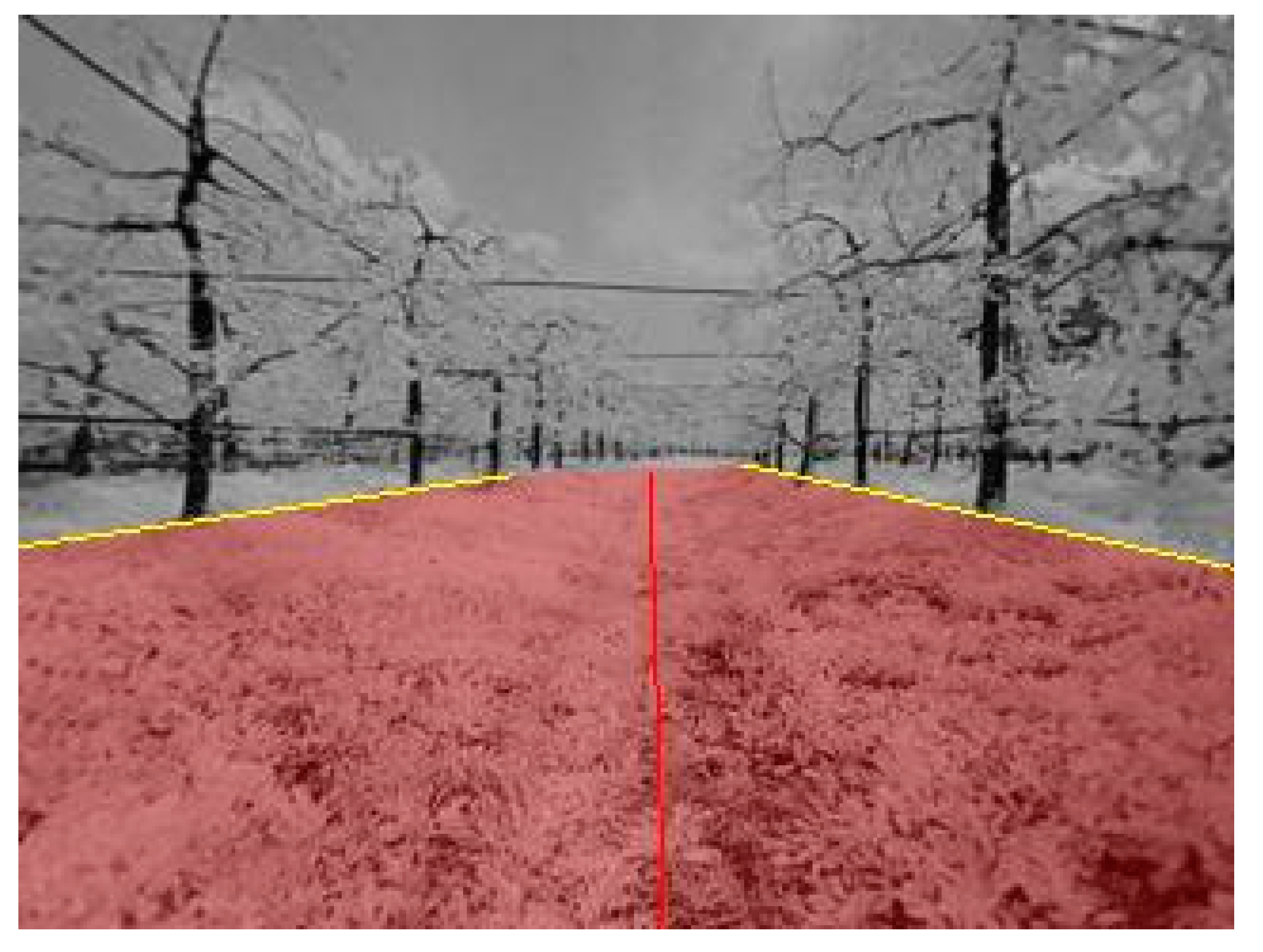

Trunk recognition in an orchard is a key technology both for orchard precision agriculture and for the autonomous driving and operation of mobile robots. In this paper, we propose an algorithm that recognizes tree trunks and a method that generates a path an unmanned ground vehicle (UGV) can travel in an apple orchard. Apple trees branch low, so their trunks are short, and branches and leaves often visually obscure the trunk, as shown in Figure 1. In addition, weeds cover the boundary between trunk and ground, and the ground is an irregular mixture of soil and weeds, which often makes the ground pattern uneven. These conditions make it more difficult to determine a travelable route from tree trunks using machine vision.

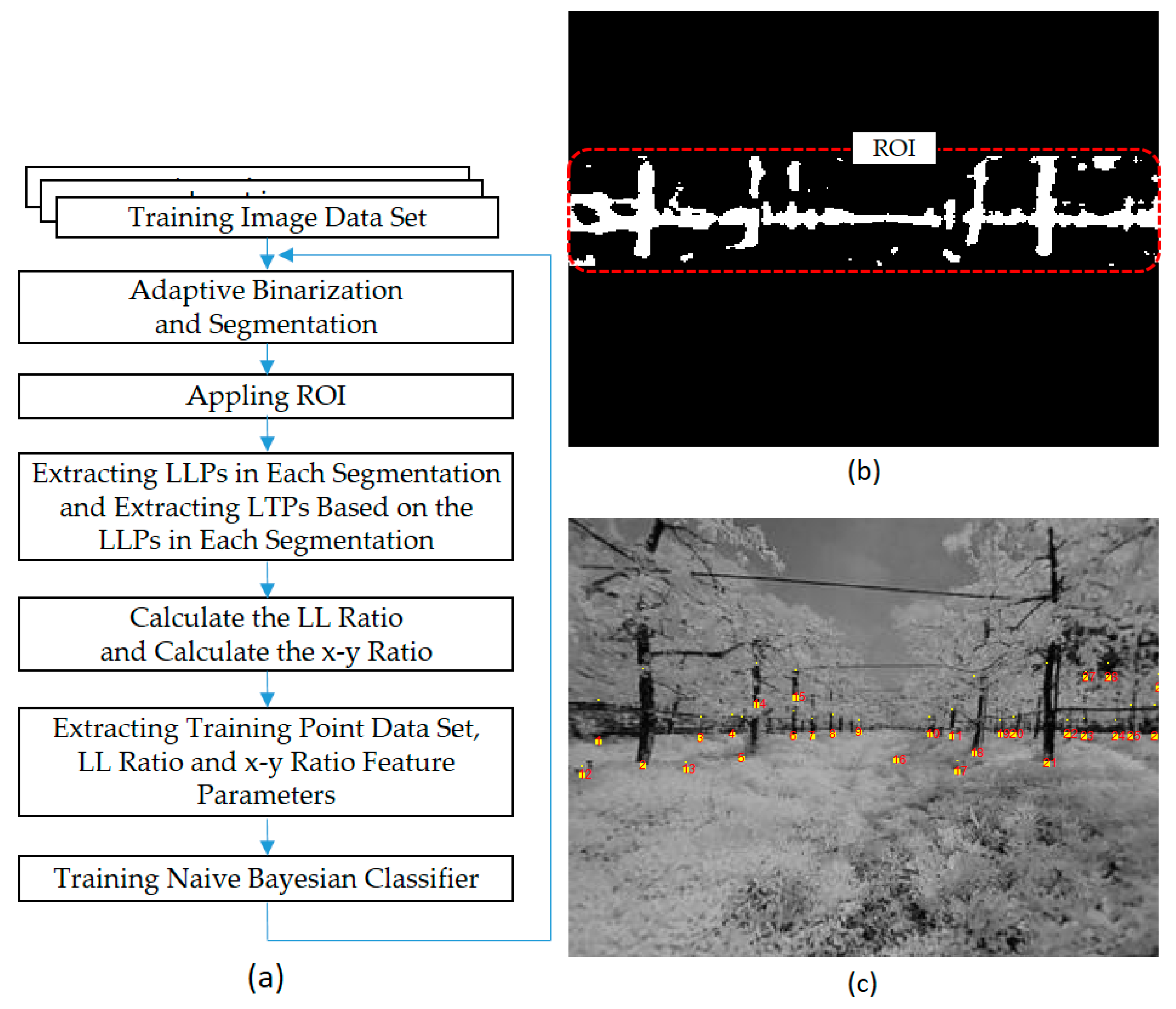

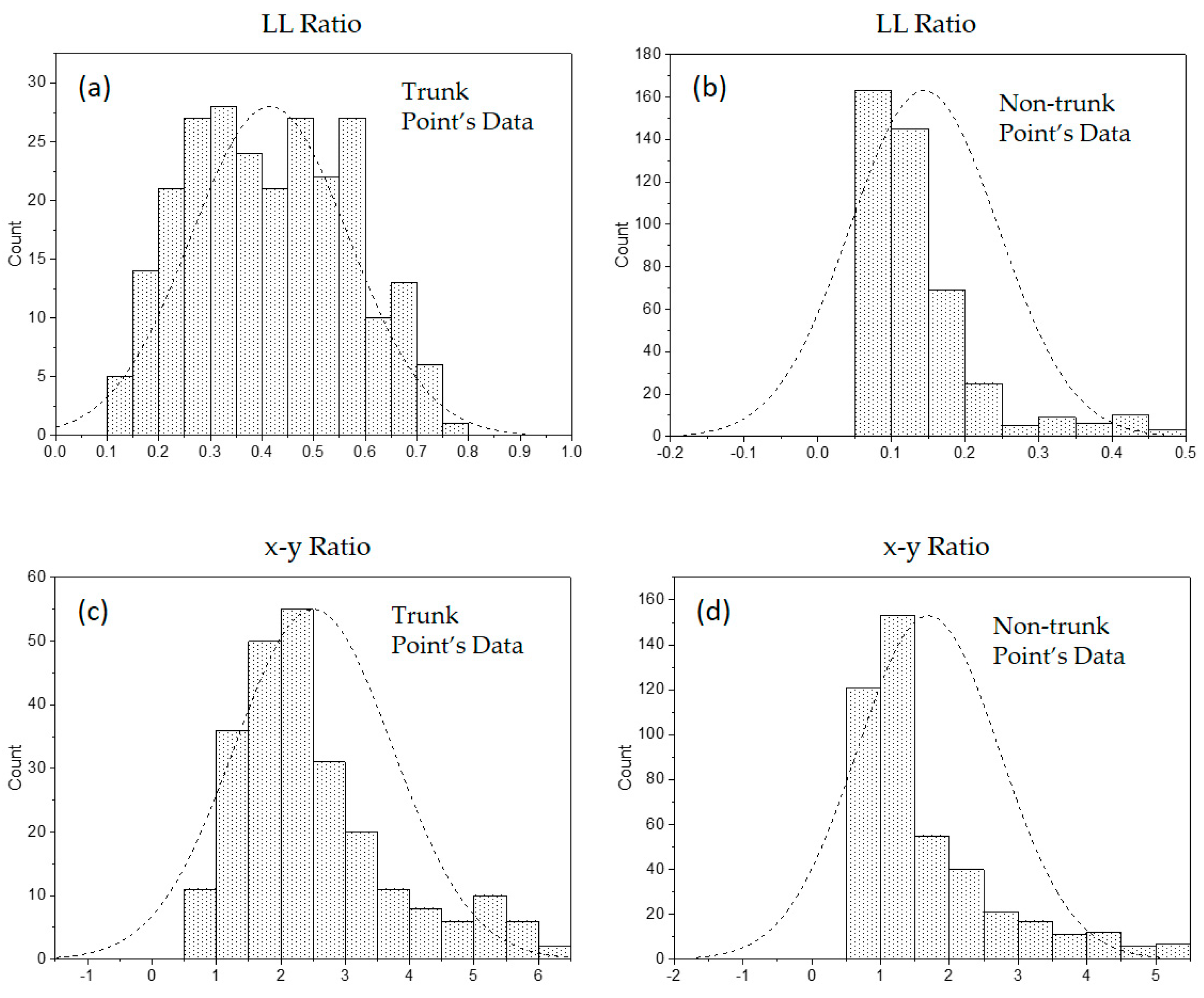

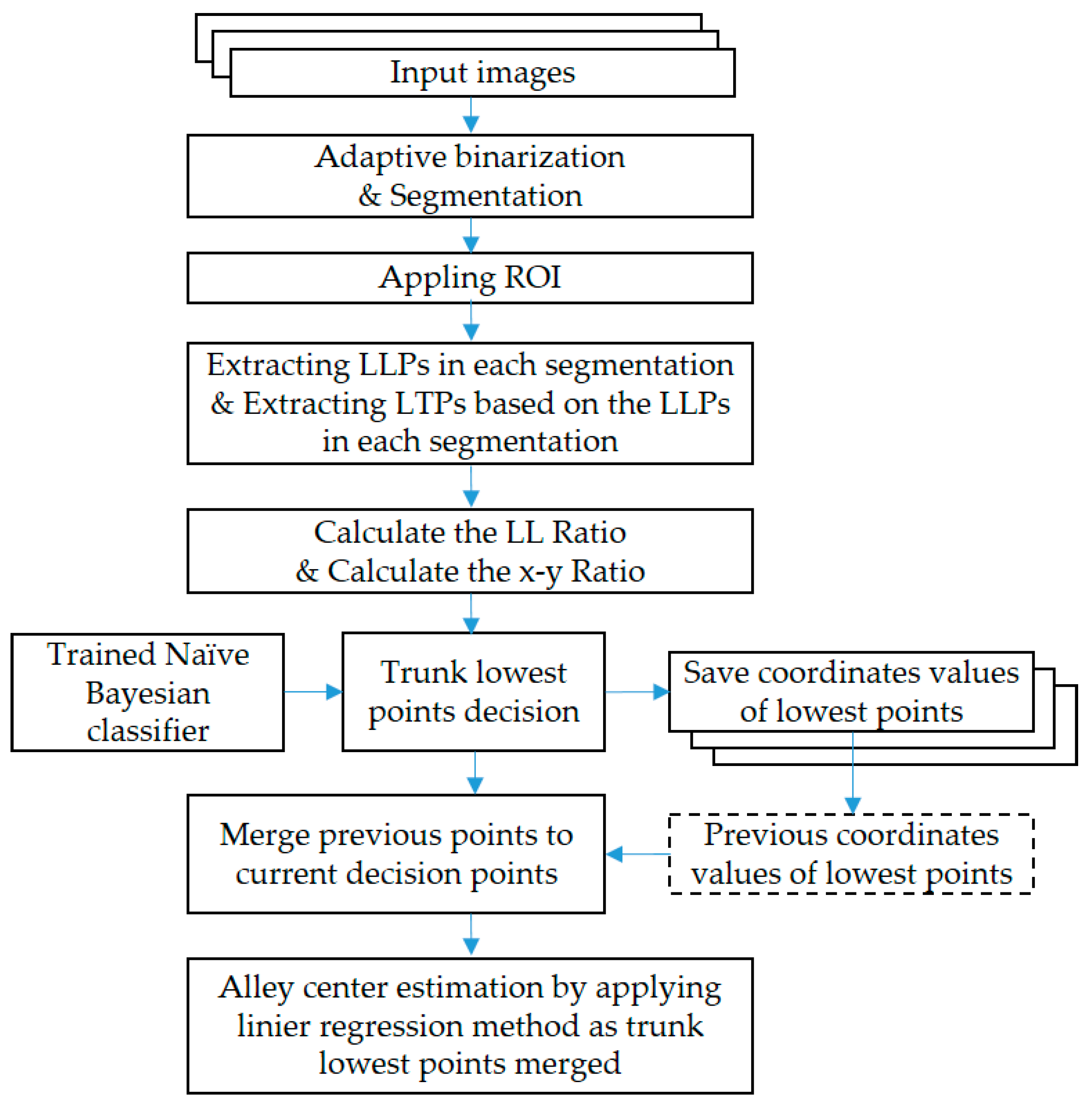

To detect the lowest points of the trunks, we applied a naïve Bayesian classifier; the free space was then extracted by connecting these points, and the center line was generated from it. For trunk detection, images from a monocular near-infrared (NIR) camera were converted into binary images that separate the brightness region containing the tree trunks from the remaining region. The lowest point of each segment containing tree trunks was detected, and naïve Bayesian probabilities were applied to decide which of these points were trunk lowest points. The detected trunk lowest points were then used to estimate the free-space center line of the orchard alley by linear regression analysis, and this alley center line can serve as a movement path or as navigation information for the UGV.
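The paper does not list the features or the form of the likelihoods used by its naïve Bayesian classifier. As a minimal sketch only, assuming Gaussian class-conditional likelihoods and two hypothetical per-segment features (the vertical extent and the width, in pixels, of the segment above each candidate lowest point), the classification step could look like this in Python. The toy training data are invented for illustration and are not measurements from this study.

```python
import math
from collections import defaultdict

def fit_gaussian_nb(samples, labels, var_floor=1e-6):
    """Estimate a log prior and per-feature Gaussian mean/variance for each class."""
    by_class = defaultdict(list)
    for x, y in zip(samples, labels):
        by_class[y].append(x)
    model = {}
    for cls, rows in by_class.items():
        n = len(rows)
        means = [sum(col) / n for col in zip(*rows)]
        variances = [
            max(sum((v - m) ** 2 for v in col) / n, var_floor)  # floor avoids zero variance
            for col, m in zip(zip(*rows), means)
        ]
        model[cls] = (math.log(n / len(samples)), means, variances)
    return model

def log_posterior(model, cls, x):
    """Unnormalized log posterior: log prior plus independent Gaussian log likelihoods."""
    log_prior, means, variances = model[cls]
    return log_prior + sum(
        -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
        for v, m, var in zip(x, means, variances)
    )

def predict(model, x):
    """Return the class with the highest posterior for feature vector x."""
    return max(model, key=lambda cls: log_posterior(model, cls, x))

# Toy training data (hypothetical): (segment height px, segment width px)
X_train = [(120, 30), (140, 35), (110, 28), (130, 32),   # trunk segments
           (15, 40), (10, 25), (20, 60), (12, 35)]        # weed/soil/shadow noise
y_train = ["trunk"] * 4 + ["noise"] * 4

model = fit_gaussian_nb(X_train, y_train)
print(predict(model, (125, 30)), predict(model, (14, 45)))
```

Working in log space avoids numerical underflow when per-feature likelihoods are multiplied, and each class needs only a prior, a mean, and a variance per feature, which fits the small training set the method is said to require.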

4. Results and Discussion

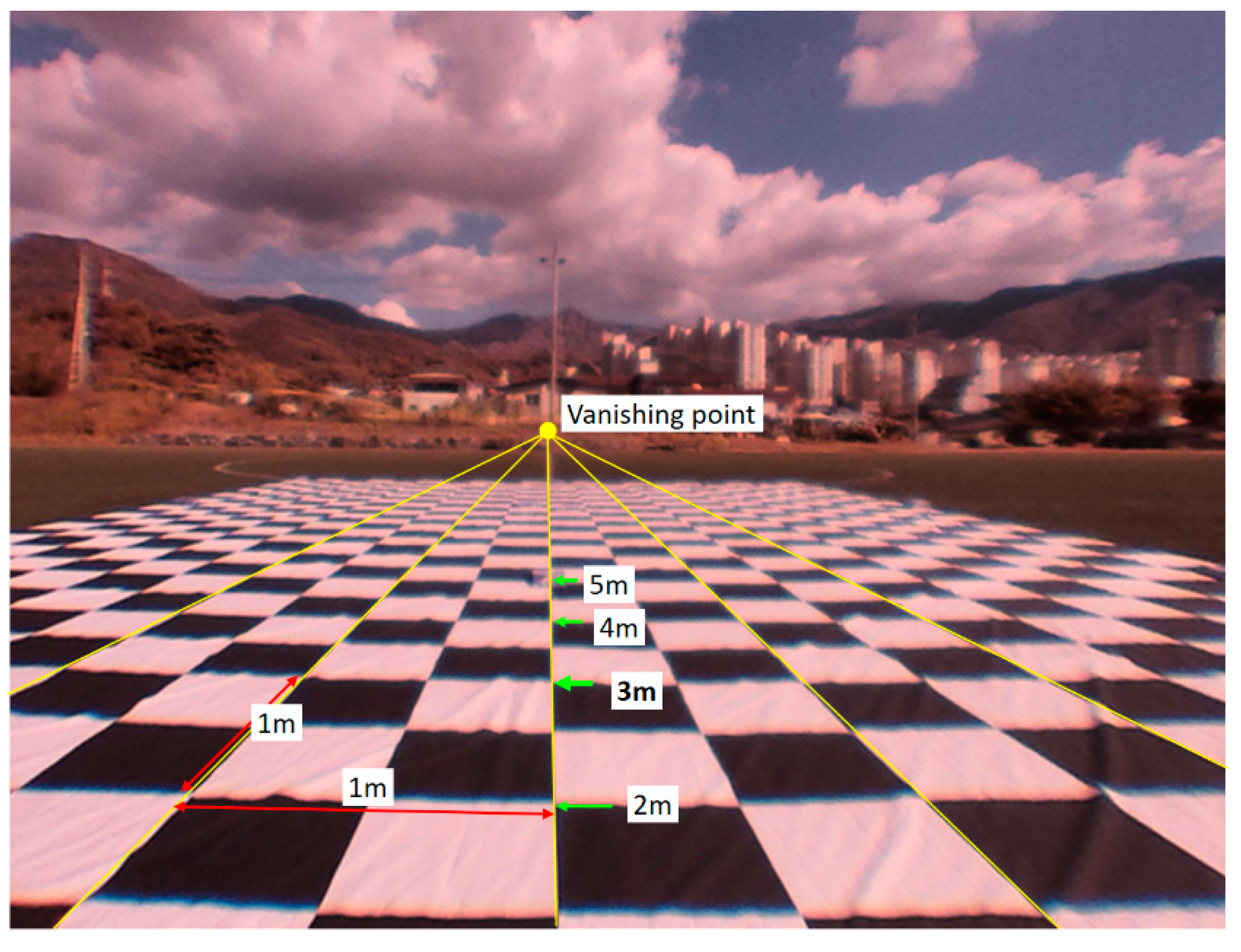

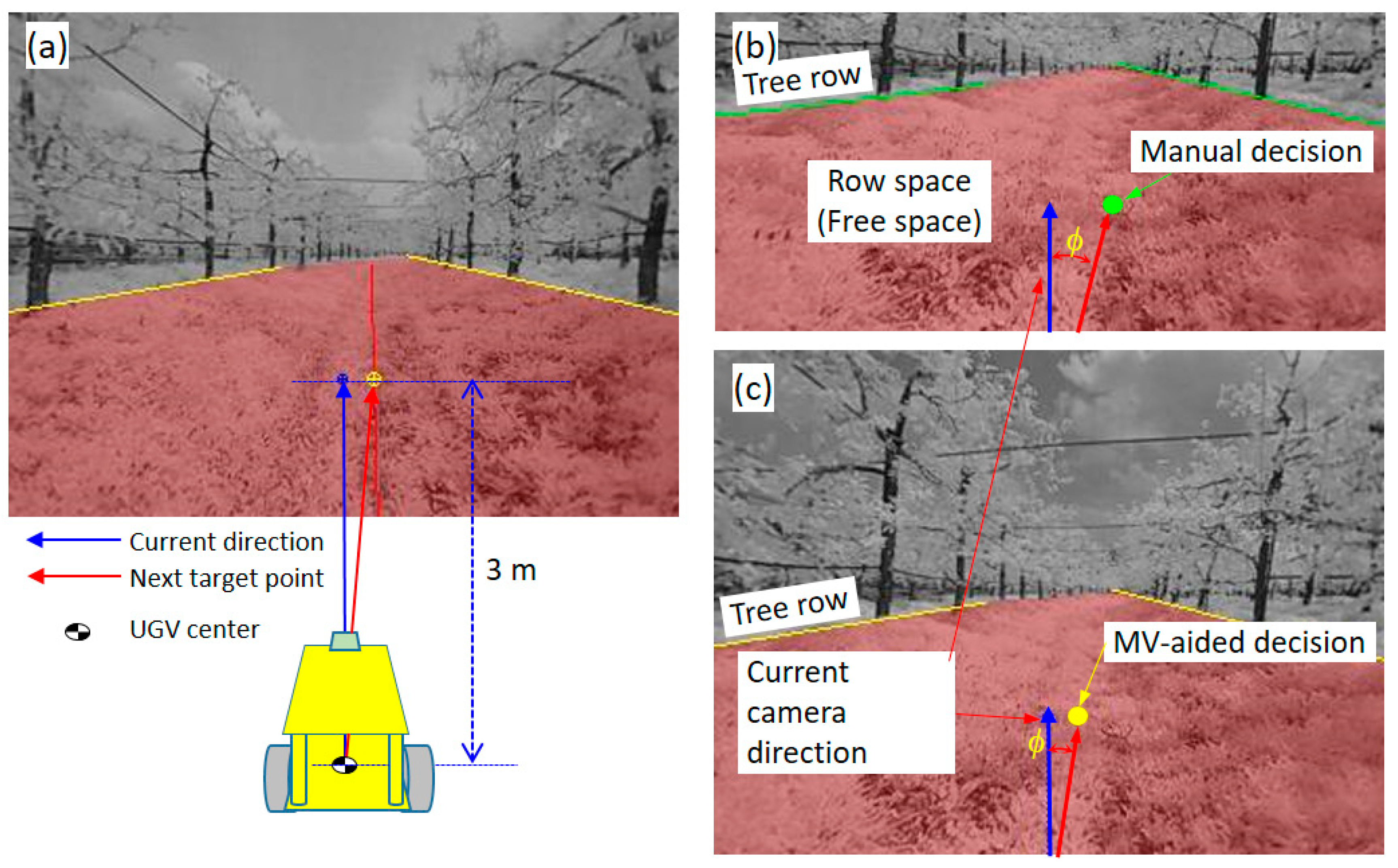

The simplest way to drive a UGV autonomously is to steer from the current reference point toward the next point of interest. For this, it is important to know the parameters that describe the positional relationship between the current position and the target position. A point on the center line detected by the proposed algorithm is the target point for the next time step of UGV travel, and the variables relating the current UGV position to this target point can be used as process variables of the UGV controller. Therefore, in this study, we calculated the alley center line, which represents the center of the free row space, to obtain the next target point. The target point located 3 m ahead on the alley center line and the corresponding target steering angle were then calculated in post-processing. To determine which image position lies 3 m ahead, we performed a grid image experiment, as shown in Figure 8. As described above, the camera tilt angle (θ) and roll angle were fixed at 0° so that the optical axis of the camera was always parallel to the ground. Under this condition, a given y-axis position (image row) of the acquired image corresponds to a specific distance ahead. The exact position could be determined by conversion to a bird’s-eye view image, but we accepted some error to minimize the complexity of the algorithm. As shown in Figure 9a–c, the target steering angle φ is the steering angle required to move from the current point of the UGV to the next point 3 m ahead. The manual decision point and the MV-aided decision point likewise refer to the point 3 m ahead on each line. The continuous apple orchard images used in this study were collected at intervals of 0.25 m; in total, 229 images were collected, corresponding to a 57.25 m alley path. We evaluated the MV-aided center line extracted by the proposed algorithm by comparing it with the manual center line determined with the unaided eye.
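The grid experiment maps image rows to distances empirically. For intuition, the idealized relationship it approximates can be sketched with a pinhole camera model, assuming flat ground, zero tilt and roll, no lens distortion, and hypothetical camera parameters (height h, focal length f in pixels, principal row c_y): a ground point Z meters ahead projects to image row v = c_y + f·h/Z. None of these parameter values come from the paper.

```python
# Hypothetical camera parameters (not from the paper); replace with a real calibration.
CAMERA_HEIGHT_M = 0.8     # height of the optical center above flat ground
FOCAL_LENGTH_PX = 500.0   # focal length expressed in pixels
PRINCIPAL_ROW_PX = 240.0  # image row c_y crossed by the optical axis

def row_for_distance(z_m, h=CAMERA_HEIGHT_M, f=FOCAL_LENGTH_PX, cy=PRINCIPAL_ROW_PX):
    """Image row (y grows downward) of a flat-ground point z_m meters ahead."""
    if z_m <= 0:
        raise ValueError("distance must be positive")
    return cy + f * h / z_m

def distance_for_row(v_px, h=CAMERA_HEIGHT_M, f=FOCAL_LENGTH_PX, cy=PRINCIPAL_ROW_PX):
    """Inverse mapping: ground distance for an image row below the horizon."""
    if v_px <= cy:
        raise ValueError("rows at or above the horizon do not meet the ground")
    return f * h / (v_px - cy)

for d in (2.0, 3.0, 4.0):
    print(f"{d:.0f} m ahead -> row {row_for_distance(d):.1f}")
```

With these illustrative values the 2 m, 3 m, and 4 m points fall on rows 440, about 373, and 340, so each additional meter occupies fewer image rows; this compression is one reason a single fixed row gives only an approximate 3 m target.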

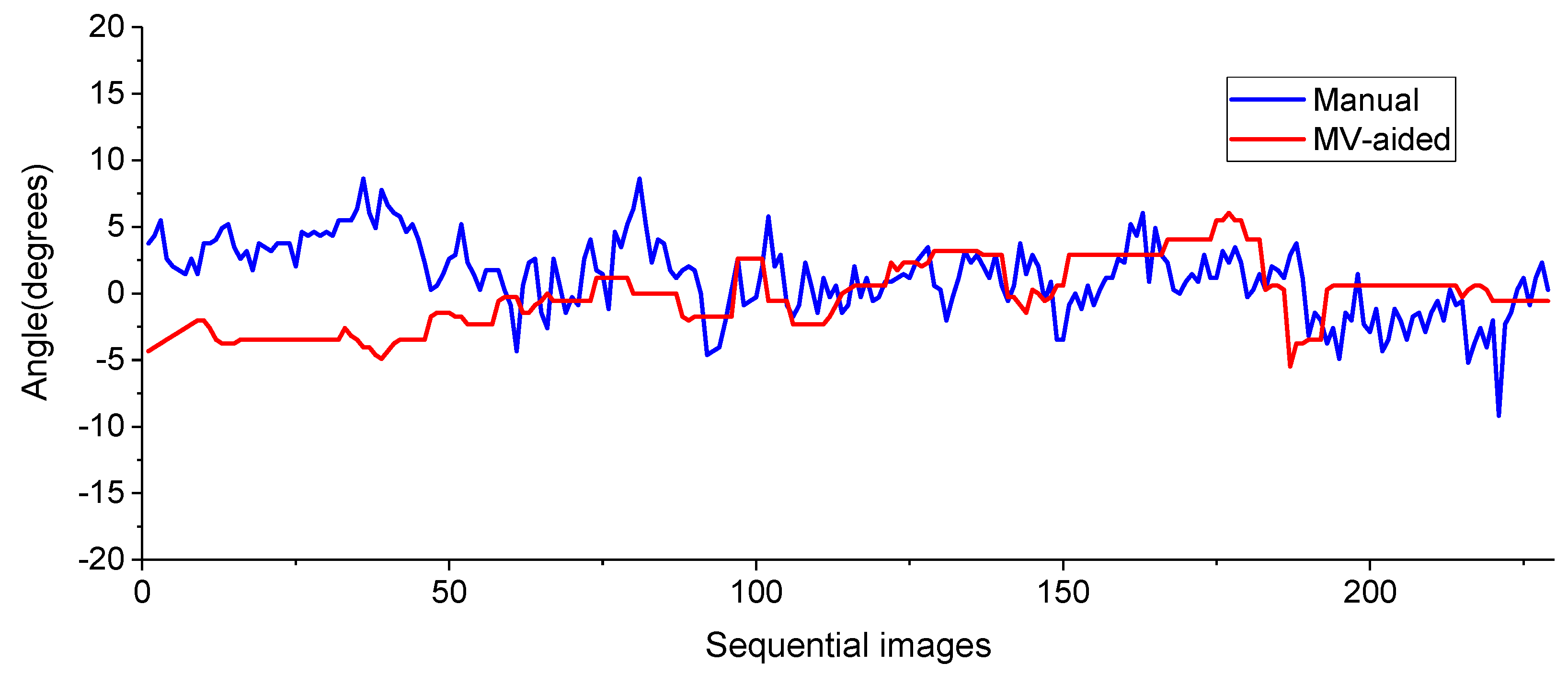

To compare the manual and MV-aided center lines quantitatively, we calculated a steering angle from the point located 3 m ahead on each center line. A target point to the left of the current camera direction line was assigned a negative steering angle, and a target point to the right a positive one. The results for the 229 images are shown in Figure 10. The graph compares how the target steering angles, derived from points on the manual and MV-aided center lines, vary over about 57 m of travel. The steering angle derived from the MV-aided center line changes more smoothly and stably than the one derived from the manual center line.
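The following Python sketch shows one way to turn trunk points into a center line and a signed steering angle. It assumes that each row line is fitted as x = a·y + b in image coordinates, that the center line is the pointwise average of the two row lines (with zero roll and flat ground, the image midpoint at a given row corresponds to the ground midpoint), and that the steering angle is the horizontal bearing atan((x − c_x)/f) of the target point. The point coordinates, c_x, f, and the target row are hypothetical.

```python
import math

def fit_line(points):
    """Least-squares fit of x = a*y + b to (x, y) image points of one trunk row."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two trunk points")
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points)
    if syy == 0:
        raise ValueError("trunk points must span more than one image row")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / syy
    return a, mean_x - a * mean_y

def center_line(left_points, right_points):
    """Pointwise average of the left and right row lines."""
    (a_l, b_l), (a_r, b_r) = fit_line(left_points), fit_line(right_points)
    return (a_l + a_r) / 2, (b_l + b_r) / 2

def steering_angle_deg(line, target_row, cx, f):
    """Bearing of the center-line point at target_row; negative = left, positive = right."""
    a, b = line
    x_target = a * target_row + b
    return math.degrees(math.atan2(x_target - cx, f))

# Hypothetical trunk lowest points (x, y) in a 640 x 480 image
LEFT = [(200, 300), (150, 350), (100, 400)]
RIGHT = [(460, 300), (510, 350), (560, 400)]
line = center_line(LEFT, RIGHT)
phi = steering_angle_deg(line, target_row=373, cx=320.0, f=500.0)
print(line, round(phi, 2))
```

Because the target lies 10 px to the right of the image center, the angle is positive (about 1.15°), matching the convention that right is positive and left is negative.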

The mean, maximum left, maximum right, and standard deviation of the 229 steering angles, together with the number of zero crossings, are shown in Table 2. The mean values for the MV-aided and manual center lines were −0.2 degrees and 1.3 degrees, respectively, and the difference between the mean of the proposed algorithm and that of the manual method based on visual inspection was about 1.1 degrees. First, for the number of zero crossings, the MV-aided center line and manual center line values were 50 and 27, respectively. In this experiment, the number of zero crossings is the number of times the sign of the target steering angle changed, that is, the number of zigzag steering corrections needed to reach the target position 3 m ahead of the camera center while the UGV travelled about 57 m. A larger number of zero crossings therefore means more zigzag steering and less stable driving. On this basis, the comparison of the number of zero crossings was taken to show that the proposed algorithm is more stable than the manual decision made with the unaided eye. The maximum left and maximum right values are the largest left and largest right steering angles among the 229 steering angles calculated to drive to the target point ahead. For the maximum left, the values for the MV-aided and manual center lines were −5.5 degrees and −9.2 degrees, respectively; for the maximum right, they were 6.1 degrees and 8.6 degrees, respectively. In both cases, the proposed algorithm required smaller steering angles than the manual decision, so this comparison also indicates that the proposed algorithm is more stable than the manual center line decision. Finally, the standard deviations of the steering angles obtained from the 229 test images were compared. The standard deviation measures the spread of the data and is defined as the square root of the variance; the smaller the standard deviation, the closer the values lie to the mean.
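The Table 2 quantities can be computed from a sequence of per-image steering angles as below. This is a sketch under stated assumptions: a zero crossing is counted as a sign change between consecutive nonzero angles, and the population standard deviation (dividing by N) is used because the text does not specify N or N − 1. The sample angles are made up for illustration.

```python
import statistics

def zero_crossings(angles):
    """Count sign changes between consecutive nonzero steering angles."""
    count, prev_sign = 0, 0
    for angle in angles:
        sign = (angle > 0) - (angle < 0)
        if sign == 0:
            continue  # an exact 0 deg sample is skipped, not counted as a crossing
        if prev_sign != 0 and sign != prev_sign:
            count += 1
        prev_sign = sign
    return count

def steering_summary(angles):
    """Table 2 style statistics for a sequence of steering angles in degrees."""
    return {
        "mean": statistics.fmean(angles),
        "max_left": min(angles),    # most negative value = largest left steer
        "max_right": max(angles),   # most positive value = largest right steer
        "std": statistics.pstdev(angles),  # population SD (assumption: divide by N)
        "zero_crossings": zero_crossings(angles),
    }

# Made-up angles for illustration only
sample = [-1.0, 0.5, 1.5, -0.5, -2.0, 0.0, 1.0]
print(steering_summary(sample))
```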

The standard deviations shown in Table 2 are the square root of the average squared deviation of the 229 steering angles, one per sample image, from their mean value. As seen in Table 2, the standard deviation for the proposed algorithm was smaller than for the manual center line decision, which indicates that the movement path information extracted by the proposed algorithm was more stable than the center line decided manually with the unaided eye. As shown in Table 2, the comparisons of maximum left, maximum right, standard deviation, and number of zero crossings all show that the MV-aided center line calculated by the proposed algorithm was more stable than the manual center line. The orchard free space and center line decision algorithm using a monocular NIR camera therefore shows the potential to guide a UGV in an apple orchard, including the noisy conditions caused by fallen branches and leaves and by a mixed ground pattern of weeds and soil.

However, the algorithm proposed in this study requires several preconditions. First, the camera tilt and roll angles must always be kept at 0° by a gimbal or an equivalent device so that the optical axis of the camera stays parallel to the ground surface. Second, if the center line is not detected because of changes in the orchard environment while driving in the free row space, feedback information such as the posture, speed, and steering angle of the UGV must be used. Third, the orchard images used in this experiment may be limited to Fuji apple trees in the Republic of Korea. Even for the same variety, the orchard environment can change with the cultivation technique and with the seasons, so the algorithm needs to be supplemented through additional experiments in various environments. The proposed algorithm is more effective in seasons when the trees have few leaves; in the leafy summer, a different image processing method may be needed, even for an apple orchard of the same region and variety. In that case, an adaptive image processing method, such as the sky-based machine vision technique, should be implemented. Another limitation of the proposed approach is that the UGV handles movement along the central path between trees but not the end of a row. The end of a tree row can be handled in several ways: the image can be checked for the absence of consecutive tree trunks on the extension of the tree row, or ultrasonic sensors can be used to recognize the end of the row. Because the proposed method uses ≈750–850 nm NIR images, active illumination at the corresponding wavelength is expected to allow images to be acquired at night, in which case the proposed algorithm could be used without modification.
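The image-based option for detecting the end of a row could be realized, for example, as a debounced check on whether any trunk point still appears in the far part of the image along the row. The class below is a hypothetical sketch of that idea, not part of the proposed algorithm; the far-region row threshold and the number of consecutive frames are assumed tuning parameters, and the input is assumed to contain only trunk points already associated with the current row line.

```python
class EndOfRowDetector:
    """Hypothetical debounced end-of-row check (illustration, not the paper's method)."""

    def __init__(self, far_row_px, frames_required=3):
        self.far_row_px = far_row_px          # rows with y <= this are the far field
        self.frames_required = frames_required
        self._misses = 0                      # consecutive frames without a far trunk

    def update(self, trunk_points):
        """trunk_points: (x, y) trunk lowest points already associated with this row.

        Returns True once no trunk has appeared in the far field for
        frames_required consecutive frames.
        """
        far_trunk_seen = any(y <= self.far_row_px for _, y in trunk_points)
        self._misses = 0 if far_trunk_seen else self._misses + 1
        return self._misses >= self.frames_required

detector = EndOfRowDetector(far_row_px=300, frames_required=3)
frames = [[(300, 250), (320, 400)], [(310, 420)], [(305, 430)], [(300, 440)]]
print([detector.update(points) for points in frames])
```

Requiring several consecutive misses keeps a single frame with an occluded or missed trunk from being mistaken for the end of the row.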

5. Conclusions

We have developed an algorithm for the autonomous orchard navigation of a UGV and compared its performance with center lines decided manually by the unaided eye. For this experiment, a monocular NIR CIS camera was used to acquire sample orchard images. The images were converted into binary images that separate the brightness region containing the tree trunks from the remaining region. The lowest point of each segment containing tree trunks was detected, and the trunk lowest points were determined using a naïve Bayesian classifier. Because the naïve Bayesian classifier applied in this study requires only a small number of training samples and a simple training process, it effectively separated trunk points from the noise points that are problematic in vision-based processing of orchards, including noise caused by small branches, soil, weeds, and tree shadows on the ground. From the classified trunk points, the trunk row lines were calculated by linear regression analysis, and the alley center line was calculated from the two trunk row lines on either side. The performance of the proposed algorithm was investigated using 229 sample images obtained from an image acquisition system. For the performance test, the stability of the center lines extracted by the manual decision and by the proposed algorithm (MV-aided) was compared in terms of the maximum left and maximum right steering angles, the standard deviation, and the number of zero crossings. For all of these parameters, the MV-aided center line was more stable than the manual center line. We expect that the proposed algorithm has the potential to provide the autonomous driving function for various working vehicles in orchards for precision agriculture.

Future research will include a method for detecting a more reliable center line and travel route by integrating the center line estimation results with driving information such as the posture, speed, and steering angle of the UGV, as well as a method for recognizing the end of a tree row.