Neutrosophic Association Rule Mining Algorithm for Big Data Analysis

Abstract

1. Introduction

- (1) Volume: big data is measured in petabytes and zettabytes.

- (2) Velocity: the accelerating speed of data flow.

- (3) Variety: the various sources and types of data requiring analysis and management.

- (4) Veracity: noise, abnormality, and biases in the generated knowledge.

- (1) Business domain.

- (2) Technology domain.

- (3) Health domain.

- (4) Smart city design.

- (1) Analytics architecture: The optimal architecture for dealing with historic and real-time data at the same time is not yet clear.

- (2) Statistical significance: Results should be statistically significant rather than the product of chance.

- (3) Distributed mining: Many data mining methods are not trivial to parallelize.

- (4) Time-evolving data: Data should be improved over time according to the field of interest.

- (5) Compression: When dealing with big data, the amount of space needed for storage is highly relevant.

- (6) Visualization: A main task of big data analysis is the visualization of results.

- (7) Hidden big data: Large amounts of beneficial data are lost because much modern data is unstructured.

Research Contribution

2. Association Rules Mining

- (i) I: the set of all possible data items, called items.

- (ii) Transaction set T: the set of domain data resulting from transactional processing, such that T ⊆ I.

- (iii) For a given itemset X ⊆ I and a given transaction T, we say that T contains X if and only if X ⊆ T.

- (iv) Support frequency of X: the number of transactions in D that contain X.

- (v) Support threshold: the minimum support an itemset must reach to be considered frequent.
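The definitions above can be illustrated with a minimal sketch. The item names and the toy database `D` are hypothetical and do not come from the article; the function names `support_count` and `is_frequent` are likewise only illustrative.

```python
from typing import FrozenSet, Iterable, List


def support_count(itemset: Iterable[str], transactions: List[FrozenSet[str]]) -> int:
    """Number of transactions T in D with X ⊆ T (definitions iii and iv)."""
    target = frozenset(itemset)
    return sum(1 for t in transactions if target <= t)


def is_frequent(itemset: Iterable[str], transactions: List[FrozenSet[str]],
                min_support: float) -> bool:
    """True if the relative support of X reaches the threshold (definition v)."""
    return support_count(itemset, transactions) / len(transactions) >= min_support


# Hypothetical transaction database D; every transaction is a subset of I.
D = [
    frozenset({"bread", "milk"}),
    frozenset({"bread", "butter", "milk"}),
    frozenset({"butter"}),
    frozenset({"bread", "butter"}),
]

print(support_count({"bread", "milk"}, D))   # transactions containing both items
print(is_frequent({"bread"}, D, 0.5))
```

Here the threshold is expressed as a fraction of the database size; an absolute count would work equally well as long as it is used consistently.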

3. Fuzzy Association Rules

4. Neutrosophic Association Rules

4.1. Neutrosophic Set Definitions and Operations

4.2. Proposed Model for Association Rule

- Step 1

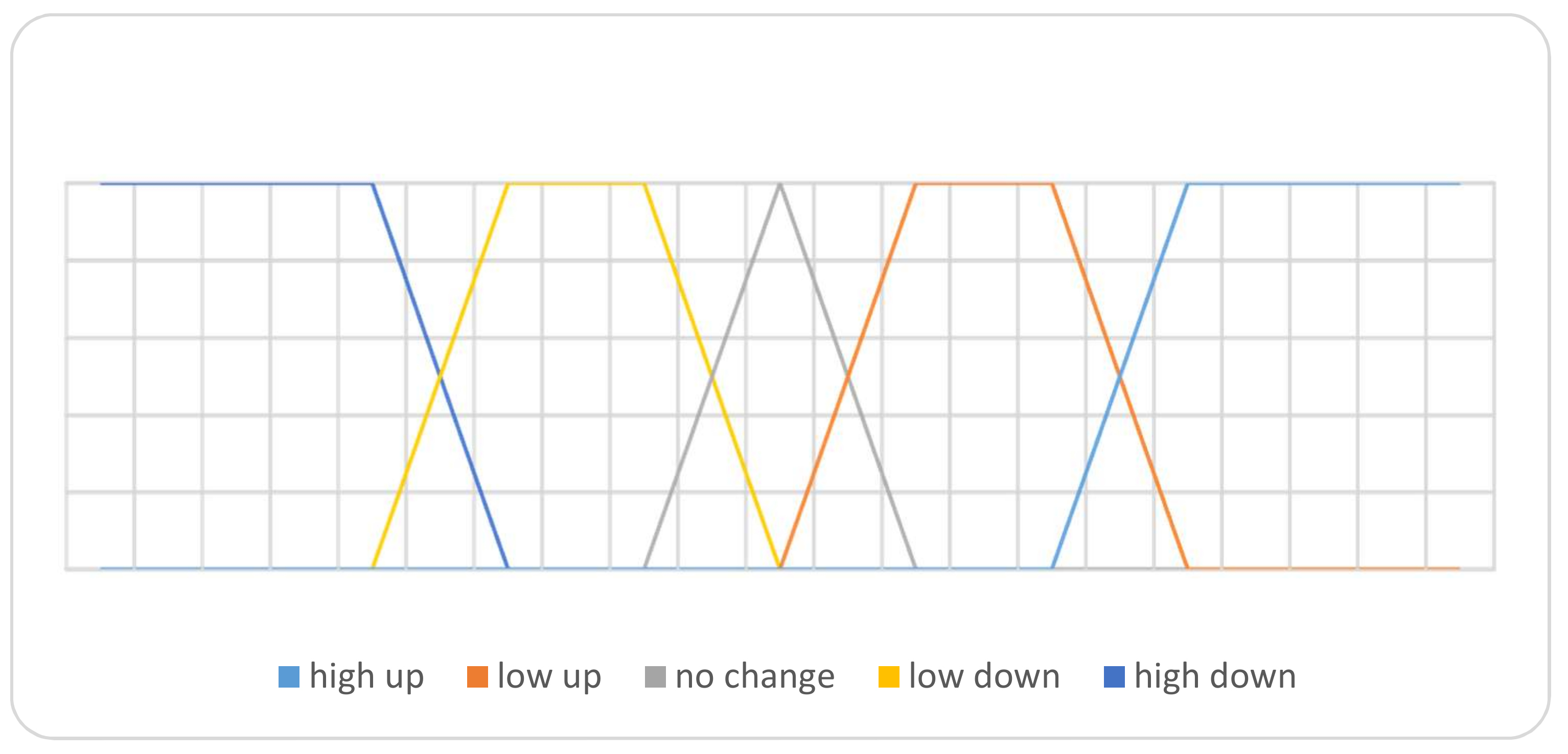

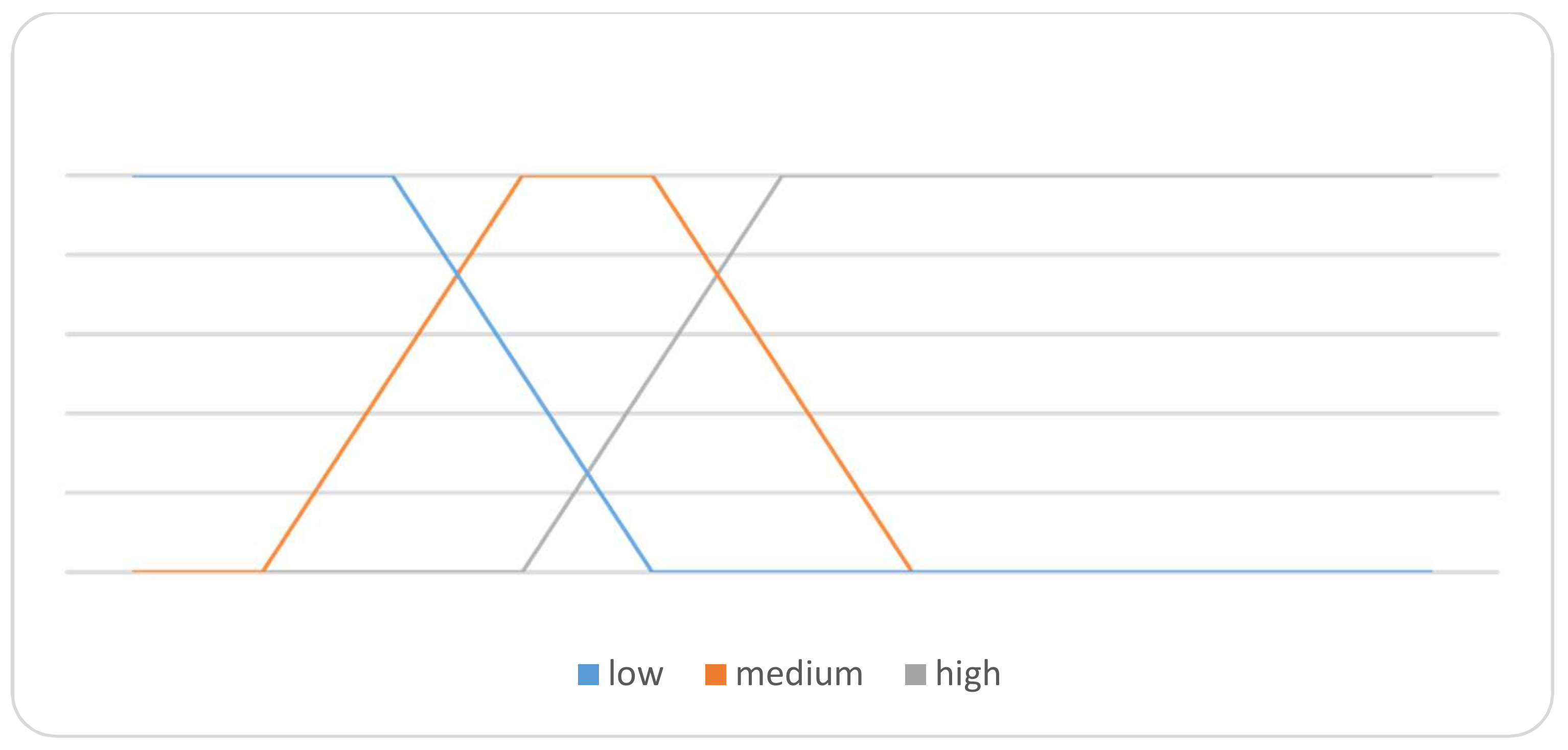

- Set linguistic terms of the variable, which will be used for quantitative attribute.

- Step 2

- Define the truth, indeterminacy, and the falsity membership functions for each constructed linguistic term.

- Step 3

- For each transaction in , compute the truth-membership, indeterminacy-membership and falsity-membership degrees.

- Step 4

- Extend each linguistic term l in set of linguistic terms L into TL, IL, and FL to denote truth-membership, indeterminacy-membership, and falsity-membership functions, respectively.

- Step 5

- For each item set where , and number of iterations.

- calculate count of each linguistic term by summing degrees of membership for each transaction as where is or .

- calculate support for each linguistic term

- Step 6

- The above procedure has been repeated for every quantitative attribute in the database.

- Step 1

- The attribute “temperature’ has set the linguistic terms “very cold”, “cold”, “cool”, “warm”, and “hot”, and their ranges are defined in Table 4.

- Step 2

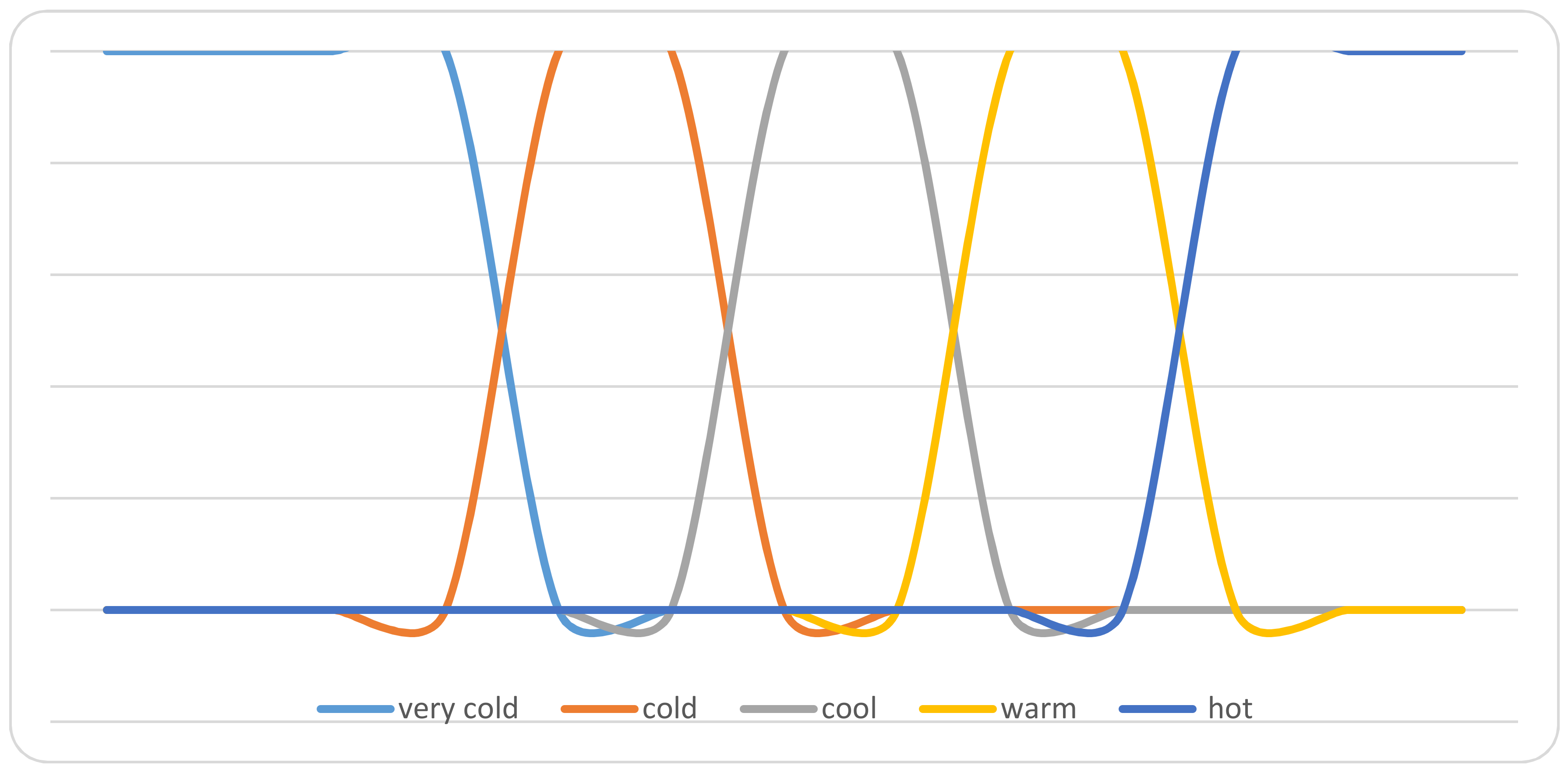

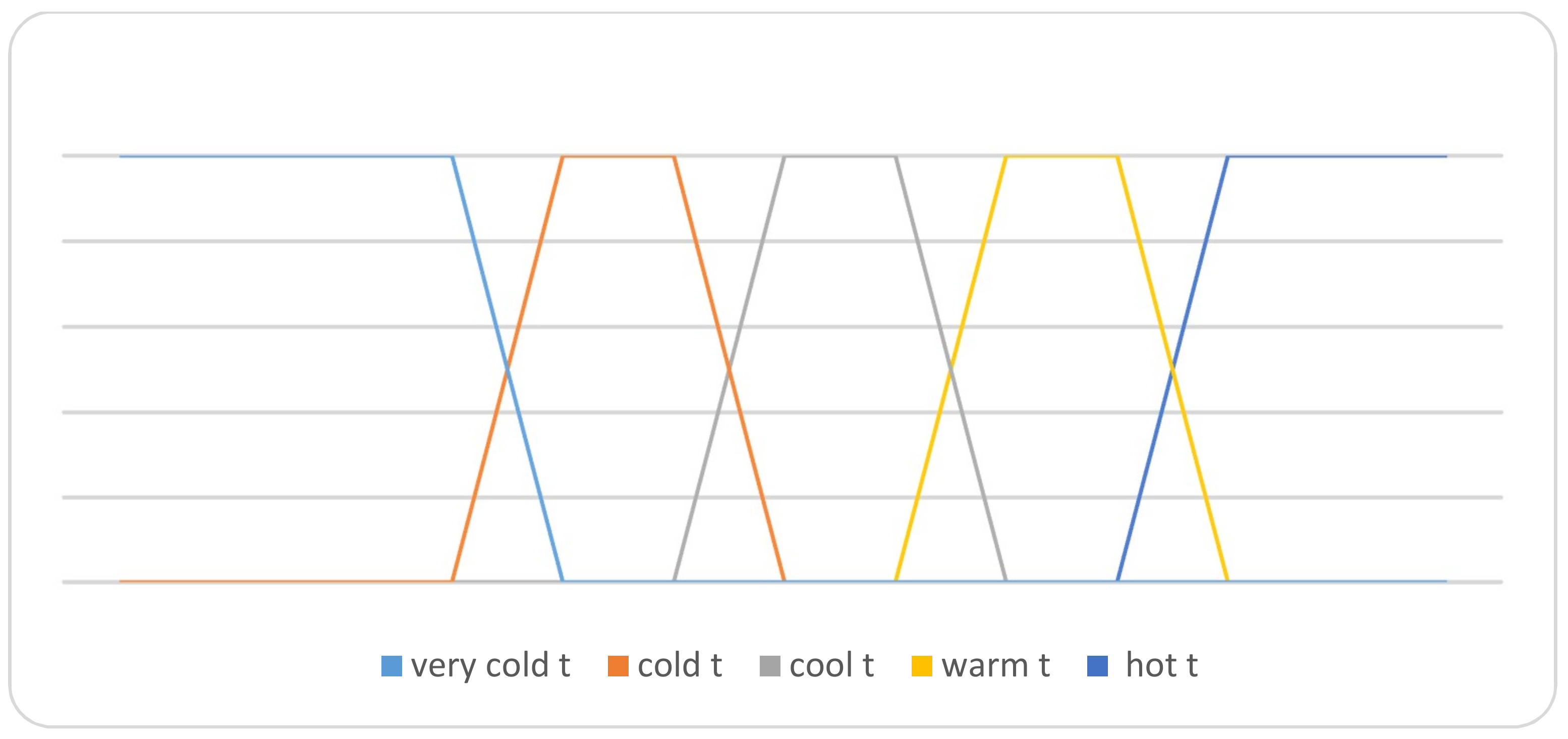

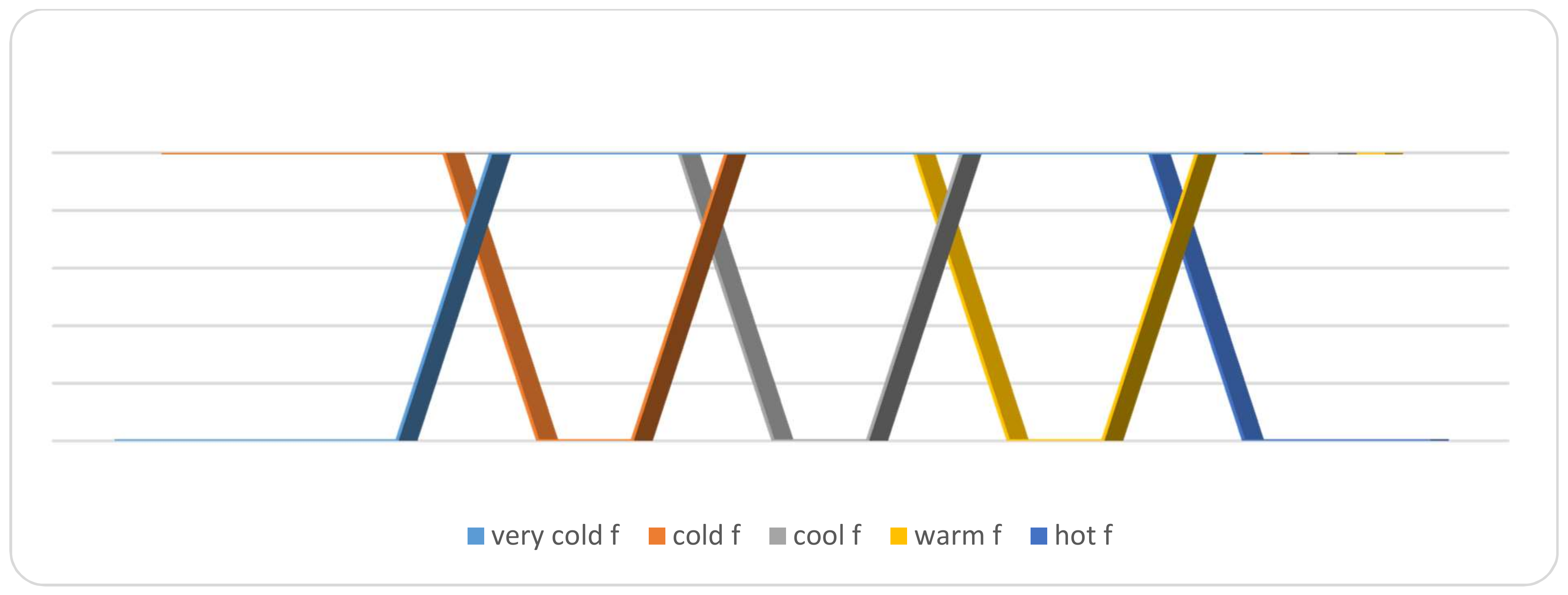

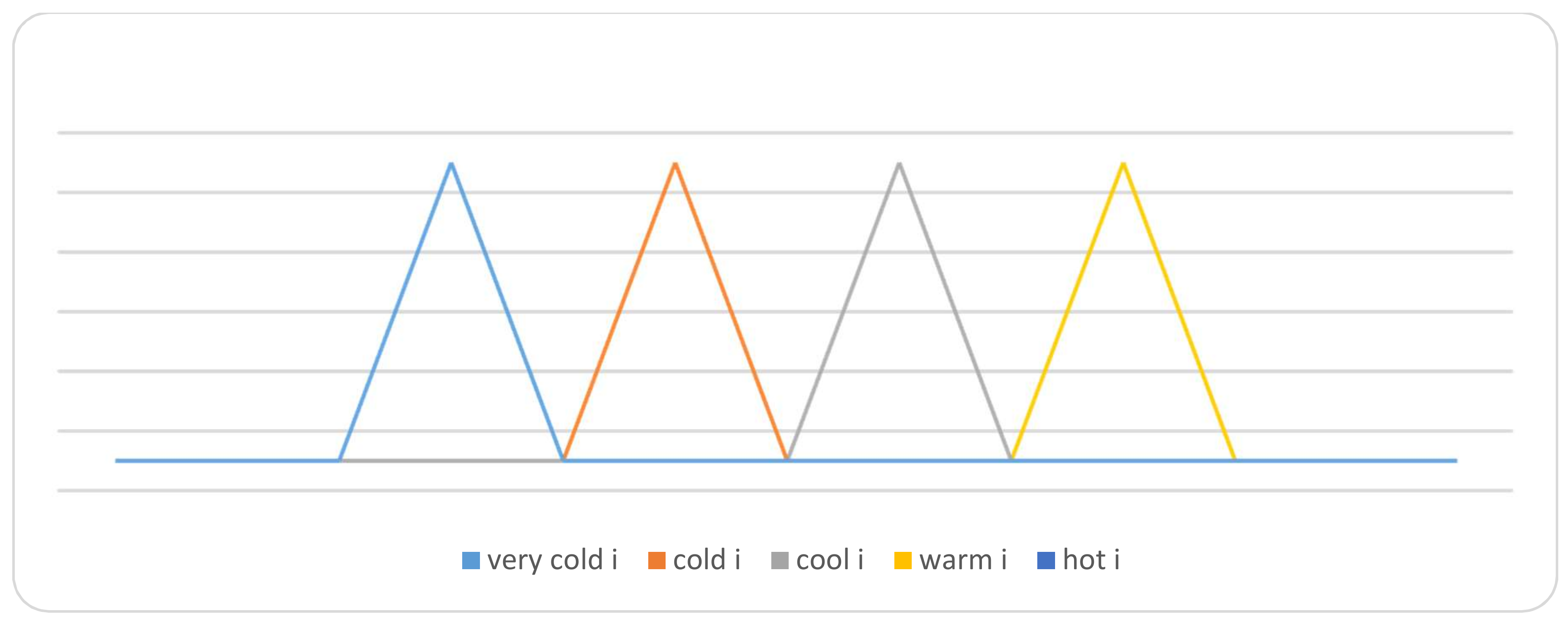

- Based on these linguistic term ranges, the truth-membership functions of each linguistic variable are defined, as follows:

- Step 3

- Based on the membership grades, different transaction has been set up by taking different sets of the temperatures. The membership grades in terms of the neutrosophic sets of these transactions are summarized in Table 5.

- Step 4

- Now, we count the set of linguistic terms {very cold, cold, cool, warm, hot} for every element in transactions. Since the truth, falsity, and indeterminacy-memberships are independent functions, the set of linguistic terms can be extended to where means not worm and means not sure of warmness. This enhances dealing with negative association rules, which is handled as positive rules without extra calculations.

- Step 5

5. Case Study

6. Experimental Results

7. Conclusions and Future Work

Author Contributions

Conflicts of Interest


| Transaction | Temp. | Membership Degree |
|---|---|---|
| T1 | 18 | 1 cool |
| T2 | 13 | 0.6 cool, 0.4 cold |
| T3 | 12 | 0.4 cool, 0.6 cold |
| T4 | 33 | 0.4 warm, 0.6 hot |
| T5 | 21 | 0.2 warm, 0.8 cool |
| T6 | 25 | 1 warm |

| 1-itemset | Count | Support |
|---|---|---|
| Very cold | 0 | 0 |
| Cold | 1 | 0.17 |
| Cool | 2.8 | 0.47 |
| Warm | 1.6 | 0.27 |
| Hot | 0.6 | 0.1 |

| 2-itemset | Count | Support |
|---|---|---|
| {Cold, Cool} | 0.8 | 0.13 |
| {Warm, Hot} | 0.4 | 0.07 |
| {Warm, Cool} | 0.2 | 0.03 |
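The counts in the two fuzzy itemset tables above can be reproduced with a short sketch. It assumes that a 1-itemset count is the sum of membership degrees over all transactions and that a 2-itemset count is the sum of the per-transaction minimum of the two degrees; the minimum operator is an inference from the reported numbers, not something stated in the surviving text. The names `FUZZY_ROWS`, `fuzzy_count`, and `fuzzy_support` are illustrative.

```python
# Fuzzy membership degrees per transaction (terms not listed have degree 0).
FUZZY_ROWS = [
    {"cool": 1.0},                # T1, temperature 18
    {"cool": 0.6, "cold": 0.4},   # T2, temperature 13
    {"cool": 0.4, "cold": 0.6},   # T3, temperature 12
    {"warm": 0.4, "hot": 0.6},    # T4, temperature 33 (the split that matches
                                  # the reported Warm and Hot counts)
    {"warm": 0.2, "cool": 0.8},   # T5, temperature 21
    {"warm": 1.0},                # T6, temperature 25
]


def fuzzy_count(itemset):
    """Sum over transactions of the smallest degree among the itemset's terms."""
    total = sum(min(row.get(term, 0.0) for term in itemset) for row in FUZZY_ROWS)
    return round(total, 2)


def fuzzy_support(itemset):
    """Count divided by the number of transactions, rounded as in the tables."""
    return round(fuzzy_count(itemset) / len(FUZZY_ROWS), 2)


for itemset in [("cool",), ("warm",), ("cold", "cool"), ("warm", "hot")]:
    print(itemset, fuzzy_count(itemset), fuzzy_support(itemset))
```

For a single term the minimum reduces to the term's own degree, so the same function covers both tables.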

| Linguistic Term | Core Range | Left Boundary Range | Right Boundary Range |
|---|---|---|---|
| Very Cold | −∞–0 | N/A | 0–5 |
| Cold | 5–10 | 0–5 | 10–15 |
| Cool | 15–20 | 10–15 | 20–25 |
| Warm | 25–30 | 20–25 | 30–35 |
| Hot | 35–∞ | 30–35 | N/A |
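As a rough illustration of how the ranges above can be turned into truth-membership degrees, the sketch below assumes a piecewise-linear (trapezoidal) shape: degree 1 inside the core range and a linear ramp across each boundary range. The article's actual membership functions are given only as figures, so this shape is an assumption, although it does reproduce the degrees reported for the sample temperatures (for example 13 gives 0.6 cool and 0.4 cold). The names `TERMS` and `truth` are illustrative.

```python
import math

# (left foot, core start, core end, right foot) for each linguistic term,
# read off the ranges table; infinities mark open-ended cores.
TERMS = {
    "very cold": (-math.inf, -math.inf, 0.0, 5.0),
    "cold": (0.0, 5.0, 10.0, 15.0),
    "cool": (10.0, 15.0, 20.0, 25.0),
    "warm": (20.0, 25.0, 30.0, 35.0),
    "hot": (30.0, 35.0, math.inf, math.inf),
}


def truth(term: str, x: float) -> float:
    """Assumed trapezoidal truth-membership degree of temperature x in term."""
    left, core_lo, core_hi, right = TERMS[term]
    if core_lo <= x <= core_hi:
        return 1.0                                   # inside the core range
    if left < x < core_lo:
        return (x - left) / (core_lo - left)         # rising left boundary
    if core_hi < x < right:
        return (right - x) / (right - core_hi)       # falling right boundary
    return 0.0


for temp in (18, 13, 12, 33, 21, 25):
    degrees = {t: round(truth(t, temp), 2) for t in TERMS if truth(t, temp) > 0}
    print(temp, degrees)
```

Only the truth component is sketched here; the indeterminacy degrees in the article do not follow from the ranges table alone, so they are left out.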

| Transaction | Temp. | Membership Degree |
|---|---|---|
| T1 | 18 | Very cold <0,0,1> cold <0,0,1> cool <1,0.1,0> warm <0,0.1,1> hot <0,0,1> |
| T2 | 13 | Very cold <0,0,1> cold <0.4,0.9,0.6> cool <0.6,0.9,0.4> warm <0,0,1> hot <0,0,1> |
| T3 | 12 | Very cold <0,0,1> cold <0.6,0.9,0.4> cool <0.4,0.9,0.6> warm <0,0,1> hot <0,0,1> |
| T4 | 33 | Very cold <0,0,1> cold <0,0,1> cool <0,0,1> warm <0.4,0.9,0.6> hot <0.6,0.9,0.4> |
| T5 | 21 | Very cold <0,0,1> cold <0,0,1> cool <0.8,0.7,0.2> warm <0.2,0.7,0.8> hot <0,0,1> |
| T6 | 25 | Very cold <0,0,1> cold <0,0,1> cool <0,0,1> warm <1,0.5,0> hot <0,0,1> |

| 1-itemset | Count | Support |
|---|---|---|
| Tverycold | 0 | 0 |
| TCold | 1 | 0.17 |
| TCool | 2.8 | 0.47 |
| TWarm | 1.6 | 0.27 |
| THot | 0.6 | 0.1 |
| Iverycold | 0 | 0 |
| ICold | 1.8 | 0.3 |
| ICool | 2.6 | 0.43 |
| IWarm | 2.2 | 0.37 |
| IHot | 0.9 | 0.15 |
| Fverycold | 6 | 1 |
| FCold | 5 | 0.83 |
| FCool | 3.2 | 0.53 |
| FWarm | 4.4 | 0.73 |
| FHot | 5.4 | 0.9 |

| 2-itemset | Count | Support | 2-itemset | Count | Support |
|---|---|---|---|---|---|
| {TCold, TCool} | 0.8 | 0.13 | {ICold, ICool} | 1.8 | 0.30 |
| {TCold, ICold} | 1 | 0.17 | {ICold, Fverycold} | 1.8 | 0.30 |
| {TCold, ICool} | 1 | 0.17 | {ICold, FCold} | 1 | 0.17 |
| {TCold, Fverycold} | 1 | 0.17 | {ICold, FCool} | 1 | 0.17 |
| {TCold, FCold} | 0.8 | 0.13 | {ICold, FWarm} | 1.8 | 0.30 |
| {TCold, FCool} | 1 | 0.17 | {ICold, FHot} | 1.8 | 0.30 |
| {TCold, FWarm} | 1 | 0.17 | {ICool, IWarm} | 0.8 | 0.13 |
| {TCold, FHot} | 1 | 0.17 | {ICool, Fverycold} | 2.6 | 0.43 |
| {TCool, TWarm} | 0.2 | 0.03 | {ICool, FCold} | 1.8 | 0.30 |
| {TCool, ICold} | 1 | 0.17 | {ICool, FCool} | 1.2 | 0.20 |
| {TCool, ICool} | 1.8 | 0.30 | {ICool, FWarm} | 2.6 | 0.43 |
| {TCool, IWarm} | 0.8 | 0.13 | {ICool, FHot} | 2.6 | 0.43 |
| {TCool, Fverycold} | 2.8 | 0.47 | {IWarm, IHot} | 0.9 | 0.15 |
| {TCool, FCold} | 2.8 | 0.47 | {IWarm, Fverycold} | 2.2 | 0.37 |
| {TCool, FCool} | 1 | 0.17 | {IWarm, FCold} | 2.2 | 0.37 |
| {TCool, FWarm} | 2.8 | 0.47 | {IWarm, FCool} | 1.6 | 0.27 |
| {TCool, FHot} | 2.8 | 0.47 | {IWarm, FWarm} | 1.4 | 0.23 |
| {TWarm, THot} | 0.4 | 0.07 | {IWarm, FHot} | 1.7 | 0.28 |
| {TWarm, ICool} | 0.2 | 0.03 | {IHot, Fverycold} | 0.9 | 0.15 |
| {TWarm, IWarm} | 1.1 | 0.18 | {IHot, FCold} | 0.9 | 0.15 |
| {TWarm, IHot} | 0.4 | 0.07 | {IHot, FCool} | 0.9 | 0.15 |
| {TWarm, Fverycold} | 1.6 | 0.27 | {IHot, FWarm} | 0.6 | 0.10 |
| {TWarm, FCold} | 1.6 | 0.27 | {IHot, FHot} | 0.4 | 0.07 |
| {TWarm, FCool} | 1.6 | 0.27 | {Fverycold, FCold} | 5 | 0.83 |
| {TWarm, FWarm} | 0.6 | 0.10 | {Fverycold, FCool} | 3.2 | 0.53 |
| {TWarm, FHot} | 1.6 | 0.27 | {Fverycold, FWarm} | 4.4 | 0.73 |
| {THot, IWarm} | 0.6 | 0.10 | {Fverycold, FHot} | 5.4 | 0.90 |
| {THot, IHot} | 0.6 | 0.10 | {FCold, FCool} | 3 | 0.50 |
| {THot, Fverycold} | 0.6 | 0.10 | {FCold, FWarm} | 3.4 | 0.57 |
| {THot, FCold} | 0.6 | 0.10 | {FCold, FHot} | 4.4 | 0.73 |
| {THot, FCool} | 0.6 | 0.10 | {FCool, FWarm} | 1.8 | 0.30 |
| {THot, FWarm} | 0.6 | 0.10 | {FCool, FHot} | 2.6 | 0.43 |
| {THot, FHot} | 0.4 | 0.07 | {FWarm, FHot} | 4.2 | 0.70 |
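The neutrosophic itemset counts can be sketched in the same spirit. The block below expands every linguistic term into its T, I, and F items, then counts an itemset by summing the per-transaction minimum of its degrees. The minimum operator is again inferred from the reported numbers rather than stated in the surviving text, and the names `ROWS`, `expand`, `count`, and `support` are illustrative. Under these assumptions the sketch reproduces entries such as {TCold, TCool} = 0.8 and {TWarm, IWarm} = 1.1.

```python
TERMS = ("verycold", "Cold", "Cool", "Warm", "Hot")
ABSENT = (0.0, 0.0, 1.0)  # <T, I, F> for a term that does not apply at all

# <T, I, F> triples per transaction; unlisted terms default to ABSENT.
ROWS = [
    {"Cool": (1.0, 0.1, 0.0), "Warm": (0.0, 0.1, 1.0)},   # T1, 18
    {"Cold": (0.4, 0.9, 0.6), "Cool": (0.6, 0.9, 0.4)},   # T2, 13
    {"Cold": (0.6, 0.9, 0.4), "Cool": (0.4, 0.9, 0.6)},   # T3, 12
    {"Warm": (0.4, 0.9, 0.6), "Hot": (0.6, 0.9, 0.4)},    # T4, 33
    {"Cool": (0.8, 0.7, 0.2), "Warm": (0.2, 0.7, 0.8)},   # T5, 21
    {"Warm": (1.0, 0.5, 0.0)},                            # T6, 25
]


def expand(row):
    """Turn one transaction into degrees for items such as TCool, ICool, FCool."""
    items = {}
    for term in TERMS:
        t, i, f = row.get(term, ABSENT)
        items["T" + term], items["I" + term], items["F" + term] = t, i, f
    return items


EXPANDED = [expand(row) for row in ROWS]


def count(itemset):
    """Sum over transactions of the smallest degree among the itemset's items."""
    return round(sum(min(row[item] for item in itemset) for row in EXPANDED), 2)


def support(itemset):
    """Count divided by the number of transactions, rounded to two decimals."""
    return round(count(itemset) / len(EXPANDED), 2)


for itemset in [("TCool",), ("FWarm",), ("TCold", "TCool"), ("TCool", "FWarm")]:
    print(itemset, count(itemset), support(itemset))
```

An itemset such as {TCool, FWarm} reads as "cool and not warm", which is how a negative association is counted with the same routine as a positive one.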

| Ts_Date | Month | Quarter | Change | Volume | Change30 | Change70 | Change100 |
|---|---|---|---|---|---|---|---|
| 13 September 2012 | September | 3 | 0.64 | 0.03 | −1.11 | 0.01 | −0.43 |
| 16 September 2012 | September | 3 | 0.07 | 0.02 | 2.82 | 4.50 | 3.67 |
| 17 September 2012 | September | 3 | 3.47 | 0.12 | 1.27 | 0.76 | 0.81 |
| 18 September 2012 | September | 3 | 1.38 | 0.03 | −0.08 | −0.48 | −0.43 |
| 19 September 2012 | September | 3 | −1.48 | 0.02 | 0.35 | −1.10 | −0.64 |
| 20 September 2012 | September | 3 | 0.47 | 0.05 | −1.41 | −1.64 | −1.55 |
| 23 September 2012 | September | 3 | 3.64 | 0.02 | −0.21 | 1.00 | 0.41 |
| 24 September 2012 | September | 3 | −0.47 | 0.05 | 0.27 | −0.09 | 0.03 |
| 25 September 2012 | September | 3 | −2.77 | 0.15 | 2.15 | 1.79 | 1.85 |
| 26 September 2012 | September | 3 | 1.96 | 0.04 | 0.22 | 0.96 | 0.57 |
| 27 September 2012 | September | 3 | 0.90 | 0.05 | −1.38 | −0.88 | −0.92 |
| 30 September 2012 | September | 3 | −0.14 | 0.00 | −1.11 | −0.79 | −0.75 |
| 1 October 2012 | October | 4 | −1.60 | 0.02 | −2.95 | −4.00 | −3.51 |

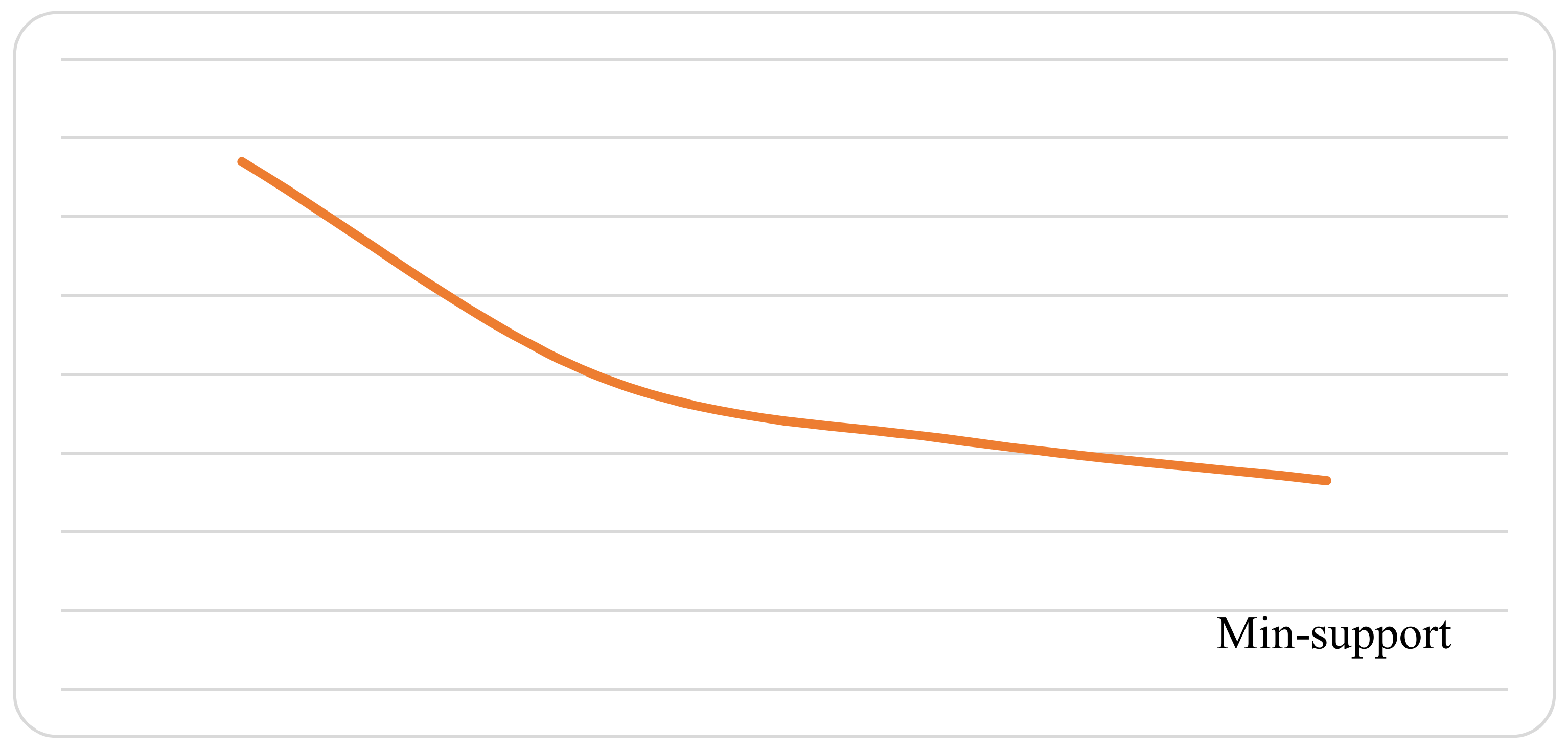

| Min-Support | 0.02 | 0.03 | 0.04 | 0.05 |
|---|---|---|---|---|
| 1-itemset | 10 | 10 | 10 | 10 |
| 2-itemset | 37 | 36 | 36 | 33 |
| 3-itemset | 55 | 29 | 15 | 10 |
| 4-itemset | 32 | 4 | 2 | 0 |

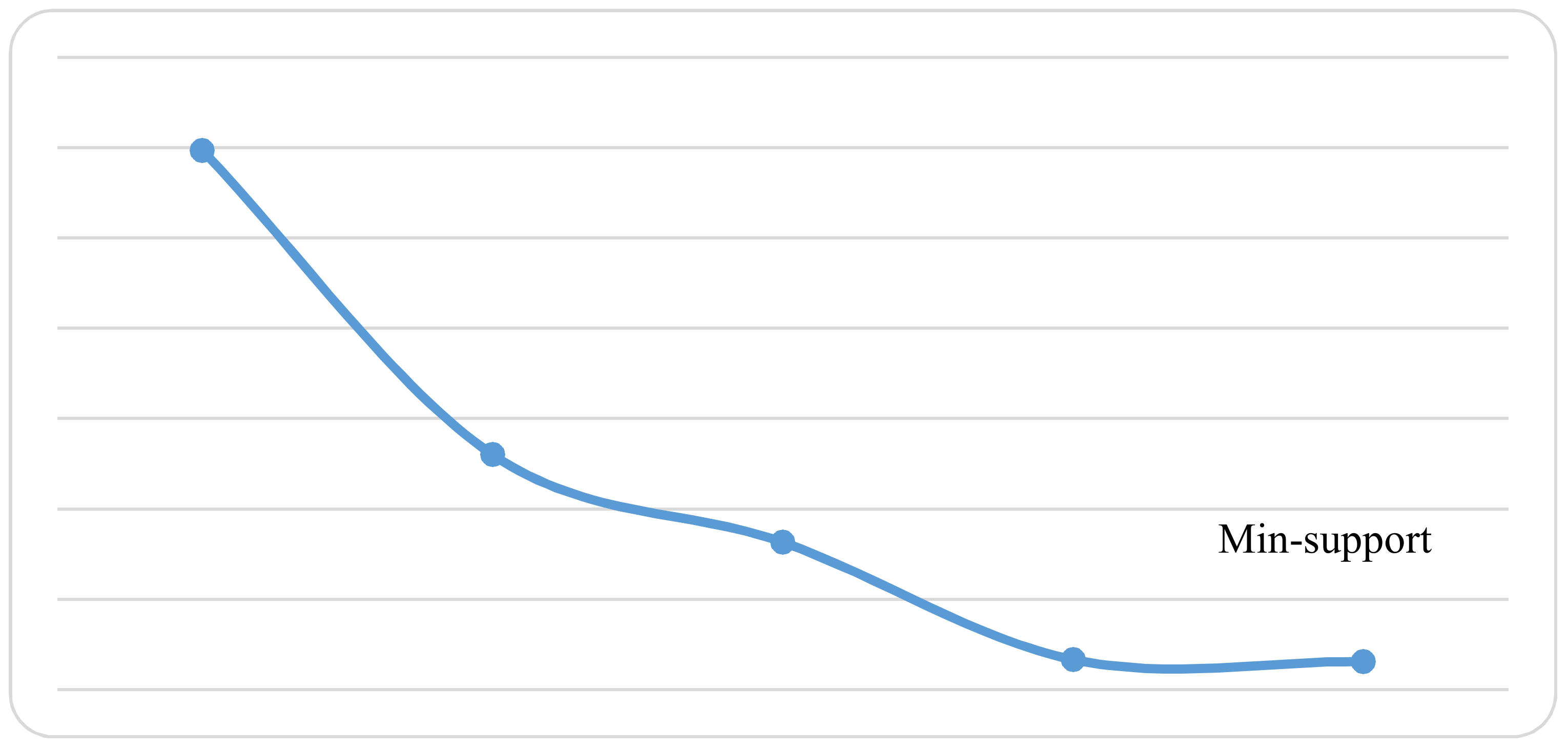

| Min-Support | 0.02 | 0.03 | 0.04 | 0.05 |
|---|---|---|---|---|
| 1-itemset | 26 | 26 | 26 | 26 |
| 2-itemset | 313 | 311 | 309 | 300 |
| 3-itemset | 2293 | 2164 | 2030 | 1907 |
| 4-itemset | 11233 | 9689 | 8523 | 7768 |

| Min-Support | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 |
|---|---|---|---|---|---|
| 1-itemset | 11 | 9 | 9 | 6 | 5 |
| 2-itemset | 50 | 33 | 30 | 11 | 10 |
| 3-itemset | 122 | 64 | 50 | 10 | 10 |
| 4-itemset | 175 | 71 | 45 | 5 | 5 |
| 5-itemset | 151 | 45 | 21 | 1 | 1 |
| 6-itemset | 88 | 38 | 8 | 0 | 0 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Abdel-Basset, M.; Mohamed, M.; Smarandache, F.; Chang, V. Neutrosophic Association Rule Mining Algorithm for Big Data Analysis. Symmetry 2018, 10, 106. https://doi.org/10.3390/sym10040106
