1. Introduction

One of the main assumptions in economics, and especially in large population models, is that economic agents interact globally. In this sense, agents do not care with whom they interact; what matters is only how the overall population behaves. In many economic applications this assumption seems appropriate. For example, when modelling the interaction of merchants, what really matters is the actual distribution of bids and asks and not the identities of the buyers and sellers. However, there are situations in which it is more plausible that economic agents interact only with a small subgroup of the overall population. For instance, think of the choice of a text editing programme from a set of (to a certain degree) incompatible programmes, e.g., LaTeX, MS-Word, and Scientific Workplace. This choice will probably be influenced more by the technology standard used by the people one works with than by the overall distribution of technology standards. Similarly, it is reasonable to think that family members, neighbors, or business partners interact more often with each other than with anybody chosen randomly from the entire population. In such situations we speak of “local interactions”.

Further, note that in many situations people can benefit from coordinating on the same action. Typical examples include common technology standards, e.g., the aforementioned choice of a text editing programme; common legal standards, e.g., driving on the left versus the right side of the road; or common social norms, e.g., the affirmative versus the disapproving meaning of shaking one’s head in different parts of the world. These situations give rise to coordination games, in which the problem of equilibrium selection is probably most evident: classical game theory cannot tell which convention or equilibrium will eventually arise, because no equilibrium refinement concept can discard a strict Nash equilibrium.

This paper aims at providing a detailed overview of the answers that models of local interaction can give to the question of which equilibrium will be adopted in the long run. We further provide insight into the main technical tools employed, the main forces at work, and the most prominent results of the game theoretic literature on coordination games under local interactions. Jackson [2], Goyal [3], and Vega-Redondo [4] also provide surveys on the topic of networks and local interactions. These authors consider economics and networks in general, whereas we concentrate almost entirely on coordination games under local interactions. This allows us to give a more detailed picture of the literature within this particular area.

Starting with the seminal works of Foster and Young [5], Kandori, Mailath, and Rob [6] (henceforth KMR), and Young [7], a growing literature on equilibrium selection in models of bounded rationality has evolved over the past two decades. Typically, in these models a finite set of players is pairwise matched according to some matching rule, and each pair plays a coordination game in discrete time. Rather than assuming that players are fully rational, these models postulate a certain degree of bounded rationality: instead of reasoning about other players’ future behavior, players just use simple adjustment rules.

This survey concentrates on two prominent dynamic adjustment rules used in these models of bounded rationality. The first is based on myopic best reply, as, e.g., in Ellison [1,9] or Kandori and Rob [10,11]. Under myopic best response learning, players play a best response to the current strategies of their opponents. This is meant to capture the idea that players cannot forecast what their opponents will do and, hence, react to the current distribution of play. The second dynamic is imitative, as, e.g., in KMR, Robson and Vega-Redondo [12], Eshel, Samuelson, and Shaked [13], or Alós-Ferrer and Weidenholzer [14,15]. Under imitation rules, players merely mimic the most successful behavior they observe. While myopic best response assumes a certain degree of rationality and knowledge of the underlying game, imitation is an even more “boundedly rational” rule of thumb and can be justified by a lack of information or by the presence of decision costs.

Both myopic best reply and imitation rules give rise to an adjustment process that depends only on the distribution of play in the previous period, i.e., a Markov process. For coordination games this process will (after some time) converge to a convention, i.e., a state where all players use the same strategy. Further, once the process has settled down at a convention it will stay there forever. To which particular convention the process converges depends on the initial distribution of play across players. Hence, the process exhibits a high degree of path dependence. KMR and Young [7] introduce the possibility of mistakes on the side of players. With probability $\varepsilon > 0$, each period each player makes a mistake, i.e., he chooses a strategy different from the one specified by the adjustment process. In the presence of such mistakes the process may jump from one convention to another. As the probability of mistakes converges to zero, the invariant distribution of this Markov process singles out a prediction for the long run behavior of the population, i.e., the Long Run Equilibrium (LRE). Hence, models of bounded rationality can give equilibrium predictions even in the presence of multiple strict Nash equilibria.

However, explicitly calculating the invariant distribution of the process is not tractable for a large class of models. Fortunately, the work of Freidlin and Wentzell [16] provides an easy algorithm that allows us to find the LRE directly. This algorithm was first applied in an economic context by KMR and Young [7] and has been further developed and improved by Ellison [1]. In a nutshell, Freidlin and Wentzell [16] and Ellison [1] show that a profile is an LRE if it can be reached relatively easily from other profiles by means of independent mistakes while, at the same time, it is relatively difficult to leave that profile through independent mistakes.
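To make this intuition concrete, the following minimal Python sketch collapses the dynamics into a hypothetical two-state chain between two conventions $A$ and $B$. It assumes (our simplification, not part of the cited results) that leaving a convention requires a fixed number of simultaneous mistakes and happens with probability $\varepsilon$ raised to that number; the function name `long_run_share_of_a` is ours.

```python
def long_run_share_of_a(eps, leave_a, leave_b):
    """Stationary probability of convention A in a hypothetical two-state chain.

    leave_a: number of simultaneous mistakes needed to leave A
    leave_b: number of simultaneous mistakes needed to leave B
    Each jump is assumed to occur with probability eps ** (number of mistakes).
    """
    p_ab = eps ** leave_a  # jump A -> B
    p_ba = eps ** leave_b  # jump B -> A
    # Balance condition of a two-state chain: pi_A * p_ab = pi_B * p_ba.
    return p_ba / (p_ab + p_ba)


for eps in (0.1, 0.01, 0.001):
    # A is hard to leave (3 mistakes) and easy to reach (1 mistake from B).
    print(eps, long_run_share_of_a(eps, leave_a=3, leave_b=1))
```

As `eps` shrinks, the stationary weight on $A$ tends to one: the convention that is easy to reach and hard to leave is the one singled out in the limit.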

KMR, Kandori and Rob [10,11], and Ellison [1] study the case where players interact globally. In short, risk dominance in $2\times 2$ games and $\frac12$-dominance in $n\times n$ games turn out to be the main criteria for equilibrium selection under global interactions. A strategy is said to be risk dominant in the sense of Harsanyi and Selten [17] if it is a best response against a player playing both strategies with probability $\frac12$. Morris, Rob, and Shin’s [18] concept of $p$-dominance generalizes the notion of risk dominance to general $n\times n$ games. A strategy $s$ is $p$-dominant if it is the unique best response against all mixed strategy profiles involving at least a probability of $p$ on $s$. The reason for the selection of risk dominant (or $\frac12$-dominant) conventions is that, from any other state, less than one half of the population has to be shifted (to the risk dominant strategy) for the risk dominant convention to be established. By contrast, to upset the state where everybody plays the risk dominant strategy, more than half of the population has to adopt a different strategy.
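The half-of-the-population argument can be checked numerically. The sketch below is a simplified global model of our own, not KMR's exact specification: every player best responds to the share of $A$-players in the whole population (ties broken toward $A$), and each player then independently switches to the other strategy by mistake with probability `eps`. Because everybody faces the same share, play collapses to a convention after each best reply step, so the long run weight on the all-$A$ convention follows from two binomial probabilities. Payoffs are written $u(A,A)=a$, $u(A,B)=b$, $u(B,A)=c$, $u(B,B)=d$; all function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def threshold(a, b, c, d):
    """Smallest share of A-opponents at which A is a best reply (needs a > c, d > b)."""
    return Fraction(d - b, (a - c) + (d - b))


def is_risk_dominant_a(a, b, c, d):
    # A strictly best against the (1/2, 1/2) mixture iff the threshold is below 1/2
    return threshold(a, b, c, d) < Fraction(1, 2)


def binomial_prob(n, eps, event):
    """P(event(X)) for X ~ Binomial(n, eps), X being the number of mistakes."""
    return sum(comb(n, x) * eps**x * (1 - eps) ** (n - x)
               for x in range(n + 1) if event(x))


def global_share_of_a(a, b, c, d, n, eps):
    """Long run weight on all-A in the simplified global model described above."""
    q = threshold(a, b, c, d)
    # From all-A: x players err to B; the population leaves A iff (n - x)/n < q.
    p_ab = binomial_prob(n, eps, lambda x: Fraction(n - x, n) < q)
    # From all-B: x players err to A; A becomes the best reply iff x/n >= q.
    p_ba = binomial_prob(n, eps, lambda x: Fraction(x, n) >= q)
    return p_ba / (p_ab + p_ba)


# Hypothetical game: A is risk dominant, B is payoff dominant (4 > 3).
print(is_risk_dominant_a(3, 2, 0, 4))
for eps in (0.2, 0.05, 0.01):
    print(eps, global_share_of_a(3, 2, 0, 4, n=10, eps=eps))
```

Here $q^* = 2/5$ and $N = 10$, so leaving all-$A$ takes at least 7 mistakes while reaching it takes 4; the weight on the risk dominant convention therefore approaches one as `eps` shrinks, even though $B$ is payoff dominant.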

There are, however, three major drawbacks of these global interactions models. First, the speed at which the dynamic process converges to its long run limit depends on the population size. Hence, in large populations the long run prediction might not be observed within any reasonable amount of time (for economic applications). Second, Bergin and Lipman [19] have shown that the model’s predictions are not independent of the underlying specification of noise. Third, Kim and Wong [20] have argued that the model is not robust to the addition of strictly dominated strategies.

Ellison [9] studies a local interactions model where the players are arranged on a circle, with each player interacting only with a few neighbors. Note that under local interactions a risk dominant (or $\frac12$-dominant) strategy may spread out contagiously from an initially small subset adopting it. To see this point, note that if half of a player’s neighbors play the risk dominant strategy, it is optimal for him to play the risk dominant strategy as well. Hence, small clusters of agents using the risk dominant strategy will grow until they have taken over the entire population. This observation has two important consequences. First, it is relatively easy to move into the basin of attraction of the risk dominant convention. Second, since the risk dominant strategy is contagious, it will spread back from any state that contains a relatively small cluster of agents using it. Thus, it is relatively difficult to leave the risk dominant convention. Combined, these two observations essentially imply that risk dominant (or $\frac12$-dominant) conventions will arise in the long run.

Thus, in the presence of a risk dominant (or $\frac12$-dominant) strategy the local and the global interaction models predict the same long run outcome. Note, however, that since risk dominant or $\frac12$-dominant strategies are able to spread from a small subset, the speed of convergence is independent of the population size. This in turn implies that models of local interactions in general maintain their predictive power in large populations, thus essentially challenging the first critique mentioned above. Further, Lee, Szeidl, and Valentinyi [25] argue that this contagious spread also implies that the prediction in a local interactions model will be independent of the underlying model of noise for a sufficiently large population. Weidenholzer [26] shows that for a sufficiently large population the local interaction model is also robust to the addition (and, thus, also the elimination) of strictly dominated strategies. Thus, the local interaction model is robust to all three points of critique mentioned above. However, one has to be careful when justifying outcomes of a global model by using the nice features of the local model. Already in $3\times 3$ games without $\frac12$-dominant strategies, simple local interactions models may predict different outcomes than the global interactions benchmark, as observed in Ellison [9] or Alós-Ferrer and Weidenholzer [27]. In general, though, if $\frac12$-dominant strategies are present they are selected by the best reply dynamics in a large range of local interactions models; see, e.g., Blume [21,22], Ellison [1,9], or Durieu and Solal [28]. Note, however, that risk dominance does not necessarily imply efficiency. Hence, under best reply learning societies might actually do worse than they could.

Models of multiple locations have shown that if players, in addition to their strategy choice in the base game, may move between different locations or islands, they are able to achieve efficient outcomes (see, e.g., Oechssler [29,30] and Ely [31]). When agents can choose among multiple locations where the game is played, an agent using a risk dominant strategy will no longer prompt his neighbors to switch strategies but instead simply to move away. This implies that locations where the risk dominant strategy is played will be abandoned and locations where the payoff dominant strategy is played will become the center of attraction. Thus, by “voting with their feet”, agents are able to identify preferred outcomes, thereby achieving efficiency. Anwar [32] shows that if not all players may move to their preferred location, some players will get stuck at a location using the inefficient risk dominant strategy. In this case we might observe the coexistence of conventions in the long run. Jackson and Watts [33], Goyal and Vega-Redondo [34], and Hojman and Szeidl [35] present models where players may not merely switch locations but, in addition to their strategy choice, decide to whom to maintain a (costly) link. For low linking costs the risk dominant convention is selected. For high linking costs the payoff dominant convention is uniquely selected in Goyal and Vega-Redondo’s [34] and Hojman and Szeidl’s [35] models. In Jackson and Watts’ [33] model the risk dominant convention is selected for low linking costs, and both the risk dominant and the payoff dominant convention are selected for high linking costs.

Finally, we discuss imitation learning within the context of local and global interactions. Under imitation learning agents simply mimic other agents who are perceived as successful. Thus, imitation is a cognitively even simpler rule than myopic best response. Robson and Vega-Redondo [12] show that if agents use such imitation rules, the payoff dominant outcome obtains in a global interaction framework with random interactions. Eshel, Samuelson, and Shaked [13] and Alós-Ferrer and Weidenholzer [14,15] demonstrate that imitation learning might also lead to the adoption of efficient conventions in local interactions models. The basic reason for these results is that under imitation rules risk minimizing considerations (which favor risk dominant strategies under best reply) cease to play an important role.

The remainder of this survey is structured as follows. Section 2 introduces the basic framework of global interaction and the techniques used to find the long run equilibrium. In Section 3 we discuss Ellison’s [9] local interaction models in the circular city and on two dimensional lattices. Section 4 discusses multiple location models, where players in addition to their strategy choice can choose their preferred location where the game is played, and network formation models, where players can directly choose their opponents. In Section 5 we discuss imitation learning rules, and Section 6 concludes.

3. Local Interactions

We will now study settings where players only interact with a small subset of the population, such as close friends, neighbors, or colleagues, rather than with the overall population.

3.1. The Circular City

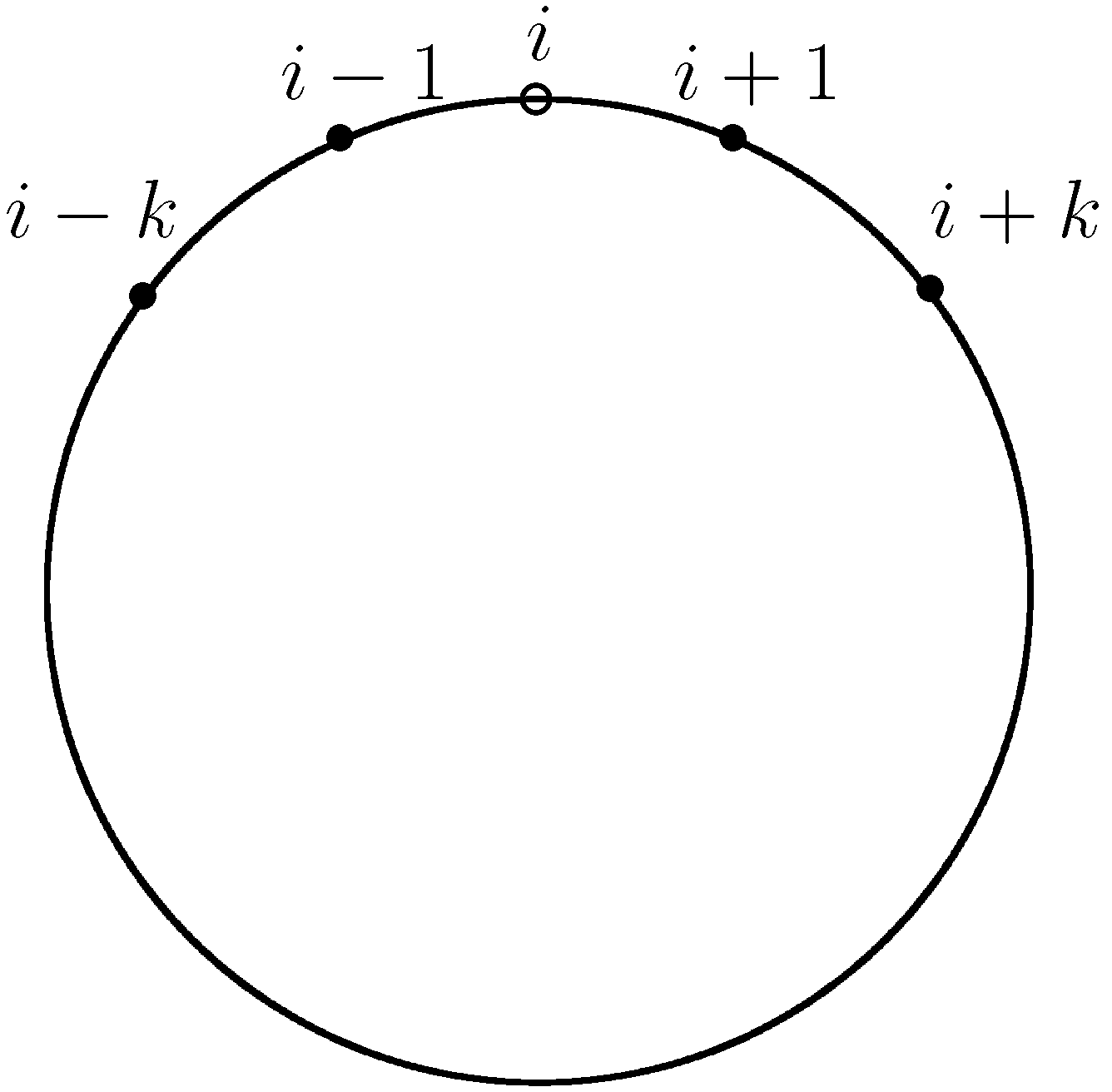

Ellison [9] sets up a local interactions system in the circular city: imagine our population of $N$ economic agents being arranged around a circle. See Figure 2 for an illustration. In this context, one can define $d(i,j)$ as the minimal distance separating players $i$ and $j$. The shortest way between player $i$ and player $j$ can either be to the left or to the right of player $i$. Hence, $d(i,j)$ is defined as:

$$d(i,j) = \min\{|i-j|,\; N-|i-j|\}.$$

With this specification we can define the following matching rule, which matches each player with his $k$ closest neighbors on the left and with his $k$ closest neighbors on the right with equal probability, i.e.,

$$\pi_{ij} = \begin{cases} \frac{1}{2k} & \text{if } 1 \le d(i,j) \le k,\\ 0 & \text{otherwise.} \end{cases}$$

We assume that $1 \le k \le \frac{N-1}{2}$, so that no agent is matched with himself and agents are not matched with each other twice. We refer to this setting as the $2k$-neighbors model. Of course, it is also possible in this context to think of more sophisticated matching rules (for $N$ odd) that assign positive probability to any match, with the matching probability declining in the distance separating two players.
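The distance $d(i,j)$ and the $2k$-neighbor matching probabilities translate directly into code. In this sketch players are indexed $0,\dots,N-1$ (the text does not fix an indexing), and the function names are our own.

```python
def circle_distance(i, j, n):
    """Minimal distance between players i and j on a circle of n players."""
    gap = abs(i - j) % n
    return min(gap, n - gap)


def matching_prob(i, j, n, k):
    """2k-neighbor rule: each of the k nearest players on either side w.p. 1/(2k)."""
    if not 1 <= k <= (n - 1) // 2:
        raise ValueError("the 2k-neighbor rule needs 1 <= k <= (N - 1) / 2")
    return 1 / (2 * k) if 1 <= circle_distance(i, j, n) <= k else 0.0


# Player 0 in a circle of 10 with k = 2 meets players 8, 9, 1, 2 w.p. 1/4 each.
print([matching_prob(0, j, 10, 2) for j in range(10)])
```

The bound on $k$ is exactly what keeps the left and right neighborhoods disjoint, so each row of matching probabilities sums to one.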

Figure 2.

The circular city model of local interaction.


Let us reconsider the $2k$-neighbor matching rule. If one given player adopts strategy $s$ against another player who plays strategy $s'$, the payoff of the first player is denoted $u(s,s')$. If $\omega(t) = (s_1(t), \dots, s_N(t))$ is the profile of strategies adopted by players at time $t$, the average payoff for player $i$ under the $2k$-neighbor matching rule is

$$U_i(\omega(t)) = \frac{1}{2k}\sum_{j=1}^{k}\Big[u\big(s_i(t), s_{i-j}(t)\big) + u\big(s_i(t), s_{i+j}(t)\big)\Big],$$

where indices are taken modulo $N$.

We assume that each period every player who is given a revision opportunity switches to a myopic best response, i.e., he adopts a best response to the distribution of play in the previous period. More formally, at time $t+1$ player $i$ chooses

$$s_i(t+1) \in \arg\max_{s \in S} U_i\big(s, \omega_{-i}(t)\big)$$

given the state $\omega(t)$ at $t$. If a player has several alternative best replies, we assume that he randomly adopts one of them, assigning positive probability to each.

First, let us reconsider $2\times 2$ coordination games. Note that we have two natural candidates for LRE, $\vec{A} = (A,\dots,A)$ and $\vec{B} = (B,\dots,B)$. Further, note that there might exist cycles where the system fluctuates between different states. For instance, for $k = 1$ (and for $N$ even) we have the following cycle:

$$(A,B,A,B,\dots,A,B) \to (B,A,B,A,\dots,B,A) \to (A,B,A,B,\dots,A,B).$$

Note, however, that such cycles are never absorbing under our process with positive inertia: with positive probability some player will not adjust his strategy at some point in time, and the cycle will break down.
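A sketch of the synchronous best reply step (every player revises, i.e., no inertia) reproduces this cycle for $k = 1$ and $N = 6$. The payoff table `U` is a hypothetical coordination game with $A$ risk dominant and $B$ payoff dominant; any $2\times 2$ coordination game produces the same alternation, since each player's two neighbors both play the other strategy.

```python
# Hypothetical 2x2 coordination game: A risk dominant, B payoff dominant.
U = {("A", "A"): 3, ("A", "B"): 2, ("B", "A"): 0, ("B", "B"): 4}


def average_payoff(s, profile, i, k):
    """Average payoff U_i of playing s against the 2k nearest neighbors of i."""
    n = len(profile)
    neighbors = [profile[(i + d) % n] for d in range(1, k + 1)]
    neighbors += [profile[(i - d) % n] for d in range(1, k + 1)]
    return sum(U[(s, t)] for t in neighbors) / (2 * k)


def synchronous_best_reply(profile, k):
    """Every player revises at once (no inertia); requires unique best replies."""
    new = []
    for i in range(len(profile)):
        pay = {s: average_payoff(s, profile, i, k) for s in "AB"}
        if pay["A"] == pay["B"]:
            raise ValueError(f"player {i} has two best replies")
        new.append("A" if pay["A"] > pay["B"] else "B")
    return "".join(new)


state = "ABABAB"  # k = 1, N = 6
for _ in range(3):
    state = synchronous_best_reply(state, k=1)
    print(state)  # prints BABABA, ABABAB, BABABA
```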

Now, note that since strategy $A$ is risk dominant, a player will always have $A$ as his best response whenever half of his $2k$ neighbors play $A$. Consider $k$ adjacent $A$-players:

$$(\dots, B, B, \underbrace{A, \dots, A}_{k}, B, B, \dots).$$

With positive probability the boundary $B$-players may revise their strategies. As they have $k$ $A$-neighbors, they will switch to $A$, and we reach the state

$$(\dots, B, \underbrace{A, \dots, A}_{k+2}, B, \dots).$$

Iterating this argument, it follows that $A$ can spread out contagiously until we reach the state $\vec{A}$. Hence, from any state with $k$ adjacent $A$-players there is a positive probability path leading to $\vec{A}$. Since at most $k$ mistakes are needed to create such a cluster from any state, this implies that $CR(\vec{A}) \le k$.
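The contagion argument can be traced with a small simulation. Starting from $k$ adjacent $A$-players in an otherwise all-$B$ circle, the sketch lets only the two $B$-players bordering the cluster revise each round (one of the positive probability paths described above; all other players exercise inertia). The table `U` is a hypothetical game with $A$ risk dominant, and `U_B` is a hypothetical game in which $B$ is risk dominant instead, so that the cluster stalls.

```python
# Hypothetical 2x2 games: A risk dominant in U, B risk dominant in U_B.
U = {("A", "A"): 3, ("A", "B"): 2, ("B", "A"): 0, ("B", "B"): 4}
U_B = {("A", "A"): 4, ("A", "B"): 0, ("B", "A"): 2, ("B", "B"): 3}


def best_reply(profile, i, k, u):
    """Myopic best reply of player i to his 2k neighbors (total payoffs, same argmax)."""
    n = len(profile)
    nbrs = [profile[(i + d) % n] for d in range(1, k + 1)]
    nbrs += [profile[(i - d) % n] for d in range(1, k + 1)]
    pay = {s: sum(u[(s, t)] for t in nbrs) for s in "AB"}
    return "A" if pay["A"] > pay["B"] else "B"  # ties resolved toward B here


def contagion_steps(n, k, u):
    """Rounds needed for k adjacent A-players to take over the circle, or None."""
    profile = ["A"] * k + ["B"] * (n - k)
    lo, hi = 0, k - 1  # the A-cluster occupies positions lo..hi (mod n)
    rounds = 0
    while "B" in profile:
        current = list(profile)  # revisions respond to last round's profile
        boundary = {(lo - 1) % n, (hi + 1) % n}
        for i in boundary:  # only the boundary players revise
            profile[i] = best_reply(current, i, k, u)
        if any(profile[i] != "A" for i in boundary):
            return None  # the cluster did not grow
        lo, hi = lo - 1, hi + 1
        rounds += 1
    return rounds


print(contagion_steps(10, 2, U), contagion_steps(10, 2, U_B))
```

With `U` the cluster grows by two players per round, so 4 rounds suffice for $N = 10$ and $k = 2$; with `U_B` the boundary players stay with $B$ and the function returns `None`.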

Second, note that in order to move out of $\vec{A}$ we have to destabilize every $A$-cluster from which $A$ will spread out with certainty. This is the case for any cluster of $k+1$ adjacent $A$-players: (i) each of the agents in the cluster has $k$ neighbors choosing $A$ and thus will never switch, and (ii) agents at the boundary of such a cluster will switch to $A$ whenever given a revision opportunity. Hence, in order to leave the basin of attraction of $\vec{A}$ we need at least one mutation per $k+1$ agents, establishing $R(\vec{A}) \ge \lceil N/(k+1) \rceil$. Since this bound grows with $N$ while $CR(\vec{A}) \le k$ does not, $R(\vec{A}) > CR(\vec{A})$ in a sufficiently large population. Hence,

Proposition 4 (Ellison [9]). The state where everybody plays the risk dominant strategy is the unique LRE under best reply learning in the circular city model of local interactions for sufficiently large $N$.

This is qualitatively the same result as the one obtained for global interaction by KMR. Note, however, that the nature of the transition to the risk dominant convention is fundamentally different. In KMR a certain fraction of the population has to mutate to the risk dominant strategy so that all other agents will follow. By contrast, in the circular city model a small group mutating to the risk dominant strategy is enough to trigger a contagious spread to the risk dominant convention.
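The counting step behind $R(\vec{A}) \ge \lceil N/(k+1) \rceil$ can be checked by brute force on small circles: the sketch searches for the fewest mutants that leave no run of $k+1$ adjacent $A$-players. It verifies only this combinatorial bound, not the full radius calculation, and the helper names are hypothetical.

```python
from itertools import combinations
from math import ceil


def has_safe_cluster(mutants, n, k):
    """True if some k + 1 adjacent players on the circle contain no mutant."""
    return any(all((start + d) % n not in mutants for d in range(k + 1))
               for start in range(n))


def min_mutations_to_break_clusters(n, k):
    """Fewest mutants such that no run of k + 1 A-players survives (brute force)."""
    for m in range(n + 1):
        for mutants in combinations(range(n), m):
            if not has_safe_cluster(set(mutants), n, k):
                return m


for n in range(4, 11):
    for k in (1, 2):
        if k <= (n - 1) // 2:  # the admissible range of k from the matching rule
            assert min_mutations_to_break_clusters(n, k) == ceil(n / (k + 1))
```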

It is an easy exercise to reproduce the corresponding result for the circular city model of local interactions for general $n\times n$ games in the presence of a $\frac12$-dominant strategy. By the definition of $\frac12$-dominance, a player will again have the $\frac12$-dominant strategy as his best response whenever $k$ of his $2k$ neighbors choose it. Thus, in the presence of a $\frac12$-dominant strategy the insights of the $2\times 2$ case carry over to general $n\times n$ games, and we have:

Proposition 5 (Ellison [1]). The state where everybody plays a $\frac12$-dominant strategy is the unique LRE under best reply learning in the circular city model of local interactions for sufficiently large $N$.

3.2. On the Robustness of the Local Interactions Model

We will now reconsider, within the circular city model of local interactions, the three aforementioned points of critique raised against the model of global interactions. The fact that a risk dominant (or $\frac12$-dominant) strategy is contagious under local interactions will turn out to be the key to challenging all three points of critique in large populations.

First, let us consider the speed of convergence of the local interactions model. As already argued by KMR, the low speed of convergence might render the model’s predictions irrelevant for large populations under global interactions. Under local interactions, however, the speed of convergence is independent of the population size, as risk dominant strategies are able to spread out contagiously from a small cluster of the population adopting them. In particular, by Ellison’s [1] Radius-Coradius theorem, the expected waiting time until $\vec{A}$ is first reached is of order $\varepsilon^{-k}$ as $\varepsilon \to 0$. Since this order does not depend on $N$, convergence is much faster under local interactions than in the global model, where the waiting time grows with the population size. Therefore, one can expect to observe the limiting behavior of the system at an early stage of play.

Second, reconsider Bergin and Lipman’s [19] critique stating that the predictions of KMR’s model are not robust to the underlying specification of noise. Lee, Szeidl, and Valentinyi [25] argue that if a strategy is contagious, the prediction in a local interactions model will be essentially independent of the underlying model of noise for a sufficiently large population. To illustrate their argument, let us return to the example of Section 2.4, where agents make mistakes twice as often when they are in the risk dominant convention as in the payoff dominant convention. Note now that the number of mistakes needed to move into the risk dominant convention is still $k$ and, thus, is independent of the population size. To upset the risk dominant convention it now takes fewer mutations than before (again measured in the rate of the original mistakes). Note, however, that this number of mutations is still growing in the population size. Thus, for a sufficiently large population the risk dominant convention is easier to reach than to leave by mistakes and consequently remains the LRE.

Weidenholzer [26] shows that the contagious spread of the risk dominant strategy also implies that the local interaction model is robust to the addition and deletion of strictly dominated strategies in large populations. The main idea behind this result is that risk dominant strategies may still spread out contagiously from an initially small subset of the population. Thus, the number of mutations required to move into the basin of attraction of the risk dominant convention is independent of the population size. Conversely, even in the presence of dominated strategies the effect of mutations away from the risk dominant strategy is local, so the number of mutations needed to leave the risk dominant convention grows with the population size. To see this point, reconsider the extended game from Section 2.4 and consider the circular city model with $k = 1$. Note that it still takes one mutation to move from $\vec{B}$ to $\vec{A}$, establishing that $CR(\vec{A}) = 1$. Consider now the extended game and the risk dominant convention $\vec{A}$. Assume that one agent mutates to $C$:

$$(\dots, A, A, C, A, A, \dots).$$

With positive probability the $C$-player does not adjust her strategy whereas her two $A$-neighbors switch to $B$, and we reach the state

$$(\dots, A, B, C, B, A, \dots).$$

Unless there are no or only one $A$-agents left, we will then for sure move back to the risk dominant convention, establishing that $R(\vec{A}) > 1 = CR(\vec{A})$ whenever the population is sufficiently large. Thus, in the circular city model the selection of the risk dominant convention $\vec{A}$ remains valid for a sufficiently large population.

One might be tempted to think that the nice features of the local interactions model can be used to justify results of a global interactions model. This is legitimate in the presence of a risk dominant or $\frac12$-dominant strategy, which is selected in both the global and the local framework. In particular, note that in symmetric $2\times 2$ games there is always a risk dominant strategy. Hence, in $2\times 2$ games the predictions of the local and the global model always have to be in line. However, once we move beyond the class of $2\times 2$ games the results may differ. To see this point, consider the following example by Young [7].

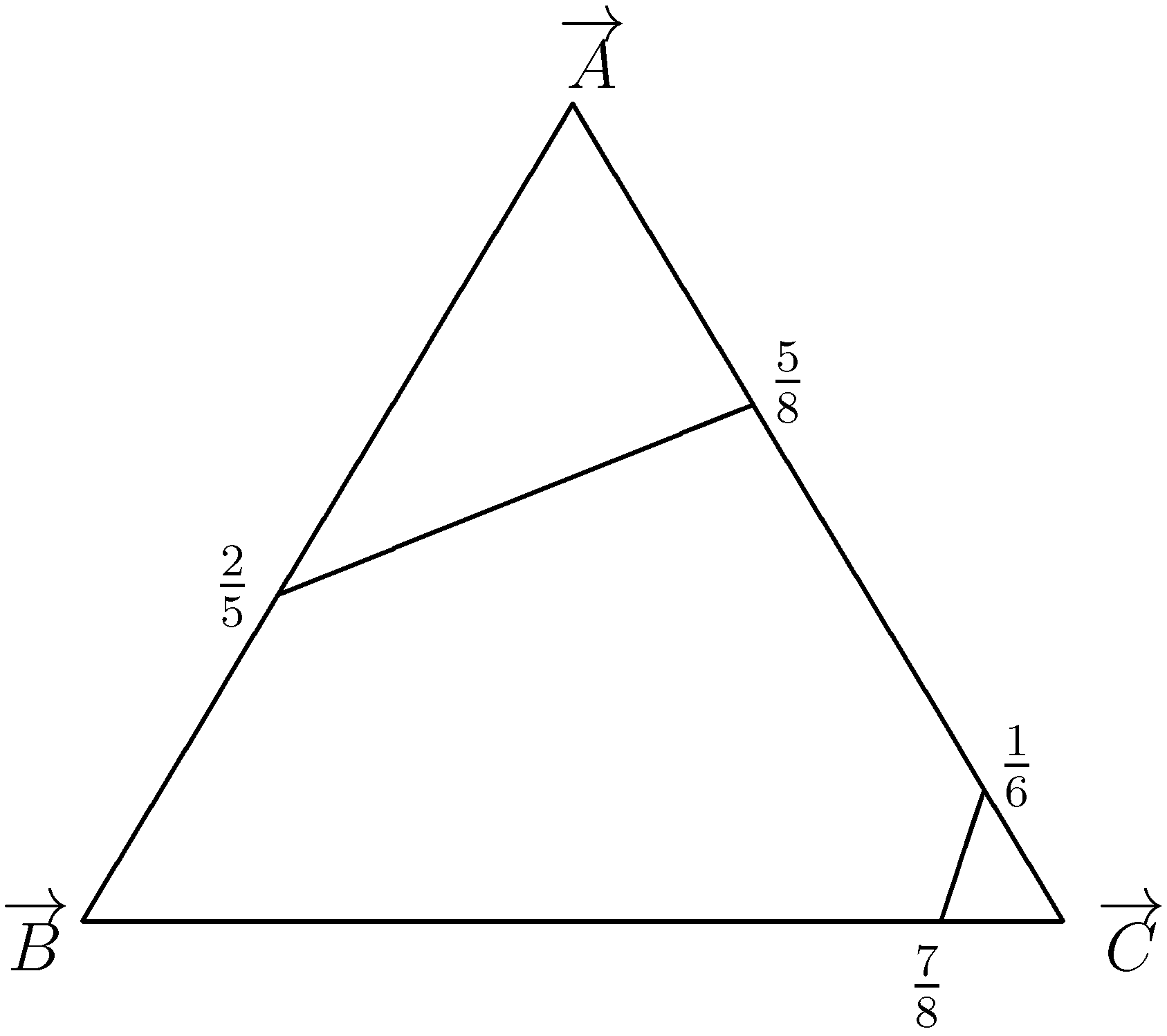

Figure 3 depicts the best-response regions for this game. First, note that in pairwise comparisons $A$ risk dominates both $B$ and $C$. Kandori and Rob [11] call this property global pairwise risk dominance (GPRD). Now, consider the mixed strategy $\sigma = \frac12 A + \frac12 C$. The best response against $\sigma$ is $B$, and hence $A$ is not $\frac12$-dominant. Thus, while $\frac12$-dominance implies GPRD, the converse does not hold. The fact that $A$ is GPRD only reveals that $A$ is a better reply than $C$ against $\sigma$. Under global interactions, the radius of $\vec{B}$ exceeds its coradius. Thus, $\vec{B}$ is the unique LRE under global interactions in a large enough population.

Figure 3.

The best response regions in Young’s example.
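These two properties can be checked mechanically. Since the payoff matrix is not reproduced in the text, the sketch uses a hypothetical $3\times 3$ payoff table of our own, chosen so that $A$ risk dominates $B$ and $C$ pairwise while $B$ is the best reply to $\sigma = \frac12 A + \frac12 C$; it is not claimed to be Young's matrix.

```python
from fractions import Fraction

# Hypothetical row-player payoffs with the properties described in the text.
U = {"A": {"A": 8, "B": 5, "C": 0},
     "B": {"A": 5, "B": 7, "C": 5},
     "C": {"A": 0, "B": 5, "C": 6}}


def risk_dominates(s, t):
    """s risk dominates t in the 2x2 game restricted to {s, t}."""
    return U[s][s] - U[t][s] > U[t][t] - U[s][t]


def expected_payoffs(mix):
    """Expected payoff of each pure strategy against a mixed strategy."""
    return {s: sum(p * U[s][t] for t, p in mix.items()) for s in U}


gprd = all(risk_dominates("A", t) for t in "BC")
sigma = {"A": Fraction(1, 2), "C": Fraction(1, 2)}
pay = expected_payoffs(sigma)
best = max(pay, key=pay.get)
print(gprd, pay, best)
```

Against $\sigma$ this table gives $A$, $B$, $C$ the payoffs 4, 5 and 3, so $A$ beats $C$ (as GPRD requires) while $B$ is the best reply, which is exactly why $A$ fails to be $\frac12$-dominant.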


Let us now consider the two neighbor model ($k = 1$). Consider the monomorphic state $\vec{C}$ and assume that one agent mutates to $B$. With positive probability we reach the state $\vec{B}$. Likewise, consider $\vec{B}$ and assume that one agent mutates to $A$. With positive probability we reach the state $\vec{A}$. Hence, we have that $CR^*(\vec{A}) = 1$: the path from $\vec{C}$ via $\vec{B}$ to $\vec{A}$ uses two single mutations, but its modified cost is $1 + 1 - R(\vec{B}) = 1$, since one mutation already suffices to leave $\vec{B}$. Now, consider $\vec{A}$. If one agent mutates to $B$, he will not prompt any of his neighbors to switch and will himself switch back after some time. Likewise, assume that one agent mutates to $C$. While the mutant will prompt other agents to switch to $B$, after some time only $A$- and $B$-players will be left, from which point on $A$ can take over the entire population. Thus, we cannot leave the basin of attraction of $\vec{A}$ with one mutation, implying that $R(\vec{A}) \ge 2$. Consequently, $\vec{A}$ is the LRE in the two neighbor model, as opposed to $\vec{B}$ in the global interactions framework. Hence, the nature of interaction influences the prediction.
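The one-mutation arguments can be explored on a small circle with the same hypothetical payoff table used above for the best response check (again, not necessarily Young's numbers). The sketch enumerates every profile reachable when one player at a time switches to one of his best replies, with $N = 8$, and reports which absorbing profiles can be reached. Because it only follows one-at-a-time revisions, it is a sanity check on the stated transitions rather than a proof.

```python
# Hypothetical row-player payoffs (same illustrative table as before).
U = {"A": {"A": 8, "B": 5, "C": 0},
     "B": {"A": 5, "B": 7, "C": 5},
     "C": {"A": 0, "B": 5, "C": 6}}


def best_replies(profile, i):
    """Best replies of player i to his two neighbors on the circle (k = 1)."""
    n = len(profile)
    nbrs = (profile[(i - 1) % n], profile[(i + 1) % n])
    pay = {s: sum(U[s][t] for t in nbrs) for s in U}
    top = max(pay.values())
    return {s for s, v in pay.items() if v == top}


def reachable(start):
    """Profiles reachable when one player at a time switches to a best reply."""
    seen, stack = {start}, [start]
    while stack:
        p = stack.pop()
        for i in range(len(p)):
            for s in best_replies(p, i):
                q = p[:i] + s + p[i + 1:]
                if q not in seen:
                    seen.add(q)
                    stack.append(q)
    return seen


def absorbing_from(start):
    """Reachable profiles in which every player's strategy is his unique best reply."""
    return {p for p in reachable(start)
            if all(best_replies(p, i) == {p[i]} for i in range(len(p)))}


n = 8
print("B" * n in absorbing_from("B" + "C" * (n - 1)))  # one B-mutant in all-C
print("A" * n in absorbing_from("A" + "B" * (n - 1)))  # one A-mutant in all-B
print(absorbing_from("B" + "A" * (n - 1)))  # one B-mutant in all-A
print(absorbing_from("C" + "A" * (n - 1)))  # one C-mutant in all-A
```

With this table a single $B$- or $C$-mutant in $\vec{A}$ leads back to $\vec{A}$ only; on a very small circle such as $N = 4$, however, one $C$-mutant can already lead to $\vec{B}$, which illustrates why the population must not be too small.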

Furthermore, note that while GPRD does not have any predictive value in the global interactions framework, the previous example suggests that it might play a role in the local interactions framework. Indeed, Alós-Ferrer and Weidenholzer [27] show that GPRD strategies are always selected in the circular city model with $k = 1$ in $3\times 3$ games. However, they also show that GPRD loses its predictive power in more general $n\times n$ games. Further, they exhibit an example where non-monomorphic states are selected. Hence, one can also observe the phenomenon of coexistence of conventions in the circular city model of local interactions.

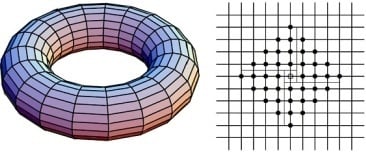

3.3. Interaction on the Lattice

Following Ellison [

1], we will now consider a different spatial structure where the players are situated on a grid, rather than a circle.

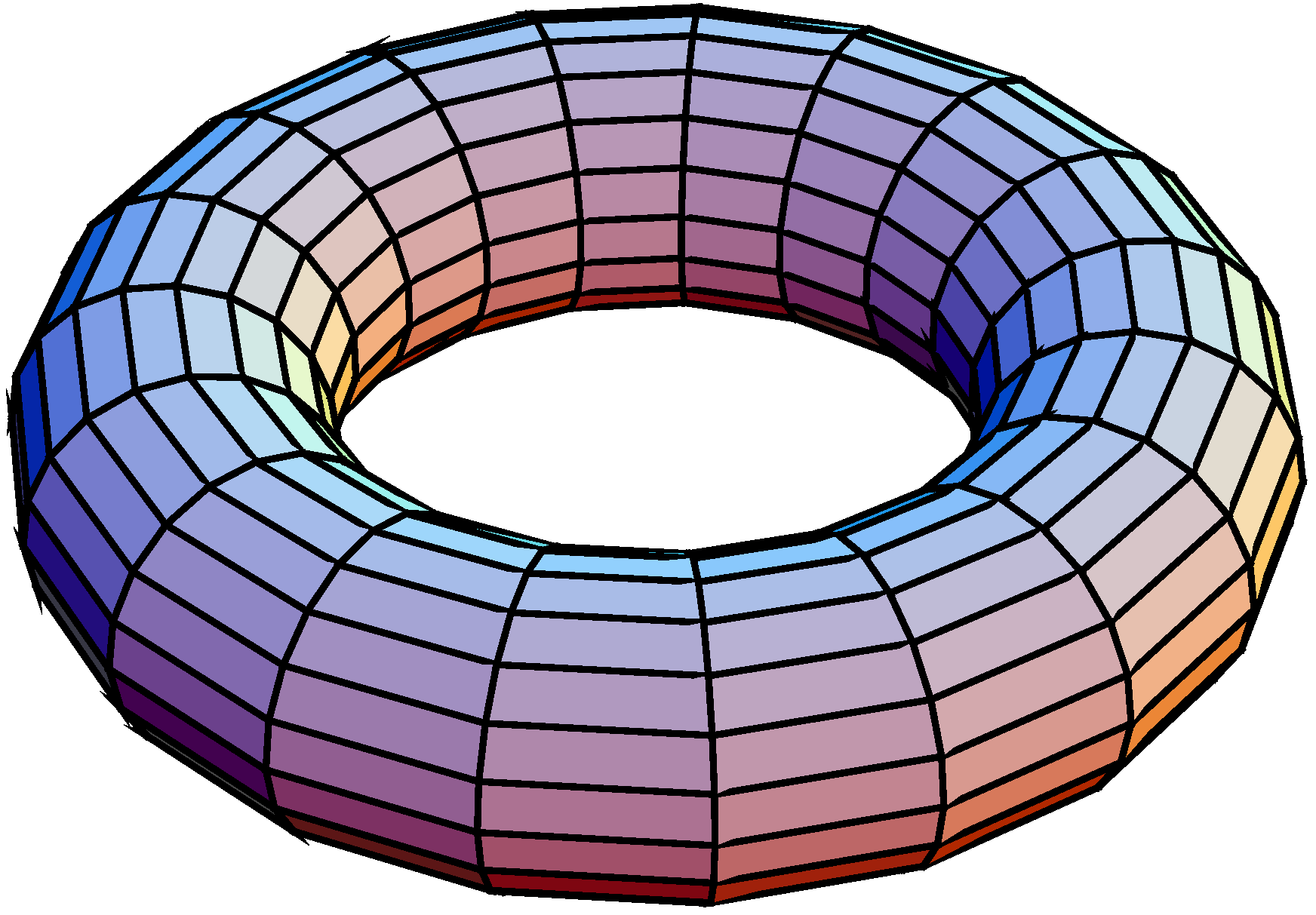

17 Formally, assume that

players are situated at the vertices of a lattice on the surface of a torus. Imagine a

lattice with vertically and horizontally aligned points being folded to form a torus where the north end is joined with the south end and the west end is joined with the east end of the rectangle.

Figure 4 provides an illustration of this interaction structure.

Following [

1] one can define the distance separating two players

and

as

A player is assumed only to be matched with players at a distance of at most

k with

and

,

i.e., player

is matched with player

if and only if

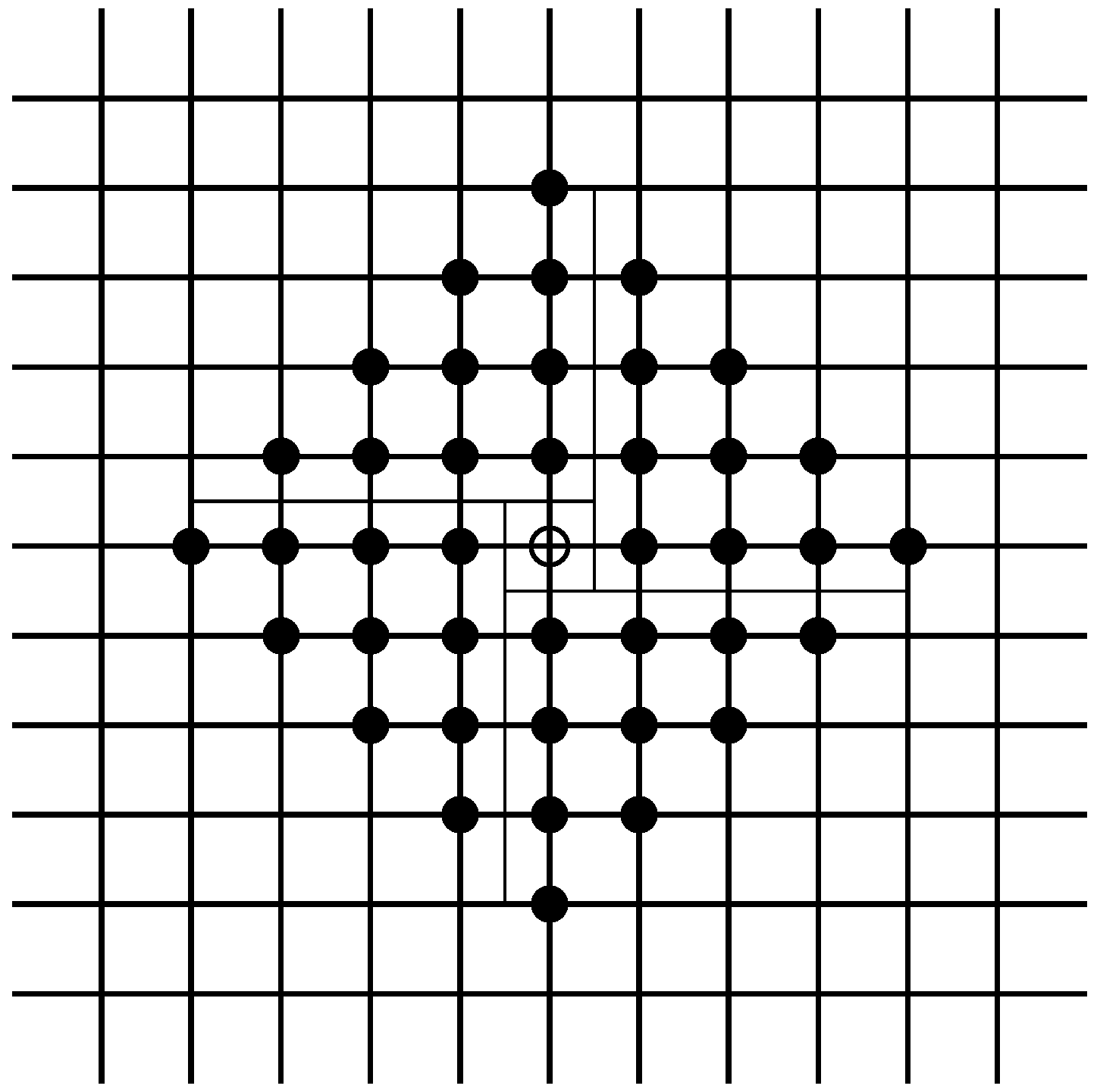

. Furthermore, note that (as can be seen from

Figure 5) within this setup each player has

neighbors. Thus, we define the neighborhood

of a player

, as the set of all of his neighbors. If

ω is the profile of strategies adopted by players at time

t, the total payoff for player

is

where

denotes the strategy of player

.
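The torus distance and the neighbor count $2k(k+1)$ can be verified directly. Players are indexed from $(0,0)$ in this sketch, and the function names are hypothetical.

```python
def torus_distance(p, q, n, m):
    """Sum of the circular distances along both axes of an n x m torus."""
    (i, j), (x, y) = p, q
    di, dj = abs(i - x) % n, abs(j - y) % m
    return min(di, n - di) + min(dj, m - dj)


def neighborhood(p, n, m, k):
    """All players at distance 1..k from p (requires k < n/2 and k < m/2)."""
    return [(x, y) for x in range(n) for y in range(m)
            if 1 <= torus_distance(p, (x, y), n, m) <= k]


for k in (1, 2, 3):
    print(k, len(neighborhood((0, 0), 9, 9, k)), 2 * k * (k + 1))
```

The count is $4(1 + 2 + \dots + k) = 2k(k+1)$, since there are $4d$ lattice points at each distance $d \le k$ as long as the neighborhood does not wrap around the torus.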

Figure 4.

Interaction on a torus.


Figure 5.

Neighborhood of size $k$ on the lattice. It can be easily seen that a player has $2k(k+1)$ neighbors.


Each period, each player might receive the opportunity to revise his strategy with positive probability. When presented with a revision opportunity, a player switches to a myopic best response. More formally, player $(i,j)$ at time $t+1$ chooses

$$s_{(i,j)}(t+1) \in \arg\max_{s \in S} U_{(i,j)}\big(s, \omega_{-(i,j)}(t)\big)$$

given the state $\omega(t)$ at $t$. Ties are assumed to be broken randomly.

A different kind of adjustment process is the asynchronous best reply process in continuous time used by Blume [21,22]. Each player has an i.i.d. Poisson alarm clock. At randomly chosen moments in time a given player’s alarm clock goes off, and the player receives the opportunity to adjust his strategy. Blume [21] considers the following perturbed process. It is assumed that a player adopts a strategy according to the logit choice rule, under which players choose their strategy according to a full support logit distribution that puts more weight on strategies with a higher myopic payoff. As the noise level decreases, the logit choice distribution converges to the degenerate distribution with all mass on the best reply. Blume [21] shows that this process converges to the risk dominant convention in $2\times 2$ coordination games in the long run. Blume [22] considers an unperturbed adjustment process where, whenever given the possibility, a player always adjusts to a best response to the current distribution of play. Varying the initial conditions, he finds that the system converges to the risk dominant convention in $2\times 2$ coordination games most of the time.
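The logit choice rule can be sketched as follows. The parameter `beta` is an inverse noise level of our own choosing (Blume's exact parametrization may differ): with `beta = 0` all strategies are equally likely, and as `beta` grows the probabilities concentrate on the myopic best reply.

```python
from math import exp


def logit_choice(payoffs, beta):
    """Logit choice probabilities over strategies given their myopic payoffs."""
    top = max(payoffs.values())  # shifting by the maximum avoids overflow in exp
    weights = {s: exp(beta * (v - top)) for s, v in payoffs.items()}
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


payoffs = {"A": 2.5, "B": 2.0}  # hypothetical myopic payoffs of one player
for beta in (0.0, 1.0, 10.0, 50.0):
    print(beta, logit_choice(payoffs, beta))
```

Subtracting the largest payoff before exponentiating leaves the probabilities unchanged but keeps the computation stable for large `beta`.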

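Let us now study Ellison’s [1] model in detail. Assume that each player is only matched with his four closest neighbors with equal probability. Hence, the probability $\pi_{(i,j),(x,y)}$ for players $(i,j)$ and $(x,y)$ to be matched is given by

$$\pi_{(i,j),(x,y)} = \begin{cases} \frac14 & \text{if } d\big((i,j),(x,y)\big) = 1,\\ 0 & \text{otherwise.} \end{cases}$$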

Note that, in general, there may be many absorbing states. For instance, consider the following game:

$$\begin{array}{c|cc} & A & B \\ \hline A & 2,2 & 0,0 \\ B & 0,0 & 1,1 \end{array}$$

If four players in a square configuration play $A$ while the rest of the players play $B$, the state is absorbing. Each $A$-player gets a payoff of four. Switching to $B$ would only give him a payoff of two. Hence, the $A$-players in the square will retain their strategy. Similarly, the adjacent $B$-players have no incentive to change their strategies, since this would decrease their payoff from three to two. One can construct a very large number of such non-monomorphic absorbing states by varying the size, shape, and location of these blocks of $A$-players.
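The absorbing square can be verified on a small torus. The sketch assumes the payoffs $u(A,A)=2$, $u(A,B)=u(B,A)=0$, $u(B,B)=1$, which are the values implied by the quoted payoffs (four versus two for the $A$-players, three versus two for the adjacent $B$-players) under the total payoff definition above; the $6\times 6$ grid size is arbitrary.

```python
# Payoffs implied by the numbers in the text under total (summed) payoffs.
U = {("A", "A"): 2, ("A", "B"): 0, ("B", "A"): 0, ("B", "B"): 1}


def payoff(grid, i, j, s):
    """Total payoff of playing s against the four closest neighbors on the torus."""
    n, m = len(grid), len(grid[0])
    nbrs = (grid[(i - 1) % n][j], grid[(i + 1) % n][j],
            grid[i][(j - 1) % m], grid[i][(j + 1) % m])
    return sum(U[(s, t)] for t in nbrs)


def is_absorbing(grid):
    """True if every player's current strategy is his unique best reply."""
    for i, row in enumerate(grid):
        for j, s in enumerate(row):
            other = "B" if s == "A" else "A"
            if payoff(grid, i, j, other) >= payoff(grid, i, j, s):
                return False
    return True


grid = [["B"] * 6 for _ in range(6)]
for i, j in [(2, 2), (2, 3), (3, 2), (3, 3)]:
    grid[i][j] = "A"  # a square of four A-players
print(is_absorbing(grid))  # prints True
print(payoff(grid, 2, 2, "A"), payoff(grid, 2, 2, "B"))  # A-player in the square: 4 2
print(payoff(grid, 1, 2, "B"), payoff(grid, 1, 2, "A"))  # adjacent B-player: 3 2
```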

Note that in the two dimensional model a $\frac12$-dominant strategy is not able to spread contagiously as in the one dimensional model. Rather, what matters in the two dimensional model is that clusters of players playing the $\frac12$-dominant strategy grow as players mutating at the edge of these clusters cause new players to join them. Following Ellison [1], we can in fact show that a $\frac12$-dominant strategy (despite not being able to spread contagiously) is the unique LRE in the model at hand.

To this end, assume now that strategy $A$ is $\frac12$-dominant. If all players in a cross pattern (e.g., players $(1,1),\dots,(1,m)$ and players $(1,1),\dots,(n,1)$) play $A$, all of them have at least two neighbors playing $A$ and will retain their strategy. Furthermore, $A$ will expand from the cross until the entire population plays $A$. So in order to leave $\vec{A}$ we have to destabilize all possible crosses, which requires a mutation in every row or in every column. So we need at least $\min\{n,m\}$ mutations. Hence we have $R(\vec{A}) \ge \min\{n,m\}$.

Now, consider any state $\omega$. With at most two mutations we can reach a state where players $(i,j)$ and $(i+1,j+1)$ play $A$. There is positive probability that players $(i,j)$ and $(i+1,j+1)$ retain their strategy and players $(i+1,j)$ and $(i,j+1)$ also switch to $A$. We then obtain a square of at least four $A$-players. Note that since all players in this square have two of their neighbors playing $A$, they will not switch strategies. Furthermore, the dynamics cannot destabilize this cluster of $A$-players. So assume the dynamics shifts us to a new state $\omega'$. If now player $(i+2,j)$ mutates to $A$, player $(i+2,j+1)$ will follow. By adding successive single mutations we can shift two rows of players to strategy $A$. If we now work our way through two columns, we obtain a cross configuration from which strategy $A$ is able to spread. Hence, we can explicitly construct a path of modified cost at most two from $\omega$ to $\vec{A}$, implying $CR^*(\vec{A}) \le 2$. Hence,

Proposition 6 (Ellison [1]). A $\frac12$-dominant strategy is the unique LRE under best reply learning in the lattice model whenever $n \ge 3$ and $m \ge 3$.

Furthermore, note that even though the $\frac12$-dominant strategy is not able to spread contagiously, the speed of convergence is independent of the population size. In particular, the expected waiting time until the risk dominant convention is first reached is of order $\varepsilon^{-2}$.
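The cross argument can be illustrated with a hypothetical four-neighbor game ($u(A,A)=2$, $u(A,B)=u(B,A)=0$, $u(B,B)=1$), in which $A$ is $\frac12$-dominant: it is the best reply exactly when at least two of the four neighbors play $A$. In this sketch all players revise simultaneously, a stronger assumption than the positive probability revisions in the text; a row plus a column of $A$-players then grows until everybody plays $A$, whereas a $2\times 2$ block of $A$-players stays put.

```python
# Hypothetical four-neighbor game in which A is 1/2-dominant.
U = {("A", "A"): 2, ("A", "B"): 0, ("B", "A"): 0, ("B", "B"): 1}


def payoff(grid, i, j, s):
    """Total payoff of playing s against the four closest neighbors on the torus."""
    n, m = len(grid), len(grid[0])
    nbrs = (grid[(i - 1) % n][j], grid[(i + 1) % n][j],
            grid[i][(j - 1) % m], grid[i][(j + 1) % m])
    return sum(U[(s, t)] for t in nbrs)


def step(grid):
    """Synchronous myopic best reply (ties cannot occur: 2x = 4 - x has no integer root)."""
    return [["A" if payoff(grid, i, j, "A") > payoff(grid, i, j, "B") else "B"
             for j in range(len(grid[0]))] for i in range(len(grid))]


def run(grid, max_rounds=100):
    """Iterate best replies until the profile stops changing."""
    for rounds in range(max_rounds):
        new = step(grid)
        if new == grid:
            return grid, rounds
        grid = new
    return grid, max_rounds


n, m = 5, 7
cross = [["A" if i == 0 or j == 0 else "B" for j in range(m)] for i in range(n)]
final, rounds = run(cross)
print(rounds, all(s == "A" for row in final for s in row))
```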

5. Imitation

Note that in many situations economic agents lack the computing capacity to determine a best response. Further, information costs might constrain them in gathering or processing all the information necessary to play a best response. In a different vein, games are simplified representations of reality: the players might not recognize that they are actually playing a game, might not be aware of the exact payoff structure, or might simply not know which strategies are available. In addition, people usually have a good estimate of how much their neighbors earn or what social status or prestige they enjoy. Under these circumstances players might be prompted to just copy successful behavior and abandon less successful strategies, giving rise to an adjustment rule based on imitation rather than on best response.

As already mentioned, the classic model of KMR is of an imitative nature. Within their setting agents imitate the strategy that has earned the highest average payoff in the previous period. The underlying assumption in their adaptive process is that in each round all possible pairs are formed and agents concentrate on the average payoffs of these pairs. As in the case of best reply learning, KMR's imitative process leads to the adoption of the risk dominant convention in the long run. Robson and Vega-Redondo [12] consider a modification of KMR's framework where agents are randomly matched in each round to play the coordination game and imitate strategies that earn high payoffs. Surprisingly, this process leads to the adoption of the payoff dominant strategy in the long run. The main reason behind this result is that once two agents play the payoff dominant strategy, they will be matched with strictly positive probability and may achieve the highest possible payoff. Under imitation learning all players will from then on adopt the payoff dominant strategy.

To best convey the underlying idea behind Robson and Vega-Redondo's [12] model, we will work with the Imitate the Best Max Rule, IBM. This rule prescribes that players imitate the strategy that has yielded the highest payoff to some player. In Robson and Vega-Redondo's [12] original model players imitate strategies that have on average yielded the highest payoff, giving rise to the Imitate the Best Average Rule, IBA.

The basic insights and the qualitative results are very similar under the two imitation rules. The advantage of IBM is that it allows for a quicker and clearer exposition of them. Further, note that the IBA rule also has a conceptual drawback: if a strategy earns different payoffs to different players, the player with the highest payoff may switch strategies. To see this point, suppose there are three players. Players 1 and 2 use strategy $s$ and earn payoffs of 1 and 0, respectively. Player 3 uses strategy $s'$ and earns a mediocre payoff $x$ with $\frac{1}{2} < x < 1$. In this case, player 1 would switch strategies, because the average payoff of $s'$, $x$, exceeds that of $s$, $\frac{1}{2}$, even though he earns the highest payoff. Obviously, under the IBM rule a player can never abandon the most successful strategy.
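The three-player example can be made concrete in a few lines. The sketch below assumes a mediocre payoff of x = 0.75 for player 3; any value strictly between 1/2 and 1 gives the same outcome. The function names are illustrative. Ties are resolved by Python's `max`, which returns the first maximal element.

```python
from collections import defaultdict


def imitate_best_max(strategies, payoffs):
    """IBM: adopt the strategy that earned the single highest payoff."""
    best = max(range(len(strategies)), key=lambda i: payoffs[i])
    return strategies[best]


def imitate_best_average(strategies, payoffs):
    """IBA: adopt the strategy with the highest average payoff."""
    totals, counts = defaultdict(float), defaultdict(int)
    for s, u in zip(strategies, payoffs):
        totals[s] += u
        counts[s] += 1
    return max(totals, key=lambda s: totals[s] / counts[s])


# Players 1 and 2 use s (payoffs 1 and 0); player 3 uses s' with x = 0.75.
strategies = ["s", "s", "s'"]
payoffs = [1.0, 0.0, 0.75]
print(imitate_best_max(strategies, payoffs))      # s
print(imitate_best_average(strategies, payoffs))  # s'
```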

Let us now consider the model of Robson and Vega-Redondo [12] in more detail. Consider a population of $N$ players, where $N$ is assumed to be even. In each round the population is randomly matched into pairs to play our coordination game. Any way of pairing these players is assumed to be equally likely. Three different kinds of pairs can be formed:

- a pair of two A-players, with a payoff of $a$ to both of them;
- a pair of two B-players, with a payoff of $b$ to both of them;
- a mixed pair with an A- and a B-player, with a payoff of $c$ to the former and a payoff of $d$ to the latter player.

In each round, an agent presented with the opportunity to revise his strategy is assumed to copy the strategy of the agent that earned the highest payoff in the previous round. As above, with probability ϵ the agent ignores the prescription of the adjustment rule and chooses a strategy at random.

First, note that under the IBM rule only the two monomorphic states $\vec{A}$ and $\vec{B}$ are absorbing. To see this point, assume that some agent i earns the highest payoff in the overall population. With positive probability all agents may revise their strategy and will adopt the strategy of agent i. Thus, with positive probability we will reach either of the two monomorphic states.

Now, consider the risk dominant convention $\vec{A}$ and assume that two agents mutate to B. With positive probability, these two agents will be matched and will earn the highest possible payoff of $b$. With positive probability, all agents then receive a revision opportunity and switch to B. Thus, there exists a positive probability path leading to the payoff dominant convention. It follows that $CR(\vec{B}) \leq 2$.

Now, consider the payoff dominant convention $\vec{B}$. To move out of the basin of attraction of $\vec{B}$, we need to reach a state in which it is possible that no (B, B) pair forms. Otherwise, all revising agents would adopt B. Since any pairing of more than $N/2$ B-players necessarily contains a (B, B) pair, we need at least $N/2$ mutations to A, implying $R(\vec{B}) \geq N/2$. It follows that,

Proposition 10 Robson and Vega-Redondo [12]. The state where everybody plays the payoff dominant strategy is unique LRE under the IBM rule and under random matching for $N > 4$.

Thus, the combination of imitation and a random interaction framework yields selection of the payoff dominant convention. This is remarkable because KMR select the risk dominant convention when interaction takes the form of a round robin tournament. It suggests that imitation together with an “appropriate” interaction structure might allow agents to coordinate on the efficient convention.
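The mechanism behind Proposition 10 can be illustrated with one round of random matching in a population of six players, after which every agent revises under the IBM rule. This is a sketch under assumptions:

- the payoffs a = 3, b = 4, c = 2, d = 0 are hypothetical (A is risk dominant since a − d > b − c, while B is payoff dominant);
- all agents are assumed to revise at once;
- the helpers `random_pairing`, `earned`, and `ibm_all_revise` are illustrative names.

```python
import random

# Hypothetical payoffs: u(A,A)=a, u(B,B)=b, u(A,B)=c to the A-player, u(B,A)=d to the B-player.
PAYOFF = {("A", "A"): 3, ("B", "B"): 4, ("A", "B"): 2, ("B", "A"): 0}


def random_pairing(n, rng):
    """Uniformly random perfect matching of an even number n of players."""
    order = list(range(n))
    rng.shuffle(order)
    return [(order[k], order[k + 1]) for k in range(0, n, 2)]


def earned(strategies, pairing):
    """Payoff of each player in one round, given who is matched with whom."""
    u = [0] * len(strategies)
    for i, j in pairing:
        u[i] = PAYOFF[(strategies[i], strategies[j])]
        u[j] = PAYOFF[(strategies[j], strategies[i])]
    return u


def ibm_all_revise(strategies, pairing):
    """Every player revises and copies the strategy with the top payoff."""
    u = earned(strategies, pairing)
    best = max(range(len(strategies)), key=lambda i: u[i])
    return [strategies[best]] * len(strategies)


# Risk dominant convention plus two B-mutants (players 0 and 1), N = 6.
state = ["B", "B", "A", "A", "A", "A"]
print(ibm_all_revise(state, [(0, 1), (2, 3), (4, 5)]))  # mutants matched: all B
print(ibm_all_revise(state, [(0, 2), (1, 3), (4, 5)]))  # mutants apart: all A

# How often are the two mutants paired? A quick Monte Carlo estimate.
rng = random.Random(0)
trials = 20000
hits = sum((0, 1) in p or (1, 0) in p
           for p in (random_pairing(6, rng) for _ in range(trials)))
print(hits / trials)
```

The Monte Carlo estimate should be close to 1/(N − 1) = 1/5. This is the probability that two given players are paired under a uniformly random matching.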

We will now explore this idea in more detail within the local interactions framework of Ellison [9]. It will turn out to be useful to normalize the payoff structure of the underlying game. Following Eshel, Samuelson, and Shaked [13], without loss of generality one can transform payoffs to obtain

$$\begin{array}{c|cc} & A & B \\ \hline A & \alpha, \alpha & \beta, 0 \\ B & 0, \beta & 1, 1 \end{array}$$

where $\alpha = \frac{a-d}{b-d}$ and $\beta = \frac{c-d}{b-d}$. Note that for coordination games we have $\alpha > 0$ and $\beta < 1$. Further, payoff dominance of B translates into $\alpha < 1$, and risk dominance of A now reads as $\alpha + \beta > 1$.
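The normalization is a positive affine transformation of payoffs. It therefore leaves unchanged every comparison between payoffs earned in the same number of interactions, and hence the IBM rule. The sketch below assumes the mapping u ↦ (u − d)/(b − d) applied to the game with payoffs a, b, c, d, and uses the hypothetical values a = 3, b = 4, c = 2, d = 0. The function names are illustrative.

```python
from fractions import Fraction


def normalize(a, b, c, d):
    """Map (a, b, c, d) to (alpha, beta) via u -> (u - d) / (b - d).

    Requires b > d. Afterwards u(A,A)=alpha, u(A,B)=beta, u(B,A)=0, u(B,B)=1.
    """
    if b <= d:
        raise ValueError("need b > d")
    scale = Fraction(b - d)
    return Fraction(a - d) / scale, Fraction(c - d) / scale


def classify(alpha, beta):
    """The three conditions from the text, in normalized form."""
    return {
        "coordination game": alpha > 0 and beta < 1,
        "B payoff dominant": alpha < 1,
        "A risk dominant": alpha + beta > 1,
    }


alpha, beta = normalize(3, 4, 2, 0)
print(alpha, beta)  # 3/4 1/2
print(classify(alpha, beta))
```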

Alós-Ferrer and Weidenholzer [14] consider a setting where agents use the IBM rule to update their strategies. They find that whether a risk dominant or a payoff dominant convention will be established in the long run depends on the interaction radius of the individual agents. Formally, define the interaction neighborhood $K(i) = \{i-k, \dots, i-1, i+1, \dots, i+k\}$ (modulo $N$) of a player $i$ as the set of all of his neighbors. Thus, if $s^t = (s_1^t, \dots, s_N^t)$ is the profile of strategies adopted by players at time $t$, the total payoff for player $i$ is

$$U_i(s^t) = \sum_{j \in K(i)} u(s_i^t, s_j^t).$$

Assume that each player observes the strategies played and the payoffs received by himself and all his neighbors. When given a revision opportunity, an agent is assumed to adopt the strategy that has earned the highest payoff in his interaction neighborhood in the previous period.
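The payoff and revision rules just described can be written down in a few lines. The sketch below rests on several assumptions, all made for illustration only:

- payoffs are normalized as u(A,A) = α, u(A,B) = β, u(B,A) = 0, u(B,B) = 1, with the hypothetical values α = 0.875 and β = 0.25, so that α + β > 1 and α < 1;
- every player revises simultaneously;
- ties are broken in favor of a player's current strategy;
- the names `total_payoffs` and `ibm_step` are illustrative.

The optional parameter `m` lets a player observe more players than he interacts with. By default he observes exactly his interaction neighborhood.

```python
# Normalized coordination game (hypothetical values):
# u(A,A) = alpha, u(A,B) = beta, u(B,A) = 0, u(B,B) = 1.
ALPHA, BETA = 0.875, 0.25


def u(own, other):
    """Payoff to a player using `own` against an opponent using `other`."""
    if own == "A":
        return ALPHA if other == "A" else BETA
    return 0.0 if other == "A" else 1.0


def total_payoffs(state, k):
    """Each player i plays the k nearest players on either side (modulo N)."""
    n = len(state)
    return [sum(u(state[i], state[(i + r) % n]) + u(state[i], state[(i - r) % n])
                for r in range(1, k + 1))
            for i in range(n)]


def ibm_step(state, k, m=None):
    """All players revise at once under the IBM rule.

    Player i observes himself and the m nearest players on either side
    (m = k by default) and copies the strategy with the highest observed
    payoff; ties are broken in favor of his current strategy (assumption).
    """
    m = k if m is None else m
    n = len(state)
    pay = total_payoffs(state, k)
    new = []
    for i in range(n):
        seen = [(i + r) % n for r in range(-m, m + 1)]
        best = max(pay[j] for j in seen)
        if any(state[j] == state[i] and pay[j] == best for j in seen):
            new.append(state[i])
        else:
            new.append(next(state[j] for j in seen if pay[j] == best))
    return new


# Two-neighbor model (k = 1), N = 10.
s1 = ["A", "A"] + ["B"] * 8  # two adjacent A-players among B-players
s2 = ["B", "B"] + ["A"] * 8  # two adjacent B-players among A-players
print(ibm_step(s1, k=1) == s1)          # True: nobody switches
print(ibm_step(s2, k=1) == ["A"] * 10)  # True: the B-players revert
```

With k = 1, two adjacent A-players in a population of B-players are not dislodged, whereas two adjacent B-players among A-players revert to A.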

Alós-Ferrer and Weidenholzer [14] find that when players only interact with their two closest neighbors, the selection of the risk dominant strategy persists. The logic behind this result is the following. One can show that there is a large number of absorbing states, where clusters of A- and B-players alternate, and that these states can be connected to each other through chains of single mutations.

Let us consider this point in more detail. In order to shorten our exposition, we only consider the case where

holds and remark that if

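similar results can be obtained. So, consider the efficient convention $\vec{B}$ and assume that two adjacent players mutate to A. The B-players next to the A-players earn a payoff of 1, all B-players further away earn a payoff of 2, and the two A-players earn a payoff of $\alpha + \beta$. Since $1 < \alpha + \beta < 2$, the A-players observe no B-player doing better than themselves, while each B-player next to them observes a B-player earning 2. Thus, under the IBM rule none of these players will switch, and this state is absorbing. Now, we can reach $\vec{A}$ by a chain of single mutations. Hence, we have $CR^*(\vec{A}) \leq 2$.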

Conversely, consider the risk dominant convention $\vec{A}$ and assume that two adjacent agents mutate to B. Now the B-players earn a payoff of 1, whereas the inner and the outer A-players earn payoffs of $\alpha + \beta$ and $2\alpha$, respectively. Hence, none of the A-players will switch and, since $\alpha + \beta > 1$, the B-players will revert to A. Thus, two mutations are not enough to leave the risk dominant convention, establishing $R(\vec{A}) \geq 3$. It follows that,

Proposition 11 Alós-Ferrer and Weidenholzer [14]. In the two neighbor circular city model the risk dominant strategy is the unique LRE under the IBM rule.

However, for larger neighborhoods payoff dominant strategies might be LRE. To see this point, consider the $2k$-neighbors model and a state with $2k$ adjacent B-players.

The two middle B-players earn a payoff of $2k-1$, since each of them has exactly one A-player among his $2k$ neighbors. The boundary

A-players obtain a payoff of at most

provided that

. The boundary

A-players observe the payoff of the middle

B-players and hence will switch strategy if

.

In the next period, at least four middle B-players earn a payoff of at least $2k-1$. Iterating this argument, we reach the efficient convention $\vec{B}$.

This implies that for

any state with $2k$ adjacent B-players lies in the basin of attraction of $\vec{B}$. Note that we can move into this basin with $2k$ mutations, implying $CR(\vec{B}) \leq 2k$. To move out of the basin of attraction of $\vec{B}$, we need to eliminate all B-clusters of size $2k$. This requires at least one mutation per cluster, i.e., $R(\vec{B}) \geq N/(2k)$. Thus, $R(\vec{B}) > CR(\vec{B})$ holds for $N > 4k^2$. Hence,

Proposition 12 Alós-Ferrer and Weidenholzer [14]. In the -neighbor circular city model a payoff dominant strategy is the unique LRE under the IBM rule for in a sufficiently large population. This implies that efficient conventions are easier to establish in larger neighborhoods. Furthermore, for every coordination game there exists a threshold neighborhood size such that an efficient equilibrium is LRE.
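The mutation counts in these radius arguments rest on a simple covering fact. On a circle of N players, leaving no cluster of s adjacent B-players requires at least ⌈N/s⌉ mutations. The brute-force sketch below checks this for small N and a few cluster sizes s. The function names are illustrative.

```python
from itertools import combinations
from math import ceil


def breaks_all_runs(n, a_positions, s):
    """True if no s consecutive players on a circle of n are all B."""
    a_set = set(a_positions)
    return all(any((start + t) % n in a_set for t in range(s))
               for start in range(n))


def min_mutations(n, s):
    """Smallest number of B -> A mutations leaving no run of s adjacent B's."""
    for count in range(n + 1):
        if any(breaks_all_runs(n, combo, s)
               for combo in combinations(range(n), count)):
            return count


for n in range(3, 13):
    for s in (2, 3, 4):
        assert min_mutations(n, s) == ceil(n / s)
print(min_mutations(12, 3))  # 4
```

Placing one A-player every s positions attains the bound, so the count is tight.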

Alós-Ferrer and Weidenholzer [15] consider a more general framework which applies to arbitrary networks. They show that if the information available (slightly) extends beyond the interaction neighborhood, players coordinate on payoff dominant equilibria. The idea behind these “information spillovers” is that agents who routinely interact with each other also exchange information on what is going on in their respective interaction neighborhoods. We will now lay out the main ideas of Alós-Ferrer and Weidenholzer's [15] model within the $2k$-neighbors model of Ellison [9].

Alós-Ferrer and Weidenholzer [15] make a clear distinction between the interaction neighborhood and the information neighborhood. Players play the game against players in their interaction neighborhood but receive information about the pattern of play from their information neighborhood. As above, the interaction neighborhood of player $i$ is given by $K(i) = \{i-k, \dots, i-1, i+1, \dots, i+k\}$ (modulo $N$). The information neighborhood of player $i$ is assumed to consist of himself and his $2m$ nearest neighbors, with $m \geq k$, i.e., $M(i) = \{i-m, \dots, i+m\}$ (modulo $N$). Players are assumed to always know what is going on in their interaction neighborhood, i.e., $K(i) \subseteq M(i)$. When deciding about their future behavior, players consider the pattern of play in their information neighborhood. According to the IBM rule, a player adopts a strategy that has earned the highest payoff in his information neighborhood in the previous period. So, when revising strategies, players consider not only what is happening within their interaction neighborhood but also the relative success of players who are not direct opponents.

Assume now that players always receive information from beyond their interaction neighborhood. So

and

. The most important feature of this setup is that once payoff dominant outcomes are established somewhere, they can spread contagiously. This is similar to the spread of risk dominant strategies in Ellison's [9] best-reply local interaction model.

The main reason for this result is that any state with three adjacent B-players lies in the basin of attraction of $\vec{B}$. To see this, consider any state with three adjacent B-players. In the worst case they are surrounded by A-players.

The inner B-player now earns a payoff of 2, which is the highest possible payoff. All B-players, and the boundary A-players up to a distance of $m$ from the inner B-player, observe that B earns this maximum payoff. Hence, the B-players will retain their strategy and the boundary A-players will switch to B. In the next step the “new” boundary players will also change to B, and so forth. In this manner B will extend to the whole population and we will eventually reach the state $\vec{B}$. Thus, three mutations to B are sufficient for a transition from $\vec{A}$ to $\vec{B}$. Furthermore, from any hypothetical non-monomorphic state in an absorbing set, three mutations also suffice for a transition to $\vec{B}$. Hence, we have $CR(\vec{B}) \leq 3$.

By the observation above, to leave the basin of attraction of $\vec{B}$ we have to destabilize at least every B-cluster of size three. Hence, we need at least $N/3$ mutations, implying that $R(\vec{B}) \geq N/3$. For $N > 9$, $R(\vec{B}) > CR(\vec{B})$ holds, and we have that,

Proposition 13 Alós-Ferrer and Weidenholzer [15]. In the -circular city model with information neighborhood the payoff dominant convention is the unique LRE under the IBM rule in a sufficiently large population.

In fact, Alós-Ferrer and Weidenholzer [15] provide an even more general result which also applies to non-regular networks. Whenever information extends beyond the interaction neighborhood, the payoff dominant convention is unique LRE, provided that the number of disjoint neighborhoods of a network exceeds the number of players in the smallest neighborhood. The intuition behind this result is that once the efficient convention is played in some neighborhood (including the smallest one), it will spread to the entire population. Conversely, to upset the efficient convention it has to be destabilized in every disjoint neighborhood.
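The contagion argument for information spillovers can be simulated in the two-neighbor case. This is a sketch under assumptions:

- hypothetical normalized payoffs α = 0.875 and β = 0.25;
- interaction radius k = 1;
- simultaneous revision of all players;
- ties broken in favor of the current strategy;
- `step` and `run` are illustrative names.

The simulation starts from three adjacent B-players among A-players in a population of twelve. The cluster stays frozen when players observe only their interaction neighborhood (m = 1). It spreads to the whole population when players observe one more player on each side (m = 2).

```python
# Hypothetical normalized payoffs: u(A,A)=alpha, u(A,B)=beta, u(B,A)=0, u(B,B)=1.
ALPHA, BETA = 0.875, 0.25


def u(own, other):
    """Payoff to a player using `own` against an opponent using `other`."""
    if own == "A":
        return ALPHA if other == "A" else BETA
    return 0.0 if other == "A" else 1.0


def step(state, k, m):
    """Simultaneous IBM revision: interact within radius k, observe within radius m."""
    n = len(state)
    pay = [sum(u(state[i], state[(i + r) % n]) + u(state[i], state[(i - r) % n])
               for r in range(1, k + 1))
           for i in range(n)]
    new = []
    for i in range(n):
        seen = [(i + r) % n for r in range(-m, m + 1)]
        best = max(pay[j] for j in seen)
        keep = any(state[j] == state[i] and pay[j] == best for j in seen)
        # With two strategies, a player who does not keep his strategy switches.
        new.append(state[i] if keep else ("B" if state[i] == "A" else "A"))
    return new


def run(state, k, m, max_steps=100):
    """Iterate until a fixed point is reached (or max_steps is exhausted)."""
    for _ in range(max_steps):
        nxt = step(state, k, m)
        if nxt == state:
            return state
        state = nxt
    return state


start = ["B", "B", "B"] + ["A"] * 9  # three adjacent B-players, N = 12
print(run(start, k=1, m=1) == start)       # True: the cluster stays as it is
print(run(start, k=1, m=2) == ["B"] * 12)  # True: B takes over the population
```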
