Integrated System for Auto-Registered Hyperspectral and 3D Structure Measurement at the Point Scale

Abstract

1. Introduction

2. Background and Prototype

2.1. Background

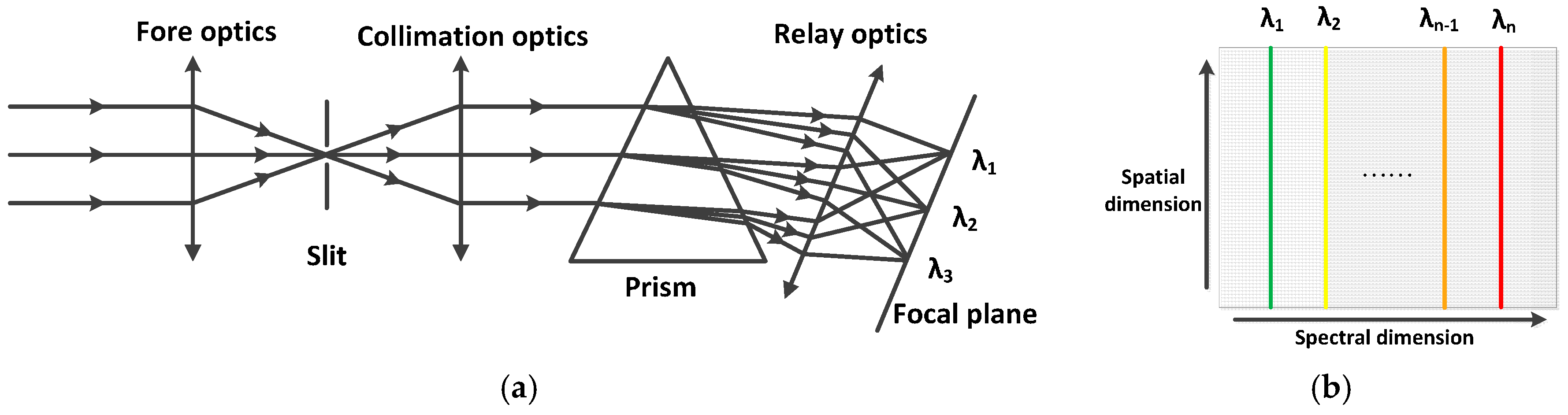

2.1.1. Principle of Prism Spectrometer

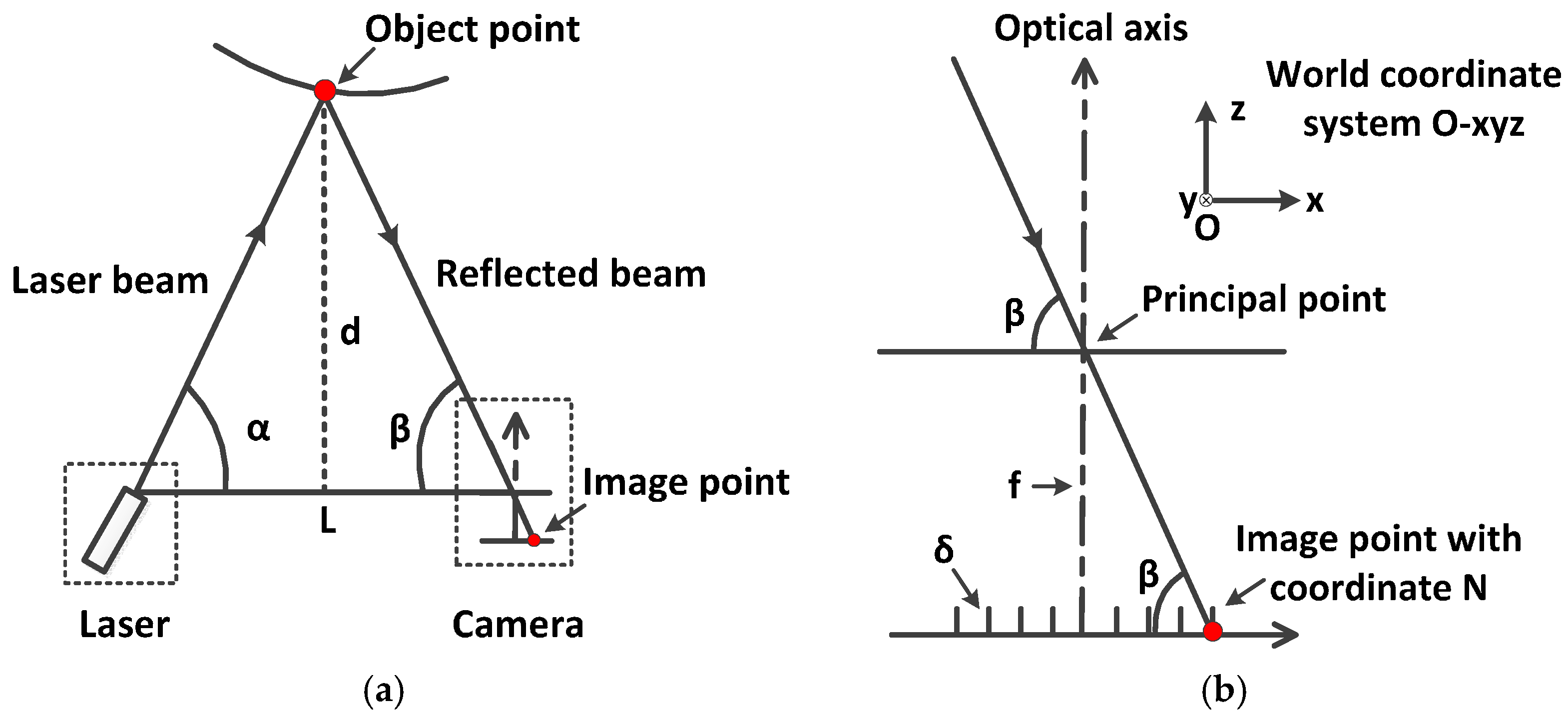

2.1.2. Principle of Laser Triangulation Ranging

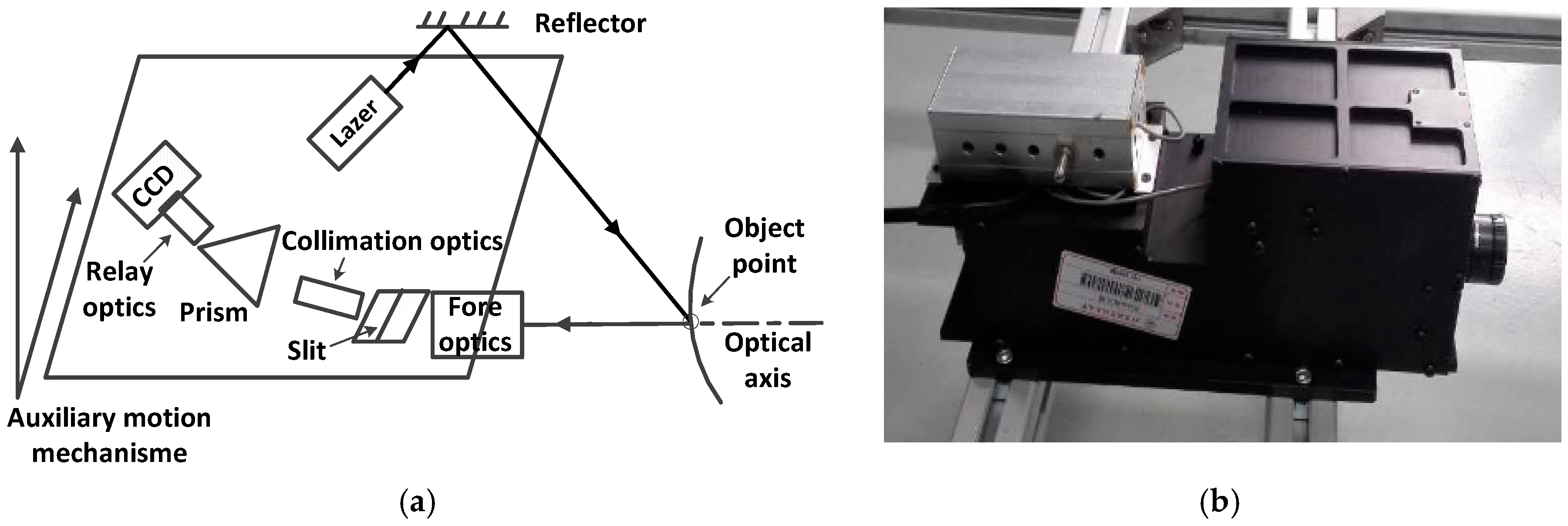

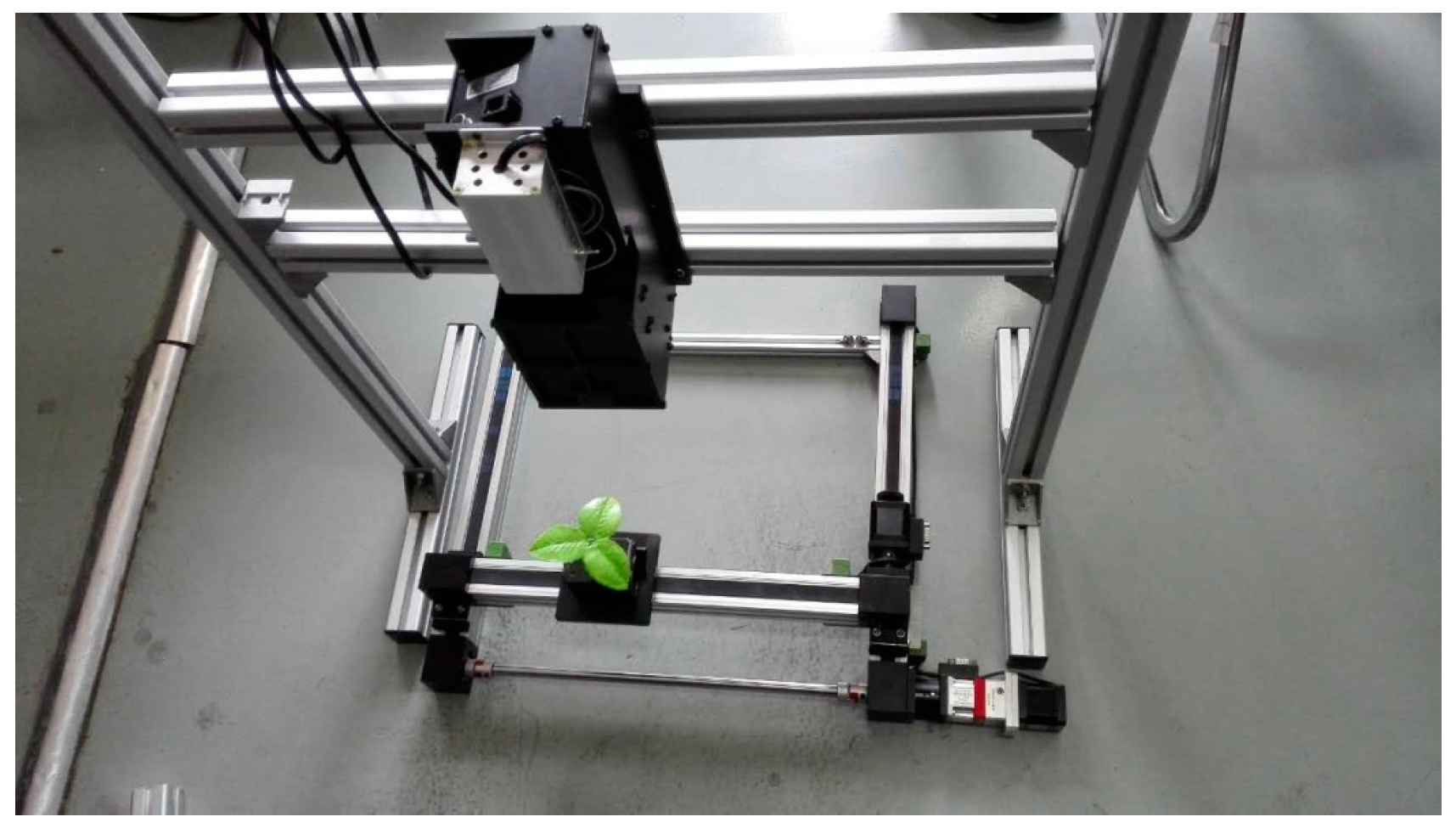

2.2. Prototype Design

- common optical path design of spectral dispersion and laser triangulation ranging;

- separation of spectral dispersion and laser triangulation ranging;

- extraction of the auto-registered data.

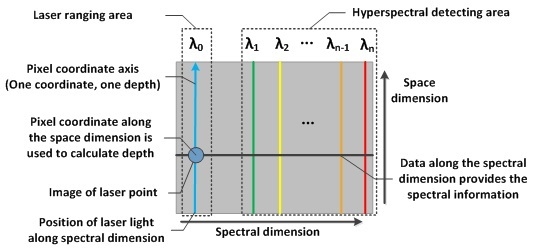

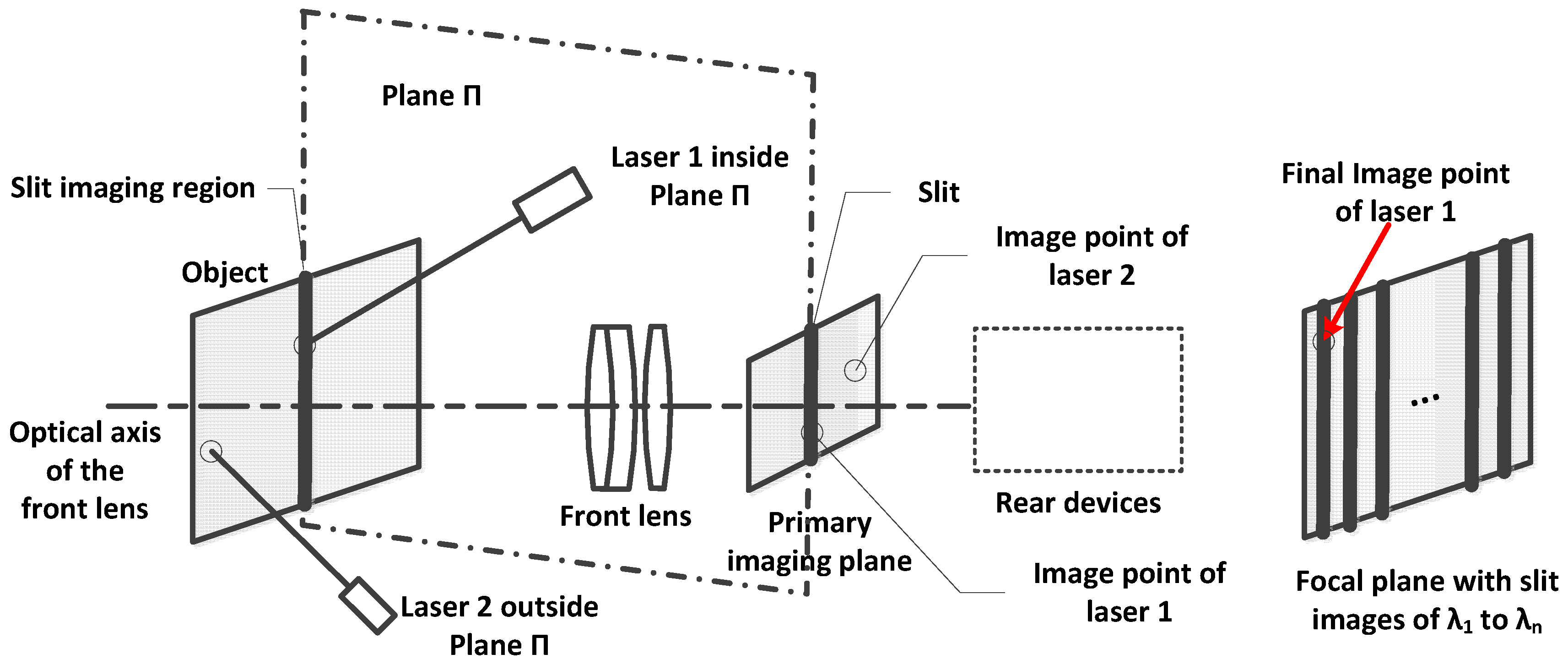

2.2.1. Common Optical Path Design

2.2.2. Separation of Spectral Dispersion and Laser Triangulation Ranging

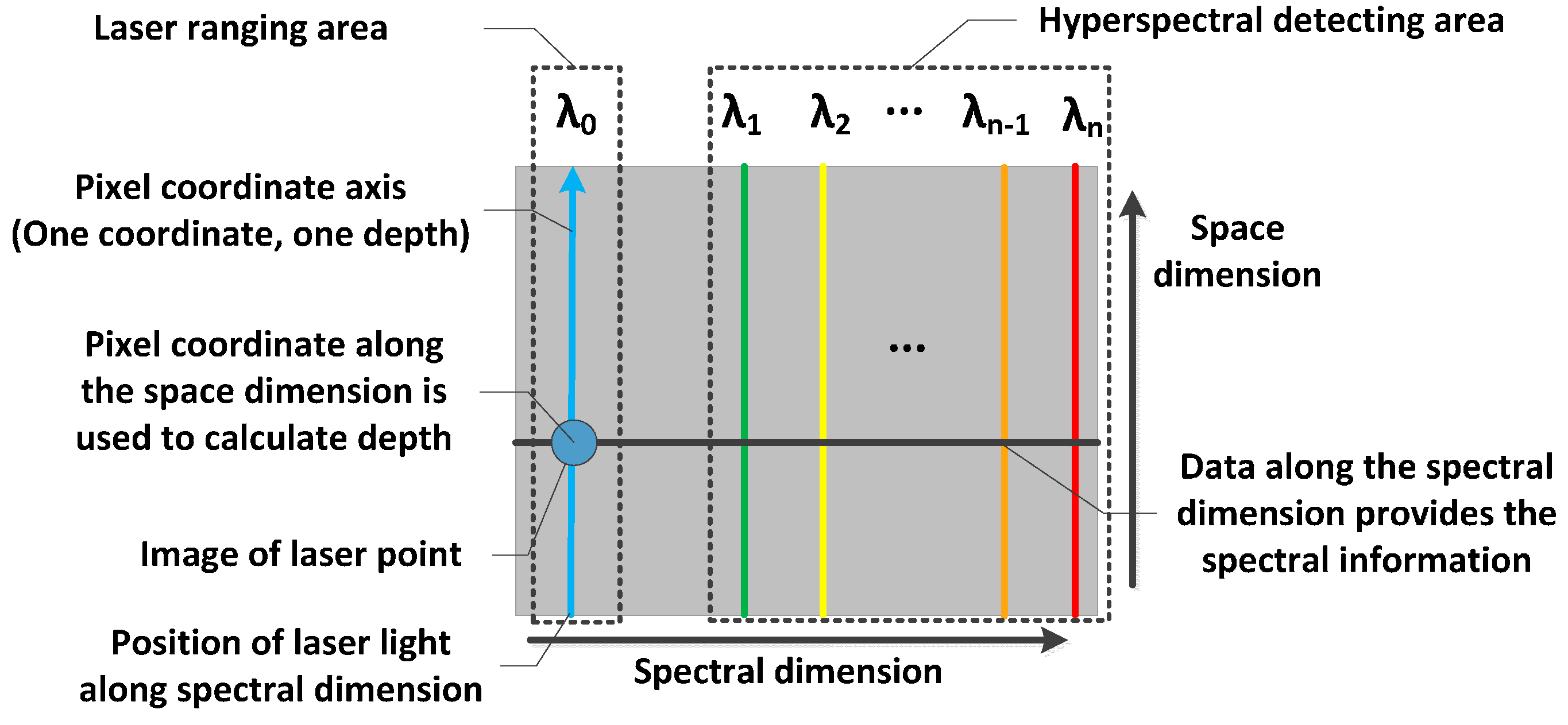

2.2.3. Extraction of the Auto-Registered Data

- (a) The depth value d is calculated with Formula (4) and is designated as the z coordinate in the world coordinate system O-xyz.

- (b) The x and y coordinates are recorded by the auxiliary motion mechanism. Together with d, they give the 3D coordinates of the object point.

- (c) With the pixel coordinate fixed along the spectral dimension, the data on the same line of the focal plane are extracted along the spectral dimension, as shown in Figure 5. These data form the spectrum of the exact object point illuminated by the laser.
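The three extraction steps above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the frame layout (rows along the slit, columns along the spectral dimension), the column bands `laser_cols` and `spec_cols`, the intensity-weighted centroid used to locate the laser spot at sub-pixel precision, and the polynomial `depth_poly` that stands in for Formula (4) and the depth calibration are all hypothetical.

```python
import numpy as np

# Hypothetical frame layout: axis 0 = spatial rows along the slit,
# axis 1 = spectral columns. The 450 nm laser is assumed to fall in its own
# column band (laser_cols), separate from the 500-900 nm object spectrum (spec_cols).


def spot_centroid(frame, laser_cols):
    """Sub-pixel row of the laser spot via an intensity-weighted centroid."""
    band = frame[:, laser_cols[0]:laser_cols[1]].astype(float)
    profile = band.sum(axis=1)          # collapse the laser band into a row profile
    profile = profile - profile.min()   # crude background removal
    total = profile.sum()
    if total <= 0:
        raise ValueError("no laser spot found in the laser band")
    rows = np.arange(profile.size)
    return float((rows * profile).sum() / total)


def extract_point(frame, laser_cols, spec_cols, depth_poly, stage_xy):
    """Return ((x, y, z), spectrum) for one object point.

    depth_poly: hypothetical polynomial (highest power first) mapping the spot
        row to depth in mm; a stand-in for Formula (4) plus depth calibration.
    stage_xy: (x, y) in mm as recorded by the auxiliary motion mechanism.
    """
    row = spot_centroid(frame, laser_cols)
    z = float(np.polyval(depth_poly, row))       # step (a): depth becomes z
    x, y = stage_xy                                # step (b): x, y from the stage
    r = int(round(row))                            # nearest focal-plane line
    spectrum = frame[r, spec_cols[0]:spec_cols[1]].astype(float)  # step (c)
    return (x, y, z), spectrum


# Synthetic example frame: laser spot centred on row 30, spectrum on the same row.
frame = np.zeros((64, 100))
frame[29:32, 0:10] = np.array([[50.0], [100.0], [50.0]])
frame[30, 20:100] = np.linspace(1.0, 2.0, 80)

(x, y, z), spectrum = extract_point(frame, (0, 10), (20, 100), [0.5, 235.0], (1.0, 2.0))
print(x, y, z, spectrum.shape)
```

Because depth and spectrum are read from the same frame and the same laser spot, the 3D point and its spectrum are registered by construction; no separate image-to-point-cloud matching step is needed in this sketch.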

2.3. Prototype Calibration

2.3.1. Spectral Calibration

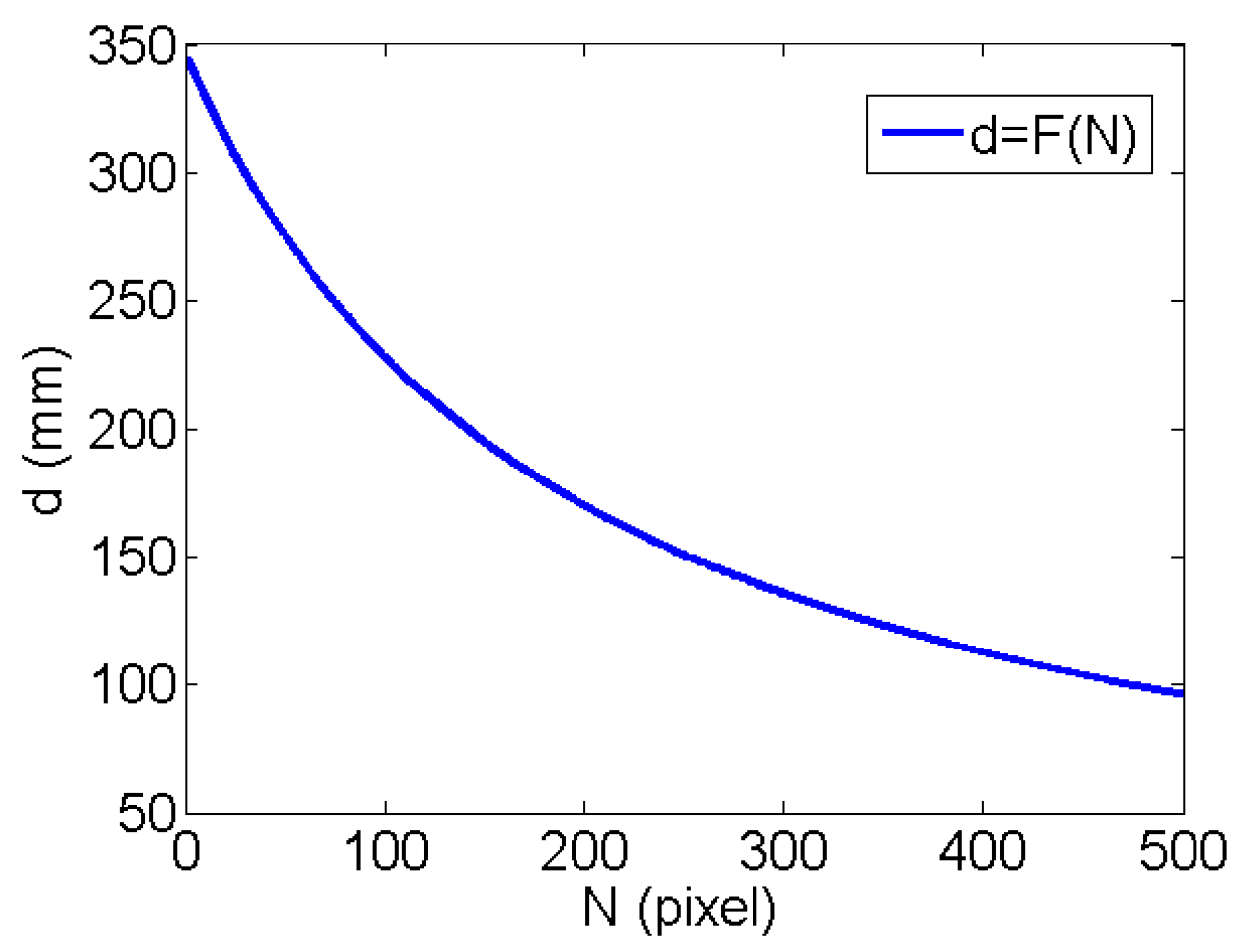

2.3.2. Depth Calibration

3. Experiment and Results

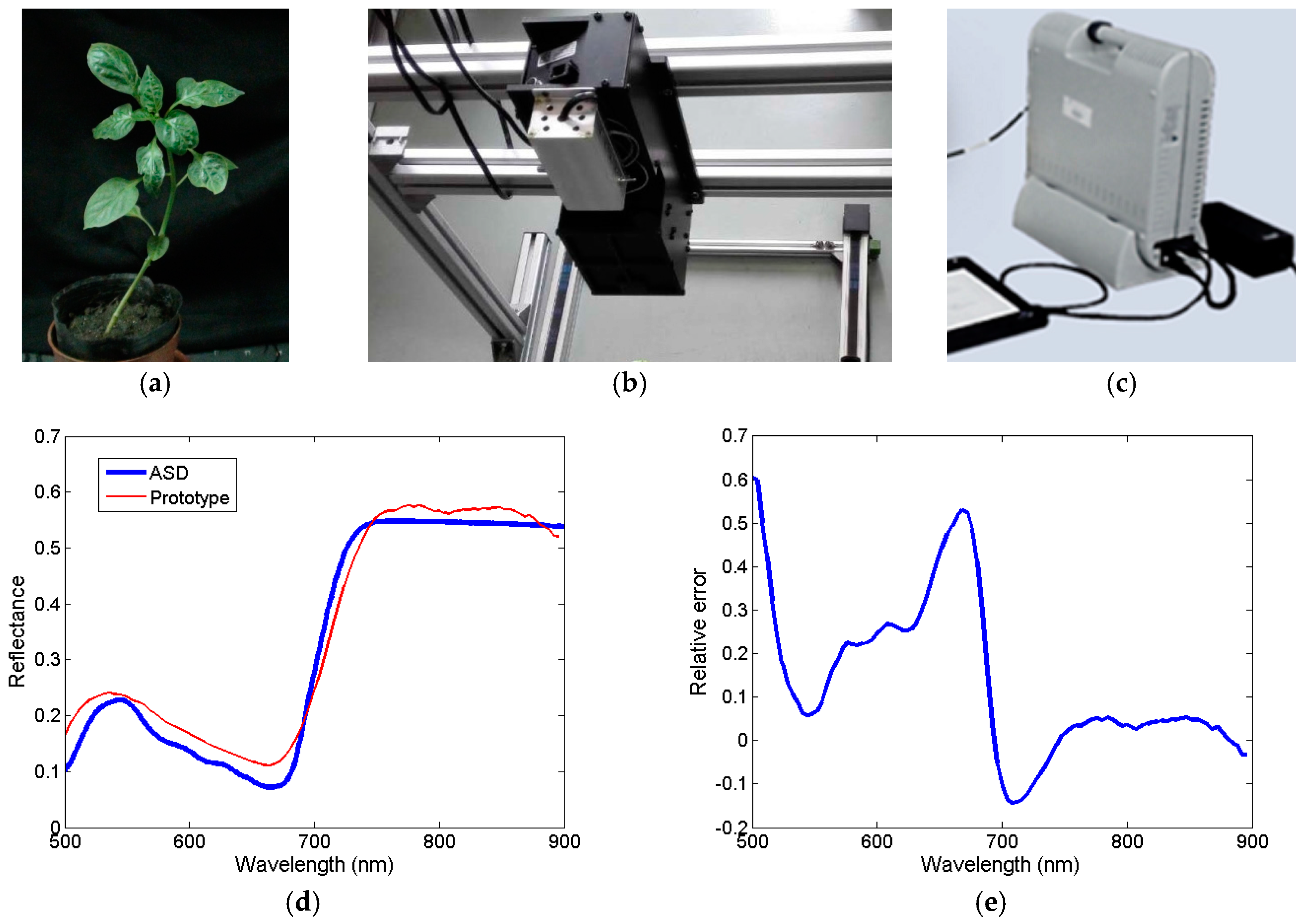

3.1. Test Experiment

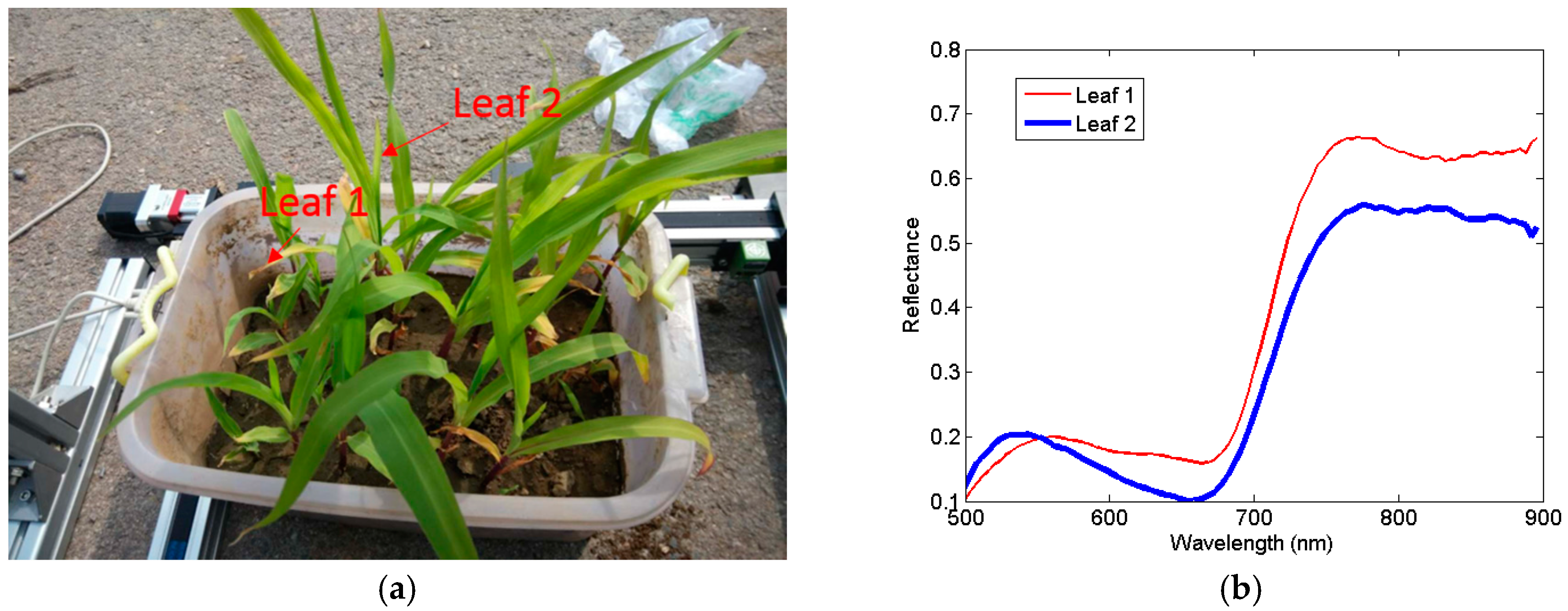

3.1.1. Spectral Measurement

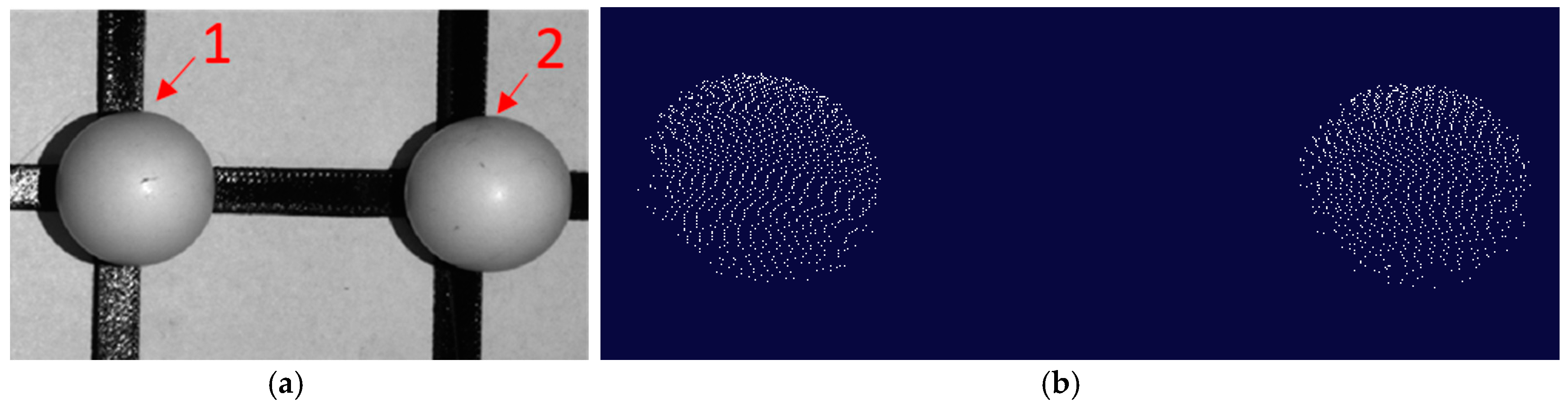

3.1.2. 3D Structure Measurement

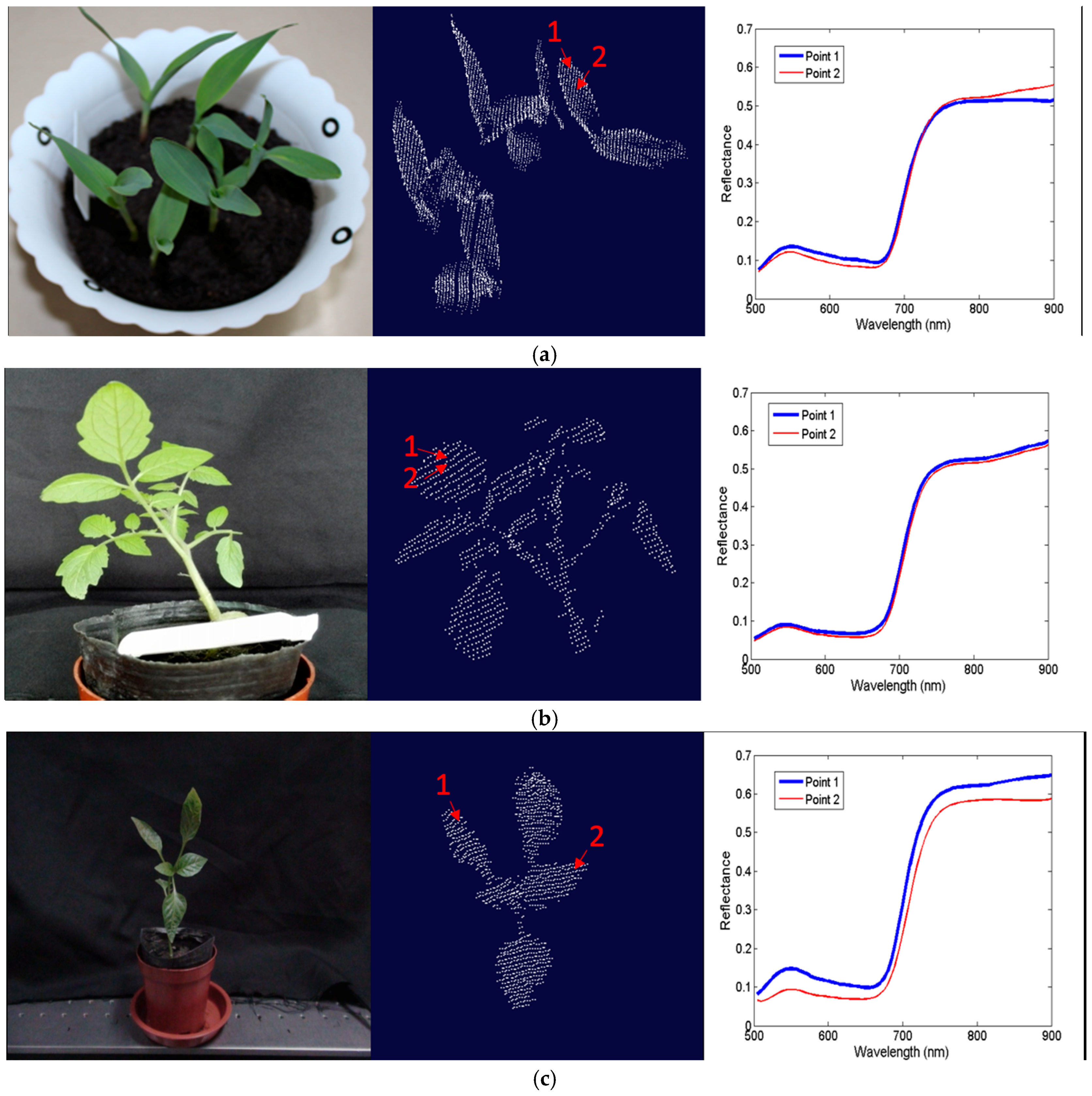

3.2. Vegetation Measurement

4. Discussion

4.1. Application Ability of the Prototype

4.2. Limitations of This Study

4.3. Future Work to Apply the Instrument in Precision Agriculture

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Item | Parameters |
|---|---|
| Model | SP2300 |
| Scan range | 0–1400 nm |
| Wavelength accuracy | ±0.2 nm |
| Repeatability | ±0.05 nm |
| Minimum drive step size | 0.005 nm |

| Slit Width | Spectral Range | Spectral Resolution | Working Distance (WD) | Pixel Size at WD |
|---|---|---|---|---|
| 100 µm | 500–900 nm | 4–8 nm @ 500–600 nm; 8–15 nm @ 600–900 nm | 150–300 mm | 0.036–0.072 mm |

| Depth Accuracy | X and Y Positioning Accuracy | Laser Wavelength | Laser Point Size at WD | Measuring Speed |
|---|---|---|---|---|
| ±0.5 mm | ±0.03 mm | 450 nm | 0.8–1.2 mm | 10 points/s |

| Parameters | Sphere 1 | Sphere 2 |
|---|---|---|
| Measurement distance | 250 mm | 250 mm |
| Nominal diameter | 22.20 mm | 22.20 mm |
| Measured diameter | 21.87 mm | 21.84 mm |
| Error | −0.33 mm | −0.36 mm |
| Number of measured points | 3532 | 3757 |
| Time consumed | 370 s | 400 s |
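The Error row of the sphere table is the measured minus the nominal diameter, and dividing the number of measured points by the time consumed gives the effective measuring rate. A short Python check of that arithmetic, using only the values in the table (the dictionary keys and the `summarize` helper are illustrative names, not from the original):

```python
# Values copied from the sphere-measurement table above (mm, points, seconds).
spheres = {
    "Sphere 1": {"nominal": 22.20, "measured": 21.87, "points": 3532, "time_s": 370},
    "Sphere 2": {"nominal": 22.20, "measured": 21.84, "points": 3757, "time_s": 400},
}


def summarize(s):
    """Return (diameter error in mm, effective rate in points/s) for one sphere."""
    error = round(s["measured"] - s["nominal"], 2)  # negative means undersized
    rate = s["points"] / s["time_s"]                 # points per second
    return error, rate


for name, s in spheres.items():
    error, rate = summarize(s)
    print(f"{name}: error {error:+.2f} mm, {rate:.2f} points/s")
```

Both spheres come out at roughly 9.4 to 9.5 points/s, slightly below the Measuring Speed of 10 points/s listed in the parameter table.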

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhao, H.; Shi, S.; Gu, X.; Jia, G.; Xu, L. Integrated System for Auto-Registered Hyperspectral and 3D Structure Measurement at the Point Scale. Remote Sens. 2017, 9, 512. https://doi.org/10.3390/rs9060512
