Multiple Constraints Based Robust Matching of Poor-Texture Close-Range Images for Monitoring a Simulated Landslide

Abstract

1. Introduction

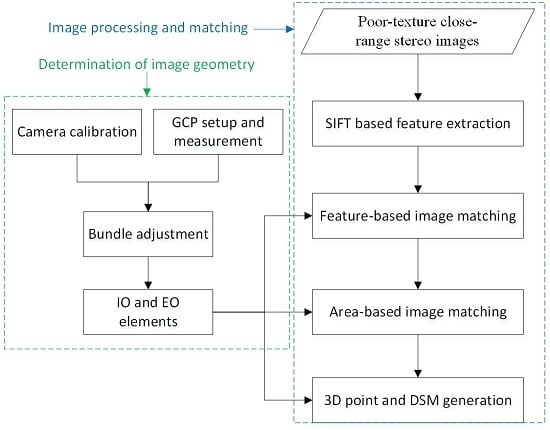

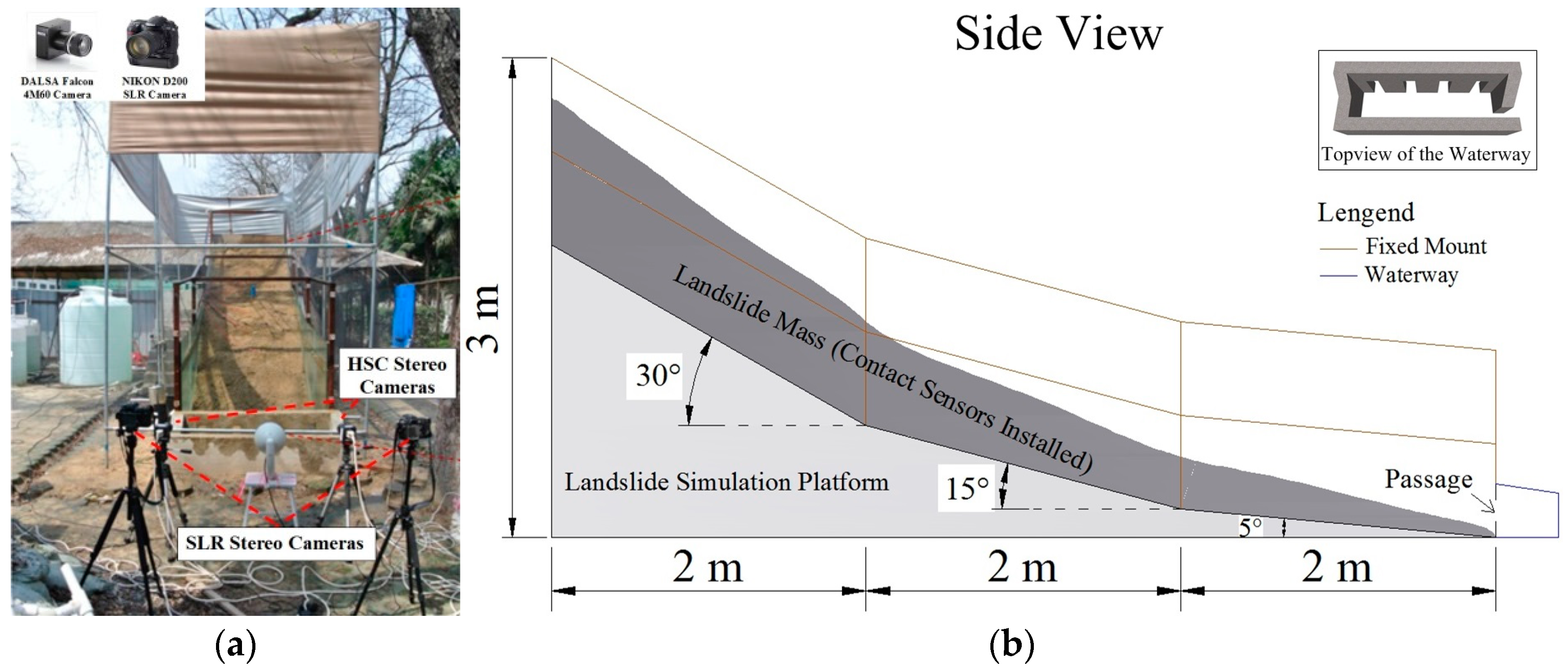

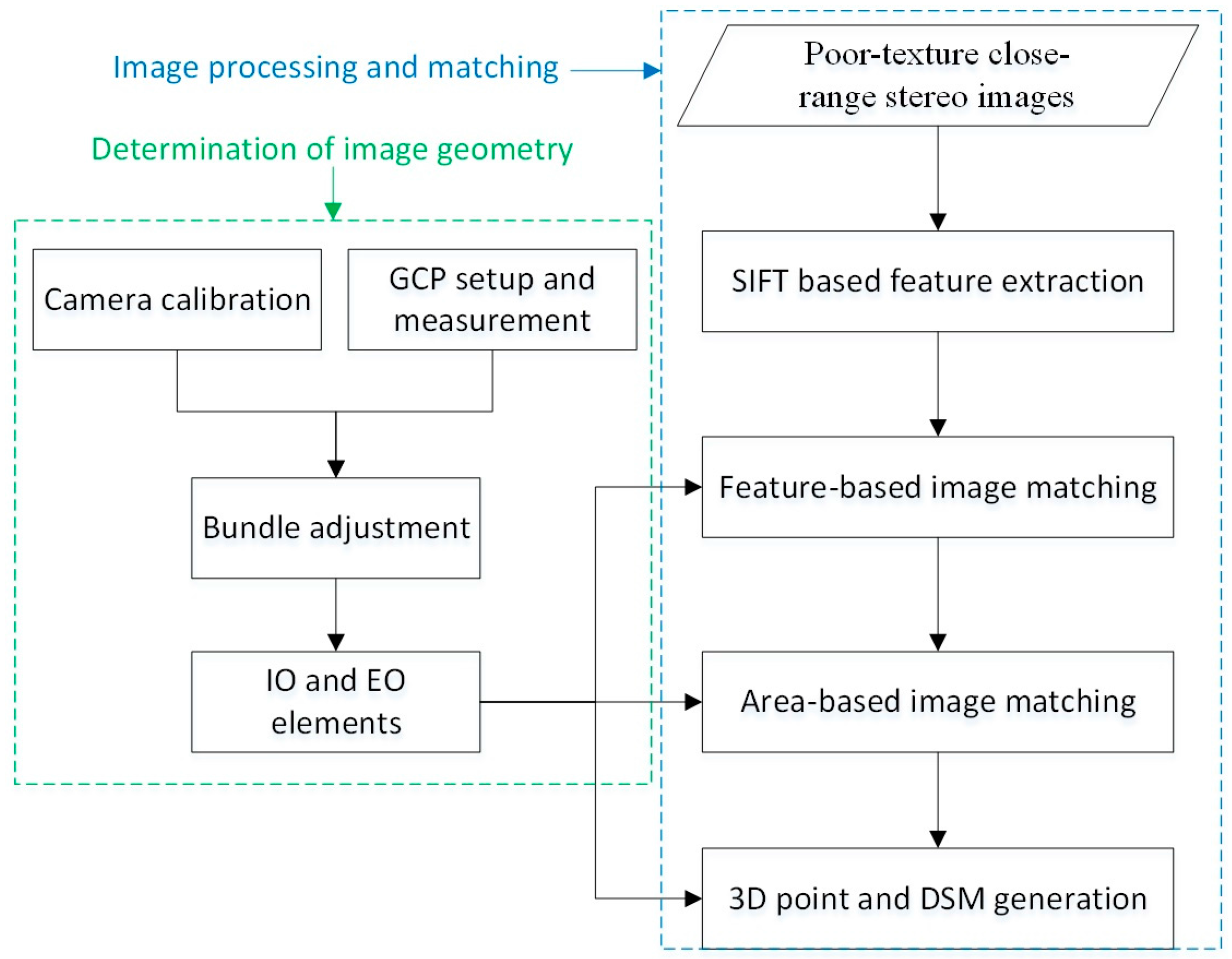

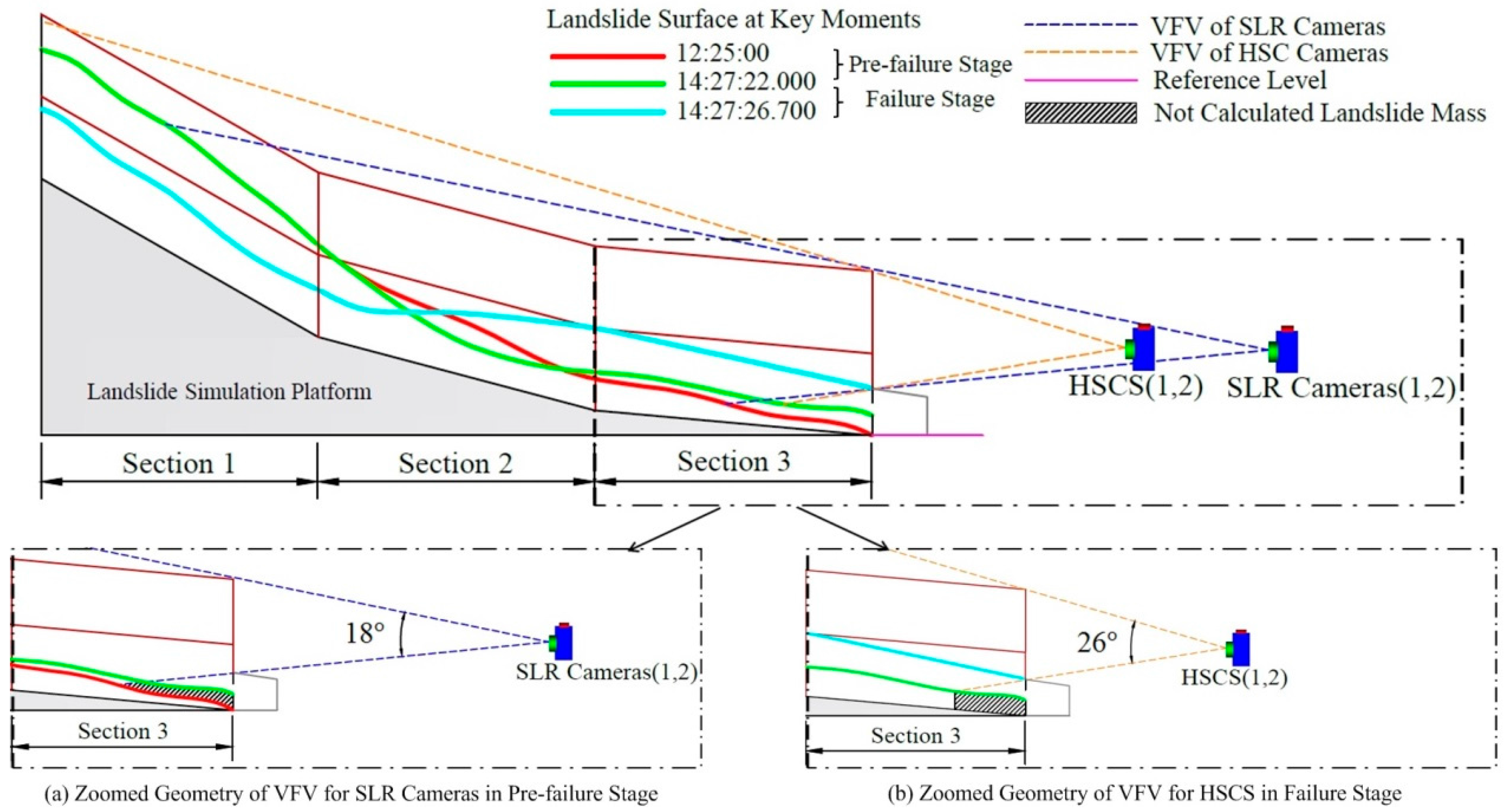

2. Landslide Platform Set Up and Simulation Experiment

3. Methodology

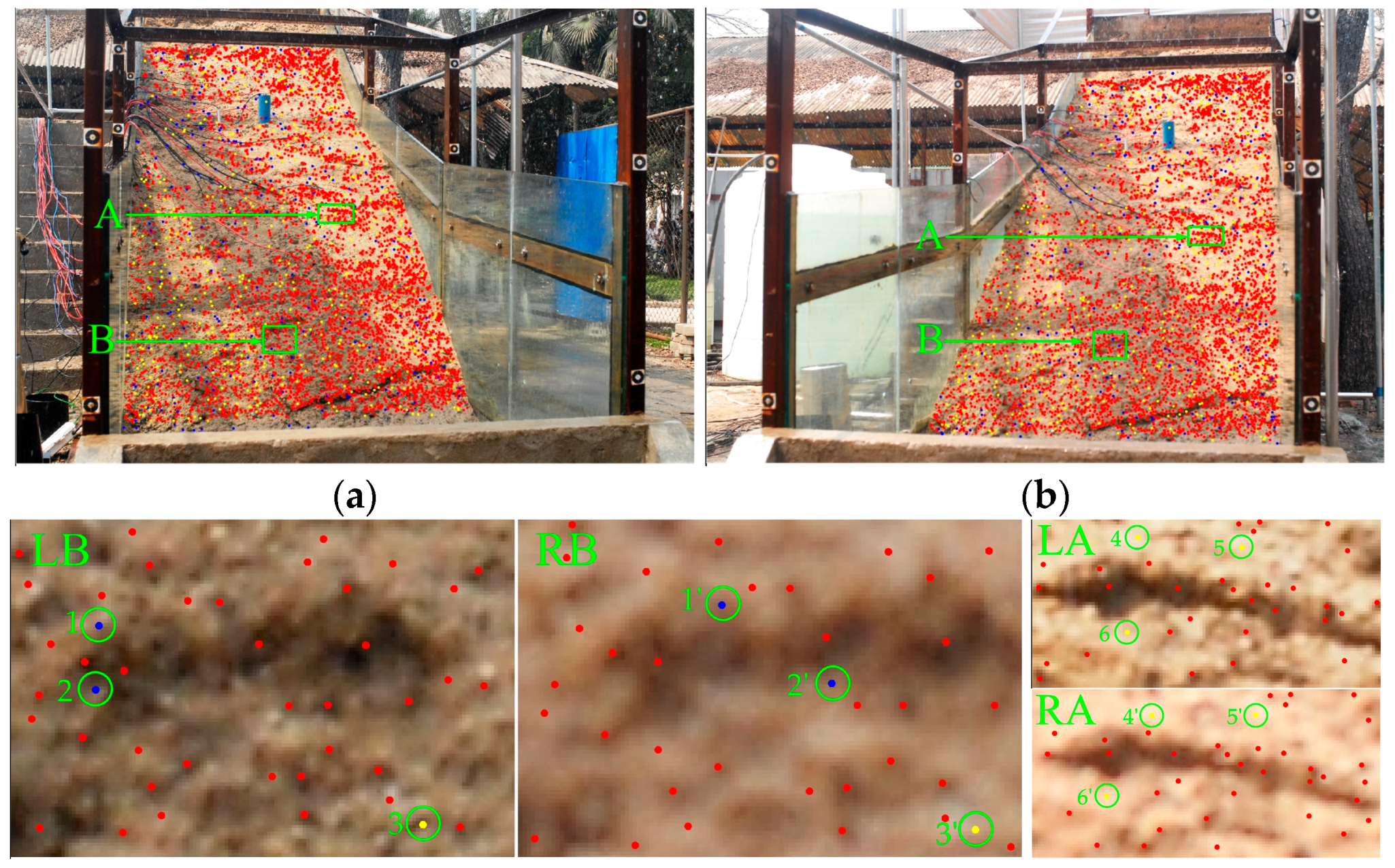

3.1. Feature Extraction

3.2. Feature-Based Image-Matching

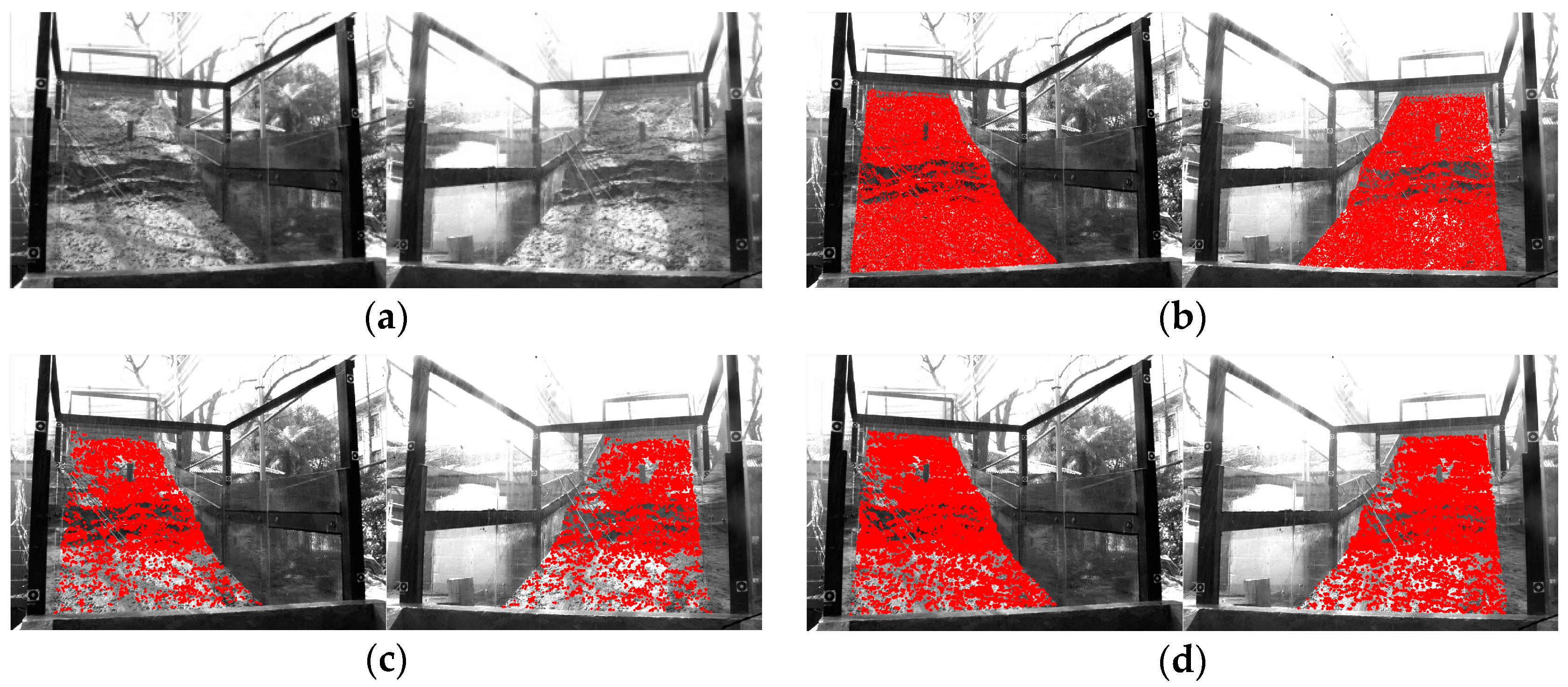

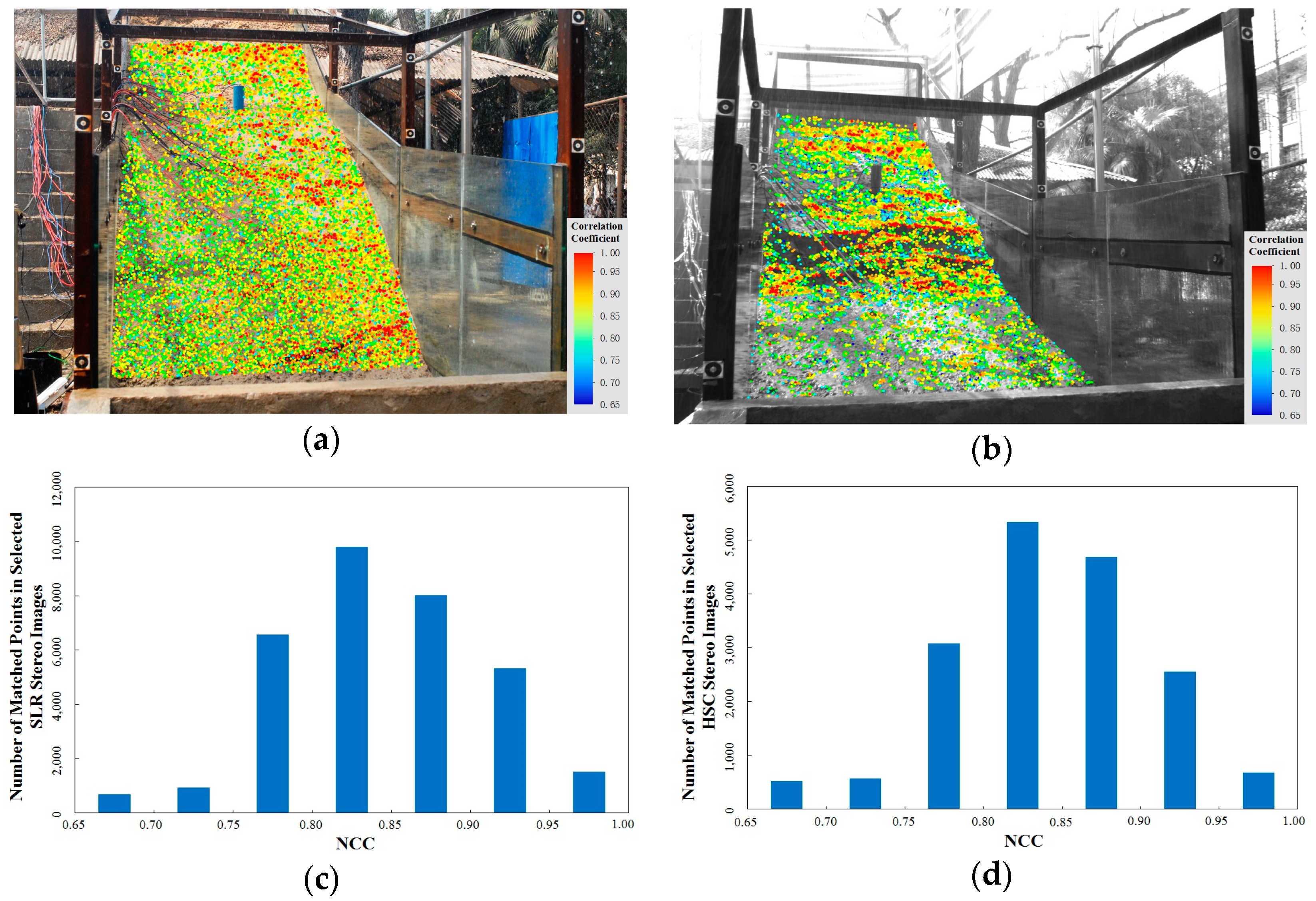

3.3. Area-Based Image-Matching

4. Results of Image Matching

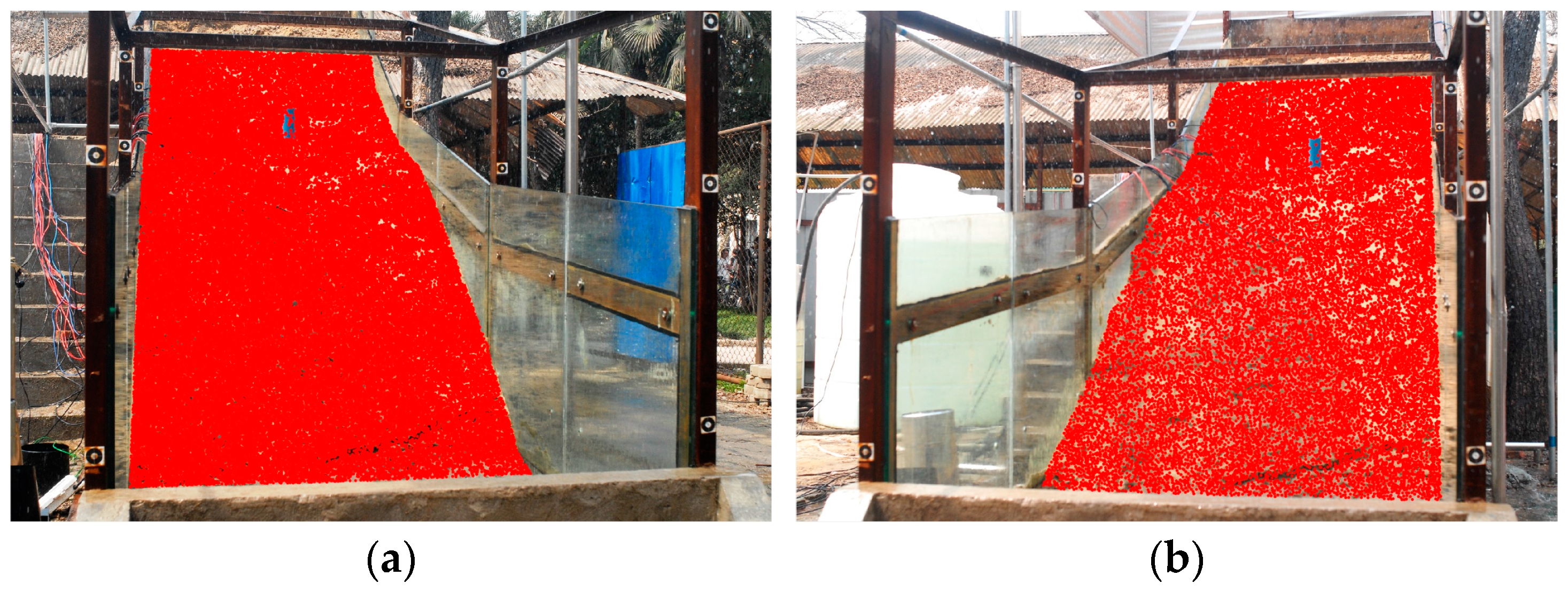

4.1. Image Processing for Stereo Image Series Recording Landslide Simulation Experiment

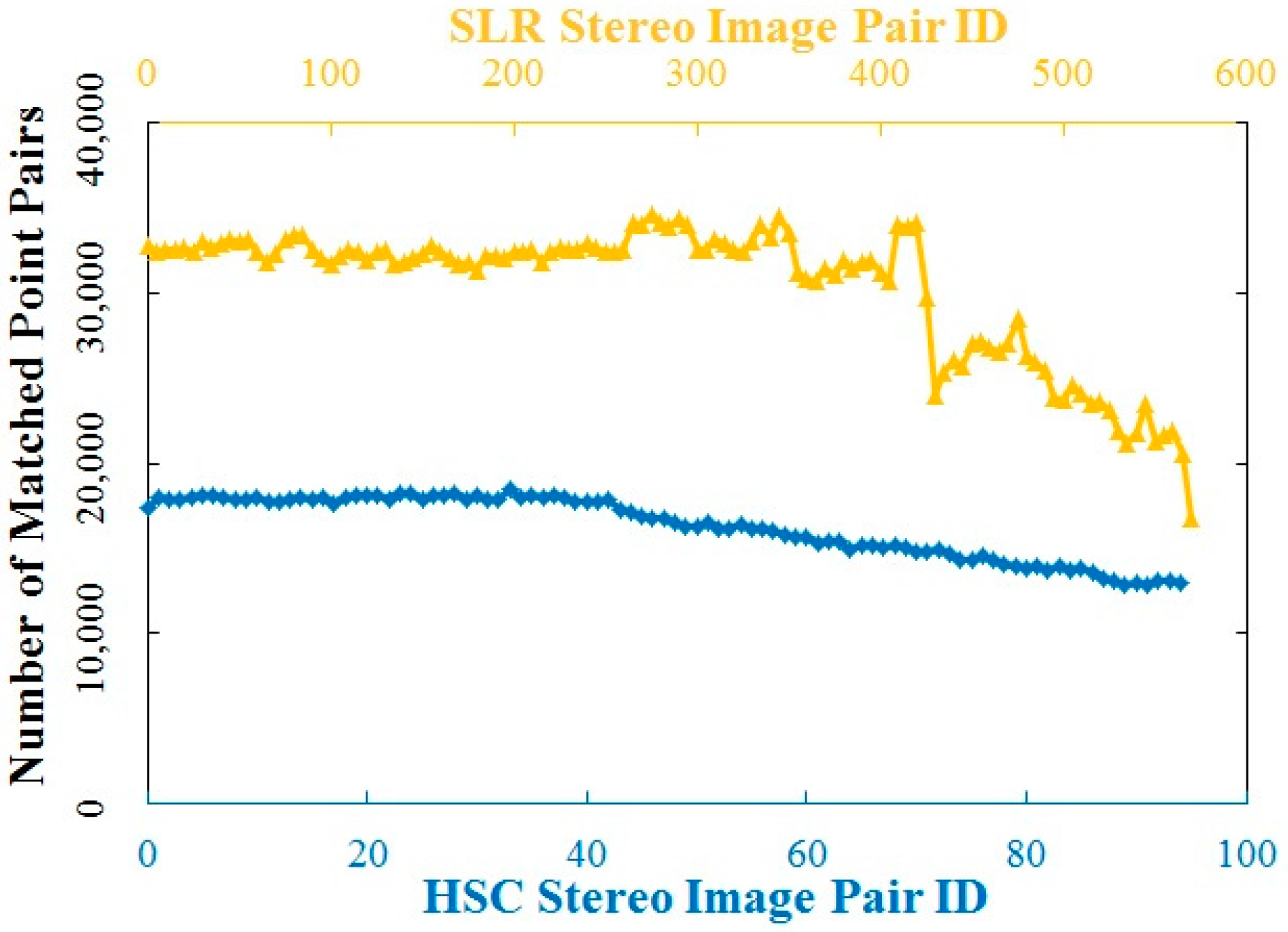

4.2. Evaluation of Image Matching Results

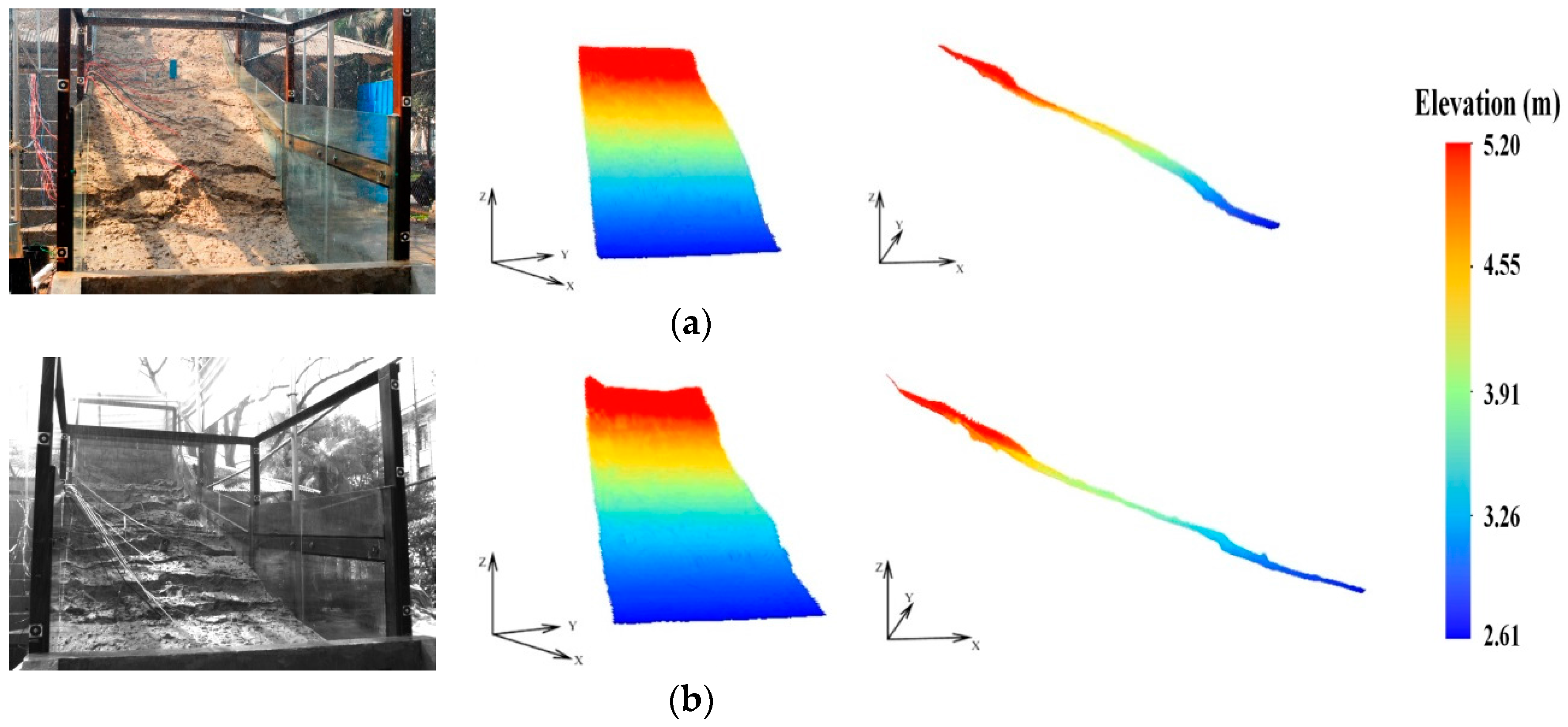

5. Results of Landslide Surface and Volume Changes

5.1. DSM Generation

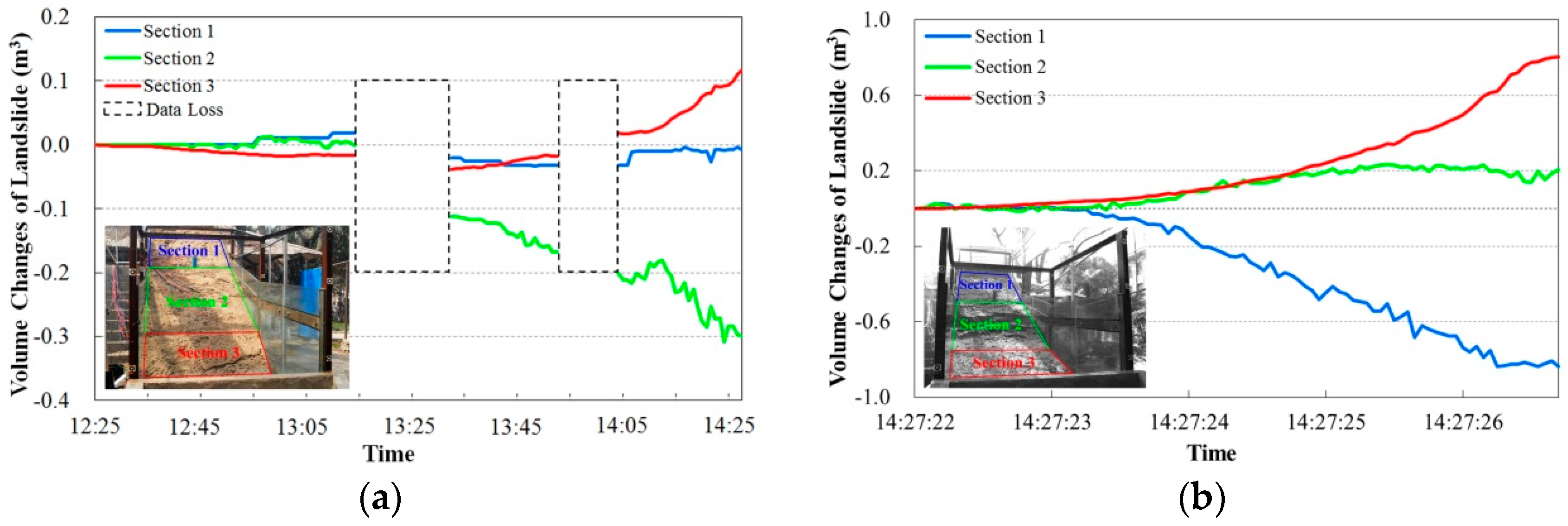

5.2. Landslide Volume Changes

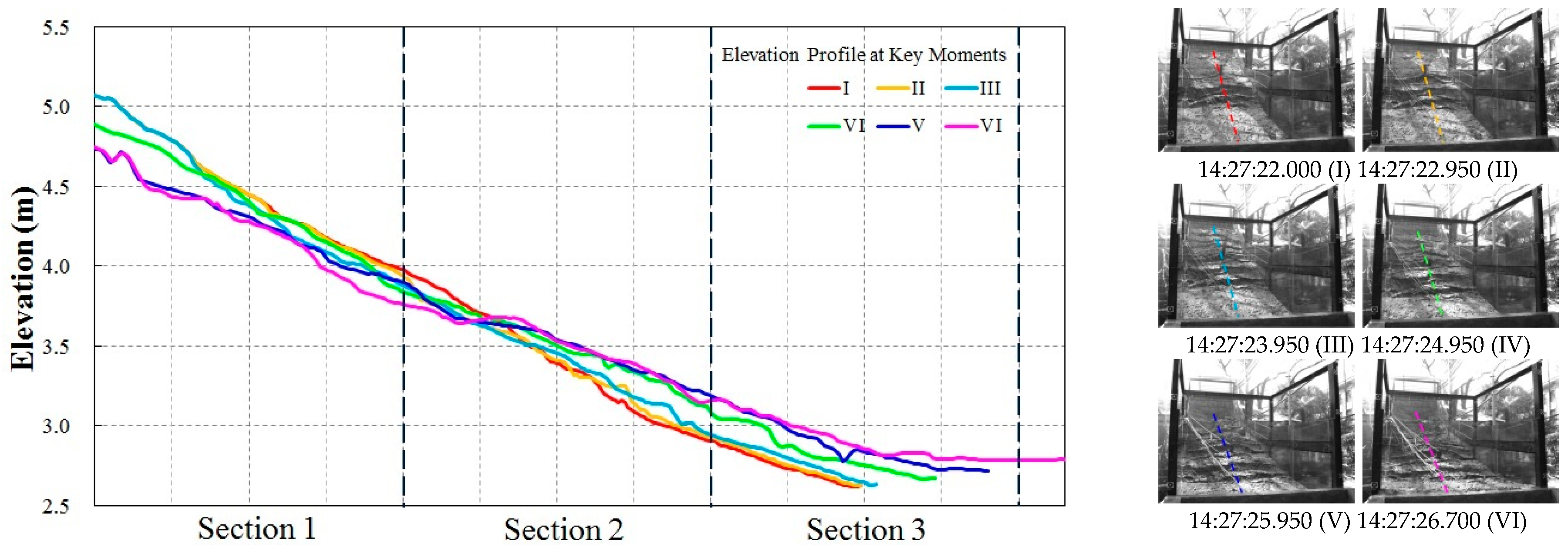

5.3. Landslide Surface Elevation Changes

6. Discussion

6.1. Comparison with Result of SGM Algorithm

6.2. Accuracy Evaluation

7. Conclusions

- (1) The proposed multiple-constraints-based feature-to-area image-matching methodology can robustly match close-range, poor-texture images, yielding almost evenly and densely distributed matches with sufficient matching accuracy.

- (2) The matching result of this method depends on image quality, which is usually affected by both the camera and the capture settings, such as the image resolution and the surface reflection of the object. For example, in the simulated landslide experiment, more matched points were obtained from the color SLR images than from the grayscale HSC images owing to the better imaging conditions (e.g., higher resolution and less influence from water-pond regions).

- (3) The proposed robust image-matching method was successfully applied to the low-frequency SLR and high-frequency HSC stereo image series collected in the simulated landslide experiment to generate sequential DSMs, which help to reveal the rainfall-triggered landslide evolution process, especially through the volume and surface elevation changes during the instantaneous failure event.
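The area-based stage of a feature-to-area matcher needs a window similarity score, and the abbreviation list names the Normalized Correlation Coefficient (NCC) for this purpose. The following is a minimal sketch of the standard zero-mean NCC between two gray-value windows, not the authors' implementation. The function name `ncc`, the sample patch values, and the choice to return 0.0 for a textureless (constant) window are all assumptions made for illustration.

```python
import math


def ncc(patch_a, patch_b):
    """Zero-mean normalized correlation coefficient of two equally sized patches.

    Each patch is a list of rows of gray values. The score lies in [-1, 1].
    """
    a = [v for row in patch_a for v in row]
    b = [v for row in patch_b for v in row]
    if not a or len(a) != len(b):
        raise ValueError("patches must be non-empty and of equal size")

    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]

    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        # Assumption: a constant (textureless) window carries no signal,
        # so it is scored as "no correlation" instead of dividing by zero.
        return 0.0
    return sum(x * y for x, y in zip(da, db)) / denom


# Hypothetical 3 x 3 windows: the right one is a brightness-scaled copy of the left.
left = [[10, 12, 11], [40, 42, 39], [10, 11, 12]]
right = [[2 * v + 5 for v in row] for row in left]
score = ncc(left, right)  # close to 1.0, since NCC ignores linear gain and offset
```

Because the score is invariant to linear brightness changes, it tolerates exposure differences between the two cameras. The zero-denominator branch is where poor-texture windows end up, which is why additional geometric constraints are useful in such regions.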

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SIFT | Scale Invariant Feature Transform |
| DSM | Digital Surface Model |
| SLR | Single-Lens Reflex |
| HSC | High-Speed Cameras |
| COSI-Corr | Co-registration of Optically Sensed Images and Correlation |
| PMS | PhotoModeler Scanner |
| GCP | Ground Control Point |
| PT | Perspective Transformation |
| EL | Epipolar Line |
| FM | Fundamental Matrix |
| IO | Internal Orientation |
| EO | External Orientation |
| NCC | Normalized Correlation Coefficient |
| VFV | Vertical Field View |


| Items | SLR Camera | HSC Camera |
|---|---|---|
| Sensor | CCD | CMOS |
| Image size (pixel by pixel) | 2896 × 1944 | 2352 × 1728 |
| Focal length (mm) | 35.0 | 20.0 |
| Starting time | 12:25:00 | 14:27:22.000 |
| Ending time | 14:50:00 | 14:27:26.750 |
| Camera frequency | 6 frames/min | 20 frames/s |
| Number of image pairs | 737 | 95 |

| Camera Type | Selected Image | Detected Features (SIFT) | Detected Features (STAR) | Detected Features (SURF) |
|---|---|---|---|---|
| SLR | Left | 48,735 | 7049 | 23,694 |
| SLR | Right | 21,548 | 3182 | 19,609 |
| HSC | Left | 34,759 | 4146 | 28,479 |
| HSC | Right | 43,412 | 5750 | 20,907 |

| Item | SLR surface volume at 12:25:00 (m³) | SLR surface volume at 14:27:20 (m³) | HSC surface volume at 14:27:22.000 (m³) | HSC surface volume at 14:27:26.700 (m³) | SLR volume difference, 12:25:00–14:27:20 (m³) | HSC volume difference, 14:27:22.000–14:27:26.700 (m³) |
|---|---|---|---|---|---|---|
| Section 1 | 3.53 | 3.53 | 5.59 | 4.75 | −0.00 | −0.84 |
| Section 2 | 2.96 | 2.66 | 2.58 | 2.79 | −0.30 | 0.21 |
| Section 3 | 0.18 | 0.30 | 0.31 | 1.11 | 0.12 | 0.80 |
| Surface volume changes (m³) | | | | | −0.18 | 0.17 |
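The arithmetic behind the volume table can be checked directly: each volume difference is the later surface volume minus the earlier one, and the bottom row is the sum of the three section differences for each camera. The sketch below recomputes those values from the tabulated numbers. The dictionary layout and the function name `volume_changes` are illustrative assumptions, and the results are rounded to two decimals to match the precision of the table.

```python
# Surface volumes (m^3) copied from the table: (earlier epoch, later epoch).
VOLUMES = {
    "Section 1": {"SLR": (3.53, 3.53), "HSC": (5.59, 4.75)},
    "Section 2": {"SLR": (2.96, 2.66), "HSC": (2.58, 2.79)},
    "Section 3": {"SLR": (0.18, 0.30), "HSC": (0.31, 1.11)},
}


def volume_changes(volumes, camera):
    """Return per-section differences (later minus earlier) and their sum."""
    diffs = {
        section: round(epochs[camera][1] - epochs[camera][0], 2)
        for section, epochs in volumes.items()
    }
    total = round(sum(diffs.values()), 2)
    return diffs, total


slr_diffs, slr_total = volume_changes(VOLUMES, "SLR")
hsc_diffs, hsc_total = volume_changes(VOLUMES, "HSC")

for camera, diffs, total in (("SLR", slr_diffs, slr_total),
                             ("HSC", hsc_diffs, hsc_total)):
    print(camera, diffs, "total:", total)
```

With the sign convention used here, a negative difference means material left a section and a positive one means material accumulated there. The totals reproduce the −0.18 m³ (SLR) and 0.17 m³ (HSC) reported in the last row.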

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Qiao, G.; Mi, H.; Feng, T.; Lu, P.; Hong, Y. Multiple Constraints Based Robust Matching of Poor-Texture Close-Range Images for Monitoring a Simulated Landslide. Remote Sens. 2016, 8, 396. https://doi.org/10.3390/rs8050396