1. Introduction

Orthoimage mosaicking is the process of combining multiple orthorectified images into a single seamless composite image. It is also necessary for covering a large geographic region in many applications, e.g., environmental monitoring, disaster management, and the construction of digital cities or smart cities [1,2]. When mosaicking orthoimages, the seam-based method is the most popular. Generally, the seam-based method first defines seamlines in the overlapping regions. Then, during mosaicking, each pixel in the final result is taken entirely from one orthoimage, depending on which side of the seamline it lies on. Finally, a blending process, also called feathering, is performed along the seamlines to make the seams invisible in the final mosaic. This paper focuses on the blending process in mosaicking, i.e., a procedure to obtain a smooth transition between images along seamlines.

In orthoimages, objects above or below the digital terrain model (DTM) used for orthorectification are geometrically displaced because of perspective imaging from different view angles. There are often obvious projection differences in overlapping areas, and different facets of an object, e.g., a building, may appear in different images, especially in high-resolution aerial orthoimages of urban areas. These phenomena become more pronounced as object height increases [1]. When they occur, there are obvious misalignments between orthoimages. Moving objects, e.g., cars, also appear as obvious misalignments between orthoimages. Both make it more difficult to render the seam invisible in the mosaic during blending. Radiometric differences caused by different viewing angles or illumination conditions between orthoimages bring further difficulties and may cause visible shifts in brightness or color.

Generally, in the blending process, the mosaic image $M$ is a weighted combination of the input images $I_1$ and $I_2$ over the overlapping areas, with weighting coefficients that vary as a function of the distance from the seamline. Milgram (1975) applied a linear ramp to pixel values on either side of the seamline as the weighting function, so that both images receive equal weights at the seamline itself [3]. Li et al. (2015) improved the weighting function and presented a cosine distance weighted blending method for high spatial resolution remote sensing images, in which the weights are calculated from the cosine distance [4]. Burt and Adelson (1983) presented a pyramid blending approach in which images are decomposed into a set of band-pass filtered component images. The component images in each spatial frequency band are then combined with band-specific weighting coefficients into a corresponding band-pass mosaic, and these band-pass mosaics are finally summed to obtain the desired image mosaic [5,6]. Brown and Lowe (2007) used the same pyramid blending approach [5] for panoramic image stitching [7]. Shao et al. (2012) improved the pyramid blending approach of [5] for asymmetrically informative biological images in microscope image stitching, optimizing the blending coefficients under a constraint on the information imbalance between the earlier- and later-acquired images [8]. Uyttendaele et al. (2001) presented a feathering method that used averaging and interpolation functions to eliminate ghosting and reduce intensity differences [9]. Zhu and Qian (2002) presented a hard correction method to remove possible seams. It first obtains the mosaic image directly from the seamlines, without any blending in the overlapping areas; it then computes the average difference within a certain extent on both sides of the seamline and corrects it within that extent [10].

Pérez et al. (2003) proposed a framework for image editing in the gradient domain, e.g., object insertion, in which an object is cut from one image and inserted into a new background image by optimizing over the gradients of the inserted object [11]. Levin et al. (2004) and Zomet et al. (2006) proposed a gradient-domain image stitching method that introduced several formal cost functions for evaluating stitching quality in the gradient domain and defined the mosaic image in the overlapping areas as their optimum [12,13]. Jia and Tang (2008) proposed an image stitching approach based on image deformation, which propagates the deformation smoothly into the target image and achieves structure deformation and color correction simultaneously within a single framework operating in the image gradient domain [14].
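The distance-based weighting methods above (e.g., [3,4]) compute each mosaic pixel as $M = w\,I_1 + (1 - w)\,I_2$, where $I_1$ and $I_2$ are the left and right images and the weight $w$ depends on the signed distance $d$ of the pixel from the seamline. The Python sketch below contrasts a linear ramp of the kind described for Milgram [3] with a raised-cosine profile. The cosine form is an assumption chosen to illustrate a weight that flattens out near the borders of the blending band; it is not necessarily the exact formula of Li et al. [4], and all function names are hypothetical.

```python
import math


def linear_ramp_weight(d, width):
    """Weight of the left image at signed distance d (pixels) from the seamline.

    d < 0: pixel on the left image's side; d > 0: on the right image's side.
    The weight falls linearly from 1 to 0 across a band of `width` pixels
    and equals 0.5 on the seamline itself.
    """
    half = width / 2.0
    if d <= -half:
        return 1.0
    if d >= half:
        return 0.0
    return 0.5 - d / width


def cosine_weight(d, width):
    """Assumed raised-cosine profile: 1 -> 0 with zero slope at both band borders."""
    half = width / 2.0
    if d <= -half:
        return 1.0
    if d >= half:
        return 0.0
    t = (d + half) / width  # maps [-half, half] onto [0, 1]
    return 0.5 * (1.0 + math.cos(math.pi * t))


def blend(p_left, p_right, w):
    """Mosaic value M = w * I1 + (1 - w) * I2 for one pixel."""
    return w * p_left + (1.0 - w) * p_right


# A 400-pixel band, i.e. 200 pixels on each side of the seamline.
print(linear_ramp_weight(-100, 400))       # 0.75
print(round(cosine_weight(-100, 400), 3))  # 0.854
```

Both profiles give 0.5 on the seamline, but the cosine weight stays closer to 1 (or 0) near the band borders, which is where the two weighting schemes differ most.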

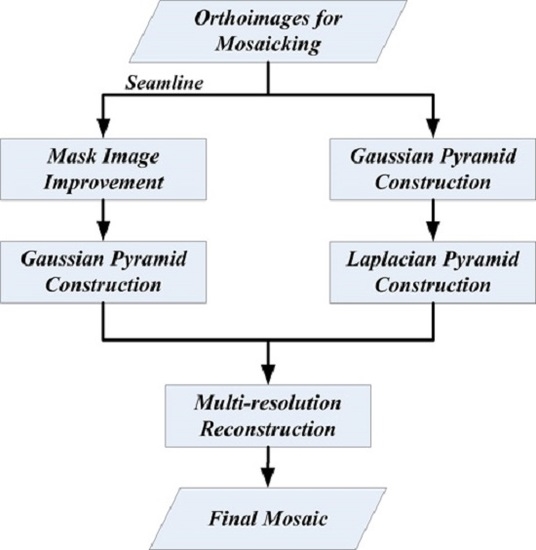

Most of these methods deal with natural images and focus on the visual effect. However, they do not fully meet the requirements of remotely sensed images in earth observation. In earth observation, orthoimages are orthorectified remotely sensed images with precise geocoding and should reflect the earth's surface as accurately as possible; the visual effect is of secondary importance. A mosaic of orthoimages is no exception. Unlike in natural images, misalignments in orthoimages generally appear in regions containing objects such as buildings, bridges, and moving cars. For high-resolution aerial orthoimages in urban areas, such misalignments are usually large, and different facades of objects may appear in different images. If only the visual effect is considered, the mosaic may contain ghosting and artifacts that not only misrepresent the earth's surface but also hinder image interpretation. These regions should therefore be treated differently from other regions so that the mosaic reflects the earth's surface as accurately as possible.

To this end, this paper presents a multi-resolution blending method for orthoimage mosaicking that considers changed regions. In this method, regions with obvious projection differences (e.g., buildings) or moving objects (e.g., cars) are treated as changed regions. In the blending procedure, changed and unchanged regions are treated differently: changed regions are blended within a limited width, or not blended at all, whereas unchanged regions are blended over the set blending width to achieve a smooth transition.

3. Results

The algorithm described above has been implemented in C++. The EDISON library is used for image segmentation, and the Geospatial Data Abstraction Library (GDAL) is used to read and write image files. A number of digital aerial orthoimages have been used to test the proposed algorithm, and two data sets are presented here. In the experiments on both data sets, the MS segmentation parameters are set to (6, 5, 20), where the first two values are the (spatial and range) bandwidth parameters and the third is the least significant feature size. The threshold used to distinguish changed from unchanged pixels is set to 1.0, and the threshold of RCR is set to 0.2.
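The two thresholds act at different levels: one on individual pixels, one on segments. The Python sketch below assumes, for illustration only, that RCR denotes the ratio of changed pixels within a segment and that a pixel is flagged as changed when some per-pixel change score exceeds the pixel threshold. The change score itself, the strict comparisons, and the function name are hypothetical.

```python
def classify_regions(labels, change_score, pixel_threshold=1.0, rcr_threshold=0.2):
    """Return the ids of segments treated as changed regions.

    labels: 2-D list of segment ids, e.g. from a mean-shift segmentation.
    change_score: 2-D list of per-pixel change magnitudes (hypothetical measure).
    A pixel counts as changed when its score exceeds pixel_threshold; a segment
    is a changed region when its ratio of changed pixels (assumed meaning of RCR)
    exceeds rcr_threshold.
    """
    total = {}
    changed = {}
    for label_row, score_row in zip(labels, change_score):
        for label, score in zip(label_row, score_row):
            total[label] = total.get(label, 0) + 1
            if score > pixel_threshold:
                changed[label] = changed.get(label, 0) + 1
    return {label for label, n in total.items()
            if changed.get(label, 0) / n > rcr_threshold}


labels = [[0, 0, 1, 1],
          [0, 0, 1, 1]]
scores = [[0.2, 1.5, 0.1, 0.3],
          [0.4, 0.5, 0.2, 0.9]]
print(classify_regions(labels, scores))                     # {0}
print(classify_regions(labels, scores, rcr_threshold=0.3))  # set()
```

Segment 0 has one changed pixel out of four (ratio 0.25), so it counts as changed at 0.2 but not at 0.3, which illustrates why a larger RCR threshold marks fewer regions as changed.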

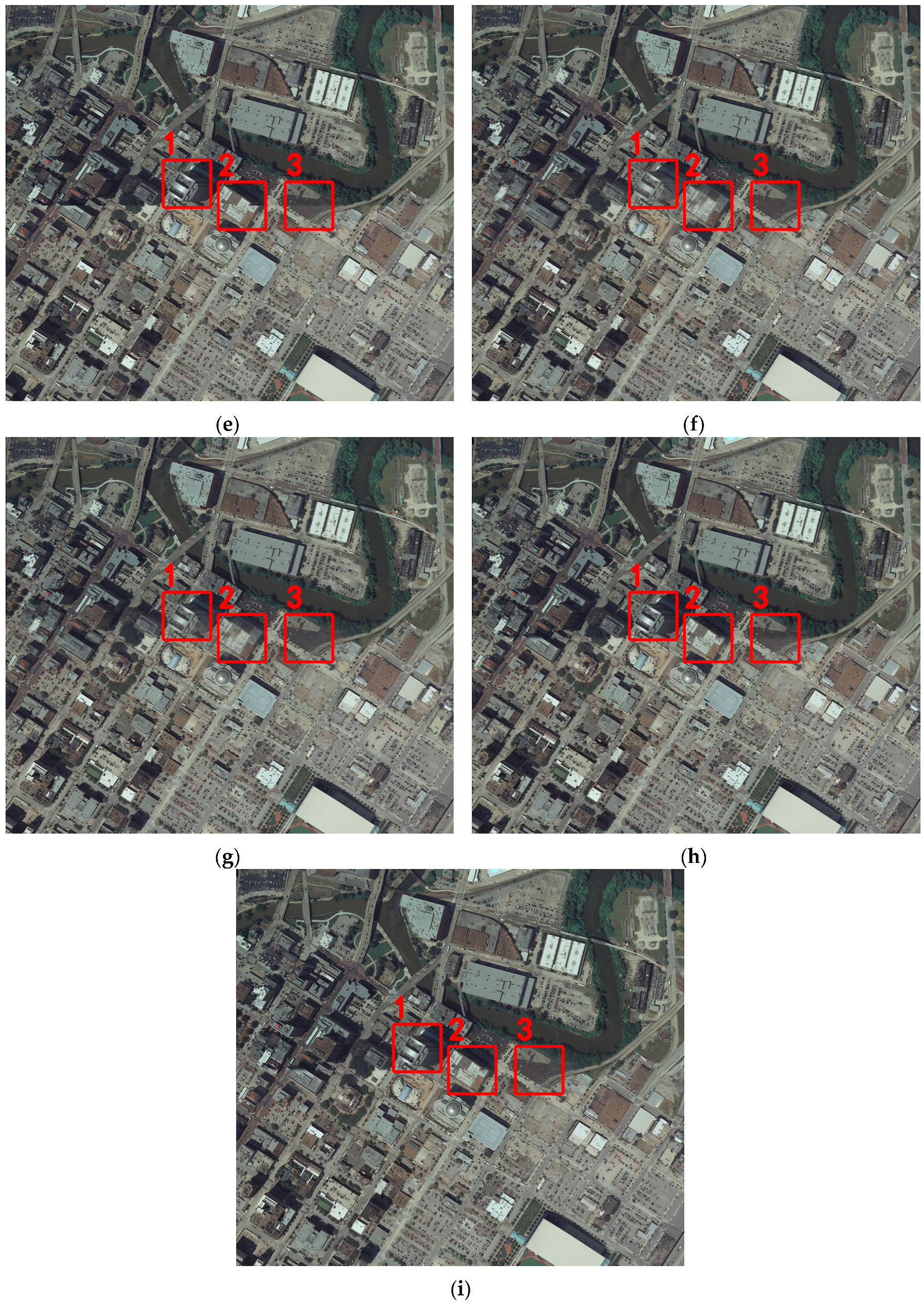

The image size of data set 1 is approximately 2000 by 1800 pixels. The performance of the presented method is demonstrated in Figure 2 and Figure 3, which also present comparisons with the direct mosaic, the linear ramp weighting method [3], the cosine distance weighted blending method [4], and the pyramid blending method [5]. In these experiments, the blending width of the linear ramp weighting method and of the cosine distance weighted blending method is 400 pixels, i.e., 200 pixels on each side of the seamline. The size of the smoothing filter used to generate the mask image in the presented method is also 400 pixels. The left and right images, overlaid with the seamline (dotted line), are shown in Figure 2a,b, respectively. To simplify the problem, the seamline is assumed to be a straight line in the experiments.
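If the smoothing filter is assumed to be a moving-average (box) filter, which is not stated here, then smoothing a hard 0/1 seamline mask with a window of N pixels yields exactly a linear ramp N pixels wide centered on the seamline. In unchanged regions the mask would then reproduce linear ramp weighting with the same blending width. The Python check below uses an 8-pixel window instead of 400 for brevity; the helper name is hypothetical.

```python
def box_filter_1d(values, size):
    """Moving average over `size` samples: window [i - size//2, i - size//2 + size - 1].

    Border values are replicated. O(n * size) cost, which is fine for a small check.
    """
    n = len(values)
    half = size // 2
    out = []
    for i in range(n):
        acc = 0.0
        for k in range(i - half, i - half + size):
            acc += values[min(max(k, 0), n - 1)]
        out.append(acc / size)
    return out


# Hard mask: 1 left of a seamline at column 10, 0 from column 10 onward.
seam, size = 10, 8
mask = [1.0] * seam + [0.0] * seam
ramp = box_filter_1d(mask, size)

# Largest deviation from a linear ramp of width `size` centered on the seamline.
max_dev = max(abs(ramp[seam + d] - (0.5 - d / size))
              for d in range(-size // 2, size // 2 + 1))
print(max_dev)  # 0.0
```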

Figure 2c shows the obtained changed regions, where red regions are changed regions and green regions are unchanged regions. Most buildings are recognized as changed regions. Figure 2d shows the generated mask image, in which the values of pixels in the changed regions remain unchanged. Figure 2e–i shows the results of the direct mosaic, the linear ramp weighting method, the cosine distance weighted blending method, the pyramid blending method, and the presented method, respectively.

Marked areas 1, 2, and 3 in

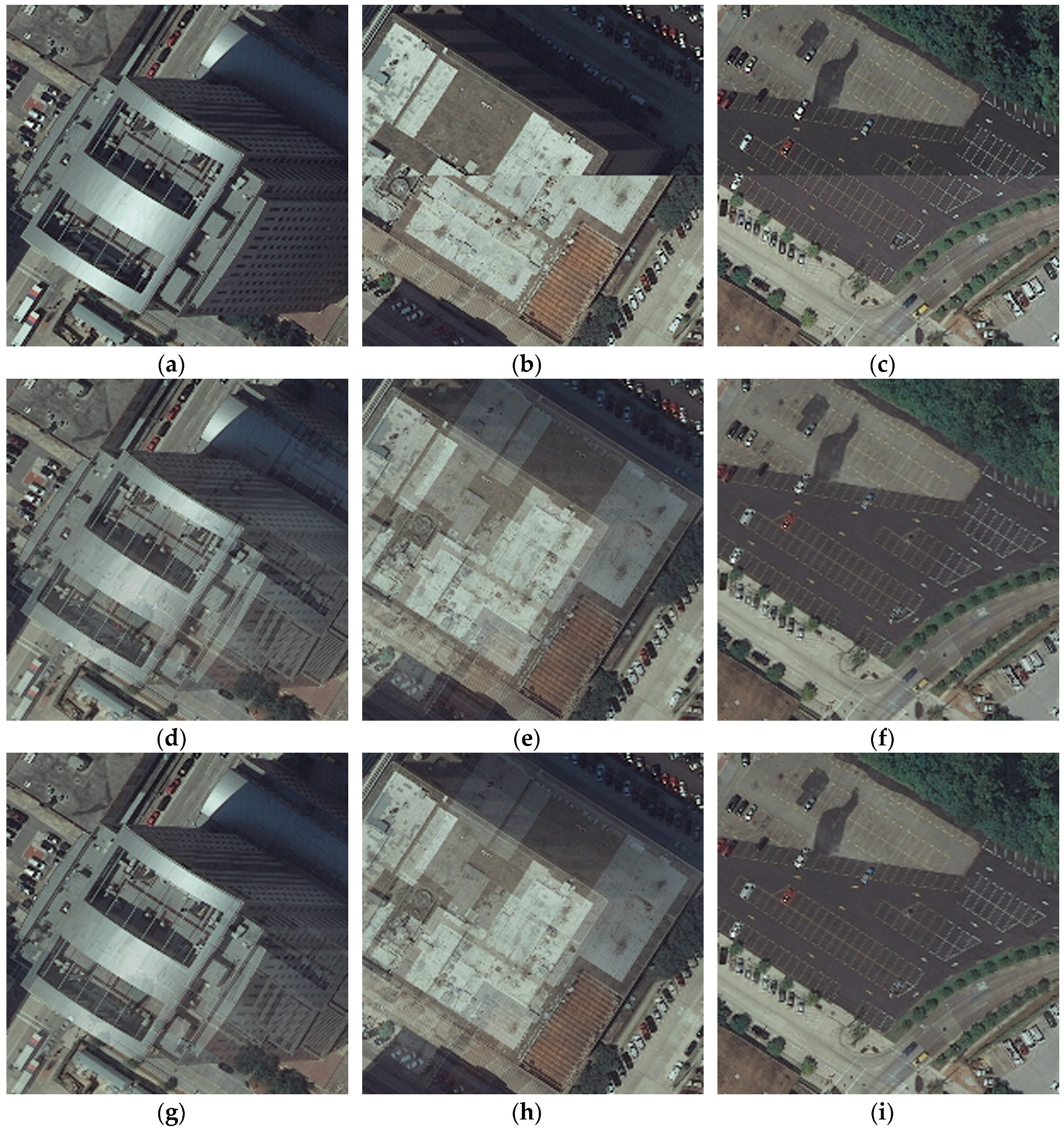

Figure 2 denote three typical cases of the seamline passing by buildings, the seamline passing through buildings, and the seamline passing through areas with large radiometric differences, respectively. Details of these three cases are shown in

Figure 3. In

Figure 3, marked areas of the direct mosaic, the linear ramp weighting method, the cosine distance weighted blending method, the pyramid blending method and the presented method are shown from top to bottom. The linear ramp weighting method achieves a smooth transition in the given blending width when the seamline passes through areas with large radiometric differences (

Figure 3f). However, ghosting and artifacts also appear when the seamline passes by buildings (

Figure 3d) or through buildings (

Figure 3e). The cosine distance weighted blending method also obtains a smooth transition when the seamline passes through areas with large radiometric differences (

Figure 3i), and ghosting and artifacts are less than the linear ramp weighting method when the seamline passes by buildings (

Figure 3g) or through buildings (

Figure 3h) due to the improved weight function. The pyramid blending method avoids ghosting and artifacts when the seamline passes by buildings (

Figure 3j) or through buildings (

Figure 3k), but the transition is not smooth when the seamline passes through areas with large radiometric differences (

Figure 3l). The presented method avoids the shortcomings of the linear ramp weighting, the cosine distance weighted blending method and the pyramid blending methods, and performs well in these three cases (

Figure 3m–o).

A quantitative comparison was also conducted. The correlation coefficient, with the direct mosaic as the reference, was used to evaluate the ability of each method to preserve detailed image information. Table 1 compares, in each channel of data set 1, the correlation coefficients between the direct mosaic and the results of the linear ramp weighting method, the cosine distance weighted blending method, the pyramid blending method, and the presented method. The linear ramp weighting method had the smallest correlation coefficient in each channel because of ghosting and artifacts. The cosine distance weighted blending method had larger correlation coefficients than the linear ramp weighting method in each channel. The pyramid blending method had the largest correlation coefficient in each channel because of its narrow blending width, and the presented method obtained values close to those of the pyramid blending method. The comparison indicates that the presented method preserves detailed information better than the linear ramp weighting method and the cosine distance weighted blending method, and nearly as well as the pyramid blending method. Considering the poor performance of the pyramid blending method when the seamline passes through areas with large radiometric differences, the presented method obtained the best overall outcome.
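The correlation coefficient used here is presumably the Pearson correlation, computed per channel between a blended result and the direct mosaic. A minimal Python sketch under that assumption; which pixels enter the comparison (for example, only the overlapping area) is not specified in this section, and the function names are hypothetical.

```python
import math


def correlation_coefficient(a, b):
    """Pearson correlation between two equally sized, flattened channels."""
    n = len(a)
    if n == 0 or n != len(b):
        raise ValueError("channels must be non-empty and equally sized")
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant channel")
    return cov / math.sqrt(var_a * var_b)


def per_channel_correlation(result, reference):
    """result, reference: lists of channels, each a flat list of pixel values."""
    return [correlation_coefficient(r, ref) for r, ref in zip(result, reference)]
```

Values close to 1 mean the blended result keeps the local structure of the direct mosaic; ghosting replaces that structure with a mixture of two images and lowers the coefficient.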

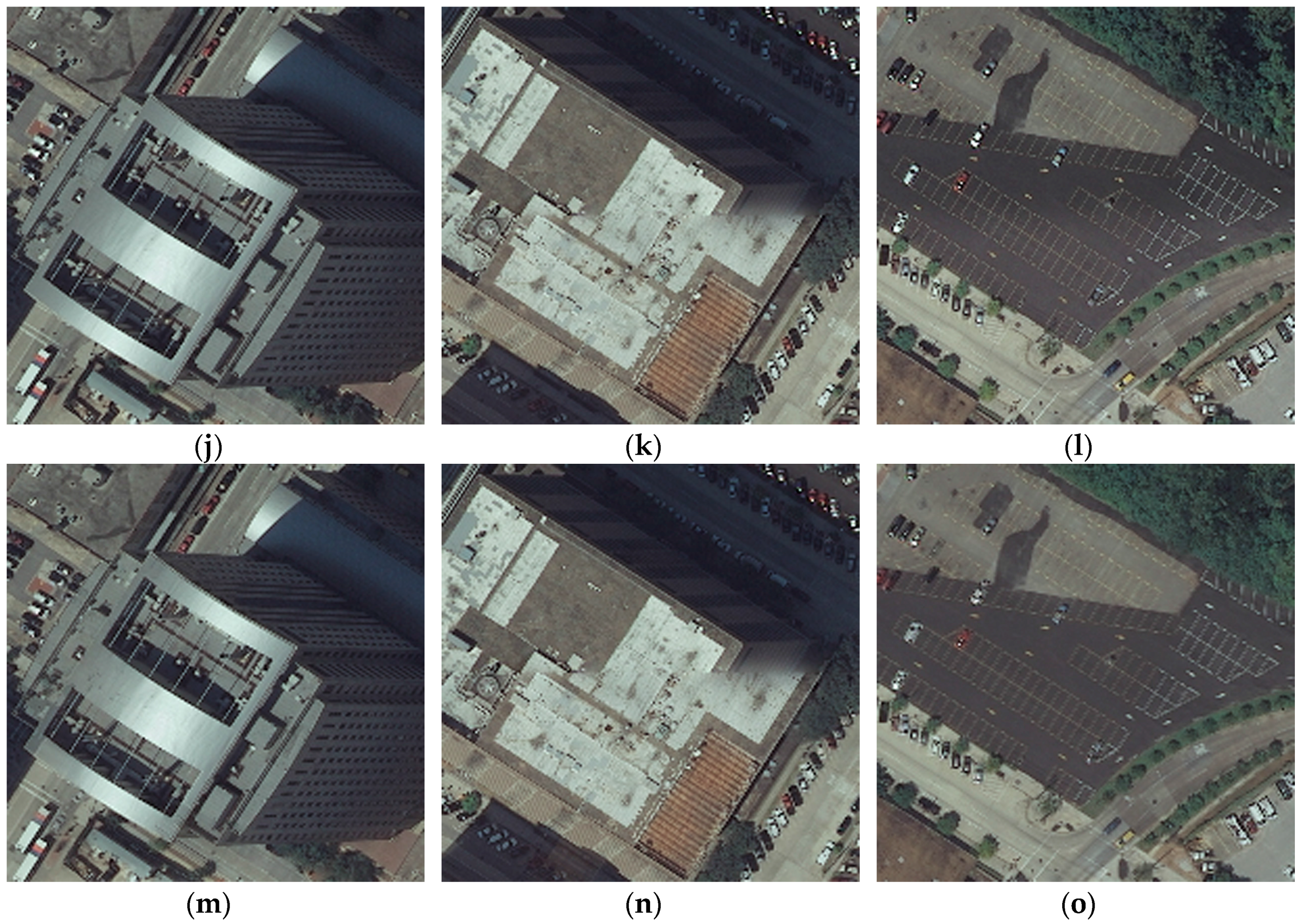

This paper also presents a second data set, data set 2, to demonstrate the performance of the presented method. The image size of data set 2 is approximately 2800 by 2300 pixels. Figure 4 and Figure 5 show comparisons of the direct mosaic, the linear ramp weighting method, the cosine distance weighted blending method, the pyramid blending method, and the presented method. The parameters of these methods are the same as for data set 1. The left and right images, overlaid with the seamline (dotted line), are shown in Figure 4a,b, respectively. Figure 4c–g shows the results of the direct mosaic, the linear ramp weighting method, the cosine distance weighted blending method, the pyramid blending method, and the presented method, respectively.

Marked areas 1, 2, and 3 in

Figure 4 denote three typical cases of the seamline passing through areas with large radiometric differences, the seamline passing by buildings, and the seamline passing through buildings, respectively. Details of these three cases are shown in

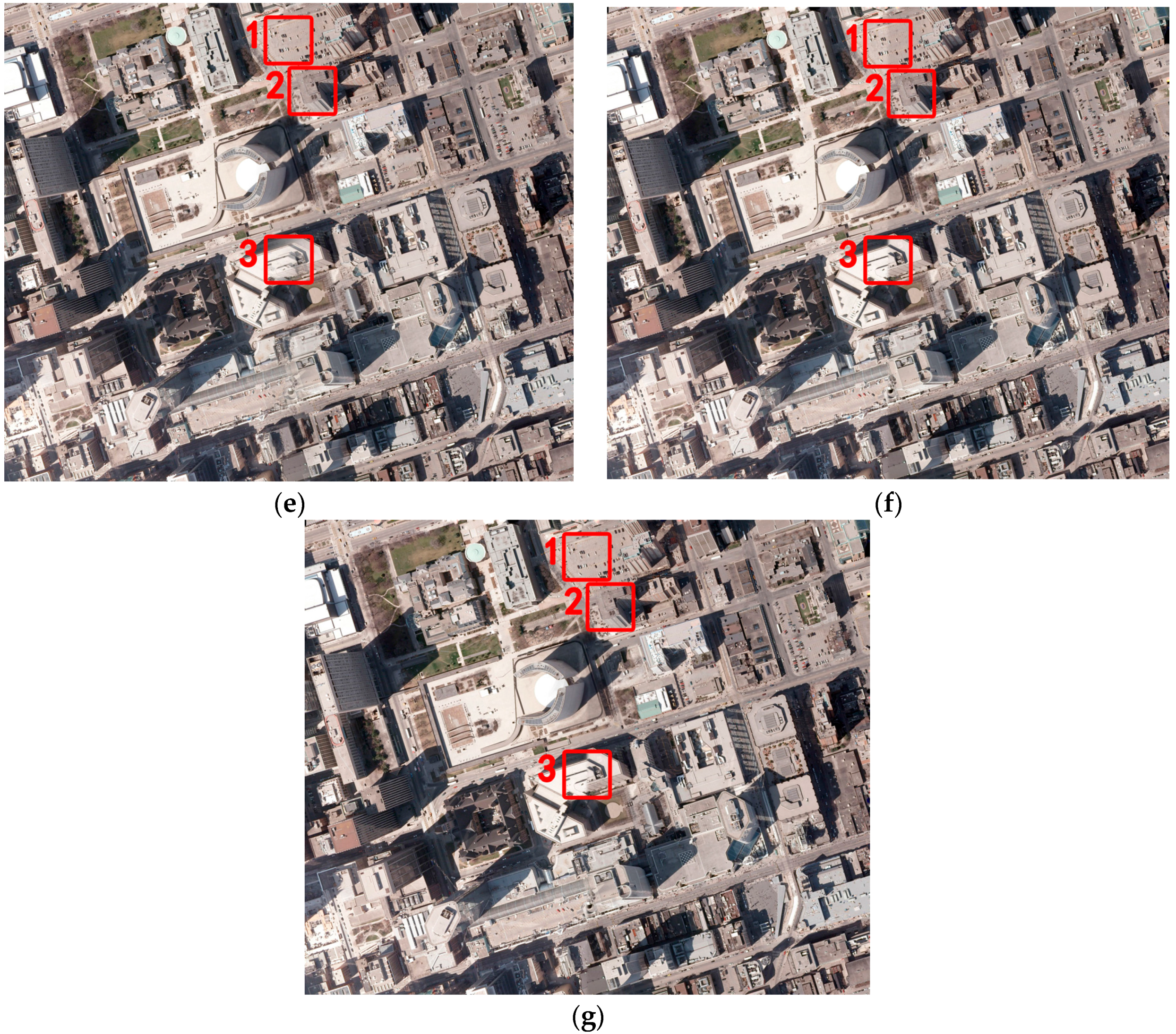

Figure 5. In

Figure 5, marked areas of the direct mosaic, the linear ramp weighting method, the pyramid blending method and the presented method are shown from top to bottom. The linear ramp weighting method obtains a smooth transition in the given blending width when the seamline passes through areas with large radiometric differences (

Figure 5d), but there are still ghosting and artifacts (marked areas in

Figure 5d) because there is a moving white car. More obvious ghosting and artifacts appear when the seamline passes by buildings (

Figure 5e), and when the seamline passes through buildings (

Figure 5f). The cosine distance weighted blending method obtains similar result to the linear ramp weighting method (

Figure 5g–i). Of course, ghosting and artifacts created by the cosine distance weighted blending method are less than the linear ramp weighting method when the seamline passes by buildings (

Figure 5h) or through buildings (

Figure 5i). The pyramid blending method successfully avoids ghosting and artifacts in these three cases (

Figure 5j–l), but the transition is not smooth when the seamline passes through areas with large radiometric differences (

Figure 5j). The presented method avoids shortcomings of the other three methods and performs well in these three cases (

Figure 5m–o). Of course, there are also flaws with the presented method. As shown in

Figure 5n, there is still ghosting in the building areas.

Table 2 compares, in each channel of data set 2, the correlation coefficients between the direct mosaic and the results of the other methods. The linear ramp weighting method had the smallest correlation coefficient in each channel. The cosine distance weighted blending method had larger correlation coefficients than the linear ramp weighting method in each channel. The pyramid blending method had the largest correlation coefficient in each channel, and the presented method obtained values close to those of the pyramid blending method. This comparison also indicates that the presented method preserves detailed information better than the linear ramp weighting method and the cosine distance weighted blending method, and nearly as well as the pyramid blending method. Considering the poor performance of the pyramid blending method when the seamline passes through areas with large radiometric differences, the presented method also obtained the best overall outcome for data set 2.

4. Discussion

This paper has presented a detailed evaluation of the presented method for seamline-based blending in orthoimage mosaicking in urban areas. To give an overall assessment of the linear ramp weighting method [3], the cosine distance weighted blending method [4], the pyramid blending method [5], and the presented method, detailed comparisons were made among these methods in three typical cases: the seamline passing by buildings, the seamline passing through buildings, and the seamline passing through areas with large radiometric differences.

The linear ramp weighting method always obtained a smooth transition within the given blending width, but if there were projection differences (e.g., the seamline passing by or through buildings) or moving objects (e.g., cars) within the blending width, ghosting and artifacts appeared, because the linear ramp weighting method is a blind blending procedure [3]. This was confirmed by the experimental results on the two data sets presented.

The cosine distance weighted blending method obtained results similar to those of the linear ramp weighting method. Because of its improved weighting function, it produced a smoother transition than the linear ramp weighting method near the borders of the transition zone [4]. Thus, the ghosting and artifacts it generated were less severe than those of the linear ramp weighting method.

The pyramid blending method always avoided ghosting and artifacts, but the transition was not smooth when the seamline passed through areas with large radiometric differences. This was mainly because, in practice, this method blends only within a narrow width [5].
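To make the multi-band idea concrete, here is a minimal 1-D Python sketch of Laplacian-pyramid blending in the spirit of Burt and Adelson [5]. It is an illustration under assumptions, not the implementation evaluated here: it works on single image rows, uses a 5-tap binomial kernel, upsamples by linear interpolation, and uses a fixed number of levels; all names are hypothetical.

```python
# 5-tap binomial kernel [1, 4, 6, 4, 1] / 16, a common choice for Gaussian pyramids.
KERNEL = (1.0, 4.0, 6.0, 4.0, 1.0)


def smooth(x):
    """Low-pass filter a 1-D signal, replicating values at the borders."""
    n = len(x)
    out = []
    for i in range(n):
        acc = 0.0
        for j, w in enumerate(KERNEL):
            acc += w * x[min(max(i + j - 2, 0), n - 1)]
        out.append(acc / 16.0)
    return out


def reduce_level(x):
    """Next coarser Gaussian level: smooth, then keep every second sample."""
    return smooth(x)[::2]


def expand_level(x, length):
    """Upsample a coarse level to `length` samples by linear interpolation."""
    out = []
    for i in range(length):
        pos = i / 2.0
        lo = min(int(pos), len(x) - 1)
        hi = min(lo + 1, len(x) - 1)
        frac = pos - int(pos)
        out.append((1.0 - frac) * x[lo] + frac * x[hi])
    return out


def gaussian_pyramid(x, levels):
    pyr = [list(x)]
    for _ in range(levels):
        pyr.append(reduce_level(pyr[-1]))
    return pyr


def laplacian_pyramid(x, levels):
    """Band-pass levels, plus the coarsest low-pass level as the last entry."""
    g = gaussian_pyramid(x, levels)
    bands = []
    for k in range(levels):
        up = expand_level(g[k + 1], len(g[k]))
        bands.append([a - b for a, b in zip(g[k], up)])
    bands.append(g[-1])
    return bands


def collapse(bands):
    """Invert laplacian_pyramid: add each band to the expanded coarser result."""
    x = bands[-1]
    for band in reversed(bands[:-1]):
        up = expand_level(x, len(band))
        x = [a + b for a, b in zip(band, up)]
    return x


def pyramid_blend(left, right, mask, levels=3):
    """Blend each band with the mask smoothed to that band's scale, then collapse.

    mask holds the weight of `left` (1 = left only, 0 = right only).
    """
    la = laplacian_pyramid(left, levels)
    lb = laplacian_pyramid(right, levels)
    gm = gaussian_pyramid(mask, levels)
    blended = [[m * a + (1.0 - m) * b for m, a, b in zip(gm[k], la[k], lb[k])]
               for k in range(levels + 1)]
    return collapse(blended)
```

Fine details are switched over a narrow zone at the finest level, while the smoother masks at coarser levels spread low-frequency differences more widely. With only a few levels, even the coarsest band is blended over a fairly limited zone, which is one plausible reading of the narrow effective blending width noted above.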

The presented method achieved the best outcome considering both the visual effect and the quantitative statistics. This was due to its selective blending strategy for changed and unchanged regions, which was implemented in the generation of the mask image. For changed regions that the seamline passes by or through, blending is avoided or limited to a narrow width, similar to the pyramid blending method. For unchanged regions that the seamline passes by or through, blending is carried out over the set blending width to achieve a smooth transition, similar to the linear ramp weighting method.
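The selective strategy can be sketched on a single image row. The Python code below is a minimal illustration under assumptions, not the authors' implementation: it takes the changed-pixel map as given, smooths a hard seamline mask with a moving-average filter (the filter type is assumed), restores the hard 0/1 values inside changed regions, and, for brevity, applies the mask with a single-level weighted combination rather than the multi-resolution blending of the presented method. Function names are hypothetical.

```python
def selective_mask_row(width, seam, changed, filter_size):
    """Blending mask for one image row (1.0 = take the left image, 0.0 = the right).

    width: pixels in the row; seam: column where the right image starts;
    changed: per-pixel booleans marking changed-region pixels (assumed given);
    filter_size: smoothing window in pixels (400 in the experiments).
    """
    hard = [1.0 if x < seam else 0.0 for x in range(width)]
    # Moving average via prefix sums, replicating the border values.
    half = filter_size // 2
    padded = [hard[0]] * half + hard + [hard[-1]] * half
    prefix = [0.0]
    for v in padded:
        prefix.append(prefix[-1] + v)
    smooth = [(prefix[x + filter_size] - prefix[x]) / filter_size for x in range(width)]
    # Changed pixels keep the hard seamline assignment, so they are not blended;
    # unchanged pixels get the smooth transition.
    return [h if c else s for h, s, c in zip(hard, smooth, changed)]


def blend_row(left, right, mask):
    """Single-level weighted combination M = m * I1 + (1 - m) * I2."""
    return [m * a + (1.0 - m) * b for a, b, m in zip(left, right, mask)]


# A building (changed pixels 6 and 7) sits just right of a seamline at column 6.
changed = [False] * 6 + [True, True] + [False] * 4
mask = selective_mask_row(12, 6, changed, 4)
print(mask)  # [1.0, 1.0, 1.0, 1.0, 1.0, 0.75, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(blend_row([100.0] * 3, [60.0] * 3, [1.0, 0.75, 0.0]))  # [100.0, 90.0, 60.0]
```

Pixel 5 (unchanged) still receives a partial weight, whereas the building pixels 6 and 7 are taken entirely from the right image, so the building is not ghosted. Feeding the same mask into a multi-resolution blend instead would presumably soften the hard edge only within a narrow zone.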

Nevertheless, the presented method also has shortcomings. A key step of the method is distinguishing changed from unchanged regions, and whether the regions of buildings and moving objects are accurately determined as changed regions has an important impact on its performance. As shown in Figure 5n, because the color of the building roof is very similar to that of the road and the boundary of the roof is unclear, the roof and the road are segmented into the same region, so some ghosting remains in the building areas. The determination of changed regions depends on image segmentation and change detection, and at present there is still no automatic way to select proper segmentation and change detection parameters. In practice, we chose certain typical images, tested which parameters produced suitable results, and then applied the selected parameters to other images.

The threshold of RCR should be moderate. In our experiments, it is set to 0.2. From our experience, the presented method is slightly sensitive to its value.

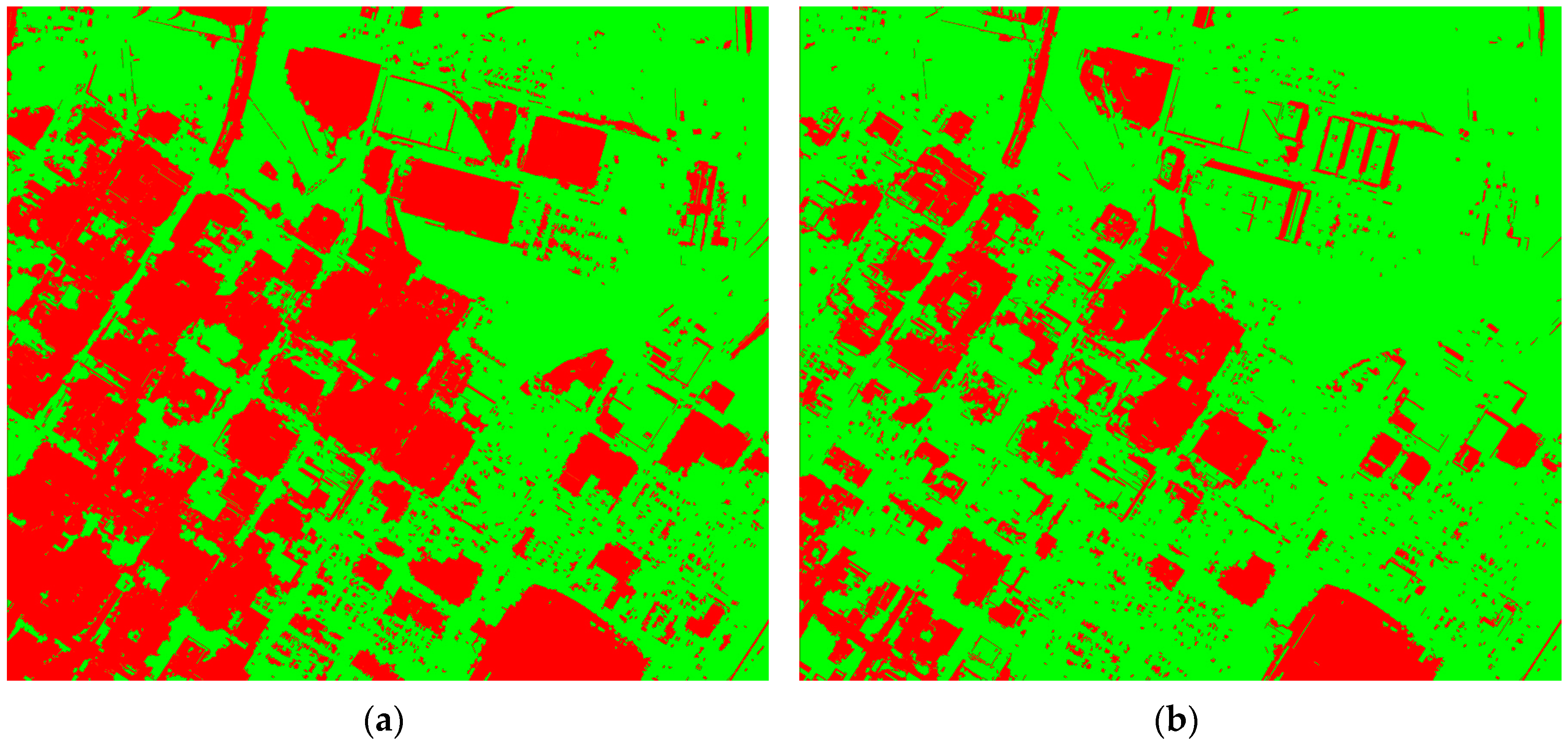

Figure 6 shows the changed regions obtained for data set 1 with two different values of the RCR threshold (Figure 6a and Figure 6b, respectively).

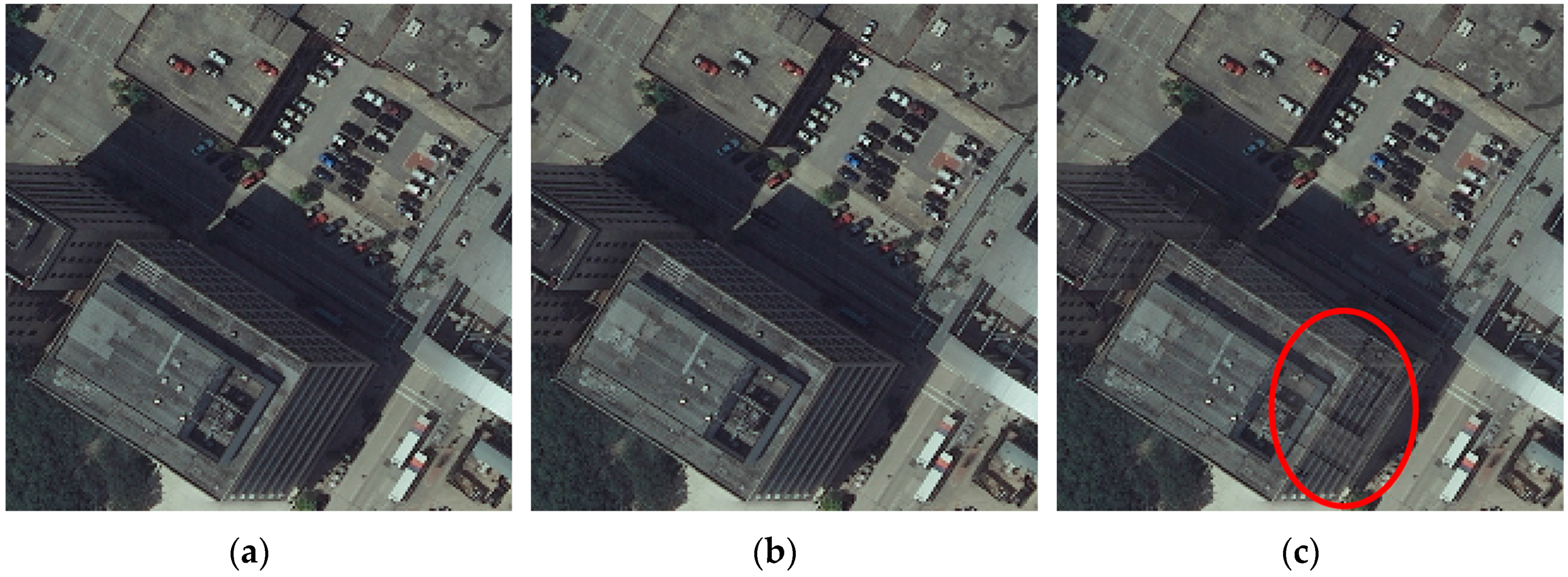

Figure 7 shows details of the changed regions obtained with three different values of the RCR threshold, and Figure 8 shows the corresponding detailed results of the presented method. Compared with the middle value, more regions are considered changed regions with the smallest value and fewer with the largest. With the largest value, only parts of buildings are considered changed regions, so ghosting and artifacts remain in building areas after blending; as shown in Figure 8c, they are very obvious, especially in the marked elliptical areas. With the other two values, the blending results (Figure 8a,b) are similar and satisfactory. Thus, a relatively small threshold achieves better results. However, too small a value is also unsuitable: the smaller the threshold, the more regions are considered changed regions, and the more closely the presented method resembles the pyramid blending method [5].

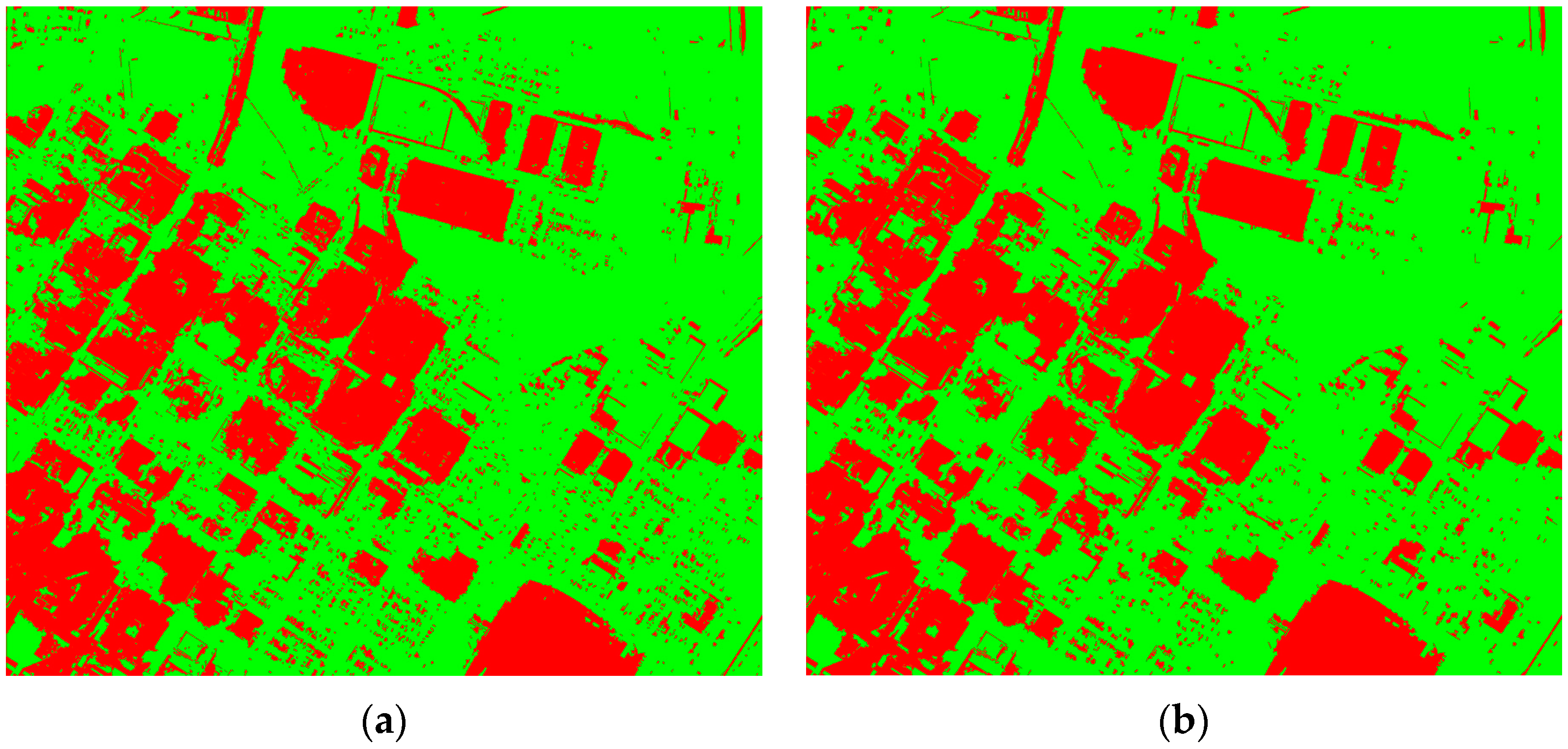

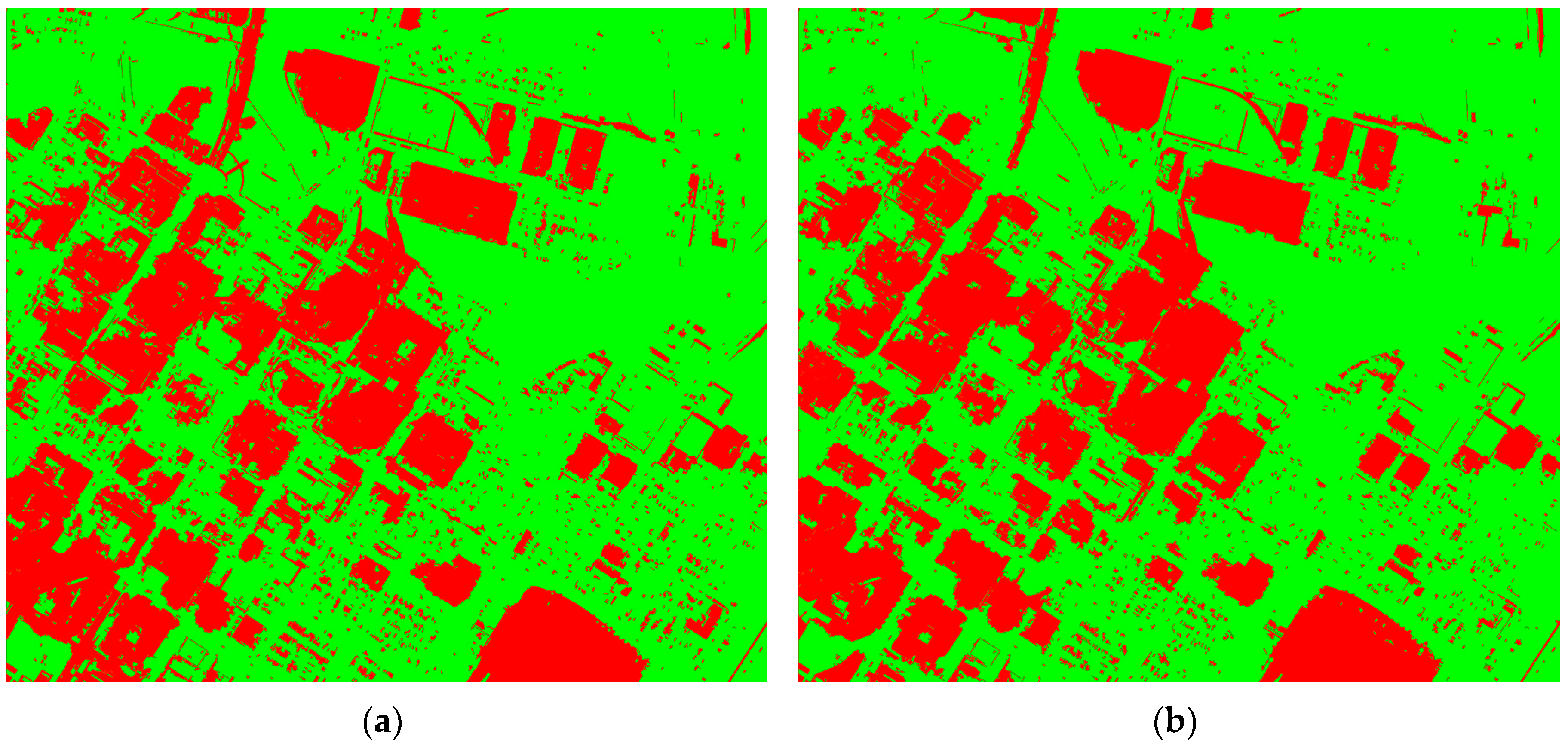

Different values of the MS parameters were also tested to analyze the sensitivity of the presented method. The presented method is not sensitive to the least significant feature size, because different values of it yield almost the same changed regions (compare Figure 9a,b with Figure 2c), and identical changed regions yield identical blending results. However, the presented method is slightly sensitive to the two bandwidth parameters. For different values of the spatial bandwidth, the results of the presented method differ slightly: although the obtained changed regions are similar (compare Figure 10a,b with Figure 2c), the details of the blending results still differ slightly.

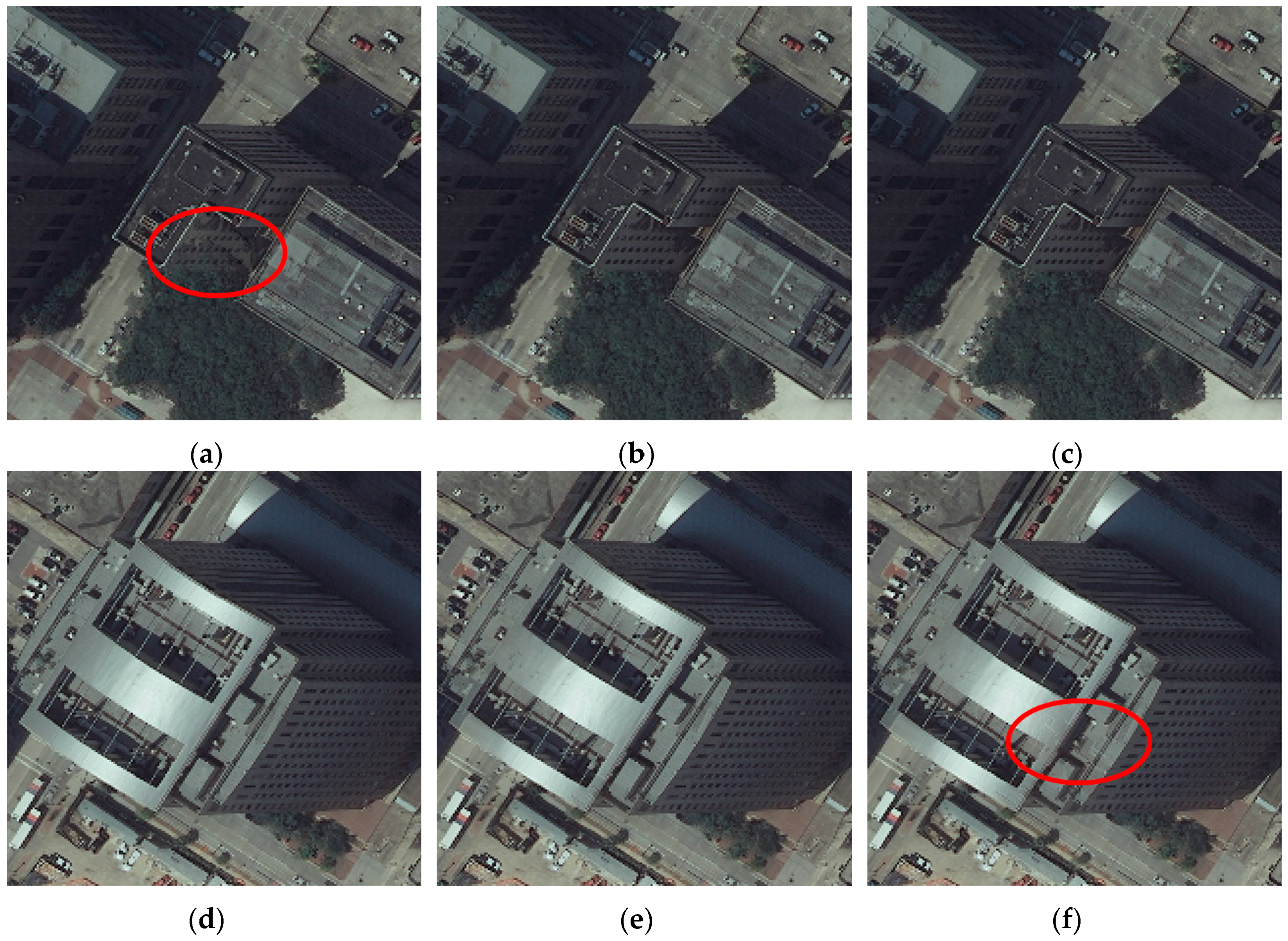

Figure 11a–c shows one selected region of the blending results with the MS parameters set to (3, 5, 20), (6, 5, 20), and (9, 5, 20), respectively. Some ghosting and artifacts remain with (3, 5, 20) (marked elliptical areas in Figure 11a). Figure 11d–f shows another selected region for the same three settings; here, some ghosting and artifacts remain with (9, 5, 20) (marked elliptical areas in Figure 11f). For the range bandwidth, the results also differ slightly for different values. As shown in Figure 12, the obtained changed regions differ slightly: with (6, 2.5, 20) they are more dispersed, and with (6, 7.5, 20) they are more concentrated. Figure 13 further shows selected regions of the blending results with (6, 2.5, 20), (6, 5, 20), and (6, 7.5, 20), respectively. Some ghosting and artifacts remain with both (6, 2.5, 20) and (6, 7.5, 20) (marked elliptical areas in Figure 13a,c).